EEG-Driven Dynamic Immersion Design for XR Gaming Experiences

Yunbing Han

a

College of Arts, Media and Design, Northeastern University, Boston, Massachusetts, U.S.A.

Keywords: Extended Reality, EEG Signals, Immersion Recognition, Dynamic Regulation, Adaptive Game Design.

Abstract: This study explores how immersion in Extended Reality (XR) games can be recognized dynamically from electroencephalography (EEG) signals and used in design. Using an EEG dataset from the Kaggle platform, the original emotion labels (Positive, Neutral, Negative) were remapped to corresponding immersion levels (High, Medium, Low) to construct an immersion recognition dataset suitable for classification models. The Orange platform was used for visual modelling and training; a Random Forest classifier achieved an accuracy of 98.5% and an AUC of 0.999, supporting the feasibility of predicting a user's immersion state from EEG data. Based on these results, this paper argues for the importance of real-time immersion feedback based on physiological signals in game development and design. A pipeline of EEG state acquisition, immersion recognition, and content adjustment can enhance the continuity and interactive depth of the user experience. With the miniaturization and popularization of EEG devices, such feedback is expected to become key infrastructure for designing next-generation immersive experiences.

1 INTRODUCTION

XR is often used as an abbreviation for Extended Reality (Alcañiz et al., 2019), or with X as a placeholder for a variety of digital reality formats (Rauschnabel et al., 2022), including Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR). Research combining electroencephalography (EEG) with XR equipment has become more widespread in recent years, with the number of experiments and studies rising rapidly since 2017 (Nwagu et al., 2023). Whether an XR experience is intended for entertainment or for simulation training, presence and immersion are important indicators of a good user experience (Skarbez et al., 2017).

Using physiological signals to support the design, development, and testing of XR programs can therefore provide more direct feedback. Studies using auditory evoked EEG responses to assess differences in VR presence have shown that EEG can objectively monitor immersion and presence without interrupting the experience (Savalle et al., 2024). Other studies have shown that a number of EEG features can serve as objective biomarkers of immersion (Tadayyoni et al., 2024) and have provided machine learning approaches for objectively measuring VR presence (Saha et al., 2024).

a https://orcid.org/0009-0001-9921-7685

In recent years, several studies have addressed the design and application of dynamic immersion via EEG. Chiossi et al. adjusted the complexity of distracting elements in virtual environments in real time by monitoring user EEG metrics. Their results showed that dynamic adjustment based on alpha and theta activity can optimize the environment to keep users engaged without overloading them (Chiossi et al., 2024). Woźniak et al. detected the player's levels of concentration and relaxation in real time via EEG and used them as inputs to control game mechanics. They reported that this method significantly enhanced player immersion and engagement without adding cognitive load (Woźniak et al., 2021). Iwane et al. automatically optimized the control strategy whenever a user's dissatisfaction with, or "wrong" reaction to, an avatar action was detected. This dynamic correction mechanism, which uses EEG error signals as feedback, demonstrates a cutting-edge approach to improving interactive immersion (Iwane et al., 2024).

Han, Y.

EEG-Driven Dynamic Immersion Design for XR Gaming Experiences.

DOI: 10.5220/0014362100004718

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 2nd International Conference on Engineering Management, Information Technology and Intelligence (EMITI 2025), pages 517-521

ISBN: 978-989-758-792-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.


The aim of this study is to relate EEG data to immersion and presence during XR experiences, to use these data to train an immersion recognition model, and to discuss the positive impact that EEG data can have on XR game design and development.

2 DATA & METHODOLOGY

The EEG data used in this study come from the publicly available dataset "EEG Brainwave Dataset: Feeling Emotions" on the Kaggle platform, which contains EEG signals recorded from multiple subjects in different experimental tasks. The dataset has a high sampling frequency and contains multiple channels, so it can reflect the EEG changes of subjects in different mental states and is suitable as the base data for immersion recognition (Bird et al., 2018; Bird et al., 2019).

2.1 Immersion Label Construction

In immersive experience research, labeling "immersion" in a principled way is a key prerequisite for supervised learning. Because immersion is a subjective psychological feeling, it is difficult to observe and measure directly and usually has to be estimated from indirect indicators.

The original labels of the Kaggle dataset are the emotional states POSITIVE, NEUTRAL, and NEGATIVE. The labels correspond to the EEG responses recorded while subjects viewed or experienced different types of stimulus content (e.g., images, videos). A key finding of EEG research on virtual reality experiences is the relationship between specific brainwave patterns and immersion levels. Tadayyoni et al. demonstrated that a number of EEG features can be used as objective "biomarkers" of immersion, which can be used to recognize the user's level of immersion in real time and to dynamically adjust the difficulty of VR tasks to maintain engagement (Tadayyoni et al., 2024). Reece et al. found that although subjective stress measures did not differ significantly between flat-screen and virtual reality presentations, EEG results showed an increase in cortical activity associated with higher levels of virtual reality immersion, which coincided with enhanced descriptions of realism and sense of presence. They also emphasized the use of EEG to measure event-related potentials as an indicator of immersion state, demonstrating neural responses that vary with the immersion context (Reece et al., 2022). On this basis, this study assumes that users are more likely to be in an immersive state when they are in a positive mood and have higher cognitive engagement, whereas immersion is weaker or even interrupted when they are in a neutral or negative mood. Therefore, this study establishes the following correspondence between emotional labels and immersion states:

POSITIVE corresponds to High immersion, in which subjects show high emotional activity, positive reactions, and concentration. In XR game scenarios, this state often corresponds to the user's deep involvement in the task and a strong sense of immersion in the environment, resulting in a self-forgetting ("oblivious") experience. NEUTRAL corresponds to Medium immersion: the user may still be engaged in the task but, being in a neutral emotional state, lacks a strong emotional drive. The experience lies between immersion and non-immersion and fluctuates, with phases of concentration and occasional emotional reactions. NEGATIVE corresponds to Low immersion: in a negative emotional state, the user is more likely to experience distraction, fatigue, anxiety, or boredom. Such states may interrupt the experience or make game goals hard to achieve in XR interactions, and are therefore regarded as the lowest level of immersion.

To keep the labeling reasonable, this study referred to models relating emotion and immersion, such as Russell's circumplex model of affect and the immersion experience model, and adjusted the meanings of the labels by taking into account the task intensity of XR usage scenarios, user feedback, and other auxiliary factors. Finally, each record in the dataset was reassigned to "High immersion", "Medium immersion", or "Low immersion" according to its original emotion label, yielding a three-class dataset suitable for the immersion recognition task. This label mapping keeps the training data interpretable and provides a theoretical and practical basis for the subsequent application of immersion prediction models in XR games.
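The remapping described above amounts to a fixed lookup from emotion label to immersion level. A minimal Python sketch follows; the column name `label`, the feature column names, and the sample values are assumptions made for illustration and are not taken from the dataset.

```python
import csv
import io

# Mapping described in Section 2.1 (emotion label -> immersion level).
EMOTION_TO_IMMERSION = {
    "POSITIVE": "High immersion",
    "NEUTRAL": "Medium immersion",
    "NEGATIVE": "Low immersion",
}


def remap_labels(rows, label_col="label"):
    """Return copies of the rows with the emotion label replaced by an immersion level."""
    remapped = []
    for row in rows:
        emotion = row[label_col].strip().upper()
        if emotion not in EMOTION_TO_IMMERSION:
            # Fail loudly rather than silently dropping or mislabeling a record.
            raise ValueError(f"Unexpected emotion label: {row[label_col]!r}")
        remapped.append({**row, label_col: EMOTION_TO_IMMERSION[emotion]})
    return remapped


# Tiny in-memory stand-in for the CSV file; column names and values are made up.
sample_csv = io.StringIO(
    "feature_a,feature_b,label\n"
    "4.62,30.3,NEGATIVE\n"
    "28.8,33.1,NEUTRAL\n"
    "8.90,29.4,POSITIVE\n"
)
rows = list(csv.DictReader(sample_csv))
immersion_rows = remap_labels(rows)
print([r["label"] for r in immersion_rows])
```

Raising an error on an unknown label is a design choice of this sketch: it keeps the three-class target clean if the input file contains anything other than the three expected emotion labels.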

2.2 Modeling Tools

In this study, EEG data are modeled and classified

using the Orange data mining platform, a visual

machine learning tool that can complete the process

of data import, feature selection, model training and


performance evaluation through drag-and-drop

modules.

The modeling in Orange followed three steps. First, the data were imported and the labels adjusted: after the CSV data were loaded into Orange, the original emotion labels (POSITIVE, NEUTRAL, NEGATIVE) were renamed and mapped to the three categories "High immersion", "Medium immersion", and "Low immersion" for the subsequent three-class classification task. Second, the classifier was selected. Random Forest was chosen as the main classification model; it is an ensemble learning method with good robustness that handles nonlinear features and multi-class tasks effectively. In Orange, modeling is carried out through the "Random Forest" module, which is well suited to exploratory experiments because it works without manual parameter tuning. Third, the model was evaluated. To ensure the robustness of the results, this study used 5-fold cross-validation, with the "Test & Score" module in Orange reporting the model's accuracy, AUC, F1 score, and other indicators as an estimate of its generalization ability.
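For readers who prefer a scripted workflow, the three steps above can be approximated outside Orange. The sketch below is not the Orange pipeline used in this study: it uses scikit-learn, a synthetic three-class dataset in place of the EEG features, and scikit-learn's default forest size, all of which are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_validate

# Synthetic stand-in for the EEG feature table (NOT the Kaggle data).
X, y = make_classification(
    n_samples=600,
    n_features=20,
    n_informative=8,
    n_classes=3,
    n_clusters_per_class=1,
    random_state=0,
)

# Default-sized forest; this is scikit-learn's default, not necessarily Orange's.
model = RandomForestClassifier(n_estimators=100, random_state=0)

# Stratified 5-fold cross-validation keeps class proportions similar in each fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

scores = cross_validate(
    model,
    X,
    y,
    cv=cv,
    scoring={
        "accuracy": "accuracy",
        "f1": "f1_weighted",      # class-weighted F1 for the three classes
        "auc": "roc_auc_ovr",     # one-vs-rest AUC for multi-class problems
    },
)

for name in ("accuracy", "f1", "auc"):
    print(name, round(scores[f"test_{name}"].mean(), 3))
```

The scores printed here describe the synthetic data only and say nothing about the EEG results; the point is the shape of the evaluation, with every metric averaged over the five held-out folds.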

3 EXPERIMENT & RESULTS

3.1 Model Performance Indicators

The test results (Table 1) show that the Random Forest model performs well on the three-class classification task in this study. The AUC is 0.999, reflecting a very high ability to discriminate between immersion levels. The overall accuracy is 98.5%, meaning that the large majority of samples were correctly categorized. The F1 score, precision, and recall are all 0.985, indicating balanced performance across the immersion states. The MCC is 0.977, which further supports the stability and reliability of the model.

These results show that EEG-based immersion recognition is feasible on the experimental data, with high classification performance under the Random Forest model.

Table 1: Random Forest training results.

| Model | AUC | CA | F1 | Prec | Recall | MCC |
|---|---|---|---|---|---|---|
| Random Forest | 0.999 | 0.985 | 0.985 | 0.985 | 0.985 | 0.977 |

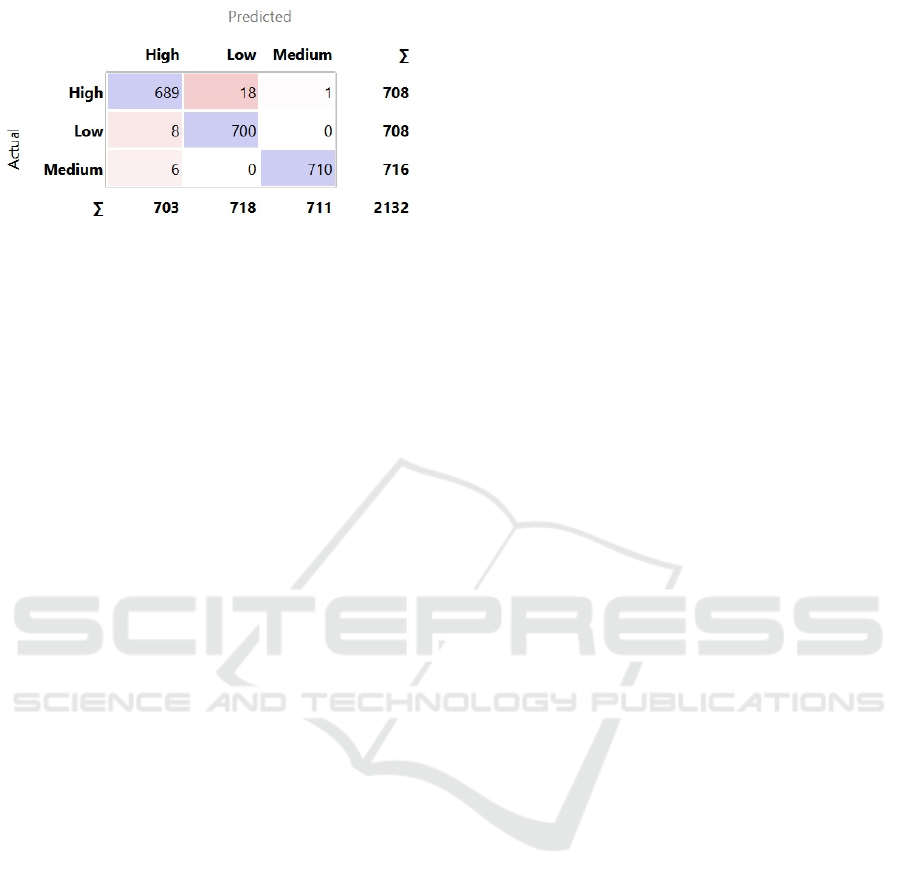

3.2 Confusion Matrix Analysis

To further analyze the model's classification performance on each immersion label, a confusion matrix was generated with the Orange platform, as shown in Figure 1. The horizontal axis represents the categories predicted by the model, and the vertical axis the true labels. The number in each cell is the number of samples of a given true category that were assigned to a given predicted category. Of the samples whose true label is High immersion, the model correctly classified 689, misclassified 18 as Low, and misclassified only 1 as Medium. Of the Low immersion samples, 700 were correctly classified and only 8 were misclassified as High. The Medium immersion samples were recognized most accurately: 710 were correctly classified, only 6 were misclassified as High, and none were misclassified as Low.

Overall, the three immersion states were highly distinguishable by the classification model. Of the 33 misclassified samples, 26 involved confusion between High and Low immersion (18 High samples predicted as Low and 8 Low samples predicted as High), whereas the Medium category was confused only with High (7 samples in both directions combined) and never with Low. In terms of EEG features, the Medium state is therefore the most clearly separated, and the remaining errors concentrate between the High and Low states. The results support the validity of the model developed in this study and indicate that using EEG signals to identify the three immersion states is highly feasible.
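The summary metrics can be recomputed from the confusion-matrix counts reported in this section. The sketch below assumes that the cells not mentioned in the text are zero (Low predicted as Medium, Medium predicted as Low) and uses the standard multiclass generalization of MCC; the resulting accuracy and MCC round to the 0.985 and 0.977 listed in Table 1.

```python
import numpy as np

# Rows are true labels, columns are predicted labels, both ordered High, Low, Medium.
# Counts are the ones reported in Section 3.2; unmentioned cells are assumed to be 0.
labels = ["High", "Low", "Medium"]
cm = np.array(
    [
        [689, 18, 1],
        [8, 700, 0],
        [6, 0, 710],
    ],
    dtype=np.int64,
)

total = cm.sum()                 # number of samples
correct = np.trace(cm)           # samples on the diagonal
true_counts = cm.sum(axis=1)     # samples per true class
pred_counts = cm.sum(axis=0)     # samples per predicted class

accuracy = correct / total
recall = np.diag(cm) / true_counts
precision = np.diag(cm) / pred_counts

# Multiclass Matthews correlation coefficient computed from the same counts.
numerator = correct * total - pred_counts @ true_counts
denominator = np.sqrt(
    float(total**2 - pred_counts @ pred_counts)
    * float(total**2 - true_counts @ true_counts)
)
mcc = numerator / denominator

print("samples:", int(total), "errors:", int(total - correct))
print("accuracy:", round(float(accuracy), 3), "MCC:", round(float(mcc), 3))
for name, p, r in zip(labels, precision, recall):
    print(f"{name}: precision={p:.3f} recall={r:.3f}")
```

Per-class precision and recall make it easier to see where the errors fall than the single averaged figures do.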


Figure 1: Confusion Matrix (Picture credit: Original)

4 APPLICATION OUTLOOK: DYNAMIC IMMERSION DESIGN IN XR GAMES

This study not only verifies the feasibility of using

EEG to predict immersion, but also proposes a vision

of its application in the optimization of XR game

experience, i.e., an EEG feedback-based immersion

adjustment system.

In traditional XR games, the content presentation

usually relies on a fixed rhythm and scene logic, and

lacks real-time perception and response to the user's

psychological state, which can easily lead to

problems such as fragmentation of experience, loss of

attention, or excessive fatigue. The immersion level

of users at different stages often fluctuates

significantly, while the game content cannot be

adjusted in time to match their psychological state,

limiting the continuity and personalization of the

immersion experience. At the same time, designers

often face a key dilemma: the lack of ability to

perceive the real-time immersion state of the user

during the game. Although certain feedback can be

obtained through user tests or subjective

questionnaires, these approaches are delayed,

subjective, and have limited coverage, making it

impossible to accurately determine the level of

immersion experience of a player in a specific

interaction node or plot segment.

However, using EEG to capture and predict user

immersion during game development and giving

timely feedback is strongly conducive to iterative

game development. The introduction of this

mechanism enables XR game content to "read the

user" and provide more targeted immersion

optimization strategies while ensuring the

consistency of the overall process. Its core value lies

in the shift from "content-driven" to "user-state-

driven", providing a technical foundation for

personalized immersion experiences.
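One way such a user-state-driven loop could be organized is sketched below. Everything in it is hypothetical: the `ImmersionRegulator` class, the window length, and the adjustment strings are illustrative placeholders, and the per-window immersion predictions are assumed to come from a classifier such as the one in Section 3.

```python
from collections import Counter, deque

# Hypothetical content adjustments for each recognized immersion level.
ADJUSTMENTS = {
    "High immersion": "keep current pacing",
    "Medium immersion": "introduce a new stimulus or narrative hook",
    "Low immersion": "lower difficulty or offer a rest point",
}


class ImmersionRegulator:
    """Smooths per-window predictions and maps the stable state to an adjustment."""

    def __init__(self, window=5):
        # Only the most recent `window` predictions are kept.
        self.history = deque(maxlen=window)

    def update(self, predicted_level):
        if predicted_level not in ADJUSTMENTS:
            raise ValueError(f"Unknown immersion level: {predicted_level!r}")
        self.history.append(predicted_level)
        # Majority vote over the recent windows damps single-window flicker.
        stable_level, _ = Counter(self.history).most_common(1)[0]
        return stable_level, ADJUSTMENTS[stable_level]


# Simulated stream of classifier outputs, one per EEG analysis window.
regulator = ImmersionRegulator(window=3)
stream = ["High immersion", "Low immersion", "Low immersion", "Low immersion"]
decisions = [regulator.update(level) for level in stream]

for level, action in decisions:
    print(level, "->", action)
```

The majority vote is a deliberate simplification: a single Low prediction does not change the content, but a sustained run of Low predictions does, which keeps the game from reacting to momentary noise in the signal.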

It is foreseeable that this model will bring a new

paradigm for XR game design in the future, providing

a technical path and application scenario for

immersion-driven design.

5 CONCLUSIONS

This paper took an existing EEG dataset, processed and relabeled it so that its classes correspond to levels of immersion during XR gameplay, and trained a Random Forest model on it. The results indicate that a user's state can be classified accurately as "High immersion", "Medium immersion", or "Low immersion", which suggests that a system acquiring EEG signals in real time through an EEG headset could capture the user's cognitive and emotional states during interaction and predict immersion during gameplay. This kind of physiological signal feedback is particularly helpful in the early stages of XR game development and design. The development team can adjust game content or difficulty in a timely manner based on the physiological evaluation, so that users maintain an ideal level of immersion during play and the user experience is enhanced. It also helps the team keep an overall view of how XR content is experienced. In the future, when intelligent hardware gives XR devices convenient and fast EEG detection, the model need not remain at the testing stage and could become a key component of intelligent, automated XR games. User experience and feedback will then become part of game production and of the game process itself, opening up new feedback mechanisms and interaction modes for XR games.

REFERENCES

Alcañiz, M., Bigné, E., & Guixeres, J. (2019). Virtual

reality in marketing: A framework, review, and

research agenda. Frontiers in Psychology, 10, 1530.

Bird, J. J., Ekart, A., Buckingham, C. D., & Faria, D. R.

(2019). Mental emotional sentiment classification with

an EEG-based brain-machine interface. In The

International Conference on Digital Image and Signal

Processing (DISP’19). Springer.

Bird, J. J., Manso, L. J., Ribiero, E. P., Ekart, A., & Faria,

D. R. (2018). A study on mental state classification


using EEG-based brain-machine interface. In 9th

International Conference on Intelligent Systems. IEEE.

Chiossi, F., Ou, C., Gerhardt, C., Putze, F., & Mayer, S.

(2024). Designing and evaluating an adaptive virtual

reality system using EEG frequencies to balance

internal and external attention states. International

Journal of Human-Computer Studies, 103433.

Iwane, F., et al. (2024). Customizing the human-avatar

mapping based on EEG error related potentials during

avatar-based interaction. Journal of Neural

Engineering, 21(2), 026016.

https://doi.org/10.1088/1741-2552/ad2c02

Nwagu, C., AlSlaity, A., & Orji, R. (2023). EEG-based

brain-computer interactions in immersive virtual and

augmented reality: A systematic review. Proceedings of

the ACM on Human-Computer Interaction, 7(EICS),

1–33.

Rauschnabel, P. A., Felix, R., Hinsch, C., Shahab, H., &

Alt, F. (2022). What is XR? Towards a framework for

augmented and virtual reality. Computers in Human

Behavior, 133, 107289.

Reece, R., Bornioli, A., Bray, I., Newbutt, N., Satenstein,

D., & Alford, C. (2022). Exposure to green, blue and

historic environments and mental well-being: A

comparison between virtual reality head-mounted

display and flat screen exposure. International Journal

of Environmental Research and Public Health, 19(15),

9457.

Saha, S., Dobbins, C., Gupta, A., & Dey, A. (2024).

Machine learning based classification of presence

utilizing psychophysiological signals in immersive

virtual environments. Scientific Reports, 14(1).

Savalle, E., Pillette, L., Won, K., Argelaguet, F., Lécuyer,

A., & Macé, M. J. (2024). Towards

electrophysiological measurement of presence in

virtual reality through auditory oddball stimuli. Journal

of Neural Engineering, 21(4), 046015.

Skarbez, R., Brooks, F. P., Jr., & Whitton, M. C. (2017). A

survey of presence and related concepts. ACM

Computing Surveys, 50(6), 1–39.

Tadayyoni, H., Campos, M. S. R., Quevedo, A. J. U., &

Murphy, B. A. (2024). Biomarkers of immersion in

virtual reality based on features extracted from the EEG

signals: A machine learning approach. Brain Sciences,

14(5), 470.


Woźniak, M. P., et al. (2021). Enhancing in-game

immersion using BCI-controlled mechanics.

Proceedings of the 2021 International Conference on

Interactive Media Experiences, 1–6.
