A Study in Emotion-Aware Adaptive Interaction in Intelligent Vehicle Cockpits

Sihui He¹,*, Hexuan Ying² and Hao Zhong³

¹ School of Design, Royal College of Art, London, U.K.

² High School Affiliated to Shanghai Jiao Tong University IB Center, Shanghai, China

³ School of Artificial Intelligence, Zhujiang College, South China Agricultural University, Guangzhou, Guangdong, China

* Corresponding author

Keywords: Multimodal Emotion Recognition, Intelligent Cockpit, Road Rage, Emotional Regulation.

Abstract: With the rapid development of intelligent cockpit technology, the driver's emotional state has become a significant factor in driving safety, and negative emotional behaviours such as ‘road rage’ pose a particular threat to traffic safety. This study analyses the integrated application of multimodal emotion recognition and intelligent interaction. It first explores the causes of negative emotions during driving and then examines technical pathways for emotion recognition from both single-modal and multimodal perspectives, emphasising how multi-sensory collaboration improves recognition accuracy and response sensitivity. The study then explores an emotion monitoring system based on multimodal perception and analyses adaptive human-machine interaction mechanisms, with the aim of mitigating drivers' emotional fluctuations through proactive intervention strategies and thereby enhancing driving safety and the cabin experience. The study also identifies current challenges in emotion recognition accuracy and in the dimensions used to classify emotions. On this basis, it proposes future development directions, including leveraging deep learning to personalise emotion recognition for individual drivers and optimising the collaboration between multimodal sensors and big data on driving behaviour. This study provides a theoretical foundation and practical pathways for more emotionally intelligent interaction systems, moving intelligent cockpits toward more humanised and emotionally aware designs.

1 INTRODUCTION

In recent years, intelligent cockpits have become a prominent research topic in the automotive industry and in the field of human-machine interaction, attracting widespread attention across society. With the continuous development of cutting-edge technologies such as artificial intelligence, the Internet of Things, and big data analysis, a series of intelligent interaction functions, including intelligent driving, automatic parking, and voice control, have emerged and are gradually being applied to mass-produced models. These features not only reduce the driver's operational burden but also significantly enhance the convenience and safety of travel, transforming the automobile from a traditional means of transport into an intelligent mobile terminal. An increasing number of automakers and technology companies are investing substantial resources in research on intelligent driving assistance systems, striving to integrate automation technology with human-centric design to achieve a qualitative leap in the driving experience (Xu & Lu, 2024).

Against this backdrop, the driver's emotional state, as a critical factor influencing driving behaviour and road safety, has garnered significant attention from researchers and industry. In recent years, emotion recognition and intervention functions have gradually been integrated into the core design of in-vehicle systems. Research indicates that emotion recognition incorporating multimodal data (such as facial expressions, voice tone, and physiological signals) can significantly improve recognition accuracy, particularly in complex and dynamic driving scenarios, and therefore has strong application potential. In addition, adaptive adjustment strategies based on real-time emotion monitoring have been shown to alleviate negative emotions such as tension and anxiety, reduce drivers' cognitive load, and thereby improve driving performance and road safety (Li, 2021).

He, S., Ying, H. and Zhong, H.

A Study in Emotion-Aware Adaptive Interaction in Intelligent Vehicle Cockpits.

DOI: 10.5220/0014359000004718

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 2nd International Conference on Engineering Management, Information Technology and Intelligence (EMITI 2025), pages 387-393

ISBN: 978-989-758-792-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

Therefore, this paper explores the application value of emotion perception in intelligent cockpits, focusing on how to achieve precise monitoring of, and intelligent adjustment mechanisms for, drivers' emotional states. By analysing existing research on driver-centric adaptive interaction systems and examining the complete perception-recognition-intervention process, this study analyses the feasibility and challenges of multimodal fusion in real-world scenarios. Additionally, in addressing the challenges faced by existing research, it systematically evaluates the system's user acceptance, functional performance, and human factors adaptability, thereby providing a theoretical foundation and future outlook for more humanised and emotionally intelligent cockpit design.

2 ANALYSIS OF THE CAUSES OF ROAD RAGE

Road rage refers to angry or aggressive behaviour exhibited by drivers while driving. Its causes can generally be grouped into age, driving experience, driving frequency, road conditions, and personal circumstances (Ren et al., 2021; Fan, 2024). A study using a binary logistic regression model (Fan, 2024) found that age and driving experience are inversely related to road rage. Among drivers aged 36–48, the proportion of road rage incidents caused by cutting in line was 55.1% of that among drivers aged 18–24, while for driving frequency, the proportion of road rage among infrequent drivers was 66.4% of that among frequent drivers (Fan, 2024). Road conditions include traffic obstacles such as congestion, rude behaviour such as cutting in line and uncivil language, the sudden appearance of pedestrians or non-motorised vehicles, and dangerous behaviour; of traffic obstacles, rude behaviour, and dangerous behaviour, rude behaviour is the most likely to trigger road rage. Personal circumstances include gender, personality, family situation, and education level. Male drivers are more prone to road rage than female drivers. Regarding family circumstances, married drivers, whether childless or with children, have a lower probability of road rage than unmarried drivers, and married drivers with children have a lower probability than married but childless drivers. Regarding education, drivers with postgraduate and undergraduate degrees are more prone to road rage (Ren et al., 2021; Fan, 2024). When driving an SUV, road rage caused by frequent lane changes and U-turns is 56.6% higher than in a sedan, indicating that open spaces help alleviate road rage (Fan, 2024).
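If the 55.1% and 66.4% figures above are read as odds ratios (Exp(B) values) from Fan's (2024) binary logistic model, which is an assumption since the summary does not state it explicitly, they can be translated into regression coefficients and into shifts in predicted probability. The sketch below does this; the baseline probability of 0.30 is a hypothetical illustration, not a reported result.

```python
import math


def coefficient_from_odds_ratio(odds_ratio: float) -> float:
    """Logistic-regression coefficient beta for a dummy variable, since OR = exp(beta)."""
    if odds_ratio <= 0:
        raise ValueError("odds ratio must be positive")
    return math.log(odds_ratio)


def apply_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Probability in the comparison group, given the reference group's probability."""
    if not 0.0 < baseline_prob < 1.0:
        raise ValueError("baseline probability must lie strictly between 0 and 1")
    # An odds ratio scales the odds p / (1 - p), not the probability itself.
    odds = baseline_prob / (1.0 - baseline_prob) * odds_ratio
    return odds / (1.0 + odds)


if __name__ == "__main__":
    # Figures from the text, ASSUMED here to be Exp(B) values from the logistic model.
    reported = {"aged 36-48 vs 18-24 (cutting in)": 0.551,
                "infrequent vs frequent drivers": 0.664}
    HYPOTHETICAL_BASELINE = 0.30  # illustrative reference-group probability only
    for label, odds_ratio in reported.items():
        beta = coefficient_from_odds_ratio(odds_ratio)
        p = apply_odds_ratio(HYPOTHETICAL_BASELINE, odds_ratio)
        print(f"{label}: beta = {beta:.3f}, probability {HYPOTHETICAL_BASELINE:.2f} -> {p:.3f}")
```

The point of the sketch is that an odds ratio of 0.551 does not mean the probability itself falls to 55.1% of the baseline: at a hypothetical baseline of 0.30 it falls to about 0.19, because the ratio acts on the odds rather than on the probability.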

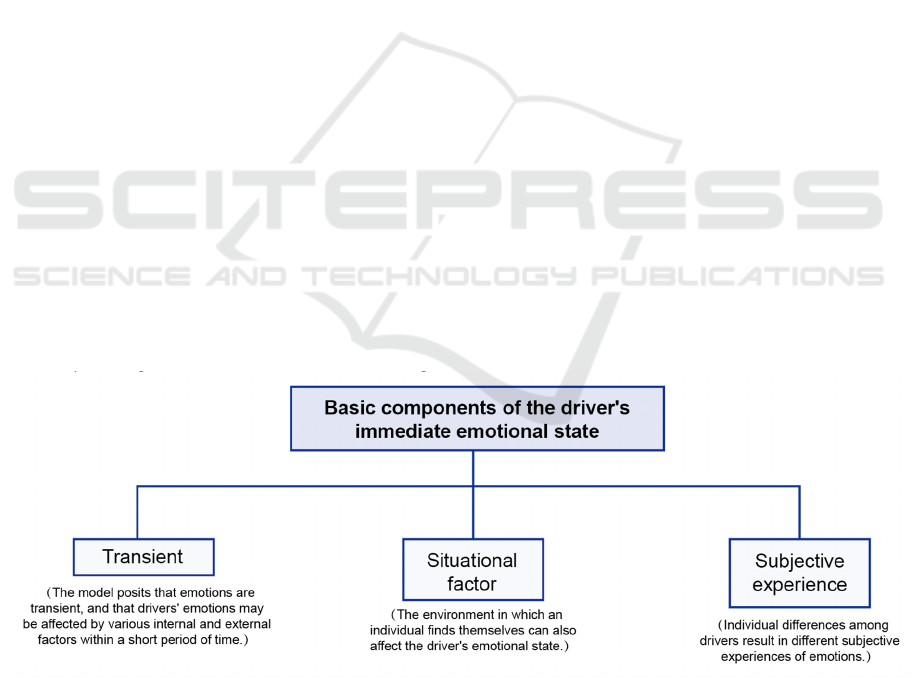

Based on the theoretical framework of the Momentary Experience Model (Figure 1), a driver's momentary emotional state can be understood as composed of transient factors (Cannon, 1927; Ningjian, 2024), situational factors (Behnke & Beatty, 1981), and subjective experiences. Regulating a driver's momentary emotional state therefore offers a way to intervene in their emotional responses, mitigating the potential risks associated with negative emotions and enhancing overall driving safety and user experience.

Figure 1: Basic components of the driver's immediate emotional state. (Picture credit: Original)

Additionally, research has used the Kübler-Ross Change Curve model (Kübler-Ross, 1973) to simulate the detailed emotional changes associated with road rage in traffic congestion scenarios (Figure 2). Identifying the psychological state at each stage makes it possible to analyse potential behavioural characteristics and infer the risk behaviours that may be triggered, thereby identifying the highest-risk emotional stages and providing a foundation for measures to mitigate these risks. This also clarifies at which stage intervention in the driver's emotions is most needed, providing theoretical support for subsequent experimental testing.

Figure 2: Kübler-Ross change curve. (Picture credit: Original).

3 ROAD RAGE EMOTION MONITORING METHOD

A driver's emotional state directly affects their attention, judgment, and reaction speed while driving, making it an important factor in traffic safety. Emotion detection technology analyses data such as facial expressions, physiological signals, and voice characteristics to detect changes in a driver's emotions in real time, providing a basis for risk warning and emotional intervention. Applying emotion detection to driving scenarios helps prevent dangerous behaviour caused by negative emotions, improving the overall driving experience and road safety.

3.1 Emotion Detection Based on a Single Modality

Many in-vehicle systems are based on the Android operating system and include features such as communication, apps, and podcasts. Their voice recognition modules still make errors when recognising dialects and Mandarin, and their accuracy needs to improve. Dynamic visual monitoring systems track the driver's eyes in real time to assess whether attention is on the road and to determine whether the interaction module may be distracting the driver. The eye-tracking-based Super Cruise system escalates its alerts and interventions when the driver fails to respond to warnings, reducing the risk of fatigued driving (Operator, 2016). When connected to the internet, the in-vehicle system can also monitor vehicle status in real time. Experiments have shown gesture interaction to be more efficient than touch interaction (Wu, 2016). Among the individual modules, eye tracking is relatively mature, with abundant experimental data available; the voice module supports diversified voice tones but still has difficulty recognising dialects; and emotion detection in automotive applications still lacks data on multi-sensory interaction, particularly olfactory interaction. For road rage specifically, one current approach to monitoring and prevention uses Spatial-Temporal Attention Neural Networks (STANN), which combine electroencephalography (EEG) signals and eye movement signals and are validated on the public SEED-IV dataset. In addition, by improving on techniques and resources such as the CenterFace face detector, the StarGAN image-translation model, and the KMU-FED driver facial expression dataset, it is possible to capture more facial information from drivers and detect expressions of anger more accurately (Li, 2024).
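The STANN architecture is not detailed in the text, so the following is only a simplified illustration of the mechanism its name refers to, not a reimplementation of it. Per-time-step EEG and eye-movement features are concatenated (feature-level fusion), each time step receives a relevance score, the scores are normalised with a softmax, and the sequence is summarised as an attention-weighted average. The feature values and the scoring vector are hypothetical; in a trained network the scoring weights would be learned, and STANN additionally attends over spatial (electrode) dimensions.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    # Subtract the maximum before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(eeg: Sequence[float], eye: Sequence[float]) -> List[float]:
    # Feature-level fusion: concatenate one time step's EEG and eye-movement features.
    return list(eeg) + list(eye)


def temporal_attention_pool(frames: Sequence[Sequence[float]],
                            score_weights: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Return (pooled feature vector, attention weights over time steps)."""
    if not frames:
        raise ValueError("need at least one time step")
    dim = len(frames[0])
    if len(score_weights) != dim or any(len(f) != dim for f in frames):
        raise ValueError("dimension mismatch")
    # Relevance of each time step: dot product with a (normally learned) scoring vector.
    scores = [sum(w * x for w, x in zip(score_weights, f)) for f in frames]
    alphas = softmax(scores)
    # Attention-weighted sum of the time steps, feature by feature.
    pooled = [sum(a * f[d] for a, f in zip(alphas, frames)) for d in range(dim)]
    return pooled, alphas


if __name__ == "__main__":
    # Hypothetical 2-D EEG features and 1-D eye-movement features over three time steps.
    eeg_seq = [[0.1, 0.2], [0.9, 0.8], [0.2, 0.1]]
    eye_seq = [[0.0], [1.0], [0.1]]
    frames = [fuse(e, y) for e, y in zip(eeg_seq, eye_seq)]
    pooled, alphas = temporal_attention_pool(frames, score_weights=[1.0, 1.0, 1.0])
    print("attention weights:", [round(a, 3) for a in alphas])
    print("pooled features:", [round(v, 3) for v in pooled])
```

With the hypothetical inputs, the middle time step, where both signals are elevated, receives most of the attention weight, so the pooled vector is dominated by that moment rather than averaged away.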


3.2 Emotion Detection Based on Multimodal Data

In research on road rage detection mechanisms, integrating facial expressions with driving behaviour has shown significant advantages. This method uses in-vehicle devices to capture the driver's facial expressions in real time and combines them with driving behaviour data, such as the rate of change of steering wheel angle, acceleration, and brake pedal operation, in a Fisher linear discriminant model for comprehensive judgment (Huang et al., 2022). Experiments have shown that, compared with using facial expression or driving behaviour features alone, the fusion method significantly improves recognition accuracy, providing an effective means of monitoring road rage online. Research on key technologies for intelligent vehicle occupant monitoring systems (OMS) has also provided new insights into road rage detection: a passenger-side child monitoring and warning scheme built on technologies such as OpenPose demonstrates the potential of multimodal information fusion for enhancing vehicle safety monitoring (Fu, 2022).
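The exact feature set of Huang et al. (2022) is not given here, so the sketch below only illustrates how a two-class Fisher linear discriminant combines facial-expression and driving-behaviour features into a single decision. The feature layout (a facial anger score, steering-angle change rate, and brake-pedal rate) and all sample values are hypothetical. The projection direction is w = S_W^-1 (m_angry - m_calm), where S_W is the pooled within-class scatter, and the threshold is the midpoint of the projected class means.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[Vector]


def mean_vector(rows: Sequence[Sequence[float]]) -> Vector:
    n = len(rows)
    return [sum(r[j] for r in rows) / n for j in range(len(rows[0]))]


def scatter_matrix(rows: Sequence[Sequence[float]], m: Vector) -> Matrix:
    # Within-class scatter: sum of outer products of mean-centred samples.
    d = len(m)
    s = [[0.0] * d for _ in range(d)]
    for r in rows:
        c = [r[j] - m[j] for j in range(d)]
        for i in range(d):
            for j in range(d):
                s[i][j] += c[i] * c[j]
    return s


def solve(a: Matrix, b: Vector) -> Vector:
    # Gaussian elimination with partial pivoting for a small dense system a x = b.
    n = len(b)
    aug = [list(a[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("scatter matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))) / aug[i][i]
    return x


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def fit_fisher(calm, angry, ridge: float = 1e-6) -> Tuple[Vector, float]:
    """Return projection w = S_W^-1 (m_angry - m_calm) and a midpoint threshold."""
    m0, m1 = mean_vector(calm), mean_vector(angry)
    s0, s1 = scatter_matrix(calm, m0), scatter_matrix(angry, m1)
    d = len(m0)
    # Pooled within-class scatter plus a small ridge term to keep it invertible.
    sw = [[s0[i][j] + s1[i][j] + (ridge if i == j else 0.0) for j in range(d)] for i in range(d)]
    w = solve(sw, [m1[j] - m0[j] for j in range(d)])
    return w, 0.5 * (dot(w, m0) + dot(w, m1))


def is_road_rage(x: Sequence[float], w: Vector, threshold: float) -> bool:
    return dot(w, x) > threshold


# HYPOTHETICAL samples: [facial anger score, steering-angle change rate (deg/s), brake-pedal rate]
CALM = [[0.10, 5.0, 0.2], [0.20, 8.0, 0.1], [0.15, 6.0, 0.3], [0.05, 4.0, 0.2]]
ANGRY = [[0.70, 25.0, 0.9], [0.80, 30.0, 0.7], [0.60, 22.0, 1.0], [0.90, 28.0, 0.8]]

if __name__ == "__main__":
    w, t = fit_fisher(CALM, ANGRY)
    print("w =", [round(v, 3) for v in w], "threshold =", round(t, 3))
    print("new sample flagged:", is_road_rage([0.75, 26.0, 0.85], w, t))
```

The ridge term is a numerical safeguard added for this sketch: with few samples or strongly correlated features, the within-class scatter matrix can otherwise be singular.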

Emotion perception technology plays a critical role in driving safety within intelligent cockpits, especially in high-risk emotional scenarios such as road rage. A driver monitoring system (DMS) that uses a 940 nm infrared light source together with facial micro-expression analysis can capture physiological signs of anger in drivers in real time, such as tense brow muscles and drooping corners of the mouth (Shaobi, 2021). When road rage symptoms are detected, the system can work with the cockpit environment control module to intervene proactively: for example, dynamically shifting the ambient lighting to a cooler tone via the environmental light sensors to reduce visual stimulation, while activating the fragrance system to release calming scents that ease emotional escalation (Shaobi, 2021). Such a real-time response mechanism can suppress aggressive driving behaviour and reduce the risk of traffic accidents caused by emotional outbursts.
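Frame-by-frame micro-expression scores are noisy, and a system that reacted to every spike would switch the lighting and fragrance on and off constantly. One common remedy, assumed here rather than taken from Shaobi (2021), is to smooth the per-frame anger score with an exponential moving average and to use two thresholds (hysteresis): the intervention starts only after sustained anger and stops only once the driver has clearly calmed down. The smoothing factor, thresholds, and command strings are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AngerMonitor:
    """Smooths per-frame anger scores and switches the cabin intervention with hysteresis."""
    alpha: float = 0.3          # EMA smoothing factor (hypothetical)
    on_threshold: float = 0.7   # start intervening at or above this smoothed score (hypothetical)
    off_threshold: float = 0.4  # stop intervening at or below this smoothed score (hypothetical)
    smoothed: float = 0.0
    intervening: bool = False

    def update(self, frame_score: float) -> List[str]:
        """Feed one anger probability in [0, 1]; return the cabin commands to issue now."""
        self.smoothed = self.alpha * frame_score + (1.0 - self.alpha) * self.smoothed
        if not self.intervening and self.smoothed >= self.on_threshold:
            self.intervening = True
            return ["ambient_light:cool", "fragrance:calming_on"]
        if self.intervening and self.smoothed <= self.off_threshold:
            self.intervening = False
            return ["ambient_light:default", "fragrance:off"]
        return []


if __name__ == "__main__":
    monitor = AngerMonitor()
    # Hypothetical per-frame scores: a brief spike, sustained anger, then calming down.
    for score in [0.9, 0.1, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0]:
        commands = monitor.update(score)
        print(f"score={score:.1f} smoothed={monitor.smoothed:.2f} commands={commands}")
```

The gap between the two thresholds is the key design choice: with a single threshold, a score hovering around it would toggle the cabin state every few frames.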

However, precise perception of road rage still faces significant challenges. Although existing algorithms perform well in laboratory settings, they are easily affected by individual differences in real-world driving (e.g., variations in facial expression across cultural backgrounds, or dialect-related changes in intonation) (Guo et al., 2023). In addition, intervention strategies for road rage are currently limited to environmental regulation and have not yet achieved deep synergy with the vehicle control layer (e.g., adjusting the adaptive cruise control following distance or the steering sensitivity). Future research should establish a dedicated road rage dataset covering multiple driving scenarios and design a layered response logic: primary interventions use environmental adjustments to alleviate emotions; if emotions continue to deteriorate, the level of automated driving intervention is increased automatically, with cognitive guidance provided by a voice assistant when necessary (Guo et al., 2023). Such a tiered response system can form a closed-loop safety mechanism from emotion recognition to behaviour correction.
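The layered response logic proposed above can be sketched as a small escalation policy. The three tiers follow the text (environmental adjustment, then increased driving-assistance intervention, then voice-assistant cognitive guidance); the anger-score thresholds, the observation window, and the command names are hypothetical placeholders, and any real coupling to vehicle control would need safety validation that this sketch does not attempt.

```python
from enum import IntEnum
from typing import Dict, List


class Level(IntEnum):
    NONE = 0
    ENVIRONMENT = 1  # lighting, scent, music
    ASSISTANCE = 2   # e.g. longer adaptive-cruise following distance
    VOICE = 3        # voice-assistant cognitive guidance


# Hypothetical commands newly activated on entering each tier (earlier tiers stay active).
ACTIONS: Dict[Level, List[str]] = {
    Level.ENVIRONMENT: ["cabin:cool_light", "cabin:calming_scent"],
    Level.ASSISTANCE: ["acc:increase_following_distance"],
    Level.VOICE: ["voice:start_guidance"],
}


class TieredResponder:
    def __init__(self, trigger: float = 0.6, calm: float = 0.3, window: int = 3):
        self.trigger = trigger  # anger score that starts the response (hypothetical)
        self.calm = calm        # score below which every intervention is withdrawn (hypothetical)
        self.window = window    # further observations at a tier before escalation is considered
        self.level = Level.NONE
        self.history: List[float] = []

    def step(self, anger: float) -> List[str]:
        """Consume one anger score in [0, 1]; return the commands to issue now."""
        if anger < self.calm:
            was_active = self.level > Level.NONE
            self.level, self.history = Level.NONE, []
            return ["all:restore_defaults"] if was_active else []
        if self.level == Level.NONE:
            if anger >= self.trigger:
                self.level, self.history = Level.ENVIRONMENT, [anger]
                return list(ACTIONS[Level.ENVIRONMENT])
            return []
        if self.level == Level.VOICE:
            return []  # highest tier reached; hold until the driver calms down
        self.history.append(anger)
        # After `window` further observations, escalate if the latest score is
        # no lower than the score recorded when this tier started.
        if len(self.history) > self.window and self.history[-1] >= self.history[0]:
            self.level = Level(self.level + 1)
            self.history = [anger]
            return list(ACTIONS[self.level])
        return []


if __name__ == "__main__":
    responder = TieredResponder()
    # Hypothetical anger scores: anger builds and does not ease, then the driver calms down.
    for score in [0.5, 0.7, 0.7, 0.75, 0.8, 0.8, 0.2]:
        print(score, responder.step(score), responder.level.name)
```

Escalating only when the environmental tier has visibly failed keeps intrusive measures, such as changing the following distance, in reserve, which matches the "primary, then increased" ordering described in the text.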

Existing multimodal emotion recognition systems have achieved a degree of accuracy, collecting data from multiple sensors to identify and analyse the driver's state and provide emotion regulation measures (Zhang & Chang, 2025). Building on this, establishing the relationship between emotional changes and driving state is a critical step that requires experimental validation. Monitoring driving behaviour styles under different emotional states, testing and analysing the resulting data, and constructing a sound logical structure will lay the foundation for subsequent integrated mechanisms.
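How the per-sensor outputs are combined is not specified in this summary of Zhang and Chang (2025). One common option, shown here as an assumption, is decision-level (late) fusion: each modality outputs a probability distribution over emotion classes together with a reliability weight, and the fused distribution is their normalised weighted average. Lowering a channel's weight when, for example, the face is occluded or poorly lit is one way to soften the lighting and occlusion interference discussed in the limitations section of this paper. Class names, weights, and probabilities are hypothetical.

```python
from typing import Dict, List, Tuple

Distribution = Dict[str, float]


def late_fusion(outputs: List[Tuple[Distribution, float]]) -> Distribution:
    """Reliability-weighted average of per-modality class probabilities.

    Each item is (class -> probability, weight >= 0). If every input distribution
    sums to 1, the fused distribution also sums to 1.
    """
    if any(weight < 0 for _, weight in outputs):
        raise ValueError("weights must be non-negative")
    total = sum(weight for _, weight in outputs)
    if total <= 0:
        raise ValueError("at least one modality needs a positive weight")
    classes = sorted({label for dist, _ in outputs for label in dist})
    # A class missing from one modality's output counts as probability 0 for that modality.
    return {c: sum(weight * dist.get(c, 0.0) for dist, weight in outputs) / total for c in classes}


if __name__ == "__main__":
    # Hypothetical outputs; the camera channel is down-weighted, e.g. because of glare or occlusion.
    face = {"anger": 0.5, "neutral": 0.5}
    voice = {"anger": 0.8, "neutral": 0.2}
    physiology = {"anger": 0.7, "neutral": 0.3}
    fused = late_fusion([(face, 0.2), (voice, 1.0), (physiology, 1.0)])
    print(max(fused, key=fused.get), {c: round(p, 3) for c, p in fused.items()})
```

Late fusion is simpler than the feature-level fusion used in the Fisher model above and lets a degraded sensor be down-weighted at run time without retraining, at the cost of ignoring correlations between modalities.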

Research indicates that a driver's emotional state (primarily negative emotions) can influence driving behaviour spontaneously, without cognitive control (Eherenfreund Hager et al., 2017), and that drivers cannot clearly articulate the specific triggers of these emotions (Hu et al., 2013). Accordingly, previous studies have shown that driving-simulator experiments using emotionally charged vocabulary can induce emotional responses in drivers (Steinhauser et al., 2018). Other tests have used video presentations to induce fluctuations in drivers' emotions (Gu, 2021), while still others have employed emotionally charged music and evocative recollections (Kwallek et al., 1988).

In summary, drivers' emotions can be influenced by external factors, and different methods cause varying degrees of emotional change. Therefore, based on the identified emotional states, an integrated mechanism for the overall cabin environment is needed that intervenes positively and interacts with drivers, regulating their psychological state, enhancing driving safety and experience, and personalising the interaction. Designing this mechanism is the core challenge of our research.

3.3 Analysis of the Advantages of Multi-Sensory Coordination and Regulation

A multi-sensory coordination strategy integrates stimuli from hearing, touch, smell, and even sight to provide a new solution for alleviating road rage. Research shows that this strategy can improve drivers' reaction speed and the effectiveness of warnings when drivers are fatigued (Li et al., 2014). Multi-sensory stimulation can also change drivers' perception of speed, thereby adjusting driving behaviour and reducing the risk of traffic accidents (Liu et al., 2013). For emotional regulation, multi-sensory coordination allows a comprehensive intervention in drivers' emotions. For example, stimuli such as soft music, warm lighting, and mild vibrations create a soothing driving atmosphere that helps drivers detach from tense or angry emotions and regain composure. This strategy not only demonstrates the general applicability of multi-sensory stimulation principles but also offers a new perspective on driving-related mental health issues.

Multisensory coordination demonstrates multiple advantages in road rage intervention. From a physiological perspective, multisensory stimulation can capture the driver's attention more quickly and interrupt negative emotional cycles. From a psychological perspective, multisensory coordination may trigger positive emotional responses in drivers, alleviating tension. From a behavioural perspective, multisensory coordination can also indirectly reduce road rage triggers by regulating driving behaviour.

With the widespread adoption of autonomous driving technology and the continuous development of intelligent cockpits, multi-sensory coordination strategies are poised to become a new paradigm in emotional management, enhancing road safety and comfort more comprehensively by integrating different sensory stimuli.

4 CURRENT LIMITATIONS AND FUTURE PROSPECTS

4.1 Current Limitations

To reduce the safety risks posed by drivers experiencing road rage and help them calm down more quickly, this study adopts an interactive system design that regulates anger through multi-channel sensory stimulation. When the emotion sensing system detects that the driver is angry, the adaptive system responds according to the following principles. First, visual adjustment: the interior lights throughout the vehicle switch to a cool colour tone to help the driver transition to a calm state, reducing stress and relaxing the mind. Second, auditory adjustment: the in-vehicle system automatically plays soothing music to relax tense emotions, strengthens voice support, and gives the driver positive feedback to help restore their state. Third, olfactory adjustment: the air conditioning system releases mint-scented or plant-based essential oils to relieve physical fatigue, stimulate the senses, and soothe the driver's anxiety.
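The three channels described above can be captured in a declarative intervention profile that the adaptive system looks up when the emotion sensing system reports anger. The field names, the 6500 K colour temperature, and the command strings below are hypothetical; the sketch only shows how keeping the profile as data, rather than hard-coding it, leaves room for per-driver variants such as a different scent.

```python
from dataclasses import dataclass, replace
from typing import Dict, List


@dataclass(frozen=True)
class InterventionProfile:
    light_colour_temp_k: int  # visual channel: higher Kelvin values give a cooler tone
    music_playlist: str       # auditory channel: soothing music
    voice_feedback: bool      # auditory channel: positive spoken feedback
    scent: str                # olfactory channel: released through the air conditioning


# Hypothetical default for the anger state described in the text.
DEFAULT_ANGER_PROFILE = InterventionProfile(
    light_colour_temp_k=6500, music_playlist="soothing", voice_feedback=True, scent="mint")


def commands_for(emotion: str, profiles: Dict[str, InterventionProfile]) -> List[str]:
    """Translate a detected emotion into visual, auditory and olfactory commands."""
    profile = profiles.get(emotion)
    if profile is None:
        return []  # no intervention defined for this emotion
    commands = [f"lighting:colour_temp:{profile.light_colour_temp_k}",
                f"audio:play:{profile.music_playlist}"]
    if profile.voice_feedback:
        commands.append("voice:positive_feedback")
    commands.append(f"hvac:release_scent:{profile.scent}")
    return commands


if __name__ == "__main__":
    shared = {"anger": DEFAULT_ANGER_PROFILE}
    # A per-driver override: same structure, different scent (hypothetical preference).
    personalised = {"anger": replace(DEFAULT_ANGER_PROFILE, scent="lavender")}
    print(commands_for("anger", shared))
    print(commands_for("anger", personalised))
```

Because the profile is frozen, a personalised variant is created with `dataclasses.replace` instead of mutating the shared default, so one driver's preferences cannot leak into another's.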

Although this system establishes a foundation and feasible strategies for positive emotional regulation, it still has limitations. First, the accuracy and timeliness of emotion recognition are easily compromised. In real driving environments, multimodal emotion recognition algorithms remain susceptible to interference from lighting, occlusions, and driving behaviour, which reduces recognition accuracy. Misjudgements and inappropriate adjustments are especially likely for drivers whose emotional expressions vary greatly. Second, the multi-sensory intervention mechanism lacks personalisation. Drivers differ in how they respond to colours, smells, and sounds, and the current system does not yet implement personalised adaptive intervention schemes, which may make some users uneasy or degrade their driving experience.

Technical improvements and personalised multimodal sensory mechanisms are therefore key areas for future work. Existing research more broadly also faces several limitations. On the one hand, algorithm complexity is high, and integrating multiple information streams may compromise real-time performance, making it difficult to meet the rapid response requirements of complex traffic scenarios. On the other hand, environmental adaptability needs to be improved, as varying lighting conditions and changes in driver posture may affect detection accuracy. Additionally, current research focuses primarily on group characteristics and gives little in-depth consideration to individual differences between drivers.


4.2 Future Prospects

Looking ahead, research into road rage detection and intervention systems can be pursued along several dimensions, with the ultimate goal of developing an emotionally intelligent driving cockpit system. First, deeper integration of multimodal data should be explored, combining physiological signals such as heart rate variability and skin conductance response with behavioural data such as voice features and gesture recognition to build more refined emotion detection models with more comprehensive and accurate identification. This will facilitate the incorporation of deep learning and personalised big data, further optimising multimodal driver emotion recognition systems to improve identification accuracy and generalisation. Second, algorithm structures should be optimised by adopting lightweight neural networks or improved algorithm workflows to reduce computational complexity and ensure real-time response in complex traffic scenarios. Machine learning and AIGC technology can also be integrated to provide personalised intervention strategies based on drivers' historical behaviour and emotional responses, allowing the strategies to be optimised over time. Third, environmental adaptability testing should be strengthened to ensure algorithm stability under the varied lighting and road conditions of real-world driving. During system design, human factors engineering and driving safety assessments should be integrated to prevent sensory interventions from creating new attention burdens, and the intensity and frequency of interventions should be optimised through continuous simulation and testing. Finally, personalised recognition research should be conducted that fully considers factors such as the driver's age, gender, and driving habits, to make recognition more targeted and interventions more effective. This would enable the system to identify emotions accurately while adapting to individual differences, providing drivers with customised emotional management solutions. Through these improvements, more reliable and efficient road rage detection and intervention solutions can be expected for safe intelligent-vehicle driving, further reducing driving risks and raising road safety standards.
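Two of the directions above, using heart rate variability and personalising recognition to the individual driver, can be combined in a small feature-extraction step. The sketch computes RMSSD (the root mean square of successive differences between RR intervals), a standard short-term heart rate variability measure, and expresses it as a z-score against the driver's own calm-driving baseline rather than against a population norm. The baseline and RR values are hypothetical, and treating low RMSSD as a stress cue is a simplification, not a validated road rage detector.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def rmssd(rr_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [later - earlier for earlier, later in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def personal_z_score(value: float, baseline: Sequence[float]) -> float:
    """Express a feature relative to this driver's own baseline, not a population norm."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline values")
    spread = stdev(baseline)
    if spread == 0:
        raise ValueError("baseline has zero variance")
    return (value - mean(baseline)) / spread


if __name__ == "__main__":
    # Hypothetical data: RMSSD values from earlier calm drives, and a current 5-beat window.
    calm_baseline_rmssd = [42.0, 38.5, 45.1, 40.3]
    current_rr_ms = [812.0, 790.0, 805.0, 770.0, 798.0]
    current = rmssd(current_rr_ms)
    z = personal_z_score(current, calm_baseline_rmssd)
    print(f"RMSSD = {current:.1f} ms, z relative to this driver's baseline = {z:.2f}")
```

A strongly negative z-score (lower variability than this driver's calm baseline) could serve as one input to a multimodal fusion step; the threshold for acting on it would have to be established experimentally for each population and use case.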

5 CONCLUSIONS

This paper focuses on the identification of driver emotional states and adaptive interaction mechanisms in intelligent cockpits. It proposes an emotion monitoring system that integrates multimodal sensory information from vision, hearing, and smell, and designs a coordinated adjustment strategy based on emotion recognition results. When the system detects negative emotions such as ‘road rage’ in the driver, it can employ proactive intervention measures (such as in-cabin atmosphere adjustment, soothing voice prompts, and aromatic scent release) to create a calm cabin environment, thereby alleviating emotional fluctuations and enhancing driving safety and user experience. This study highlights the advantages of multimodal collaboration in improving emotion recognition accuracy and human-machine interaction sensitivity, and preliminarily validates its feasibility and practical value in real-world application scenarios. Future research will explore more refined emotion modelling algorithms to enhance the system's personalised response capabilities. At the same time, by optimising edge computing performance and sensor collaboration mechanisms, it will aim to move intelligent cockpits from traditional ‘feature aggregation’ toward ‘emotion-driven’, deep human-machine collaboration, ultimately achieving a more human-centric intelligent interaction system and providing theoretical support for human-centred intelligent cockpit design.

AUTHORS CONTRIBUTION

All the authors contributed equally and their names were listed in alphabetical order.

REFERENCES

Behnke, R. R., & Beatty, M. J. (1981). A cognitive-physiological model of speech anxiety. Communications Monographs, 48(2), 158–163.

Cannon, W. B. (1927). The James-Lange theory of emotions: A critical examination and an alternative theory. The American Journal of Psychology, 39(1/4), 106–124.

Eherenfreund Hager, A., Taubman Ben Ari, O., Toledo, T., et al. (2017). The effect of positive and negative emotions on young drivers: A simulator study. Transportation Research Part F: Traffic Psychology and Behaviour, 49, 236–243.

Fan, Q. (2024). Multimodal road rage emotion recognition based on deep transfer learning. Engineering Technology II Information Technology.

Fu, S. (2022). Research and implementation of key technologies for intelligent vehicle occupant monitoring system (OMS) (Master’s thesis, Yangzhou University).

Gu, W. J. (2021). Research on the influencing factors of road rage: The mechanism of external causes and subject traits.

Guo, Y., et al. (2023). Review on emotion recognition in intelligent cockpit scenarios. Journal of Beijing Union University.

Hu, T. Y., Xie, X., & Li, J. (2013). Negative or positive? The effect of emotion and mood on risky driving. Transportation Research Part F: Traffic Psychology and Behaviour, 16(1), 29–40.

Huang, H., Lai, J., Liang, L., et al. (2022). Research on road rage recognition method by fusing facial expressions and driving behavior. Equipment Manufacturing Technology, 3, 26–32.

Kübler-Ross, E. (1973). On death and dying. Routledge.

Kwallek, N., Lewis, C. M., & Robbins, A. S. (1988). Effects of office interior color on workers’ mood and productivity. International Journal of Biosocial Research, 3(1), 10–38.

Li, C. (2024). Research on individual emotional state recognition based on EEG (Doctoral dissertation, University of Electronic Science and Technology of China).

Li, R., Mo, Q., & Zhang, K. (2014). Research on fatigue driving warning scheme based on multi-sensory coordination. Modern Electronics Technique, 37(5), 117–120.

Li, W. B. (2021). Research on the influence, recognition and regulation methods of driver's emotional behavior for automotive intelligent cockpit (Doctoral dissertation, Chongqing University).

Liu, W., Ouyang, L., Guo, J., et al. (2013). Study on spacing of deceleration vibrating marking groups based on multi-sensory stimulation to drivers. China Safety Science Journal, 23(3), 86–90.

Ningjian, L. (2024). Cannon-Bard Theory of Emotion. In The ECPH Encyclopedia of Psychology (pp. 178–179). Springer, Singapore.

Operator (Auto Business Review). (2016). Eye tracking improves fatigue driving. Operator (Auto Business Review).

Ren, Q. W., Lu, X. W., Zhao, Y., Tang, Z. F., & Wu, H. Z. (2021). Research on the endogenous and exogenous logic and influencing factors of road rage. China Safety Science Journal, 17(7).

Shaobi, T. (2021). The “sensing principle” in intelligent vehicle cabins. Electronic Engineering Product World.

Steinhauser, K., Leist, F., Maier, K., et al. (2018). Effects of emotions on driving behavior. Transportation Research Part F: Traffic Psychology and Behaviour, 59, 150–163.

Wu, G. (2016). Using the “five senses” interaction concept to explore the three-dimensional experience in product design (Master’s thesis, East China University of Science and Technology).

Xu, N., & Lu, J. Y. (2024). A review of multimodal interaction design research for automotive advanced driving assistance systems based on safety and emotionalization. Chinese Journal of Automotive Engineering, 14(3), 336–353.

Zhang, X. Y., & Chang, R. S. (2025). The impact of traffic negative emotions on drivers’ driving decisions. Chinese Journal of Ergonomics, 31(1), 13–17, 23.
