Applications of Computer Vision and Human-Machine Interaction in Environmental Monitoring

Bocheng Zhu

School of Software, Henan University, Kaifeng, China

Keywords: Edge Cloud Collaborative Architecture, Environmental Monitoring System, Artificial Intelligence.

Abstract: With the development of artificial intelligence, the integration of computer vision and human-computer interaction is reshaping environmental monitoring technology. Traditional environmental monitoring systems rely on decentralized sensor networks that transmit data back to a central server, which limits real-time performance and decision-making efficiency. This study develops an intelligent environmental monitoring system based on an edge-cloud collaborative architecture, multimodal data fusion, and holographic imaging technology. Lightweight edge computing nodes deployed at the sensor end perform local data preprocessing, which reduces the cloud transmission load by 80% and keeps the end-to-end delay within 20ms. In the cloud, artificial intelligence algorithms integrate structured and unstructured data to generate high-precision environmental state models. Light-field projection produces dynamic holographic images that support gesture-based interaction for accurately locating problem areas. Finally, the challenges and advantages of the technology are analyzed. In the future, with algorithm lightweighting, hardware innovation, and policy support, the system is expected to extend to scenarios such as automated agriculture and urban flood warning, enabling large-scale application in ecological protection.

1 INTRODUCTION

Environmental hazards such as climate change, forest fires, and water pollution occur frequently, posing a serious threat to ecological balance and human health. Traditional environmental monitoring methods mainly rely on distributed sensor networks, which transmit the collected data to a central server for processing and analysis via wired or wireless links (Han, 2024). However, this model has significant shortcomings in real-time performance, interaction efficiency, and data visualization. For example, in remote areas or areas with poor network coverage, data transmission delays can reach several minutes or even hours, severely limiting the timeliness of environmental monitoring. In addition, traditional systems usually present data through command-line interfaces or 2D charts that lack intuitive spatial correlations, making it difficult for non-technical personnel to quickly understand and respond to environmental changes.

The rapid development of computer vision and human-machine interaction technologies provides new possibilities for solving these problems. In recent years, computer vision has made remarkable progress in object detection, image classification, and anomaly recognition, and deep-learning models (such as ResNet and YOLO) have been reported to exceed 90% accuracy in some environmental monitoring tasks. At the same time, human-machine interaction has evolved from early keyboard input to gesture recognition, voice control, and holographic projection, greatly improving the efficiency of interaction between users and machines. These advances lay a solid foundation for building a low-latency, high-precision intelligent environmental monitoring system.

Currently, research in the field of environmental monitoring mainly focuses on three aspects: sensor network optimization, data fusion algorithms, and visualization techniques.

In terms of sensor network optimization, the introduction of edge computing has significantly improved data processing efficiency. For example, lightweight edge devices such as NVIDIA Jetson can perform local data preprocessing (such as noise reduction and compression), reducing dependence on cloud resources and thereby reducing transmission delays and bandwidth requirements. However, existing systems still have deficiencies in multi-sensor collaboration and data synchronization. Especially in dynamic environments, differences in the sampling frequencies and accuracies of different sensors may lead to data fusion deviations (Just Go for It Now, 2024).

Zhu, B.

Applications of Computer Vision and Human-Machine Interaction in Environmental Monitoring.

DOI: 10.5220/0014320300004718

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 2nd International Conference on Engineering Management, Information Technology and Intelligence (EMITI 2025), pages 61-65

ISBN: 978-989-758-792-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

In terms of data fusion algorithms, multi-modal data fusion has become a research hotspot. By combining structured data (such as temperature and humidity) with unstructured data (such as images and videos), AI algorithms can analyze the environmental state more comprehensively. For example, a deep-learning-based smoke detection model can accurately identify early signs of forest fires, and spatio-temporal alignment techniques can address the time asynchrony of multi-source data. Nevertheless, achieving real-time and precise data fusion in highly dynamic environments remains an urgent challenge.

In terms of visualization techniques, traditional 2D charts and tables can no longer meet the requirements for presenting complex environmental data. In recent years, the rise of holographic projection technology has provided a new means of visualization for environmental monitoring (Chen, 2024). Through light-field projection and dynamic particle effects, holographic images can intuitively display the spatial distribution and change trends of environmental parameters. However, holographic interaction accuracy and tactile feedback still need further optimization, for example by combining visual Simultaneous Localization and Mapping (SLAM) technology to improve the accuracy of gesture recognition (The Innovation Geoscience Editorial Department, 2024).

The significance of this research lies in constructing a new intelligent environmental monitoring system that integrates edge computing, multi-modal data fusion, and holographic imaging technology. The system can not only significantly reduce data transmission delays and improve the real-time performance of monitoring, but also enhance the user experience through an intuitive holographic interaction interface, giving non-professional personnel an efficient tool for environmental data analysis. In addition, the system has a wide application scope, covering scenarios such as forest fire early warning, water pollution monitoring, and urban flood prevention and control, and provides scientific and intelligent technical support for ecological protection and disaster prevention and control.

2 TECHNICAL METHODS AND THEORIES

In the intelligent environmental monitoring system, the key technical process consists of four core links: data collection, transmission, processing, and presentation.

First is data collection. Various types of sensors, such as temperature sensors, smoke sensors, and chemical substance sensors, are deployed in different monitoring scenarios (such as forests, water areas, and cities). Each sensor performs its own function and captures signals from the environment in real time. The collected data is transmitted via wired or wireless links to a lightweight edge computing node at the sensor end, such as an NVIDIA Jetson.

The edge computing node then performs preliminary processing on the data, including filtering, noise reduction, and anomaly detection. Processing the data locally greatly reduces its volume, and only the key data is then uploaded to the cloud via the network.
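The edge-side steps above (filtering, noise reduction, anomaly detection, then uploading only key data) can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual implementation: it assumes a one-dimensional window of scalar readings (for example, temperature), uses a sliding median filter for noise reduction and a robust z-score based on the median absolute deviation for anomaly detection, and returns a compact summary plus the anomalous samples as a hypothetical upload payload. The function names, window size `k`, and threshold `z_thresh` are illustrative assumptions.

```python
import numpy as np


def median_filter(x: np.ndarray, k: int = 5) -> np.ndarray:
    """Sliding-window median for noise reduction; k should be odd."""
    pad = k // 2
    padded = np.pad(x, pad, mode="edge")  # repeat edge values so output length == input length
    windows = np.lib.stride_tricks.sliding_window_view(padded, k)
    return np.median(windows, axis=1)


def edge_preprocess(readings, k: int = 5, z_thresh: float = 3.5) -> dict:
    """Hypothetical edge step: denoise a window, flag anomalies, build a small upload payload."""
    x = np.asarray(readings, dtype=float)
    smoothed = median_filter(x, k)  # isolated spikes (sensor glitches) are suppressed
    med = np.median(smoothed)
    mad = np.median(np.abs(smoothed - med)) or 1e-9  # avoid division by zero on flat signals
    robust_z = 0.6745 * (smoothed - med) / mad       # roughly standard-normal scale for Gaussian noise
    idx = np.flatnonzero(np.abs(robust_z) > z_thresh)
    return {
        "summary": {"mean": float(smoothed.mean()),
                    "min": float(smoothed.min()),
                    "max": float(smoothed.max())},
        "anomaly_idx": idx.tolist(),
        "anomaly_values": smoothed[idx].tolist(),
    }
```

In this sketch only the summary and the flagged samples would leave the node, while the raw window stays local; whether that achieves the 80% reduction reported for the system depends on the data and thresholds.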

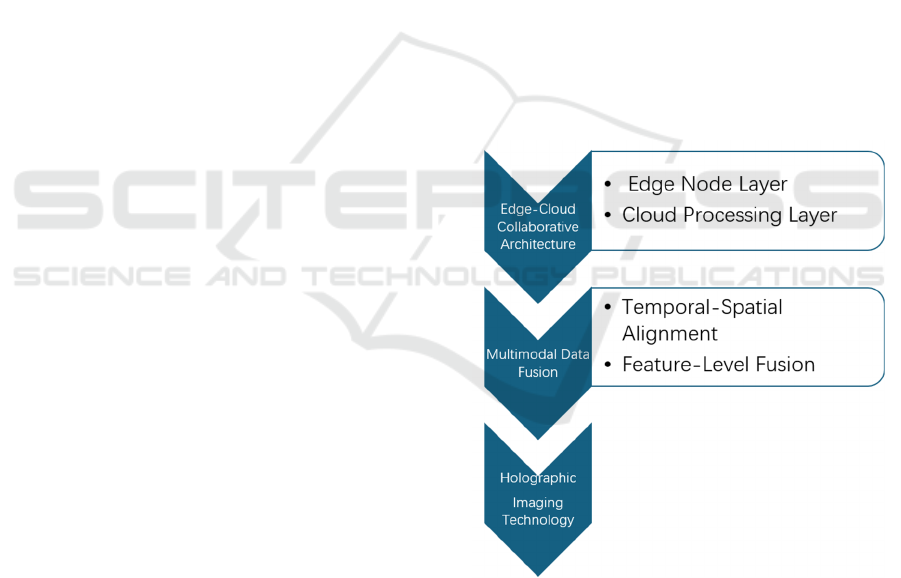

Figure 1. Technical Framework Diagram (Picture credit: Original).

In the cloud, AI algorithms (such as ResNet) and spatio-temporal alignment techniques are used to fuse and analyse the received structured data (temperature, humidity, etc.) and unstructured data (smoke images, video streams, etc.), generating a high-precision environmental state model.

EMITI 2025 - International Conference on Engineering Management, Information Technology and Intelligence


Finally, light-field projection technology maps the multi-dimensional data into dynamic holographic images that are presented at the user end. Inspectors can view and analyse environmental information intuitively through gesture interactions (zooming, rotating, etc.), completing the monitoring process and achieving efficient environmental monitoring. Figure 1 shows the technical framework.

3 MAIN CONTENT

3.1 Applications of Computer Vision Technology in the Environment

Typical applications cover several monitoring tasks. In forest fire detection, temperature and smoke sensors are installed on trees for fire prevention. In water pollution identification, chemical substance sensors placed in the water measure the degree of pollution of the water source. In climate prediction, humidity and light sensors are deployed in the soil and grass to help predict future climate changes. For fire detection, multiple monitoring points are set up in simulated forest environments and actual forest areas, where temperature sensors and smoke sensors record readings and cameras collect image data (The Innovation Geoscience Editorial Department, 2023), and the experimental results are recorded. Under different weather conditions (sunny, cloudy, light rain) and terrain (mountainous areas, plains), the evaluation checks whether the system's forest fire detection accuracy exceeds 90%. In addition, when a fire occurs, it is verified whether the system can respond within 20ms and issue an alarm in time, buying precious time for firefighting.
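The section states two acceptance criteria (detection accuracy above 90% under each weather and terrain condition, and an alarm within 20ms) without describing the evaluation procedure. A minimal bookkeeping sketch, assuming each trial is logged as a hypothetical `(condition, predicted_fire, actual_fire, latency_ms)` record, could check both criteria per condition:

```python
from collections import defaultdict


def evaluate(records, acc_target: float = 0.90, latency_budget_ms: float = 20.0) -> dict:
    """Per-condition accuracy and worst-case alarm latency against the stated targets.

    records: iterable of (condition, predicted_fire, actual_fire, latency_ms) tuples
    (a hypothetical log format, not one defined in the text).
    """
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "max_latency": 0.0})
    for condition, predicted, actual, latency_ms in records:
        s = stats[condition]
        s["n"] += 1
        s["correct"] += int(predicted == actual)
        s["max_latency"] = max(s["max_latency"], latency_ms)
    report = {}
    for condition, s in stats.items():
        accuracy = s["correct"] / s["n"]
        report[condition] = {
            "accuracy": accuracy,
            "meets_accuracy": accuracy > acc_target,                 # accuracy must exceed 90%
            "meets_latency": s["max_latency"] <= latency_budget_ms,  # alarm within 20ms
        }
    return report
```

In a real evaluation the records would come from the simulated and field deployments; the thresholds here simply mirror the figures stated in the text.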

3.2 Key Technical Analysis

3.2.1 Edge-Cloud Collaborative Architecture

Lightweight edge computing nodes (such as NVIDIA Jetson) are deployed at the sensor end to perform preliminary data filtering and compression, reducing the demand for transmission bandwidth. 80% of the original data is processed locally (for example, by image noise reduction and anomaly detection), and only the key data is uploaded to the cloud, keeping the latency within 20ms. The edge computing node plays a crucial role in bridging the sensor layer and the cloud layer of the system. Taking NVIDIA Jetson as an example, it combines a capable processor with a dedicated graphics processing unit (GPU) that offers strong parallel computing capabilities, enabling it to process various types of data efficiently. In the data collection stage, the raw data collected by sensors often contains a large amount of redundant information and noise. If uploaded directly to the cloud, it would not only occupy a large amount of network bandwidth but also increase the processing burden of the cloud, significantly increasing system latency (Wang et al., 2022).
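For camera streams, one simple way to upload only the key data is frame-difference gating: a frame is forwarded only when it differs sufficiently from the last forwarded frame. This is a swapped-in illustrative technique, not one the text specifies; the class name and the `threshold` (mean absolute grey-level difference) are hypothetical, and the resulting upload ratio depends on the scene rather than being fixed at the 80% reduction quoted above.

```python
import numpy as np


class FrameGate:
    """Hypothetical helper: forward a frame only if it differs enough from the last forwarded one."""

    def __init__(self, threshold: float = 8.0):
        self.threshold = threshold  # mean absolute grey-level difference that counts as "new"
        self.last_sent = None       # last frame actually uploaded
        self.seen = 0
        self.sent = 0

    def should_upload(self, frame: np.ndarray) -> bool:
        self.seen += 1
        f = frame.astype(np.float32)
        if self.last_sent is None or float(np.mean(np.abs(f - self.last_sent))) > self.threshold:
            self.last_sent = f
            self.sent += 1
            return True
        return False

    @property
    def upload_ratio(self) -> float:
        return self.sent / self.seen if self.seen else 0.0
```

Comparing against the last *forwarded* frame rather than the previous frame prevents slow drifts (for example, gradually thickening smoke) from slipping through as a series of small changes.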

3.2.2 Multi-Modal Data Fusion

The cloud receives structured data (such as temperature values) and unstructured data (such as fire smoke images captured by cameras) from different sensors and performs fusion analysis with AI algorithms. Among deep-learning methods, convolutional neural networks (CNNs) perform well in image feature extraction. For fire smoke images, CNNs can automatically learn the texture, color, shape, and other features of smoke, identifying the presence of smoke in the image and its concentration distribution. For structured temperature data, recurrent neural networks (RNNs) and their variants (such as LSTM and GRU) can effectively process time-series data, analyzing how temperature changes over time and capturing abnormal temperature fluctuations (Himeur et al., 2022).
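The image and temperature branches described above each produce evidence that must eventually be combined. A minimal late-fusion sketch is shown below. It assumes an image model (such as the CNN above) has already produced a smoke probability in [0, 1] for the current frame, and it substitutes a least-squares temperature slope for the recurrent model; the function names and the weights `w_img` and `slope_scale` are hypothetical and would need calibration.

```python
import numpy as np


def temperature_trend(temps, dt: float = 1.0) -> float:
    """Least-squares slope (degrees per second) over a window of readings sampled every dt seconds."""
    t = np.arange(len(temps)) * dt
    slope, _intercept = np.polyfit(t, np.asarray(temps, dtype=float), 1)
    return float(slope)


def fire_score(smoke_prob: float, temps, w_img: float = 0.6, slope_scale: float = 0.5) -> float:
    """Weighted late fusion of image evidence and temperature-trend evidence, in [0, 1]."""
    slope = temperature_trend(temps)
    # A rising temperature maps into (0, 1) through a saturating curve; flat or falling maps to 0.
    temp_score = 1.0 - np.exp(-max(slope, 0.0) / slope_scale)
    return w_img * smoke_prob + (1.0 - w_img) * float(temp_score)
```

Late fusion keeps the two branches independent, so either can be retrained or replaced; an early-fusion model that learns joint features is the usual alternative when enough labeled multimodal data are available.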

3.2.3 Holographic Imaging Technology

Holographic technology uses the GPU cluster of the cloud server to construct a 3D environmental model in real time. Holography records both the amplitude and the phase of the object light wave through the interference of a reference beam with the object beam, and reconstructs the image through light-wave diffraction, achieving stereoscopic display. Combined with the processed multi-modal data, it provides an intuitive visual interaction interface for environmental monitoring (Wang et al., 2023).
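To make the recording step concrete, the sketch below computes a digital hologram with the point-source method: each object point emits a spherical wave, the summed object wave O interferes with a plane reference wave R, and the recorded intensity is |O + R|^2. It is an illustration under stated assumptions (an on-axis reference wave, a hypothetical 8 µm pixel pitch, and a 532 nm wavelength), not the GPU pipeline the section refers to, and it omits the diffraction-based reconstruction step.

```python
import numpy as np


def point_source_hologram(points, n=256, pitch=8e-6, wavelength=532e-9, ref_amp=1.0):
    """Recorded intensity |O + R|^2 of a computer-generated hologram (point-source method).

    points: iterable of (x, y, z, amplitude) in metres, z = distance from the hologram plane.
    """
    k = 2 * np.pi / wavelength
    coords = (np.arange(n) - n / 2) * pitch  # hologram-plane sample positions
    X, Y = np.meshgrid(coords, coords)
    obj = np.zeros((n, n), dtype=np.complex128)
    for x, y, z, amp in points:
        r = np.sqrt((X - x) ** 2 + (Y - y) ** 2 + z ** 2)
        obj += amp * np.exp(1j * k * r) / r  # spherical wave from each object point
    obj /= np.abs(obj).max()                 # normalise object wave to unit peak amplitude
    ref = ref_amp * np.ones_like(obj)        # on-axis plane reference wave
    # |O + R|^2 = |O|^2 + |R|^2 + 2 Re(O R*): the cross term stores the object phase
    return np.abs(obj + ref) ** 2
```

The cross term is what lets an intensity-only recording preserve phase, which is the property the paragraph above relies on for stereoscopic reconstruction.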

3.3 Technical Challenges and Advantages

3.3.1 Precise Positioning Problem

During holographic interaction in the intelligent environmental monitoring system, the accuracy of mid-air touch is significantly lower than that of physical screens. When interacting with a physical screen, users receive clear physical feedback, whereas mid-air gesture interaction lacks this direct feedback mechanism, which limits positioning accuracy. In complex dynamic environments such as forest fire scenes, environmental factors (such as smoke and changing light) interfere with the sensor's recognition of user gestures, further increasing the positioning error. One way to address this problem is to incorporate visual Simultaneous Localization and Mapping (SLAM) technology. Visual SLAM builds a real-time map of the environment by identifying and tracking feature points, making it possible to determine the position of user gestures in space more accurately, reduce positioning errors, and improve interaction accuracy (Liu et al., 2021). This allows inspectors to operate the holographic image more accurately through gestures, achieving precise positioning and processing of problem areas.
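Once visual SLAM provides the camera pose in a shared map frame, a fingertip position measured in the camera frame can be expressed in that map frame with a rigid transform and then hit-tested against a region of the holographic scene. The sketch below assumes the pose (rotation `R_wc`, translation `t_wc`) comes from a SLAM system and the fingertip position from a hand tracker; both inputs, the function names, and the box-shaped target region are hypothetical.

```python
import numpy as np


def to_map_frame(p_cam, R_wc, t_wc):
    """Rigid transform p_world = R_wc @ p_cam + t_wc using the camera pose estimated by SLAM."""
    return (np.asarray(R_wc, dtype=float) @ np.asarray(p_cam, dtype=float)
            + np.asarray(t_wc, dtype=float))


def hits_region(p_world, box_min, box_max) -> bool:
    """True if the point lies inside an axis-aligned box (e.g. a selectable hologram region)."""
    p = np.asarray(p_world, dtype=float)
    return bool(np.all(p >= box_min) and np.all(p <= box_max))
```

Because the region is defined in the SLAM map frame, a selection stays attached to the same place in the scene even when the camera or the user moves.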

3.3.2 Spatio-Temporal Alignment Problem

In the time dimension, the data collection frequencies of different types of sensors vary significantly. For example, a temperature sensor may collect data once per second, while a camera can capture 30 frames per second. This difference in frequency leads to time asynchrony in the data. If the data is fused directly, the analysis results will be biased and unable to accurately reflect the true state of the environment. To solve the time-synchronization problem, methods such as dynamic interpolation or resampling are usually adopted. Dynamic interpolation inserts estimated values within each time interval according to the trend of the known data points; resampling converts the data of different sensors to a common sampling frequency, ensuring the accuracy of data fusion.
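Using the example rates above (a 1 Hz temperature sensor and a 30 frames-per-second camera), interpolation can be sketched as estimating a temperature value at each camera frame timestamp. This sketch assumes linear interpolation between neighbouring samples and that both devices share a synchronized clock; the function name is hypothetical, and the dynamic interpolation mentioned in the text may use a different scheme.

```python
import numpy as np


def align_to_frames(sensor_t, sensor_v, frame_t):
    """Linearly interpolate low-rate sensor samples onto camera frame timestamps (seconds)."""
    sensor_t = np.asarray(sensor_t, dtype=float)
    frame_t = np.asarray(frame_t, dtype=float)
    values = np.interp(frame_t, sensor_t, np.asarray(sensor_v, dtype=float))
    # np.interp clamps to the end values outside the sampled span; mark those frames as unknown.
    values[(frame_t < sensor_t[0]) | (frame_t > sensor_t[-1])] = np.nan
    return values
```

For example, `align_to_frames(sensor_t, sensor_v, np.arange(0, 3, 1 / 30))` would give one temperature estimate per frame over the first three seconds. Resampling in the other direction (for example, averaging the frames within each second) is the alternative when the lower rate is sufficient.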

In the space dimension, accuracy errors between the GPS coordinates of sensors and the geographic (GIS) information associated with images also cause problems. For example, in fire positioning, the GPS position of a sensor may deviate to some extent, and errors may also occur when the geographic information in images is processed and matched. The superposition of these two errors leads to deviations in fire positioning, affecting subsequent rescue decisions. To solve the spatial alignment problem, the GPS data of sensors and the GIS information of images must be accurately calibrated against each other. Technical means such as coordinate transformation and matching algorithms can reduce accuracy errors, ensuring the spatial consistency of data from different sources (Yang et al., 2022).
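One common coordinate-transformation approach is to georeference an image with ground control points (GCPs): landmarks whose pixel positions are identified in the image and whose positions in a local metric grid are known, for example from GPS coordinates projected to metres. The sketch below fits a 2D affine transform by least squares and reports the residual error at the control points. It assumes near-nadir imagery over roughly flat terrain, where an affine model is adequate; oblique camera views would need a projective or terrain-aware model. The function names are hypothetical, and this is not the calibration procedure of the cited work.

```python
import numpy as np


def fit_affine(src, dst):
    """Least-squares 2D affine map from src (N, 2) to dst (N, 2); needs >= 3 non-collinear points."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    A = np.hstack([src, np.ones((len(src), 1))])  # rows [x, y, 1]
    M, *_ = np.linalg.lstsq(A, dst, rcond=None)   # M has shape (3, 2)
    return M


def apply_affine(M, pts):
    """Map (N, 2) points with a fitted (3, 2) affine matrix."""
    pts = np.asarray(pts, dtype=float)
    return np.hstack([pts, np.ones((len(pts), 1))]) @ M


def rms_error(M, src, dst) -> float:
    """Root-mean-square residual (in dst units) of the fitted transform at the control points."""
    diff = apply_affine(M, src) - np.asarray(dst, dtype=float)
    return float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1))))
```

The residual gives a direct estimate of how far image-derived positions (such as a detected fire) may sit from the sensor grid, which is the deviation the paragraph above is concerned with.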

3.3.3 Advantages of Holographic Interaction

The cloud-based AI model has a strong gesture-parsing capability and can accurately identify the gestures of inspectors, such as pinch-to-zoom and swipe-to-rotate. When an inspector makes a pinch gesture, the model dynamically adjusts the zoom ratio of the holographic image according to a preset algorithm, allowing the inspector to view the environmental data of an area of interest in more detail. The swipe-to-rotate gesture changes the viewing angle of the holographic image, enabling the inspector to observe the environment from different angles for comprehensive monitoring. Through these gesture operations, inspectors can intuitively see information such as geographical location and terrain elevation differences, enhancing their perception of the environmental situation. In the forest fire monitoring scenario, when an inspector "grabs" the holographic fire source marker with a gesture, the cloud-based AI model recognizes the operation and quickly triggers drone inspection and fire-fighting commands, enabling efficient fire emergency handling and greatly improving monitoring and response efficiency.
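The pinch and swipe mappings described above can be sketched with hand-tracking measurements as input. The sketch assumes a hand tracker reports the thumb-index fingertip distance for a pinch and the horizontal hand displacement for a swipe; the zoom limits, rotation gain, and names are hypothetical and are not the preset algorithm the text refers to.

```python
from dataclasses import dataclass


@dataclass
class ViewState:
    zoom: float = 1.0     # magnification of the holographic scene
    yaw_deg: float = 0.0  # rotation about the vertical axis


def pinch_zoom(state: ViewState, d_start: float, d_now: float,
               min_zoom: float = 0.5, max_zoom: float = 8.0) -> ViewState:
    """Scale zoom by the ratio of current to initial thumb-index distance, clamped to limits."""
    state.zoom = min(max(state.zoom * d_now / d_start, min_zoom), max_zoom)
    return state


def swipe_rotate(state: ViewState, dx: float, deg_per_unit: float = 90.0) -> ViewState:
    """Rotate the view in proportion to horizontal hand displacement dx (hypothetical gain)."""
    state.yaw_deg = (state.yaw_deg + dx * deg_per_unit) % 360.0
    return state
```

Using the ratio of fingertip distances makes the zoom independent of how far the hand is from the sensor, and clamping keeps a noisy pinch reading from producing an unusable view.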

3.3.4 Advantages of Edge-Cloud Collaborative Architecture

In the edge-cloud collaborative architecture, edge nodes play a crucial role. Edge nodes such as NVIDIA Jetson have strong local data-processing capabilities and can process a large amount of raw data at the data source. By performing operations such as data filtering, noise reduction, and anomaly detection, edge nodes can process 80% of the original data locally and upload only the key data to the cloud. This greatly reduces the amount of data transmitted, which significantly reduces the demand for transmission bandwidth and keeps the end-to-end latency within 20ms, ensuring the real-time performance of the monitoring system.

In addition, edge nodes are highly independent and reliable. In the event of a network outage, edge nodes can still make local decisions based on locally stored data and preset decision-making logic. For example, when abnormal environmental parameters (such as excessive smoke concentration or a sudden temperature increase) are detected, edge nodes can immediately trigger an alarm to alert nearby personnel of potential danger even if communication with the cloud is unavailable. This buys precious time for emergency handling, enhances the reliability and stability of the entire monitoring system, and keeps environmental monitoring running continuously.
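The outage behaviour described above combines two mechanisms: threshold rules evaluated on the node itself, and a store-and-forward buffer that holds records until the cloud is reachable again. A minimal sketch, assuming simple fixed thresholds and caller-supplied `send` and `alarm` callbacks (all names and limits hypothetical), is:

```python
from collections import deque
from typing import Callable


class EdgeNode:
    """Hypothetical edge node: local alarm rules plus store-and-forward upload."""

    def __init__(self, smoke_limit: float, temp_rise_limit: float,
                 send: Callable[[dict], bool], alarm: Callable[[str], None],
                 max_buffer: int = 1000):
        self.smoke_limit = smoke_limit          # smoke concentration that triggers an alarm
        self.temp_rise_limit = temp_rise_limit  # max allowed rise between consecutive readings
        self.send = send                        # returns False when the cloud is unreachable
        self.alarm = alarm                      # drives a local siren or light
        self.buffer = deque(maxlen=max_buffer)  # oldest records are dropped if an outage is long
        self.last_temp = None

    def handle(self, smoke: float, temp: float) -> None:
        reasons = []
        if smoke > self.smoke_limit:
            reasons.append("smoke")
        if self.last_temp is not None and temp - self.last_temp > self.temp_rise_limit:
            reasons.append("temperature rise")
        self.last_temp = temp
        for reason in reasons:
            self.alarm(reason)  # decided locally, no cloud round trip needed
        self.buffer.append({"smoke": smoke, "temp": temp, "alarms": reasons})
        self.flush()

    def flush(self) -> None:
        """Upload buffered records in order; stop at the first failure and retry on the next call."""
        while self.buffer and self.send(self.buffer[0]):
            self.buffer.popleft()
```

Raising the alarm before attempting any upload is what keeps the local response independent of network state; the bounded buffer trades completeness of the cloud record for predictable memory use on the node.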

3.4 Analysis of Future Application Scenarios

In automated agriculture, the system can be used for greenhouse monitoring, reducing the number of manual inspections: crop growth can be tracked by sensors whose data are analyzed in the cloud. In urban flood warning, supervisors can use holographic displays of sensor data to respond more quickly to impending flood disasters and make reasonable arrangements. In the face of forest fires, data from smoke and temperature sensors are transmitted to inspectors via the cloud, and inspectors can use holographic technology to quickly identify the location of the fire and prevent its spread.

4 CONCLUSION

This study aims to construct a low-latency, high-precision intelligent environmental monitoring system through multi-modal data fusion, an edge-cloud collaborative architecture, and holographic imaging technology, addressing the bottlenecks of traditional methods in real-time performance, interaction efficiency, and data integration, and providing scientific tools and low-cost, high-reliability technical solutions for ecological protection and disaster prevention and control.

However, the system also has limitations. For example, the accuracy of holographic interaction is insufficient, the resolution of tactile feedback is low, system deployment and maintenance costs are high, and data processing and real-time performance need continued improvement.

In the future, algorithm optimization and hardware innovation may improve accuracy, reduce latency, enhance sensing, and make models lighter; with policy support, the system could achieve large-scale implementation and play an important role in ecological protection.

REFERENCES

Chen, H. C.: ‘Research on Marker-less Gesture Recognition Technology Based on Computer Vision Technology.’ Electronic Components and Information Technology, 2024, 8(10): 254-256

Han, Q. J.: ‘Application of Computer Vision Technology in Crop Pest Monitoring.’ Southern Agricultural Machinery, 2024, 55(S1): 58-60+77

Himeur, Y., Rimal, B., Tiwary, A., & Amira, A.: ‘Using artificial intelligence and data fusion for environmental monitoring: A review and future perspectives.’ Information Fusion, 2022, 86-87: 44-75

Just Go for It Now.: ‘Literature Summary of 3D Detection Based on Multi-Sensor Fusion (I).’ Artificial Intelligence and Robot Technology, 2024, 28(6): 101-115

Liu, J., Liu, R., Chen, K., Zhang, J., & Guo, D.: ‘Collaborative Visual Inertial SLAM for Multiple Smart Phones.’ arXiv preprint arXiv:2106.12186, 2021

The Innovation Geoscience Editorial Department.: ‘Aerospace heritage: Footprints of human civilization on and beyond Earth.’ Innovation Geoscience (English), 2024, 7(2): 200-215

The Innovation Geoscience Editorial Department.: ‘Carbon-negative transition by utilizing overlooked carbon in waste landfills.’ Innovation Geoscience (English), 2023, 6(4): 150-165

Wang, D., Li, Z. S., Zheng, Y. W., Li, N. N., Li, Y. L., & Wang, Q. H.: ‘High-quality holographic 3D display system based on virtual splicing of spatial light modulator.’ ACS Photonics, 2023, 10(7): 2297-2307

Wang, J., Jian, W., & Fu, B. C.: ‘Edge-to-cloud collaborative for QoS guarantee of smart cities.’ IFAC-PapersOnLine, 2022, 55(11): 60-65

Yang, J., Lee, T. Y., Lee, W. T., & Xu, L.: ‘A design and application of municipal service platform based on cloud-edge collaboration for smart cities.’ Sensors (Basel), 2022
