Brain‑Computer Interface Signal Decoding Technology Based on Deep Learning

Ruotian Luo

School of Engineering, Ulster University, Belfast, U.K.

Keywords: Deep Learning, EEG Signal Decoding, Brain‑Computer Interface (BCI).

Abstract: With a special emphasis on the potential of sophisticated classification algorithms to improve overall system

performance, this review article offers a thorough examination of the most recent developments in brain-

computer interface (BCI) systems. The paper examines various methodologies, including adaptive learning,

deep learning, and hybrid models, and evaluates their impact on decoding complex brain signals. Key findings

highlight the superior efficacy of deep learning approaches such as LSTM-FCN and 1D CNN in improving

accuracy and robustness. Transfer learning combined with advanced CSP algorithms also shows significant

improvements in handling limited training data. Furthermore, the integration of deep learning with the

EEG2Code method achieves remarkable information transfer rates. These advancements demonstrate

transformative potential for BCI applications in healthcare, assistive technologies, and human-computer

interaction. However, challenges remain in aligning algorithmic complexity with brain signal characteristics

and ensuring practical deployment for end-users. Future research should focus on optimizing algorithms for

real-time functionality, personalizing BCI systems, and exploring novel decoding modalities to further

advance this transformative field.

1 INTRODUCTION

The emerging field of brain-computer interfaces

(BCIs) aims to establish a direct communication link

between the human brain and external devices. This

innovative technology holds the promise of

transforming human interaction with the

environment, especially for individuals with motor

impairments. BCIs work by decoding the electrical

activity of the brain, often measured through

electroencephalography (EEG), and translating it into

control signals for various applications, such as

assistive devices, gaming, and even complex tasks

like continuous pursuit.

The development of BCI systems follows a multi-

stage process, starting with data acquisition where

raw brain signals are captured. These signals are then

analyzed, significant features are extracted, and a

classifier maps those features to the user's intent.

The efficacy of a BCI system depends on the accurate

interpretation of brain signals, which include

electroencephalograms and functional near-infrared

spectroscopy (fNIRS) data. Recent developments in

EEG-based BCI technology have shown considerable

promise.

As reviewed by Värbu et al. (Värbu et al., 2022),

EEG-BCI systems interpret brain signals to facilitate

interactions between the brain and external devices.

Initially developed for medical purposes to aid

patients in regaining independence, these systems

have expanded into non-medical domains, enhancing

efficiency and personal development for healthy

individuals. Over the years, the field has seen the

evolution of classification algorithms from traditional

machine learning techniques, such as linear

discriminant analysis (LDA), to more advanced deep

learning models like convolutional neural networks

(CNNs).

Luo, R.

Brain-Computer Interface Signal Decoding Technology Based on Deep Learning.

DOI: 10.5220/0014300300004933

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Biomedical Engineering and Food Science (BEFS 2025), pages 27-32

ISBN: 978-989-758-789-4

Proceedings Copyright © 2026 by SCITEPRESS – Science and Technology Publications, Lda.

The first step in the multi-stage process of developing BCI systems is data acquisition, which involves recording unprocessed brain signals. These signals are then analyzed, significant features are extracted, and a classifier maps those features to the user's intent. The effectiveness of BCI systems depends on the accurate interpretation of cerebral signals such as electroencephalograms and functional near-infrared spectroscopy data. Classification algorithms in the field have progressed over time from traditional machine learning methods such as linear discriminant analysis (LDA) to more advanced deep learning models such as convolutional neural networks (CNNs). CNNs are frequently used because they can learn significant features directly from raw EEG data, eliminating the need for costly preprocessing and laborious feature engineering (Hossain et al., 2023). As a result, performance has improved in a number of BCI applications, such as driver attention monitoring, emotion recognition, and motor imagery classification.
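To make the hand-crafted feature-extraction stage of this pipeline concrete, the following is a minimal sketch of one common choice, log band power per channel. The synthetic two-channel epoch, the 128 Hz sampling rate, the band limits, and the function names `band_power` and `extract_features` are illustrative assumptions and are not taken from any of the reviewed studies.

```python
import numpy as np

def band_power(epoch, fs, band):
    """Mean spectral power of each channel inside a frequency band.

    epoch: array of shape (n_channels, n_samples); fs: sampling rate in Hz;
    band: (low_hz, high_hz).
    """
    freqs = np.fft.rfftfreq(epoch.shape[1], d=1.0 / fs)
    psd = np.abs(np.fft.rfft(epoch, axis=1)) ** 2 / epoch.shape[1]
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return psd[:, mask].mean(axis=1)

def extract_features(epoch, fs, bands):
    """Concatenate log band powers over all bands into one feature vector."""
    return np.concatenate([np.log(band_power(epoch, fs, b)) for b in bands])

# Synthetic stand-in for an acquired epoch: 2 channels, 2 s at 128 Hz (assumed).
fs = 128
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(0)
epoch = np.vstack([
    np.sin(2 * np.pi * 10 * t),   # channel 0: 10 Hz (alpha-band) rhythm
    np.sin(2 * np.pi * 20 * t),   # channel 1: 20 Hz (beta-band) rhythm
]) + 0.1 * rng.standard_normal((2, t.size))

bands = [(8, 12), (18, 26)]       # illustrative alpha and beta bands
features = extract_features(epoch, fs, bands)  # order: band-major, then channel
```

A feature vector of this kind would then be passed to a classifier; a CNN trained on raw EEG learns comparable filters itself instead of relying on fixed bands.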

2 TWO NEW DEVELOPMENTS IN BRAIN-COMPUTER INTERFACE SYSTEMS: AN EMPHASIS ON MACHINE LEARNING TECHNIQUES AND CLASSIFICATION ALGORITHMS

2.1 Overview of Classification Algorithms in BCIs

In their thorough evaluation and analysis of brain-

computer interface (BCI) systems, Mansoor et al.

highlight the features and improvements of these

systems utilizing a variety of classification methods

(Mansoor et al., 2020). To improve the accuracy

and reliability of BCI systems, the authors

investigate the application of deep learning, transfer

learning, adaptive classifiers, matrix and tensor

classifiers, and other methods. They offer an

organized summary of recent techniques for feature

extraction, data collection, and categorization.

The study highlights the effectiveness of adaptive

classifiers in acquiring accurate results compared to

static classification techniques. It also emphasizes the

potential of deep learning techniques, particularly in

achieving faster processing speeds and higher

classification accuracy, for real-time BCI

implementation. The authors compare different

classification algorithms, noting the trade-offs

between performance and computational

requirements. For instance, linear discriminant

analysis (LDA) is highlighted for its suitability in

online BCI systems due to its low computational

demand, despite its linearity potentially providing

poor results on complex nonlinear EEG data.
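The low computational demand of LDA can be seen in a minimal two-class sketch: training reduces to class means and one pooled covariance, and each prediction is a single dot product. The synthetic Gaussian features, the equal-prior assumption, and the function names `fit_lda` and `predict_lda` are illustrative and do not come from Mansoor et al.

```python
import numpy as np

def fit_lda(X, y):
    """Fit a two-class LDA; returns weight vector w and bias b."""
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Pooled within-class covariance shared by both classes.
    S = ((X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)) / (len(X) - 2)
    w = np.linalg.solve(S, m1 - m0)
    b = -0.5 * w @ (m0 + m1)      # equal class priors assumed
    return w, b

def predict_lda(X, w, b):
    """Classification is one dot product and one comparison per sample."""
    return (X @ w + b > 0).astype(int)

# Synthetic, linearly separable stand-in for extracted EEG features.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (100, 4)), rng.normal(2.0, 1.0, (100, 4))])
y = np.repeat([0, 1], 100)
w, b = fit_lda(X, y)
accuracy = (predict_lda(X, w, b) == y).mean()
```

Because the decision boundary is a hyperplane, the same sketch would perform poorly on classes that are not linearly separable, which is the limitation noted above.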

The paper concludes that while artificial neural

networks (ANN) offer high accuracy for non-invasive

BCI techniques, their complex architecture may not

always align with the inherent characteristics of brain

signals. The authors suggest that further research is

needed to enhance accuracy for healthcare

applications and propose that future BCI systems

could support multiple platforms and be controlled via

smartphones for fail-safe mechanisms. The study's

conclusions encourage the creation of more precise

and approachable BCI systems, which could

completely transform how people utilize assistive

technology and technology in general.

2.2 Advanced Machine Learning Approaches

An innovative machine-learning method for brain-

computer interfacing (BCI) was presented by Zhihan

Lv et al. with the goal of increasing the classification

accuracy of electroencephalogram (EEG) signals (Lv

et al., 2021). To create a data categorization model,

the authors integrate an enhanced Common Spatial

Pattern (CSP) method with a transfer learning

approach. A time-domain filter is incorporated into

the enhanced CSP algorithm to better capture the

temporal properties of EEG signals. The transfer

learning algorithm is used to apply knowledge gained

from one task to solve another related task, which is

particularly useful in BCI where data often comes

from different individuals with varying data

distributions.
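For orientation, the sketch below shows the conventional two-class CSP on which such enhancements build. It is not the enhanced algorithm of Lv et al.: the time-domain filter and the transfer learning step are omitted, and the synthetic trials, the channel scalings, and the function names `csp_filters` and `log_var_features` are assumptions made for illustration.

```python
import numpy as np

def csp_filters(trials_a, trials_b, n_pairs=1):
    """Plain CSP spatial filters for two classes.

    trials_*: arrays of shape (n_trials, n_channels, n_samples).
    Returns an array of shape (2 * n_pairs, n_channels).
    """
    def mean_cov(trials):
        # Trace-normalized spatial covariance, averaged over trials.
        return np.mean([x @ x.T / np.trace(x @ x.T) for x in trials], axis=0)

    Ca, Cb = mean_cov(trials_a), mean_cov(trials_b)
    # Whiten the composite covariance Ca + Cb.
    evals, evecs = np.linalg.eigh(Ca + Cb)
    P = np.diag(evals ** -0.5) @ evecs.T
    # In the whitened space, eigenvalues near 1 mark directions with high
    # variance for class A, and eigenvalues near 0 mark class B.
    d, U = np.linalg.eigh(P @ Ca @ P.T)
    W = U.T @ P
    order = np.argsort(d)
    picks = np.concatenate([order[:n_pairs], order[-n_pairs:]])
    return W[picks]

def log_var_features(trials, W):
    """Normalized log-variance of each spatially filtered signal."""
    Z = np.einsum("fc,tcs->tfs", W, trials)
    var = Z.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))

# Synthetic trials: each class has one channel with larger variance.
rng = np.random.default_rng(0)
scale_a = np.array([3.0, 1.0, 1.0, 1.0])[:, None]   # class A: channel 0 is strong
scale_b = np.array([1.0, 1.0, 1.0, 3.0])[:, None]   # class B: channel 3 is strong
trials_a = rng.standard_normal((30, 4, 200)) * scale_a
trials_b = rng.standard_normal((30, 4, 200)) * scale_b
W = csp_filters(trials_a, trials_b)
fa, fb = log_var_features(trials_a, W), log_var_features(trials_b, W)
```

The first returned filter favors class B and the last favors class A, so the two log-variance features move in opposite directions for the two classes and can be fed to a simple classifier.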

The effectiveness of the proposed algorithms,

Adaptive Composite Common Spatial Pattern

(ACCSP) and Self Adaptive Common Spatial Pattern

(SACSP), is verified using a public EEG dataset. The

results demonstrate that both actual and imagined

movements show higher classification accuracy when

comparing left and right-hand movements at different

speeds versus same speeds. Traditional algorithms

achieved a baseline accuracy of 76.62%, while the

ACCSP and SACSP algorithms improved this to

83.58%, a gain of 6.96 percentage points. Notably, the

ACCSP method's classification accuracy outperforms

the conventional CSP algorithm when the training

sample size is modest (e.g., 10 samples).
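The size of this improvement can be read in two ways. Using only the two accuracies quoted above, the absolute and relative gains work out as follows:

$$
\Delta_{\text{abs}} = 83.58\% - 76.62\% = 6.96 \text{ percentage points}, \qquad
\Delta_{\text{rel}} = \frac{83.58 - 76.62}{76.62} \approx 9.08\%.
$$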

The work demonstrates that integrating transfer

learning with an enhanced CSP algorithm can

substantially improve the classification performance of

BCI systems. This is especially important since it

tackles the issues of lengthy training periods and poor

classification accuracy in BCI, which are crucial for

real-world uses including intelligent perception,

assistive medicine, and human-computer interaction.

The study suggests that future BCI technology may

further improve applications in gesture tracking and


video gaming by utilizing these cutting-edge

machine-learning approaches, based on the improved

classification accuracy shown in this study.

2.3 Deep Learning Models for EEG Signal Classification

Elsayed et al. provide a valuable deep learning

approach for brain-computer interaction (BCI)

systems, specifically focusing on motor execution

(ME) electroencephalogram (EEG) signal

classification (Elsayed et al., 2021). The authors

propose a User-Independent Hybrid Brain-Computer

Interface (UIHBCI) model for identifying data from

fourteen channels of the electroencephalogram

(EEG) which capture the brain reactions of nine

individuals. The model comprises three steps: signal

processing, feature extraction with Independent

Component Analysis and the Automatic EEG artifact

Detector (ICA-ADJUST), and Deep Belief Network

(DBN) classification.

The study employs two assessment models—

Audio/Video (A/V) and Male/Female (M/F)—to

identify relevant multisensory elements of

multichannel EEG that suggest certain mental

behaviors. When applied independently to these two

models, the DBN outperforms other state-of-the-art

approaches such as Linear Discriminant Analysis

(LDA), Support Vector Machine (SVM), the Hybrid

Steady-State Visual Evoked Potential Rapid Serial

Visual Presentation Brain-Computer Interface

(Hybrid SSVEP-RSVP BCI), and the Brain-Computer

Interface for Lower-Limb Motor Recovery (BCI LLMR),

yielding overall classification rates of 94.44% for

the A/V model and 94.44% for the M/F model.

The outcomes demonstrate the efficacy of the

integration of signal processing, feature extraction,

and DBN classification in BCI systems by showing

that the suggested UIHBCI model is successful in

classifying ME EEG signals.

2.4 The Comparative Study of Deep Learning and Machine Learning for fNIRS-BCI

Lu et al. compared deep learning with conventional machine learning methods for decoding brain signals recorded with functional near-infrared spectroscopy (fNIRS) in the context of brain-computer interfaces (BCI) (Lu et al., 2020). The purpose of the study is to determine which approach processes fNIRS data from mental arithmetic tasks more effectively. The deep learning technique, a long short-term memory fully convolutional network (LSTM-FCN), was assessed alongside traditional machine learning techniques: linear discriminant analysis (LDA), decision trees, support vector machines (SVM), K-Nearest Neighbor (KNN), and ensemble methods.

Figure 1: Mechanism of LSTM-FCN for fNIRS-BCI Data (Lu et al., 2020).

The fNIRS-BCI dataset used in the study was

collected from eight subjects performing mental

arithmetic tasks. Figure 1 depicts the LSTM-FCN

architecture for fNIRS-BCI data. The data first

underwent preprocessing to reduce physiological

noise. Subsequently, feature extraction was

performed to identify relevant channels and time

periods. The classical machine learning methods

required strict feature extraction and screening, while

the LSTM-FCN model was designed to automatically

learn features from the raw data.

According to the results, SVM outperformed the other conventional approaches, achieving an average accuracy of 91.0% in the subject-dependent setting and 83.0% in the subject-independent setting. However, with an accuracy of 95.3% in the subject-dependent setting and 97.1% in the subject-independent setting, the deep learning technique LSTM-FCN considerably surpassed the traditional methods. Notably, LSTM-FCN reached 100% accuracy for several participants across different network dropout rates, which the authors take as evidence of its stability and efficacy in decoding fNIRS-BCI data.

The study concludes that deep

learning, specifically the LSTM-FCN model, is a

more viable method for analyzing fNIRS-BCI data

than traditional machine learning techniques because

of its higher accuracy and capacity to automatically

learn features. This finding is significant as it

highlights the potential of deep learning to handle

complex and dynamic brain signal data, which is

crucial for advancing BCI applications in areas such

as assistive technologies and cognitive research.


2.5 Deep Learning for EEG-Based Mental State Decoding

In order to decode mental states from

electroencephalogram (EEG) data in non-invasive

brain-computer interfaces (BCI), Dongdong Zhang

and colleagues created a deep learning-based method

(Zhang et al., 2019). The study addresses the

challenge of accurately predicting mental states using

EEG, which has traditionally suffered from limited

accuracy and generalization. The authors propose a

novel 1D convolutional neural network (CNN)

architecture that uses filters of different lengths to

extract features from different EEG frequency bands.

The goal of this strategy is to improve both feature

extraction and prediction accuracy.
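The idea of parallel filters with different lengths can be illustrated with a small NumPy sketch. It uses fixed averaging kernels as hypothetical stand-ins for learned convolution weights, so it shows only the multi-scale structure and is not the architecture of Zhang et al.; the kernel lengths, the sampling rate, and the function names are assumptions.

```python
import numpy as np

def multi_scale_conv1d(signal, kernels):
    """Apply 1D convolutions with kernels of different lengths, then ReLU.

    signal: array of shape (n_samples,); kernels: list of 1D arrays.
    Returns an array of shape (len(kernels), n_samples).
    """
    branches = [np.convolve(signal, k, mode="same") for k in kernels]
    return np.maximum(np.stack(branches), 0.0)

def global_average_pool(feature_maps):
    """Collapse the time axis so each branch yields one feature."""
    return feature_maps.mean(axis=1)

# Synthetic signal mixing a slow (4 Hz) and a fast (30 Hz) rhythm at 128 Hz.
fs = 128
t = np.arange(4 * fs) / fs
signal = np.sin(2 * np.pi * 4 * t) + np.sin(2 * np.pi * 30 * t)

# Hypothetical fixed kernels standing in for learned filters: the longer the
# averaging kernel, the more strongly it suppresses fast rhythms.
kernels = [np.ones(n) / n for n in (3, 9, 27)]
maps = multi_scale_conv1d(signal, kernels)
features = global_average_pool(maps)
```

In a trained network each branch would hold many learned kernels, and the branch outputs would be concatenated and passed to deeper layers rather than pooled immediately.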

The researchers used a dataset of 25 hours of EEG recordings from five participants performing a low-intensity control task. The data were bandpass filtered and standardized in a way that preserves inter-channel correlations. The proposed 1D CNN adopts a ResNet-like structure, which makes it possible to train a relatively deep network for robust feature extraction. The model's performance was evaluated using five-fold cross-validation.
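A minimal sketch of five-fold cross-validation follows, assuming shuffled folds of near-equal size. The nearest-class-mean classifier is only a placeholder for exercising the loop, and the function names and synthetic data are illustrative; how the cited study assigned EEG segments to folds is not described here.

```python
import numpy as np

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, test_idx

def cross_validate(X, y, fit, predict, k=5):
    """Mean held-out accuracy of a classifier over k folds."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(X), k):
        model = fit(X[train_idx], y[train_idx])
        scores.append(np.mean(predict(model, X[test_idx]) == y[test_idx]))
    return float(np.mean(scores))

# Placeholder nearest-class-mean classifier, used only to exercise the loop.
def fit_centroids(X, y):
    return {c: X[y == c].mean(axis=0) for c in np.unique(y)}

def predict_centroids(model, X):
    classes = np.array(list(model))
    dists = np.stack([np.linalg.norm(X - model[c], axis=1) for c in classes])
    return classes[dists.argmin(axis=0)]

rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 1, (50, 3)), rng.normal(3, 1, (50, 3))])
y = np.repeat([0, 1], 50)
mean_acc = cross_validate(X, y, fit_centroids, predict_centroids)
```

Each sample is held out exactly once, so the reported score is an average over five models that never saw their own test fold.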

The results demonstrate significant improvements

over traditional prediction methods such as KNN and

SVM. The proposed model achieved an accuracy of

96.40% in predicting mental states, outperforming

traditional algorithms and other published deep

learning architectures. In the more challenging

common-subject paradigm, the proposed model

achieved a prediction accuracy of 53.22%, surpassing

the performance of existing methods including EEGNet,

FBCSP ShallowNet, and DeepConvNet.

The study's findings highlight the effectiveness of

using 1D convolutional neural networks for EEG

feature extraction and mental state prediction. This

technique offers a promising way to improve both the

accuracy and the generalization of BCI systems,

potentially broadening their application to

monitoring mental states in a variety of real-world

situations.

2.6 Continuous Pursuit Tasks in BCI

Forenzo et al. examined the use of deep learning

(DL)-based decoders for continuous pursuit (CP) tasks

in noninvasive brain-computer interfaces (BCI) based

on electroencephalography (EEG)

(Forenzo et al., 2024). Using motor imagery, users

perform CP tasks by tracking a moving target in 2D

space, a process that requires dynamic and continuous

control. The researchers developed a novel labeling

system to enable supervised learning with CP data,

which lacks clear labels for traditional supervised

learning methods. They trained DL-based decoders

using two architectures: EEGNet and a modified

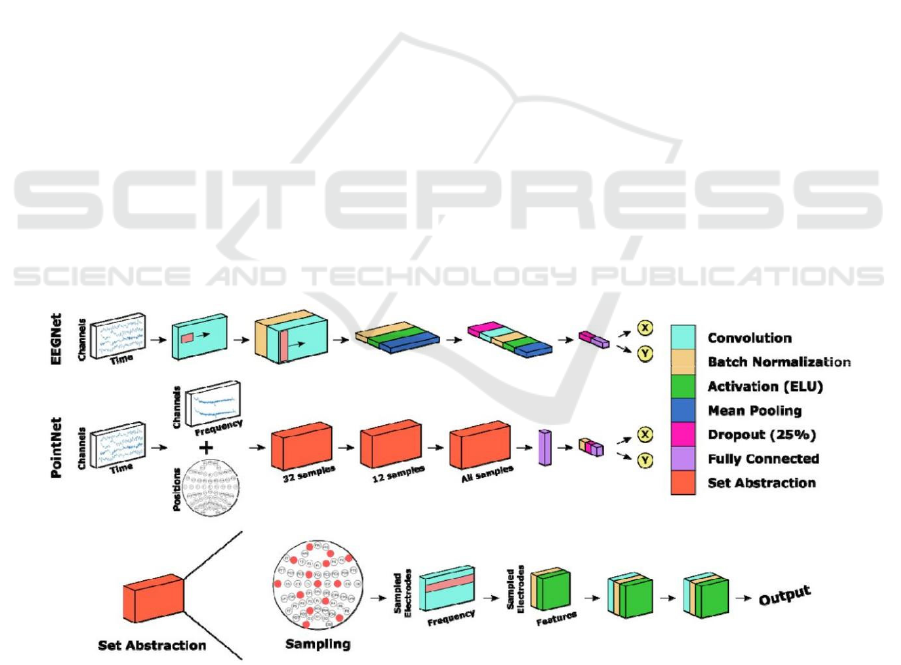

PointNet, shown as Fig2. The performance of these

DL models was evaluated over multiple online

sessions with 28 human participants.

Figure 2: The Implementing of EEGNet and PointNet Architecture (Forenzo et al., 2024).

The results showed significant improvements in the performance of the DL-based models as more training data became available. By the final session, both DL models outperformed a standard autoregressive decoder. Specifically, the normalized mean squared error (NMSE) between the cursor and target dropped from its initial value to 0.43 for EEGNet and 0.56 for PointNet over the course of the sessions. Furthermore, the correlation between the target and cursor positions grew across sessions, with EEGNet reaching a greater correlation by the last session. The study also investigated transfer learning and mid-session recalibration to enhance performance. Although transfer learning failed to significantly improve early-session performance, mid-session recalibration showed promising benefits in several cases.
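The two tracking metrics mentioned here can be sketched as follows. The normalization used for NMSE (mean squared error divided by the summed variance of the target trajectory) is an assumption, since the exact definition used by Forenzo et al. is not given in this review; the synthetic trajectories and function names are likewise illustrative.

```python
import numpy as np

def nmse(cursor, target):
    """MSE between 2D trajectories divided by target variance (assumed normalization)."""
    err = np.mean(np.sum((cursor - target) ** 2, axis=1))
    return err / np.sum(target.var(axis=0))

def mean_axis_correlation(cursor, target):
    """Pearson correlation per axis, averaged over the x and y axes."""
    return float(np.mean([np.corrcoef(cursor[:, i], target[:, i])[0, 1]
                          for i in range(target.shape[1])]))

# Synthetic 2D target path and an imperfect cursor that follows it with noise.
t = np.linspace(0, 10, 500)
target = np.column_stack([np.sin(t), np.cos(0.5 * t)])
rng = np.random.default_rng(0)
cursor = 0.8 * target + 0.1 * rng.standard_normal(target.shape)

tracking_error = nmse(cursor, target)
tracking_corr = mean_axis_correlation(cursor, target)
```

Under this definition, perfect tracking gives an NMSE of 0, so lower values and higher correlations both indicate better cursor control.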

Overall, the study shows that DL-based decoders perform well in demanding BCI tasks such as CP, indicating that they may help extend BCI applications to practical settings and improve quality of life for both healthy users and people with motor impairments.

2.7 Deep Learning for High-Speed BCI Systems

Nagel and Spüler combined deep learning with the EEG2Code method to predict properties of the visual stimulus from EEG data (Nagel & Spüler, 2019). The authors describe the resulting system as by far the fastest BCI reported: they show that a subject can use the method in an online BCI to reach an information transfer rate (ITR) of 1237 bits per minute. In a simulated online experiment with 500,000 targets, the best subject could distinguish between the 500,000 distinct stimuli with 100% accuracy using just 2 seconds of EEG data.
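For readers unfamiliar with ITR, the sketch below implements the widely used Wolpaw definition, which combines the number of targets, the selection accuracy, and the time per selection. Whether the cited study computed its figures with exactly this form is not stated in this review, so the code is a general illustration; the function names are hypothetical.

```python
import math

def bits_per_selection(n_targets, accuracy):
    """Wolpaw information per selection, in bits."""
    if n_targets < 2 or not 0.0 < accuracy <= 1.0:
        raise ValueError("need n_targets >= 2 and 0 < accuracy <= 1")
    bits = math.log2(n_targets) + accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        # Errors are assumed to be spread evenly over the remaining targets.
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    return bits

def itr_bits_per_minute(n_targets, accuracy, seconds_per_selection):
    """Information transfer rate in bits per minute."""
    return bits_per_selection(n_targets, accuracy) * 60.0 / seconds_per_selection
```

The formula makes the trade-off explicit: ITR grows with more targets and higher accuracy, and shrinks as each selection takes longer.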

The study uses deep learning, namely a

convolutional neural network (CNN), with the

EEG2Code approach to generate a nonlinear model

that forecasts random stimulation patterns according

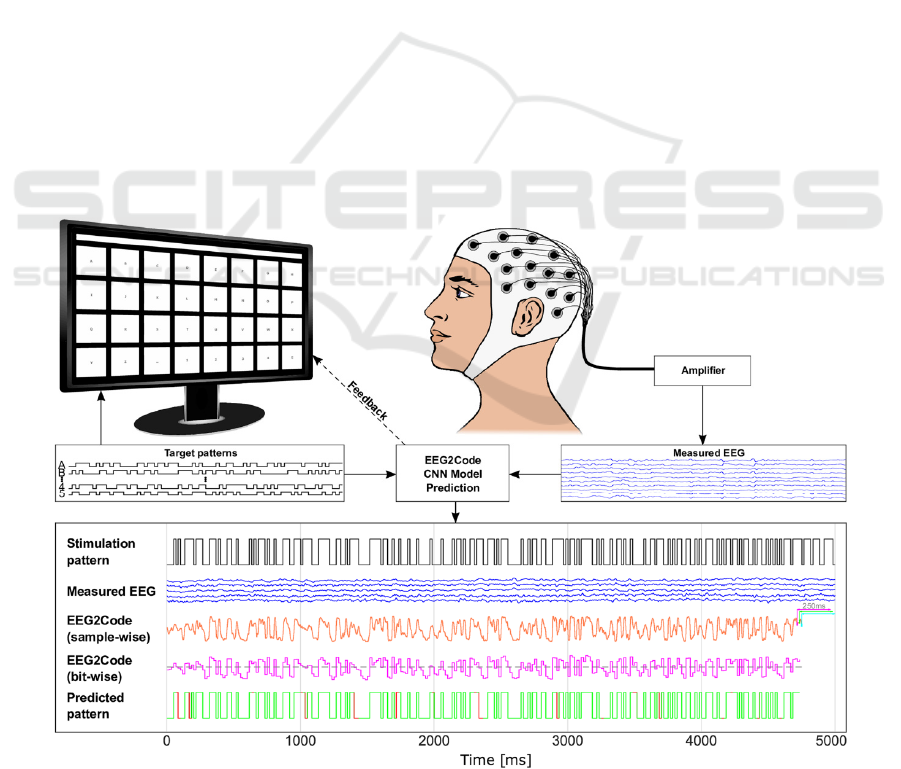

to VEP feedbacks. Figure 3 depicts a demonstration

of the EEG2Code CNN model. The authors suggest

that EEG signals include more information than is

commonly supposed. However, they also mention a

ceiling effect, which suggests that, not less than for

BCIs that rely on stimuli that are visual, more

powerful decoding approaches may not necessarily

result in greater BCI control.

The results highlight a significant improvement in

classification accuracy and ITR when using deep

learning compared to the previous ridge regression

model. The technique increased the ITR from 232

bits/min to 701 bits/min, a 202% improvement, while

also improving the pattern prediction accuracy from

64.6% to 74.9%. In a passive BCI environment, the

top subject obtained an online ITR of 1237 bits/min.

The system reached an average utility rate of 175

bits/min for asynchronous self-paced BCI spelling,

Users can create an average of 35 error-free letters

each minute.

Figure 3: Example of the EEG2Code CNN Pattern Prediction (Nagel & Spüler, 2019).

Brain-Computer Interface Signal Decoding Technology Based on Deep Learning

31

The authors conclude that although their method can extract a large amount of information from EEG signals, the maximum number of targets and the minimum trial duration still limit real BCI control. They highlight two important points: the need to ensure that BCI systems remain practical for end-user applications, and the difference between brain signal decoding performance and actual BCI control performance.

3 CONCLUSION

This review has examined the substantial advances in

brain-computer interface (BCI) systems made possible

by the judicious application of advanced

classification algorithms. Among

various advances, deep learning techniques such as

LSTM-FCN and 1D CNN have demonstrated

superior capabilities in decoding intricate brain

signals, offering better accuracy and robustness

compared to traditional methods. The symbiotic

relationship between transfer learning and enhanced

CSP algorithms has also been validated, particularly

in overcoming the challenges of limited training data.

Building upon these advances, the integration of deep

learning with the EEG2Code method has achieved

unprecedented information transfer rates, revealing

the untapped potential of EEG signals in BCI

applications. Despite these advancements, the

alignment of algorithmic complexity with brain

signal characteristics and the practical deployment of

BCI systems for end-users remain ongoing

challenges. As highlighted in the review by Samal

and Hashmi (Samal & Hashmi, 2024), the continuous

advancements in non-invasive and portable sensor

technologies, such as EEG-based BCIs, are expected

to significantly enhance the precision and real-time

capabilities of these systems. As BCI technology evolves, it shows

great promise in revolutionizing multiple fields, from

assistive healthcare to human-computer interaction

and neuroscience research, heralding a new era of

more intuitive and effective BCI systems.

REFERENCES

Elsayed, N. E., Tolba, A. S., Rashad, M. Z., Belal, T., & Sarhan, S. 2021. A deep learning approach for brain computer interaction-motor execution EEG signal classification. IEEE Access, 9, 101513-101529.

Forenzo, D., Zhu, H., Shanahan, J., Lim, J., & He, B. 2024. Continuous tracking using deep learning-based decoding for noninvasive brain–computer interface. PNAS Nexus, 3(4), pgae145.

Hossain, K. M., Islam, M. A., Hossain, S., Nijholt, A., & Ahad, M. A. R. 2023. Status of deep learning for EEG-based brain–computer interface applications. Frontiers in Computational Neuroscience, 16, 1006763.

Lu, J., Yan, H., Chang, C., & Wang, N. 2020. Comparison of machine learning and deep learning approaches for decoding brain computer interface: An fNIRS study. In Shi, Z., Vadera, S., & Chang, E. (eds), Intelligent Information Processing X. IIP 2020, October 2020. Cham: Springer.

Lv, Z., Qiao, L., Wang, Q., & Piccialli, F. 2021. Advanced machine-learning methods for brain-computer interfacing. IEEE/ACM Transactions on Computational Biology and Bioinformatics, 18(5), 1688-1698.

Mansoor, A., Usman, M. W., Jamil, N., & Naeem, M. A. 2020. Deep learning algorithm for brain-computer interface. Scientific Programming, 2020, 5762149, 12 pages.

Nagel, S., & Spüler, M. 2019. World’s fastest brain-computer interface: Combining EEG2Code with deep learning. PLOS ONE, 14(9), e0221909.

Samal, P., & Hashmi, M. F. 2024. Role of machine learning and deep learning techniques in EEG-based BCI emotion recognition system: A review. Artificial Intelligence Review, 57(3), 50.

Värbu, K., Muhammad, N., & Muhammad, Y. 2022. Past, present, and future of EEG-based BCI applications. Sensors, 22(9), 3331.

Zhang, D., Cao, D., & Chen, H. 2019. Deep learning decoding of mental state in non-invasive brain computer interface. In Proceedings of the International Conference on Artificial Intelligence, Information Processing and Cloud Computing (AIIPCC '19), New York, NY, USA, October 2019. New York: Association for Computing Machinery.
