Emotion Recognition Using Machine Learning Models on EEG Signals

Gizem Yildiz (a) and Önder Yakut (b)

Department of Information Systems Engineering, Kocaeli University, Kocaeli, Turkey

Keywords: Electroencephalogram (EEG) Signal, Emotion Recognition, Machine Learning, Muse Headset, Principal Component Analysis (PCA).

Abstract: This study proposes an emotion recognition model based on EEG signals and compares its performance with that of various machine learning models. After the raw EEG data were preprocessed, Principal Component Analysis (PCA) was applied for dimension reduction. Emotion classification was then performed on the obtained features using classifiers including LSTM, SVM, DNN, GRU, RNN, XGBoost, Logistic Regression, and Random Forest. In the experiments, GRU achieved the best result, with an accuracy of 97.89%. LSTM achieved 96.25%, DNN 97.81%, Random Forest 95.78%, Logistic Regression 94.61%, SVM 95.55%, XGBoost 96.72%, and RNN 95.55%. These results show that emotional states can be classified with high accuracy when EEG signals are processed effectively using PCA.

1 INTRODUCTION

Emotion is a complex psychophysiological phenomenon in all human beings, representing a physiological and behavioural response to both internal and external stimuli. Human emotion recognition aims to identify emotions through various cues, including body language, physiological indicators, and audio-visual indicators. Emotion is crucial in human-to-human communication and interaction. It is the outcome of the mental processes that people undergo and can be described as a response reflecting their psychophysiological state (Chatterjee and Byun, 2022).

Over the past few years, there has been a great deal of research on engineering methods for automatic emotion recognition. These methods can be grouped into three broad categories. The first category analyzes speech, body language, and facial expressions; these audiovisual methods allow emotion recognition without physical contact. The second category focuses mainly on peripheral physiological signals, and studies have demonstrated that different emotional states modulate these signals. The third category focuses on brain signals originating from the central nervous system, captured with devices that measure brain wave activity, such as electroencephalography (EEG) and electrocorticography (ECoG). Among these brain signals, EEG signals have been shown to carry information that reflects emotional states. Davidson and Fox reported that two kinds of emotion, positive and negative, are associated with asymmetric electrical activity in the frontal lobe (Davidson and Fox, 1982). Since these studies, the connection between EEG asymmetry and emotion has been widely debated.

(a) https://orcid.org/0000-0001-9389-9366
(b) https://orcid.org/0000-0003-0265-7252

Electrocardiogram (ECG) signals provide information that is useful for recognizing emotional distress in people. Over the years, numerous studies have been conducted on emotional distress, particularly in the field of psychology. Mental health conditions such as depression, anxiety, and bipolar disorder are strongly influenced by emotional distress. In affective research, emotions are commonly classified into two primary categories: positive (happiness and surprise) and negative (sadness, anger, fear, and disgust). EEG offers high temporal resolution in capturing the brain's electrical activity. In this work, three emotional states (positive, negative, and neutral) are classified. Recognizing that brain activity is individual-specific, and that emotional responses engage different brain regions in different individuals, is crucial for understanding how emotions can be identified through neural signals. This study examines the effectiveness of machine learning (ML) models in classifying human emotional states from EEG data (Chatterjee and Byun, 2022).

Yildiz, G. and Yakut, Ö.
Emotion Recognition Using Machine Learning Models on EEG Signals.
DOI: 10.5220/0014295700004848
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 2nd International Conference on Advances in Electrical, Electronics, Energy, and Computer Sciences (ICEEECS 2025), pages 48-53
ISBN: 978-989-758-783-2
Proceedings Copyright © 2026 by SCITEPRESS – Science and Technology Publications, Lda.

Determining which brain activity patterns correspond to momentary mental experiences is a significant challenge in brain-machine interface applications. One of the most critical challenges in EEG signal classification is the sheer amount of information needed to represent the complex, nonlinear, and unpredictable nature of EEG signals accurately. In this study, a variety of machine learning (ML) models are employed to categorize emotional states, including GRU (Gated Recurrent Unit), LSTM (Long Short-Term Memory), XGBoost (Extreme Gradient Boosting), RF (Random Forest), DNN (Deep Neural Network), SVM (Support Vector Machine), RNN (Recurrent Neural Network), and Logistic Regression.

2 LITERATURE REVIEW

This literature review examines the field of EEG-based emotion recognition, covering signal processing, feature extraction, classification techniques, and areas of application. It highlights the progress made in EEG-based emotion recognition while also emphasizing the emerging challenges and potential directions within this interdisciplinary area. Researchers have developed new techniques to make EEG-based emotion recognition systems more sensitive, applicable, and usable.

In recent years, research on detecting emotions from EEG signals has gained significant momentum. In particular, the availability of low-cost EEG devices and the sharing of open datasets among researchers have accelerated work on this subject. In this context, the “EEG Brainwave Dataset: Feeling Emotions” published on Kaggle, which is also used in this study, has been frequently referenced in research. The dataset consists of four-channel (TP9, AF7, AF8, TP10) EEG signals recorded with the Muse EEG device in positive, neutral, and negative emotional states.

Earlier studies on EEG-based emotion detection focused on determining whether emotional information could be obtained from brain waves. Numerous studies have examined the link between emotional experiences and brain activity, with a particular focus on the frontal regions. Within this context, frontal alpha asymmetry has been explored because it reflects hemispheric differences in alpha-band activity of the frontal cortex that are associated with different emotional states. Allen and Reznik identified frontal EEG asymmetry as a potential marker of vulnerability to depression. Although frontal asymmetry may help detect individuals at greater risk for depression, large-scale longitudinal studies are still required to confirm this finding (Allen and Reznik, 2015).
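Frontal alpha asymmetry is often summarised as a single index, commonly the difference of log alpha power between a right and a left frontal electrode (for example ln(F4) − ln(F3) in 10-20 terms). The sketch below is a minimal illustration only, assuming the Muse AF8 and AF7 channels stand in for the right and left frontal sites, an assumed sampling rate of 256 Hz, and an 8-13 Hz alpha band; the function names are hypothetical and this is not the procedure used in the cited studies.

```python
import numpy as np

FS = 256.0           # assumed sampling rate in Hz (hypothetical)
ALPHA = (8.0, 13.0)  # assumed alpha band limits in Hz

def band_power(x: np.ndarray, fs: float, band: tuple) -> float:
    """Mean periodogram power of signal x inside the given frequency band."""
    x = x - x.mean()                                   # remove DC offset
    spectrum = np.abs(np.fft.rfft(x)) ** 2 / len(x)    # simple periodogram
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return float(spectrum[mask].mean())

def frontal_alpha_asymmetry(left: np.ndarray, right: np.ndarray, fs: float = FS) -> float:
    """ln(right alpha power) - ln(left alpha power); positive means more right-side alpha."""
    return float(np.log(band_power(right, fs, ALPHA)) - np.log(band_power(left, fs, ALPHA)))

# Synthetic example: the "right" channel (AF8 stand-in) carries a stronger 10 Hz rhythm.
t = np.arange(0, 4.0, 1.0 / FS)
rng = np.random.default_rng(0)
af7 = 1.0 * np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)
af8 = 2.0 * np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)
faa = frontal_alpha_asymmetry(af7, af8)
```

Doubling the alpha amplitude quadruples its power, so the index in this toy case lands close to ln(4).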

Mennella et al. investigated frontal alpha asymmetry neurofeedback as a strategy for mitigating symptoms of anxiety and negative affect. In their study, neurofeedback training was used to examine changes in positive and negative affect, anxiety, and depression, as well as variations in alpha power across the left and right hemispheres. These pioneering studies established a scientific foundation for subsequent research into the neural correlates of emotion using EEG (Mennella, Patron and Palomba, 2017).

From the acquired EEG signals, J. J. Bird et al. extracted statistical features across the alpha, beta, theta, delta, and gamma bands and then performed feature selection using techniques including OneR, Information Gain, Bayesian Network, and Symmetrical Uncertainty. The original 2,548 features were reduced to 63 features selected by Information Gain, and ensemble classifiers such as Random Forest trained on these features achieved approximately 97.89% accuracy. A Deep Neural Network (DNN) achieved 94.89% accuracy (Bird, Faria, Manso, Ekárt and Buckingham, 2019).
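Information Gain ranks a feature by how much knowing its value reduces the entropy of the class label, IG(Y; X) = H(Y) − H(Y | X). A minimal sketch for continuous features follows, assuming each feature is first discretised into equal-width bins; the bin count and function names are hypothetical, and Bird et al. may have discretised differently.

```python
import numpy as np

def entropy(labels: np.ndarray) -> float:
    """Shannon entropy (in bits) of a 1-D array of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def information_gain(feature: np.ndarray, labels: np.ndarray, n_bins: int = 10) -> float:
    """IG(Y; X) = H(Y) - H(Y | X) after equal-width binning of a continuous feature."""
    edges = np.linspace(feature.min(), feature.max(), n_bins + 1)
    bins = np.digitize(feature, edges[1:-1])        # bin index in 0..n_bins-1
    conditional = 0.0
    for b in np.unique(bins):
        mask = bins == b
        conditional += mask.mean() * entropy(labels[mask])   # P(bin) * H(Y | bin)
    return entropy(labels) - conditional

def top_k_features(X: np.ndarray, y: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k columns of X with the highest information gain."""
    scores = np.array([information_gain(X[:, j], y) for j in range(X.shape[1])])
    return np.argsort(scores)[::-1][:k]

# Toy data: column 0 nearly determines the label, column 1 is pure noise.
rng = np.random.default_rng(1)
y = rng.integers(0, 3, size=300)
X = np.column_stack([y + 0.1 * rng.standard_normal(300), rng.standard_normal(300)])
selected = top_k_features(X, y, k=1)
```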

Joshi and Joshi evaluated the performance of RNN and KNN (K-Nearest Neighbour) algorithms in classifying human emotions from EEG signals. EEG signals corresponding to positive, neutral, and negative emotions were analyzed. During preprocessing, channel selection was performed, and the discrete wavelet transform (DWT) was used for feature extraction. The obtained features were fed as input to the RNN and KNN algorithms. The RNN achieved 94.84% accuracy, while KNN achieved 93.43%, showing that both algorithms performed well on this EEG-based emotion recognition task. In particular, the RNN's ability to model dependencies in time series data provided an advantage (Joshi and Joshi, 2022).
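The wavelet used for DWT feature extraction is not specified above. Purely as an illustration, the sketch below implements a multi-level Haar DWT in NumPy and uses the energy of each sub-band as a feature; the Haar wavelet, the number of levels, and the energy features are assumptions, not necessarily what Joshi and Joshi used.

```python
import numpy as np

def haar_dwt(x: np.ndarray, levels: int) -> list:
    """Multi-level Haar DWT. Returns [cA_L, cD_L, ..., cD_1] (coarsest first)."""
    coeffs = []
    approx = np.asarray(x, dtype=float)
    for _ in range(levels):
        if approx.size % 2:                    # pad odd length by repeating the last sample
            approx = np.append(approx, approx[-1])
        even, odd = approx[0::2], approx[1::2]
        detail = (even - odd) / np.sqrt(2.0)   # high-pass (detail) coefficients
        approx = (even + odd) / np.sqrt(2.0)   # low-pass (approximation) coefficients
        coeffs.insert(0, detail)
    coeffs.insert(0, approx)
    return coeffs

def subband_energies(x: np.ndarray, levels: int = 4) -> np.ndarray:
    """One feature per sub-band: the sum of squared wavelet coefficients."""
    return np.array([np.sum(c ** 2) for c in haar_dwt(x, levels)])

signal = np.random.default_rng(2).standard_normal(256)   # stand-in for one EEG channel
features = subband_energies(signal, levels=4)
```

Because the Haar transform is orthonormal, the sub-band energies of an even-length signal sum to the signal's total energy, which makes a convenient sanity check.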

Mridha et al. aimed to recognize emotions from EEG signals using deep learning algorithms and compared DNN, LSTM, and GRU models. The DNN achieved 98.44% accuracy, the LSTM 97.5%, and the GRU 97.18%. The GRU model achieved up to 96% accuracy in identifying negative emotions, showing that different model configurations can exhibit varying levels of success depending on the type of emotion (Mridha, Sarker, Zaman, Shukla, Ghosh and Shaw, 2023).

Dhara and Singh developed a hybrid structure that combines machine learning and deep learning techniques for recognizing emotions from EEG signals. Various classifiers were tested after feature extraction from raw EEG signals, and models with early-stage filtering were noted to perform better. Using a hybrid CNN-LSTM model, accuracy rates of 96.87% and 97.31% were achieved for the valence and arousal dimensions, respectively. These results demonstrate that hybrid deep learning models can perform well in EEG-based emotion recognition tasks (Dhara and Singh, 2023).

In their study using various machine learning models, Rachini et al. achieved high accuracy rates of 99% for Random Forest, 98% for SVM, and 94% for KNN (Rachini, Hassn, El Ahmar and Attar, 2024).

Another study in this field was published by Prakash and Poulose, who evaluated the performance of a set of supervised machine learning algorithms on the “EEG Brainwave Dataset: Feeling Emotions” dataset. The models included Logistic Regression, Decision Trees, Random Forest, Gaussian Naive Bayes (GNB), AdaBoost, SVM, LightGBM, XGBoost, and CatBoost. Additionally, PCA, t-SNE, and LDA were applied for dimension reduction. Experiments conducted with five-fold cross-validation showed that XGBoost achieved the highest accuracy, 92.79%, followed by CatBoost (92.05%) and LightGBM (91.79%). Gaussian Naive Bayes had the lowest accuracy, at 72.83%; however, its accuracy increased by approximately 10% after PCA was applied. These results demonstrate that data preprocessing and dimension reduction can significantly affect performance, particularly for low-performing algorithms (Prakash and Poulose, 2025).
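Five-fold cross-validation splits the samples into five disjoint folds; each fold serves once as the test set while the other four are used for training, and the reported score is the mean over folds. A minimal NumPy sketch of this bookkeeping follows, assuming shuffled, non-stratified folds (the cited study's exact splitting scheme is not stated here); the function names and the majority-class baseline are hypothetical.

```python
import numpy as np

def k_fold_indices(n_samples: int, k: int = 5, seed: int = 0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation."""
    order = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(order, k)            # fold sizes differ by at most one
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, test_idx

def cross_val_accuracy(fit_predict, X, y, k=5):
    """Mean test accuracy over k folds; fit_predict(X_tr, y_tr, X_te) returns predictions."""
    scores = []
    for tr, te in k_fold_indices(len(y), k):
        y_pred = fit_predict(X[tr], y[tr], X[te])
        scores.append(np.mean(y_pred == y[te]))
    return float(np.mean(scores))

def majority(X_tr, y_tr, X_te):
    """Trivial baseline: predict the most frequent training label for every test sample."""
    values, counts = np.unique(y_tr, return_counts=True)
    return np.full(len(X_te), values[np.argmax(counts)])
```

Any classifier can be plugged in through `fit_predict`; the baseline only shows the calling convention.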

3 MATERIALS AND METHODS

3.1 Dataset

The “EEG Brainwave Dataset: Feeling Emotions” was employed in this study to classify distinct emotions. As presented in Table 1, participants were exposed to a series of video clips designed to elicit three distinct emotional states: positive, negative, and neutral. For each condition, 6 minutes of brain wave activity were recorded from each of two adult subjects, one male and one female, aged 20 and 22, yielding 36 minutes of brain wave activity data in total (Bird, Faria, Manso, Ekárt and Buckingham, 2019).

Table 1: The movies and scenes watched by the participants.

| Movie | Scene | Emotion |
|---|---|---|
| Marley and Me | Death Scene | Negative |
| Up | Death Scene | Negative |
| My Girl | Funeral Scene | Negative |
| La La Land | Musical Scene | Positive |

Data were collected with the Muse headset from extracranial electrodes positioned at TP9, AF7, AF8, and TP10 according to the 10-20 electrode placement system, while emotions were elicited through visual stimuli. The electrode configuration is illustrated in Figure 1. This study focuses on classifying three emotional states: positive, negative, and neutral. The corresponding emotion graph indicates that signal patterns differ across these emotional states, suggesting that variations in EEG signal characteristics can serve as a basis for emotion classification (Bird, Ekart, Buckingham and Faria, 2019).

Figure 1: EEG sensors on the Muse headband in the international standard EEG placement system: TP9, AF7, AF8, and TP10 (Bird, Ekart, Buckingham and Faria, 2019).

Because the EEG samples in the dataset are evenly distributed across the classes, no class imbalance problem was encountered during training and testing of the proposed system. Given that the dataset contains 2,548 features, we performed feature dimension reduction using Principal Component Analysis (PCA). PCA projects high-dimensional data onto a lower-dimensional space while maximizing the captured variance. For a given set of points, the first principal component is the ‘best fit line’ that minimizes the average squared perpendicular distance to all points in the dataset (Prakash and Poulose, 2025).
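The PCA step can be made concrete with a short NumPy sketch: centre the features, take the singular value decomposition, and keep the leading right-singular vectors as principal axes. The function names and the 95% retained-variance threshold are assumptions for illustration; the paper does not state how many components were kept.

```python
import numpy as np

def fit_pca(X: np.ndarray, variance_to_keep: float = 0.95):
    """Return (mean, components, explained_ratio) keeping enough components for the target."""
    mean = X.mean(axis=0)
    Xc = X - mean                                    # PCA operates on centred data
    # Rows of Vt are the principal axes, ordered by decreasing singular value.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)              # fraction of variance per component
    n = int(np.searchsorted(np.cumsum(explained), variance_to_keep) + 1)
    n = min(n, len(s))                               # guard against rounding at 1.0
    return mean, Vt[:n], explained[:n]

def transform_pca(X, mean, components):
    """Project (new) samples onto the retained principal axes."""
    return (X - mean) @ components.T

# Toy data: 200 samples in 50 dimensions that actually lie near a 3-D subspace.
rng = np.random.default_rng(3)
latent = rng.standard_normal((200, 3))
X = latent @ rng.standard_normal((3, 50)) + 0.01 * rng.standard_normal((200, 50))
mean, components, ratio = fit_pca(X, 0.95)
Z = transform_pca(X, mean, components)
```

In this toy case at most three components are needed to keep 95% of the variance, mirroring how PCA compresses many correlated EEG features into a few directions.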

This public dataset suits the objectives of our study. Although it is widely used, it provides distinctive opportunities to analyze EEG-derived emotional responses within a controlled experimental framework. Data collected from multiple electrode sites with the Muse headset yield a comprehensive set of temporal statistical features, such as mean and variance, alongside frequency-domain characteristics derived via the FFT (Fast Fourier Transform). These features offer a detailed representation of brain wave activity and support an in-depth evaluation of ML approaches for emotion classification. The structure of the dataset is well suited to our research focus, facilitating a thorough assessment and generalization of ML methods in EEG-based emotion classification.
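To make the kind of features described above concrete, the sketch below computes a few time-domain statistics and FFT magnitudes per channel for one window of four-channel data. The window length, sampling rate, number of FFT bins kept, and function name are hypothetical; the released dataset was produced by a richer feature-extraction procedure (Bird et al.) that this sketch does not reproduce.

```python
import numpy as np

CHANNELS = ("TP9", "AF7", "AF8", "TP10")   # Muse electrode sites named in the text

def window_features(window: np.ndarray, n_fft_bins: int = 10) -> np.ndarray:
    """Features for one window shaped (n_samples, n_channels).

    Per channel: mean, variance, min, max, and the magnitudes of the first
    n_fft_bins non-DC FFT coefficients. Returns a flat 1-D feature vector.
    """
    feats = []
    for ch in range(window.shape[1]):
        x = window[:, ch]
        stats = [x.mean(), x.var(), x.min(), x.max()]                    # time domain
        magnitudes = np.abs(np.fft.rfft(x - x.mean()))[1 : n_fft_bins + 1]  # frequency domain
        feats.extend(stats)
        feats.extend(magnitudes)
    return np.asarray(feats)

rng = np.random.default_rng(4)
window = rng.standard_normal((256, len(CHANNELS)))   # one hypothetical 1 s window at 256 Hz
vec = window_features(window)
```

Stacking such vectors for many overlapping windows produces a wide feature table, which is why a dimension-reduction step such as PCA becomes attractive.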

3.2 Models Used

In this study, various machine learning algorithms were used to recognize individuals' emotional states from EEG signals. The dataset used is the “EEG Brainwave Dataset: Feeling Emotions”, which is publicly available on the Kaggle platform and contains EEG recordings corresponding to different emotional states.

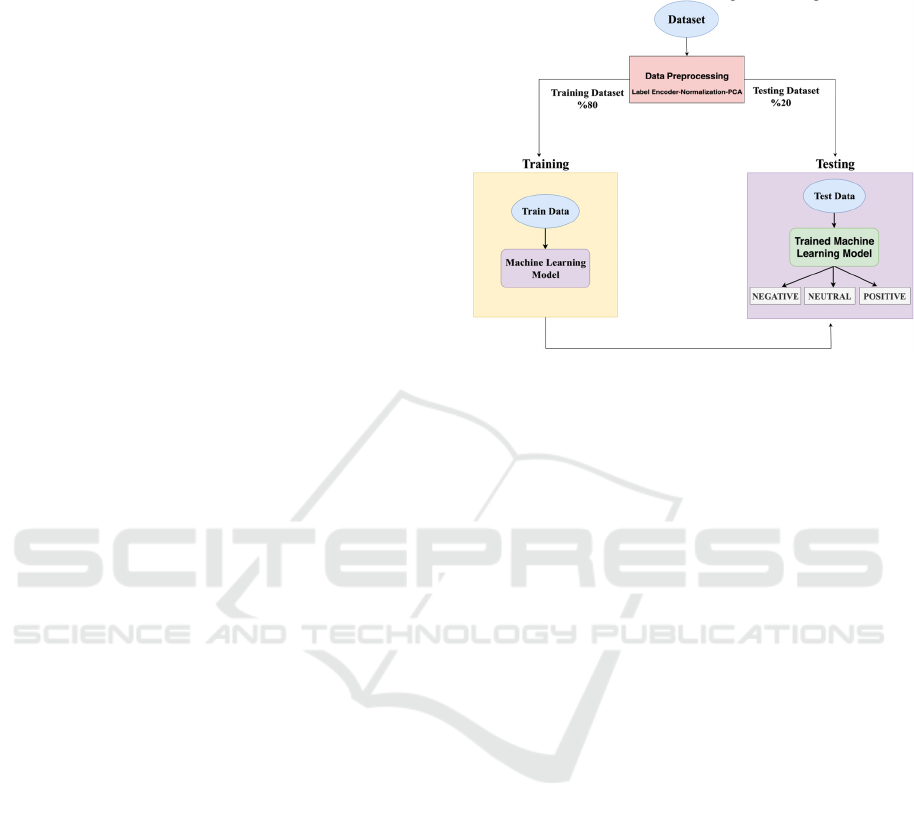

Several preprocessing steps were applied to the “EEG Brainwave Dataset: Feeling Emotions” to prepare the data for the machine learning models. First, the categorical labels for the emotional states were converted into numerical values with a Label Encoder so that they were compatible with the algorithms. Next, the features were normalized to a uniform range, which reduces bias caused by differing magnitudes and improves model convergence. Finally, Principal Component Analysis (PCA) was applied for dimension reduction to minimize redundancy, retain the most informative components, and improve computational efficiency. After preprocessing, the dataset was used to train the various machine learning algorithms. The general architecture of the proposed model is shown in the block diagram in Figure 2.

Figure 2: Block diagram of the model presented in this study.
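The three preprocessing steps can be sketched as follows. This is a NumPy-only illustration, assuming min-max scaling as the normalisation (the paper does not name the scaler) and a hypothetical component count; `Preprocessor` and `encode_labels` are illustrative names. Scaling and PCA statistics are fitted on the training split only and then reused on the test split, which avoids leaking test information into training.

```python
import numpy as np

def encode_labels(labels):
    """Map string labels to integers 0..C-1 in sorted order, as a label encoder does."""
    classes, codes = np.unique(np.asarray(labels), return_inverse=True)
    return classes, codes

class Preprocessor:
    """Hypothetical helper: min-max scaling followed by PCA, fitted on training data only."""

    def __init__(self, n_components: int):
        self.n_components = n_components

    def fit(self, X: np.ndarray) -> "Preprocessor":
        self.lo, self.hi = X.min(axis=0), X.max(axis=0)
        Xs = self._scale(X)
        self.mean = Xs.mean(axis=0)
        _, _, Vt = np.linalg.svd(Xs - self.mean, full_matrices=False)
        self.components = Vt[: self.n_components]     # leading principal axes
        return self

    def _scale(self, X):
        span = np.where(self.hi > self.lo, self.hi - self.lo, 1.0)  # guard constant columns
        return (X - self.lo) / span

    def transform(self, X: np.ndarray) -> np.ndarray:
        return (self._scale(X) - self.mean) @ self.components.T

rng = np.random.default_rng(5)
X = rng.standard_normal((120, 40)) * rng.uniform(1, 100, size=40)   # very different feature scales
classes, y = encode_labels(rng.choice(["NEGATIVE", "NEUTRAL", "POSITIVE"], size=120))
X_train, X_test = X[:90], X[90:]
prep = Preprocessor(n_components=10).fit(X_train)
Z_train, Z_test = prep.transform(X_train), prep.transform(X_test)
```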

LSTM is a type of RNN known for its ability to learn long-term dependencies in time series data. Through memory cells and gating mechanisms, LSTMs mitigate the vanishing gradient problem, which makes them well suited for tasks involving sequential data, such as speech recognition, natural language processing, and EEG signal analysis. In this study, the GRU network was applied alongside LSTM to assess its sensitivity to the temporal patterns of emotional states. A DNN, composed of multiple layers of artificial neurons, can learn high-dimensional and abstract representations from complex data. After normalization, EEG features were provided as input to the DNN, and multilayer architectures with Rectified Linear Unit (ReLU) activation functions were systematically evaluated. The DNN's learning capacity yields strong performance, particularly when combined with carefully selected features, highlighting its potential for capturing nonlinear relationships within EEG signals (Mridha, Sarker, Zaman, Shukla, Ghosh and Shaw, 2023). SVM, a traditional yet robust machine learning algorithm, has proven effective in scenarios with limited sample sizes and high-dimensional data, owing to its ability to maximize the decision margin and generalize well in such contexts (Rachini, Hassn, El Ahmar and Attar, 2024).
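The gating mentioned above can be illustrated with a single GRU step in NumPy, following the standard GRU update equations (update gate z, reset gate r, candidate state). The weight shapes, random initialisation, input sizes, and function names are assumptions for illustration, not the configuration used in this study; deep-learning frameworks also differ in small details, such as where the reset gate is applied and which term z multiplies.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def init_gru(input_dim: int, hidden_dim: int, seed: int = 0) -> dict:
    """Random parameters for one GRU layer (hypothetical initialisation)."""
    rng = np.random.default_rng(seed)
    p = {}
    for gate in ("z", "r", "h"):
        p[f"W_{gate}"] = rng.normal(0, 0.1, (hidden_dim, input_dim))   # input weights
        p[f"U_{gate}"] = rng.normal(0, 0.1, (hidden_dim, hidden_dim))  # recurrent weights
        p[f"b_{gate}"] = np.zeros(hidden_dim)
    return p

def gru_step(x, h, p):
    """One GRU time step: returns the new hidden state."""
    z = sigmoid(p["W_z"] @ x + p["U_z"] @ h + p["b_z"])             # update gate
    r = sigmoid(p["W_r"] @ x + p["U_r"] @ h + p["b_r"])             # reset gate
    h_cand = np.tanh(p["W_h"] @ x + p["U_h"] @ (r * h) + p["b_h"])  # candidate state
    return (1.0 - z) * h + z * h_cand                               # blend old and new state

def gru_encode(sequence, p, hidden_dim):
    """Run the GRU over a (T, input_dim) sequence and return the final hidden state."""
    h = np.zeros(hidden_dim)
    for x in sequence:
        h = gru_step(x, h, p)
    return h

params = init_gru(input_dim=8, hidden_dim=16)
seq = np.random.default_rng(6).standard_normal((20, 8))   # hypothetical: 20 steps of 8 features
h_final = gru_encode(seq, params, hidden_dim=16)
```

The final hidden state would then feed a classification layer; an LSTM step looks similar but adds a separate cell state and a fourth gate block.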

XGBoost is a powerful ensemble learning technique based on gradient-boosted decision trees, and it typically achieves high accuracy by balancing model complexity against learning time. In this study, the XGBoost model trained on features extracted from the EEG data drew attention, particularly for its robustness against overfitting. Logistic Regression, despite being a simple and interpretable algorithm that produces linear decision boundaries, achieved satisfactory results on well-preprocessed EEG data. Random Forest, on the other hand, is an ensemble learning technique that classifies by combining the outputs of multiple decision trees; it has performed well on EEG data owing to its resilience to noise and its low tendency to overfit (Prakash and Poulose, 2025).
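Of the classical models named above, Logistic Regression is simple enough to write out in full. The sketch below trains a multinomial (softmax) logistic regression by batch gradient descent on the cross-entropy loss; the learning rate, epoch count, function names, and toy data are arbitrary assumptions, and in practice a library implementation with regularisation and a better optimiser would normally be used.

```python
import numpy as np

def softmax(logits):
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    e = np.exp(logits)
    return e / e.sum(axis=1, keepdims=True)

def fit_softmax_regression(X, y, n_classes, lr=0.1, epochs=500):
    """Return (W, b) minimising mean cross-entropy with plain gradient descent."""
    n, d = X.shape
    W, b = np.zeros((d, n_classes)), np.zeros(n_classes)
    Y = np.eye(n_classes)[y]                 # one-hot targets
    for _ in range(epochs):
        P = softmax(X @ W + b)
        grad = (P - Y) / n                   # gradient of the loss w.r.t. the logits
        W -= lr * X.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def predict(X, W, b):
    return np.argmax(X @ W + b, axis=1)      # linear decision boundaries between classes

# Three well-separated Gaussian blobs as a stand-in for PCA-reduced EEG features.
rng = np.random.default_rng(7)
centers = np.array([[-3.0, 0.0], [0.0, 3.0], [3.0, 0.0]])
y = rng.integers(0, 3, size=300)
X = centers[y] + rng.standard_normal((300, 2))
W, b = fit_softmax_regression(X, y, n_classes=3)
train_acc = np.mean(predict(X, W, b) == y)
```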

Various experiments were conducted on the dataset using these models; their results are presented in Section 4, Experimental Results.

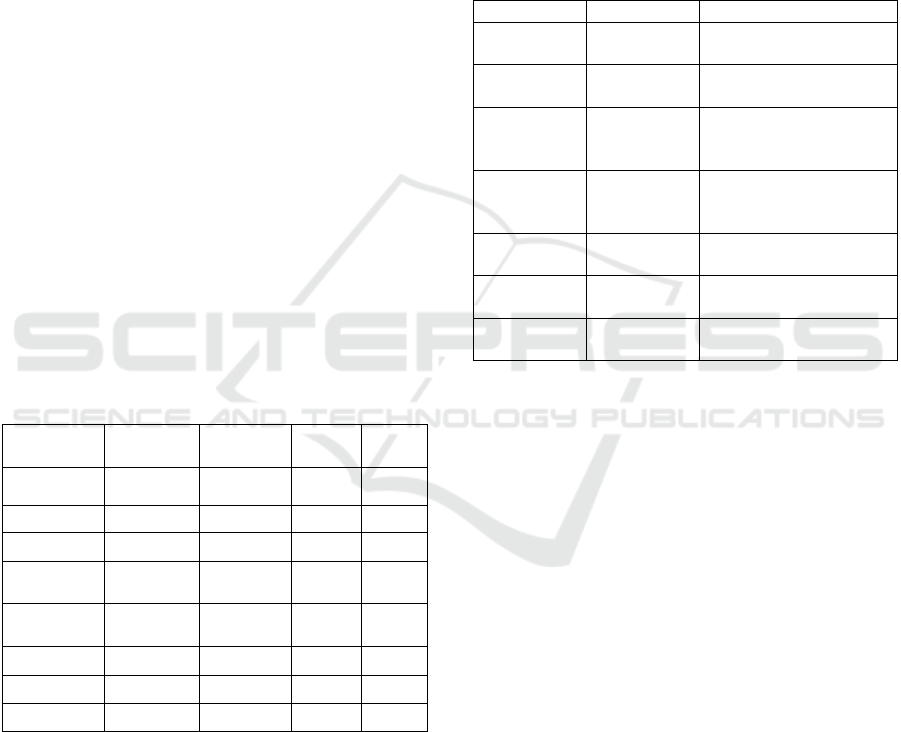

4 EXPERIMENTAL RESULTS

In this study, the performance of various machine learning algorithms (Logistic Regression, Random Forest, SVM, XGBoost, DNN, RNN, GRU, and LSTM) was compared for emotion classification using FFT-based features whose dimensionality was reduced through PCA. Table 2 shows the applied machine learning models and their respective performance metrics.

Table 2: Machine learning models used and their performance metrics.

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| LSTM | 0.9625 | 0.9626 | 0.9625 | 0.9626 |
| GRU | 0.9789 | 0.9794 | 0.9789 | 0.9789 |
| DNN | 0.9781 | 0.9781 | 0.9781 | 0.9781 |
| Random Forest | 0.9578 | 0.9589 | 0.9578 | 0.9579 |
| Logistic Regression | 0.9461 | 0.9492 | 0.9461 | 0.9458 |
| SVM | 0.9555 | 0.9560 | 0.9555 | 0.9554 |
| XGBoost | 0.9672 | 0.9676 | 0.9672 | 0.9671 |
| RNN | 0.9555 | 0.9569 | 0.9555 | 0.9552 |
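In Table 2 the Recall column equals the Accuracy column for every model. That is what one would expect if precision, recall, and F1 were averaged across classes with weights proportional to class support; the paper does not state the averaging scheme, so this is an inference. Support-weighted recall reduces algebraically to accuracy, since each class contributes (support/N)·(TP/support) = TP/N. The NumPy sketch below computes the metrics from a confusion matrix and checks this identity on made-up predictions; the function names are hypothetical.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)          # rows: true class, columns: predicted class
    return cm

def weighted_metrics(y_true, y_pred, n_classes):
    """Accuracy plus support-weighted precision, recall, and F1."""
    cm = confusion_matrix(y_true, y_pred, n_classes)
    tp = np.diag(cm).astype(float)
    support = cm.sum(axis=1)                    # true samples per class
    predicted = cm.sum(axis=0)                  # predicted samples per class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, support, out=np.zeros_like(tp), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    w = support / support.sum()                 # class weights proportional to support
    return {
        "accuracy": float(tp.sum() / cm.sum()),
        "precision": float(w @ precision),
        "recall": float(w @ recall),            # equals accuracy by construction
        "f1": float(w @ f1),
    }

rng = np.random.default_rng(8)
y_true = rng.integers(0, 3, size=500)
# Made-up predictions: about 90% copied from the truth, the rest random.
y_pred = np.where(rng.random(500) < 0.9, y_true, rng.integers(0, 3, size=500))
m = weighted_metrics(y_true, y_pred, n_classes=3)
```

Precision and F1, by contrast, do not collapse to accuracy, which matches the small differences in those columns of Table 2.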

Among the models examined in this study, GRU achieved the highest classification accuracy. This result suggests that GRU performs well on signal-based data owing to its ability to learn time-dependent patterns effectively. In particular, because the GRU can learn dependencies comparable to those captured by gated structures such as LSTM while using fewer parameters, it reduces training time, and in this study it also achieved higher classification performance. We therefore conclude that the GRU offers an effective and efficient alternative for applications, such as sentiment analysis, that work with high-dimensional data containing time series features.
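The claim that a GRU needs fewer parameters than an LSTM can be checked with the standard per-layer counts: an LSTM has four gate blocks and a GRU three, each with input weights, recurrent weights, and a bias. The sketch assumes one bias vector per block; some implementations (for example PyTorch, or Keras with `reset_after=True`) keep two bias vectors per block, which changes the totals slightly but not the roughly 3:4 ratio. The layer sizes are hypothetical.

```python
def lstm_params(input_dim: int, hidden_dim: int) -> int:
    # 4 blocks (input, forget, output gates and cell candidate): W (h x d), U (h x h), b (h)
    return 4 * (hidden_dim * input_dim + hidden_dim * hidden_dim + hidden_dim)

def gru_params(input_dim: int, hidden_dim: int) -> int:
    # 3 blocks (update gate, reset gate, candidate state) with the same shapes
    return 3 * (hidden_dim * input_dim + hidden_dim * hidden_dim + hidden_dim)

d, h = 64, 128   # hypothetical input size (e.g. number of PCA components) and hidden size
print(lstm_params(d, h), gru_params(d, h), gru_params(d, h) / lstm_params(d, h))
# 98816 74112 0.75
```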

As Table 3 shows, the GRU, XGBoost, and RNN models achieve higher accuracy than the corresponding results reported in the literature, while the other models achieve comparable or somewhat lower accuracy.

Table 3: Comparison of literature results and proposed model performance.

| Model | Accuracy | Authors |
|---|---|---|
| LSTM | 0.9750 | Mridha et al. (2023) |
| LSTM | 0.9625 | Proposed Model |
| GRU | 0.9789 | Proposed Model |
| GRU | 0.9718 | Mridha et al. (2023) |
| DNN | 0.9489 | J.J. Bird et al. (2019) |
| DNN | 0.9781 | Proposed Model |
| DNN | 0.9844 | Mridha et al. (2023) |
| Random Forest | 0.9789 | J.J. Bird et al. (2019) |
| Random Forest | 0.9900 | Rachini et al. (2024) |
| Random Forest | 0.9578 | Proposed Model |
| SVM | 0.9800 | Rachini et al. (2024) |
| SVM | 0.9555 | Proposed Model |
| XGBoost | 0.9672 | Proposed Model |
| XGBoost | 0.9279 | Prakash and Poulose (2025) |
| RNN | 0.9484 | Joshi and Joshi (2022) |
| RNN | 0.9555 | Proposed Model |
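The comparison in Table 3 reduces to simple differences. The sketch below recomputes, for each model, the gap between the proposed model's accuracy and the best literature accuracy listed in Table 3; the values are copied from the table, and the dictionary layout is only for illustration.

```python
# Accuracies copied from Table 3: (proposed model, literature values)
table3 = {
    "LSTM": (0.9625, [0.9750]),
    "GRU": (0.9789, [0.9718]),
    "DNN": (0.9781, [0.9489, 0.9844]),
    "Random Forest": (0.9578, [0.9789, 0.9900]),
    "SVM": (0.9555, [0.9800]),
    "XGBoost": (0.9672, [0.9279]),
    "RNN": (0.9555, [0.9484]),
}

# Positive gap: the proposed model is above the best reported literature value.
gaps = {model: round(ours - max(lit), 4) for model, (ours, lit) in table3.items()}
for model, gap in sorted(gaps.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:14s} {gap:+.4f}")
```

Only GRU, XGBoost, and RNN come out positive. DNN exceeds one of its two literature values (Bird et al.) but not the other.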

5 CONCLUSIONS

In this study, experiments were conducted on EEG-based emotion recognition using machine learning models, and the results were compared with current studies in the literature. Machine learning models show promising results, classifying emotional states with high accuracy even with low-cost EEG devices. However, the noise-prone nature of EEG signals, individual differences, and the challenges of real-time applications remain significant problems that call for further research in this field.

In future work, we will focus on developing more flexible and reliable systems through multi-modal approaches, more advanced and personalized models, and the integration of explainable artificial intelligence (XAI).


ACKNOWLEDGEMENTS

This work was supported by the Research Fund of Kocaeli University (Project Number: 4703).

REFERENCES

Allen, J. J., & Reznik, S. J. (2015). Frontal EEG asymmetry as a promising marker of depression vulnerability: Summary and methodological considerations. Current Opinion in Psychology, 4, 93-97.

Bird, J. J., Ekart, A., Buckingham, C. D., & Faria, D. R. (2019, April). Mental emotional sentiment classification with an EEG-based brain-machine interface. In Proceedings of the International Conference on Digital Image and Signal Processing (DISP’19).

Bird, J. J., Faria, D. R., Manso, L. J., Ekárt, A., & Buckingham, C. D. (2019). A Deep Evolutionary Approach to Bioinspired Classifier Optimisation for Brain-Machine Interaction. Complexity, 2019(1), 4316548.

Chatterjee, S., & Byun, Y. C. (2022). EEG-based emotion classification using stacking ensemble approach. Sensors, 22(21), 8550.

Davidson, R. J., & Fox, N. A. (1982). Asymmetrical brain activity discriminates between positive and negative affective stimuli in human infants. Science, 218(4578), 1235-1237.

Dhara, T., & Singh, P. K. (2023). Emotion recognition from EEG data using hybrid deep learning approach. In Frontiers of ICT in Healthcare: Proceedings of EAIT 2022 (pp. 179-189). Singapore: Springer Nature Singapore.

Joshi, S., & Joshi, F. (2022). Human Emotion Classification based on EEG Signals Using Recurrent Neural Network and KNN. arXiv preprint arXiv:2205.08419.

Mennella, R., Patron, E., & Palomba, D. (2017). Frontal alpha asymmetry neurofeedback for the reduction of negative affect and anxiety. Behaviour Research and Therapy, 92, 32-40.

Mridha, K., Sarker, T., Zaman, R., Shukla, M., Ghosh, A., & Shaw, R. N. (2023, August). Emotion Recognition: A New Tool for Healthcare Using Deep Learning Algorithms. In International Conference on Electrical and Electronics Engineering (pp. 613-631). Singapore: Springer Nature Singapore.

Prakash, A., & Poulose, A. (2025). Electroencephalogram-based emotion recognition: a comparative analysis of supervised machine learning algorithms. Data Science and Management.

Rachini, A., Hassn, L. A., El Ahmar, E., & Attar, H. (2024). Machine learning techniques towards accurate emotion classification from EEG signals. WSEAS Transactions on Computer Research, 12, 455-462.
