Customer Review Summarization in Production with Large Language

Models for e-Commerce Platforms

H. Bahadir Sahin, M. Furkan Eseoglu, Berk Taskin, Aysenur Kulunk and

¨

Omer Faruk Bal

Data Analytics Department, Hepsiburada, Istanbul, Turkey

Keywords:

Large Language Models, Customer Review Summarization, Opinion Summarization, e-Commerce,

LLM-as-a-Judge.

Abstract:

The increasing volume of customer-generated content on large-scale e-commerce platforms creates significant

information overload, complicating consumer decision-making processes. This study presents a case study

of the ”Customer Review Summarization” system, deployed in production at Hepsiburada, one of Turkey’s

leading e-commerce platforms, as a solution to this problem. The system leverages Large Language Models

(LLMs) to generate meaningful and concise summaries from customer feedback. The primary contribution of

this paper is the detailed description of an innovative, staged system architecture that optimizes the trade-off

between operational cost and output quality. This architecture utilizes any LLM model for large-scale and

cost-effective summary generation, while strategically leverages the more powerful GPT-4.1 model for quality

assurance. Furthermore, practical challenges encountered in a production environment, such as inconsistent

summary quality and managing noisy input data, and the iterative solutions developed to address them, are

transparently discussed. Finally, a comprehensive empirical evaluation framework is proposed to compare the

performance of various state-of-the-art LLMs.

1 INTRODUCTION

The modern e-commerce ecosystem is defined by a

massive amount of user-generated content. While

customer reviews serve as a valuable resource by pro-

viding social proof for potential buyers, the presence

of hundreds or even thousands of reviews creates a

dilemma, imposing a significant cognitive load on

consumers. Users are forced to read numerous re-

views to gain a comprehensive understanding of a

product, which prolongs the shopping experience and

complicates the decision-making process. This issue

becomes more pronounced on smaller screens, such

as mobile devices. In this context, the main business

objective is to increase conversion rates by shortening

the time from a customer viewing a product to placing

an order.

In response to this challenge, Hepsiburada has de-

veloped the ”Review Summary” feature, which lever-

ages advanced Large Language Models (LLMs), and

presents these summaries for hundreds of thousands

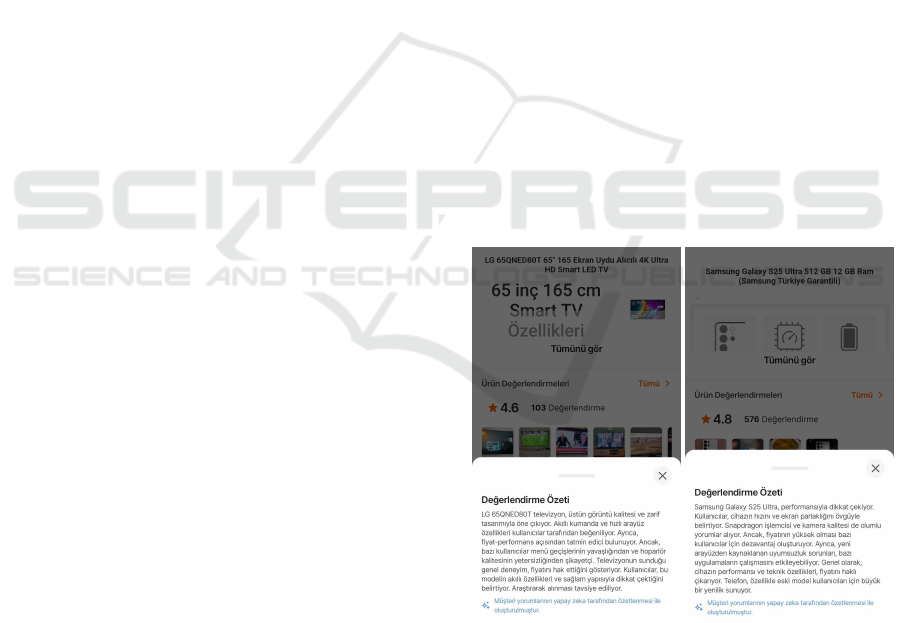

of products as in Figure 1. The core purpose of

this system is to provide comprehensive, easy-to-read,

and unbiased summary of products by analyzing ver-

ified and approved customer feedback. This approach

Figure 1: Summary examples from in Hepsiburada.

helps users quickly understand the positive and neg-

ative aspects of a product, enabling them to make in-

formed decisions. Unlike traditional machine learn-

ing projects, pre-trained foundational models, such as

GPT, Gemini, Llama, etc., significantly accelerate de-

velopment cycles by focusing on system integration

and contextual engineering rather than lengthy data

Sahin, H. B., Eseoglu, M. F., Taskin, B., Kulunk, A. and Bal, Ö. F.

Customer Review Summarization in Production with Large Language Models for e-Commerce Platforms.

DOI: 10.5220/0014284800004848

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 2nd International Conference on Advances in Electrical, Electronics, Energy, and Computer Sciences (ICEEECS 2025), pages 5-13

ISBN: 978-989-758-783-2

Proceedings Copyright © 2026 by SCITEPRESS – Science and Technology Publications, Lda.

5

labeling and model training processes.

The contributions of this paper to the field can be

summarized under four main headings:

• A detailed case study is presented on the design,

deployment, and maintenance of a large-scale

generative AI system in a high-traffic commer-

cial environment, covering approximately 300000

unique products.

• A pragmatic and cost-driven system architecture

(the staged LLM approach) that balances perfor-

mance and operational expenditure, a critical as-

pect for industrial applications often overlooked

in the academic literature, is thoroughly exam-

ined.

• Moving beyond idealized laboratory settings, the

real-world challenges encountered in a production

environment and the solutions developed for them

are transparently discussed.

• A robust framework is proposed for the com-

parative evaluation of contemporary LLMs on a

domain-specific summarization task.

The remainder of this paper will first review rel-

evant academic works, followed by a detailed ex-

planation of the system’s architecture and methodol-

ogy. Subsequently, the planned experimental setup

and evaluation metrics will be presented, and finally,

the study’s conclusions and potential directions for fu-

ture work will be discussed.

2 RELATED WORKS

This section situates our work within the context of

academic research from leading NLP and data mining

conferences, tracing the evolution of opinion summa-

rization from foundational techniques to the current

state-of-the-art driven by Large Language Models.

2.1 From Extractive to Aspect-Based

Summarization

The core challenge of opinion summarization is to

distill salient information from a multitude of user re-

views (Pecar, 2018). Early approaches often focused

on extractive methods that identify and select repre-

sentative sentences or phrases from the source. How-

ever, these methods can lack fluency and fail to syn-

thesize information cohesively. This led to the devel-

opment of more structured approaches, most notably

aspect-based summarization, which aims to identify

key product features (aspects) and aggregate the sen-

timent expressed towards them (Titov and McDonald,

2008). A significant advancement in this area was

presented by (Angelidis and Lapata, 2018), who de-

veloped a neural framework that combines aspect ex-

traction and sentiment prediction in a weakly super-

vised manner, reducing the reliance on heavily anno-

tated data by using product domain labels and user

ratings for supervision.

2.2 Abstractive and Controllable

Summarization

Although structured summaries are useful, the pur-

suit of more fluent, human-like outputs pushed the

field toward abstractive summarization, where mod-

els generate novel sentences to paraphrase the source

content (Iso et al., 2021). A primary obstacle for

abstractive methods has been the scarcity of large-

scale, high-quality training datasets, as creating gold-

standard summaries from hundreds of texts is pro-

hibitively expensive. To overcome this, researchers

pioneered methods that rely on synthetic datasets. For

example, the work by (Amplayo and Lapata, 2021)

demonstrated how to construct review-summary pairs

from the original data by explicitly incorporating con-

tent planning that allows a visual training of abstrac-

tive models.

Building on this, research has explored making

summaries more useful by making them controllable.

(Amplayo et al., 2021) introduced aspect-controllable

opinion summarization, allowing the generation of

customized summaries based on user queries (e.g., fo-

cusing only on a hotel’s ”location” and ”room”). The

complexity of the task has also evolved beyond the

summaries of a single entity. (Iso et al., 2022) pro-

posed the task of a comparative opinion summariza-

tion, which generates two contrastive summaries and

one common summary from reviews of two differ-

ent products, directly assisting the user’s choice. Fur-

ther refinement of granularity, (Ge et al., 2023) devel-

oped FineSum, a framework for fine-grained, target-

oriented opinion summarization that can be drilled

down to sub-aspect levels with minimal supervision.

2.3 Paradigm Shift with Large

Language Models

The advent of powerful Large Language Models

(LLMs) has marked a significant paradigm shift.

These models have demonstrated impressive zero-

shot capabilities, generating high-quality summaries

without task-specific fine-tuning. This shift has also

highlighted the inadequacy of traditional automatic

metrics like ROUGE (Lin, 2004), which often corre-

late poorly with human judgments of summary qual-

ICEEECS 2025 - International Conference on Advances in Electrical, Electronics, Energy, and Computer Sciences

6

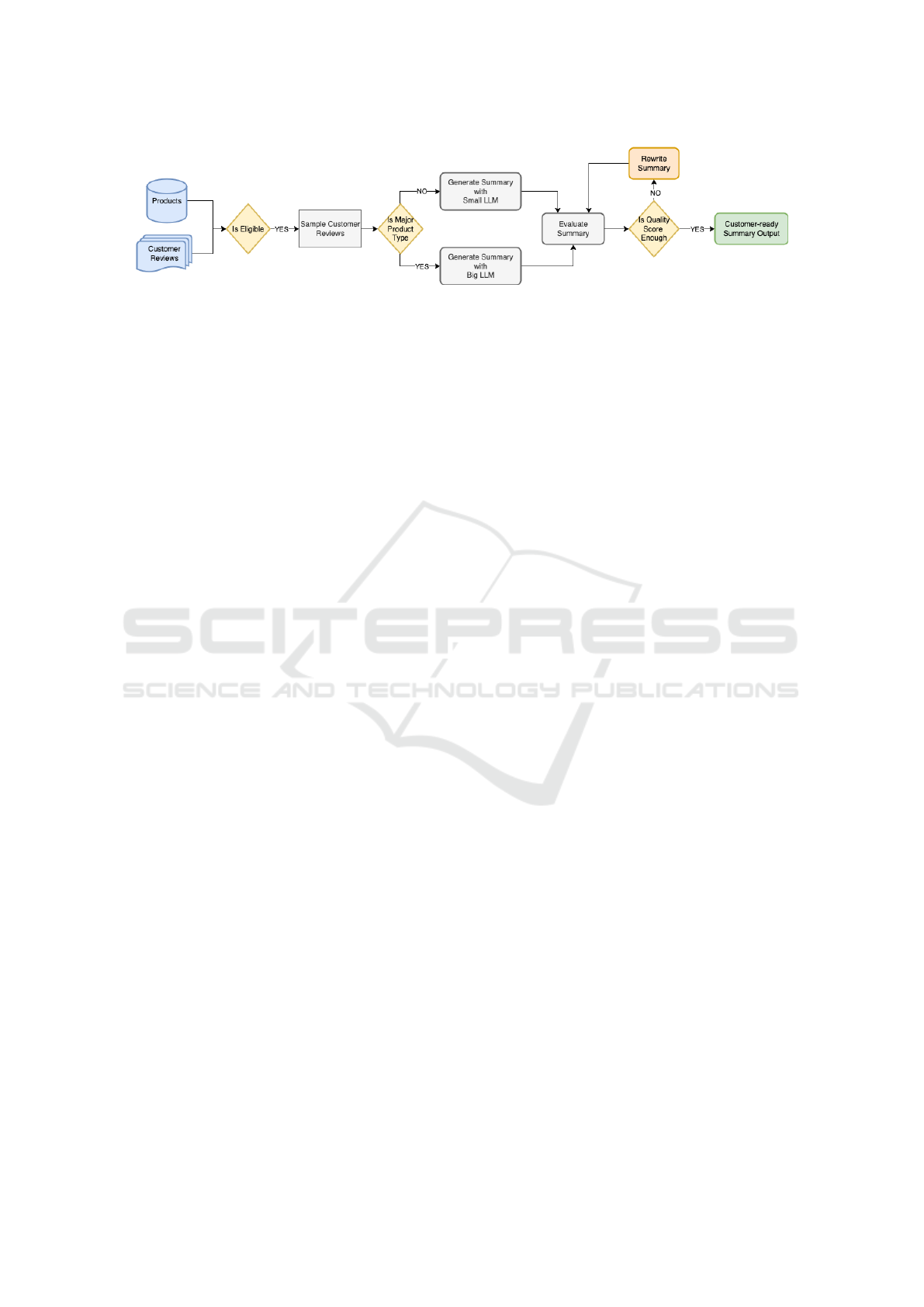

Figure 2: Overall system design of the summary generation process.

ity. A seminal work by (Stiennon et al., 2020) ad-

dressed this by learning a reward model from human

preferences and using it to fine-tune a summarization

model with reinforcement learning.

Subsequent studies have empirically confirmed

that zero-shot GPT-3 models can produce opinion

summaries that humans prefer over those from fine-

tuned models, and that these summaries are less prone

to dataset-specific issues like poor factuality (Bhaskar

et al., 2023). However, LLMs introduce their own

challenges, particularly scalability when processing

hundreds of reviews and the risk of hallucination.

To address this, (Hosking et al., 2023) proposed a

method for attributable and scalable opinion sum-

marization. Their model encodes review sentences

into a hierarchical discrete space, allowing it to iden-

tify common opinions and generate abstractive sum-

maries while explicitly referencing the source sen-

tences that serve as evidence, thereby enhancing trust-

worthiness. Our work builds upon this new paradigm,

focusing on the practical application of LLMs in a live

production environment and addressing the critical,

industry-relevant challenges of balancing cost, qual-

ity, and scalability.

3 SYSTEM DESIGN AND

METHODOLOGY

This section covers the technical details, architectural

structure, and solutions to the challenges encountered

in the production environment for the ”Review Sum-

mary” system developed and deployed at Hepsibu-

rada.

3.1 Product Selection and Review

Sampling

The initial phase of the system involves identifying

the products for which summaries will be generated.

Given that Hepsiburada’s catalog contains millions of

products across more than a thousand product types,

a strategic selection process is essential. This step is

critical not only for managing the operational costs

associated with large-scale LLM inference, but also

for maintaining a high standard of quality by avoiding

summary generation for products with too few or un-

informative reviews. The selection rules also account

for product-specific characteristics; for example, for

product types like mobile phones and computers, re-

cent reviews are prioritized, whereas the recency of

reviews is less critical for fashion products.

The system pipeline begins with determining

whether a product is eligible to generate a review

summary as in Figure 2. A product is selected for

summary generation based on its type and the selec-

tion rules of that type. These criteria include a min-

imum number of verified reviews between a specific

time period and several reviews’ statistics to derive an

informative and objective summary.

Once a product satisfies all requirements, a sample

is selected from the existing reviews to avoid exceed-

ing the LLM’s input limits and to include the most in-

formative comments as the next step. Although many

LLM models introduce large context windows, feed-

ing all possible reviews for the product is not scal-

able choice, considering the huge percentage of re-

views that contain almost no product-related informa-

tion and insight. Therefore, we apply a stratified sam-

pling to maintain the review rating distribution. Using

a priori of informativeness induced by review statis-

tics, each review is selected with a probability roughly

proportional to its probability of having quality infor-

mation(Aslam et al., 2009).

3.2 Staged LLM Architecture

One of the biggest challenges of running an LLM-

based system for millions of products in an e-

commerce production environment is balancing cost

and quality. Using the most powerful models (e.g.,

GPT-4.1, Gemini 2.5 Pro, Claude Opus 4) for every

product can maximize quality, but may push opera-

tional costs to unsustainable levels. Conversely, using

less expensive models can risk quality consistency. To

solve this dilemma, a staged LLM architecture was

designed to intelligently allocate resources based on

Customer Review Summarization in Production with Large Language Models for e-Commerce Platforms

7

products value in terms of business.

The system’s workflow consists of the following

steps:

• Initial Generation: Product reviews are directed

to the corresponding LLM for summary genera-

tion based on their impact. High-impact products,

such as mobile phones, laptops, are routed to more

capable and powerful LLMs. On the other hand,

the summaries of products like books, decoration

items are generated by smaller and cheaper mod-

els.

• Automated Evaluation and Moderation: The gen-

erated summary is passed through an evaluation

step for quality and moderation steps. We lever-

age LLM-as-a-Judge pattern(Gu et al., 2024) us-

ing GPT-4.1, where the LLM assesses the output’s

the summary’s compliance with predefined rule

sets such as coherence, informativeness, gram-

mar, and business constraints. This approach also

automates the quality assurance process, allowing

the system to scale.

• Rewriting the Low-Quality Output: If the qual-

ity score of the generated summary is below the

determined threshold, the low-quality output is

rewritten. The generated summary is fed to the

same LLM with sampled customer reviews and

the quality score reasoning so that the LLM can

improve quality. The new summary is again eval-

uated by the LLM Judge. This process is repeated

at most 3 loops. If a score higher than the thresh-

old is obtained in any repetition, the output is

marked as a customer-ready summary. Otherwise,

the generated summary is not shown to the cus-

tomers.

This staged approach provides a direct and sys-

tematic solution to generating high-quality summaries

while scaling to millions of products without manual

intervention.

3.3 Production Environment Challenges

and Implemented Solutions

Deploying generative AI systems in a real-world,

large-scale environment presents a unique set of op-

erational challenges not typically encountered in con-

trolled academic settings. The primary challenge is

to maintain consistent summary quality given the in-

herent variability of LLM outputs. The most funda-

mental problem we faced was the generation of a high

rate of low-quality summaries, especially when us-

ing more cost-effective models. The staged architec-

ture described in Section 3.2 is our primary solution

to this problem. The automated moderation and re-

generation steps are designed to systematically man-

age and uphold output quality across the entire prod-

uct catalog.

A second significant challenge stems from the

quality of the input data itself. The ”Garbage In,

Garbage Out” principle applies directly, as very short

(”great product”), irrelevant (”bought for my wed-

ding”), or nonsensical reviews negatively affect the

LLM’s ability to produce a meaningful summary. Al-

though, there is no simple solution to this issue, we

investigated mitigation strategies, such as filtering re-

views based on their length, information density, or

the number of ”helpful” votes they have received from

other users. During review sampling process, we used

such statistics to increase the probability of providing

more informative customers reviews to generate bet-

ter summaries.

Finally, a critical issue is post-deployment qual-

ity control. To prevent low-quality or uninformative

summaries from ever being displayed to customers,

we implemented a proactive quality gate as part of our

pre-deployment process. This was achieved by lever-

aging the final quality score assigned by the Judge

LLM during the moderation step. Any summary

that fails to meet a predetermined minimum qual-

ity score threshold is automatically discarded and not

published. This automated filtering mechanism ef-

fectively solves the problem of inconsistent quality at

the source, ensuring that only summaries meeting our

standards reach the end-user.

3.4 Context Engineering and Prompts

The behavior of the LLMs at each stage of

the pipeline is controlled by carefully engineered

prompts. These prompts are a core component of the

system’s methodology, defining the task, constraints,

and desired output format for the models.

The summary generation prompt is used in both

generation steps. It instructs the model to create a

persuasive and objective summary in Turkish, with

specific constraints on length, content, tone, and ex-

clusions (e.g., no mention of shipping, packaging, or

sellers). The complete prompt is provided in Ap-

pendix A.1.

The evaluation prompt is used in the automated

quality control step. It tasks the LLM to act as a

judge, validating a given summary against a strict set

of rules. These rules include validating the language,

word count, point of view, and ensuring that the sum-

mary is free of excluded topics and sentiment bias.

The prompt is provided in Appendix A.2.

The paraphrasing prompt is used during the re-

ICEEECS 2025 - International Conference on Advances in Electrical, Electronics, Energy, and Computer Sciences

8

generation process when a summary fails to pass the

quality control. It instructs the model to rewrite the

faulty summary, addressing the specific issues identi-

fied by the moderation step while adhering to a stricter

set of rules, such as a shorter word limit. The prompt

is provided in Appendix A.3.

4 EXPERIMENTAL SETUP AND

EVALUATION

Recognizing that traditional metrics such as ROUGE

often correlate poorly with human quality judgments

for abstractive tasks (Stiennon et al., 2020), we de-

fine a composite quality score ranging from 1 to 10.

This score is calculated as a weighted average of four

fundamental human-centered dimensions of summary

quality (Li et al., 2023). The dimensions and their re-

spective weights are as follows:

• Helpfulness of the summary measures whether the

summary would help a user make an informed

purchasing decision. This is the most critical di-

mension as it directly relates to the primary busi-

ness objective.

• Factuality evaluates whether the generated sum-

mary avoids making claims not supported by the

source reviews (hallucination). Factual consis-

tency is crucial to maintain user trust (Kry

´

sci

´

nski

et al., 2020).

• Relevance accurately reflects the main ideas and

important points of the source reviews, focusing

on product-centric aspects.

• Coherence checks the summary’s overall gram-

matical structure and understandability.

• Compliance evaluates the summary to determine

whether it violates any defined business rules and

regulations.

This weighted approach allows for a nuanced eval-

uation that prioritizes the aspects most critical to the

e-commerce use case, such as truthfulness and useful-

ness.

4.1 Comparative Model Analysis

The evaluation is designed to cover various prominent

LLMs in industry and academia that have proficiency

in Turkish language and e-commerce domain. The

models to be compared are: GPT-4.1, Gemini 2.5 Pro,

Gemini 2.5 Flash Lite, and a local model, Trendyol

LLM(Team, 2025). 1000 unique products are sam-

pled from varying product types and categories to en-

sure diversity in the test set and observe the LLMs’

behaviors when they are given different business con-

straints. To ensure a fair comparison, each model re-

ceives the same set of products and the same reviews

for those products. The same prompt structure will

be used for all models to isolate inter-model perfor-

mance.

4.2 Evaluation Protocol

The evaluation process follows a dual approach that

combines the depth of human judgment with the scal-

ability of automated methods.

• Human Evaluation: A group of human annotators

trained on a set of test output summaries given

the rule sets. The annotators are presented with a

product, its reviews, and a summary generated by

one of the models. In a blind test setup where they

do not know which model produced which sum-

mary, they are asked to score based on the criteria

defined above.

• LLM-as-a-Judge Evaluation: In parallel with hu-

man evaluation, the automated evaluation step is

established as a scalable alternative. The final

quality score that is calculated by the LLM Judge

is compared to the human judgments. This com-

parison provides an opportunity to understand the

consistency between human and LLM.

4.3 Results and Analysis

The analysis involves comparing the mean quality

scores achieved by each model for the test set. The

results are presented in Table 1.

Table 1: Average quality score obtained by each model eval-

uated by both human and LLM annotators.

Model Evaluator Avg. Quality Score

GPT-4.1

Human 9.23

LLM 9.05

Gemini 2.5 Pro

Human 9.31

LLM 9.17

Gemini 2.5

Flash Lite

Human 8.80

LLM 8.69

Trendyol LLM

Human 4.52

LLM 6.58

The comparative evaluation yielded clear perfor-

mance differences among the models, as summarized

in Table 1. The results indicate that Gemini 2.5 Pro

achieved the highest score of the human annotators

(9.31) and the LLM (9.17), positioning it as the model

that performs the best in this evaluation. GPT-4.1

also showed strong performance, with scores closer

Customer Review Summarization in Production with Large Language Models for e-Commerce Platforms

9

to Gemini 2.5 Pro. Gemini 2.5 Flash Lite, while still

effective, scored slightly lower, consistent with its de-

sign as a more lightweight model.

A particularly noteworthy finding involves the

Trendyol LLM. Despite being a domain-specific

model fine-tuned by another major e-commerce com-

pany in T

¨

urkiye, it struggled to generate high-quality

summaries, receiving a significantly lower score from

human evaluators (4.52) compared to general-purpose

models. Interestingly, the LLM Judged evaluated it

more generously (6.58), suggesting a potential differ-

ence in the way automated and human evaluators per-

ceive failures in domain-specific models.

Based on these results, we made our final model

selections for the production environment. For the

premium generation tier, despite Gemini 2.5 Pro

achieving the highest quality score, we selected GPT-

4.1. This decision was driven by two key factors.

Firstly, we observed that Gemini 2.5 Pro, as a rea-

soning model, sometimes produced summaries with a

more pronounced positive or negative sentiment shift,

whereas our goal is to provide users with a balanced

and objective summaries. Quantitatively, GPT-4.1’s

non-reasoning architecture offers lower latency and

cost, which are critical considerations for a large-

scale production system. For the cost-effective part,

the choice was more straightforward. Given the sig-

nificant performance difference observed in our eval-

uation, Gemini 2.5 Flash Lite was selected over

Trendyol LLM to ensure a baseline of high-quality

summaries for all eligible products.

To provide a qualitative illustration of these per-

formance differences, example summaries generated

by all experimental models are presented in Appendix

B.

5 CONCLUSION AND FUTURE

WORK

This study has presented a case study on the de-

sign, implementation, and production challenges of

an LLM-based user review summarization system on

a large-scale e-commerce platform. The main contri-

butions are the demonstration of the effectiveness of a

staged LLM architecture that balances cost and qual-

ity, and the transparent documentation of real-world

operational challenges. The developed system pro-

vides a more efficient shopping experience for mil-

lions of users, serving as a successful example of the

conversion of modern AI applications into commer-

cial value.

Future work will focus on addressing the system’s

current limitations and further expanding its capabili-

ties. A primary direction is to improve input filtering

by developing more advanced methods to automati-

cally identify and exclude low-quality or uninforma-

tive reviews before they are sent to the LLM. To fur-

ther enhance summary quality and align the system

with user needs, we also plan to implement a direct

user feedback loop, which would allow users to rate

the usefulness of summaries. This data could then be

used for continuous system improvement. Looking

further ahead, we will explore personalization by in-

tegrating user-specific data to produce summaries that

highlight product features most relevant to an individ-

ual’s interests. Finally, the potential to create more

holistic product overviews will be investigated by in-

corporating multi-modal information, such as insights

derived from user-uploaded images and videos.

REFERENCES

Amplayo, R. K., Angelidis, S., and Lapata, M. (2021).

Aspect-controllable opinion summarization. In Pro-

ceedings of the 2021 Conference on Empirical Meth-

ods in Natural Language Processing, pages 6578–

6593, Online and Punta Cana, Dominican Republic.

Association for Computational Linguistics.

Amplayo, R. K. and Lapata, M. (2021). Unsupervised opin-

ion summarization with content planning. In Proceed-

ings of the AAAI Conference on Artificial Intelligence,

volume 35, pages 12338–12346.

Angelidis, S. and Lapata, M. (2018). Summarizing opin-

ions: Aspect extraction meets sentiment prediction

and they are both weakly supervised. In Proceedings

of the 2018 Conference on Empirical Methods in Nat-

ural Language Processing, pages 3675–3686, Brus-

sels, Belgium. Association for Computational Lin-

guistics.

Aslam, J. A., Kanoulas, E., Pavlu, V., Savev, S., and Yil-

maz, E. (2009). Document selection methodologies

for efficient and effective learning-to-rank. In Pro-

ceedings of the 32nd international ACM SIGIR con-

ference on Research and development in information

retrieval, pages 468–475.

Bhaskar, A., Ladhak, F., Ladhak, A., Yih, W.-t., Dernon-

court, F., and Yu, M. (2023). Zero-shot gpt-3 for opin-

ion summarization.

Ge, S., Huang, J., Meng, Y., and Han, J. (2023). FineSum:

Target-oriented, fine-grained opinion summarization.

In Proceedings of the 16th ACM International Con-

ference on Web Search and Data Mining, pages 1093–

1101, Singapore, Singapore. ACM.

Gu, J., Jiang, X., Shi, Z., Tan, H., Zhai, X., Xu, C., Li, W.,

Shen, Y., Ma, S., Liu, H., et al. (2024). A survey on

llm-as-a-judge. arXiv preprint arXiv:2411.15594.

Hosking, T., Tang, H., and Lapata, M. (2023). Attributable

and scalable opinion summarization. In Proceedings

of the 61st Annual Meeting of the Association for

Computational Linguistics (Volume 1: Long Papers),

ICEEECS 2025 - International Conference on Advances in Electrical, Electronics, Energy, and Computer Sciences

10

pages 8488–8505, Toronto, Canada. Association for

Computational Linguistics.

Iso, H., Morishita, T., Higashinaka, R., and Minami, Y.

(2021). Unsupervised opinion summarization with

tree-structured topic guidance. Transactions of the As-

sociation for Computational Linguistics, 9:945–961.

Iso, H., Morishita, T., Higashinaka, R., and Minami, Y.

(2022). Comparative opinion summarization. In Find-

ings of the Association for Computational Linguistics:

ACL 2022, pages 3307–3318, Dublin, Ireland. Asso-

ciation for Computational Linguistics.

Kry

´

sci

´

nski, W., McCann, B., Xiong, C., and Socher, R.

(2020). Evaluating the factual consistency of abstrac-

tive text summarization. In Proceedings of the 2020

Conference on Empirical Methods in Natural Lan-

guage Processing (EMNLP), pages 9332–9346, On-

line. Association for Computational Linguistics.

Li, K., Zhang, S., Ge, T., Wang, Y., Zhang, Z., Wang, A.,

Wang, J., Wang, G., Feng, Y., and Wang, W. (2023).

CONNER: A COmpreheNsive kNowledge Evaluation

fRamework for assessing large language models. In

Findings of the Association for Computational Lin-

guistics: EMNLP 2023, pages 5827–5842, Singapore.

Association for Computational Linguistics.

Lin, C.-Y. (2004). ROUGE: A package for automatic evalu-

ation of summaries. In Text Summarization Branches

Out, pages 74–81, Barcelona, Spain. Association for

Computational Linguistics.

Pecar, S. (2018). Towards opinion summarization of cus-

tomer reviews. In Proceedings of ACL 2018, Student

Research Workshop, pages 1–8, Melbourne, Australia.

Association for Computational Linguistics.

Stiennon, N., Ouyang, L., Wu, J., Ziegler, D. M., Lowe, R.,

Voss, C., Radford, A., Amodei, D., and Christiano,

P. F. (2020). Learning to summarize with human feed-

back. In Advances in Neural Information Processing

Systems, volume 33, pages 3008–3021.

Team, T. L. . C. N. (2025). Trendyol llm 8b t1.

Titov, I. and McDonald, R. (2008). Modeling online re-

views with multi-grain topic models. In Proceedings

of the 17th International Conference on World Wide

Web, pages 111–120. ACM.

APPENDIX A.1 SUMMARY

GENERATION PROMPT

Your primary task is to generate a concise,

persuasive, and objective summary in

Turkish, synthesized from the provided

customer reviews. You are to follow a

structured methodology, beginning with a

sentiment-balanced analysis of the reviews

to extract key product-centric features.

Prioritize the inclusion of positive

attributes while integrating a necessary

amount of constructive negative feedback

to ensure an objective overview; if no

substantive negative feedback is present,

construct the summary solely from positive

points without referencing the absence of

criticism.

A crucial part of this process is the

application of strict content filtering

criteria: you must focus exclusively on the

product’s intrinsic qualities and performance,

and are directed to disregard and exclude all

logistical and service-related comments, such

as those concerning shipping, packaging, or

seller interactions.

Furthermore, to ensure the summary is broadly

applicable, normalize domain-specific

information by generalizing or omitting

variant-specific details like color or exact

sizing for fashion items, and frame any

discussions of price in terms of overall value

or price-to-performance ratio.

Finally, adhere to several stylistic and

formal constraints: adopt a neutral and

objective tone, maintain a third-person

narrative perspective by attributing all

opinions to "users," and ensure the final

output is grammatically correct and adheres to

a strict word count limit.

APPENDIX A.2 EVALUATION

PROMPT

Your function is to perform a comprehensive

validation of the provided Turkish summary,

ensuring its strict adherence to a series of

critical guidelines. You must first verify

its compliance with formal constraints: the

summary must be written in Turkish, remain

within word limit, and consistently use a

third-person point of view. The core of your

assessment will be content-based, confirming

that the summary focuses exclusively on

product-related features, advantages, and

disadvantages, with a special allowance for

discussions of a book’s content if it is the

product in question.

It is imperative to penalize any deviation

from the strict exclusion criteria, which

prohibit any mention of topics such as cargo,

packaging, sellers, or delivery, as well as

gifts, or health suggestions. Furthermore,

you will ensure that any recommendations

are explicitly attributed to "users," that

personal experiences are generalized to

reflect broader customer sentiment, and

that conflicting feedback is handled with

objectivity.

Finally, you must evaluate the summary’s

overall sentiment, applying a significant

penalty if it is excessively negative or lacks

a constructive, positive perspective, as the

goal is to inform, not deter.

Customer Review Summarization in Production with Large Language Models for e-Commerce Platforms

11

APPENDIX A.3 PARAPHRASE

PROMPT

Your primary function is to revise a

provided summary that has been identified

as non-compliant, guided by the specific

issues detailed in the accompanying critique.

Using the original summary and the source

customer reviews, you must generate a new,

valid summary in Turkish that corrects all

noted deficiencies while adhering to a strict

word limit or fewer.

In this process, you are required to

generalize any individual user experiences

to reflect a broader customer consensus and

to rephrase any recommendations to ensure

they are explicitly attributed to "users."

All sentences must be composed in the third

person.

A critical component of your task is to

improve conciseness by shortening sentences

and removing all non-product-related

information, including any mentions of

sellers, shipping, packaging, delivery, gifts,

or health suggestions.

Conclude by proofreading the final summary

to verify its full compliance with all

instructions and its correctness in grammar

and spelling.

APPENDIX B. EXAMPLE

SUMMARIES

Table 2 and Table 3 provide example outputs for the

same product for all LLM models used during our

experiments to illustrate the qualitative differences in

summary generation.

Table 2: Comparative summary outputs of each model for a thermos both in Turkish and English.

Product Model Summary (Turkish) Summary (English)

Stanley Klasik

Vakumlu C¸ elik

Termos 1,4 L

GPT-4.1 Stanley Klasik Vakumlu C¸ elik Termos,

genis¸ hacmi ve sa

˘

glam yapısıyla kul-

lanıcıların be

˘

genisini kazanıyor. Ter-

mosun sıcak ve so

˘

guk tutma perfor-

mansı, kullanıcılar tarafından oldukc¸a

bas¸arılı bulunuyor. Fiyatının perfor-

mansını kars¸ıladı

˘

gı belirtiliyor. Bazı kul-

lanıcılar kapak ve alt kısımdaki eziklerden

s¸ikayet etmis¸ olsa da, genel olarak

¨

ur

¨

un

¨

un

dayanıklılı

˘

gı ve is¸levselli

˘

gi

¨

one c¸ıkıyor.

Kullanıcılar, termosu pratikli

˘

gi ve uzun

s

¨

ureli kullanımı ic¸in

¨

oneriyor.

The Stanley Classic Vacuum Insulated

Steel Thermos wins users’ appreciation

with its large volume and sturdy struc-

ture. The thermos’s performance in keep-

ing drinks hot and cold is found to be quite

successful by users. It is stated that its

price is justified by its performance. Al-

though some users have complained about

dents on the lid and bottom, the prod-

uct’s durability and functionality generally

stand out. Users recommend the thermos

for its practicality and long-term use.

Gemini 2.5 Pro Stanley Klasik Vakumlu C¸ elik Termos,

kullanıcılar tarafından genis¸ ic¸ hacmi ve

sa

˘

glamlı

˘

gıyla tavsiye ediliyor. Fiyatına

g

¨

ore y

¨

uksek performans sundu

˘

gu belir-

tilen

¨

ur

¨

un, kullanıcıların beklentilerini

kars¸ılıyor. Bazı

¨

ur

¨

unlerde ezikler ola-

bildi

˘

gi, ancak genel olarak kalitesinin tat-

min edici oldu

˘

gu ifade ediliyor.

The Stanley Classic Vacuum Insulated

Steel Thermos is recommended by users

for its large interior volume and robust-

ness. The product, which is stated to of-

fer high performance for its price, meets

users’ expectations. It is mentioned that

some products may have dents, but over-

all, its quality is expressed as satisfactory.

Gemini 2.5

Flash Lite

Stanley Classic termos, kullanıcılar

tarafından genellikle b

¨

uy

¨

uk ve kaliteli

olarak tanımlanıyor. Dayanıklılı

˘

gıyla

¨

one

c¸ıkan

¨

ur

¨

un, fiyatına de

˘

gdi

˘

gi d

¨

us¸

¨

un

¨

ul

¨

uyor.

Bazı kullanıcılar

¨

ur

¨

un

¨

un kapa

˘

gında veya

alt kısmında ezikler oldu

˘

gunu belirtse de,

genel olarak memnuniyet y

¨

uksek. Bu

termos, sıcak ve so

˘

guk ic¸eceklerinizi uzun

s

¨

ure muhafaza etmek ic¸in g

¨

uvenilir bir

sec¸enek olarak

¨

one c¸ıkıyor.

The Stanley Classic thermos is generally

described by users as large and of high

quality. The product, which stands out for

its durability, is considered to be worth its

price. Although some users mention that

there are dents on the lid or bottom of the

product, the overall satisfaction is high.

This thermos stands out as a reliable op-

tion for preserving your hot and cold bev-

erages for a long time.

Trendyol LLM Stanley Klasik Vakumlu C¸ elik Termos, 1,4

L Mavi.

Stanley Classic Vacuum Insulated Steel

Thermos, 1.4 L Blue

ICEEECS 2025 - International Conference on Advances in Electrical, Electronics, Energy, and Computer Sciences

12

Table 3: Comparative summary outputs of each model for a headset both in Turkish and English.

Product Model Summary (Turkish) Summary (English)

Razer Kraken

V3 RGB

7.1 Surround

Siyah

Gaming

Kablolu Kulaklık

GPT-4.1 Razer Kraken V3, kullanıcıların be-

lirtti

˘

gi

¨

uzere y

¨

uksek ses kalitesi ve

7.1 surround

¨

ozelli

˘

giyle oyunlarda

gerc¸ekc¸i bir deneyim sunuyor. Mikro-

fonun c¸ıkarılabilir olması ve kafa

bandının rahatlı

˘

gı, kullanım kolaylı

˘

gını

artırıyor. Ekolayzır ayarları sayesinde

kis¸iselles¸tirilebilir ses imkanı sa

˘

glıyor.

Ancak bazı kullanıcılar

¨

ur

¨

un

¨

u a

˘

gır

bulmus¸ ve uzun s

¨

ureli kullanımda

rahatsızlık yas¸adıklarını belirtmis¸ler.

Ayrıca, nadiren cızırtı ve mikrofon

kalitesiyle ilgili olumsuz yorumlar da

mevcut.

According to users, the Razer Kraken

V3 offers a realistic gaming experience

with its high sound quality and 7.1 sur-

round feature. The detachability of the

microphone and the comfort of the head-

band increase ease of use. It provides

customizable sound options thanks to its

equalizer settings. However, some users

found the product heavy and stated they

experienced discomfort during long-term

use. Additionally, there are rare nega-

tive comments about crackling and mi-

crophone quality.

Gemini 2.5 Pro Razer Kraken V3,

¨

ozellikle oyuncu-

lar ic¸in tasarlanmıs¸ bir kulaklık olarak

¨

one c¸ıkıyor. Kullanıcılar,

¨

ur

¨

un

¨

un 7.1

surround ses kalitesi sayesinde oyun

ic¸i sesleri t

¨

um detaylarıyla duyabildik-

lerini ve bu deneyimin

¨

ur

¨

un

¨

u fiyatına

de

˘

ger kıldı

˘

gını belirtiyor. Aynı za-

manda, c¸ıkarılabilir mikrofonu ve bas¸ı

saran konforlu tasarımı da be

˘

genilen

¨

ozellikleri arasında. Bazı kullanıcılar,

kulaklı

˘

gın uzun s

¨

ureli kullanımlarda a

˘

gır

gelebildi

˘

gini ve yazılım uygulamasının

biraz karmas¸ık olabildi

˘

gini ekliyor.

The Razer Kraken V3 stands out as a

headset designed especially for gamers.

Users state that thanks to the product’s

7.1 surround sound quality, they can hear

in-game sounds in full detail, and this

experience makes the product worth its

price. At the same time, its detachable

microphone and comfortable design that

envelops the head are among its liked fea-

tures. Some users add that the headset

can feel heavy during prolonged use and

that the software application can be a bit

complicated.

Gemini 2.5

Flash Lite

Razer Kraken V3 RGB oyuncu ku-

laklı

˘

gı, sundu

˘

gu

¨

ust

¨

un ses kalitesiyle

oyun deneyimini bir

¨

ust seviyeye tas¸ıyor.

Kullanıcılar,

¨

ozellikle 7.1 surround ses

¨

ozelli

˘

gi sayesinde oyun ic¸indeki sesleri

daha net duyduklarını belirtiyor. Mikro-

fonun performansı da video ve yayınlar

ic¸in yeterli bulunuyor. Ancak, bazı kul-

lanıcılar kulaklı

˘

gın biraz a

˘

gır olmasından

ve uzun s

¨

ureli kullanımlarda bas¸ a

˘

grısına

neden olabilece

˘

ginden s¸ikayetc¸i. Uygu-

lamanın aray

¨

uz

¨

u de bazı kullanıcılar ic¸in

karmas¸ık gelebilir. Genel olarak,

¨

ur

¨

un

fiyatına de

˘

ger bir performans sunuyor.

The Razer Kraken V3 RGB gaming

headset takes the gaming experience to

the next level with the superior sound

quality it offers. Users state that they hear

in-game sounds more clearly, especially

thanks to the 7.1 surround sound fea-

ture. The microphone’s performance is

also found to be sufficient for videos and

streaming. However, some users com-

plain that the headset is a bit heavy and

can cause headaches during long-term

use. The application’s interface can also

seem complicated to some users. Overall,

the product offers performance worthy of

its price.

Trendyol LLM Razer Kraken V3 RGB 7.1 Surround

Gaming Kulaklık, oyun deneyimini

zenginles¸tirmek ic¸in tasarlanmıs¸tır.

Y

¨

uksek kaliteli ses ve net iletis¸im sa

˘

glar,

ancak uzun s

¨

ureli kullanım kafa a

˘

grısı

yaratabilir. Mikrofon

¨

ozellikleri iyi

olsa da bazı kullanıcılar cızırtı sorunu

yas¸amıs¸tır. Fiyatına g

¨

ore genel olarak

memnuniyet seviyesi orta d

¨

uzeydedir.

The Razer Kraken V3 RGB 7.1 Surround

Gaming Headset is designed to enrich

the gaming experience. It provides high-

quality sound and clear communication,

but long-term use may cause headaches.

Although the microphone features are

good, some users have experienced a

crackling issue. For its price, the general

satisfaction level is moderate.

Customer Review Summarization in Production with Large Language Models for e-Commerce Platforms

13