Real-Time Waste Detection Using YOLO, SSD, and Faster R-CNN Integrated with CNN-Based Classification

Lawryan Andrew Darisang, Samuel Krishna Dwisetio, Ivan Sebastian Edbert and Alvina Aulia

Computer Science Department, School of Computer Science, Bina Nusantara University, Jakarta, 11480, Indonesia

Keywords: YOLOv11, SSD, Faster R-CNN, CNN, Real-Time Waste Classification.

Abstract: A substantial amount of unmanaged waste poses serious health and environmental risks, particularly in Indonesia, where 13.4 million tons were left unmanaged in 2024. Manual waste sorting is inefficient, labor-intensive, and prone to error, creating an urgent need for automated waste classification systems. This study proposes a real-time, camera-based waste classification approach that integrates object detection models with a Convolutional Neural Network (CNN) classifier, all fine-tuned through transfer learning. The object detection models YOLOv11, SSD-MobileNetV3, and Faster R-CNN with a ResNet50 FPN backbone were trained on the TACO and Trash-ICRA19 datasets, while the MobileNetV2-based CNN classifier was trained on the Domestic Waste Classification dataset. The MobileNetV2 classifier achieved 85.02% accuracy with a macro F1-score of 85%. Among the object detection models, YOLOv11 performed best, achieving a mean Average Precision (mAP@.5:.95) of 55.69% with an average inference time of 14.1 ms and 71.10 frames per second. The results indicate that YOLOv11 combined with a CNN classifier offers an efficient and accurate solution for real-time waste classification and scalable waste management applications.

1 INTRODUCTION

Waste is one of the major environmental concerns facing the world today. Rising waste production is driven directly by continued population growth. According to Indonesia's National Waste Management Information System (SIPSN), approximately 33 million tons of waste were produced in 2024. However, only about 59.82% (20 million tons) was managed properly, while the remaining 40.18%, approximately 13.4 million tons, was left unmanaged (Ministry of Environment and Forestry of Indonesia, 2024). This problem stems from a lack of knowledge and public awareness about proper waste classification, which results in improper and inefficient waste disposal. These large volumes of unmanaged waste significantly affect the environment and public health.

Traditional approaches to sorting waste are no longer sufficient because they cannot keep up with the growth of waste production. These manual approaches are also inefficient, labor-intensive, and prone to human error. Therefore, there is an urgent need for automated waste classification systems that improve efficiency and accuracy while reducing operational costs (Fang et al., 2023).

Advances in Artificial Intelligence (AI), combined with camera vision, provide promising solutions for realizing automated waste classification systems. Convolutional Neural Networks (CNNs) enable machines to classify waste accurately (Haqqi et al., 2024). Several architectures widely used for object detection, such as SSD (Single Shot MultiBox Detector), Faster R-CNN, and YOLO (You Only Look Once), can be applied to waste detection (Wahyutama and Hwang, 2022), (Ma, Wang, and Yu, 2020), (Kulkarni and Kannamangalam Sundara Raman, 2019).

YOLO is well known for its fast, single-pass object detection, which makes it appropriate for real-time applications, although prioritizing speed can cost it some accuracy. In contrast, Faster R-CNN offers good accuracy but slower processing. SSD sits between the two: it is faster than Faster R-CNN and more accurate than YOLO (Dakari Aboyomi and Daniel, 2023). By combining an object detection architecture with a CNN classifier and camera vision, it is possible to build a system that detects waste in real time and classifies it with high precision into categories such as recyclable and organic waste.

Darisang, L. A., Dwisetio, S. K., Edbert, I. S. and Aulia, A.
Real-Time Waste Detection Using YOLO, SSD, and Faster R-CNN Integrated with CNN-Based Classification.
DOI: 10.5220/0014276000004928
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 1st International Conference on Research and Innovations in Information and Engineering Technology (RITECH 2025), pages 76-83
ISBN: 978-989-758-784-9
Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

While these models have been explored individually, a systematic side-by-side comparison under real-time conditions for waste management is notably absent. This study aims to fill that gap by using camera vision to compare the performance of YOLO, SSD, and Faster R-CNN for real-time waste detection, with a CNN handling classification. The key performance metrics are mean Average Precision (mAP), inference time, and frames per second (FPS) for object detection, and accuracy and F1-score for classification. The goal is to find the best architecture for building an accurate, fast, and scalable automated waste classification system.

2 LITERATURE REVIEW

The development of AI, especially in machine learning and computer vision, offers promising opportunities for automating waste sorting. Object detection architectures such as YOLO, SSD, and Faster R-CNN, together with CNN classifiers, are among the technologies being developed for this purpose, and they are frequently highlighted for their potential in real-time waste classification. Previous studies have shown that CNN-based models perform well in classification tasks but struggle to balance speed, accuracy, and computational efficiency in real-world applications (Dwiatmoko et al., 2024).

A past study that used YOLO models to classify plastic waste (Li et al., 2022) evaluated the accuracy and computational efficiency of several YOLO versions, ranging from YOLO-11m to YOLO-10n. YOLO-11m achieved a high accuracy of 98.03%, whereas YOLO-10n was faster but showed a slight trade-off in classification accuracy. This result implies that, although YOLO is very fast and well suited to real-time applications, obtaining the ideal speed-accuracy balance is still difficult. This problem is critical to waste management, since efficient waste sorting and recycling depend on quick, precise classification (Wahyutama and Hwang, 2022).

Another study examined Faster R-CNN (Yan et al., 2021), a model that is more accurate than YOLO, particularly in intricate detection situations with several overlapping objects. That study highlighted the model's performance in categorizing household waste products and reported encouraging findings (Yan et al., 2021). However, it also showed that, despite its excellent accuracy, Faster R-CNN has slower inference times and requires more processing power than YOLO and SSD. These characteristics make Faster R-CNN less suitable for real-time applications such as waste sorting, even though they help it achieve high accuracy. As a result, the current literature clearly illustrates the trade-off between speed and accuracy (Kulkarni and Kannamangalam Sundara Raman, 2019).


Furthermore, the Single Shot MultiBox Detector (SSD) has also been investigated for waste sorting because of its capacity to balance speed and accuracy (Fang, 2022). SSD is recognized for its effectiveness in real-time object detection because it generates multiple default bounding boxes with different aspect ratios at every feature map location. Research comparing SSD with other architectures, such as Faster R-CNN and YOLO, frequently notes that while SSD is faster, it can lose accuracy on smaller objects or in more complicated waste scenes (Ma, Wang, and Yu, 2020).

Despite these developments, much of the existing research focuses on single models or makes comparisons without carefully examining the critical performance indicators needed for practical waste classification systems. For instance, some studies have concentrated on improving classification accuracy with sophisticated CNN structures, while others have attempted to enhance YOLO or Faster R-CNN to perform better in dynamic settings. However, research that systematically evaluates these models across several crucial performance criteria, including inference speed, computational resource requirements, and practical applicability, is still lacking.

These gaps in the literature highlight the need for a more thorough investigation that directly compares YOLO, SSD, and Faster R-CNN in the context of waste classification. This study attempts to close the gap and provide comprehensive information about the advantages and drawbacks of each model by methodically assessing them against a variety of criteria.

3 METHODOLOGY

3.1 Research Framework

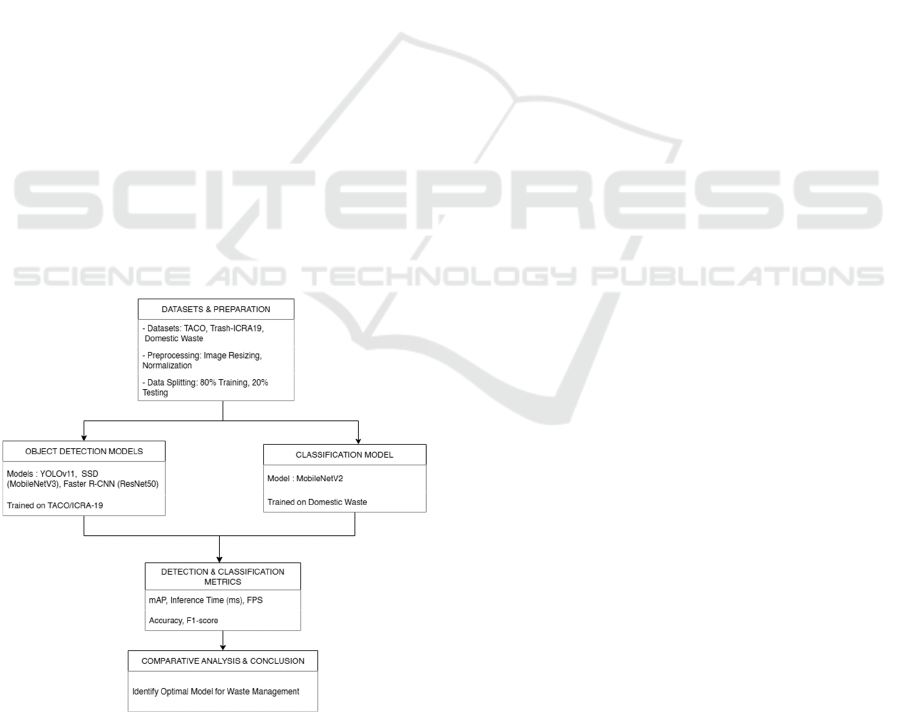

The methodological framework of this research was structured to facilitate a rigorous and reproducible comparison of deep learning models for real-time waste detection and classification. An overview of this end-to-end workflow is presented in Figure 1. The framework begins with the acquisition and preprocessing of the designated datasets. The process then diverges into two parallel tracks: one dedicated to fine-tuning three well-known object detection architectures (YOLOv11, SSD, and Faster R-CNN), and another for the classification model (MobileNetV2). After training, each model is evaluated against a set of predefined, task-specific performance metrics. The framework culminates in a comparative analysis of these metrics to identify the best architecture for the proposed automated waste management system.

Figure 1. Proposed Research Framework.

3.2 Datasets

This study used a total of three publicly available

datasets. Two datasets, TACO (Trash Annotations in

Context) and Trash-ICRA19, were used to train and

evaluate the object detection models. The other

dataset, the Domestic Waste Classification dataset

from the 209Sontung GitHub repository, was used for

the classification model.

The TACO dataset consists of approximately 1,500 real-world waste images captured in diverse environments (Proença and Hua, 2020). These images are annotated with various common waste categories, including materials such as metal (e.g., cans, foil), plastic (e.g., bottles, caps), and paper/cardboard (e.g., cartons, tubes). The Trash-ICRA19 dataset consists of over 7,600 underwater waste images (Fulton, Hong, and Sattar, 2020). It focuses specifically on marine litter, labeling different types of trash found in underwater settings.

To increase the diversity and robustness of the object detection models, the two datasets were combined into a single dataset for model training and evaluation. Images without annotations were removed, resulting in a final dataset of 9,077 images across 13 classes: bio (organic), cloth, fishing, glass, metal, non-recyclable, paper, plastic, rov (man-made underwater equipment/robotic parts), rubber, timestamp, unknown, and wood. Preprocessing consisted only of resizing all images to 640 × 640 pixels. Finally, the annotations were converted to the format each detection model requires: the YOLO TXT format for YOLOv11, the COCO JSON format for Faster R-CNN (ResNet50 + FPN), and the TFRecord format for SSD (with a MobileNetV3 backbone).
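As an illustration of the annotation conversion described above, the following minimal Python sketch maps a COCO-style box, given as [x_min, y_min, width, height] in pixels, to a YOLO TXT label line with normalized center coordinates. The function names and the example box are hypothetical and are not taken from the authors' code; the 640 × 640 image size follows the preprocessing described above.

```python
def coco_to_yolo(bbox, img_w, img_h):
    """Convert a COCO box [x_min, y_min, width, height] in pixels to
    YOLO (x_center, y_center, width, height), each normalized to [0, 1]."""
    x_min, y_min, w, h = bbox
    return (
        (x_min + w / 2) / img_w,
        (y_min + h / 2) / img_h,
        w / img_w,
        h / img_h,
    )


def yolo_label_line(class_id, bbox, img_w, img_h):
    """Format one object as a line of a YOLO TXT label file."""
    xc, yc, w, h = coco_to_yolo(bbox, img_w, img_h)
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


# Hypothetical example: class index 7, a 320 x 160 px box in a 640 x 640 image.
line = yolo_label_line(7, [160, 240, 320, 160], 640, 640)
print(line)
```

Because YOLO coordinates are normalized, the same label line stays valid if the image is later resized without changing its aspect ratio.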

For the classification model, the dataset consisted

of 3,495 images of waste, categorized into three main

classes: recyclable, organic, and non-organic waste.

Preprocessing involved resizing images to 320 × 320

pixels and applying normalization to scale pixel

values. There was no additional preprocessing or

augmentation applied. This dataset was used to train

and evaluate the classification model (Tung, 2021).

3.3 Model and Architecture

3.3.1 YOLO

YOLO is a one-stage object detection architecture that uses a single CNN to predict both bounding box coordinates and class probabilities directly from full images in a single forward pass. This design lets YOLO combine high accuracy with real-time detection performance. In this study, YOLOv11 is used because it is among the most recent versions of YOLO, offering significant improvements over its predecessors. YOLOv11 features an anchor-free detection paradigm, an enhanced attention mechanism, and deeper feature extraction (Alif, 2024). The architecture consists of several key modules: the C3k2 block for efficient feature extraction, SPPF (Spatial Pyramid Pooling - Fast) for multi-scale feature aggregation, and Cross Stage Partial with Spatial Attention (C2PSA) for enhanced spatial attention. Additionally, the detection head uses multi-scale prediction layers to improve detection performance across objects of varying sizes, from small to large (Khanam and Hussain, 2024).

3.3.2 SSD

SSD is an effective one-stage object detection architecture that predicts bounding boxes and class labels directly from feature maps, making real-time detection possible. SSD can recognize objects of varying sizes by using multi-scale feature maps from different network layers (Fang et al., 2023), (Ma, Wang, and Yu, 2020).

SSD uses Focal Loss to handle class imbalance by down-weighting easy negative samples, allowing the model to focus on hard or misclassified examples. In this study, SSD uses MobileNetV3 as the backbone, which includes depthwise separable convolutions to reduce computation, inverted residual structures, linear bottlenecks, and squeeze-and-excitation blocks. These components improve computational efficiency while maintaining high detection accuracy (Lu et al., 2020).
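To make the down-weighting effect concrete, the sketch below compares plain cross-entropy with the binary focal loss, FL(p_t) = -α_t (1 - p_t)^γ log(p_t), for an easy and a hard negative sample. The paper does not state its focal loss settings; α = 0.25 and γ = 2 are commonly used defaults and are an assumption here, as are the example probabilities.

```python
import math


def cross_entropy(p, y):
    """Binary cross-entropy for one prediction; p in (0, 1), y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss: cross-entropy scaled by alpha_t * (1 - p_t) ** gamma.

    alpha and gamma are assumed defaults, not values reported in the paper.
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# Easy negative: background predicted as object with probability 0.01.
easy_ratio = focal_loss(0.01, 0) / cross_entropy(0.01, 0)
# Hard negative: background predicted as object with probability 0.90.
hard_ratio = focal_loss(0.90, 0) / cross_entropy(0.90, 0)
print(f"easy negative keeps {easy_ratio:.6f} of its cross-entropy loss")
print(f"hard negative keeps {hard_ratio:.4f} of its cross-entropy loss")
```

Under these assumed settings, the easy negative retains only a tiny fraction of its original loss, while the hard negative retains most of it, which is the behaviour the paragraph above describes.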

3.3.3 Faster R-CNN

Faster R-CNN is a two-stage object detection framework that combines region proposal generation and object classification. The first stage is the Region Proposal Network (RPN), which generates candidate regions directly from CNN features and thereby speeds up the detection process. For precise detection and localization, the second stage uses the shared convolutional features to classify and refine the first-stage proposals (Nie, Duan, and Li, 2021), (Fang, 2022).

In this study, Faster R-CNN uses ResNet-50 as the backbone, integrated with a Feature Pyramid Network (FPN) to handle objects of different sizes, from small to large. Additionally, an attention mechanism (AM) in feature extraction improves feature selection, which increases accuracy for small waste objects; this combination results in high precision (Nie, Duan, and Li, 2021).

3.3.4 CNN

This study used a pre-trained MobileNetV2 model as the CNN backbone for the waste classification task. This model was chosen for its lightweight architecture, efficiency, and good accuracy. Furthermore, it is well suited to environments with limited computational resources, such as mobile phones (Musaev, Anorboev, and Youn, 2025).

MobileNetV2 is a deep learning model built on an inverted residual structure. Each block first expands the number of channels with a pointwise convolution and then applies a depthwise convolution, which reduces computational cost while maintaining accuracy. Additionally, it uses a linear bottleneck structure, in which the final projection layer of each block uses a linear activation function to avoid losing important information (Wu et al., 2022).
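The computational saving from depthwise separable convolutions can be checked with a short calculation. The sketch below counts multiply-accumulate operations (MACs) for a standard k × k convolution and for its depthwise separable counterpart, assuming stride 1 and "same" padding. The layer size used (56 × 56 feature map, 64 input and output channels) is a hypothetical example, not a layer taken from the paper.

```python
def standard_conv_macs(h, w, c_in, c_out, k=3):
    """MACs for a standard k x k convolution (stride 1, same padding)."""
    return h * w * c_in * c_out * k * k


def depthwise_separable_macs(h, w, c_in, c_out, k=3):
    """MACs for a k x k depthwise convolution followed by a 1 x 1 pointwise one."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # 1 x 1 convolution mixes the channels
    return depthwise + pointwise


# Hypothetical layer: 56 x 56 feature map, 64 -> 64 channels, 3 x 3 kernel.
std = standard_conv_macs(56, 56, 64, 64)
sep = depthwise_separable_macs(56, 56, 64, 64)
ratio = sep / std
print(f"standard: {std:,} MACs, separable: {sep:,} MACs, ratio: {ratio:.3f}")
```

The ratio equals 1/c_out + 1/k², so for 3 × 3 kernels the separable form needs roughly one eighth to one ninth of the computation of a standard convolution when the channel count is large.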

3.4 Training and Evaluation

All models used in this research are pre-trained and are fine-tuned through transfer learning on the datasets described above. The TACO (Trash Annotations in Context) and Trash-ICRA19 datasets are used to train the object detection models, while the Domestic Waste Classification dataset is used to train the classification model. All datasets are preprocessed before training. For the object detection models, all images are resized to 640 × 640 pixels. For the classification model, images are resized to 224 × 224 pixels and then normalized to scale pixel values.

All datasets are split into 80% for training and 20% for testing. Training runs for up to 50 epochs for all models, with early stopping to prevent overfitting and avoid unnecessary computation. The evaluation metrics for the object detection models are FPS, inference time, and mAP, defined in (1):

$$\mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i \qquad (1)$$

where $N$ is the number of classes and $AP_i$ is the Average Precision of class $i$.

The classification model is evaluated using accuracy (2) and F1-score (3), computed as:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (2)$$

$$\mathrm{F1\ Score} = 2 \times \frac{\mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \qquad (3)$$
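The metrics in (1) to (3) can be computed from a confusion matrix and a list of per-class AP values, as in the minimal sketch below. It assumes rows are true classes and columns are predicted classes, and it uses the multi-class form of accuracy (correct predictions divided by all predictions). The small matrix and AP values are made up for illustration and are not results from this study.

```python
def accuracy(cm):
    """Fraction of correct predictions; cm[true][pred] holds counts."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def f1_per_class(cm):
    """F1-score of each class, from its precision and recall (equation 3)."""
    n = len(cm)
    scores = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, truly another class
        fn = sum(cm[c]) - tp                       # truly c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return scores


def macro_f1(cm):
    """Unweighted mean of the per-class F1-scores."""
    scores = f1_per_class(cm)
    return sum(scores) / len(scores)


def mean_average_precision(ap_per_class):
    """Equation (1): mean of the per-class Average Precision values."""
    return sum(ap_per_class) / len(ap_per_class)


# Made-up two-class example, not data from the paper.
toy_cm = [[8, 2],
          [1, 9]]
print(accuracy(toy_cm), macro_f1(toy_cm), mean_average_precision([0.5, 0.7]))
```

Macro averaging weights every class equally, so a small class such as 'Organic' influences the macro F1-score as much as a large one.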

3.5 Parameter Configuration

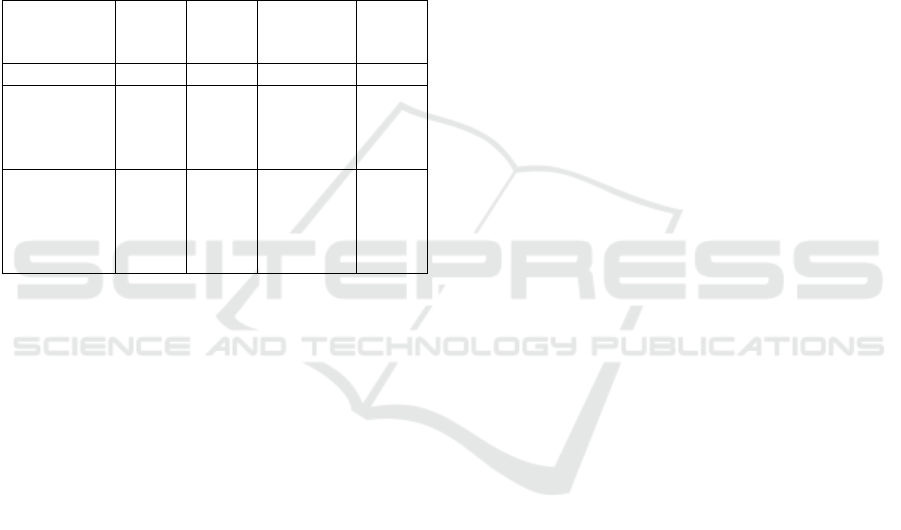

To ensure a fair and direct comparison of the models'

architectural performance, a consistent set of

hyperparameters was used for all three models during

the fine-tuning process, as detailed in Table 1.

Table 1. Hyperparameter Configuration for Model Training

| Hyperparameter | Value |
|---|---|
| Optimizer | Stochastic Gradient Descent (SGD) |
| Initial Learning Rate | 0.005 |
| Momentum | 0.9 |
| Weight Decay | 0.0005 |
| Batch Size | 15 |
| Epochs | 50 |

By providing each model with the same learning conditions, any significant differences in performance can be more confidently attributed to the inherent strengths and weaknesses of their designs rather than to variations in the training process. The Stochastic Gradient Descent (SGD) optimizer was selected for its proven robustness and stability in fine-tuning tasks. SGD updates the model's weights by stepping in the direction of the negative gradient of the training loss, estimated on each mini-batch. An initial learning rate of 0.005 was chosen as a balanced starting point, allowing the models to learn efficiently without the instability that a higher rate might cause or the slow convergence of a rate that is too low.

A momentum of 0.9 was used to help the optimizer accelerate convergence and navigate complex loss landscapes by maintaining a consistent update direction, leading to faster and more stable training. A weight decay of 0.0005 was used to reduce overfitting. This regularization strategy penalizes large weights, encouraging the model to learn simpler and more generalizable patterns.
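The interaction of learning rate, momentum, and weight decay can be sketched as a single update step. The version below follows one common formulation, in which weight decay is added to the gradient as an L2 term before the momentum update (the convention used, for example, by PyTorch's SGD optimizer). Whether the authors' training code uses exactly this formulation is an assumption; the toy objective f(w) = w² is purely illustrative.

```python
def sgd_momentum_step(w, grad, velocity, lr=0.005, momentum=0.9, weight_decay=0.0005):
    """One SGD step with momentum and L2 weight decay on a list of weights."""
    new_w, new_v = [], []
    for wi, gi, vi in zip(w, grad, velocity):
        g = gi + weight_decay * wi   # weight decay: pull each weight toward zero
        v = momentum * vi + g        # momentum: running sum of past gradients
        new_v.append(v)
        new_w.append(wi - lr * v)    # step against the accumulated direction
    return new_w, new_v


# Toy problem: minimize f(w) = w^2, whose gradient is 2w, starting from w = 1.
w, v = [1.0], [0.0]
w, v = sgd_momentum_step(w, [2.0 * w[0]], v)
first_step = w[0]
for _ in range(199):
    w, v = sgd_momentum_step(w, [2.0 * w[0]], v)
print(first_step, w[0])
```

With the hyperparameters from Table 1, the weight in this toy problem moves steadily toward the minimum at zero; a much larger learning rate would instead cause the iterates to oscillate or diverge.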

4 RESULTS

The chosen models for waste identification and classification underwent a 50-epoch training and fine-tuning procedure.

4.1 Waste Classification Model Performance

The CNN model, based on the pre-trained MobileNetV2 architecture, was evaluated for its effectiveness in categorizing waste materials. The model was fine-tuned on the Domestic Waste Classification dataset, which contains images categorized as recyclable, organic, and non-organic waste. Its performance is summarized as follows:

• Accuracy: 85.02%

• Macro Average F1-score: 85%

These metrics indicate that the model assigns most waste items to their correct categories.

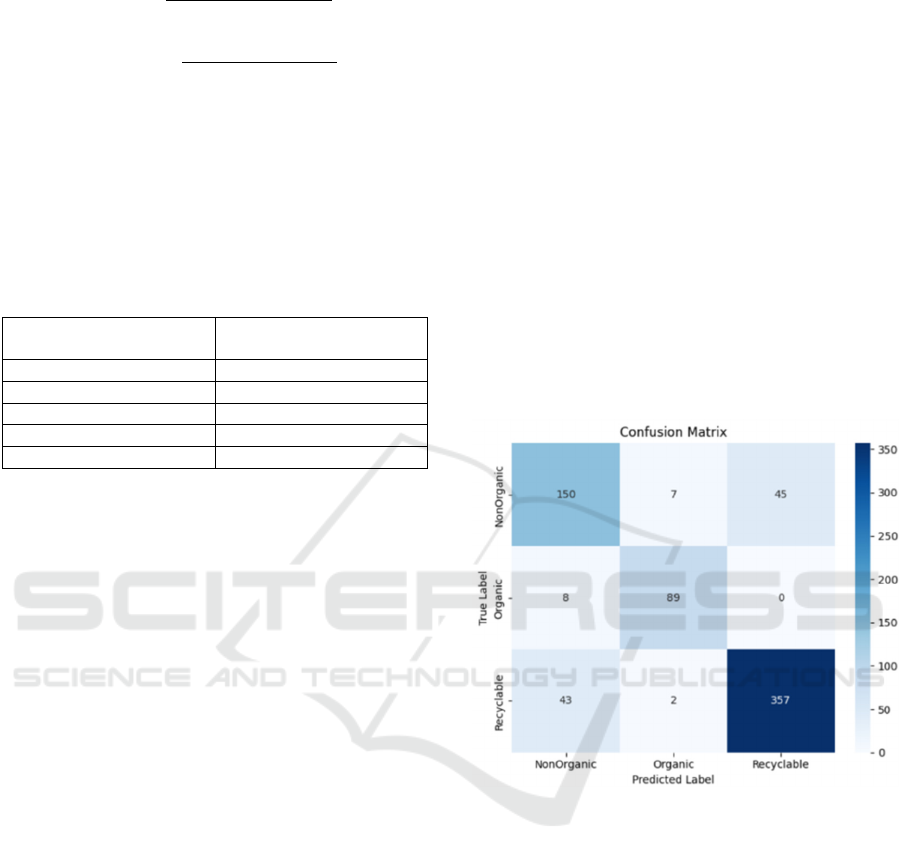

Figure 2. Confusion matrix for the MobileNetV2 model.

The confusion matrix in Figure 2 compares the model's predictions with the actual labels. The model achieved particularly high true positive rates for the 'Organic' and 'Recyclable' classes, correctly identifying 89 of 97 'Organic' items and 357 of 402 'Recyclable' items. However, there was some confusion between the 'Non-Organic' and 'Recyclable' categories, with 45 'Non-Organic' items incorrectly identified as 'Recyclable' and 43 'Recyclable' items incorrectly identified as 'Non-Organic'. Despite these inter-class confusions, the overall accuracy of 85.02% shows that the MobileNetV2 model classifies waste effectively.
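The counts quoted above can be turned into per-class true positive rates (recall) with a few lines of arithmetic. The sketch below uses only the numbers reported in the text; the variable names are illustrative.

```python
# (correctly classified, total items of that class), as reported in the text
reported_counts = {
    "Organic": (89, 97),
    "Recyclable": (357, 402),
}

# Recall (true positive rate) = correct predictions / all items of the class
recalls = {name: correct / total for name, (correct, total) in reported_counts.items()}

# Share of 'Recyclable' items that were mistaken for 'Non-Organic'
recyclable_as_non_organic = 43 / 402

for name, value in recalls.items():
    print(f"{name} recall: {value:.2%}")
print(f"Recyclable predicted as Non-Organic: {recyclable_as_non_organic:.2%}")
```

This gives a recall of about 91.8% for 'Organic' and about 88.8% for 'Recyclable', with roughly 10.7% of 'Recyclable' items misclassified as 'Non-Organic'.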


4.2 Object Detection Model Performance

For real-time waste detection, three object detection architectures were evaluated: YOLOv11, SSD with a MobileNetV3 backbone, and Faster R-CNN with a ResNet50 FPN backbone. These models were fine-tuned and tested on the combined TACO and Trash-ICRA19 dataset. The primary evaluation metrics were mAP averaged over IoU thresholds from 0.5 to 0.95 (mAP@.5:.95), inference time (measured in milliseconds), and FPS.
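For readers unfamiliar with the mAP@.5:.95 notation, the sketch below shows how Intersection over Union (IoU) is computed for two boxes and how AP values are averaged over the ten IoU thresholds 0.50, 0.55, ..., 0.95. The 0.05 step follows the COCO evaluation convention, which is assumed here to be the one behind the reported metric; the boxes and AP values are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds 0.50, 0.55, ..., 0.95 (COCO-style, assumed here)
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(map_at_threshold):
    """Average the mAP values obtained at each IoU threshold."""
    return sum(map_at_threshold[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)


# Hypothetical prediction overlapping half of a ground-truth box.
overlap = iou((0, 0, 10, 10), (5, 0, 15, 10))
counts_as_match_at_50 = overlap >= 0.5
print(overlap, counts_as_match_at_50)
```

Because the stricter thresholds demand tighter localization, mAP@.5:.95 is typically lower than mAP@.5 for the same model, which is the pattern seen in the results reported below.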

Table 2. Performance Comparison of Object Detection Models on the Integrated TACO and Trash-ICRA19 Datasets

| Model | mAP@.5:.95 (%) | mAP@.5 (%) | Avg. Inference Time (ms) | Avg. FPS |
|---|---|---|---|---|
| YOLOv11 | 55.69 | 73.34 | 14.1 | 71.10 |
| SSD (MobileNetV3 backbone) | 47.86 | 65.99 | 38.6 | 25.91 |
| Faster R-CNN (ResNet50 FPN backbone) | 49.89 | 72.06 | 87.6 | 11.41 |

Table 2 shows that YOLOv11 achieved the highest mAP@.5:.95 score of 55.69%, the best overall detection accuracy among the evaluated models. It also delivered the strongest real-time performance, with the lowest average inference time of 14.1 ms and the highest average FPS of 71.10. Faster R-CNN (ResNet50 FPN) achieved the second-best mAP@.5:.95 with a score of 49.89%. However, its inference was significantly slower, averaging 87.6 ms per image, and it recorded the lowest FPS of all models at 11.41.

The SSD model with a MobileNetV3 backbone attained a mAP@.5:.95 of 47.86%. Its average inference time of 38.6 ms and FPS of 25.91 place it between YOLOv11 and Faster R-CNN in processing speed. These outcomes highlight the trade-off between detection precision and processing efficiency. YOLOv11 performed best in both areas for this waste detection task. Faster R-CNN came close to YOLOv11 in mAP@.5 (72.06% versus 73.34%) but sacrificed speed, while SSD was faster than Faster R-CNN at a somewhat lower accuracy.
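The relationship between the reported latency and throughput figures can be checked directly, since processing one image at a time gives FPS ≈ 1000 / latency in milliseconds. The sketch below applies this to the values in Table 2; treating FPS as the simple reciprocal of the average latency is an assumption about how the figures were measured.

```python
def fps_from_latency(latency_ms):
    """Frames per second implied by a per-image latency in milliseconds."""
    return 1000.0 / latency_ms


# (average inference time in ms, reported average FPS) from Table 2
reported = {
    "YOLOv11": (14.1, 71.10),
    "SSD": (38.6, 25.91),
    "Faster R-CNN": (87.6, 11.41),
}

derived = {name: fps_from_latency(ms) for name, (ms, _) in reported.items()}
for name, (ms, fps) in reported.items():
    print(f"{name}: derived {derived[name]:.2f} FPS, reported {fps:.2f} FPS")

# How many times faster YOLOv11 is per image than Faster R-CNN
speedup_vs_faster_rcnn = reported["Faster R-CNN"][0] / reported["YOLOv11"][0]
```

The derived values agree with the reported FPS to within about 1%, with the small differences attributable to rounding of the latencies. On these figures, YOLOv11 processes an image roughly 6.2 times faster than Faster R-CNN.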

4.3 Efficient Combination for Real-World Deployment

Integrating the high-performing YOLOv11 detector with the MobileNetV2 classifier creates an efficient end-to-end pipeline. MobileNetV2 was explicitly chosen for its lightweight architecture and suitability for environments with limited computational resources. This two-step process, fast and accurate detection by YOLOv11 followed by efficient classification by MobileNetV2, forms a complete system optimized for both speed and accuracy.
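One plausible way to wire the two stages together is to crop each detected box from the frame and pass the crop to the classifier. The paper does not describe the integration code, so the sketch below is an assumption about the data flow only: the detector and classifier are hypothetical stand-in functions, and the frame is a toy grid of pixel values rather than a real camera image.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]      # (x1, y1, x2, y2) in pixels
Image = Sequence[Sequence[float]]    # toy grayscale frame: rows of pixel values


@dataclass
class Detection:
    box: Box
    label: str


def crop(image: Image, box: Box) -> List[List[float]]:
    """Cut the region inside the box out of the frame."""
    x1, y1, x2, y2 = box
    return [list(row[x1:x2]) for row in image[y1:y2]]


def detect_then_classify(
    image: Image,
    detector: Callable[[Image], List[Box]],
    classifier: Callable[[Image], str],
) -> List[Detection]:
    """Stage 1 proposes boxes; stage 2 labels the crop inside each box."""
    return [Detection(box, classifier(crop(image, box))) for box in detector(image)]


# Hypothetical stand-ins for the trained models (not real inference code).
def toy_detector(image: Image) -> List[Box]:
    return [(0, 0, 2, 2), (2, 2, 4, 4)]


def toy_classifier(patch: Image) -> str:
    mean = sum(sum(row) for row in patch) / sum(len(row) for row in patch)
    return "recyclable" if mean >= 0.5 else "organic"


frame = [
    [0.9, 0.8, 0.0, 0.0],
    [0.7, 0.9, 0.0, 0.0],
    [0.0, 0.0, 0.1, 0.2],
    [0.0, 0.0, 0.3, 0.1],
]
results = detect_then_classify(frame, toy_detector, toy_classifier)
```

Keeping the detector and classifier behind plain function interfaces means either model could be swapped out without changing the surrounding pipeline.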

From a practical standpoint, these results directly address real-world deployment concerns. The 14.1 ms latency of YOLOv11 is critical for real-time systems that must make near-instantaneous decisions. YOLOv11's ability to process 71.10 FPS ensures that it can handle standard high-speed video feeds without missing items. The low computational footprint of the combined YOLOv11 and MobileNetV2 models makes the system viable for real-world deployment on cost-effective computing devices.

5 CONCLUSION

With the goal of finding the best architecture for automated waste management systems, this study compared the performance of different deep learning models for real-time waste detection and classification using computer vision. The study used a CNN-based approach for waste classification and evaluated three leading object detection architectures: YOLOv11, SSD (with a MobileNetV3 backbone), and Faster R-CNN (with a ResNet50 FPN backbone). These architectures were assessed on key metrics, including accuracy, mAP@.5:.95, inference time, and FPS. The experimental results showed that the fine-tuned MobileNetV2 model performed well on the Domestic Waste Classification dataset, achieving an accuracy of 85.02% and a macro F1-score of 0.85. This demonstrates the model's capability to categorize waste as organic, recyclable, or non-organic.

In the comparative study of object detection models on the combined TACO and Trash-ICRA19 dataset, YOLOv11 stood out as the most efficient model overall. It achieved the highest detection accuracy with a mAP@.5:.95 of 55.69% and showed the best real-time processing performance, with an average inference time of 14.1 ms and an average FPS of 71.10. These results show that it balances computational efficiency and detection precision, a critical consideration for real-life implementation. Evaluating the models across several performance criteria addresses gaps in previous studies, which often focused on individual models or a restricted set of comparison metrics.

Based on these results, YOLOv11 was chosen as the most suitable object detection model, as it outperformed the other architectures. It was then integrated with a CNN classification model using a MobileNetV2 backbone, which was selected for its lightweight architecture and suitability for deployment in environments with limited computational resources. These models can be used in smart recycling bins, automated waste-sorting facilities, and mobile apps. Such implementations could support Indonesia's waste management policy by improving waste segregation efficiency and raising the proportion of properly managed waste beyond the current rate of around 60%. This approach would also contribute to broader sustainability goals, such as SDG 11: Sustainable Cities and Communities, while aligning with smart city development initiatives.

5.1 Limitations and Future Work

Although this research offers valuable insights, it is important to recognize its limitations. Model performance can be affected by the size and variety of the training datasets (TACO, Trash-ICRA19, and Domestic Waste Classification). Future work may include augmenting these datasets with more diverse waste items and more complex environmental conditions (e.g., varying lighting, occlusions) to improve model robustness and generalization. Further hyperparameter optimization for each model, or an examination of more recent architectures, could also improve performance. Furthermore, deploying such a system would require hardware integration and a comprehensive workflow for real-world operation, including the mechanical waste-sorting components triggered by the vision system's output. Exploring ensemble methods or model quantization techniques for deployment on resource-constrained devices could also be beneficial for future studies.

ACKNOWLEDGEMENTS

During the preparation of this work, the authors used

generative AI tools for language refinement. After

using these tools, the authors reviewed and took full

responsibility for the content of the publication.

REFERENCES

Alif, M. A. R. (2024). YOLOv11 for vehicle detection: advancements, performance, and applications in intelligent transportation systems.

Dakari Aboyomi, D. and Daniel, C. (2023). A comparative analysis of modern object detection algorithms: YOLO vs. SSD vs. Faster R-CNN. ITEJ 8:96–106.

Dwiatmoko, F., Utami, D., Sivi, N. A., Nahdlatul, U., and Lampung, U. (2024). Image Classification of Organic and Non-Organic Waste Using CNN (Convolutional Neural Network) Algorithm [In Indonesian].

Fang, B., Yu, J., Chen, Z., Osman, A. I., Farghali, M., Ihara, I., Hamza, E. H., Rooney, D. W., and Yap, P. S. (2023). Artificial intelligence for waste management in smart cities: a review.

Fang, J. (2022). SSD-based Lightweight Recyclable Garbage Target Detection Algorithm. Innovation in Science and Technology 1(1). doi: 10.56397/ist.2022.08.05.

Fulton, M. S., Hong, J., and Sattar, J. (2020). Trash-ICRA19: a bounding box labeled dataset of underwater trash. Available at: https://doi.org/10.13020/x0qn-y082 (accessed June 15, 2025).

Haqqi, M., Rochmah, L., Dwi Safitri, A., Adhi Pratama, R., and Tarwoto (2024). Implementation of machine learning to identify types of waste using CNN algorithm.

Khanam, R. and Hussain, M. (2024). YOLOv11: an overview of the key architectural enhancements.

Kulkarni, H. N. and Kannamangalam Sundara Raman, N. (2019). Waste object detection and classification.

Li, J., Chen, J., Sheng, B., Li, P., Yang, P., Feng, D. D., and Qi, J. (2022). Automatic Detection and Classification System of Domestic Waste via Multimodel Cascaded Convolutional Neural Network. IEEE Transactions on Industrial Informatics 18(1):163–173. doi: 10.1109/TII.2021.3085669.

Lu, H., Li, C., Chen, W., and Jiang, Z. (2020). A single shot multibox detector based on welding operation method for biometrics recognition in smart cities. Pattern Recognition Letters 140:295–302. doi: 10.1016/j.patrec.2020.10.016.

Ma, W., Wang, X., and Yu, J. (2020). A lightweight feature fusion single shot multibox detector for garbage detection. IEEE Access 8:188577–188586. doi: 10.1109/ACCESS.2020.3031990.

Ministry of Environment and Forestry of Indonesia (2024). National Waste Management Information System (SIPSN). Available at: https://sipsn.menlhk.go.id/sipsn/ (accessed April 3, 2025) [In Indonesian].

Musaev, A., Anorboev, A., and Youn, J. M. (2025). Optimized epoch selection ensemble: integrating custom CNN and fine-tuned MobileNetV2 for Malimg dataset classification. IEEE Access. doi: 10.1109/ACCESS.2025.3547791.

Nie, Z., Duan, W., and Li, X. (2021). Domestic garbage recognition and detection based on Faster R-CNN. In: Journal of Physics: Conference Series.


Proença, P. and Hua, Y. (2020). TACO: Trash Annotations in Context Dataset.

Tung, S. (2021). Domestic Waste Classification Dataset.

Wahyutama, A. B. and Hwang, M. (2022). YOLO-based object detection for separate collection of recyclables and capacity monitoring of trash bins. Electronics (Switzerland) 11(9). doi: 10.3390/electronics11091323.

Wu, C., Zhang, J., Yu, X., and Lei, X. (2022). A novel CapsNet neural network based on MobileNetV2 structure for robot image classification.

Yan, D., Li, G., Li, X., Zhang, H., Lei, H., Lu, K., Cheng, M., and Zhu, F. (2021). An improved Faster R-CNN method to detect tailings ponds from high-resolution remote sensing images. Remote Sensing 13(11). doi: 10.3390/rs13112052.
