Dual Drivers of Emotional and Efficiency Needs: A Study of Group Differences in AI Chat Dependency Behavior

Mingzhen Liu

School of Literature and Media, Chengdu Jincheng College, Chengdu, 610000, China

Keywords: AI Chat Dependency, Group Differences, Emotional Needs, Efficiency Needs.

Abstract: Users increasingly perceive AI chatbots not merely as functional tools but as emotional companions. This study proposes a 'demand-behavior' dual-path model to examine the formation mechanisms and group heterogeneity of AI chatbot dependence, showing how emotional compensation and efficiency enhancement operate together and using cross-group analysis to identify the causes of behavioral variation. The study mainly employed a questionnaire survey to collect data on user interactions with AI chatbots, analyzing motivations, engagement frequency, and contextual usage patterns. The study found, first, that emotional and efficiency needs were the primary drivers of user reliance on AI chatbots; second, that users perceived AI chatbots as boosting work efficiency, although over-reliance might also cause anxiety; and third, that dependence levels and demand focus varied markedly across user groups. This study proposes a novel framework explaining the emotional mechanisms and efficiency pursuits in human-AI interaction, while offering practical insights for promoting rational chatbot use and mitigating associated risks.

1 INTRODUCTION

AI chatbots, an essential vehicle of human-computer interaction, are gradually penetrating all areas of human life. Built on techniques such as textual intelligence, visual pattern recognition, and predictive modeling (Xie & Pentina, 2022), chatbots such as Apple's Siri, Microsoft Xiaobing (Song et al., 2022), and OpenAI's ChatGPT (Haman & Školník, 2023; Yankouskaya et al., 2024) are used for daily communication and information acquisition. At the same time, their highly anthropomorphic dialogue and emotionally engaging interactions have led more and more people to regard AI chatbots as a source of emotional sustenance (Xie et al., 2023).

Companion chatbots such as Replika (Ta et al., 2020; Xie & Pentina, 2022) and Mitsuku emerged to meet these emotional needs. When individuals perceive that their interactions with AI chatbots provide sufficient emotional support, encouragement, and psychological security, they become attached to social chatbots (Xie & Pentina, 2022). Zhou and Zhang (2024) analyzed AI user addiction from the cognition-affect-conation (C-A-C) perspective and identified cognitive and emotional factors that may affect it. The China Academy of Information and Communications Technology (CAICT) noted in its Blue Book on Artificial Intelligence Governance (2024) that the growing emotional companionship offered by AI is prone to fostering emotional dependence, which may erode human autonomy. AI chat addiction may also cause a range of psychological problems (Huang et al., 2024; Laestadius et al., 2024; Salah et al., 2024).

2 METHODOLOGY

To study how emotional and efficiency needs drive users' dependence on AI chat tools, this paper adopts a questionnaire survey and quantitative analysis. An online questionnaire was distributed to people who had chatted with AI agents, and a total of 64 valid responses were collected.

Questionnaire analysis was chosen as the research method for three reasons. First, user behavior theories such as the Technology Acceptance Model (TAM) and Uses and Gratifications Theory (U&G) provide a theoretical basis for understanding users' dependence on technology. Second, related research on emotion and efficiency shows that emotional needs (such as emotional support and social interaction) and efficiency needs (such as task completion speed and convenient information acquisition) are the core factors that drive users to rely on AI chat tools. Third, by grounding the questionnaire in user behavior on AI chat platforms (e.g., ChatGPT and Google Assistant) and their real-world application scenarios, this research built a context-driven framework for questionnaire design.

Liu, M.

Dual Drivers of Emotional and Efficiency Needs: A Study of Group Differences in AI Chat Dependency Behavior.

DOI: 10.5220/0013993100004916

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 2nd International Conference on Public Relations and Media Communication (PRMC 2025), pages 448-455

ISBN: 978-989-758-778-8

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

Compared with other methods, questionnaire analysis has several advantages for this topic. A questionnaire survey can collect data from many respondents systematically and efficiently, which helps reveal users' basic characteristics, usage behavior, and the specific forms their emotional and efficiency needs take. Users' emotional and efficiency needs can also be converted into quantifiable indicators, making it possible to test which needs dominate and whether dependence behavior differs significantly across groups (e.g., by age or occupation). This quantitative analysis provides statistical support and aggregate-level conclusions, making the findings more convincing. Questionnaires are also flexible, low-cost, and anonymous.

The survey instrument comprises six parts. The first collects participants' demographic profiles to describe their backgrounds and to support the subsequent analysis of group differences. The second measures AI chat dependence behavior: selected items from the validated Internet Addiction Scale were adapted to operationalize AI chatbot dependence in the target population. The third measures users' emotional needs, using items adapted from the UCLA Loneliness Scale and the Emotional Accompanying Needs Scale to evaluate users' emotional motivations for using AI chat tools. The fourth measures users' efficiency needs, evaluating their functional motivations for using AI chat tools. The fifth surveys group differences in dependence behavior, emotional needs, and efficiency needs. The sixth consists of two open-ended fill-in-the-blank questions that collect users' subjective views and suggestions on AI chat tools.

Descriptive analysis, one-way ANOVA, correlation analysis, regression analysis, and multiple comparisons (including the Least Significant Difference, or LSD, method) were performed in SPSS to process the survey responses.
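The LSD procedure compares every pair of group means with an ordinary t test that reuses the pooled within-group mean square from the ANOVA. The sketch below is a minimal Python illustration, not the SPSS implementation. It borrows MS_within = 0.800 and df = 59 from Table 1, hard-codes the two-tailed critical value t(0.975, 59) ≈ 2.001 from a standard t table, and uses hypothetical group labels, means, and sizes (G1 to G5), because the paper does not report group-level means.

```python
import math
from itertools import combinations

# Pooled within-group mean square and its df, taken from Table 1.
MS_WITHIN = 0.800
DF_WITHIN = 59
# Two-tailed critical t for alpha = 0.05 with DF_WITHIN degrees of freedom
# (about 2.001 from a standard t table; the standard library has no t quantile).
T_CRIT = 2.001


def lsd_threshold(n_i: int, n_j: int) -> float:
    """Smallest absolute mean difference the LSD test treats as significant."""
    return T_CRIT * math.sqrt(MS_WITHIN * (1 / n_i + 1 / n_j))


def lsd_compare(groups: dict[str, tuple[float, int]]) -> list[tuple[str, str, float, bool]]:
    """Compare every pair of groups; each value in `groups` is (mean, n)."""
    results = []
    for (a, (mean_a, n_a)), (b, (mean_b, n_b)) in combinations(groups.items(), 2):
        diff = mean_a - mean_b
        results.append((a, b, round(diff, 3), abs(diff) > lsd_threshold(n_a, n_b)))
    return results


# Hypothetical means and sizes for five groups (sizes sum to 64, matching df = 59).
example = {
    "G1": (3.1, 13),
    "G2": (2.9, 13),
    "G3": (3.0, 13),
    "G4": (2.6, 13),
    "G5": (3.4, 12),
}

for a, b, diff, significant in lsd_compare(example):
    print(f"{a} vs {b}: difference = {diff:+.3f}, significant = {significant}")
```

Because LSD applies no correction for the number of comparisons, it is the most liberal of the common post hoc tests; with ten pairwise comparisons, some spurious "significant" differences are possible.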

3 RESULTS

3.1 Basic Information

The survey achieved a 100% valid response rate (N = 64): all returned questionnaires met the inclusion criteria. Participants were predominantly aged 18–25 (50.0%), followed by those aged 46 and above (21.9%). Slightly more participants were female (54.7%) than male (43.8%). By occupation, students constituted the largest group (42.2%), followed by working professionals (26.6%).

3.2 The Influence of Emotional Needs and Efficiency Needs on AI Chat Dependence

According to the descriptive statistics, the mean score for regarding AI chat tools as emotional sustenance was 2.41 (SD = 1.080), indicating that some users regarded AI chat tools as emotional sustenance, although the mean falls below the scale midpoint. The mean score for the belief that AI chat tools can improve work efficiency or learning outcomes was 3.48 (SD = 1.127), indicating that users generally perceived AI chat tools as having a positive effect on efficiency.

Table 1. One-Way ANOVA Results for Effects of Emotional and Efficiency Needs on AI Chat Dependency.

| Source of Variation | Sum of Squares (SS) | Degrees of Freedom (df) | Mean Square (MS) | F | p |
|---|---|---|---|---|---|
| Between Groups | .758 | 4 | .189 | .237 | .916 |
| Within Groups | 47.180 | 59 | .800 | - | - |
| Total | 47.938 | 63 | - | - | - |
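The entries in Table 1 are linked by simple arithmetic, which offers a quick consistency check. The sketch below recomputes the mean squares and F from the reported sums of squares and degrees of freedom. It also computes eta squared, an effect size that the paper does not report and that is added here only for illustration.

```python
# Sums of squares and degrees of freedom copied from Table 1.
ss_between, df_between = 0.758, 4
ss_within, df_within = 47.180, 59

# Each mean square is its sum of squares divided by its degrees of freedom.
ms_between = ss_between / df_between
ms_within = ss_within / df_within
# The F ratio compares between-group to within-group variability.
f_stat = ms_between / ms_within

# Total SS and df are the sums of their components.
ss_total = ss_between + ss_within
df_total = df_between + df_within
# Eta squared: share of total variance attributable to the grouping factor
# (an added effect size; Table 1 does not report it).
eta_squared = ss_between / ss_total

print(f"MS_between = {ms_between:.4f}, MS_within = {ms_within:.4f}")
print(f"F({df_between}, {df_within}) = {f_stat:.3f}")
print(f"SS_total = {ss_total:.3f}, df_total = {df_total}, eta squared = {eta_squared:.4f}")
```

With these inputs F comes out at about 0.237 and eta squared at about 0.016, so the grouping factor in Table 1 accounts for under 2% of the variance in dependence, in line with its non-significant p value. The exact quotient 0.758 / 4 is 0.1895, so the tabled MS of .189 differs only by rounding.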

As shown in Tables 2 and 3, the linear regression analysis revealed a positive association between emotional needs ("I think AI chat tools can be my emotional sustenance") and dependency level (B = 0.201, p = 0.037), identifying emotional needs as a significant predictor of AI chat dependence. Perceived productivity enhancement was also positively associated with dependency level (B = 0.293, p = 0.002), making work efficiency improvement a key behavioral driver. By contrast, the one-way ANOVA in Table 1 showed no significant between-group effect (F(4, 59) = 0.237, p = 0.916).

Table 2. Regression ANOVA for AI Chatbot Dependency Prediction.

| Source | SS | df | MS | F | p |
|---|---|---|---|---|---|
| Regression | 13.085 | 2 | 6.542 | 11.451 | < .001 (b) |
| Residual | 34.853 | 61 | .571 | - | - |
| Total | 47.938 | 63 | - | - | - |

a. Dependent variable: What do you think of your dependence on AI chat tools?

b. Predictors: (Constant), I think AI chat tools can become my emotional sustenance, I think AI chat tools can improve my work efficiency or learning effect.
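Table 2 does not report R², but it follows from the sums of squares. The sketch below derives R², adjusted R², and the overall F from the table; the sample size n = 64 is recovered from the degrees of freedom (df_regression + df_residual + 1). These derived values are illustrative and do not appear in the original analysis.

```python
# Sums of squares and degrees of freedom copied from Table 2.
ss_regression, df_regression = 13.085, 2   # k = 2 predictors
ss_residual, df_residual = 34.853, 61
ss_total = ss_regression + ss_residual
n = df_regression + df_residual + 1        # sample size implied by the df

# Proportion of variance in dependence explained by the two predictors.
r_squared = ss_regression / ss_total
# Adjusted R^2 penalises the number of predictors k.
adj_r_squared = 1 - (1 - r_squared) * (n - 1) / (n - df_regression - 1)
# The overall F test can be written with mean squares or, equivalently, with R^2.
f_from_ms = (ss_regression / df_regression) / (ss_residual / df_residual)
f_from_r2 = (r_squared / df_regression) / ((1 - r_squared) / df_residual)

print(f"n = {n}, R^2 = {r_squared:.3f}, adjusted R^2 = {adj_r_squared:.3f}")
print(f"F = {f_from_ms:.3f} (from MS), {f_from_r2:.3f} (from R^2)")
```

On these figures R² ≈ 0.273 and adjusted R² ≈ 0.249, so the two need variables together account for roughly a quarter of the variance in self-reported dependence, and both forms of the F formula reproduce F ≈ 11.45.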

Table 3. Regression Coefficients for AI Chatbot Dependency Prediction.

| Predictors | Unstandardized Coefficients (B) | Standard Error | Standardized Coefficients (Beta) | t | p |
|---|---|---|---|---|---|
| (Constant) | .463 | .330 | - | 1.403 | .166 |
| "AI chatbots improve my work/study efficiency" | .293 | .091 | .379 | 3.240 | .002 |
| "AI chatbots provide emotional support" | .201 | .094 | .249 | 2.130 | .037 |

a. Dependent variable: What do you think of your dependence on AI chat tools?
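The standardized coefficients in Table 3 can be reproduced from the unstandardized ones with Beta = B × SD_x / SD_y. The sketch below assumes that the standard deviations reported in Section 3.2 (1.080 for emotional needs, 1.127 for efficiency needs) are the sample SDs of the regression predictors for the same 64 respondents, and it recovers SD_y from the total sum of squares in Table 2.

```python
import math

# Sample SDs of the two predictors, reported in Section 3.2 (assumed to be
# the SDs of the same 64 responses used in the regression).
sd_emotional = 1.080
sd_efficiency = 1.127
# SD of the dependent variable, recovered from Table 2: sqrt(SS_total / (n - 1)).
sd_dependence = math.sqrt(47.938 / 63)

# Unstandardized coefficients (B) from Table 3.
b_efficiency, b_emotional = 0.293, 0.201


def standardize(b: float, sd_x: float, sd_y: float) -> float:
    """Convert an unstandardized slope to a standardized one: Beta = B * SD_x / SD_y."""
    return b * sd_x / sd_y


beta_efficiency = standardize(b_efficiency, sd_efficiency, sd_dependence)
beta_emotional = standardize(b_emotional, sd_emotional, sd_dependence)
# Each t statistic is B / SE (SE values copied from Table 3).
t_efficiency = b_efficiency / 0.091
t_emotional = b_emotional / 0.094

print(f"Beta (efficiency) = {beta_efficiency:.3f}, Beta (emotional) = {beta_emotional:.3f}")
print(f"t (efficiency) = {t_efficiency:.2f}, t (emotional) = {t_emotional:.2f}")
```

Under that assumption the sketch yields Beta ≈ 0.379 and 0.249, matching Table 3. The ratio B / SE gives about 3.22 and 2.14, which differ slightly from the tabled 3.240 and 2.130 only because B and SE are rounded to three decimals.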

Table 4. Bootstrap Regression Analysis of AI Chatbot Dependency (BCa 95% CI).

| Predictors | B | Bias | SE | Sig. (2-tailed) | BCa 95% CI |
|---|---|---|---|---|---|
| (Constant) | .463 | -.011 | .190 | .017 | [0.115, 0.797] |
| "AI chatbots improve my work/study efficiency" | .293 | .006 | .079 | .001 | [0.112, 0.470] |
| "AI chatbots provide emotional support" | .201 | .005 | .103 | .054 | [0.003, 0.358] |

a. Unless otherwise stated, the bootstrap results are based on 1,000 stratified bootstrap samples.

In the regression analysis (Tables 2-4), the unstandardized coefficient of emotional needs on the degree of dependence was 0.201 (p = 0.037). The bootstrap BCa 95% confidence interval for this coefficient was [0.003, 0.358]; because it excludes zero, emotional needs had a significant, if modest, effect on dependence behavior, although the bootstrap p value (0.054) is marginal. The unstandardized coefficient of efficiency needs was 0.293 (p = 0.002), with a bootstrap 95% confidence interval of [0.112, 0.470], indicating that efficiency needs had a significant effect on dependence behavior.
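The bootstrap intervals in Table 4 come from resampling respondents and refitting the regression many times. The sketch below shows that idea in plain Python with a case-resampling percentile bootstrap. It is a simplified stand-in, not SPSS's procedure: the paper used 1,000 stratified resamples with BCa intervals, which add bias and acceleration corrections, whereas this sketch resamples without strata and reads the 2.5th and 97.5th percentiles directly. The data are randomly generated and hypothetical, not the survey responses, and the function names `ols_two_predictors` and `bootstrap_ci` are illustrative.

```python
import random


def ols_two_predictors(x1, x2, y):
    """Least-squares fit of y = b0 + b1*x1 + b2*x2 using centred normal equations."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    # Centred sums of squares and cross-products.
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12 ** 2  # zero if the predictors are perfectly collinear
    b1 = (s1y * s22 - s2y * s12) / det
    b2 = (s2y * s11 - s1y * s12) / det
    b0 = my - b1 * m1 - b2 * m2
    return b0, b1, b2


def bootstrap_ci(x1, x2, y, n_boot=1000, level=0.95, seed=0):
    """Case-resampling percentile bootstrap intervals for (b0, b1, b2)."""
    rng = random.Random(seed)
    n = len(y)
    draws = ([], [], [])
    while len(draws[0]) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents with replacement
        try:
            coefs = ols_two_predictors([x1[i] for i in idx], [x2[i] for i in idx], [y[i] for i in idx])
        except ZeroDivisionError:
            continue  # degenerate resample (collinear predictors): draw again
        for store, value in zip(draws, coefs):
            store.append(value)
    alpha = (1 - level) / 2
    lo_i = round(alpha * (n_boot - 1))
    hi_i = round((1 - alpha) * (n_boot - 1))
    return [(s[lo_i], s[hi_i]) for s in map(sorted, draws)]


# Hypothetical Likert-style data (NOT the survey responses).
gen = random.Random(42)
x_eff = [gen.randint(1, 5) for _ in range(64)]
x_emo = [gen.randint(1, 5) for _ in range(64)]
dep = [0.5 + 0.3 * a + 0.2 * b + gen.gauss(0, 0.7) for a, b in zip(x_eff, x_emo)]

estimates = ols_two_predictors(x_eff, x_emo, dep)
intervals = bootstrap_ci(x_eff, x_emo, dep)
for name, est, (lo, hi) in zip(("constant", "efficiency", "emotional"), estimates, intervals):
    print(f"{name}: B = {est:.3f}, 95% percentile CI [{lo:.3f}, {hi:.3f}]")
```

Replacing `x_eff`, `x_emo`, and `dep` with real item scores would give percentile intervals comparable in spirit to, but not identical with, the BCa intervals in Table 4.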

The SPSS analyses thus indicate that both emotional needs and efficiency needs significantly affect dependence behavior. The unstandardized coefficient for efficiency needs (0.293) is larger than that for emotional needs (0.201), as is its standardized coefficient (0.379 versus 0.249), indicating that efficiency needs are the stronger driver of dependence behavior.

PRMC 2025 - International Conference on Public Relations and Media Communication


3.3 The Influence of Dependence Behavior on Users' Mental Health, Social Ability, and Work Efficiency

3.3.1 Mental Health

Table 5. Correlation Analysis of AI Chatbot Usage Perceptions.

| Variable 1 | Variable 2 | Pearson's r | Sig. (2-tailed) | N | Bias | SE | BCa 95% CI |
|---|---|---|---|---|---|---|---|
| Anxiety when unable to use AI chatbots | Dependency level | .623** | < .001 | 64 | .005 | .085 | [.421, .795] |
| Anxiety when unable to use AI chatbots | Perceived work efficiency | .352** | .004 | 64 | .007 | .115 | [.069, .598] |
| Self-reported AI dependency | Perceived work efficiency | .468** | < .001 | 64 | .003 | .093 | [.261, .641] |

a. Correlations marked with ** are significant at p < .01 (two-tailed).

b. All bootstrap analyses used 1,000 stratified resamples with bias-corrected and accelerated (BCa) intervals.

Correlation analysis showed that dependency level was strongly and positively correlated with feeling anxious or upset when unable to use AI chat tools (r = 0.623, p < 0.001), suggesting that dependence behavior may harm users' mental health (see Table 5).
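As a parametric cross-check on the bootstrap intervals in Table 5, each correlation can be tested with t = r√(n − 2) / √(1 − r²) and given an approximate confidence interval through Fisher's z transformation. The sketch below applies both to the three reported coefficients with n = 64. These intervals are an added illustration, not part of the paper's analysis.

```python
import math

N = 64  # sample size reported in Table 5

# Pearson correlations copied from Table 5.
correlations = {
    "anxiety vs dependency level": 0.623,
    "anxiety vs perceived work efficiency": 0.352,
    "dependency vs perceived work efficiency": 0.468,
}


def t_for_r(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


def fisher_ci(r: float, n: int, z_crit: float = 1.959964) -> tuple[float, float]:
    """Approximate 95% CI for rho via Fisher's z = atanh(r) with SE = 1/sqrt(n - 3)."""
    z = math.atanh(r)
    half_width = z_crit / math.sqrt(n - 3)
    return math.tanh(z - half_width), math.tanh(z + half_width)


for label, r in correlations.items():
    lo, hi = fisher_ci(r, N)
    print(f"{label}: r = {r:.3f}, t({N - 2}) = {t_for_r(r, N):.2f}, Fisher 95% CI [{lo:.3f}, {hi:.3f}]")
```

For r = 0.623 this gives t(62) ≈ 6.27 and a Fisher interval of about [0.445, 0.753], close to the BCa interval [.421, .795] reported in Table 5.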

3.3.2 Work Efficiency

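Users generally believe that AI chat tools can improve work efficiency or learning outcomes (mean score 3.48), and self-reported dependency was positively correlated with perceived work efficiency (r = 0.468, p < 0.001; Table 5). This suggests that dependence behavior is associated with perceived gains in work efficiency.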

3.4 Research on Group Differences

3.4.1 The Relationship Between Age and the Needs and Scenarios of Using AI Chat Tools

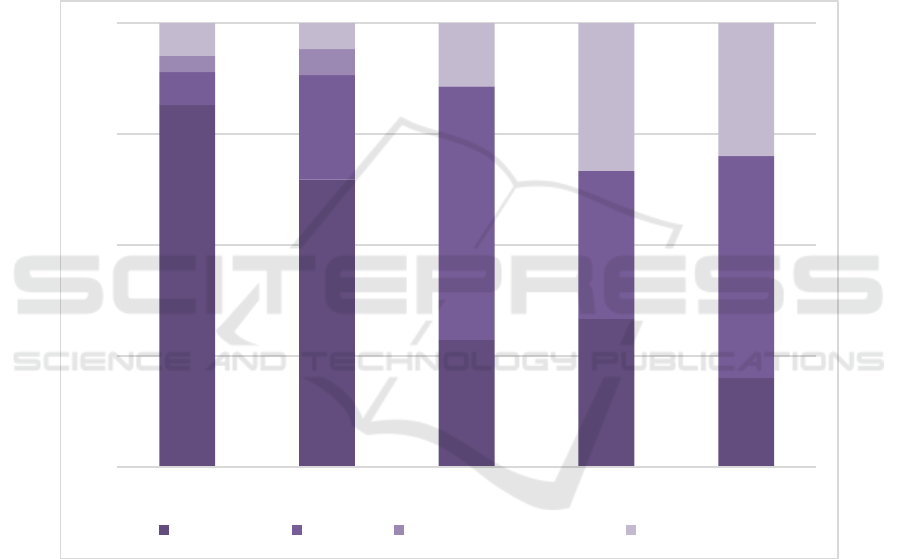

Alt Text for Graphical Figure: A vertical bar chart illustrates the percentage distribution of four activity categories (work/study, daily life, emotional companionship, entertainment) across five age groups. The x-axis lists the age ranges: Under 18, 18-25, 26-35, 36-45, and 46+. The y-axis shows percentages from 0 to 100.

Figure 1. Cross-analysis bar chart of age and the question "What are the main scenarios for you to use AI chat tools?" (Photo/Picture credit: Original).

[Figure 1 bar labels (% of each age group), listed as work/study, daily life, emotional companionship, games and entertainment: under 18: 0, 33.33, 33.33, 33.33; 18-25: 75, 18.75, 3.13, 3.12; 26-35: 50, 50, 0, 0; 46 and above: 42.86, 21.43, 0, 35.71.]


Cross-analysis shows clear age differences in the main scenarios in which users use AI chat tools (see Figure 1). The youngest users (under 18) tend to seek entertainment and companionship, whereas middle-aged and older users focus more on balancing work and leisure.

Cross-analysis of age and emotional needs shows that young users, especially those under 18, are more inclined to chat with AI to meet emotional needs. Their dependence on AI chat tools and their anxiety when unable to use them are also stronger, and both gradually weaken with age. Middle-aged and older users are relatively conservative. Age thus appears to play a key role in how people view interactions with AI chatbots.

Cross-analysis of age and efficiency needs likewise shows noticeably different opinions between younger and older users. Young users accept AI chat tools more readily and are more inclined to believe that AI chat tools can provide accurate information or advice, whereas older users are relatively conservative, most noticeably those aged 26-35. This may be related to differences in adaptability to new technologies and in established habits.

3.4.2 The Relationship Between Occupation and the Needs and Scenarios of Using AI Chat Tools

Alt Text for Graphical Figure: A vertical bar chart compares the percentages of respondents choosing work/study, daily life, emotional companionship, and entertainment for five occupational groups (students, working professionals, freelancers, retirees, others), with segmented bars labeled numerically.

Figure 2. The cross-tabulation bar chart between occupation and "Your primary scenarios for using AI chat tools" (Photo/Picture credit: Original).

Students and professionals show a stronger propensity to use AI chatbots for work- or study-related tasks, consistent with functional efficiency needs. Freelancers and other occupational groups, by contrast, rely more on these tools for daily-life convenience and recreation, indicative of affective needs. These patterns highlight differences in demand structure and usage across occupational groups in human-AI interaction (see Figure 2).

[Figure 2 bar labels (% of each occupational group), listed as work/study, daily life, emotional companionship, games and entertainment: students: 81.48, 7.41, 3.7, 7.41; professionals: 64.71, 23.53, 5.88, 5.88; freelancers: 28.57, 57.14, 0, 14.29; retirees: 33.33, 33.33, 0, 33.33; other occupations: 20, 50, 0, 30.]
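The Figure 2 percentages are row percentages: each count is divided by the size of its occupational group. The sketch below back-calculates implied counts for students and professionals from the group shares in Section 3.1 (42.2% and 26.6% of N = 64, i.e. 27 and 17 respondents) and checks that they reproduce the plotted labels. The counts are inferred for illustration; they are not published in the paper.

```python
# Counts back-calculated from the Section 3.1 group sizes and the Figure 2
# labels; they are inferred, not raw counts reported in the paper.
SCENARIOS = ("work/study", "daily life", "emotional companionship", "games and entertainment")
implied_counts = {
    "student": (22, 2, 1, 2),
    "professional": (11, 4, 1, 1),
}


def row_percentages(counts):
    """Convert one row of a cross-tabulation to percentages of its row total."""
    total = sum(counts)
    return tuple(round(100 * c / total, 2) for c in counts)


for group, counts in implied_counts.items():
    pct = row_percentages(counts)
    print(group, f"(n = {sum(counts)}):", dict(zip(SCENARIOS, pct)))
```

The same back-calculation shows how small the cells are (for example, a single student chose emotional companionship), which is worth keeping in mind when comparing percentages across groups.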


Alt Text for Graphical Figure: A vertical bar chart compares agreement levels (strongly disagree to strongly agree) across five occupational groups (students, working professionals, freelancers, retirees, others) using segmented bars with labeled percentages for each response category.

Figure 3. The cross-tabulation bar chart between occupation and the statement "I feel anxious or uneasy when unable to use AI chat tools" (Photo/Picture credit: Original).

The cross-analysis of occupation and feelings of anxiety or unease when AI chat tools cannot be used shows clear differences in emotional response across occupational groups (see Figure 3).

Only 7.41% of students agree and 11.11% strongly agree. Among working professionals, 11.76% strongly disagree, while 41.18% are neutral. In general, students and freelancers report lower anxiety about being unable to use AI tools, whereas working professionals lean more toward anxiety: fewer of them strongly disagree (11.76%, versus 33.33% of students and 28.57% of freelancers), and 17.65% agree. The responses of retirees and other occupational groups are more varied. Overall, dependence-related anxiety appears most pronounced among working professionals.

4 CONCLUSION

4.1 The Effect of Emotional Needs and Efficiency Needs on Users' AI Chat Dependence Behavior

This study suggests that efficiency needs and emotional needs jointly drive users' dependence on AI chat tools. Efficiency needs play the leading role, and their effect is significant and stable across the regression and bootstrap analyses: their unstandardized coefficient (0.293) is about 46% larger than that of emotional needs (0.201).
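The relative size of the two coefficients can be computed directly from Table 3. The sketch below expresses how much larger the efficiency coefficient is than the emotional coefficient, first for the unstandardized B values and then for the standardized Beta values.

```python
# Coefficients copied from Table 3.
b_eff, b_emo = 0.293, 0.201        # unstandardized (B)
beta_eff, beta_emo = 0.379, 0.249  # standardized (Beta)


def pct_larger(a: float, b: float) -> float:
    """Return how much larger a is than b, as a percentage of b."""
    return 100 * (a - b) / b


print(f"B:    efficiency exceeds emotional by {pct_larger(b_eff, b_emo):.1f}%")
print(f"Beta: efficiency exceeds emotional by {pct_larger(beta_eff, beta_emo):.1f}%")
```

This gives about 45.8% for B and about 52.2% for Beta. Because the two predictors have similar standard deviations (1.127 and 1.080), both comparisons point the same way, although a ratio of coefficients describes relative slope size rather than a share of explained variance.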

4.2 The Links Between AI Chat Dependence, Mental Health, Social Competence, and Workplace Effectiveness

Dependence behavior has a clear double-edged effect. On the one hand, users generally recognized the practical value of AI tools for improving work efficiency (mean score 3.48 out of 5). On the other hand, the degree of dependence was strongly and positively correlated with anxiety when AI tools could not be used (r = 0.623). In the workplace in particular, only 11.76% of respondents strongly disagreed that they would feel anxious or uneasy without AI, while 41.18% were neutral and 17.65% agreed.

In addition, self-reported dependence was positively correlated with perceived work efficiency (r = 0.468), suggesting that dependence behavior is likely associated with perceived gains in work and study efficiency.

The study's scope was partly constrained by incomplete data, which prevented a rigorous assessment of effects on interpersonal skills. Future research could explore this area further, for example through social network analysis or studies of influence mechanisms, to understand the overall impact of AI chat dependence behavior more fully.

4.3 Group Difference Analysis

The analysis of group differences reveals close associations between group membership and usage patterns. By age, users aged 18-25, who made up 50% of the sample, showed a distinctive 'high use, low anxiety' pattern, whereas users aged 46 and above were polarized: 14.29% developed deep dependence, while 42.86% maintained an instrumental, rational pattern of use. By occupation, the proportion of students using AI as a learning tool (59.3%) was markedly higher than in other occupational groups.

4.4 Future Research Directions

Group-level research can be refined further, especially through in-depth exploration of high-risk adolescent subgroups, such as adolescents with Asperger's syndrome or social anxiety. Neurodevelopmental differences may substantially strengthen these groups' emotional projection onto AI, as the case of Sewell, a 14-year-old in Florida whose death by suicide has been widely linked to his prolonged attachment to an AI chatbot, illustrates. Occupational differences also urgently need study, for example by comparing dependence patterns between practitioners in high-pressure industries (such as programming and health care) and freelancers.

Future research should also focus on the deeper cognitive impact of human-computer interaction. The two-way effect of AI dependence on social ability needs systematic analysis. On the one hand, long-term use of simplified language may erode real-world communication skills; for example, some users may feel dazed when returning to the real world. On the other hand, virtual social training in specific scenarios (such as autistic children learning social rules through AI partners) may yield skills that transfer to real life. The effect of AI on creativity also deserves attention: excessive reliance on templated answers may weaken divergent thinking, while moderate use of AI brainstorming tools may stimulate innovative potential.

REFERENCES

Haman, M., & Školník, M. 2023. Behind the ChatGPT hype: Are its suggestions contributing to addiction? Annals of Biomedical Engineering, 51:1128–1129.

Huang, S., Lai, X., Ke, L., Li, Y., Wang, H., Zhao, X., … Wang, Y. 2024. AI Technology panic—is AI Dependence Bad for Mental Health? A Cross-Lagged Panel Model and the Mediating Roles of Motivations for AI Use Among Adolescents. Psychology Research and Behavior Management, 17:1087–1102.

Laestadius, L., Bishop, A., Gonzalez, M., Illenčík, D., & Campos-Castillo, C. 2024. Too human and not human enough: A grounded theory analysis of mental health harms from emotional dependence on the social chatbot Replika. New Media & Society, 26(10):5923–5941.

Salah, M., Abdelfattah, F., Alhalbusi, H., et al. 2024, May 23. Me and my AI bot: Exploring the 'AI-holic' phenomenon and university students' dependency on generative AI chatbots—Is this the new academic addiction? (Version 2) [Preprint]. Research Square.

Song, X., Xu, B., & Zhao, Z. 2022. Can people experience romantic love for artificial intelligence? An empirical study of intelligent assistants. Information & Management, 59:103595.

Ta, V., Griffith, C., Boatfield, C., Wang, X., Civitello, M., Bader, H., DeCero, E., & Loggarakis, A. 2020. User experiences of social support from companion chatbots in everyday contexts: Thematic analysis. Journal of Medical Internet Research, 22(3):e16235.

Xie, T., & Pentina, I. 2022. Attachment theory as a framework to understand relationships with social chatbots: A case study of Replika. Proceedings of the 55th Hawaii International Conference on System Sciences.

Xie, T., Pentina, I., & Hancock, T. 2023. Friend, mentor, lover: Does chatbot engagement lead to psychological dependence? Journal of Service Management, 34(4):806–828.

Yankouskaya, A., Liebherr, M., & Ali, R. 2024. Can ChatGPT be addictive? A call to examine the shift from support to dependence in AI conversational large language models. SSRN Electronic Journal.


Zhou, T., & Zhang, C. 2024. Examining generative AI user addiction from a C-A-C perspective. Technology in Society, 78:102653.
