Real-Time Leaf Disease Detection and Fertilizer Recommendation System

A. V. Nageswara Rao, Pranathi Avutu, Sai Preethi Bijjala and Peddi Lakshmi Aishwarya

Department of ACSE, Vignan University, Guntur, Andhra Pradesh, India

Keywords: Leaf Disease Detection, Hybrid Model, Feature Extraction, Image-Based Disease Detection.

Abstract: This project highlights the importance of leaf disease detection in precision agriculture, where early identification enables timely intervention to protect crops from disease. It presents an approach to detecting leaf diseases that combines deep learning (DL) and machine learning (ML) techniques. Four convolutional neural network (CNN) architectures, VGG16, VGG19, Inception v3, and Inception v6, are trained on a comprehensive leaf image dataset to support robust disease classification. The VGG-based models serve as feature extractors, and their features are fed into a support vector machine (SVM) classifier for disease classification. This hybrid DL-ML framework is designed to improve both the accuracy and the efficiency of distinguishing healthy from diseased leaves. An interactive interface lets users upload leaf images for real-time disease detection, and an IoT camera system was integrated for automated leaf disease analysis in the field. The proposed solution demonstrates significant potential to improve crop management practices and advance automated agricultural systems, offering a tool for early-stage disease diagnosis and management.

1 INTRODUCTION

In recent years, global demand for food has increased significantly due to rapid population growth, placing greater pressure on agricultural systems to ensure optimal crop production. One of the primary challenges faced by farmers is the timely detection of leaf diseases, which can severely impact crop health and yield. Timely and precise detection of these diseases is essential for efficient pest management, reducing crop loss, and minimizing pesticide use, which ultimately results in more sustainable farming practices.

Traditional leaf disease detection methods often depend on manual inspection, which is time-consuming, labor-intensive, and susceptible to human error. With rapid progress in machine learning (ML) and deep learning (DL), there is great potential to automate disease detection and improve its accuracy. In this context, computer vision techniques powered by convolutional neural networks (CNNs) have shown great promise in analyzing plant leaf images to identify disease patterns.

This study uses the CNN architectures VGG16, VGG19, Inception v3, and Inception v6, which are trained and evaluated on a comprehensive dataset of leaf images for disease detection. These deep learning models are used for feature extraction, with the VGG models playing the central role in identifying relevant features in the leaf images. The extracted features are then fed into a support vector machine (SVM), a traditional machine learning classifier, which decides whether the leaf is healthy or diseased. By combining the strengths of CNNs for feature learning and of the SVM for classification, the proposed method seeks to improve both the accuracy and the efficiency of disease detection.

In addition, an interactive user interface was developed that allows users to upload images of plant leaves and receive real-time feedback on whether the leaf is healthy or infected. To further extend the practical application, the system was integrated with an IoT camera device, allowing automated image capture and analysis directly in agricultural fields. This IoT-enabled camera provides real-time monitoring and disease detection, creating a seamless and efficient solution for farmers.

Integrating deep learning with IoT technology represents a significant step toward modernizing agricultural practices. By automating the leaf disease detection process, the proposed system can help farmers make informed decisions faster, improve crop management, and contribute to increased agricultural productivity.

Rao, A. V. N., Avutu, P., Bijjala, S. P. and Aishwarya, P. L.

Real-Time Leaf Disease Detection and Fertilizer Recommendation System.

DOI: 10.5220/0013982100004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 5, pages 862-874

ISBN: 978-989-758-777-1

Proceedings Copyright © 2026 by SCITEPRESS – Science and Technology Publications, Lda.

2 LITERATURE SURVEY

The detection of leaf diseases using machine learning and deep learning models has been a key focus in modern agricultural research. The goal is to automate the process and enhance crop management. This section examines various studies on leaf disease detection and emphasizes the key distinctions in our approach.

2.1 Deep Learning Approaches for Leaf Disease Detection

Mohanty et al. (2016) developed a convolutional neural network (CNN) model to classify images of various crops, such as tomatoes, cucumbers, and peppers, achieving remarkable results in leaf disease detection. Their approach leverages CNNs to automatically extract features from images, significantly reducing the need for manual feature engineering. However, their model was trained on a small dataset, which reduced its ability to generalize across different plant species and environments.

2.2 How Convolutional Neural Networks Diagnose Plant Disease

Toda and Okura (2019) developed a convolutional neural network (CNN) model to diagnose plant diseases by analyzing leaf images. Their approach aimed to improve the interpretability of CNN-based predictions by extracting the learned features in an understandable way, enhancing the model's reliability. By using deep learning, their method reduced the dependence on manual feature extraction, making plant disease diagnosis more efficient. However, their study focused mainly on interpretability rather than on improving classification precision, and the model's ability to generalize across different plant species and environmental conditions remained a challenge.

2.3 Deep Neural Networks-Based Recognition of Plant Diseases

Sladojevic et al. (2016) developed a deep convolutional neural network (CNN) model to recognize plant diseases through leaf image classification. Their model was designed to differentiate between healthy and diseased leaves, covering 13 different plant diseases. By using CNNs, their approach automates feature extraction, reducing the need for manual preprocessing. The study used the Caffe deep learning framework to train the model on an extensive dataset, achieving an accuracy of 96.3%. However, the model's performance was constrained by dataset limitations, particularly in generalizing across diverse environmental conditions and plant species.

2.4 Convolutional Neural Networks for the Automatic Identification of Plant Diseases

Boulent et al. (2019) carried out an extensive review of the use of convolutional neural networks (CNNs) for the automatic identification of plant diseases. Their study analyzed 19 research papers that implemented CNNs for crop disease detection, highlighting key aspects such as dataset characteristics, model architectures, training strategies, and performance metrics. The review emphasized the potential of CNNs in precision agriculture to improve food security through automated disease diagnosis. However, challenges such as dataset limitations, model generalization, and interpretability were identified as critical areas for improvement. The authors also offered recommendations to improve the reliability of CNN-based plant disease identification systems for practical agricultural applications.

2.5 Fast and Accurate Detection and Classification of Plant Diseases

Al-Hiary et al. (2011), in a study published in the International Journal of Computer Applications, developed a machine learning-based approach for the rapid and accurate detection of plant diseases using image processing techniques. Their model used K-means clustering for segmentation and artificial neural networks (ANNs) for classification, greatly improving efficiency compared with traditional expert-based disease identification. The proposed method classified various plant diseases with a precision between 83% and 94% and achieved a 20% speedup over previous approaches. However, the model's performance was affected by factors such as dataset variability and environmental conditions, which complicated its implementation in real-world applications.

2.6 Detection of Unhealthy Region of Plant Leaves and Classification of Plant Leaf Diseases Using Texture Features

Arivazhagan et al. (2013) developed a machine learning-based method for detecting and classifying plant leaf diseases using texture features. Their method involved transforming input images into the HSI color space, masking and removing green pixels, segmenting infected regions, and extracting texture statistics for classification. The study employed an SVM classifier, achieving an accuracy of 94% on a dataset of 500 plant leaves. The proposed approach improved efficiency in plant disease detection, reducing dependency on expert analysis. However, its performance was influenced by variations in leaf conditions and environmental factors.

2.7 Detection of Unhealthy Region of Plant Leaves and Classification of Plant Leaf Diseases Using Texture Features Using ANN

Kanjalkar and Lokhande (2013) developed an approach using artificial neural networks (ANN) to detect and classify plant leaf diseases through image processing techniques. Their method involved converting RGB images to the HSI color space, removing noise, segmenting infected regions using connected component labeling, and extracting key features such as size, color, proximity, and centroid distance. The ANN classifier was trained on four different plant diseases, achieving reliable classification results. However, the model's performance was affected by dataset limitations and variations in leaf appearance due to environmental factors.

2.8 Diseases Detection of Various Plant Leaf Using Image Processing Techniques: A Review

The paper “Diseases Detection of Various Plant Leaf Using Image Processing Techniques: A Review” explores the importance of detecting plant diseases to improve agricultural productivity. Given that over 70% of India's population depends on agriculture, early disease detection is crucial to prevent economic losses. The study reviews various plant diseases affecting crops such as maize, arecanut, coconut, papaya, cotton, chili, tomato, and brinjal. It discusses key challenges, such as image quality, background noise, and the need for large datasets. The paper highlights different techniques for disease classification, including K-Means clustering, Support Vector Machines (SVM), Artificial Neural Networks (ANN), and deep learning. The reviewed studies use color space transformations, wavelet transforms, and machine learning models for effective disease detection. The research emphasizes the importance of automation in agriculture to reduce manual monitoring and pesticide usage. Suggested future improvements include enhancing classification accuracy and expanding the dataset for better detection of plant diseases. [8]

2.9 Recent Technologies of Leaf Disease Detection Using Image Processing Approach – A Review

The paper “Recent Technologies of Leaf Disease Detection using Image Processing Approach – A Review” presents a comprehensive study of advancements in image processing techniques for plant leaf disease detection. Given the critical role of agriculture in economic growth, early disease detection is essential to prevent yield loss. The paper reviews various methodologies, such as segmentation, feature extraction, and classification, and organizes them according to the technologies used and their applications. Techniques such as histogram equalization, median filtering, and machine learning classifiers like Support Vector Machine (SVM) and K-Nearest Neighbor (KNN) are analyzed. The study also discusses challenges such as varying lighting conditions, image noise, and dataset limitations. Future research should aim at integrating hybrid algorithms, deep learning models, and mobile-based solutions to enable real-time disease detection and enhance precision agriculture.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES


2.10 A Review of Machine Learning Approaches in Plant Leaf Disease Detection and Classification

The paper “A Review of Machine Learning Approaches in Plant Leaf Disease Detection and Classification” offers a thorough analysis of recent advances in the identification and classification of plant diseases using Machine Learning (ML) and Deep Learning (DL) models. The study reviews over 45 research papers from 2017 to 2020, highlighting techniques such as Support Vector Machines (SVM), Neural Networks, K-Nearest Neighbor (KNN), and advanced DL architectures such as AlexNet, GoogLeNet, and VGGNet. Image processing steps, including segmentation, feature extraction, and classification, are discussed along with their accuracy and dataset details. The paper emphasizes the importance of mobile-based applications for real-time plant disease detection to improve agricultural productivity. It also addresses key challenges such as dataset quality, segmentation precision, and the need for hybrid ML-DL models to enhance accuracy. Future research should focus on real-time image datasets, mixed lighting conditions, and automated severity estimation to improve detection performance.

2.11 Comparison with Our Work

While existing studies have contributed significantly to the field of leaf disease detection, they primarily focus on training deep learning models for classification or rely on traditional machine learning methods for disease identification. Our approach differs in the following key areas:

• Integration of Advanced Deep Learning Models – Unlike previous works, our study uses a combination of VGG16, VGG19, Inception v3, and Inception v6 models for feature extraction. These architectures are designed to handle complex patterns in image data, improving the accuracy of leaf disease detection across various plant species and environments.

• Hybrid Deep Learning and Machine Learning Approach – By combining VGG-based deep learning models with an SVM classifier, our approach leverages the strengths of both deep learning (for feature extraction) and machine learning (for classification). This hybrid framework is intended to improve the system's robustness and accuracy compared with purely deep learning classifiers, which may struggle in some real-world conditions due to overfitting or lack of generalization.

• User Interface for Practical Application – We have developed an easy-to-use interface that allows farmers and other users to upload leaf images for instant disease diagnosis. This interactive feature makes our system more practical and accessible to non-expert users, differentiating it from previous works that often do not focus on user-friendly implementations.

Table 1 summarizes the literature survey.

Table 1: Literature survey.

| Study | Focus Area | Key Findings |
|---|---|---|
| Sharada P. Mohanty (2016) | Deep learning for plant disease detection | High accuracy, but real-world challenges remain. |
| Sladojevic et al. (2016) | Deep learning for plant disease recognition | Achieved 96.3% accuracy in classifying 13 plant diseases. |
| J. Boulent, Foucher et al. (2019) | CNN-based automatic plant disease identification | CNNs achieve high accuracy but require diverse datasets for real-world application. |
| M. V. V. R. S. Varma and P. P. V. S. Reddy (2019) | Advanced convolutional neural networks | Improved accuracy and efficiency in disease classification. |
| S. Arivazhagan, R. Newlin Shebiah (2013) | Texture-based plant disease classification | Achieved 94.74% accuracy using an SVM classifier. |


3 METHODOLOGY

3.1 Existing Model

• Visual Inspection: Visual inspection is the most traditional and commonly used method for detecting plant leaf diseases. Farmers and agronomists rely on their expertise to identify symptoms such as discoloration, necrotic spots, wilting, or abnormal growth patterns. This method is cost-effective and requires no specialized equipment, making it accessible to small-scale farmers. However, it is highly subjective, as accuracy depends on the observer's experience and knowledge. In addition, visual inspection often detects diseases only in advanced stages, when symptoms become evident, reducing the chances of early intervention. Factors such as lighting conditions, environmental stress, and plant variety can also lead to misdiagnosis, and multiple diseases with overlapping symptoms complicate identification further. Although visual inspection remains a valuable initial screening method, it is increasingly being supplemented by technology-driven solutions that improve accuracy and enable earlier detection.

• Feature Extraction and Machine Learning: Traditional methods segment leaf images to isolate diseased areas and then extract characteristics such as color, texture, and shape. These features are used to train classifiers such as Support Vector Machines (SVM) for disease identification. For example, one study applied K-means clustering for segmentation and trained an SVM on the extracted color features, achieving high accuracy in classifying different plant diseases.

• Deep Learning Approaches: Convolutional Neural Networks (CNNs) have revolutionized feature extraction by allowing models to learn directly from raw pixel data, thus improving classification accuracy. A notable study developed a CNN-based model capable of diagnosing 26 plant diseases across 14 plant species, achieving an accuracy of 98.14%. This model was also integrated into a mobile application, enabling real-time, on-field disease diagnosis for farmers.

• Sensor-Based Technologies: Innovations in sensor-based technologies have led to the development of wearable devices for plants that allow early detection of stress signals such as elevated levels of hydrogen peroxide ($\mathrm{H_2O_2}$). These sensors, often designed as microneedle patches, attach to plant leaves and monitor $\mathrm{H_2O_2}$, a signaling molecule indicative of environmental stressors such as dehydration, excessive heat, infections, or pest attacks. By detecting these biochemical changes before visible symptoms such as wilting or discoloration appear, these devices provide real-time alerts to growers, facilitating timely intervention to mitigate potential crop damage. For example, researchers have developed a wearable patch that can detect stress signals in plants before visible symptoms appear, allowing early intervention. Furthermore, advances in nanosensor technology have enabled real-time monitoring of plant health by detecting $\mathrm{H_2O_2}$ signaling waves, providing deeper insight into plant stress responses. These innovations represent a significant step toward precision agriculture, improving crop management practices and potentially increasing agricultural productivity.

3.2 Proposed Model

• Dataset Collection and Processing:

A dataset of images of healthy and diseased leaves from various species is gathered. Open datasets such as the PlantVillage dataset can be used for this purpose, as they contain labeled images on which the model can be trained.

Data Augmentation: Augmentation techniques such as rotation, flipping, cropping, and color changes increase the diversity of the training data and reduce the risk of overfitting. This helps mimic the real-world variation in leaf appearance.

Image Preprocessing: The collected images are first resized to match the model's input requirements, for example to 224x224 pixels. Normalization then scales the pixel values to the range 0 to 1, which improves training efficiency.

• Feature Extraction Using Pretrained Deep Learning Models

• VGG16 and VGG19: Deep convolutional neural networks designed for image classification. These models stack an increasing number of convolutional, pooling, and fully connected layers. They are fine-tuned from pretrained weights on a leaf disease dataset, and the last few layers are modified to support binary classification with two classes (healthy versus diseased).

• Inception v3 and v6: These models are more complex and are better at extracting features and generalizing. Inception networks apply convolutional kernels of different sizes in parallel within each module, enabling the model to capture features at different scales. Like the VGG models, Inception v3 and Inception v6 are fine-tuned on the leaf disease dataset to detect disease.

• Feature Extraction and SVM Classification: With the help of the VGG-16 and VGG-19 models, general patterns related to leaf disease are extracted from the images. These models capture hierarchical patterns in the images, such as edges, textures, and shapes. The output of the last convolutional layers is flattened and used as the input feature vector for the SVM learning model.
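The preprocessing and augmentation steps above can be illustrated without any imaging library. The following is a minimal sketch, assuming an image is a nested list of rows of (R, G, B) tuples with 8-bit values. It uses nearest-neighbour resizing to 224x224, division by 255 for normalization, and random horizontal flips and 90-degree rotations as a small stand-in for the rotation, flipping, cropping, and color changes listed in the text. All function names are hypothetical and are not taken from the paper's implementation.

```python
import random


def resize_nearest(img, out_h=224, out_w=224):
    """Nearest-neighbour resize of a nested-list RGB image (224x224 by default)."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def normalize(img):
    """Scale 8-bit channel values (0-255) into the range [0, 1]."""
    return [[tuple(c / 255.0 for c in px) for px in row] for row in img]


def flip_horizontal(img):
    """Mirror each row left to right."""
    return [list(reversed(row)) for row in img]


def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def augment(img, rng):
    """Random flip plus 0-3 quarter turns (a subset of the augmentations in the text)."""
    if rng.random() < 0.5:
        img = flip_horizontal(img)
    for _ in range(rng.randrange(4)):
        img = rotate_90(img)
    return img


def preprocess(img, rng=None):
    """Optionally augment (training images only), then resize and normalize."""
    if rng is not None:
        img = augment(img, rng)
    return normalize(resize_nearest(img))
```

In practice these steps would normally be handled by an imaging or deep learning library. Augmentation is passed an `rng` only for training images; validation and test images would be called as `preprocess(img)` so they are only resized and normalized.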

After features are extracted by the VGG-16 and VGG-19 models, they are passed to the Support Vector Machine (SVM) classifier. SVM is a supervised learning method that finds the hyperplane in the feature space that separates healthy and diseased leaf images with the largest margin. The SVM model is fitted on the extracted features to classify leaf images as either “healthy” or “diseased.”
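As a sketch of how flattened convolutional outputs might become SVM inputs, the block below flattens feature maps into one vector and standardizes each feature. It assumes the maps arrive as nested lists shaped [channels][height][width]. The z-score standardization step is an assumption (a common preprocessing choice for SVMs), not something the paper states, and its statistics should be computed on the training set only.

```python
import math


def flatten_maps(maps):
    """Flatten [C][H][W] nested lists into a single feature vector."""
    return [v for channel in maps for row in channel for v in row]


def fit_standardizer(vectors):
    """Per-feature mean and standard deviation, computed on training vectors."""
    n = len(vectors)
    dims = len(vectors[0])
    means = [sum(vec[j] for vec in vectors) / n for j in range(dims)]
    stds = []
    for j in range(dims):
        var = sum((vec[j] - means[j]) ** 2 for vec in vectors) / n
        stds.append(math.sqrt(var) or 1.0)  # avoid division by zero for constant features
    return means, stds


def standardize(vec, means, stds):
    """Apply the training-set statistics to one feature vector."""
    return [(v - m) / s for v, m, s in zip(vec, means, stds)]
```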

Training the SVM: The SVM is trained on the feature vectors extracted from the dataset. Hyperparameters such as the kernel function (linear, radial basis function, etc.) and the regularization parameter are tuned to maximize classification accuracy.
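To make the training step concrete, here is a minimal linear SVM in pure Python. It is a sketch that swaps in the Pegasos stochastic sub-gradient method, which minimizes λ/2·‖w‖² plus the average hinge loss, and it picks the regularization strength λ by validation accuracy (a larger λ corresponds to a smaller C in the usual SVM formulation). It covers only the linear kernel; an RBF kernel, which the text also mentions, would normally come from a library implementation. Labels are assumed to be +1 (diseased) and -1 (healthy), and all function names are hypothetical.

```python
import random


def dot(w, x):
    """Inner product of two equal-length lists."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Pegasos: minimise lam/2 * ||w||^2 + mean hinge loss by sub-gradient steps.

    A constant 1.0 feature is appended so the bias is learned inside w
    (as a simplification, the bias is therefore also regularised).
    """
    rng = random.Random(seed)
    Xb = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xb[0])
    t = 0
    for _ in range(epochs):
        for i in rng.sample(range(len(Xb)), len(Xb)):  # one shuffled pass
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y[i] * dot(w, Xb[i])
            w = [(1.0 - eta * lam) * wj for wj in w]  # regularisation shrink
            if margin < 1.0:  # hinge loss is active for this sample
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xb[i])]
    return w


def predict(w, x):
    """Return +1 (diseased) or -1 (healthy) for one feature vector."""
    return 1 if dot(w, list(x) + [1.0]) >= 0 else -1


def accuracy(w, X, y):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(predict(w, x) == t for x, t in zip(X, y)) / len(y)


def select_lambda(X_tr, y_tr, X_val, y_val, grid=(1.0, 0.1, 0.01, 0.001)):
    """Grid-search lam on a validation split; returns (lam, val_accuracy, w)."""
    best = None
    for lam in grid:
        w = train_linear_svm(X_tr, y_tr, lam=lam)
        acc = accuracy(w, X_val, y_val)
        if best is None or acc > best[1]:
            best = (lam, acc, w)
    return best
```

For example, `select_lambda(X_train, y_train, X_val, y_val)` returns the chosen λ, its validation accuracy, and the weight vector, after which `predict(w, x)` labels a new feature vector.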

• IoT Camera Integration for Real-Time Image Capture

VGG Model for Feature Extraction: The VGG16 and VGG19 models are used to extract high-level features from the images. These models detect features at different levels, such as edges, textures, and shapes, that are crucial for identifying leaf diseases. The output of the last convolutional layers is flattened and used as the input feature vector for the machine learning model.

Support Vector Machine (SVM): The extracted features are fed into the SVM classifier, which finds the optimal hyperplane separating the healthy and diseased classes in the feature space. The SVM is trained on the extracted features to classify each leaf image as healthy or diseased.

Training the SVM: The extracted feature vectors are used to train the SVM, and hyperparameters such as the kernel function (for example, linear or radial basis function) and the regularization parameter are tuned.

IoT Camera Hardware: IoT-enabled cameras are deployed to capture real-time leaf images in the agricultural field. The camera is connected to a local server or a cloud platform, where the images are processed.

• User Interface for Image Upload and Diagnosis

Web Interface Design: To enable users (farmers, researchers, etc.) to upload leaf images for disease diagnosis, a user-friendly web interface was created. The interface is built with web technologies (HTML, CSS, JavaScript) and linked to a back-end server that hosts the disease detection model.

Image Upload: Users can upload leaf images in a variety of formats (JPEG, PNG, etc.) through the interface. Each uploaded image is passed through the trained models to determine whether the leaf is healthy or diseased.

Real-Time Diagnosis Feedback: After image processing is complete, the system reports in real time whether the leaf is healthy or diseased, along with possible disease names. The system may also suggest actions or treatments based on the detected disease.

• Deployment and Testing

Model Evaluation: The trained models (VGG16, VGG19, Inception v3, and Inception v6) are evaluated with standard performance metrics such as accuracy, precision, recall, and F1 score. Cross-validation is used to check that the trained models remain robust across different splits of the data and across datasets.
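The evaluation metrics and cross-validation splits mentioned above can be sketched in a few lines of Python. This sketch assumes binary labels with 1 for diseased (the positive class) and 0 for healthy; it uses contiguous folds, so the samples are assumed to be shuffled beforehand. The function names are illustrative, not from the paper.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1, treating `positive` as the diseased class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) for k contiguous folds over n samples.

    Each sample appears in exactly one validation fold.
    """
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size
```

Averaging `binary_metrics` over the k validation folds gives a cross-validated estimate of each metric.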


System Testing: The whole system, including the user interface and the IoT camera, is operated under real-time conditions to verify that the hardware and software interact with users as required. These tests include uploading images to the web interface, retrieving feedback in real time, and evaluating disease detection accuracy on images captured by the IoT camera.

Using Arduino and an IoT Camera Device to Capture Images for Leaf Disease Detection: Integrating an IoT camera system with an Arduino platform is a practical way to capture images for leaf disease detection in real time. This setup allows easy deployment in agricultural fields or greenhouses. An Arduino-based device can capture images of leaves and upload them to a server or cloud for processing. Below is an overview of the methodology for using Arduino with an IoT camera for leaf disease detection:

IoT Camera Module: The camera module attached to the Arduino is responsible for capturing images of leaves. Popular camera modules include:

OV7670 Camera Module: A low-cost, simple-to-use camera that can be interfaced with Arduino to capture small images.

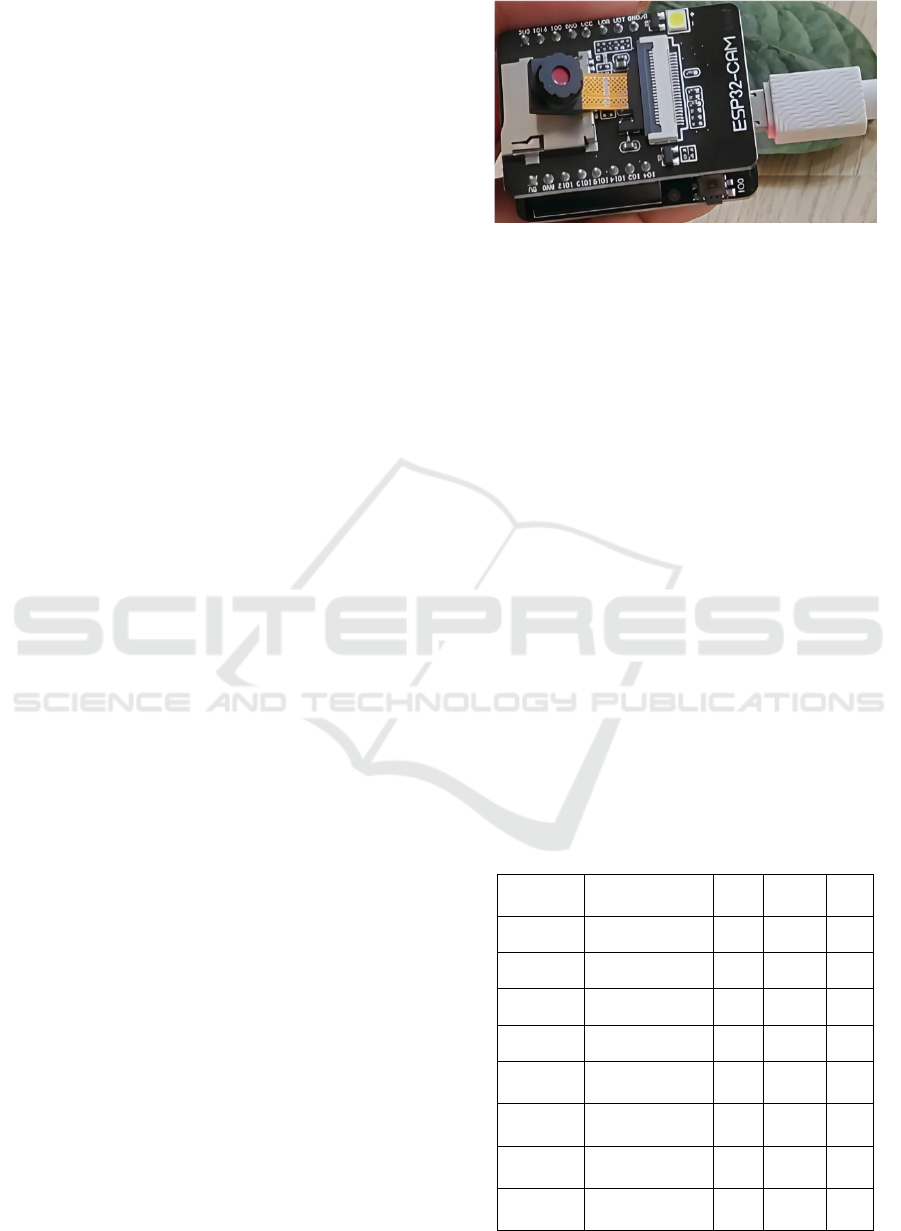

ESP32-CAM: An all-in-one solution with a built-in camera, Wi-Fi capabilities, and low power consumption, ideal for IoT applications.

Arducam Mini Camera Module: A high-resolution camera that can be used for more detailed image capture.

The ESP32-CAM is well suited to IoT applications because of its built-in camera and Wi-Fi, offering a compact and energy-efficient solution. The OV7670 and Arducam Mini offer alternatives with different levels of image quality and complexity, depending on the application's needs. We therefore chose the ESP32-CAM.

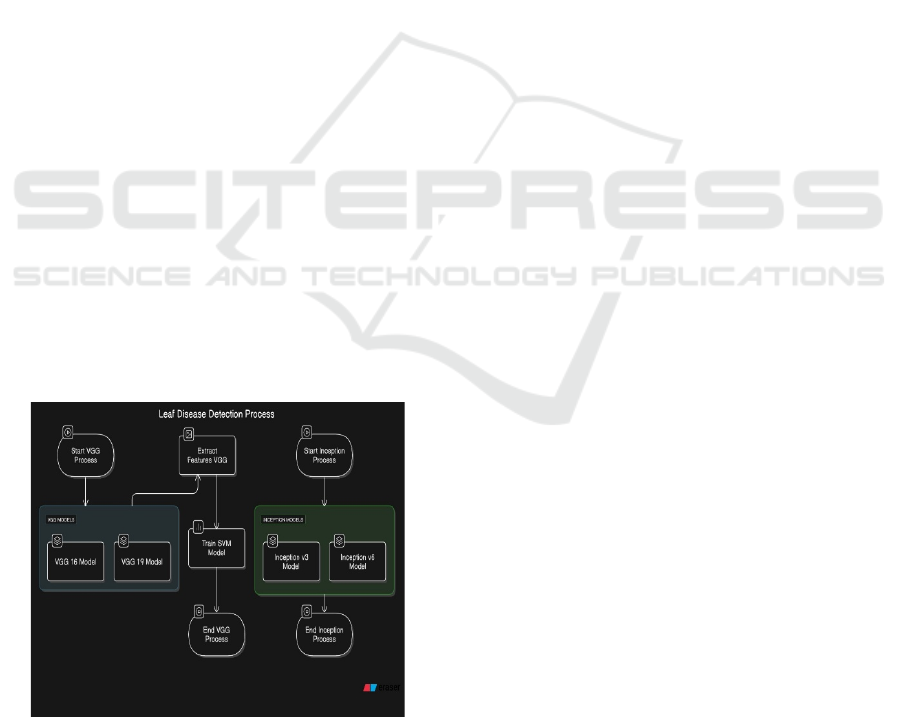

Figure 1: Block diagram.

Figure 1 illustrates a leaf disease detection process that uses deep learning models for feature extraction and classification. The process begins with two parallel approaches: VGG-based models (VGG-16 and VGG-19) and Inception-based models (Inception V3 and Inception V6). In the VGG pipeline, features are extracted with the VGG models and then used to train a Support Vector Machine (SVM) for classification. The Inception models process images separately and do not involve SVM training. Both approaches operate independently and terminate after their respective processes. This methodology leverages deep learning for feature extraction and machine learning for classification to improve the accuracy of plant disease detection.

Image Capture and Processing Flow

Capturing Images: The Arduino triggers the camera module to capture an image of the leaf. A button or a sensor (such as a light or motion sensor) can be used to trigger image capture when a leaf enters the camera's field of view.
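Sensor-based triggering usually needs hysteresis so that one leaf does not fire several captures while the reading hovers near a threshold. The sketch below shows that logic in Python for readability, under loudly stated assumptions: a light sensor returns raw readings (for example 0 to 4095 from a 12-bit ADC such as the ESP32's), a leaf entering the field of view shifts the reading away from a known baseline, and the `CaptureTrigger` class and its threshold values are hypothetical and would need calibration on real hardware.

```python
class CaptureTrigger:
    """Hypothetical hysteresis trigger: fires once per 'leaf entered view' event."""

    def __init__(self, baseline, fire_delta=300, rearm_delta=100):
        self.baseline = baseline        # reading with no leaf in view
        self.fire_delta = fire_delta    # deviation that triggers a capture
        self.rearm_delta = rearm_delta  # deviation below which the trigger re-arms
        self.armed = True

    def update(self, reading):
        """Feed one sensor reading; return True when a capture should start."""
        deviation = abs(reading - self.baseline)
        if self.armed and deviation >= self.fire_delta:
            self.armed = False
            return True
        if not self.armed and deviation <= self.rearm_delta:
            self.armed = True
        return False
```

Keeping `rearm_delta` well below `fire_delta` is the design choice that suppresses repeated captures from sensor noise near the threshold.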

Sending Images to a Server: Once an image is captured, it must be sent to a server or database for analysis. The ESP32 can use its built-in Wi-Fi to upload the image to the server, for example with an HTTP POST request.
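The upload request can be sketched with Python's standard `urllib.request` for illustration. On the device itself the equivalent POST would be issued from the ESP32 firmware. The server address and the `/predict` path below are hypothetical placeholders, not values from the paper, and the image is sent as a raw JPEG body rather than a multipart form.

```python
import urllib.request

SERVER_URL = "http://192.168.1.50:5000/predict"  # hypothetical server address and path


def build_upload_request(jpeg_bytes, url=SERVER_URL):
    """Build (but do not send) a POST request carrying a raw JPEG body."""
    return urllib.request.Request(
        url,
        data=jpeg_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )


def upload(jpeg_bytes, url=SERVER_URL, timeout=10):
    """Send the image and return the server's response body as text."""
    with urllib.request.urlopen(build_upload_request(jpeg_bytes, url),
                                timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```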

Image Processing and Leaf Disease Detection: After the image is uploaded to the server, it is processed with deep learning models such as VGG16, VGG19, or Inception V3 to classify whether the leaf is healthy or diseased. This can be achieved by integrating a pre-trained model with a Flask API that receives images and returns predictions.
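The receive-and-predict flow can be sketched with Python's standard `http.server` module. The paper uses a Flask API; this stdlib stand-in only illustrates the request and response handling. `classify_leaf` is a hypothetical stub standing in for the real VGG-feature and SVM pipeline, and the `/predict` path and port 5000 are assumptions. The request logic lives in `handle_upload` so it can be exercised without opening a socket.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def classify_leaf(jpeg_bytes):
    """Hypothetical stub: the real system would run feature extraction + SVM here."""
    return {"label": "healthy", "bytes_received": len(jpeg_bytes)}


def handle_upload(path, body):
    """Return (HTTP status, JSON-serialisable payload) for one upload."""
    if path != "/predict":
        return 404, {"error": "not found"}
    if not body.startswith(b"\xff\xd8"):  # JPEG start-of-image marker
        return 400, {"error": "expected a JPEG image"}
    return 200, classify_leaf(body)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_upload(self.path, self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def make_server(host="0.0.0.0", port=5000):
    """Create (but do not start) the server."""
    return ThreadingHTTPServer((host, port), PredictHandler)
```

Calling `make_server().serve_forever()` starts the endpoint; a production deployment would use the Flask application described in the text.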

Secure Transmission via HTTPS: To ensure privacy and prevent tampering, we implemented HTTPS using a self-signed SSL certificate. The Flask web server was configured to encrypt image uploads and model predictions, securing communication between users and the server.

ESP32-CAM with Arduino: To integrate an ESP32-CAM with an Arduino so that images can be captured via a web interface (which provides an IP address and allows image capture directly through the browser), you need to set up the ESP32-CAM as a web server. Users can then open the camera's web page in a browser, trigger image captures, and either send the images to a server or save them locally.

Hardware and Software Requirements:

- ESP32-CAM module
- Arduino IDE
- ESP32 camera library

3.3 Install ESP32 Board in Arduino IDE

Open the Arduino IDE, go to Tools > Board > Boards Manager, search for ESP32, and install it.

3.4 Configure the Web Server Code

The web server code configures the ESP32-CAM as a web server that reports an IP address and allows you to capture an image through a web interface.

3.5 Upload the Code to ESP32-CAM

On the ESP32-CAM while uploading the code (to

enter programming mode).

- After uploading, restart the ESP32-CAM.

3.6 Monitor Output

Open the Serial Monitor (at 115200 baud) in the Arduino IDE. Once connected to Wi-Fi, the ESP32-CAM will print its IP address:

```
Connecting to WiFi...
Connected to WiFi
IP Address: 192.168.1.100
```

3.7 Accessing the Web Interface

Open a web browser and enter the IP address displayed on the Serial Monitor, for example http://192.168.1.100/. The ESP32-CAM will capture and serve a JPEG image of what the camera sees.
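A host-side client can poll the camera page and sanity-check the response before forwarding it for classification. This sketch assumes, as described above, that the ESP32-CAM serves a JPEG at its root URL; 192.168.1.100 is only the example address from the Serial Monitor output. The marker check is a quick completeness test, not a full JPEG validation, and the function names are hypothetical.

```python
import urllib.request

CAMERA_URL = "http://192.168.1.100/"  # example address printed on the Serial Monitor


def looks_like_jpeg(data):
    """Quick check: a JPEG starts with FF D8 (SOI) and ends with FF D9 (EOI)."""
    return len(data) >= 4 and data[:2] == b"\xff\xd8" and data[-2:] == b"\xff\xd9"


def fetch_frame(url=CAMERA_URL, timeout=5):
    """Download one frame from the camera and reject truncated responses."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        data = resp.read()
    if not looks_like_jpeg(data):
        raise ValueError("camera response is not a complete JPEG image")
    return data
```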

3.8 Capture Image

The ESP32-CAM will capture images automatically and from different angles. Figure 2 shows the camera module.

Figure 2: IoT-based ESP32-CAM module.

4 RESULTS AND DISCUSSION

The following tables compare the performance of deep learning models (VGG-16, VGG-19, Inception V3, and Inception V6) for plant disease classification with different activation functions (ReLU, Sigmoid, and Tanh). The accuracy and loss values were obtained by training these models on several datasets, namely the Fruit, Vegetable, Healthy, and Diseased datasets, while varying the activation function to assess its impact.

In Table 2, VGG-16 and Inception V3 performed best, each reaching a peak accuracy of 96% with Tanh, while VGG-19 and Inception V6 reached only 68% to 73% accuracy, with higher loss values. ReLU produced noticeably higher loss than Sigmoid and Tanh for VGG-16 (18 versus 4) and Inception V3 (17 versus 4), possibly because of the "dying ReLU" problem, in which units that receive only negative inputs output zero and stop updating. Sigmoid and Tanh appear to have propagated gradients more effectively in this setting, leading to lower loss values, particularly for VGG-16 and Inception V3.
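The gradient behaviour discussed above can be checked directly from the derivatives of the three activations. The short plain-Python sketch below, written for illustration only, shows that the ReLU derivative is exactly zero for negative inputs (the mechanism behind "dying" units), while the Sigmoid derivative never exceeds 0.25 and the Tanh derivative never exceeds 1. Sigmoid therefore does not propagate gradients better in general; the lower losses in Table 2 are best read as an empirical outcome of this particular setup.

```python
import math


def relu_grad(x):
    # Zero for x <= 0 (taking 0 at x = 0): a unit stuck there stops learning.
    return 1.0 if x > 0 else 0.0


def sigmoid(x):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x = 0


def tanh_grad(x):
    return 1.0 - math.tanh(x) ** 2  # peaks at 1.0 when x = 0


for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(f"x={x:+.1f}  relu'={relu_grad(x):.3f}  "
          f"sigmoid'={sigmoid_grad(x):.3f}  tanh'={tanh_grad(x):.3f}")
```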

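Table 2: Fruit dataset, existing model (columns give the activation function).

| Model | Metric | ReLU | Sigmoid | Tanh |
|---|---|---|---|---|
| VGG-16 | Accuracy (%) | 93 | 95 | 96 |
| VGG-16 | Loss | 18 | 4 | 4 |
| VGG-19 | Accuracy (%) | 73 | 71 | 70 |
| VGG-19 | Loss | 57 | 58 | 62 |
| Inception V3 | Accuracy (%) | 92 | 94 | 96 |
| Inception V3 | Loss | 17 | 4 | 4 |
| Inception V6 | Accuracy (%) | 68 | 73 | 72 |
| Inception V6 | Loss | 55 | 57 | 56 |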

Real-Time Leaf Disease Detection and Fertilizer Recommendation System

869

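Table 3: Vegetable dataset, existing model (columns give the activation function).

| Model | Metric | ReLU | Sigmoid | Tanh |
|---|---|---|---|---|
| VGG-16 | Accuracy (%) | 80 | 100 | 91 |
| VGG-16 | Loss | 54 | 23 | 22 |
| VGG-19 | Accuracy (%) | 94 | 87 | 100 |
| VGG-19 | Loss | 20 | 35 | 21 |
| Inception V3 | Accuracy (%) | 93 | 93 | 93 |
| Inception V3 | Loss | 18 | 18 | 20 |
| Inception V6 | Accuracy (%) | 93 | 92 | 92 |
| Inception V6 | Loss | 19 | 20 | 21 |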

In Table 3, VGG-16 achieved its maximum accuracy of 100% with Sigmoid, although its loss (23) was slightly higher than with Tanh (22). VGG-19 performed best with Tanh, reaching 100% accuracy with a loss of 21, close to its lowest loss of 20 (obtained with ReLU). Inception V3 performed consistently across all activation functions, with 93% accuracy and loss values varying only between 18 and 20. Similarly, Inception V6 showed stable accuracy of 92% to 93%, with slight fluctuations in loss.

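Table 4: Healthy dataset, proposed model (columns give the activation function).

| Model | Metric | ReLU | Sigmoid | Tanh |
|---|---|---|---|---|
| VGG-16 | Accuracy (%) | 90 | 91 | 60 |
| VGG-16 | Loss | 34 | 21 | 29 |
| VGG-19 | Accuracy (%) | 50 | 65 | 52 |
| VGG-19 | Loss | 70 | 78 | 82 |
| Inception V3 | Accuracy (%) | 70 | 69 | 64 |
| Inception V3 | Loss | 60 | 67 | 83 |
| Inception V6 | Accuracy (%) | 72 | 65 | 66 |
| Inception V6 | Loss | 59 | 64 | 74 |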

In Table 4, VGG-16 demonstrated the highest

accuracy with Sigmoid (91%) while maintaining a

lower loss (21), whereas its performance dropped

significantly with Tanh (60% accuracy and 29 loss).

VGG-19 showed moderate accuracy, peaking at

65% with Sigmoid, but suffered from high loss

values across all activation functions, with the

highest loss (82) recorded for Tanh. Inception V3

exhibited balanced performance, with ReLU

providing the highest accuracy (70%) and the lowest

loss (60), while Tanh resulted in lower accuracy

(64%) and the highest loss (83). Similarly, Inception

V6 performed best with ReLU, achieving 72%

accuracy with the lowest loss (59), whereas its

performance declined with Sigmoid and Tanh.

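Table 5: Diseased dataset, proposed model (columns give the activation function).

| Model | Metric | ReLU | Sigmoid | Tanh |
|---|---|---|---|---|
| VGG-16 | Accuracy (%) | 93 | 70 | 95 |
| VGG-16 | Loss | 25 | 25 | 17 |
| VGG-19 | Accuracy (%) | 68 | 75 | 71 |
| VGG-19 | Loss | 62 | 56 | 58 |
| Inception V3 | Accuracy (%) | 69 | 61 | 68 |
| Inception V3 | Loss | 62 | 71 | 69 |
| Inception V6 | Accuracy (%) | 64 | 72 | 64 |
| Inception V6 | Loss | 64 | 64 | 76 |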

In Table 5, VGG-16 showed the highest accuracy with Tanh (95%), and ReLU also performed well (93%), whereas Sigmoid dropped sharply to 70%. The loss values for VGG-16 remained low across all activation functions, with Tanh achieving the lowest loss (17). VGG-19 exhibited moderate accuracy, peaking at 75% with Sigmoid, but had higher loss values, particularly with ReLU (62).

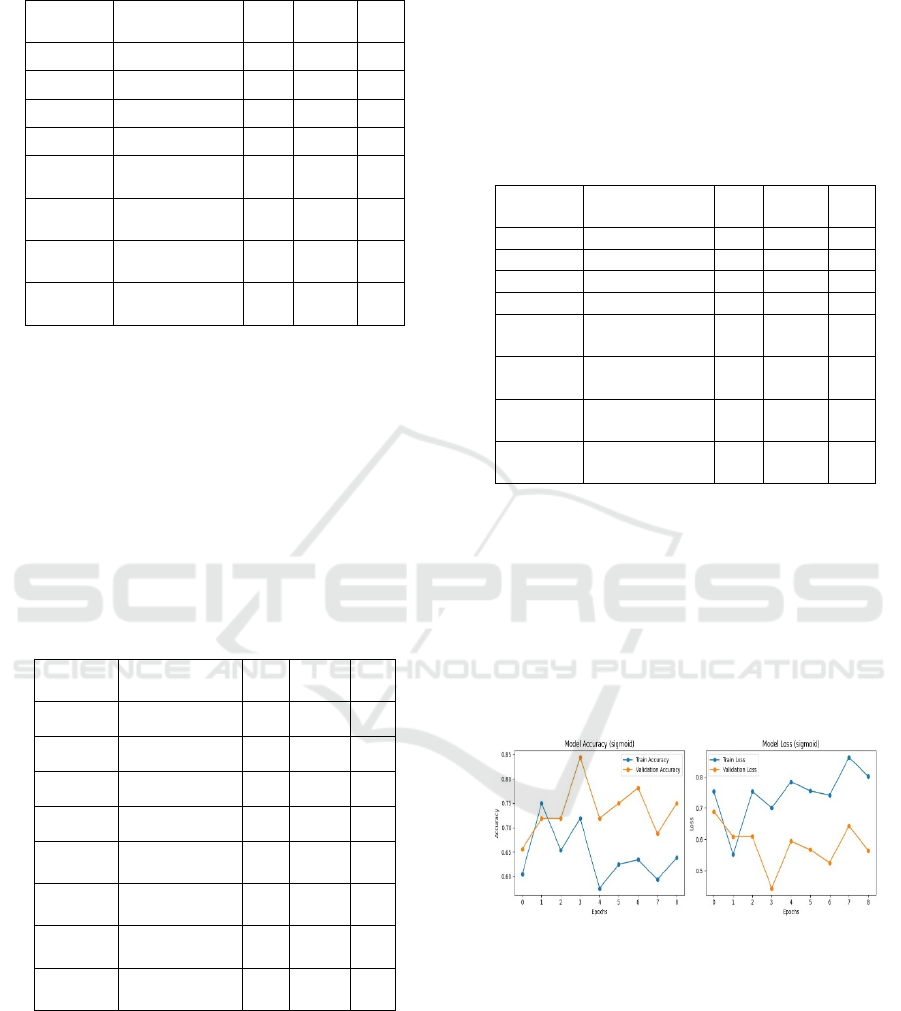

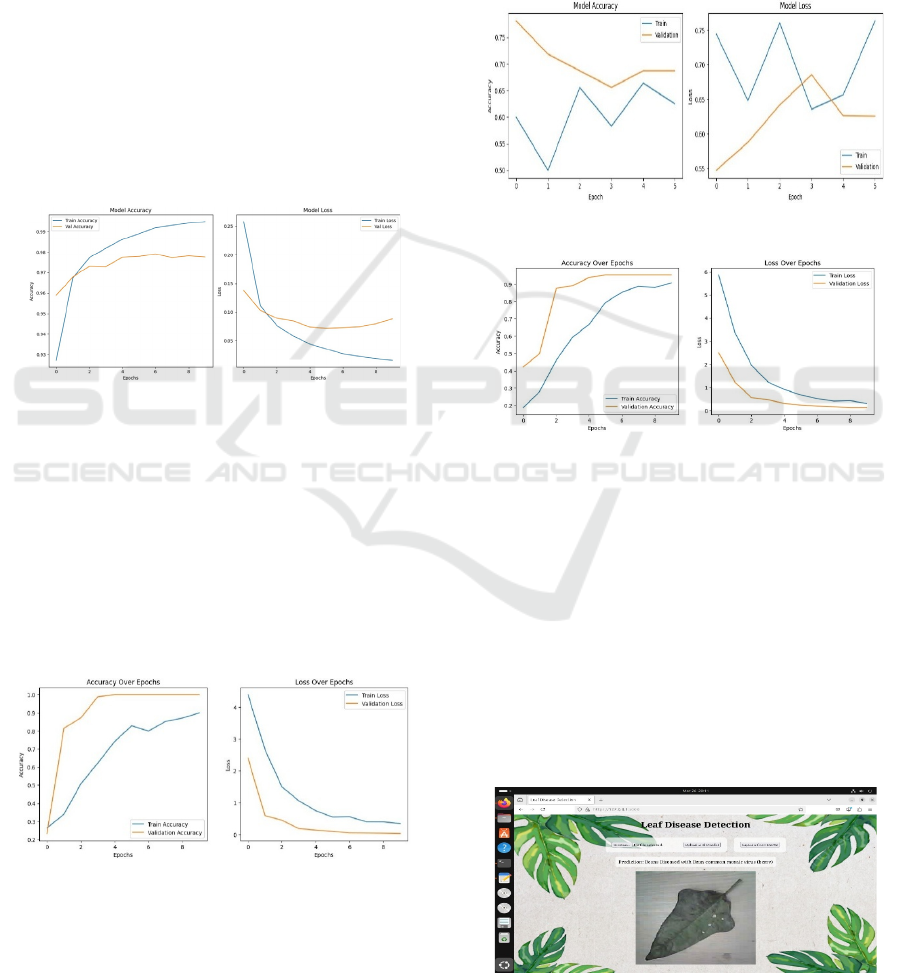

Figure 3: VGG-19 Healthy.

Inception V3 showed relatively low accuracy, ranging from 61% (Sigmoid) to 69% (ReLU), and consistently high loss values, with Sigmoid performing worst (71). Inception V6 achieved its best accuracy with Sigmoid (72%) and identical accuracy with ReLU and Tanh (64%), while its loss values were higher, reaching 76 with Tanh. Figure 3 shows the results of VGG-19 on the healthy dataset.

The performance analysis of VGG-16, VGG-19, Inception V3, and Inception V6 across the four datasets (fruit, vegetable, healthy, and diseased) shows that accuracy and loss vary with the activation function. On the fruit dataset, VGG-16 and Inception V3 achieved the highest accuracy with Tanh (96%), while VGG-19 and Inception V6 performed worse, with a best accuracy of 73%. The vegetable dataset yielded strong results, particularly VGG-19 with Tanh (100%) and VGG-16 with Sigmoid (100%), indicating robust classification. Loss values on this dataset were also relatively low, suggesting stable training.

In contrast, the healthy dataset showed decreased

accuracy, with VGG-19 performing the worst (50%

with ReLU), and high loss values were observed,

particularly for VGG-19 and Inception models,

suggesting challenges in detecting healthy samples

accurately.
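The per-model comparisons in this discussion can be reproduced mechanically from the accuracy rows of Tables 2 to 5. The sketch below copies those accuracy values (in percent, column order ReLU, Sigmoid, Tanh) into a Python dictionary and reports the best-scoring activation for each model and dataset; the names `ACCURACY` and `best_activation` are illustrative only. On ties (Inception V3 scores 93% with all three functions on the vegetable dataset) it reports the first activation listed.

```python
ACTIVATIONS = ("ReLU", "Sigmoid", "Tanh")

# Accuracy (%) per model, in the column order of ACTIVATIONS (Tables 2-5).
ACCURACY = {
    "Fruit": {"VGG-16": (93, 95, 96), "VGG-19": (73, 71, 70),
              "Inception V3": (92, 94, 96), "Inception V6": (68, 73, 72)},
    "Vegetable": {"VGG-16": (80, 100, 91), "VGG-19": (94, 87, 100),
                  "Inception V3": (93, 93, 93), "Inception V6": (93, 92, 92)},
    "Healthy": {"VGG-16": (90, 91, 60), "VGG-19": (50, 65, 52),
                "Inception V3": (70, 69, 64), "Inception V6": (72, 65, 66)},
    "Diseased": {"VGG-16": (93, 70, 95), "VGG-19": (68, 75, 71),
                 "Inception V3": (69, 61, 68), "Inception V6": (64, 72, 64)},
}


def best_activation(dataset, model):
    """Return (activation, accuracy) with the highest accuracy."""
    scores = ACCURACY[dataset][model]
    # max() keeps the first index on ties, i.e. the first activation listed.
    i = max(range(len(scores)), key=lambda k: scores[k])
    return ACTIVATIONS[i], scores[i]


for dataset, models in ACCURACY.items():
    for model in models:
        act, acc = best_activation(dataset, model)
        print(f"{dataset:<10} {model:<13} {act:<8} {acc}%")
```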

Figure 4: SVM Model for Healthy and Diseased Dataset.

The accuracy and loss curves in Figure 4 illustrate the training performance of the proposed model. The left plot shows a steady increase in training and validation accuracy, reaching above 98%, indicating effective learning. The right plot shows a consistent decrease in training and validation loss, and the small gap between the two curves suggests minimal overfitting. These results suggest that the model generalizes well in plant disease classification.

Figure 5: VGG 16 Healthy.

Figure 5 shows the results of VGG-16 on the healthy dataset. The diseased dataset (Table 5) yielded moderate performance: VGG-16 achieved the highest accuracy, 95% with Tanh, while the Inception models did not exceed 72%.

Figure 6 illustrates the accuracy and loss trends of VGG-19 on the diseased dataset across the training epochs. The left graph shows training and validation accuracy improving steadily, with validation accuracy reaching near-perfect levels early on. The right graph shows a steady decrease in both training and validation loss, indicating effective learning.

Figure 6: VGG 19 Diseased.

Figure 7: VGG 16 Diseased.

The accuracy graph in Figure 7 shows fluctuating training accuracy and declining validation accuracy, suggesting poor generalization. The loss graph likewise shows unstable training loss.

The implementation of HTTPS using a self-

signed certificate effectively encrypts

communication, preventing interception. HTTPS

conversion successfully encrypted data transmission,

preventing snipping and MITM attacks. Self-signed

SSL reduced costs, making it feasible for small-

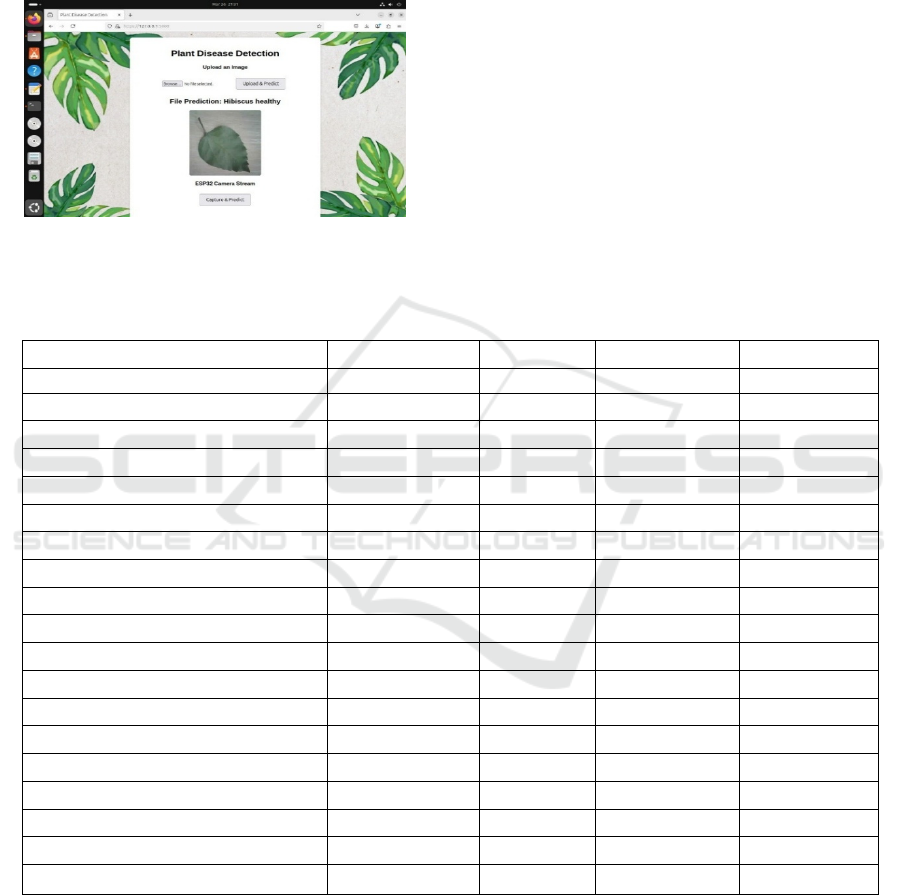

scale applications and internal tools. Figure 8 shows

the user interface.

Figure 8: Secured web interface detecting leaves in real time through the ESP32 camera module.
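A minimal sketch of serving a web interface over HTTPS with a self-signed certificate, using only Python's standard `ssl` and `http.server` modules. The web framework and TLS settings actually used are not stated, so the plain `SimpleHTTPRequestHandler`, port 8443, the TLS 1.2 minimum, and the file names `cert.pem` and `key.pem` are all assumptions. The certificate itself can be generated with OpenSSL, for example `openssl req -x509 -newkey rsa:2048 -nodes -keyout key.pem -out cert.pem -days 365 -subj "/CN=localhost"`.

```python
import http.server
import ssl


def make_tls_server_context(min_version=ssl.TLSVersion.TLSv1_2):
    """Server-side TLS context; certificates are loaded separately."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = min_version  # refuse legacy SSL/TLS versions
    return ctx


def serve_https(certfile="cert.pem", keyfile="key.pem", host="0.0.0.0", port=8443):
    """Serve the current directory over HTTPS (assumed setup, see above).

    Traffic is encrypted, but browsers cannot validate a self-signed
    certificate against a CA, so clients must trust this certificate
    explicitly to be protected against man-in-the-middle attacks.
    """
    ctx = make_tls_server_context()
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    httpd = http.server.HTTPServer((host, port), http.server.SimpleHTTPRequestHandler)
    httpd.socket = ctx.wrap_socket(httpd.socket, server_side=True)
    httpd.serve_forever()
```

Calling `serve_https()` in a directory containing the two PEM files starts the server; browsers will show a certificate warning until the self-signed certificate is explicitly trusted.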


Once an image is uploaded or captured, it is processed and classified as "Healthy" if no disease symptoms are detected. The result is displayed on a user-friendly web interface, with an option for further analysis or expert consultation, as shown in Figure 9.

Figure 9: Secured Web interface detecting leaf images

through Real-time Database.
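How the predicted class is turned into the message shown on the interface is not detailed. The sketch below is one hypothetical way to do it, assuming class labels follow the `<Crop> <Status>` pattern used in Table 6 (for example `Tomato Diseased` or `Jungle flower Healthy`) and that the classifier returns one score per class; `format_result` and its output keys are illustrative names.

```python
def format_result(scores):
    """Turn per-class scores into the fields displayed to the user.

    `scores` maps labels such as "Tomato Diseased" to classifier scores;
    the highest-scoring label is taken as the prediction.
    """
    if not scores:
        raise ValueError("no class scores supplied")
    label = max(scores, key=scores.get)
    # The status is the last word; everything before it is the crop name,
    # so multi-word crops such as "Jungle flower" are kept intact.
    crop, _, status = label.rpartition(" ")
    return {
        "label": label,
        "crop": crop,
        "status": status,
        "healthy": status == "Healthy",
    }
```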

Table 6 presents the classification performance of the Support Vector Machine (SVM) model on the plant disease categories. The model achieves an overall accuracy of 91%, with macro- and weighted-average F1-scores of 0.92, indicating strong classification capability. While most classes show high precision and recall, some perform noticeably worse: Bottleguard Diseased (F1-score: 0.73) has a precision of only 0.57, meaning that many samples from other classes were predicted as this class, and Tomato Diseased (F1-score: 0.75) has a recall of 0.60, meaning that 40% of its samples were missed. The perfect F1-scores (1.00) in several classes suggest effective feature extraction and class separation, although these classes have small test supports (4 to 17 samples), so their scores should be interpreted with caution.

Table 6: Classification report of the SVM classifier.

| Class | Precision | Recall | F1-score | Support |
|---|---|---|---|---|
| Beans Diseased | 0.77 | 0.91 | 0.83 | 22 |
| Beans Healthy | 1.00 | 0.88 | 0.94 | 17 |
| Blackgram Diseased | 1.00 | 1.00 | 1.00 | 10 |
| Blackgram Healthy | 1.00 | 0.90 | 0.95 | 21 |
| Bottleguard Diseased | 0.57 | 1.00 | 0.73 | 16 |
| Bottleguard Healthy | 1.00 | 1.00 | 1.00 | 15 |
| Brinjal Healthy | 1.00 | 0.78 | 0.88 | 9 |
| Brinjal Diseased | 1.00 | 0.78 | 0.88 | 9 |
| Greengram Diseased | 1.00 | 1.00 | 1.00 | 12 |
| Guava Healthy | 1.00 | 1.00 | 1.00 | 15 |
| Guava Diseased | 1.00 | 1.00 | 1.00 | 8 |
| Hibiscus Healthy | 1.00 | 0.82 | 0.90 | 11 |
| Jungle flower Healthy | 1.00 | 1.00 | 1.00 | 17 |
| Jungle flower Diseased | 1.00 | 1.00 | 1.00 | 4 |
| Rose Healthy | 1.00 | 0.75 | 0.86 | 8 |
| Tomato Diseased | 1.00 | 0.60 | 0.75 | 10 |
| Accuracy | | | 0.91 | 204 |
| Macro avg | 0.96 | 0.90 | 0.92 | 204 |
| Weighted avg | 0.94 | 0.91 | 0.92 | 204 |
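The summary rows of Table 6 follow from the per-class rows. Macro averages weight every class equally, while weighted averages weight each class by its support; the weighted-average recall always equals overall accuracy (correct predictions divided by the 204 samples), which is why both read 0.91. The sketch below recomputes the averages from the rounded per-class values in Table 6, so it reproduces the reported figures only to two decimals; `REPORT`, `macro_avg`, `weighted_avg`, and `f1_from` are illustrative names.

```python
# (precision, recall, F1, support) for each class, copied from Table 6.
REPORT = {
    "Beans Diseased": (0.77, 0.91, 0.83, 22),
    "Beans Healthy": (1.00, 0.88, 0.94, 17),
    "Blackgram Diseased": (1.00, 1.00, 1.00, 10),
    "Blackgram Healthy": (1.00, 0.90, 0.95, 21),
    "Bottleguard Diseased": (0.57, 1.00, 0.73, 16),
    "Bottleguard Healthy": (1.00, 1.00, 1.00, 15),
    "Brinjal Healthy": (1.00, 0.78, 0.88, 9),
    "Brinjal Diseased": (1.00, 0.78, 0.88, 9),
    "Greengram Diseased": (1.00, 1.00, 1.00, 12),
    "Guava Healthy": (1.00, 1.00, 1.00, 15),
    "Guava Diseased": (1.00, 1.00, 1.00, 8),
    "Hibiscus Healthy": (1.00, 0.82, 0.90, 11),
    "Jungle flower Healthy": (1.00, 1.00, 1.00, 17),
    "Jungle flower Diseased": (1.00, 1.00, 1.00, 4),
    "Rose Healthy": (1.00, 0.75, 0.86, 8),
    "Tomato Diseased": (1.00, 0.60, 0.75, 10),
}
PRECISION, RECALL, F1_SCORE, SUPPORT = range(4)


def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def macro_avg(col):
    """Unweighted mean over classes."""
    return sum(row[col] for row in REPORT.values()) / len(REPORT)


def weighted_avg(col):
    """Mean over classes weighted by each class's support."""
    total = sum(row[SUPPORT] for row in REPORT.values())
    return sum(row[col] * row[SUPPORT] for row in REPORT.values()) / total


for name, col in (("precision", PRECISION), ("recall", RECALL), ("F1", F1_SCORE)):
    print(f"{name:<9} macro={macro_avg(col):.2f} weighted={weighted_avg(col):.2f}")
```

Running it prints macro averages of 0.96, 0.90, and 0.92 and weighted averages of 0.94, 0.91, and 0.92 for precision, recall, and F1, matching the last two rows of Table 6.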

5 CONCLUSION AND FUTURE SCOPE

In this project, an integrated system is developed for leaf disease detection using deep learning models such as VGG16, VGG19, and Inception V3. The system aims at efficient and accurate diagnosis of leaf diseases by building on pre-trained Convolutional Neural Networks (CNNs) to distinguish healthy plant images from diseased ones. Through the integration of IoT hardware (such as the ESP32 camera), farmers and agricultural experts can capture leaf images and upload them for immediate analysis.

The model was trained on healthy and diseased leaves from several datasets so that it could generalize across multiple plant species. Transfer learning was applied: the pre-trained layers of the VGG and Inception models were reused, which significantly reduces training time and computational cost while maintaining good accuracy. In addition, machine learning classifiers such as SVM were applied to the extracted features to improve prediction performance.
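The hybrid pipeline of CNN features followed by an SVM can be illustrated without a deep learning framework. The SVM kernel and solver are not reported, so this sketch assumes a linear SVM trained with the Pegasos subgradient method (a standard solver for the L2-regularised hinge loss) in plain Python, and uses toy two-dimensional vectors as stand-ins for pooled VGG features. In practice the feature vectors would come from a pre-trained backbone (for example Keras' `VGG16(include_top=False, pooling="avg")`) and a library classifier such as scikit-learn's `SVC` would normally be used; `train_linear_svm` and `predict` are hypothetical names. Labels are +1 for diseased and -1 for healthy; multi-class problems such as Table 6 would need an extension such as one-vs-rest.

```python
def _dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train_linear_svm(features, labels, lam=0.01, epochs=20):
    """Pegasos-style training of a linear SVM (hinge loss + L2 penalty).

    features: list of equal-length feature vectors (e.g. pooled CNN outputs).
    labels:   +1 (diseased) or -1 (healthy) for each vector.
    A constant 1.0 is appended to each vector so the bias is learned as an
    extra (regularised) weight.
    """
    data = [list(x) + [1.0] for x in features]
    w = [0.0] * len(data[0])
    t = 0
    for _ in range(epochs):
        for x, y in zip(data, labels):
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            violated = y * _dot(w, x) < 1.0  # inside the margin or misclassified
            w = [(1.0 - eta * lam) * wi for wi in w]  # shrink: L2 regularisation
            if violated:
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    """Return +1 (diseased) or -1 (healthy) for one feature vector."""
    return 1 if _dot(w, list(x) + [1.0]) >= 0.0 else -1
```

With real CNN features, standardising each feature dimension and tuning `lam` on a validation split would usually matter more than the choice of solver.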

The project also considered deploying the system in the cloud, to take advantage of the platform's scalability and global accessibility so that users in different parts of the world can use the leaf disease detection system. A simple interface was designed for seamless interaction, allowing easy image upload and feedback on predictions. Performance optimizations were also put in place to make the system scalable, with the aim of handling a large number of concurrent users without significant performance degradation.

Thus, this project shows how deep learning, IoT, and cloud technologies can transform farm management practices. The developed system provides automatic, accurate, and efficient leaf disease detection, allowing timely intervention and targeted attention to growing crops. Future work may include building comprehensive models that cover more plant species, improving real-time (dynamic) detection, and integrating with mobile platforms for wider accessibility.

REFERENCES

Mohanty, S. P., Hughes, D. P., Salathé, M. (2016). "Using Deep Learning for Image-Based Plant Disease Detection." Frontiers in Plant Science, 7, 1419. doi: 10.3389/fpls.2016.01419.

Toda, Y., Okura, F. (2019). "How Convolutional neural networks diagnose plant disease." Plant Phenomics, 2019, 9237136.

Sladojevic, S., Arsenovic, M., Anderla, A., Culibrk, D., Stefanovic, D. (2016). "Deep neural networks based recognition of plant diseases by leaf image classification." Computational Intelligence and Neuroscience, 2016, 3289801.

Boulent, J., Foucher, S., Théau, J., St-Charles, P.-L. (2019). "Convolutional neural networks for the automatic identification of plant diseases." Frontiers in Plant Science, 10, 941.

Al-Hiary, H., Bani-Ahmad, S., Reyalat, M., Braik, M., AlRahamneh, Z. (2011). "Fast and accurate detection and classification of plant diseases." International Journal of Computer Applications, 17(1), 31-38.

Arivazhagan, S., Newlin Shebia, R. (2013). "Detection of unhealthy region of plant leaves and classification of plant leaf diseases using texture features." Agricultural Engineering International: CIGR Journal, 15(1).

Kanjalkar, H. P., Lokhande, S. (2013). "Detection and Classification of Plant Leaf Diseases using ANN." International Journal of Scientific Engineering Research, ISSN 2229-5518.

"Diseases Detection of Various Plant Leaf Using Image Processing Techniques: A Review." https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8728325

"A Review of Machine Learning Approaches in Plant Leaf Disease Detection and Classification." https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9388488

"Recent Technologies of Leaf Disease Detection using Image Processing Approach: A Review." https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8275901

Simonyan, K., Zisserman, A. (2014). "Very Deep Convolutional Networks for Large-Scale Image Recognition." International Conference on Learning Representations (ICLR), 1-14.

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., Rabinovich, A. (2015). "Going Deeper with Convolutions." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1-9. doi: 10.1109/CVPR.2015.7298594.

Jayaraman, P. P., Poonkodi, P. (2019). "IoT Based Plant Disease Detection and Diagnosis System." International Journal of Innovative Technology and Exploring Engineering (IJITEE), 8(7), 2422-2427.

Perez, L., Wang, J. (2017). "The Effectiveness of Data Augmentation in Image Classification using Deep Learning." Convolutional Neural Networks for Visual Recognition, Stanford University.

Chollet, F. (2015). "Keras: The Python Deep Learning Library." Journal of Machine Learning Research, 18(1), 1-6.

Gajendran, P. M., Chaitanya, T. V. S. N., Ananya, S. B. (2020). "Application of Deep Learning for Plant Disease Classification." IEEE Access, 8, 103984-103993. doi: 10.1109/ACCESS.2020.2995856.

Shetty, K. R., Shariff, S. A. R., Patil, A. M. (2020). "IoT-based Plant Disease Detection System Using Machine Learning." IEEE International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), pp. 16. doi: 10.1109/ICCCIS49118.2020.9194353.

Sahu, A. T. K., Roy, B. R. R., Ghosal, S. (2021). "A Deep Learning Approach for Plant Disease Detection Using Convolutional Neural Networks." IEEE Transactions on Industrial Informatics, 17(4), 2741-2748. doi: 10.1109/TII.2020.3037629.

Varma, M. V. V. R. S., Reddy, P. P. V. S. (2019). "Web-Based Plant Disease Detection Using Convolutional Neural Networks." IEEE International Conference on Advanced Networks and Telecommunications Systems (ANTS), pp. 16. doi: 10.1109/ANTS46884.2019.8966549.
