Waste Classification Using Machine Learning Models: A Comparative Study

M. S. Minu, Varshitha Priya Kasa, Harshita Ketharaman, Varun Mandepudi, Nirmal K. and Shreyan Krishnaa

Department of Computer Science Engineering, SRM Institute of Science & Technology, Ramapuram, Chennai, Tamil Nadu, India

Keywords: Waste Management, Waste Classification, Environmental Sustainability, Convolutional Neural Networks (CNN), Support Vector Machine (SVM), Image Classification.

Abstract: Waste management is a serious issue that demands the world’s attention today, as it plays an important role in maintaining environmental sustainability. Segregation of waste is one of the primary components of waste management. Accurate waste classification improves the efficiency of recycling by maximizing material recovery, reducing contamination during recycling, and, most importantly, decreasing the amount of mismanaged waste, especially hazardous waste. Proper waste management has not only had positive environmental and social impacts but has also helped nations economically. With water treatment facilities saving on operational costs and revenue being generated by reselling recycled materials, a study on automatic waste segregation is useful for tackling these challenges and moving towards a cleaner society. In this study, we compare the performance of machine learning models for classifying waste images from the TrashNet dataset. We compare a custom Convolutional Neural Network (CNN) and a Support Vector Machine (SVM) classifier trained on MobileNetV2 features against two transfer learning models: MobileNetV2 and ResNet50.

1 INTRODUCTION

Waste management is a global concern: the amount of waste generated is expected to rise by approximately 70% by 2050 due to rapid urbanization and population growth. Efficient waste segregation therefore supports sustainable recycling, minimizes reliance on landfills, and reduces greenhouse gas emissions. Conventional waste classification is labour-intensive, highly inefficient, and prone to contamination, which further degrades the quality of recycled materials. With growing awareness of environmental sustainability, effective classification and recycling of waste have become major subjects of research and development. Artificial intelligence (AI) has recently emerged as a transformative waste management technology, enabling rapid improvements in sorting accuracy and operational efficiency (R. Kumar et al., 2021). In particular, deep learning models have shown a remarkable capacity for complex visual recognition tasks and can therefore be used to classify waste from images (N. Yadav et al., 2023). Automated waste classification based on machine learning (ML) is a promising solution to these issues because it makes accurate and efficient waste sorting possible (S. Khana et al., 2021). Image-based ML classification models help optimize recycling workflows and enhance environmental sustainability. Recent studies have explored various ML architectures for waste classification. CNNs and transfer learning models have been found to provide good accuracy in image classification tasks (A. Thompson et al., 2022). Deep CNNs have also been observed to outperform other algorithms at extracting features from image data, distinguishing even closely related materials such as plastics and paper with high accuracy (J. Singh et al., 2022).

However, it remains an open question how to achieve this in practice, where variations in lighting, background, and the condition of the waste can degrade performance. Models that generalize well across a very wide range of conditions are therefore needed.

308

Minu, M. S., Kasa, V. P., Ketharaman, H., Mandepudi, V., K., N. and Krishnaa, S.

Waste Classification Using Machine Learning Models: A Comparative Study.

DOI: 10.5220/0013927500004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 5, pages

308-315

ISBN: 978-989-758-777-1

Proceedings Copyright © 2026 by SCITEPRESS – Science and Technology Publications, Lda.

Researchers have now turned to transfer learning techniques, which leverage pre-trained models to improve classification in new domains without requiring vast amounts of labeled data (C. Zhao et al., 2022). For example, M. S. Patel et al. (2020) demonstrated the promise of MobileNetV2 and ResNet50, especially for generalizing to novel data, fast image processing, and real-time applications.

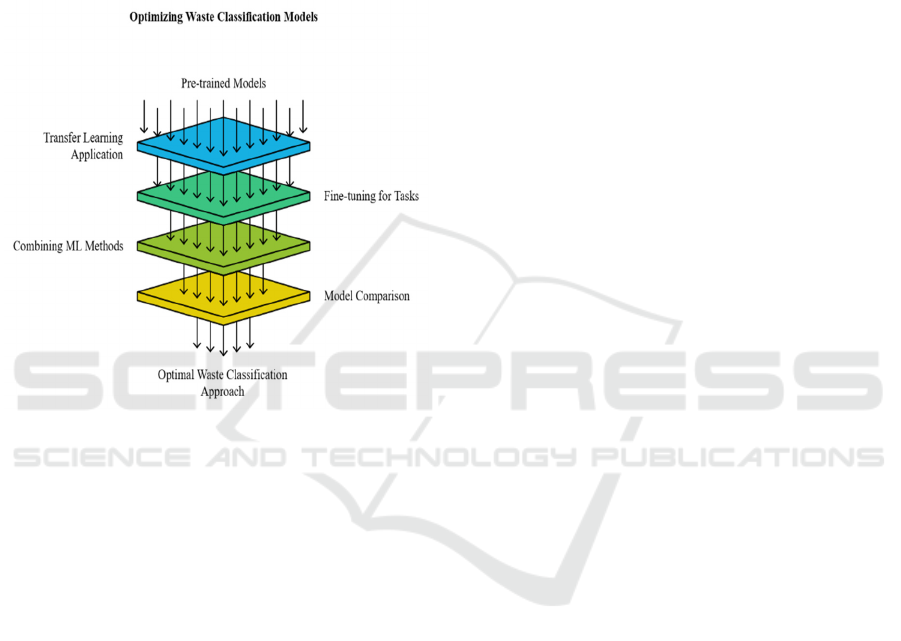

Figure 1: Optimizing Waste Classification Models.

Further, these models can be fine-tuned for waste classification so that predictions are more accurate and require less computational overhead. In addition, research has sought a good trade-off between accuracy and computational efficiency by combining traditional ML methods such as SVMs with deep learning feature extractors (B. Lee et al., 2020). This combination also enhances model interpretability and reduces processing demands for real-time applications (P. Li et al., 2023). Our study aims to identify the best approach for classifying waste samples in the TrashNet dataset by comparing the performance of CNNs, MobileNetV2, ResNet50, and an SVM with feature extraction.

2 RELATED WORKS

Artificial intelligence and machine learning have attracted great research interest for building models that classify waste correctly. Significant recent work has demonstrated the efficacy of deep learning-based image classification with methods such as CNNs. For example, Kumar and Verma reviewed AI-based approaches to waste management, highlighting CNNs because they can be highly effective on image data owing to their ability to learn sophisticated patterns. Yadav et al. compared deep learning models for classifying waste materials and concluded that architectures such as ResNet and DenseNet can achieve good accuracy, particularly in distinguishing visually similar materials. Such works highlight potential uses of CNNs in environmental applications and emphasize that correct identification of waste is critical to increasing recycling efficiency.

Transfer learning has also emerged as a very important technique in waste classification: models pre-trained on large datasets can be fine-tuned for waste-specific tasks using minimal data. Singh used transfer learning with MobileNetV2 and achieved excellent accuracy with reduced training time, making the approach suitable for real-time classification, especially in resource-constrained environments. Zhao and Chen elaborated on this with methods that apply transfer learning to improve the performance of models on waste images. They showed that fine-tuning models pre-trained on ImageNet on smaller datasets can significantly enhance accuracy for challenging, varied images of waste. Such studies demonstrate the flexibility of transfer learning in addressing data limitations and adapting high-performing models to niche applications.

Another line of research combines traditional machine learning techniques with CNNs to improve efficiency and interpretability. Li and Zhang studied combining SVMs with CNN feature extraction for waste classification, balancing computational efficiency and classification accuracy. This hybrid design exploits the CNN's ability to extract features while simplifying the classification step through an SVM. Such studies show that hybrid models can classify well without requiring large computational resources. This creates ample opportunities to implement the proposed solutions in real-world environments, which are in most cases limited in processing power.

3 PROPOSED WORK

This work presents a comparative analysis of different machine learning methods for waste classification on the TrashNet dataset. The main objective of this study is to assess the accuracy and efficiency of the different models and to identify the most suitable one for real-world deployment in automated waste management systems. The complete workflow consists of dataset preparation, feature extraction, model training, and evaluation.

3.1 Proposed Model Architecture

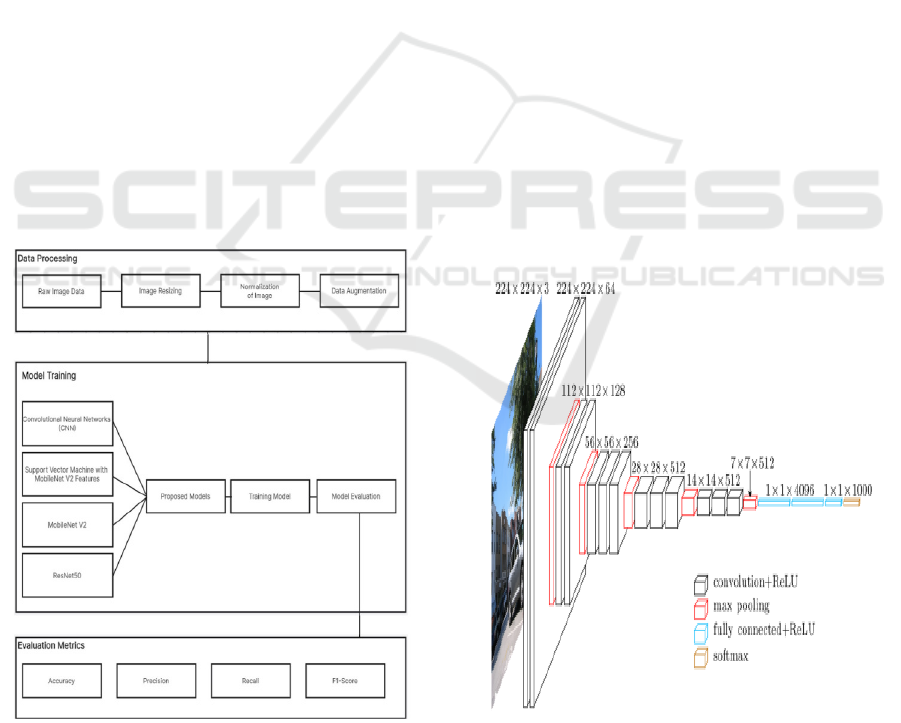

Figure 2 shows the proposed model training and evaluation pipeline.

Figure 2: Proposed Model Training and Evaluation Pipeline.

3.1.1 Support Vector Machine (SVM) Using MobileNetV2 Features

MobileNetV2, a lightweight convolutional neural network, is used as a feature extractor. High-level features from the last convolutional layer of MobileNetV2 are fed into an SVM classifier.
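The classification half of this hybrid can be sketched with scikit-learn, assuming the MobileNetV2 features have already been extracted into a NumPy array with one row per image. The per-dimension standardization, the RBF kernel, and `C=1.0` are illustrative assumptions; the paper does not report its SVM hyperparameters, and the function name `train_feature_svm` is hypothetical.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def train_feature_svm(features: np.ndarray, labels: np.ndarray,
                      C: float = 1.0, kernel: str = "rbf"):
    """Fit an SVM on pre-extracted CNN feature vectors (one row per image).

    Features are standardized per dimension before the SVM (an assumed choice).
    SVC handles the six waste classes with a one-vs-one scheme internally.
    """
    model = make_pipeline(StandardScaler(), SVC(C=C, kernel=kernel))
    model.fit(features, labels)
    return model
```

Calling `train_feature_svm(train_features, train_labels).predict(test_features)` then returns one class index per test image. Keeping the scaler and the SVM in one pipeline ensures the test features are scaled with statistics learned from the training features only.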

3.1.2 Convolutional Neural Network (CNN)

The custom CNN architecture used in this study, illustrated in Figure 3, consists of the following layers:

Convolutional Layers: Three convolutional layers with 32, 64, and 128 filters, each followed by a ReLU activation.

Pooling Layers: Max-pooling layers after each convolutional block to reduce dimensionality.

Fully Connected Layers: Two dense layers, one with 256 neurons and another with 64 neurons. Dropout is applied to prevent overfitting.

Output Layer: A softmax layer with six neurons, one for each class.

Figure 3: Architecture of a Convolutional Neural Network (CNN).


Algorithm:

Input: Preprocessed dataset $X = \{x_1, x_2, \dots, x_N\}$ and corresponding labels $Y = \{y_1, y_2, \dots, y_N\}$.

Output: Trained CNN model and predictions.

1. Initialize CNN parameters:
o Number of filters, kernel size, learning rate, batch size, and number of epochs.
2. Build the CNN architecture:
o Add convolutional layers (Conv2D) with ReLU activation.
o Add max-pooling layers (MaxPooling2D) to reduce spatial dimensions.
o Flatten the output and connect it to dense layers.
o Add softmax activation in the output layer.
3. Compile the CNN:
o Loss function = Categorical Cross-Entropy.
o Optimizer = Adam.
o Metrics = Accuracy.
4. Starting from $e = 1$, while $e \leq \mathit{MaxEpochs}$:
o Train the CNN on $(X_{train}, Y_{train})$.
o Validate the CNN on $(X_{val}, Y_{val})$.
o Update weights using backpropagation.
o $e = e + 1$.
5. Test the CNN:
o Predict labels for $X_{test}$.
o Evaluate performance using accuracy, precision, recall, and F1-score.
6. Return the trained CNN and predictions.
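A minimal Keras sketch of the custom CNN and of steps 2 to 5 is shown below. The layer widths (32/64/128 filters, 256 and 64 dense units, six softmax outputs) follow Section 3.1.2; the 3 × 3 kernels, the 0.5 dropout rate and its placement after each dense layer, and the Adam learning rate of 1e-3 (the Keras default) are assumptions, since the text does not specify them. Labels are expected one-hot encoded, as categorical cross-entropy requires, and the helper names are hypothetical.

```python
import numpy as np
import tensorflow as tf

NUM_CLASSES = 6  # cardboard, glass, metal, paper, plastic, trash


def build_cnn(input_shape=(224, 224, 3), num_classes=NUM_CLASSES, dropout_rate=0.5):
    """Custom CNN: 3 conv blocks (32/64/128 filters), dense 256/64, softmax output."""
    layers = tf.keras.layers
    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        # Each convolutional block: 3x3 conv with ReLU, then 2x2 max pooling
        layers.Conv2D(32, 3, activation="relu"),
        layers.MaxPooling2D(),
        layers.Conv2D(64, 3, activation="relu"),
        layers.MaxPooling2D(),
        layers.Conv2D(128, 3, activation="relu"),
        layers.MaxPooling2D(),
        layers.Flatten(),
        # Fully connected head; dropout (assumed rate) against overfitting
        layers.Dense(256, activation="relu"),
        layers.Dropout(dropout_rate),
        layers.Dense(64, activation="relu"),
        layers.Dropout(dropout_rate),
        layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model


def train_cnn(model, x_train, y_train, x_val, y_val, max_epochs=20, batch_size=32):
    """Step 4: fit() runs backpropagation and validates once per epoch."""
    return model.fit(
        x_train, y_train,
        validation_data=(x_val, y_val),
        epochs=max_epochs, batch_size=batch_size, verbose=0,
    )


def predict_labels(model, x):
    """Step 5: the predicted class is the argmax of the softmax output."""
    return np.argmax(model.predict(x, verbose=0), axis=1)
```

At the full 224 × 224 input, three unpadded 3 × 3 convolutions and 2 × 2 poolings leave a 26 × 26 × 128 feature map, so under these assumptions the first dense layer alone holds about 22.2 million of the roughly 22.3 million parameters.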

3.1.3 Transfer Learning Models for Waste Classification

MobileNetV2:

Pre-trained on ImageNet and fine-tuned on the TrashNet dataset.

Lightweight architecture designed for edge deployment.

Depthwise separable convolutions reduce computation while retaining accuracy.
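The saving from depthwise separable convolutions can be checked with simple arithmetic. For a $D_K \times D_K$ kernel, $M$ input channels, $N$ output channels, and a $D_F \times D_F$ output map, a standard convolution costs $D_K^2 M N D_F^2$ multiply-adds, while a depthwise convolution followed by a 1 × 1 pointwise convolution costs $D_K^2 M D_F^2 + M N D_F^2$, a ratio of $1/N + 1/D_K^2$. The sketch below counts a single layer only; MobileNetV2 wraps these convolutions in inverted residual blocks with expansion layers, so whole-network savings differ, and the example layer sizes are arbitrary.

```python
def standard_conv_cost(dk: int, m: int, n: int, df: int) -> int:
    """Multiply-adds of a dk x dk convolution mapping m -> n channels on a df x df map."""
    return dk * dk * m * n * df * df


def depthwise_separable_cost(dk: int, m: int, n: int, df: int) -> int:
    """Depthwise dk x dk conv (one filter per input channel) plus a 1x1 pointwise conv."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


if __name__ == "__main__":
    # Arbitrary example layer: 3x3 kernel, 32 -> 64 channels, 112 x 112 output map
    std = standard_conv_cost(3, 32, 64, 112)
    sep = depthwise_separable_cost(3, 32, 64, 112)
    print(f"standard: {std:,}  separable: {sep:,}  ratio: {sep / std:.3f}")
```

For this example layer the separable version needs roughly 7.9 times fewer multiply-adds, matching $1/64 + 1/9$.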

ResNet50:

A 50-layer deep residual network, pre-trained on ImageNet, that learns high-quality abstract features.

Skip connections mitigate the vanishing gradient problem.

Fine-tuned on the TrashNet dataset to adapt it to the waste classes.
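The idea behind skip connections fits in a few lines of NumPy. A residual block outputs $\mathrm{ReLU}(F(x) + x)$ rather than $\mathrm{ReLU}(F(x))$, so the input always has a direct path to the output, and during backpropagation the identity term lets gradients reach earlier layers even when the gradient through $F$ is tiny. This is a simplified sketch: here $F$ is two dense layers, whereas ResNet50's bottleneck blocks use 1 × 1, 3 × 3, and 1 × 1 convolutions with batch normalization.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """y = ReLU(F(x) + x) with residual branch F(x) = w2 @ ReLU(w1 @ x).

    w1 has shape (hidden, d) and w2 has shape (d, hidden), so F(x) has the
    same shape as x and the identity shortcut can be added directly.
    """
    residual = w2 @ relu(w1 @ x)
    return relu(residual + x)  # skip connection: add the input back


if __name__ == "__main__":
    x = np.array([0.5, 1.0, 2.0])
    # With an all-zero residual branch, the block passes a non-negative x through unchanged
    print(residual_block(x, np.zeros((4, 3)), np.zeros((3, 4))))
```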

Algorithm:

Input: Preprocessed dataset $X = \{x_1, x_2, \dots, x_N\}$ and corresponding labels $Y = \{y_1, y_2, \dots, y_N\}$.

Output: Fine-tuned ResNet50 model and predictions.

1. Load ResNet50 pre-trained on ImageNet.
a. Retain the convolutional base.
b. Replace the classification head with:
i. GlobalAveragePooling2D.
ii. Dense layer (ReLU activation).
iii. Output layer (Softmax activation).
2. Freeze the convolutional base.
3. Compile the model:
a. Loss function = Categorical Cross-Entropy.
b. Optimizer = Adam (learning rate = $10^{-4}$).
c. Metrics = Accuracy.
4. Starting from $e = 1$, while $e \leq \mathit{MaxEpochs}$:
a. Train on $(X_{train}, Y_{train})$.
b. Validate on $(X_{val}, Y_{val})$.
c. Gradually unfreeze layers for fine-tuning.
d. $e = e + 1$.
5. Test the model:
a. Predict labels for $X_{test}$.
b. Evaluate performance using accuracy, precision, recall, and F1-score.
6. Return the fine-tuned ResNet50 model and predictions.
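Steps 1 to 4 map fairly directly onto Keras, as in the minimal sketch below. The head follows the algorithm (global average pooling, a ReLU dense layer, a softmax output) and the first phase uses Adam with a learning rate of $10^{-4}$; the 256-unit dense layer, the number of layers unfrozen, and the lower $10^{-5}$ fine-tuning learning rate are assumptions, and the helper names are hypothetical. Inputs are expected to have been passed through `tf.keras.applications.resnet50.preprocess_input` beforehand, and setting `weights=None` builds the network without downloading the ImageNet weights.

```python
import tensorflow as tf

NUM_CLASSES = 6


def build_resnet50_classifier(num_classes=NUM_CLASSES, input_shape=(224, 224, 3),
                              dense_units=256, weights="imagenet"):
    """Steps 1-3: pre-trained ResNet50 base, new head, frozen base, compiled model."""
    base = tf.keras.applications.ResNet50(
        include_top=False, weights=weights, input_shape=input_shape)
    base.trainable = False  # step 2: freeze the convolutional base

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)  # keep BatchNorm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dense(dense_units, activation="relu")(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)

    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),
                  loss="categorical_crossentropy", metrics=["accuracy"])
    return model, base


def unfreeze_top_layers(model, base, n_layers, learning_rate=1e-5):
    """Fine-tuning step: make only the last n_layers of the base trainable."""
    base.trainable = True
    for layer in base.layers[:-n_layers]:
        layer.trainable = False
    # Recompile so the changed trainable flags are picked up by training
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
                  loss="categorical_crossentropy", metrics=["accuracy"])
    return model
```

In a two-phase schedule one would first call `model.fit(...)` with the base frozen, then call `unfreeze_top_layers(model, base, n_layers=...)` and continue training; repeating the call with a larger `n_layers` approximates the gradual unfreezing of step 4.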

3.2 Evaluation Metrics

The models are evaluated using the following metrics:

Accuracy: the percentage of correctly classified images.

Precision: the proportion of positive predictions that are true positives.

Recall: the proportion of actual positives that are correctly identified as positive.

F1-Score: the harmonic mean of precision and recall.

Confusion Matrix: a matrix that summarizes, for each true class, how many images were assigned to each predicted class, showing where misclassifications occur.
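All of these metrics can be computed per class from a confusion matrix, as in the NumPy sketch below. The convention that rows are true classes and columns are predicted classes is an assumption (it matches scikit-learn's `confusion_matrix`). F1 is computed as the harmonic mean of precision and recall, which equals $2TP / (2TP + FP + FN)$.

```python
import numpy as np


def metrics_from_confusion(cm):
    """Accuracy plus per-class precision, recall and F1 from a confusion matrix.

    cm[i, j] = number of images of true class i predicted as class j.
    Classes with no predictions (or no samples) get 0 instead of dividing by zero.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)  # column sums: everything predicted as each class
    actual = cm.sum(axis=1)     # row sums: everything that truly is each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision, recall, f1
```

For a two-class matrix `[[8, 2], [1, 9]]`, for instance, accuracy is 17/20 and the F1-score of the first class is 16/19.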

3.3 Hardware and Software Specifications

Hardware:

GPU: NVIDIA RTX 3060 (or equivalent).

RAM: 16 GB.

Processor: Intel Core i7.

Software:

Python 3.10.

TensorFlow 2.9.

Scikit-learn.

OpenCV for image preprocessing.

3.4 Modules

3.4.1 Dataset Module

Tasks: Load the TrashNet dataset. Organize the images into six categories: cardboard, glass, metal, paper, plastic, and trash.

Description: This module handles procuring, storing, and organizing the dataset. It ensures that the input data is ready for preprocessing.

Input: Raw images of waste.

Output: Dataset labeled by category.
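A minimal sketch of the labelling step is shown below. It assumes the images are stored with one sub-folder per class (root/class_name/file), which is a common layout for TrashNet copies; the folder names, the accepted file extensions, and the helper names `label_samples` and `index_directory` are illustrative assumptions rather than part of the original pipeline.

```python
from pathlib import Path

# Assumed class folder names, in the label order used throughout
CLASSES = ("cardboard", "glass", "metal", "paper", "plastic", "trash")
CLASS_TO_INDEX = {name: i for i, name in enumerate(CLASSES)}
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def label_samples(paths):
    """Map image paths laid out as root/class_name/file to sorted (path, label) pairs."""
    samples = []
    for path in map(Path, paths):
        if path.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip non-image files
        label = CLASS_TO_INDEX.get(path.parent.name)
        if label is None:
            continue  # skip folders that are not one of the six classes
        samples.append((path, label))
    return sorted(samples)


def index_directory(root):
    """Collect and label every image file found under root (recursively)."""
    return label_samples(p for p in Path(root).rglob("*") if p.is_file())
```

Separating the path-to-label mapping from the directory walk keeps the labelling rule testable without touching the file system.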

3.4.2 Preprocessing Module

Tasks: Resize all images to a standard size of 224 × 224 pixels. Normalize pixel values to the range 0 to 1. Apply data augmentation techniques (for example, flipping, rotation, and zooming).

Description: This module formats the raw images and enriches the dataset with diverse variations for robust training.

Input: Raw images from the dataset.

Output: Preprocessed and augmented dataset images.
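One way to express these steps with Keras preprocessing layers is sketched below; the paper lists OpenCV for preprocessing, so this swaps in TensorFlow layers for brevity. The flip modes, the rotation factor of 0.1 (a fraction of a full turn, i.e. up to ±36°), and the zoom factor of 0.1 are assumed values, since the text only names the augmentation types.

```python
import numpy as np
import tensorflow as tf

IMG_SIZE = 224

# Applied to every image: resize to 224 x 224, then scale pixels from [0, 255] to [0, 1]
preprocess = tf.keras.Sequential([
    tf.keras.layers.Resizing(IMG_SIZE, IMG_SIZE),
    tf.keras.layers.Rescaling(1.0 / 255),
])

# Applied to training images only; these layers are active only when training=True
augment = tf.keras.Sequential([
    tf.keras.layers.RandomFlip("horizontal_and_vertical"),
    tf.keras.layers.RandomRotation(0.1),  # up to 0.1 of a full turn (36 degrees) either way
    tf.keras.layers.RandomZoom(0.1),      # zoom in or out by up to 10%
])

if __name__ == "__main__":
    raw_batch = np.random.randint(0, 256, size=(4, 300, 400, 3)).astype("float32")
    train_batch = augment(preprocess(raw_batch), training=True)
    test_batch = preprocess(raw_batch)  # no augmentation at evaluation time
    print(train_batch.shape, test_batch.shape)
```

Keeping augmentation in a separate model makes it easy to apply only to the training split while validation and test images go through the deterministic resizing and rescaling alone.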

3.4.3 Feature Extraction Module

Tasks: Extract high-level features with MobileNetV2 for SVM classification. Remove the classification head and retrieve feature vectors from the penultimate layer.

Description: This module extracts useful features from images using a pre-trained deep learning model (MobileNetV2) so that the resulting feature vectors can be classified efficiently by traditional classifiers such as SVM.

Input: Preprocessed dataset.

Output: Feature vectors extracted from the dataset.
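The feature extraction described above could look like the following Keras sketch. With `include_top=False` and `pooling="avg"`, MobileNetV2 returns the globally averaged output of its last convolutional block, a 1280-dimensional vector per image at the default width multiplier. The sketch assumes raw RGB input in the 0 to 255 range, which `preprocess_input` rescales to the [-1, 1] range the ImageNet weights expect; images already normalized to [0, 1] would first need to be multiplied by 255. The helper names are hypothetical.

```python
import numpy as np
import tensorflow as tf


def build_feature_extractor(input_shape=(224, 224, 3), weights="imagenet"):
    """MobileNetV2 without its classification head, globally average-pooled.

    Each image is mapped to one 1280-dimensional feature vector.
    """
    return tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights, pooling="avg")


def extract_features(extractor, images, batch_size=32):
    """images: raw RGB pixels in [0, 255], shape (n, height, width, 3)."""
    # np.array copies, so preprocess_input cannot modify the caller's array in place
    x = tf.keras.applications.mobilenet_v2.preprocess_input(np.array(images, dtype="float32"))
    return extractor.predict(x, batch_size=batch_size, verbose=0)
```

The returned array has one row per image and can be passed, together with the labels, to a classifier such as scikit-learn's `SVC`.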

3.4.4 Model Training Module

Submodules:

Custom CNN: Build a Convolutional Neural Network from scratch.

MobileNetV2 and ResNet50: Adapt the pre-trained models for transfer learning by adding a dense output layer for the six categories.

Description: This module trains the different machine learning models on the prepared data to classify images into the waste categories.

Input: Preprocessed dataset and extracted features.

Output: Trained models (CNN, SVM, MobileNetV2, ResNet50).


3.4.5 Evaluation Module

Tasks: Partition the dataset into a training set (80%) and a testing set (20%). Evaluate the models using accuracy (the proportion of correctly classified cases), precision (true positives over all predicted positives), recall (true positives over all actual positives), F1-score, and the confusion matrix. Plot the training/validation accuracy and loss curves.

Description: This module diagnoses the performance of every model, revealing its strengths and weaknesses through visual analysis of the results.

Input: Trained models and the test dataset.

Output: Performance metrics, evaluation reports, and visualizations.
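The 80/20 partition can be done with scikit-learn's `train_test_split`. Stratifying on the labels, which the text does not mention and is an assumption here, keeps the class proportions (including the small Trash class) the same in both subsets; the fixed `random_state` only makes the split reproducible, and `split_80_20` is a hypothetical helper name.

```python
from sklearn.model_selection import train_test_split


def split_80_20(samples, labels, seed=42):
    """Stratified split: 80% training, 20% testing, same class mix in both.

    Returns (x_train, x_test, y_train, y_test).
    """
    return train_test_split(samples, labels, test_size=0.2,
                            stratify=labels, random_state=seed)
```

With 50, 30, and 20 samples in three classes, for example, the test split receives exactly 10, 6, and 4 of them.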

3.4.6 Comparison Module

Tasks: Compare CNN, SVM, MobileNetV2, and ResNet50 according to precision (true positives over all predicted positives), training and inference time, and how informative their outputs remain when they misclassify an object. Determine which model performs best overall.

Description: This module identifies the deployable model that yields the best accuracy relative to all other models while also accounting for efficiency.

Input: Evaluation reports and images.

Output: Comparative analysis report.
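Per-image inference times for such a comparison can be measured with a small timing helper like the one below. Here `predict_fn` stands for any model's batch prediction call (for example a Keras model's `predict` or an SVM pipeline's `predict`); the warm-up run and the median over repeats are assumed choices that keep one-off start-up costs out of the figure, and the helper name is hypothetical.

```python
import time
from statistics import median


def seconds_per_image(predict_fn, batch, repeats=5, warmup=1):
    """Median wall-clock inference time per image for predict_fn(batch).

    Warm-up calls are excluded so one-off costs (model loading, graph tracing)
    do not inflate the measurement.
    """
    n_images = len(batch)
    for _ in range(warmup):
        predict_fn(batch)
    per_image = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict_fn(batch)
        per_image.append((time.perf_counter() - start) / n_images)
    return median(per_image)
```

Measured values depend strongly on the hardware and batch size, so models should be timed on the same machine with the same batch to be comparable.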

4 RESULTS AND DISCUSSIONS

This section reviews the machine learning models proposed for waste classification on the TrashNet dataset. The results are analyzed using model performance metrics, confusion matrices, computational efficiency, and practical implications. The section also discusses the challenges faced, possible areas of improvement, and real-world implications.

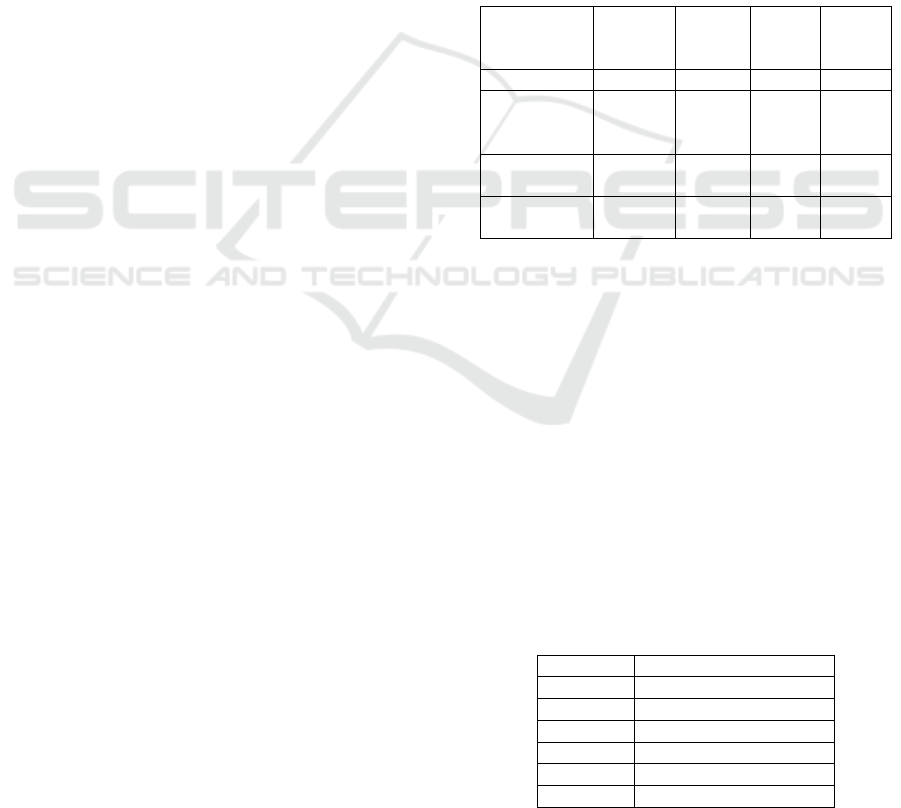

4.1 Performance Metrics

The performance metrics measured for the four models (CNN, SVM with MobileNetV2 features, MobileNetV2, and ResNet50) are summarized in Table 1. Accuracy, precision, recall, and F1-score were calculated for each model.

4.1.1 Analysis of Precision

Precision is the ratio of true positive instances to the total number of instances predicted as positive. Table 1 shows the performance comparison of the different models. In terms of precision, ResNet50 is the best classifier across all classes, particularly for detecting the Glass and Metal classes. Because of the feature overlap between Trash and Plastic, Plastic has relatively low precision.

Precision for Cardboard: 91.43%

Precision for Glass: 94.29%

Table 1: Performance Comparison of Different Models.

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| CNN | 85.34 | 83.12 | 84.45 | 83.78 |
| SVM with MobileNetV2 | 88.90 | 87.15 | 88.30 | 87.72 |
| MobileNetV2 (TL) | 91.67 | 90.20 | 91.00 | 90.59 |
| ResNet50 (TL) | 93.45 | 92.10 | 92.80 | 92.44 |
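As a quick consistency check, each model's F1-score can be recomputed from the precision and recall in Table 1 with $F_1 = 2PR/(P + R)$. The sketch below does this arithmetic; the results agree with the reported F1 column to within about 0.01 percentage points, a gap that can arise from rounding or from averaging F1 over classes instead of computing it from aggregate precision and recall.

```python
# Precision and recall (%) for each model, copied from Table 1
TABLE_1 = {
    "CNN": (83.12, 84.45),
    "SVM with MobileNetV2": (87.15, 88.30),
    "MobileNetV2 (TL)": (90.20, 91.00),
    "ResNet50 (TL)": (92.10, 92.80),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    for model, (p, r) in TABLE_1.items():
        print(f"{model}: F1 = {f1_score(p, r):.2f}%")
```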

4.1.2 Recall Analysis

Recall measures how many of the actual positive instances are correctly identified. Models such as ResNet50 and MobileNetV2 again achieve high recall values, confirming that they produce few false negatives. However, all models show a small drop in recall for the Trash class, because it has far fewer samples than the other classes.

Recall for Trash (ResNet50): 83.33%, the lowest of all classes.

4.1.3 F1-Score Analysis

Table 2: Area Under the ROC Curve (AUC) Scores for Each Class.

| Class | AUC Score |
|---|---|
| Cardboard | 0.95 |
| Glass | 0.97 |
| Metal | 0.96 |
| Paper | 0.96 |
| Plastic | 0.94 |
| Trash | 0.91 |


F1-score is the harmonic mean of precision and recall and is useful for evaluating models on highly imbalanced datasets. Tables 2 and 3 show that ResNet50 performs strongly on Glass and Metal, which could be explained by the more distinctive texture and color features of these materials.

Table 3: ResNet50 F1-Scores Per Class.

| Class | ResNet50 F1-Score (%) |
|---|---|
| Cardboard | 91.86 |
| Glass | 94.72 |
| Metal | 92.97 |
| Paper | 95.22 |
| Plastic | 90.00 |
| Trash | 84.75 |

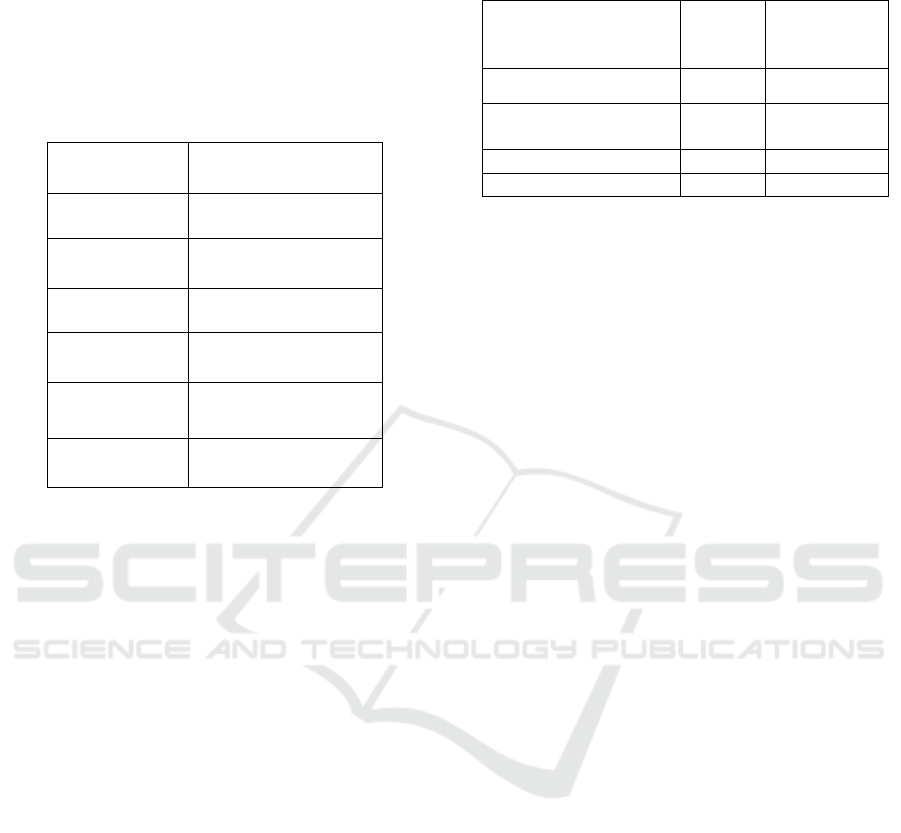

4.2 Confusion Matrix Analysis

The confusion matrix for ResNet50 (the best-performing model) is displayed in Figure 1.

Patterns of Misclassification:

Paper and Cardboard: The greatest confusion, owing to their similar texture and appearance, particularly for recycled materials.

Plastic vs. Trash: The high intra-class variability of plastic waste made it difficult to distinguish from trash.
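Misclassification patterns like these can be read off a confusion matrix programmatically by ranking its off-diagonal cells. The NumPy sketch below assumes rows are true classes and columns are predicted classes; the helper name is hypothetical.

```python
import numpy as np


def top_confusions(cm, class_names, k=3):
    """Return up to k (true class, predicted class, count) triples, largest count first.

    cm[i, j] counts images of true class i predicted as class j; the diagonal
    (correct predictions) is ignored and zero-count cells are skipped.
    """
    off_diag = np.array(cm, dtype=int)  # copy so the caller's matrix is untouched
    np.fill_diagonal(off_diag, 0)
    order = np.argsort(off_diag, axis=None)[::-1][:k]  # flat indices, descending
    rows, cols = np.unravel_index(order, off_diag.shape)
    return [
        (class_names[i], class_names[j], int(off_diag[i, j]))
        for i, j in zip(rows, cols)
        if off_diag[i, j] > 0
    ]
```

Calling `top_confusions(cm, class_names, k=2)` on a six-class matrix returns the two most frequent (true class, predicted class) error pairs with their counts.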

4.3 Analysis of ROC-AUC and Precision-Recall

The average area under the Receiver Operating Characteristic (ROC) curve for ResNet50 was 0.96, indicating strong discrimination ability. The Precision-Recall (PR) curve also showed that the model maintains good precision together with high recall across different thresholds.
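Per-class AUC values such as those in Table 2 are usually computed one-vs-rest: each class is scored against all others using the model's predicted probability for that class. A scikit-learn sketch follows; `proba` is assumed to be the softmax output (one row per image, summing to 1), and the macro average is the unweighted mean of the per-class values. The helper names are hypothetical.

```python
import numpy as np
from sklearn.metrics import roc_auc_score


def per_class_auc(y_true, proba):
    """One-vs-rest ROC AUC for each class: class c vs. all other classes."""
    y_true = np.asarray(y_true)
    proba = np.asarray(proba)
    return [roc_auc_score(y_true == c, proba[:, c]) for c in range(proba.shape[1])]


def macro_auc(y_true, proba):
    """Unweighted mean of the one-vs-rest AUCs (rows of proba must sum to 1)."""
    return roc_auc_score(y_true, proba, multi_class="ovr", average="macro")
```

Whether a reported "average AUC" is a macro average like this or a micro average over all samples affects the number, so the averaging method is worth stating alongside the result.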

4.4 Computational Efficiency

Table 4 shows the training and inference time comparison of the different models.

Table 4: Training and Inference Time Comparison of Different Models.

| Model | Training Time (min) | Inference Time (sec/image) |
|---|---|---|
| CNN | 45 | 0.03 |
| SVM with MobileNetV2 | 30 | 0.01 |
| MobileNetV2 (TL) | 20 | 0.02 |
| ResNet50 (TL) | 25 | 0.03 |

5 DISCUSSIONS

MobileNetV2 had the shortest training time of all the models and fast inference, making it well suited for deployment on resource-constrained devices. ResNet50 is slightly slower but offers a worthwhile trade-off between computational cost and accuracy.

Challenges and limitations:

Class Imbalance: The Trash class had relatively few samples, which led to more misclassified instances in that class. Balancing the dataset could improve the results.

Intra-Class Variability: Plastic items varied in shape, size, and manufacture, which made them quite difficult to classify.

Dataset Size: TrashNet is a very good benchmark, but it still does not represent the full variety of waste found in the real world.
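One common way to act on class imbalance, sketched below as an option rather than something the study did, is to weight the loss inversely to class frequency. The formula $w_c = n / (k \cdot n_c)$, with $n$ samples, $k$ classes, and $n_c$ samples in class $c$, is the one scikit-learn uses for `class_weight="balanced"`, and the resulting dictionary can be passed to Keras through the `class_weight` argument of `model.fit`. The helper name is hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Map each class to n_samples / (n_classes * n_samples_in_class).

    Rare classes (e.g. Trash) get weights above 1 and common classes get
    weights below 1, so each class contributes equally to the weighted loss.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * counts[c]) for c in sorted(counts)}
```

For labels with six samples of class 0 and two of class 1, this gives weights of 2/3 and 2.0, so both classes contribute a total weight of 4.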

Practical Implications: These results make a case for applying machine learning models to real-world waste segregation.

Transfer Learning Models: MobileNetV2 and ResNet50 are highly viable for industrial applications given their accuracy and efficiency.

Sustainability: Automated waste separation greatly improves recycling processes, lowers contamination in recycling, and improves environmental sustainability.

Edge Deployment: Lightweight models such as MobileNetV2 can readily be deployed on edge devices, enabling real-time classification of waste in smart bins.

The literature suggests several possible directions for future research:

Ensemble Methods: Combining multiple models may exploit their complementary strengths and further improve classification accuracy.

Larger Datasets: A larger dataset covering more waste categories, with extensive examples of each, should significantly improve robustness.

Explainable AI: Visualizing the important features could give insight into model bias, thereby increasing trust in the model and its interpretability.
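As an illustration of the ensemble direction (not something evaluated in this study), a simple soft-voting ensemble averages the class probabilities predicted by several models and picks the most probable class. The optional per-model weights in the sketch are hypothetical tuning knobs, and `soft_vote` is a hypothetical helper name.

```python
import numpy as np


def soft_vote(prob_list, weights=None):
    """Average class-probability matrices from several models and take the argmax.

    prob_list: sequence of (n_samples, n_classes) arrays, one per model.
    weights: optional per-model weights (uniform if None).
    Returns (predicted labels, averaged probabilities).
    """
    probs = np.stack([np.asarray(p, dtype=float) for p in prob_list])  # (models, samples, classes)
    avg = np.average(probs, axis=0, weights=weights)
    return avg.argmax(axis=1), avg
```

Weighting lets a stronger model such as ResNet50 dominate while a faster model still contributes on samples where it is confident.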


6 CONCLUSIONS

This research performed a comparative study of machine learning models for waste classification on the TrashNet dataset, aiming to determine the most efficient method for automating waste separation. The models tested were a custom convolutional neural network (CNN), a support vector machine (SVM) with features extracted from MobileNetV2, and two transfer learning models: MobileNetV2 and ResNet50. The analysis revealed the following major findings. Among the models considered, ResNet50 performed best, with 93.45% accuracy and higher precision, recall, and F1-scores across all six waste types. Its deep architecture and pre-trained weights allowed ResNet50 to learn features from the problem domain very effectively, which makes the network highly suitable for waste classification tasks. MobileNetV2 achieved a slightly lower accuracy of 91.67%; however, it proved lightweight and computationally efficient, offering faster training and inference, which makes it ideal for deployment in resource-constrained environments such as smart bins or IoT devices. The SVM with MobileNetV2 features performed well (88.90% accuracy), surpassing the CNN trained from scratch, which underlines the importance of strong feature extraction for enhancing traditional classifiers. The CNN model reached an acceptable accuracy of 85.34%, but it was weaker than the transfer learning approaches owing to its limited depth and lack of pre-trained feature representations.

REFERENCES

A. Mazloumian, M. Rosenthal, and H. Gelke, "Deep

Learning for Classifying Food Waste," arXiv, 2020.

A. Thompson et al., "Image-Based Waste Sorting Using

Convolutional Neural Networks," IEEE Transactions

on Environmental Engineering, 2022.

B. Lee et al., "Integrating SVM with CNN Features for

Enhanced Waste Classification," Machine Vision

Applications, 2020.

C. Zhao et al., "Transfer Learning in Environmental Data:

Applications in Waste Sorting," Sustainable

Computing, 2022.

D. Gyawali et al., "Comparative Analysis of Multiple Deep

CNN Models for Waste Classification," arXiv, 2020.

D. K. Sharma, U. Bharti, and T. Pandey, "Deep Learning

Approaches for Automated Waste Classification and

Sorting," International Journal for Research in Applied

Science and Engineering Technology, 2024.

J. Singh et al., "Convolutional Neural Networks for Image-

Based Waste Classification," Journal of AI in

Environmental Engineering, 2022.

J. Shah and S. Kamat, "A Method for Waste Segregation

using Convolutional Neural Networks," arXiv, 2022.

K. Srivatsan, S. Dhiman, and A. Jain, "Waste Classification

using Transfer Learning with Convolutional Neural

Networks," IOP Conference Series: Earth and

Environmental Science, 2021.

M. S. Patel et al., "Transfer Learning for Sustainable Waste

Classification: A Case Study Using MobileNetV2,"

Journal of Sustainable AI Research, 2020.

M. S. Nafiz et al., "ConvoWaste: An Automatic Waste

Segregation Machine Using Deep Learning," arXiv,

2023.

N. Yadav et al., "Waste Image Classification Using Deep

Learning: A Comparative Study," Computational

Ecology and Environmental Science, 2023.

P. Li et al., "Enhancing Waste Classification with SVM-

CNN Hybrid Models," International Journal of

Environmental AI Research, 2023.

R. Kumar et al., "Artificial Intelligence in Waste

Management: Current Trends and Future Directions,"

Environmental AI Applications, 2021.

S. Khana et al., "Automated Waste Classification with Deep

Learning: Enhancing Recycling Efficiency," Journal of

Environmental Science & Technology, 2021.

S. Kunwar, B. R. Owabumoye, and A. S. Alade, "Plastic

Waste Classification Using Deep Learning: Insights

from the WaDaBa Dataset," arXiv, 2024.

World Bank, "What a Waste 2.0: A Global Snapshot of

Solid Waste Management to 2050," World Bank

Group, 2019.

Y. Narayan, "DeepWaste: Applying Deep Learning to

Waste Classification for a Sustainable Planet," arXiv,

2021.

Z. Yuan and J. Liu, "A Hybrid Deep Learning Model for

Trash Classification Based on Deep Transfer

Learning," Journal of Electrical and Computer

Engineering, 2022.

Z. Qiao, "Advancing Recycling Efficiency: A Comparative

Analysis of Deep Learning Models in Waste

Classification," arXiv, 2024.
