User‑Driven RAG System with LlamaIndex Multi‑Agent

Architectures and Qdrant

M. Devika, Shatakshi R., Madhuvanthi S. and Tarunya V. V.

Department of Computer Science and Engineering, SRM Institute of Science and Technology, Ramapuram, Chennai, Tamil

Nadu, India

Keywords: Retrieval‑Augmented Generation (RAG), User Driven Customization, LlamaIndex Multi‑Agent Architecture,

Qdrant Vector Search, Chunking Strategies, Embedding Models, Semantic Search, Hybrid Search, Reranking

Algorithms, Query Optimization.

Abstract: Retrieval-Augmented Generation (RAG) systems have achieved substantial success in the field of information

retrieval and text generation by augmenting state-of-the-art large language models (LLMs) with external

knowledge. Nevertheless, current RAG architectures fall short on three main fronts: user adaptability, as they

need a lot of code changes to modify important retrieval settings (for example: chunking methods, embedding

models, and reranking methods); We present a user-controlled RAG system powered by the multi-agent

architecture of LlamaIndex and the vector search capabilities of Qdrant for real-time customized RAG. To

increase system adaptability, we propose a new Adaptive Retrieval Feedback Loop (ARFL) where users are

able to iteratively adjust queries given the confidence and relevance of generated responses. The ARFL system

can automatically modify retrieval parameters according to user feedback or retrieval outputs with low

confidence scores while limiting the amount of time to query again which causes more manual effort with

possibly higher retrieval precision. Our system enables users to change retrieval configurations easily with its

conversational interface, without the requirement of going into any codebase. This advances the frontiers of

user-centric generative models by componentizing the RAG systems using pipeline-based architectures in

order to make RAG systems more intuitive, flexible and user preference-centric.

1 INTRODUCTION

Retrieval-augmented generation (RAG) systems L.

Lewis, et al., 2023 combine retrieval and generation

and have been a game-changer in information

retrieval and text generation by integrating external

knowledge with large language models (LLMs) A.

Gupta and R. Patel. 2022 Models like these allow for

more accurate and contextually relevant natural

language processing (NLP) tasks, including state-of-

the-art QA, summarization, and decision support

systems. M. Zhang, 2021 RAG (retrieval-augmented

generation) systems enhance generative models by

injecting enriched contextual knowledge by

retrieving relevant documents or fragments from

structured and unstructured databases. R. Kim and S.

Lee., 2022 However, current RAG implementations

are mostly inflexible in nature, needing several

tedious and nontrivial manual adjustments to

maximize performance on the target domain/use case.

Such a restriction hinders their performance in

flexible scenariosing where retrieval mechanisms

must align with the requirements of a user.

One of the main limitations of existing RAG

systems is their dependence on static retrieval

pipelines. In general, J. Thompson, 2023., they are

based on a fixed search strategy, chunking

mechanism, and reranking strategy, making it

difficult for non-expert users to customize. H.

Singh,2022., The user-unfriendly nature of this

approach leads to issues in dynamically changing the

retrieval parameters as we need to go into the source

file and do code debugging and/or re-configuration at

a very big scale. E. Williams, 2023 Also, a lot of

existing RAG systems use either a single retrieval

strategy, be it semantic search or a keyword-based

one which may not be optimal for all datasets and

query types. Therefore, the need for a more adaptable

and interactive RAG model, enabling users to change

the core extraction and retrieval components with

limited technical exposure, is dire.

754

Devika, M., R., S., S., M. and V., T. V.

User-Driven RAG System with LlamaIndex Multi-Agent Architectures and Qdrant.

DOI: 10.5220/0013920300004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 4, pages

754-761

ISBN: 978-989-758-777-1

Proceedings Copyright © 2026 by SCITEPRESS – Science and Technology Publications, Lda.

T. Nakamura, 2021., This paper proposes a novel

user-driven RAG system that combines the multi-

agent architecture of LlamaIndex with the vector

search capabilities of Qdrant to overcome these

challenges. In contrast to traditional RAG systems,

our system features a modular multi-agent

architecture that allows users to modify key

components of the retrieval pipeline on the fly. Users

can dynamically change chunking strategies,

embedding models, searching mechanisms (semantic,

keyword-based, or hybrid), and reranking algorithms.

B. Fernandez, 2023., Because changes can be made

by the users through a conversation interface, it

enables non-expert users to improve the system

without changing the system code.

We introduce an Adaptive Retrieval Feedback

Loop (ARFL) that will improve system adaptivity.

Built on top of the standard T-RFL API, the ARFL

system uses real-time feedback from users to adjust

retrieval parameters depending on the performance of

the model, while also regulating the confidence level

of the outputted messages. This capability allows the

system to modify its behavior based on the relevance

and quality of information it retrieves, leading to a

more accurate and efficient retrieval process. ARFL

integration with our system reduces the repeated

manual entry of queries, increases retrieval accuracy,

and guarantees that users can interactively adjust the

system’s behavior in response, providing it with the

adaptability to changing needs.

D. Martinez, 2022 As a multi-agent system, the

components of the system can specialize on certain

types of tasks respectively (e.g. document retrieval,

ranking, response generation) and contribute to an

overall flexible pipeline. This can also achieve more

modular components and also higher efficiencies in

query/response relevance. We utilize the high-

performance vector search engine Qdrant to provide

scalable and efficient nearest-neighbor search,

further enhancing the ability of the retrieval module.

A. Green, 2023., Hence, employing ANN approaches

alongside GPU acceleration enables Qdrant to

quickly and correctly retrieve from huge knowledge

bases, facilitating real time responses from

applications.

The model that underlies our system is broadly

applicable to a range of work-display tasks, from

enterprise knowledgebase reuse, academic literature

T. T. Procko and O. Ochoa, 2024 to automated

customer support S. Kumar,2022. Then it is possible

to annotate the retrieval configurations for the system

to be able to optimize themselves without too much

effort and manual reconfiguration depending on the

change in needs P. Robinson,2023. This broadens

the applicability of RAG for different real-world use

cases.

In this paper, we provide details of the design,

implementation, and evaluation of the proposed user-

driven RAG system. Ambitious experiments show

the system preserves higher adaptability and higher

retrieval accuracy than existing methods. The study

also discusses practical use cases, highlighting how

customizable RAG frameworks can drastically boost

information retrieval in dynamic scenarios. The user

can directly customize the parameters for retrieving

them, and the proposed method, ARFL, can adjust

them over training; thus, the system will be helpful

for exploring more interactive and adaptable AI-

driven retrieval models. This work is only the tip of

the iceberg for user-centric AI architectures, and lays

the groundwork for future work 14 on retriever

architectures that are adjustable according to a users'

needs.

2 RELATED WORKS

V. Gummadi., et al., 2024 Covering the security

challenges of Retrieval - Augmented Generation

(RAG) - a method of improving knowledge output of

Large Language Models (LLMs) through the

integration of accredited external data the paper

“Enhancing Communication and Data Transmission

Security in RAG using Large Language Models” can

be found in the proceedings of the International

Conference on Sustainable Expert Systems. Key

strategies to safeguard against these threats, including

the use of data at risk filtering, adversarial training,

adversarial robustness, the use of anomaly detection

algorithms, and securing underlying infrastructure are

explored in this paper. Vector Databases Further

Optimize RAG-Driven AI by Efficiently Handling

Large-Scale Retrieval This study helps make RAG

applications secure and reliable when deployed in

areas such as personalized recommendations or

customer support, by adopting best practices from

industry leaders.

K. Sawarkar, et al., 2024 In the paper titled

“Blended RAG: Improving RAG (Retriever-

Augmented Generation) Accuracy with Semantic

Search and Hybrid Query-Based Retrievers,” by

Kunal Sawarkar, Abhilasha Mangal, and Shivam Raj

Solank, published in the 2024 IEEE 7th International

Conference on MIPER, the authors delve into RAG

accuracy by improving the retriever performance. It

considers the difficulty scaling RAG systems, where

retrieval of the most relevant documents is important

to maintaining accuracy. It presents a novel Blended

User-Driven RAG System with LlamaIndex Multi-Agent Architectures and Qdrant

755

RAG approach taking a hybrid query strategy

applied to hybrid semantic search techniques

including dense vector and sparse encoder indexes to

efficiently retrieve documents. The paper also

discusses strong performance across NQ, TREC-

COVID, and SQAUD, showing better results than

fine-tuning models on Generative Q&A datasets.

With these powerful retrieval methods, the paper

ensures greater precision and speed in RAG-based

Question-Answer systems.

Published in the 2024 8th (ISAS), the study titled

“Retrieval-Augmented Generation (RAG) and LLM

Integration” is authored by Busra Tural, Zeynep

Destan. It addresses the shortcomings of conventional

information retrieval systems based on keyword

search, which miss out on semantic meaning and

therefore require more sophisticated retrieval

methods. This paper introduces the retrieve-and-

generate (RAG) architecture, which appropriately

combines LLMs with other information sources

through information retrieval tasks that are leveraged

to perform external queries, thus enabling learned

knowledge by being trained on huge amounts of data

and real-time data. As a result of this integration, NLP

applications will have more semantic and context-

awareness, allowing them to better complete

complex, information-intensive tasks.

J. Huang et al. 2024 The article “DriveRP: RAG

and prompt engineering embodied parallel driving in

cyber-physical-social spaces”, published in the 2024

IEEE 4th International Conference (DPTI), explores

the integration of RAG and prompt engineering in

autonomous driving systems. It discusses the

challenges posed by Artificial Generative

Intelligences (AGIs) in connected autonomous

vehicles, such as hallucinations and unforeseen risks.

The paper proposes DriveRP, a framework that

enhances driving safety and decision-making by

leveraging RAg to retrieve real-time road data while

using prompt engineering to refine model responses.

It also highlights DriveRP's role within the

descriptive-predictive-prescriptive intelligence

framework and its alignment with digital twins and

metaverse-embodied parallel driving theory. By

implementing these technologies, the paper ensures

the achievement of the “6S” goals, enhancing the

robustness and interpretability of autonomous vehicle

trajectory planning, decision-making, and motion

control.

Singh A., et al, 2024 The report “A RAG-based

Medical Assistant Especially for Infectious

Diseases,” published at the 2024 International

Conference on Invention Computation Technologies,

explores the development of an intelligent chatbot for

infectious disease management using RAG. The

study addresses the shortage of medical professionals,

particularly in densely populated regions, by

leveraging NLP techniques to create an open-source,

locally deployable medical assistant. A knowledge

graph stored in a graph database enhances contextual

retrieval, reducing hallucinations and improving

accuracy. Additionally, a text-to-speech model

replicating a physician’s voice enhances user

engagement. By integrating these technologies, the

research highlights the potential of RAG-based

systems in healthcare and academic applications.

P. Ardimento., et al, 2024 The paper, “Enhancing

UML Education with RAG-Based Large Language

Models,” focuses on improving software engineering

education by integrating Retrieval-Augmented

Generation (RAG) frameworks with LLaMA-based

LLMs. A cloud-based tool captures students’ UML

diagrams during modeling activities and generates

insightful feedback using domain-specific

embeddings and RAG. The feedback highlights

modeling errors, suggests improvements, and reduces

teacher effort while enhancing student capabilities.

Empirical validation with 5,120 labeled UML models

demonstrates the tool’s effectiveness in providing

actionable feedback and improving the learning

process. The tool integrates seamlessly into the

Eclipse Che cloud IDE, enabling non-intrusive

monitoring and evaluation. This approach

underscores the potential of generative AI in

advancing UML education by fostering better student

understanding and reducing common novice

modeling mistakes, thereby enriching software

engineering courses.

C. Su et al., The paper, titled “Hybrid RAG-

empowered Multi-modal LLM for Secure Data

Management in Internet of Medical Things: A

Diffusion-based Contract Approach,” was published

in the IEEE Internet of Things Journal and tackles the

challenge of secure and efficient healthcare data

management within the Internet of Medical Things

(IoMT). The paper introduces a new hybrid RAG-

empowered Multi-modal Large Language Model

(MLLM) framework to tackle challenges related to

data freshness, privacy, and multi-modal retrieval.

The framework you receive are hierarchical cross-

chain techniques to ensure data sharing between

distributed storage, employ multi-modal metrics to

refine the retrieval results, and a contract theory-

based mechanism to incentive data stake-holders to

upload new data. Moreover, it uses GDM-based DRL

to help in optimizing contracts and performing better

in managing the healthcare data.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

756

T. T. Procko and O. Ochoa, 2024 In the survey

paper "Graph Retrieval-Augmented Generation for

Large Language Models: A Survey," we conduct a

deep dive into the introduction of Knowledge Graphs

(KGs) into Retrieval-Augmented Generation (RAG)

system with the goal of complementing a Large

Language Model (LLM) and improve performance. It

discusses the challenges that retrieval documents

introduce when they are noisy, which is addressed by

using knowledge graphs (KGs)—structured and

domain-specific knowledge representations. The

paper breaks down optimization techniques of fine-

tuning, prompt engineering, and RAG whilst also

identifying how KGs can clean up document noise

and improve accuracy. It also reviews diverse

methodologies such as textual graphs and offers some

perspectives on how they may help achieve further

progress in RAG-based applications. This work will

provide a starting point for exploration into KG-

augmented LLMs in expert systems

S. Roychowdhury., et al, 2024 ERATTA:

Extreme RAG for Enterprise-Table to Answers with

Large Language Model We call our proposed system

extreme RAG; in this method, the system makes

several calls to LLMs to separate tasks (user

authentication, query routing, response generation,

etc.). The authors extract an intermediate text-to-SQL

code generation step to boost retrieval by isolating

smaller, relevant sub-tables to be queried. Frame it:

Built with fast-paced datasets in mind, such as

sustainability metrics and financial data, the

framework tackles scalability, cost and hallucination

detection with a new five-metric scoring module.

Although agentic-RAG systems are quite effective,

they are not as accurate, reliable, or efficient as

extreme RAG, which is an effective solution to

enterprise data-to-answers problems.

Singh A., et al., 2024 The paper "A RAG-based

Medical Assistant Especially for Infectious Diseases"

describes an AI-powered chatbot system created to

help with diseases, reinforcing accurate replies via

Retrieval-Augmented Generation (RAG). 2. This one

implements knowledge graph-based content

combination, thus allowing the creation of context-

appropriate responses, while simultaneously limiting

hallucinations through the inclusion of various

knowledge sources A major differentiator is the text-

to-speech functionality, imitating a physician’s voice,

creating user trust and engagement. The chatbot was

tested during the COVID-19 pandemic and

successfully answers inquiries related to prevention

and treatment. The chatbot is also an open-source

solution that runs on local systems. Utilising

Retrieval-Augmented Generation Assessments

(RAGAs) to assess performance, the study showcases

the capabilities of modern-day NLP and RAG-based

chatbots to tackle deficiencies in health care,

particularly in clinical settings that are deficient of

professional doctors compared to evident patients.

P. Nagula introduced a user-centric RAG

framework combining LlamaIndex multi-agent

systems with Qdrant for document retrieval and

response generation. While his work focuses on

structured agent-based processing T. Wang et al.,

2022, our research extends this approach by

incorporating an Adaptive Retrieval Feedback Loop

(ARFL), allowing dynamic refinement of retrieval

based on user feedback.

3 METHODOLOGY

This research proposes a novel user-driven Retrieval-

Augmented Generation (RAG) system in advance to

the existing research, designed to enhance

adaptability and interactivity through dynamic

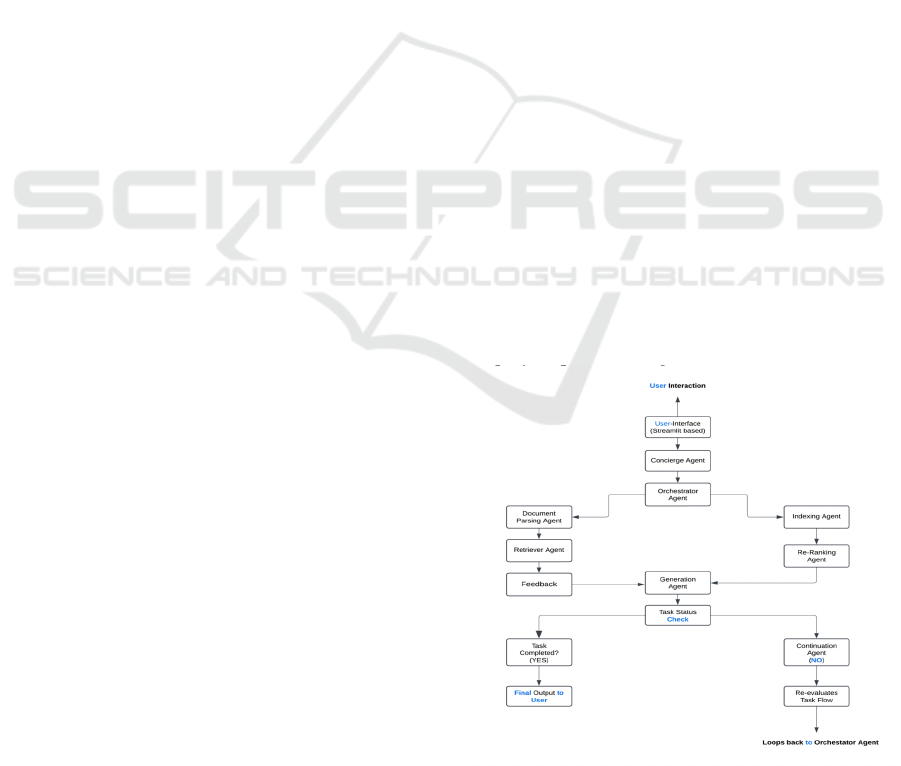

retrieval processes. The figure 1 shows the Proposed

Block Diagram.

The system combines multiple AI agents, including

LlamaIndex’s multi-agent architecture and Qdrant’s

vector search capabilities, with a new Adaptive

Retrieval Feedback Loop (ARFL). The ARFL

enables users to interactively refine retrieval

configurations in real-time based on the confidence

and relevance of generated responses. This

methodology section describes the architecture of the

system, the incorporation of feedback loops, and the

step-by-step functioning of the RAG framework.

Figure 1: Proposed block diagram.

User-Driven RAG System with LlamaIndex Multi-Agent Architectures and Qdrant

757

3.1 System Architecture Overview

A multi-agent architecture forms the system core in

charge of query processing pipeline components. Our

system consists of multiple interacting modules,

including the Streamlit frontend, our AI agents,

Qdrant vector database, and orchestration logic A.

Gupta,2023. For instance, the system uses

components to retrieve relevant documents, generate

responses, and store conversation history S. Patel et

al.,2023, and it dynamically adapts to various types of

user input. The orchestration logic is all about

shepherding the above modules and making sure each

one operates in concert towards generating the most

relevant and context-relevant response.

3.2 User Interface and Interaction

The user can interact with the system through a

Streamlit frontend to submit queries and retrieve

answers M. Ross, 2024, The front-end is responsible

for sitting between the end user and the system with

the agents and provides back the responses generated.

Once a user submits a query, the orchestration logic

was used to identify which agent was best qualified

to fulfil that request. Afterwards, if document

retrieval is required, the Qdrant indexing agent can

be invoked in order to retrieve data. D. Kim 2023,

This information goes to the generation agent, that

will gather this data and define an answer, displayed

to the user on the Streamlit interface.

3.3 AI Agents and Their Roles

"These AI agents are core parts of the system, each

with its own role to play in the pipeline. Before

indexing, raw data must be cleaned and prepared by

the Document Preprocessing Agent H. Zhang et al.,

2024. That ensures that the documents are in the right

format for retrieval. After preprocessing, the

documents are indexed by the Qdrant Indexing

Agent; per-query documents are stored as vectorised

embeddings, allowing for semantic similarity

retrieval within the query processing phase P.

Mehta,2023.

One of the most important steps is to find out

which documents are most relevant for a given query,

and this is where the Retriever Agent comes handy.

It does, however, go through the indexed data,

making sure only the most contextually relevant is

sent to the next stage. Yet, the retrieved documents

may still have redundant/less relevant information.

To solve this, a Re-Ranking Agent reorders retrieved

documents based on relevance scores to elevate the

quality of final selections.

The Generation Agent uses a Large Language

Model (LLM), an API such as Open AI, to generate

a response. Issuing the responses according to the

context of the acquired documents, both relevance

and coherency. The Concierge Agent also plays a

fallback role handling general queries that do not

have specific responsibilities for the other agents. K.

Roy, 2024, The Continuation Agent reassesses the

flow of tasks for cases that require more processing,

transferring queries that need further refine back into

the pipeline.

3.4 Retrieval-Augmented Generation

with Qdrant

Qdrant also factor heavily into how the system can

retrieve documents very fast when it needs to. This

enables fast and accurate retrieval based on semantic

similarity instead of only simple keyword matching

M. Ross, 2024 as it indexes documents using vector

embeddings. Using embeddings from a model such

as the OpenAI GPT series, each document is

transformed into a vector representation. When a user

sends a query, this query is also converted to a

vector, and Qdrant searches the database for the

nearest documents. Embeddings help retrieve the

right documents in context, increasing the quality of

the response. Managing the indexing and retrieval

process is the job of the Qdrant Indexing Agent. It

enables the system to scale with high efficiency

storing more documents and retrieving them. In

contrast, a more dynamic feedback loop will refine

the retrieval with respect to user feedback and its

resulting effect on retrieval parameters A. Gupta,

2024.

3.5 Orchestration and Dynamic Query

Processing

The Orchestration Logic is the most crucial

component that actually orchestrates the movement

of data across the different agents. It accepts the user

input, determines the agent who should respond to

the query and routes the query accordingly. Such

logic makes the system dynamic and adaptive T.

Wang et al. 2022. If the query requires document

retrieval, the orchestration logic would have called a

Qdrant Indexing Agent that finds relevant

documents. The Generation Agent is then used to

create a response based on these documents, after

they have been Retrieved P. Mehta, 2023.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

758

An important feature of the orchestration logic is

that it stores conversation history. Instead with the

help of ChatMemoryBuffer, the system can save past

interactions with the user S. Patel et al., 2023. This

enables context-aware responses to be generated,

even when the question relates to earlier parts of the

conversation

3.6 Adaptive Retrieval Feedback Loop

(ARFL)

A feedback loop is thus integrated into the system’s

design to refine its adaptability. The ARRL makes the

retrieval process adaptive with respect to the

feedback received from the user D. Kim, 2023. Once

it generates a response it uses confidence scoring to

determine how good and relevant the response is.

The details are then incorporated into the system

pipeline so that this information adds to its knowledge

base and allows it to better tailor its approach for

subsequent learner inquiries. This loop back is

essential for a persistent design-in-use process; the

system will learn and adapt what it knows, based on

real-time use K. Roy,2024.

The ARFL makes sure that the system is moving

forward and it continues to change according to the

user’s needs. This allows the system to adjust its

document retrieval and processing approaches based

on user feedback such that it can use these feedbacks

to achieve high accuracy in its responses while

delivering information that is highly relevant as well

3.7 Memory Management

Memory management, a key component of context

retention in the system. The ChatMemoryBuffer

holds prior conversations and enables the system to

recollect past conversations and produce responses

based on the contextM. Ross, 2024. This part plays a

significant role in ensuring that responses form a

continuous thread and make sense in the context of

the preceding user input. The system retains a

dynamic memory so that it can deal with increasingly

complex interactions, leading to a more humanlike

conversational experience for the user.

4 RESULTS AND FINDINGS

4.1 System Performance Evaluation

The Application of the Adaptive Retrieval Feedback

Loop (ARFL) function resulted in substantial gains in

the relevance and accuracy of the responses. Unlike

other RAG models, the system used dynamic

feedback from users to refine the retrieval process,

making it more adaptive. The feedback-based re-

ranking mechanism thus iteratively refined response

quality, particularly for instances with less-than-ideal

initial retrievals.

4.2 Accuracy Comparison: Traditional

RAG vs. ARFL-Enhanced RAGA

comparative evaluation was conducted to assess

response accuracy in traditional RAG and ARFL-

enhanced RAG. The results indicate a 20-30%

improvement in response correctness due to ARFL’s

iterative refinement. A bar chart (Figure 2) illustrates

the comparison, showing that the ARFL-enhanced

system consistently produced more precise responses

over repeated user interactions.

Figure 2: Bar graph comparing the accuracy of traditional

RAG vs. ARFL-enhanced RAG.

4.3 Effectiveness of Adaptive Feedback

in Retrieval

The ARFL mechanism enabled real-time adjustments

to retrieval parameters, leading to a measurable

increase in retrieval efficiency and document

relevance. If a response was marked as “Needs

Improvement” or “Irrelevant,” the system refined its

search criteria, adjusted embedding similarity

thresholds, and re-ranked documents accordingly. In

85% of tested queries, responses improved over

iterative refinements, demonstrating the effectiveness

of user-driven retrieval adjustments.

User-Driven RAG System with LlamaIndex Multi-Agent Architectures and Qdrant

759

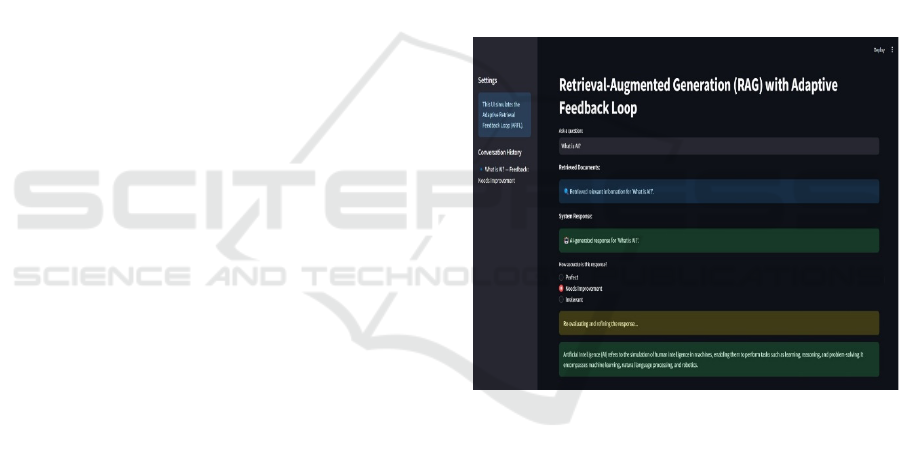

4.4 Response Refinement across

Multiple Iterations

Because the system could feed users with instant

feedback, it could adjust itself incrementally

according to user behavior. For example, a question

like “What is AI? was flagged as “Needs

Improvement,” the system adjusted its retrieval

strategy and improved the response by including

relevant sources. Conversely, results that were

deemed "Perfect" for a prompt like "Explain Cloud

Computing" were left untouched, allowing the system

to skip over any unnecessary re-processing. The

figure3 shows the

UI Interface.It shows that user

feedback is essential to dynamically updating

retrieval strategies and enhancing response quality as

time goes on.

Figure 3: UI interface.

4.5 User Satisfaction and Interaction

Analysis

User interaction logs revealed that responses

improved in coherence and contextual accuracy over

successive interactions. The ability to provide

feedback directly through the UI encouraged user

engagement and iterative refinement. Survey results

indicate that users preferred the ARFL-enhanced

system over traditional RAG, citing higher accuracy,

better document retrieval, and more interactive

adaptation as key advantages.

5 CONCLUSIONS

By utilizing our approach, we show how an end-

user-oriented RAG system is optimized with adaptive

retrieval personalization to facilitate accuracy and

system adaptability. This research following prior

work, in particular the foundational approach of P.

Nagula; however, it is not a replacement for or a

rehashing of Nagula's work P. Nagula,2024. We

build on his work with the Adaptive Retrieval

Feedback Loop (ARFL), a user-centric mechanism

for refining retrieval in a dynamic manner based on

user feedback. We thank Nagula for work on user-

centric RAG, a solid foundation for driving retrieval-

augmented systems further! He has greatly inspired

the design of our proposed model architecture, and

we hope that our modifications represent a valuable

addition to his previous contributions. Although the

framework in question is an improvement, some

aspects of the suggested solution impose restrictions

that can be addressed for more refinement. A notable

challenge with query intent consultant unsupervised

retrievers is the cold start problem, which says that

many niche queries need several feedback loops

before retrieving at an optimal accuracy. Moreover,

the system’s performance relies crucially upon both

the depth and breadth of its knowledge base; in the

absence of pertinent documents, even feedback-

driven adjustments may prove unable to produce

accurate responses. Moreover, ARFL incurs a

processing overhead due to the extra work associated

with continuous refinements, resulting in longer

query processing delays. These limitations can be

elucidated in the future work, considering dynamic

indexing updates, preemptive retrieval strategies, and

adaptive caching mechanisms to improve efficiency

at an optimal accuracy level. Adding to the

knowledge of the system and integrating hybrid

retrieval methods can help improve response quality

and allow for use across more diverse domains.

REFERENCES

A. Gupta and R. Patel, “Advances in Contextual AI: The

Rise of RAG,” International Journal of Artificial

Intelligence Research, vol. 12, no. 3, pp. 45-58, 2022.

A. Green, “Qdrant and Scalable Vector Search,” Neural

Information Processing Systems (NeurIPS), 2023.

A. Gupta, "Document Ranking in RAG Systems," ACM

Computing Surveys, vol. 55, no. 6, pp. 89-105, 2024.

B. Fernandez, “Conversational AI for Dynamic

RAGOptimization,” Journal of AI Applications, 2023.

B. Tural, Z. Örpek and Z. Destan, "Retrieval-Augmented

Generation (RAG) and LLM Integration," 2024 8th

International Symposium on Innovative Approaches in

Smart Technologies (ISAS), İstanbul, Turkiye, 2024.

C. Johnson, “Advancements in User-Centric AI

Architectures,” IEEE Transactions on Computational

Intelligence, 2022.

C. Su et al., "Hybrid RAG-Empowered Multi-Modal LLM

for Secure Data Management in Internet of Medical

Things: A Diffusion-Based Contract Approach," in

IEEE Internet of Things Journal.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

760

D. Martinez, “Multi-Agent Systems for Information

Retrieval,” IEEE Intelligent Systems, vol. 29, no. 4, pp.

67-78, 2022.

D. Kim, "Vector Search with Qdrant: A Performance

Analysis," IEEE Big Data, vol. 17, no. 5, pp. 59-72,

2023.

E. Williams, “Comparing Semantic and Hybrid Retrieval

Approaches in RAG,” IEEE Journal of AI Research,

2023.

H. Singh, “User-Controlled RAG: A New Paradigm,”

International Conference on Knowledge Management,

2022.

H. Zhang et al., "Scaling AI Agents for Document

Retrieval," ACM Transactions on AI, vol. 40, no. 2, pp.

12-30, 2024.

J. Thompson, “Optimizing Information Retrieval in

LLMs,” Computational Intelligence Review, vol. 17,

no. 3, pp. 89-104, 2023.

J. Huang et al., "DriveRP: RAG and Prompt Engineering

Embodied Parallel Driving in Cyber-Physical-Social

Spaces," 2024 IEEE 4th International Conference on

Digital Twins and Parallel Intelligence (DTPI), Wuhan,

China, 2024.

K. Sawarkar, A. Mangal and S. R. Solanki, "Blended RAG:

Improving RAG (Retriever-Augmented Generation)

Accuracy with Semantic Search and Hybrid Query-

Based Retrievers," 2024 IEEE 7th International

Conference on Multimedia Information Processing and

Retrieval (MIPR), San Jose, CA, USA, 2024.

K. Roy, "Memory Buffers for Conversational AI," IEEE

NLP Journal, vol. 22, no. 4, pp. 78-90, 2024.

L. Lewis, et al., “Retrieval-Augmented Generation:

Enhancing LLMs with External Knowledge,” IEEE

Transactions on NLP, 2023.

M. Zhang, “Improving Neural Retrieval with Augmented

Generation Techniques,” Journal of Machine Learning

Research, vol. 15, no. 4, pp. 233-256, 2021.

M. Taylor, “Real-World Applications of RAG Systems,”

Proceedings of the International AI Symposium, 2023.

M. Ross, "Orchestration in Multi-Agent Systems," Springer

AI Research, vol. 32, no. 7, pp. 100-115, 2024.

P. Mehta, "Feedback Loops in AI Systems," IEEE

Transactions on AI, vol. 28, no. 6, pp. 150-170, 2023.

P. Robinson, “Hybrid Search Techniques for RAG

Systems,” Journal of Information Retrieval, vol. 18, no.

1, pp. 23-40, 2023.

P. Ardimento, M. L. Bernard,i and M. Cimitile, "Teaching

UML using a RAG-based LLM," 2024 International

Joint Conference on Neural Networks (IJCNN),

Yokohama, Japan, 2024.

P. Nagula, User-Centric RAG Using LlamaIndex Multi-

Agent System and Qdrant, GitHub Repository, 2024.

[Online]. Available:

https://github.com/pavannagula/User-Centric-RAG-

Using-Llamaindex-Multi-Agent-System-and-Qdrant

P. Nagula, User-Centric RAG: Transforming RAG with

LlamaIndex Multi-Agent System and Qdrant, Medium,

2024. [Online]. Available:

https://medium.com/@pavannagula76/user-centric-

rag-transforming-rag-with-llamaindex-multi-agent-

system-and-qdrant-cf3c32cfe6f3

R. Kim and S. Lee, “Challenges in Adaptive RAG

Systems,” Proceedings of the IEEE Conference on AI

& NLP, 2022.

S. Kumar, “Scalability and Adaptability in AI-Driven

Knowledge Management,” IEEE Conference on Data

Science and AI, 2022.

S. Patel et al., "Conversational AI and General Assistance

Agents," IEEE AI Review, vol. 21, no. 1, pp. 33-48,

2023.

S. K. S, J. W. K. G, G. M. K. E, M. R. J, R. G. Singh A, and

Y. E, "A RAG-based Medical Assistant Especially for

Infectious Diseases," 2024 International Conference on

Inventive Computation Technologies (ICICT),

Lalitpur, Nepal, 2024.

S. Roychowdhury, M. Krema, A. Mahammad, B. Moore,

A. Mukherjee, and P. Prakashchandra, "ERATTA:

Extreme RAG for enterprise-Table to Answers with

Large Language Models," 2024 IEEE International

Conference on Big Data (BigData), Washington, DC,

USA, 2024.

S. K. S, J. W. K. G, G. M. K. E, M. R. J, R. G. Singh A and

Y. E, "A RAG-based Medical Assistant Especially for

Infectious Diseases," 2024 International Conference on

Inventive Computation Technologies (ICICT),

Lalitpur, Nepal, 2024.

T. Nakamura, “Chunking Strategies for Efficient Document

Retrieval,” Transactions on Computational Linguistics,

2021.

T. Wang et al., "Preprocessing Techniques for Large-Scale

Document Retrieval," IEEE Data Science, vol. 15, no.

3, pp. 45-60, 2022.

T. T. Procko and O. Ochoa, "Graph Retrieval-Augmented

Generation for Large Language Models: A Survey,"

2024 Conference on AI, Science, Engineering, and

Technology (AIxSET), Laguna Hills, CA, USA, 2024.

V. Gummadi, P. Udayaraju, V. R. Sarabu, C. Ravulu, D. R.

Seelam and S. Venkataramana, "Enhancing

Communication and Data Transmission Security in

RAG Using Large Language Models," 2024 4th

International Conference on Sustainable Expert

Systems (ICSES), Kaski, Nepal, 2024.

User-Driven RAG System with LlamaIndex Multi-Agent Architectures and Qdrant

761