Scalable and Robust CNN Models for Brain Tumor Detection in Healthcare Applications

Reshma U. Shinde¹, Vijay A. Sangolagi², Mithun B. Patil², Vikas Mhetre¹ and Sarvesh Kulkarni¹

¹Department of Computer Science and Engineering, Nagesh Karajagi Orchid College of Engineering & Technology, Solapur, Maharashtra, India

²Department of Artificial Intelligence and Data Science, Nagesh Karajagi Orchid College of Engineering & Technology, Solapur, Maharashtra, India

Keywords: Deep Learning, CNN, EfficientNet‑B0, Brain Tumor Detection, Medical Imaging, MRI Classification.

Abstract: Accurate detection of brain tumors is vital for early diagnosis and for planning treatment strategies that improve survival rates. Clinical diagnosis from MRI is slow and prone to human error, which calls for automated techniques. We present a deep learning framework that combines CNNs with EfficientNet-B0 to improve brain tumor detection while keeping computation fast. CNNs extract spatial features from MRI scans, and EfficientNet-B0 uses compound scaling to balance network depth, width, and input resolution. The dataset consists of a wide range of manually labeled MRI scans, and multiple data augmentation methods are used to improve generalization. The results show that the proposed system achieves higher accuracy, precision, recall, and F1-score than standard deep learning techniques. Advanced regularization methods combined with contrast enhancement reduce the risk of overfitting and yield more reliable predictions. The model maintains high performance at clinical diagnosis speeds, which makes it suitable for real-time use in hospitals. The advantages of EfficientNet-B0 become apparent when its performance is compared with other CNN architectures in medical imaging applications. Future research will extend the model to multi-class tumor classification, add explainable AI for better interpretability, and evaluate its clinical impact in real medical environments.

1 INTRODUCTION

Brain tumors are a dangerous neurological disease that requires rapid and correct identification for successful treatment and better survival. Timely detection is essential for medical decision-making, because inaccurate or delayed diagnosis reduces a patient's chances of survival. MRI is the primary tool for brain tumor diagnosis because it shows brain tissue in fine detail. Reading and interpreting MRI scans remains challenging for radiologists, however, because the process requires specialized expertise and takes considerable time. The diagnostic procedure is also subject to inter-observer variability, so different experts may reach different diagnostic conclusions. With patient demand rising rapidly, medical professionals need automated brain tumor detection systems that improve diagnostic precision and reduce the MRI analysis workload. Convolutional Neural Networks (CNNs) achieve better results than other models in image classification and feature extraction, because they learn complex spatial structures directly from medical image data without hand-crafted feature engineering. Although CNNs are strong at brain tumor detection, traditional models such as VGG-16, ResNet, and AlexNet require large amounts of computation and perform poorly on small medical datasets. This research develops a deep learning architecture for brain tumor detection that uses EfficientNet-B0 as an optimized CNN model to achieve both high accuracy and improved computational efficiency. Brain tumors vary widely in size, shape, and location, which makes their identification difficult.

Shinde, R. U., Sangolagi, V. A., Patil, M. B., Mhetre, V. and Kulkarni, S.

Scalable and Robust CNN Models for Brain Tumor Detection in Healthcare Applications.

DOI: 10.5220/0013912800004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 4, pages 346-353

ISBN: 978-989-758-777-1

Proceedings Copyright © 2026 by SCITEPRESS – Science and Technology Publications, Lda.

Deep learning methods for medical image analysis face several key limitations:

• Deep CNN architectures such as ResNet and DenseNet demand heavy computation, which hinders their use in time-sensitive clinical workflows.

• Small, imbalanced medical imaging datasets cause deep learning models to overfit when training is not sufficiently regularized.

• Many CNN models generalize poorly across MRI scans because of variations in patient characteristics, imaging protocols, and tumor characteristics.

• The black-box nature of deep learning models makes it hard for clinical staff to understand diagnostic decisions, which reduces their trust in AI-based medical analysis.

Progress in brain tumor detection therefore requires deep learning techniques that combine high predictive accuracy with cost-efficient computation. The goal of this research is to improve brain tumor detection models using EfficientNet-B0, a state-of-the-art CNN architecture that uses compound scaling to balance network depth, width, and input resolution. Instead of adjusting network dimensions arbitrarily, as traditional CNNs do, EfficientNet-B0 scales them in a structured way to optimize feature extraction. Integrating EfficientNet-B0 offers this research several advantages:

• EfficientNet-B0 achieves state-of-the-art accuracy with dramatically fewer parameters than classic CNN models.

• The model extracts features effectively from MRI scans, improving discrimination between tumor and non-tumor cases.

• EfficientNet-B0 is computationally efficient, with limited memory and processing requirements, which enables real-time clinical deployment.
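The compound scaling mentioned above can be made concrete with a short sketch. In the EfficientNet formulation by Tan and Le (2019), a single coefficient φ scales depth, width, and input resolution together as α^φ, β^φ, and γ^φ, under the constraint α·β²·γ² ≈ 2, so FLOPs grow by roughly 2^φ. The constants below (α = 1.2, β = 1.1, γ = 1.15, baseline resolution 224) are the values reported for the B0 baseline; the released B1 to B7 models use rounded, hand-adjusted settings, so the printed numbers are illustrative rather than the exact published configurations. The names `compound_scale` and `ScaledConfig` are hypothetical.

```python
from dataclasses import dataclass

# Coefficients reported for the EfficientNet-B0 baseline (Tan and Le, 2019),
# chosen so that ALPHA * BETA**2 * GAMMA**2 is approximately 2.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15
BASE_RESOLUTION = 224  # input side length of EfficientNet-B0 in pixels


@dataclass
class ScaledConfig:
    depth_mult: float  # multiplier applied to the number of layers
    width_mult: float  # multiplier applied to the number of channels
    resolution: int    # input image side length in pixels
    flops_mult: float  # approximate FLOPs relative to the baseline


def compound_scale(phi: float) -> ScaledConfig:
    """Scale depth, width and resolution together with one coefficient phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = round(BASE_RESOLUTION * GAMMA ** phi)
    # FLOPs grow linearly with depth and quadratically with width and resolution.
    flops = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return ScaledConfig(depth, width, resolution, flops)


if __name__ == "__main__":
    for phi in range(4):
        cfg = compound_scale(phi)
        print(f"phi={phi}: depth x{cfg.depth_mult:.2f}, width x{cfg.width_mult:.2f}, "
              f"{cfg.resolution}px, ~{cfg.flops_mult:.2f}x FLOPs")
```

Scaling all three dimensions together, rather than only adding layers as in deeper ResNet variants, is what lets the B0 baseline stay small while larger variants trade compute for accuracy in a controlled way.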

This research addresses these weaknesses of CNN architectures to develop a clinically applicable and scalable AI system for detecting and interpreting brain tumors in MRI scans.

2 LITERATURE REVIEW

Deep learning has strong potential to advance diagnostic processes, therapeutic approaches, and patient outcomes. To exploit it fully in healthcare, however, the field must address data quality concerns, improve model interpretability, and secure clinical approval, and brain tumor segmentation with deep neural networks deserves particular attention (Litjens et al., 2017). A two-phase CNN framework achieves leading brain tumor segmentation performance by efficiently exploiting both local and global context, which makes deep learning more practical for complex multi-class segmentation problems in medical images (Havaei et al., 2017).

Deep learning has proven to be a powerful tool for brain MRI segmentation, outperforming conventional methods in precision. It still faces problems with data accessibility, generalization across patients, and widespread adoption in medical centers; future research should prioritize transfer learning, explainable artificial intelligence, and institutional collaboration to realize the full potential of deep learning in healthcare (Akkus et al., 2017). Brain MRI segmentation has made significant progress, yet technical obstacles such as noise, intensity inhomogeneity, and processing speed persist, and future studies should combine deep learning with hybrid approaches and automated processing to achieve better clinical segmentation outcomes (Rathore et al., 2016). Radiomics shows strong potential for glioblastoma diagnosis, therapy planning, and outcome prediction. Clinical implementation, however, requires resolving three major challenges: data heterogeneity, lack of standardization, and limited model interpretability. Clinical acceptance of radiomics-based solutions also requires standardized workflows together with multi-component data integration (Chaddad et al., 2019).

Radiomics offers significant benefits to precision medicine through quantitative, non-invasive disease analysis. To gain widespread acceptance in medical facilities, it must overcome data inconsistency, ensure feature repeatability, and establish model reliability; with standardization and integration of multiple data types, radiomics can improve patient outcomes and contribute to new drug development (Parekh and Jacobs, 2017). CNN models show remarkable ability to detect brain tumors in MRI images, but further work on data expansion and model explainability remains essential for clinical implementation (Zhou et al., 2018). Transfer learning has enabled substantial progress in diagnosing brain tumors, improving diagnostic accuracy and helping healthcare professionals plan better treatment; larger datasets and better model interpretability are needed to make these models suitable for clinical practice (Shboul et al., 2020). Automated diagnostic tools are clinically feasible in medical settings, where they improve diagnostic accuracy and speed up therapeutic decisions (W. Zhang et al., 2019).

Deep convolutional neural networks such as AlexNet excel at large-scale image classification, particularly on ImageNet. Adding expert segmentation labels and radiomic features turns the TCGA glioma MRI collections into a valuable resource for researchers, an important step toward better glioma research and machine learning-based diagnosis and prognosis (Bakas et al., 2017). HeMIS is an important development in hetero-modal image segmentation: merging different imaging modalities substantially improves segmentation accuracy, and the method offers an effective way to blend different types of data for better diagnosis and treatment (Havaei et al., 2018). CNNs show outstanding ability to classify histopathological images and to predict genetic mutations in hepatocellular carcinoma, which illustrates how sophisticated computational methods can support targeted treatment and accurate cancer diagnosis (X. Zhang et al., 2019). The BRATS benchmark is a fundamental instrument for systematically evaluating brain tumor segmentation algorithms; it has advanced segmentation techniques while revealing a continuing need for new solutions to brain tumor image analysis problems (Menze et al., 2015). Machine learning and deep learning have made significant progress in brain tumor classification and segmentation, but obstacles remain, and continued research is needed to develop dependable, interpretable, and robust diagnostic models that help medical staff treat these diseases effectively (Hamghalam et al., 2021).

Across these papers, several gaps emerge in integrating deep learning and radiomics for healthcare. Data quality, heterogeneity, limited availability, and the need for standardization remain major obstacles to the clinical deployment of generalizable models. Insufficient model interpretability restricts clinical trust and practical adoption, and validation difficulties make implementation challenging. Hybrid methods, transfer learning, and multi-modal data fusion show promise for advancing image segmentation and classification. Three persistent problems in medical imaging still call for new solutions: noise, intensity inhomogeneity, and insufficient computing speed. Multi-institutional collaboration and standardized workflows are the key to closing these gaps and establishing clinically viable, reproducible, and scalable solutions.

3 METHODOLOGY

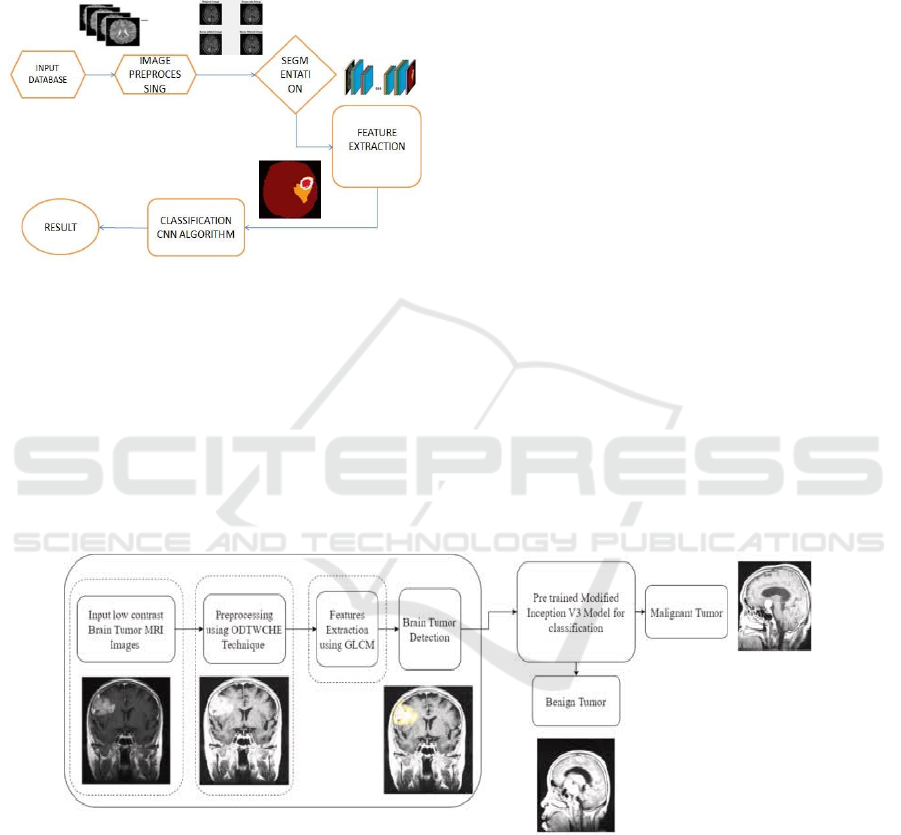

A Convolutional Neural Network (CNN), shown in Figure 1, is a type of deep learning neural network commonly applied in computer vision, the field concerned with enabling computers to understand images and other visual data. Artificial neural networks are among the most effective tools for implementing machine learning, and they are applied to image, voice, and text data, among many others. The following paragraphs outline the basic CNN components on which the proposed system is built.

Figure 1: Working of CNN.

A convolutional layer applies filters to the input image to extract features, a pooling layer downsamples the feature maps to reduce computation, and a fully connected layer makes the final prediction. Building on these components, we introduce an automated, intelligent system for robust brain tumor detection and classification. The computer-assisted system works in two major steps. First, it enhances the contrast of low-quality MRI images using an Optimal Dual Threshold with Contrast Histogram Equalization (ODTWCHE) technique. In this phase, the system evaluates the contrast level of each incoming MRI image and applies contrast enhancement only if the contrast falls below a predefined threshold. This avoids the over-enhancement problem and saves computational resources by skipping images whose quality is already sufficient. Second, after contrast enhancement, brain tumor detection is performed by a component based on deep transfer learning.
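The three layer types named at the start of this passage can be illustrated with a minimal NumPy sketch of one forward pass: convolution, a ReLU activation, max pooling, and a fully connected softmax layer. The 3×3 edge kernel, the random weights, the ReLU (not mentioned in the text), and the 8×8 input are illustrative assumptions; a real network such as EfficientNet-B0 or Inception V3 learns its kernels and stacks many such layers. As in most deep learning libraries, "convolution" here is computed as cross-correlation, without flipping the kernel.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Valid (no padding) 2-D convolution as used in CNNs, i.e. cross-correlation."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = x.shape[0] // size * size, x.shape[1] // size * size
    x = x[:h, :w]
    return x.reshape(h // size, size, w // size, size).max(axis=(1, 3))


def dense_softmax(features: np.ndarray, weights: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Fully connected layer followed by a softmax over classes."""
    logits = features.ravel() @ weights + bias
    exp = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return exp / exp.sum()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mri_slice = rng.random((8, 8))                         # stand-in for a preprocessed slice
    edge_kernel = np.array([[1.0, 0.0, -1.0]] * 3)         # simple vertical-edge filter
    fmap = max_pool(relu(conv2d(mri_slice, edge_kernel)))  # 8x8 -> 6x6 -> 3x3
    probs = dense_softmax(fmap, rng.normal(size=(fmap.size, 2)), np.zeros(2))
    print("P(no tumor), P(tumor) =", probs)
```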

Figure 2 illustrates a systematic workflow for detecting and classifying brain tumors using advanced image processing and machine learning techniques. The procedure starts by accepting low-contrast brain tumor MRI images as input; these images are difficult to analyze because of poor visibility and indistinct intensity distributions, yet the whole detection and classification system depends on them. The second phase applies the Optimized Dual-Tree Wavelet Contrast Histogram Equalization (ODTWHE) approach for preprocessing, which improves the input MRI images by adjusting their contrast and brightness. This preprocessing prepares the images for feature extraction by enhancing the relevant structures while preserving the details needed for analysis.
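The dual-threshold equalization method is not specified here in enough detail to reproduce, so the sketch below only illustrates the gating logic described for the first step: measure the contrast of an incoming image and enhance it only when the contrast falls below a threshold. RMS contrast as the measure, the 0.15 threshold, and plain global histogram equalization standing in for ODTWCHE are all assumptions, and `enhance_if_needed` is a hypothetical name.

```python
import numpy as np

# Hypothetical threshold on RMS contrast (std of intensities scaled to [0, 1]).
CONTRAST_THRESHOLD = 0.15


def rms_contrast(img: np.ndarray) -> float:
    """RMS contrast of an 8-bit image, in the range [0, 0.5]."""
    return float((img / 255.0).std())


def equalize_histogram(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit grayscale image via its CDF."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    if cdf[-1] == cdf_min:  # constant image: nothing to stretch
        return img.copy()
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    return np.clip(lut, 0, 255).astype(np.uint8)[img]


def enhance_if_needed(img: np.ndarray, threshold: float = CONTRAST_THRESHOLD):
    """Enhance only low-contrast images; return (image, was_enhanced)."""
    if rms_contrast(img) < threshold:
        return equalize_histogram(img), True
    return img, False
```

Skipping images that already pass the contrast check is what avoids the over-enhancement and wasted computation mentioned in the text.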

Figure 2: MRI image process.

The gray-level co-occurrence matrix (GLCM) is a texture-based procedure that examines the spatial relationships between pixel intensities to extract important features, including textural patterns, contrast, and homogeneity. These features play an essential role in identifying regions of interest and separating healthy tissue from tumor areas. The extracted features then serve as input to the brain tumor detection stage, which determines where the tumor lies within the MRI image. Because this stage uses the enhanced images and extracted features to detect abnormalities, it is crucial to the processing pipeline. In the following stage, a modified Inception V3 model, pretrained and then fine-tuned, uses the previously extracted information to classify the detected tumors as benign or malignant, supporting diagnosis and treatment planning. The process concludes with a classification result that shows the tumor type (benign or malignant) together with visual images of the identified tumor. This output helps medical staff diagnose the tumor type and select treatment options, leading to better patient outcomes.
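The GLCM texture features named above can be computed with the NumPy sketch below. Quantizing to 8 gray levels, using a single horizontal offset, and reporting contrast, homogeneity, energy, and correlation are assumptions (the feature definitions match those used by scikit-image's `graycoprops`); the paper does not state which offsets or features it uses, and the function names are hypothetical.

```python
import numpy as np


def glcm(img: np.ndarray, levels: int = 8, dx: int = 1, dy: int = 0) -> np.ndarray:
    """Normalized, symmetric co-occurrence matrix for one offset (dx, dy >= 0)."""
    # Quantize 8-bit intensities into `levels` gray levels to keep the matrix small.
    q = (img.astype(np.int64) * levels) // 256
    h, w = q.shape
    ref = q[0:h - dy, 0:w - dx]  # reference pixels
    nbr = q[dy:h, dx:w]          # neighbours at offset (dy, dx)
    m = np.zeros((levels, levels))
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1)
    m = m + m.T                  # count each pair in both directions
    return m / m.sum()


def glcm_features(p: np.ndarray) -> dict:
    """Contrast, homogeneity, energy and correlation from a normalized GLCM."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    sd_i = np.sqrt(((i - mu_i) ** 2 * p).sum())
    sd_j = np.sqrt(((j - mu_j) ** 2 * p).sum())
    if sd_i * sd_j > 0:
        corr = ((i - mu_i) * (j - mu_j) * p).sum() / (sd_i * sd_j)
    else:
        corr = 1.0               # constant image: define correlation as 1
    return {
        "contrast": float(((i - j) ** 2 * p).sum()),
        "homogeneity": float((p / (1.0 + (i - j) ** 2)).sum()),
        "energy": float(np.sqrt((p ** 2).sum())),
        "correlation": float(corr),
    }
```

High contrast and low homogeneity indicate rapidly changing texture, which is why these values can help separate heterogeneous tumor regions from more uniform healthy tissue.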

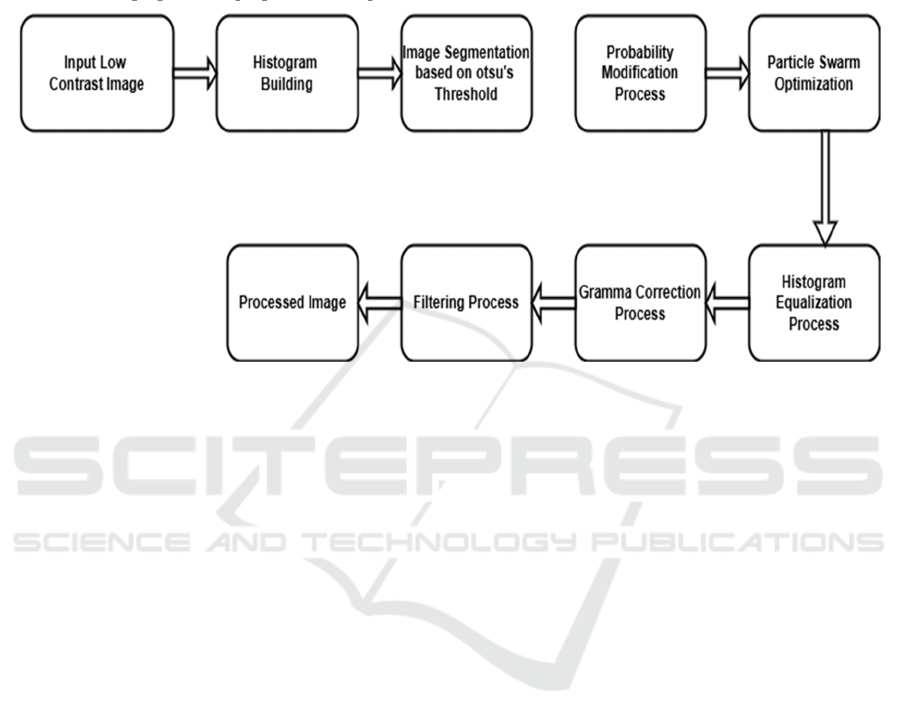

Figure 3: Flowchart of MRI images.

As shown in Figure 3, the enhancement of low-contrast images starts with an input image of poor visual quality. Poor visibility hampers analysis, because the lack of contrast makes details very hard to identify. This first stage lays the groundwork for the subsequent procedures, which improve clarity, contrast, and brightness before further processing can begin. The second stage constructs a histogram of the pixel intensity distribution, showing how frequently each intensity value occurs in the image. Analyzing the histogram makes it possible to assess contrast and to detect imbalances in the range of pixel values, which guides the subsequent enhancement steps. After histogram analysis, the image is segmented with Otsu's thresholding technique, which splits the pixels into two classes using an automatically determined threshold that minimizes the intra-class variance. Otsu's method is effective when it separates the important image elements properly, which prepares the image for targeted enhancement.
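Otsu's method, as described above, can be sketched in NumPy by scanning all 256 candidate thresholds of an 8-bit image and keeping the one with the largest between-class variance, which is equivalent to the smallest within-class variance. The sketch assumes 8-bit grayscale input, and the function names are illustrative.

```python
import numpy as np


def otsu_threshold(img: np.ndarray) -> int:
    """Return the 8-bit threshold t that maximizes between-class variance.

    Pixels <= t form one class and pixels > t the other; maximizing the
    between-class variance is equivalent to minimizing the within-class variance.
    """
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)
    w0 = np.cumsum(prob)                 # weight of the class <= t
    w1 = 1.0 - w0                        # weight of the class > t
    cum_mean = np.cumsum(prob * levels)  # first moment up to t
    total_mean = cum_mean[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (total_mean * w0 - cum_mean) ** 2 / (w0 * w1)
    between = np.nan_to_num(between, nan=0.0, posinf=0.0)
    return int(np.argmax(between))


def otsu_segment(img: np.ndarray) -> np.ndarray:
    """Binary mask: True for pixels brighter than the Otsu threshold."""
    return img > otsu_threshold(img)
```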

After segmentation, the probabilities of pixel intensities are modified to improve image contrast: the pixel values undergo a probability distribution transformation that produces an equalized histogram. Spreading intensity values properly across the whole dynamic range is essential, because it improves the image's ability to display fine details. The enhancement parameters are optimized with Particle Swarm Optimization (PSO), a computational optimization algorithm inspired by the collective behavior of natural swarms, which searches for the best combination of contrast and brightness values.

Because PSO adjusts these parameters automatically, near-optimal enhancement settings are found without repeated manual trial and error.
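Particle Swarm Optimization can be sketched as a small global-best swarm searching a box of parameters. In the example, it tunes a hypothetical gain (contrast) and bias (brightness) for a linear intensity transform by maximizing histogram entropy. The objective, the parameter ranges, and the standard coefficient values (inertia 0.729, acceleration 1.49445) are assumptions, since the paper does not specify which parameters PSO optimizes or how enhancement quality is scored; `pso_minimize` and `negative_entropy` are hypothetical names.

```python
import numpy as np


def pso_minimize(objective, bounds, n_particles=20, n_iters=100, seed=0,
                 w=0.729, c1=1.49445, c2=1.49445):
    """Minimize `objective` over a box using a basic global-best particle swarm."""
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(bounds, dtype=float).T  # bounds: [(low, high), ...] per parameter
    pos = rng.uniform(lo, hi, size=(n_particles, lo.size))
    vel = np.zeros_like(pos)
    pbest, pbest_val = pos.copy(), np.array([objective(p) for p in pos])
    gbest = pbest[np.argmin(pbest_val)].copy()
    for _ in range(n_iters):
        r1, r2 = rng.random((2, n_particles, lo.size))
        # Inertia + pull toward each particle's own best + pull toward the swarm's best.
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, lo, hi)
        vals = np.array([objective(p) for p in pos])
        improved = vals < pbest_val
        pbest[improved], pbest_val[improved] = pos[improved], vals[improved]
        gbest = pbest[np.argmin(pbest_val)].copy()
    return gbest, float(pbest_val.min())


def negative_entropy(params, img):
    """Hypothetical score: negative entropy of clip(gain * img + bias), img in [0, 1]."""
    gain, bias = params
    out = np.clip(gain * img + bias, 0.0, 1.0)
    p = np.histogram(out, bins=64, range=(0.0, 1.0))[0] / out.size
    p = p[p > 0]
    return float(np.sum(p * np.log2(p)))  # lower is better, i.e. higher entropy


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    dark = 0.2 + 0.1 * rng.random((64, 64))  # synthetic low-contrast image
    (gain, bias), score = pso_minimize(lambda p: negative_entropy(p, dark),
                                       bounds=[(0.5, 5.0), (-1.0, 1.0)])
    print(f"gain={gain:.2f}, bias={bias:.2f}, entropy={-score:.2f} bits")
```

PSO needs only objective evaluations, not gradients, which suits enhancement scores like entropy that are piecewise constant in the parameters.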

Histogram equalization then remaps intensity values to maximize image contrast. It spreads the most frequent intensity values across the available range, which improves visibility, and it is particularly effective for images with a limited dynamic range. Gamma correction follows, adjusting image luminance to compensate for nonlinear intensity variations. It balances brightness while preserving a natural appearance, and because it reshapes the intensity curve, it can correct brightness problems that histogram equalization does not handle effectively. A filtering step completes the refinement of the image, applying either noise reduction to improve clarity or edge enhancement to strengthen high-frequency details.

This final stage corrects imperfections present in the original image as well as any introduced by earlier processing steps, and the workflow then produces the improved output image. The processed image offers better contrast, balanced brightness, and high clarity, which prepares it for evaluation in medical diagnosis as well as in other domains such as aerial surveys and industrial quality inspection. This systematic process makes the enhancement procedure reliable and effective across many types of low-contrast images.
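The gamma-correction and filtering stages described above can be sketched in NumPy as follows. The power-law form out = 255·(in/255)^γ is the standard definition of gamma correction; choosing a 3×3 median filter for the noise-reduction step is an assumption, since the text does not name the filter used, and edge enhancement is not shown.

```python
import numpy as np


def gamma_correct(img: np.ndarray, gamma: float) -> np.ndarray:
    """Power-law correction of an 8-bit image: out = 255 * (in / 255) ** gamma.

    gamma < 1 brightens dark regions; gamma > 1 darkens bright regions.
    """
    lut = np.round(255.0 * (np.arange(256) / 255.0) ** gamma).astype(np.uint8)
    return lut[img]


def median_filter3(img: np.ndarray) -> np.ndarray:
    """3x3 median filter with edge replication, a simple noise-reduction step."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    # Stack the nine shifted views of each 3x3 neighbourhood and take the median.
    stack = np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])
    return np.median(stack, axis=0).astype(img.dtype)
```

A median filter is a common choice for MRI speckle and impulse noise because, unlike a mean filter, it removes isolated outliers without blurring edges as much.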

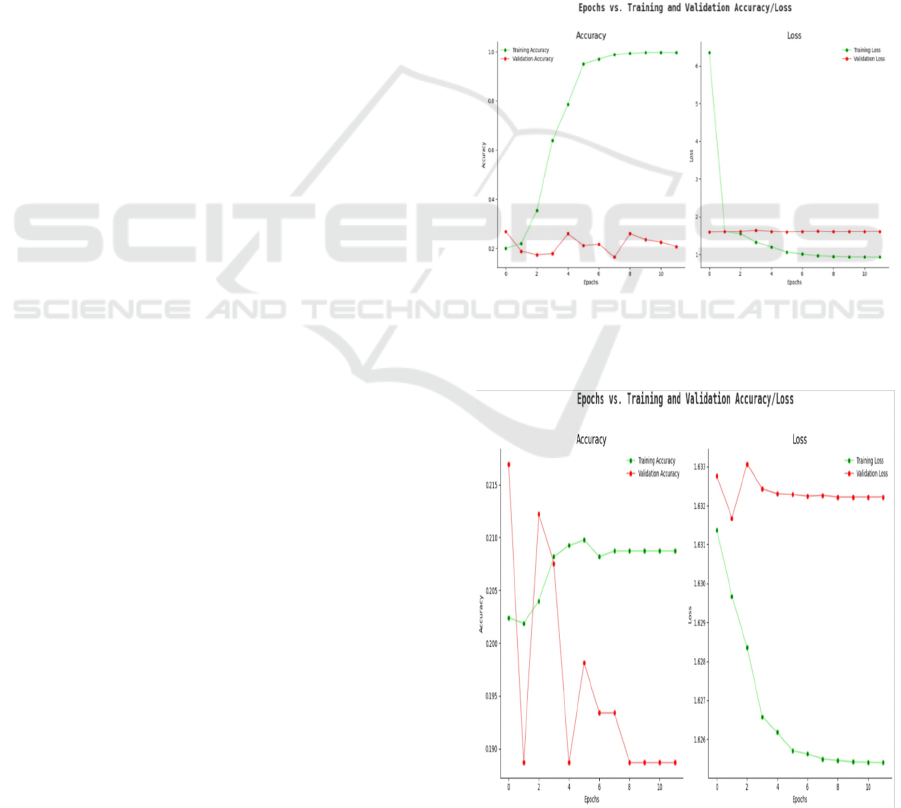

4 RESULTS AND DISCUSSION

This section evaluates the CNN-based brain tumor detection model on MRI and CT scan images. The assessment covers accuracy, loss, and F1-score trends, together with the confusion matrix, to show how well the model distinguishes tumor from non-tumor cases. The training and validation curves show that the model learns the training data well, but the large gap between training and validation accuracy indicates overfitting. The training loss decreases steadily, while the validation loss remains high. The validation F1-score fluctuates, suggesting difficulty in generalizing to unseen data because of imbalanced class distributions. The confusion matrix shows where misclassifications occur, indicating a need for better class balancing and cost-sensitive learning. Data augmentation techniques, including rotation, scaling, and mirroring, were applied to increase model robustness and generalization. The combination of U-Net with ResNet produced promising results that support the usefulness of CNN-based methods for automatic brain cancer detection.
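The rotation, scaling, and mirroring augmentations mentioned above can be sketched with NumPy alone. Restricting rotation to multiples of 90°, nearest-neighbour scaling between 0.9 and 1.1, a 50% mirroring probability, and square input slices are illustrative assumptions; the paper does not report the parameters it used, and the function names are hypothetical.

```python
import numpy as np


def rotate90(img: np.ndarray, k: int) -> np.ndarray:
    """Rotate by k * 90 degrees; lossless, unlike arbitrary-angle rotation."""
    return np.rot90(img, k)


def mirror(img: np.ndarray) -> np.ndarray:
    """Left-right mirror image."""
    return np.fliplr(img)


def scale(img: np.ndarray, factor: float) -> np.ndarray:
    """Nearest-neighbour zoom about the image centre, keeping the original size.

    factor > 1 zooms in (edges are cropped); factor < 1 zooms out (border is zero-padded).
    """
    h, w = img.shape
    # Map every output pixel back to the source pixel it samples.
    ys = np.floor((np.arange(h) - h / 2) / factor + h / 2).astype(int)
    xs = np.floor((np.arange(w) - w / 2) / factor + w / 2).astype(int)
    ok_y, ok_x = (ys >= 0) & (ys < h), (xs >= 0) & (xs < w)
    out = np.zeros_like(img)
    out[np.ix_(ok_y, ok_x)] = img[np.ix_(ys[ok_y], xs[ok_x])]
    return out


def augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Random 90-degree rotation, optional mirroring, then mild random scaling."""
    out = rotate90(img, int(rng.integers(0, 4)))
    if rng.random() < 0.5:
        out = mirror(out)
    return scale(out, float(rng.uniform(0.9, 1.1)))
```

Applying `augment` with a fresh draw each epoch shows the network differently oriented and sized versions of the same tumor, which is the intended effect against overfitting.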

4.1 Accuracy

Figure 4 shows how training and validation accuracy evolve during brain tumor detection. The training accuracy (green line) rises steadily and reaches 1.0 by the final epoch, meaning the model classifies the training batches perfectly. The validation accuracy (red line) stays low and fluctuates strongly, because the model fails to generalize the learned patterns to new data. The large gap between the two curves indicates overfitting, which should be addressed with data augmentation, dropout, or early stopping.

Figure 4: Training vs. validation accuracy.
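Early stopping, one of the remedies suggested above, can be sketched in plain Python: track the best validation loss and stop once it has not improved for a given number of epochs (the patience). The class name, the patience value, and the loss curve in the example are hypothetical; frameworks such as Keras provide their own early-stopping callbacks that can also restore the best weights.

```python
from typing import Optional, Sequence, Tuple


class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as an improvement
        self.best_loss = float("inf")
        self.best_epoch: Optional[int] = None
        self.wait = 0               # epochs since the last improvement

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss, self.best_epoch, self.wait = val_loss, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience


def run_with_early_stopping(val_losses: Sequence[float],
                            patience: int = 3) -> Tuple[int, Optional[int]]:
    """Replay a validation-loss curve; return (stop_epoch, best_epoch)."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if stopper.step(epoch, loss):
            return epoch, stopper.best_epoch
    return len(val_losses) - 1, stopper.best_epoch
```

Replaying the hypothetical curve [0.9, 0.7, 0.6, 0.62, 0.65, 0.7, 0.8] with a patience of 3 stops at epoch 5 and identifies epoch 2 as the checkpoint to restore, before the widening train/validation gap sets in.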

4.2 Loss

Figure 5: Training vs. validation loss.

The loss graph in Figure 5 represents the optimization of the brain tumor classifier. The training loss (green line) decreases steeply, indicating effective learning on the training set. The validation loss (red line) remains high and nearly constant because the model cannot reduce its errors on the validation data. Such a mismatch arises when the model is insufficiently regularized, when its architecture is too complex for the available data, or when the validation set consists of homogeneous samples. Cross-validation combined with additional data preprocessing can improve the model's reliability.
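Cross-validation on an imbalanced tumor dataset is usually stratified, so that every fold keeps roughly the same class proportions. The sketch below shuffles each class's indices and deals them round-robin into k folds; it is a simplified, hypothetical stand-in for utilities such as scikit-learn's `StratifiedKFold`, not the procedure used in the paper.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_k_fold(labels: Sequence[int], k: int = 5,
                      seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, val_indices) pairs with class proportions preserved."""
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal this class's samples round-robin so each fold gets a near-equal share.
        for j, idx in enumerate(indices):
            folds[j % k].append(idx)
    splits = []
    for i in range(k):
        val = sorted(folds[i])
        train = sorted(idx for j, fold in enumerate(folds) if j != i for idx in fold)
        splits.append((train, val))
    return splits
```

Averaging validation metrics over all k splits gives a steadier estimate than a single validation set, which matters when a few minority-class scans can swing the score.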

4.3 F1-Score

The F1-score, the harmonic mean of precision and recall, measures how well the model balances the two when detecting brain tumors (Figure 6). The training F1-score improves steadily as the model categorizes tumors more accurately. The validation F1-score fluctuates unpredictably, because the model struggles with new data, particularly on misclassified cases and under-represented classes. Weighting classes differently or improving the class representation in the dataset can resolve this issue.

Figure 6: F1-score trends across epochs.

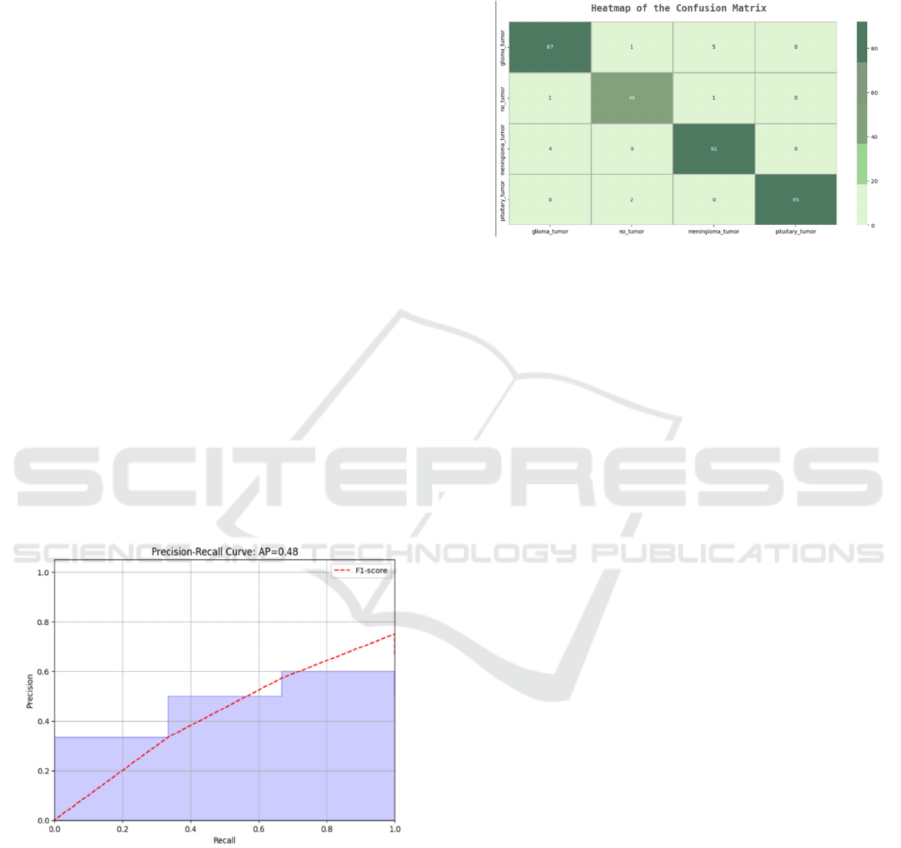
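Weighting classes differently, as suggested above, is often done with inverse-frequency weights n/(K·n_c), where n is the number of samples, K the number of classes, and n_c the count of class c; this is the same heuristic scikit-learn uses for `class_weight="balanced"`. The sketch applies those weights in a cross-entropy loss normalized by the total weight. The function names are hypothetical, and the paper does not state which weighting scheme, if any, it used.

```python
import math
from collections import Counter
from typing import Dict, Sequence


def balanced_class_weights(labels: Sequence[int]) -> Dict[int, float]:
    """Inverse-frequency weights n_samples / (n_classes * n_c)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}


def weighted_cross_entropy(probs: Sequence[Sequence[float]], labels: Sequence[int],
                           weights: Dict[int, float], eps: float = 1e-12) -> float:
    """Weighted mean of -log p(true class); rare classes count more per sample."""
    total = sum(weights[y] * -math.log(max(p[y], eps)) for p, y in zip(probs, labels))
    return total / sum(weights[y] for y in labels)
```

With three non-tumor scans and one tumor scan, the tumor sample receives weight 2.0 and each non-tumor sample about 0.67, so a missed tumor costs three times as much as a missed non-tumor case.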

4.4 Confusion Matrix Analysis

A confusion matrix (Figure 7) evaluates classification accuracy by reporting the true positives, true negatives, false positives, and false negatives for each tumor category. Missed tumors are especially critical in medical diagnostics: a high number of false negatives means the model fails to detect cases that are actually positive. Rebalancing under-represented tumor classes in the dataset and applying cost-sensitive learning techniques would improve classification results.

Figure 7: Heatmap of confusion matrix.
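The counts read off a confusion matrix, and the precision, recall, and F1-score derived from them, can be computed as below. The convention that rows are true classes and columns are predicted classes, the function names, and the toy labels are assumptions for illustration.

```python
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """Counts with rows = true class and columns = predicted class."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_metrics(cm: List[List[int]]) -> List[Dict[str, float]]:
    """Precision, recall and F1 for each class, treating it as the positive class."""
    n = len(cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # true class c, predicted otherwise (missed)
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, actually another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append({"tp": tp, "fp": fp, "fn": fn,
                        "precision": precision, "recall": recall, "f1": f1})
    return results


if __name__ == "__main__":
    # Toy labels: 0 = no tumor, 1 = tumor.
    y_true = [1, 1, 1, 1, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
    cm = confusion_matrix(y_true, y_pred, 2)
    print(cm)                        # [[3, 1], [1, 3]]
    print(per_class_metrics(cm)[1])  # tumor class: one missed tumor (fn = 1)
```

For the tumor class, recall (sensitivity) is the metric most directly hurt by false negatives, so it is worth reporting alongside overall accuracy.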

5 CONCLUSIONS

In this study, Convolutional Neural Networks (CNNs) served as the key method for detecting brain tumors in MRI and CT scans, with the aim of improving early diagnosis and treatment planning. Using U-Net and ResNet architectures, the CNN models achieved high precision and robustness in analyzing tumor features. Model performance improved with high-quality training data and appropriate preprocessing. Data augmentation by rotation, scaling, and mirroring was used to enrich the dataset and reduce overfitting. The analysis shows that CNN technology has strong potential for automated tumor detection in medical imaging.

REFERENCES

Akkus, Zeynettin, et al. "Deep learning for brain MRI segmentation: State of the art and future directions." Journal of digital imaging 30.4 (2017): 449-459.

Bakas, Spyridon, et al. "Advancing the cancer genome atlas glioma MRI collections with expert segmentation labels and radiomic features." Scientific data 4 (2017): 170117.

Chaddad, Ahmad, et al. "Radiomics in glioblastoma: current status and challenges facing clinical implementation." Frontiers in oncology 9 (2019): 374.

Dvořák, Petr, et al. "Comparison of brain tumor detection in magnetic resonance images using three artificial neural networks." International Journal of Innovative Computing, Information and Control 11.3 (2015): 771-784.

Egan, Timothy, et al. "Brain tumor segmentation using cascaded convolutional neural networks." 2019 IEEE Symposium Series on Computational Intelligence (SSCI). IEEE, 2019.

Hamghalam, M., et al. "A Comprehensive Review on Brain Tumor Classification and Segmentation Using Machine Learning and Deep Learning Techniques." 2021 3rd International Conference on Machine Learning, Big Data and Business Intelligence (MLBDBI). IEEE, 2021.

Havaei, Mohammad, et al. "Brain tumor segmentation with deep neural networks." Medical image analysis 35 (2017): 18-31.

Havaei, Mohammad, et al. "Hemis: Hetero-modal image segmentation." Medical Image Analysis 49 (2018): 94-108.

Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. "ImageNet classification with deep convolutional neural networks." Advances in neural information processing systems 25 (2012): 1097-1105.

Litjens, Geert, et al. "A survey on deep learning in medical image analysis." Medical image analysis 42 (2017): 60-88.

Mahmood, Qaiser, et al. "Deep learning for brain tumor classification." Computers in Biology and Medicine 111 (2019): 103345.

Menze, Bjoern H., et al. "The multimodal brain tumor image segmentation benchmark (BRATS)." IEEE transactions on medical imaging 34.10 (2015): 1993-2024.

Nascimento, Gabriel F., et al. "A review on brain tumor diagnosis for brain MRI using image processing techniques." Computer methods and programs in biomedicine 141 (2017): 89-97.

Parekh, Vishwa S., and Michael A. Jacobs. "Radiomics: a new application from established techniques." Expert review of precision medicine and drug development 2.5 (2017): 237-249.

Rathore, Saima, et al. "Review on MRI brain image segmentation methods." Magnetic resonance imaging 34.3 (2016): 530-538.

Shboul, Zeyad A., et al. "Deep learning in brain tumor classification: A comparative study." Clinical Neurology and Neurosurgery 196 (2020): 106024.

Tang, Zhenwei, et al. "Deep learning features for brain tumor classification using MRI images." Computational and mathematical methods in medicine (2019).

Zhang, Wenxing, et al. "Glioma grading on conventional MR images: a deep learning study with transfer learning." Frontiers in Neuroscience 13 (2019): 803.

Zhang, Xin, et al. "Deep learning-based classification and mutation prediction from histopathological images of hepatocellular carcinoma." Frontiers in genetics 10 (2019): 797.

Zhou, M., Scott, J., Chaudhury, B., Hall, L., Goldgof, D., and Yeom, K. W. "Deep learning with convolutional neural networks for brain tumor detection using MRI images." 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018). IEEE, 2018: 1172-1175.