AI-Powered Facial Expression Recognition for Real-Time Productivity Improvement

N. Ramadevi, A. Akhila, C. S. Sushmitha, S. Pravallika, K. Lahari and D. Bhavyasree

Department of Computer Science and Engineering (Data Science), Santhiram Engineering College, Nandyal, Andhra Pradesh, India

Keywords: Convolutional Neural Network (CNN), Facial Emotion Detection, Facial Expression Recognition, Real-Time Productivity Improvement.

Abstract: Imagine having a little helper at work, an AI-powered bot that's like a super observant friend. This bot can

actually see how you're feeling by looking at your face through the computer camera. It uses special tech to

understand if you're stressed, tired, really focused, happy, or maybe just not feeling it. When the bot picks up

on an emotion, it's ready to jump in with support. It has a whole collection of encouraging words and helpful

tips, kind of like a digital pep talk. So, if it sees you're stressed or tired, it might pop up with a calming message

or a suggestion to take a quick break. This way, you become more aware of your emotions and get the support

you need right when you need it. This system is designed to fit right into your workday without being

disruptive. It can help you and your managers see how everyone's doing emotionally over time, so you can

address any issues before they affect how well you work. By giving personalized encouragement, the bot

helps create a more positive and supportive atmosphere at work, which can lead to less stress, better focus,

and ultimately, higher productivity.

1 INTRODUCTION

1.1 Motivation

The motivation behind this research stems from the

compelling opportunity to enhance human well-being

and productivity in the workplace through intelligent

technology. Imagine a work environment where

individuals feel more supported and understood,

leading to reduced stress and increased focus. The

ability to accurately and efficiently recognize facial

expressions opens a door to creating AI assistants that

can proactively respond to emotional cues, offering

timely support and fostering a more positive and

productive atmosphere. Furthermore, the drive to

make this technology accessible and affordable for

widespread use fuels the focus on computational

efficiency. By overcoming the limitations of existing

systems, this research aims to unlock the potential of

facial expression recognition to create truly helpful

and empathetic AI solutions that can make a tangible

difference in people's daily work lives. Ultimately,

the motivation lies in building an "AI friend" that

contributes to a healthier, happier, and more efficient

workforce.

1.2 Problem Statement

The problem this research addresses is the need for

accurate, fast, and computationally efficient facial

expression recognition systems that can be practically

applied in real-world settings, particularly

workplaces. Existing facial expression recognition

technologies often suffer from limitations such as

requiring significant computing power, being too

slow for real-time applications, or lacking the

accuracy needed for reliable use. This research aims

to overcome these limitations by developing a

lightweight CNN-based system that can effectively

recognize emotions and contribute to improved

employee well-being and productivity, while also

considering crucial aspects of privacy and cost-

effectiveness for widespread adoption.

Ramadevi, N., Akhila, A., Sushmitha, C. S., Pravallika, S., Lahari, K. and Bhavyasree, D.
AI-Powered Facial Expression Recognition for Real-Time Productivity Improvement.
DOI: 10.5220/0013911000004919
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 4, pages 229-233
ISBN: 978-989-758-777-1
Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

1.3 Objectives

• Develop an accurate and fast facial expression recognition system: This was a core goal, focusing on the fundamental capability of the technology. The researchers aimed to create a system that could correctly identify a range of emotions from facial images with a high degree of certainty and do so quickly enough to be useful in real-time scenarios, like during a workday.

• Create a computationally efficient system for

broader accessibility: A key objective was to

design the system to run effectively on standard

computer hardware, rather than requiring

specialized and expensive equipment. This

focus on being "lightweight" is crucial for

making the technology practical and affordable

for widespread adoption in workplaces and

other settings.

• Design an AI tool to proactively support

employee well-being and productivity: The

research went beyond just recognizing

emotions; it aimed to use that information to

create a helpful workplace tool. The objective

was to build an AI assistant that could identify

signs of stress or fatigue and then offer timely

interventions, like suggesting breaks or

providing calming messages, ultimately aiming

to improve employee mental health and work

output.

• Offer a scalable and cost-effective solution

for practical implementation: The researchers

aimed to develop a technology that could be

easily implemented in various workplace

environments without significant overhead

costs or complex integration processes. This

implies a focus on making the system adaptable

and affordable for businesses of different sizes.

• Address critical privacy and ethical

considerations related to facial data:

Recognizing the sensitive nature of analyzing

facial expressions, a significant objective was to

acknowledge and emphasize the importance of

responsible data handling and user privacy. This

highlights an awareness of the ethical

implications of the technology and a

commitment to its responsible deployment.

2 RELATED WORKS

Ekman and Friesen (1971) established that facial expressions are recognized universally across cultures, providing a psychological basis for facial expression analysis and laying the groundwork for modern automated facial expression recognition systems.

Lawrence et al. (1997) introduced convolutional neural networks (CNNs) for face recognition and demonstrated their effectiveness in extracting facial features. This work played a crucial role in the adoption of deep learning techniques for facial analysis.

Shin et al. (2016) investigated CNN architectures and transfer learning for computer-aided detection in medical imaging, emphasizing the importance of dataset characteristics and feature extraction in deep learning models.

Georgescu et al. (2019) combined deep learning and handcrafted features to enhance facial expression recognition. Their approach improved classification accuracy by leveraging both automatically learned and manually designed features.

Du and Gao (2017) applied CNNs to image segmentation-based multi-focus image fusion, contributing to advances in image processing by improving the quality and clarity of fused images.

Uddin et al. (2017) proposed a facial expression recognition system using local direction-based robust features combined with a deep belief network (DBN). The approach improved feature extraction and classification accuracy by leveraging both handcrafted features and deep learning techniques.

Based on the literature reviewed above, this work proposes the following hypotheses:

1. A lightweight CNN model can efficiently

classify facial emotions in real-time with

high accuracy.

2. Feature extraction from key facial

components (eyes, eyebrows, mouth)

enhances recognition accuracy.

3. Preprocessing large-scale datasets reduces

noise and redundancy, improving model

performance.

4. Real-time emotion recognition in

workplaces helps monitor employee

engagement and well-being.

5. AI-powered emotion recognition enhances

decision-making and optimizes

productivity.

3 METHODOLOGY

Facial expression recognition is a crucial technology

that enables machines to understand human emotions.


Our AI-driven system employs a lightweight Convolutional Neural Network (CNN) to achieve real-time, high-accuracy emotion detection while remaining computationally efficient. The implementation uses a deep learning model to recognize facial expressions; its main components are summarized here in simple terms:

Algorithm Used: It uses a type of deep learning

called a Convolutional Neural Network (CNN).

CNNs are very good at processing images because

they can automatically learn to recognize features like

edges and shapes.
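To make the idea of an edge-detecting filter concrete, here is a minimal sketch in plain Python that slides one 3x3 kernel over a toy image. The kernel values, the toy image, and the name `convolve2d_valid` are illustrative assumptions; a trained CNN learns its own kernel values, and the paper does not list the layers of its model.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped before it is applied.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0.0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Hand-written vertical-edge kernel (Sobel-like), fixed purely for illustration.
VERTICAL_EDGE = [
    [-1.0, 0.0, 1.0],
    [-2.0, 0.0, 2.0],
    [-1.0, 0.0, 1.0],
]

# Toy 4x5 image: dark (0) on the left, bright (1) on the right.
toy_image = [
    [0.0, 0.0, 1.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0, 1.0],
]

response = convolve2d_valid(toy_image, VERTICAL_EDGE)
```

The response is large where the window straddles the dark-to-bright boundary and zero where the window covers a uniform region, which is the sense in which a convolutional filter "detects" an edge.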

Model Used: The model is loaded from a file called emotion.h5, a pre-trained model saved in the HDF5 (.h5) format used by Keras. It was already trained on a set of facial images so that it can predict emotions for new images.

Lightweight Model: The model is considered

lightweight because it has been optimized to run

quickly and use fewer computing resources. This is

especially useful for applications like web services

where fast, real-time predictions are needed. The

image size is reduced to 48x48 pixels and pixel values

are normalized, which helps keep the processing

efficient.
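Loading the saved model and running one prediction could look like the sketch below. Only the file name `emotion.h5` and the 48x48 input size come from the text; the single grey channel, the batch shape, and the helper names `load_emotion_model` and `predict_scores` are assumptions made for illustration. TensorFlow is imported inside the loader so the rest of the code can be exercised without it installed.

```python
from typing import List

MODEL_PATH = "emotion.h5"   # file name given in the text
INPUT_SHAPE = (48, 48, 1)   # 48x48 from the text; one grey channel is an assumption


def load_emotion_model(path: str = MODEL_PATH):
    """Load the saved Keras model from an HDF5 file."""
    # Imported lazily: TensorFlow is only needed when a real model is loaded.
    from tensorflow.keras.models import load_model

    # compile=False skips restoring the optimizer, which inference does not need.
    return load_model(path, compile=False)


def predict_scores(model, batch) -> List[float]:
    """Run one forward pass and return the class scores for the first image.

    `batch` is assumed to have shape (1, 48, 48, 1) with values in [0, 1].
    Any object exposing a Keras-style `predict` method works here.
    """
    scores = model.predict(batch)
    return [float(s) for s in scores[0]]
```

In use, `model = load_emotion_model()` would be called once at start-up and `predict_scores(model, batch)` once per captured frame, so the cost of reading the file is not paid on every prediction.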

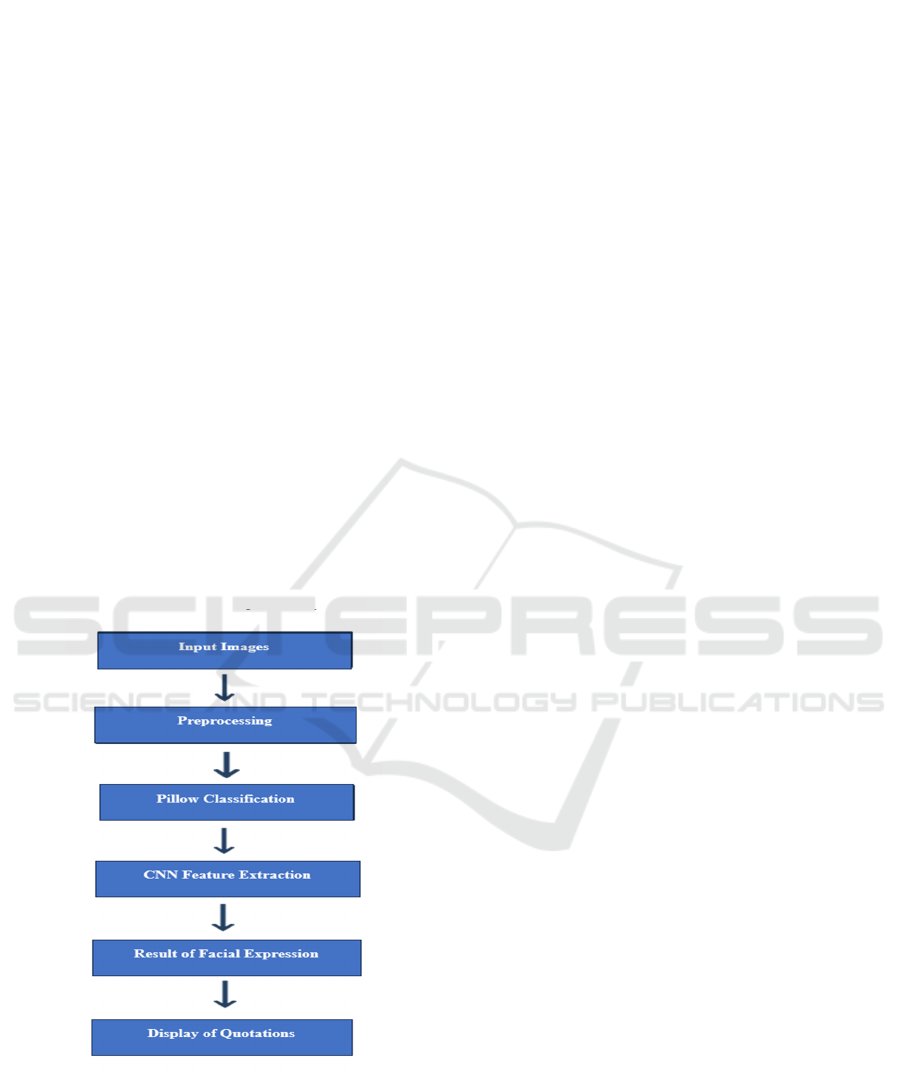

Figure 1 shows the operation flow of the lightweight CNN-based facial emotion recognition system.

Figure 1: Operation flow of the lightweight CNN-based facial emotion recognition system.

Input Images

• This stage involves capturing or receiving

images that contain human faces, which are

the subject of emotion analysis.

• The source of these images can vary,

including live camera feeds, uploaded files,

or stored datasets.

• The quality and characteristics of these input

images directly influence the accuracy of the

subsequent steps in the process.

Preprocessing

• Before feeding the images to the CNN, they

undergo preprocessing to ensure optimal

performance.

• This typically involves resizing the facial

region to a specific dimension (like 48x48

pixels) and normalizing the pixel values.

• Preprocessing helps standardize the input

and makes the emotion recognition task

more efficient for the CNN.
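The two preprocessing operations described above can be sketched without any dependencies, as follows. This is an illustration under assumptions: the input is taken to be an already cropped greyscale face given as rows of 0-255 integers, resizing uses nearest-neighbour sampling, and normalization divides by 255. The text states only that the image is resized to 48x48 and normalized; in practice an imaging library such as Pillow would typically perform the resize.

```python
from typing import List

TARGET_SIZE = 48  # side length in pixels, as stated in the text


def resize_nearest(gray: List[List[int]], size: int = TARGET_SIZE) -> List[List[int]]:
    """Resize a greyscale image (rows of 0-255 ints) to size x size
    using nearest-neighbour sampling."""
    src_h, src_w = len(gray), len(gray[0])
    return [
        [gray[r * src_h // size][c * src_w // size] for c in range(size)]
        for r in range(size)
    ]


def normalize(gray: List[List[int]]) -> List[List[float]]:
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return [[value / 255.0 for value in row] for row in gray]


def preprocess_face(gray: List[List[int]]) -> List[List[float]]:
    """Resize the cropped face region, then normalize it."""
    return normalize(resize_nearest(gray))


# Toy 96x96 "face": top half black, bottom half white.
toy_face = [[0] * 96 for _ in range(48)] + [[255] * 96 for _ in range(48)]
prepared = preprocess_face(toy_face)
```

Whatever the size of the captured face region, the CNN always receives a 48x48 grid of values between 0 and 1, which keeps the per-frame cost fixed.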

Pillow Classification

• Pillow is used for image loading and manipulation before the image is passed to the CNN.

• Despite the name of this stage, Pillow does not perform the classification itself; the CNN does.

• This stage therefore covers handing the prepared image to the CNN, which classifies the extracted features to predict the emotion.

CNN Feature Extraction

• The core of the emotion recognition process

lies in the Convolutional Neural Network

(CNN).

• This part of the system automatically learns

and extracts relevant features from the pre-

processed facial image.

• These features represent patterns and

characteristics in the face that are indicative

of different emotional states.

Result of Facial Expression

• This stage represents the outcome of the

CNN's analysis, which is the identified

emotion present in the facial image.

• The result is typically a label indicating the

predicted emotion, such as "Happy," "Sad,"

"Angry," or "Neutral."

• This output provides the system's

interpretation of the emotional state

conveyed by the input face.
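Turning the CNN's output into a label can be sketched as picking the class with the highest score. The label tuple below and its order are assumptions: the text names "Happy," "Sad," "Angry," and "Neutral" as examples, but the actual set and order depend on how the model was trained.

```python
from typing import Sequence, Tuple

# Assumed label order; it must match the order of the model's output units.
EMOTION_LABELS = ("Angry", "Happy", "Neutral", "Sad")


def top_emotion(
    scores: Sequence[float],
    labels: Sequence[str] = EMOTION_LABELS,
) -> Tuple[str, float]:
    """Return the label with the highest score, together with that score."""
    if len(scores) != len(labels):
        raise ValueError("expected one score per label")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best], scores[best]


# Hypothetical score vector for one frame.
label, score = top_emotion([0.05, 0.10, 0.15, 0.70])
```

Returning the score alongside the label lets a caller ignore low-confidence predictions rather than reacting to every frame.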

Display of Quotations

• This step describes an additional

functionality of the system, where relevant


quotations are displayed based on the

detected emotion.

• The system maintains a collection of quotations categorized by emotion.

• Depending on the recognized facial

expression, the system selects and presents a

quotation that is appropriate to that

emotional state, potentially offering support

or encouragement.
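A quotation lookup of this kind can be sketched as a dictionary keyed by emotion label. The messages below are placeholders written for this example, not the authors' quotation set, and the names `QUOTES_BY_EMOTION`, `DEFAULT_QUOTE`, and `pick_quote` are hypothetical.

```python
import random
from typing import Dict, List, Optional

# Placeholder messages for illustration only.
QUOTES_BY_EMOTION: Dict[str, List[str]] = {
    "Sad": [
        "Tough moments pass. A short walk might help.",
        "Be kind to yourself today.",
    ],
    "Angry": [
        "Pause and take three slow breaths.",
    ],
    "Happy": [
        "Great energy. Keep it going!",
    ],
}

# Shown when no quotations are stored for the detected emotion.
DEFAULT_QUOTE = "Remember to take a short break now and then."


def pick_quote(emotion: str, rng: Optional[random.Random] = None) -> str:
    """Pick one stored quotation for `emotion`, or the default if none exist."""
    options = QUOTES_BY_EMOTION.get(emotion)
    if not options:
        return DEFAULT_QUOTE
    chooser = rng if rng is not None else random
    return chooser.choice(options)
```

Passing a seeded `random.Random` makes the selection repeatable for testing, while the default uses the module-level generator so users see varied messages.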

4 RESULTS AND EVALUATION

The research successfully created a facial expression

recognition system that's good at identifying

emotions quickly and accurately, even on regular

computers. This is a big deal because it means this

technology could be used in many workplaces

without needing expensive equipment. The system

aims to be like a helpful AI friend that notices when

you're stressed or tired by looking at your face and

then offers support, like a calming message or a

reminder to take a break. The idea is that by

understanding and responding to employees'

emotions, this system can make the workplace a

better environment, reducing stress and boosting how

well people work. While there's excitement about

using this in real-world settings, the researchers also

point out that it's really important to handle people's

privacy and data carefully. Overall, the project offers

a promising way to use AI to improve well-being and

productivity at work in a way that's efficient. Figure 2 shows an example output in which the system labels the detected face as sad.

Figure 2: Facial expression recognition sad emotion output.

5 DISCUSSION

This research introduces an efficient AI system for

recognizing facial expressions in real-time. It utilizes

a CNN model optimized for accuracy and speed, even

on less powerful devices. The system aims to be a

workplace assistant, detecting emotions like stress or

fatigue via camera. Upon detecting negative

emotions, it offers supportive interventions such as

calming messages. This technology can provide

insights into employee well-being over time. The goal

is to create a more supportive and productive work

environment. The system is designed to be scalable

and cost-effective for widespread use. Privacy and

responsible data handling are crucial considerations

for implementation. This work advances facial

emotion recognition technology for practical

applications. Ultimately, it seeks to improve

workplace emotional health and productivity.
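One way the "insights over time" mentioned above could be derived is by counting predicted labels per day. The sketch below assumes the system logs only a timestamp and a label for each detection, with no images stored; that logging format and the name `daily_emotion_counts` are assumptions, not details from the paper.

```python
from collections import Counter, defaultdict
from datetime import datetime
from typing import Dict, Iterable, Tuple

Detection = Tuple[datetime, str]  # (timestamp, predicted emotion label)


def daily_emotion_counts(detections: Iterable[Detection]) -> Dict[str, Counter]:
    """Group detections by calendar day and count each emotion label."""
    per_day: Dict[str, Counter] = defaultdict(Counter)
    for timestamp, emotion in detections:
        per_day[timestamp.date().isoformat()][emotion] += 1
    return dict(per_day)


# Hypothetical log entries for two working days.
log = [
    (datetime(2025, 1, 6, 9, 0), "Happy"),
    (datetime(2025, 1, 6, 15, 30), "Sad"),
    (datetime(2025, 1, 6, 16, 0), "Sad"),
    (datetime(2025, 1, 7, 10, 0), "Neutral"),
]
counts = daily_emotion_counts(log)
```

Keeping only aggregated labels rather than raw frames is one way to limit the amount of sensitive facial data retained, in line with the privacy considerations raised in this section.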

6 CONCLUSIONS

Our research has developed a facial expression

recognition system that prioritizes accuracy, speed,

and efficiency in terms of computational resources.

This system utilizes a sophisticated computer model

based on a Convolutional Neural Network (CNN),

which excels at identifying crucial facial features and

interpreting emotions more effectively than

traditional methods. A key advantage of our system is

its ability to operate on devices with limited

processing power, making it a cost-effective and

accessible solution for various applications. While

this technology holds significant promise for

enhancing workplace environments and security

systems, it's crucial to address privacy concerns and

ensure responsible data handling. Ultimately, our

work contributes to the advancement of facial

emotion recognition technology by providing a

scalable, efficient, and affordable solution. The goal

is to create an AI tool that can act as a helpful assistant

in the workplace, capable of detecting signs of stress

or fatigue through facial analysis and offering support

such as calming messages or suggestions for breaks.

We believe this technology can improve emotional

well-being, foster a more supportive work

environment, and ultimately boost productivity.


REFERENCES

C. Szegedy, S. Ioffe, and V. Vanhoucke, ``Inception-v4,

inception-ResNet and the impact of residual

connections on learning,'' in Proc. 31st AAAI Conf.

Artif. Intell., 2016, p. 1.

C. Du and S. Gao, ``Image segmentation-based multi-focus

image fusion through multi-scale convolutional neural

network,'' IEEE Access, vol. 5, pp. 15750-15761, 2017.

D. Amodei et al., ``Deep speech 2: End-to-end speech

recognition in english and mandarin,'' in Proc. Int. Conf.

Mach. Learn., Jun. 2016, pp. 173-182.

D. Hazarika, S. Gorantla, S. Poria, and R. Zimmermann,

``Self-attentive feature-level fusion for multimodal

emotion detection,'' in Proc. IEEE Conf. Multimedia

Inf. Process. Retr. (MIPR), Apr. 2018, pp. 196-201.

D. Kollias, P. Tzirakis, M. A. Nicolaou, A. Papaioannou,

G. Zhao, B. Schuller, I. Kotsia, and S. Zafeiriou, ``Deep

affect prediction in-the-wild: Aff-wild database and challenge, deep architectures, and beyond,'' Int. J. Comput. Vis., vol. 127, pp. 1-23, Jun. 2019.

D. Kollias, A. Schulc, E. Hajiyev, and S. Zafeiriou,

``Analysing affective behavior in the first ABAW 2020

competition,'' 2020, arXiv:2001.11409. [Online].

Available: http://arxiv.org/abs/2001.11409

H.-C. Shin, H. R. Roth, M. Gao, L. Lu, Z. Xu, I. Nogues, J.

Yao, D. Mollura, and R. M. Summers, ``Deep

convolutional neural networks for computer-aided

detection: CNN architectures, dataset characteristics

and transfer learning,'' IEEE Trans. Med. Imag., vol. 35,

no. 5, pp. 1285-1298, May 2016.

K.-Y. Huang, C.-H. Wu, Q.-B. Hong, M.-H. Su, and Y.-H.

Chen, ``Speech emotion recognition using deep neural

network considering verbal and nonverbal speech

sounds,'' in Proc. IEEE Int. Conf. Acoust., Speech

Signal Process. (ICASSP), May 2019, pp. 5866-5870.

M. E. Kret, K. Roelofs, J. J. Stekelenburg, and B. de Gelder,

``Emotional signals from faces, bodies and scenes

influence observers' face expressions, fixations and

pupil-size,'' Frontiers Human Neurosci., vol. 7, p. 810,

Dec. 2013.

M. Z. Uddin, M. M. Hassan, A. Almogren, A. Alamri, M.

Alrubaian, and G. Fortino, ``Facial expression

recognition utilizing local direction-based robust

features and deep belief network,'' IEEE Access, vol. 5,

pp. 4525-4536, 2017.

M. Z. Uddin, W. Khaksar, and J. Torresen, ``Facial

expression recognition using salient features and

convolutional neural network,'' IEEE Access, vol. 5, pp.

26146-26161, 2017.

M. R. Koujan, A. Akram, P. McCool, J. Westerfeld, D.

Wilson, K. Dhaliwal, S. McLaughlin, and A.

Perperidis, ``Multi-class classification of pulmonary

endomicroscopic images,'' in Proc. IEEE 15th Int.

Symp. Biomed. Imag. (ISBI), Apr. 2018, pp. 1574-1577.

M.-I. Georgescu, R. T. Ionescu, and M. Popescu, ``Local

learning with deep and handcrafted features for facial

expression recognition,'' IEEE Access, vol. 7, pp.

64827-64836, 2019.

O. Leonovych, M. R. Koujan, A. Akram, J. Westerfeld, D.

Wilson, K. Dhaliwal, S. McLaughlin, and A.

Perperidis, ``Texture descriptors for classifying sparse,

irregularly sampled optical endomicroscopy images,'' in

Proc. Annu. Conf. Med. Image Understand. Anal.

Cham, Switzerland: Springer, 2018, pp. 165-176.

P. Ekman and W. V. Friesen, ``Constants across cultures in

the face and emotion,'' J. Personality Social Psychol.,

vol. 17, no. 2, pp. 124-129, 1971.

S. Lawrence, C. L. Giles, A. Chung Tsoi, and A. D. Back,

``Face recognition: A convolutional neural-network

approach,'' IEEE Trans. Neural Netw., vol. 8, no. 1, pp.

98-113, Jan. 1997.

T. Chang, G. Wen, Y. Hu, and J. Ma, ``Facial expression

recognition based on complexity perception

classification algorithm,'' 2018, arXiv:1803.00185.

[Online]. Available: http://arxiv.org/abs/1803.00185

W. Y. Choi, K. Y. Song, and C. W. Lee, ``Convolutional

attention networks for multimodal emotion recognition

from speech and text data,'' in Proc. Grand Challenge

Workshop Hum. Multimodal Lang. (Challenge-HML),

2018, pp. 28-34.
