Performance Comparison of Deep Learning-Based Classification of Skin Cancer

D. Gowthami, C. Gowri Shankar, K. Kathiravan, K. Dhanush, K. M. Dharshni and C. Lavanya

Department of Electronics and Communication Engineering, KSR College of Engineering, Thiruchengode, Tamil Nadu, India

Keywords: Skin Cancer Classification, Deep Learning, NASNet, CNN.

Abstract: Skin cancer is among the most prevalent and dangerous diseases globally, necessitating accurate and efficient classification methods for early detection. Deep learning methodologies have demonstrated significant potential in the automated identification of skin cancer by enhancing diagnostic precision and minimising human error. This work addresses Deep Learning (DL)-based skin cancer classification: it proposes the NASNet DL model and compares it with conventional Convolutional Neural Networks (CNNs). NASNet is a neural architecture designed through automated architecture search that optimizes feature extraction and classification accuracy while maintaining computational efficiency. Experimental results indicate that NASNet surpasses most traditional CNNs in classification precision, recall, and F1-score. Consequently, NASNet is a promising candidate for real-world medical applications, where it could support earlier and more accurate patient diagnoses.

1 INTRODUCTION

Millions of people are diagnosed with skin cancer every year. The disease occurs when skin cells grow or multiply abnormally, usually because of intense exposure to ultraviolet radiation from the sun or from artificial sources such as tanning beds. It is crucial to identify skin cancer in time and classify it correctly to provide the best treatment and improve patients' chances of survival. The epidermis is the main tissue of the cutaneous membrane and acts as the outer covering of the body. It plays a significant role in the immune system's defense against infections and in preventing excessive water loss. It provides a flexible mechanical and physical barrier against external assaults, including harmful chemicals, pathogenic bacteria, ultraviolet (UV) radiation, and mechanical forces. Moreover, it modulates immunological, thermoregulatory, and sensory responses. The body's living cells grow, divide, and eventually die, and the cell cycle constantly replaces dead cells. In contrast, uncontrolled cell division and the growth of abnormal cells cause cancer. Skin cancer originates in the skin and is caused by cells that develop atypically and are likely to spread to other regions of the body. Skin cancer can be divided into three main categories: Basal Cell Carcinoma (BCC), Melanoma, and Squamous Cell Carcinoma (SCC).

In dermatology, skin cancer classification is an important task that involves accurately distinguishing malignant from benign skin lesions to allow early detection and treatment. Since skin cancers are increasingly common worldwide, effective classification systems are needed to improve patients' chances of survival and to reduce healthcare costs. Traditional methods of diagnosing skin cancer include clinical examination, dermoscopy, and histological testing. However, these methods are often time-consuming, require the expertise of trained dermatologists, and are prone to human error. Automated skin cancer classification using Deep Learning (DL) has become a feasible solution to overcome these limitations.

DL models, specifically CNNs, have proven effective in analysing medical images and in classifying different types of skin cancer. Newer designs such as NASNet, the Neural Architecture Search Network, have recently been developed to improve classification performance by optimizing feature extraction and model efficiency.

Gowthami, D., Shankar, C. G., Kathiravan, K., Dhanush, K., Dharshni, K. M. and Lavanya, C.

Performance Comparison of Deep Learning-Based Classification of Skin Cancer.

DOI: 10.5220/0013909400004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 4, pages 146-152

ISBN: 978-989-758-777-1

Proceedings Copyright © 2026 by SCITEPRESS – Science and Technology Publications, Lda.

Automated skin cancer classification accelerates the diagnostic process and provides a reliable, non-invasive, and cost-effective method for early detection. Ongoing breakthroughs in AI and deep learning are anticipated to significantly assist dermatologists and enhance patient care.

2 LITERATURE REVIEW

In 2021, Chu et al. proposed two levels of fairness for weight-sharing neural architecture search: expectation fairness and strict fairness. These constraints are intended to reduce supernet bias and improve the reliability of model evaluation. Both were reported to perform better than existing unfair methods, in which every sampled model is back-propagated and its parameters are updated directly. Strict fairness performs best when each supernet step combines several back-propagation operations (BPs) with a single parameter update.

Res-Unet, a combination of the pre-trained CNNs ResNet and UNet, was introduced by Zafar et al. (2020) to detect lesion boundaries and thereby improve classification performance. The proposed solution employed computer vision-based supervised learning methods. The model was trained and validated on the ISIC-2017 and PH2 datasets to accurately detect, segment, and classify skin tumours. The Jaccard index was used to measure how closely the predicted lesion regions matched the reference annotations. Aziz et al. (2020) extracted deep features from a pre-trained AlexNet network and classified them with an SVM. This approach yielded accurate tumour classification.
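As an illustration of the overlap measure used to evaluate segmentation, the following minimal Python sketch computes the Jaccard index (intersection over union) between two binary lesion masks. The function name `jaccard_index` and the toy masks are illustrative assumptions and are not taken from Zafar et al.

```python
import numpy as np

def jaccard_index(mask_a, mask_b):
    """Intersection over union of two binary masks of equal shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        # Both masks are empty: treat them as identical.
        return 1.0
    return float(np.logical_and(a, b).sum() / union)

# Hypothetical 3x3 masks: 1 marks lesion pixels, 0 marks background.
predicted = np.array([[0, 1, 1],
                      [0, 1, 0],
                      [0, 0, 0]])
reference = np.array([[0, 1, 1],
                      [0, 1, 1],
                      [0, 0, 0]])

score = jaccard_index(predicted, reference)
print(f"Jaccard index: {score:.2f}")  # 3 shared pixels / 4 pixels in the union
```

A value of 1 means the two masks coincide exactly, and a value of 0 means they do not overlap at all.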

Arivuselvam et al. (2021) proposed an image analysis technique in which the data are split into training and testing sets and then classified with the SVM algorithm. Statistical measures are used to quantify how similar the features of two images are, which improves classification accuracy. Lesions are extracted and then normalized into symmetric Grey-Level Co-occurrence Matrices (GLCM) for texture analysis. A multi-class SVM classifier then compares the features of the test image with those of the data set to assign a class.
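To make the texture-analysis step concrete, the sketch below builds a normalized symmetric grey-level co-occurrence matrix for a tiny image patch and derives two common texture descriptors from it. It is a simplified illustration under stated assumptions: the helper names `symmetric_glcm` and `texture_features`, the single horizontal offset, and the three grey levels are hypothetical choices, not details reported by Arivuselvam et al.

```python
import numpy as np

def symmetric_glcm(image, levels, dx=1, dy=0):
    """Normalized symmetric GLCM for a non-negative pixel offset (dx, dy)."""
    img = np.asarray(image, dtype=int)
    rows, cols = img.shape
    # Pair each pixel with its neighbour at the given offset.
    src = img[:rows - dy, :cols - dx].ravel()
    dst = img[dy:, dx:].ravel()
    counts = np.zeros((levels, levels), dtype=float)
    np.add.at(counts, (src, dst), 1.0)   # accumulate co-occurrence counts
    counts = counts + counts.T           # count each pair in both directions
    return counts / counts.sum()         # convert counts to probabilities

def texture_features(p):
    """Contrast and angular second moment (ASM) of a normalized GLCM."""
    i, j = np.indices(p.shape)
    contrast = float(np.sum(p * (i - j) ** 2))
    asm = float(np.sum(p ** 2))  # some libraries report sqrt(ASM) as "energy"
    return contrast, asm

# Hypothetical 3x3 patch quantized to three grey levels (0, 1, 2).
patch = np.array([[0, 0, 1],
                  [1, 2, 2],
                  [0, 1, 2]])
p = symmetric_glcm(patch, levels=3)
contrast, asm = texture_features(p)
print(contrast, asm)
```

Feature vectors of this kind are what a classifier such as an SVM would receive as input in a pipeline like the one described.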

Houssein et al. (2020) proposed Lévy flight distribution (LFD), a metaheuristic method based on the Lévy flight for solving practical optimization problems. The LFD algorithm is modelled on the Lévy flight random walk, which it uses to explore large, unknown search spaces. A variety of optimisation benchmark problems were used to evaluate the method. The statistical simulation results indicated that LFD surpasses several prominent metaheuristic algorithms in the majority of tests.

Praveena et al. (2020) noted that skin cancer is a dangerous disease and a leading cause of death. It arises from abnormal growth of melanocytic cells. Because of genetic factors and exposure to ultraviolet radiation, melanoma appears on the skin as brown or black in colour. Early diagnosis can cure this cancer completely. The conventional way to detect skin cancer is biopsy, which is invasive and painful, and the laboratory testing it requires is time-consuming. To address these issues, a computer-aided approach to skin cancer diagnosis was developed. The proposed system detects skin cancer in four stages. First, dermoscopy is used to capture the skin image. The image is then pre-processed. After pre-processing, it is segmented, and novel features are extracted from the segmented lesion. Finally, these features are passed to a supervised classifier, an SVM, which labels the image as normal or melanoma-affected skin.

Arivuselvam et al. (2021) described cancer as one of the most dangerous existing diseases, caused mainly by genetic instability arising from multiple molecular alterations. Among the various types of cancer, skin cancer is one of the most common. Skin cancer detection technology is broadly divided into four basic components, starting with the collection of a dermoscopic image data set and image pre-processing, which includes hair removal, noise removal, resizing, sharpening, and contrast stretching of the input data.

Priya Choudhary et al. (2022) observed that increasingly stressful living conditions give rise to several health problems, among the most dangerous of which is cancer, which must be detected at an early stage to be cured. Their paper examines dermoscopic images as diagnostic tools for detecting skin cancer lesions, with the ultimate aim of reducing melanoma-induced mortality. They present a segmentation technique for the automated skin lesion diagnosis pipeline and a fast, fully automatic algorithm for detecting skin cancer in dermoscopic images, and they discuss the problems identified in earlier work. The acquired skin images are pre-processed with a median filter and segmented using edge-based segmentation, morphological segmentation, and K-means. The statistical features (mean and standard deviation) and the texture features (contrast and energy) are computed for all segmented skin lesion images. A comparison of the three segmentation methods found that the K-means algorithm produces better results.
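The K-means segmentation idea can be sketched in a few lines of Python. The example below clusters pixel intensities of a synthetic greyscale image into two groups and then computes the mean and standard deviation of the darker (lesion) cluster. It is a minimal illustration: the function name `kmeans_segment`, the evenly spaced initial centres, and the synthetic image are assumptions made here, not the procedure of the cited paper.

```python
import numpy as np

def kmeans_segment(image, k=2, iterations=20):
    """Cluster pixel intensities with a basic 1-D k-means loop."""
    img = np.asarray(image, dtype=float)
    pixels = img.reshape(-1, 1)
    # Start with centres spread evenly between the darkest and brightest pixel.
    centres = np.linspace(pixels.min(), pixels.max(), k).reshape(-1, 1)
    for _ in range(iterations):
        # Assign every pixel to its nearest centre.
        distances = np.abs(pixels - centres.T)   # shape (n_pixels, k)
        labels = distances.argmin(axis=1)
        # Move each centre to the mean of the pixels assigned to it.
        for j in range(k):
            if np.any(labels == j):
                centres[j] = pixels[labels == j].mean()
    return labels.reshape(img.shape), centres.ravel()

# Synthetic 4x4 image: bright skin (200) with a dark 2x2 lesion (50).
image = np.full((4, 4), 200.0)
image[1:3, 1:3] = 50.0

labels, centres = kmeans_segment(image, k=2)
lesion_mask = labels == int(np.argmin(centres))   # darker cluster = lesion

# Simple statistical features of the segmented region.
lesion_mean = float(image[lesion_mask].mean())
lesion_std = float(image[lesion_mask].std())
print(int(lesion_mask.sum()), lesion_mean, lesion_std)
```

Real dermoscopic images would normally be clustered on colour rather than a single intensity channel, but the assignment and update steps stay the same.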

Kavitha et al. (2020) noted that, because of the growing complexity of human perception and its subjectivity, dermatological disorders remain among the greatest clinical challenges. Melanocytic cancer has recently become one of the deadliest diseases, and dermatologists need a computer-aided system that can detect it at an early stage. Their paper surveys readily available image processing techniques for melanoma detection, since image processing strongly influences how well diseases can be detected and classified from digitally acquired images. It reviews the available non-invasive techniques for automated image analysis, compares the classification techniques used to diagnose skin lesions, and describes the corresponding findings.

3 SYSTEM METHODOLOGY

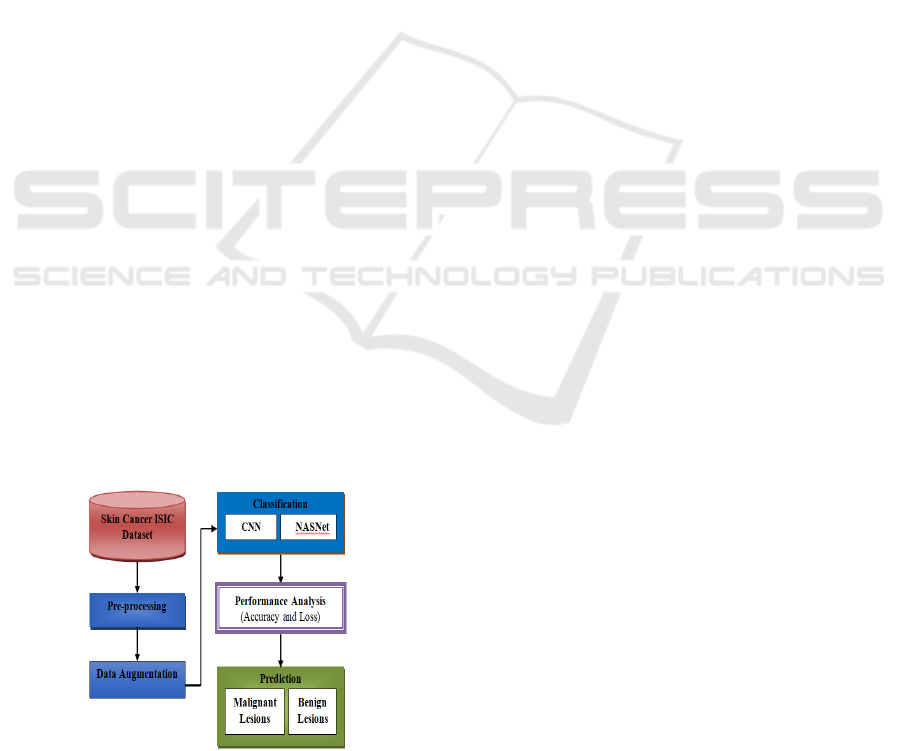

Figure 1: System methodology.

Figure 1 illustrates the proposed system methodology for skin cancer classification.

3.1 Pre-Processing

Pre-processing of ISIC images for skin cancer classification involves essential steps to enhance image quality and improve classification accuracy. First, images are resized to a standard resolution (e.g., 224×224) and normalized to ensure uniform input for deep learning models such as NASNet. Noise reduction techniques, such as median filtering, are applied to suppress unwanted artifacts such as hair, reflections, and uneven lighting. Additionally, color normalization is performed to keep the color distribution consistent across images, which improves lesion feature extraction. These pre-processing steps enhance the dermoscopic images, enabling better feature representation and more precise skin cancer classification.
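The pre-processing steps described in this subsection can be sketched with NumPy alone. The code below is a minimal illustration, assuming nearest-neighbour resizing, a 3×3 median filter, scaling to the range [0, 1], and per-channel standardization as the color normalization. The helper names (`resize_nearest`, `median_filter`, `preprocess`) and these specific choices are assumptions made for the example; the paper does not specify its exact implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def resize_nearest(image, size=(224, 224)):
    """Resize an H x W x C image with nearest-neighbour sampling."""
    h, w = image.shape[:2]
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return image[rows[:, None], cols]

def median_filter(image, k=3):
    """Apply a k x k median filter to each channel (edges are replicated)."""
    pad = k // 2
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    windows = sliding_window_view(padded, (k, k), axis=(0, 1))
    return np.median(windows, axis=(-2, -1))

def preprocess(image, size=(224, 224)):
    """Resize, denoise, scale to [0, 1], then standardize each color channel."""
    resized = resize_nearest(np.asarray(image), size)
    denoised = median_filter(resized.astype(np.float32))
    scaled = denoised / 255.0
    mean = scaled.mean(axis=(0, 1), keepdims=True)
    std = scaled.std(axis=(0, 1), keepdims=True) + 1e-7  # avoid division by zero
    return (scaled - mean) / std

# Random stand-in for a dermoscopic RGB image (not real ISIC data).
rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(300, 400, 3), dtype=np.uint8)
prepared = preprocess(raw)
print(prepared.shape)
```

Hair removal in practice usually needs a dedicated method; the median filter here only suppresses small, isolated artifacts.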

3.2 Data Augmentation

After pre-processing, data augmentation techniques are applied to the ISIC images to improve model generalization and thereby increase the precision of skin cancer classification. Common augmentation techniques include rotation, flipping, scaling, and cropping, which increase the diversity of lesion orientations and sizes. Contrast and brightness adjustments help the model adapt to different lighting conditions, while Gaussian noise and blurring emulate the variations found in real-world images. Additionally, elastic transformations and affine distortions slightly alter lesion geometry without changing the diagnostic features of the lesions. This type of augmentation reduces the effect of class imbalance, limits overfitting, and improves the robustness of deep learning models such as NASNet in diagnosing skin cancer.
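A few of the augmentations listed above can be sketched as follows. This is a simplified example, assuming square images with values in [0, 1]; the function name `augment` and the parameter ranges (flip probability, contrast and brightness limits, noise level) are hypothetical values chosen for illustration. Scaling, cropping, blurring, and elastic transformations are omitted for brevity.

```python
import numpy as np

def augment(image, rng):
    """Return a randomly flipped, rotated, re-lit, and noised copy of an image."""
    out = np.asarray(image, dtype=np.float32)
    if rng.random() < 0.5:
        out = out[:, ::-1]                  # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1]                     # vertical flip
    out = np.rot90(out, k=int(rng.integers(0, 4)))  # rotate by 0/90/180/270 degrees
    contrast = rng.uniform(0.8, 1.2)        # assumed contrast range
    brightness = rng.uniform(-0.1, 0.1)     # assumed brightness shift
    out = (out - 0.5) * contrast + 0.5 + brightness
    out = out + rng.normal(0.0, 0.02, size=out.shape)  # mild Gaussian noise
    return np.clip(out, 0.0, 1.0).astype(np.float32)

# Random stand-in for a pre-processed 224x224 RGB image.
rng = np.random.default_rng(42)
image = rng.random((224, 224, 3))
batch = [augment(image, rng) for _ in range(4)]
print(len(batch), batch[0].shape)
```

Each call produces a different variant of the same lesion image, which is how augmentation enlarges the effective training set.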

3.3 Classification

Deep learning models, especially Convolutional Neural Networks (CNNs), are commonly used to classify skin cancers because they automatically learn hierarchical features from dermoscopic images with little or no human involvement. Existing methods primarily use CNN models such as VGG16, ResNet, or Inception for skin lesion identification and classification. The performance of these models is impressive; however, their accuracy and efficiency fall short, particularly for complicated lesion types. To address this issue, this work adopts NASNet, the Neural Architecture Search Network. NASNet uses automated search to improve network design, enabling better feature extraction and achieving high classification accuracy while maintaining computational efficiency. This study finds that the NASNet model outperforms conventional CNN models in skin cancer diagnosis, making it a more effective method.

3.4 NASNet

The Neural Architecture Search Network (NASNet) is a state-of-the-art deep learning architecture created through automated neural architecture search, which improves accuracy as well as computational efficiency. NASNet has proven effective in image classification, making it a good candidate for skin cancer classification.

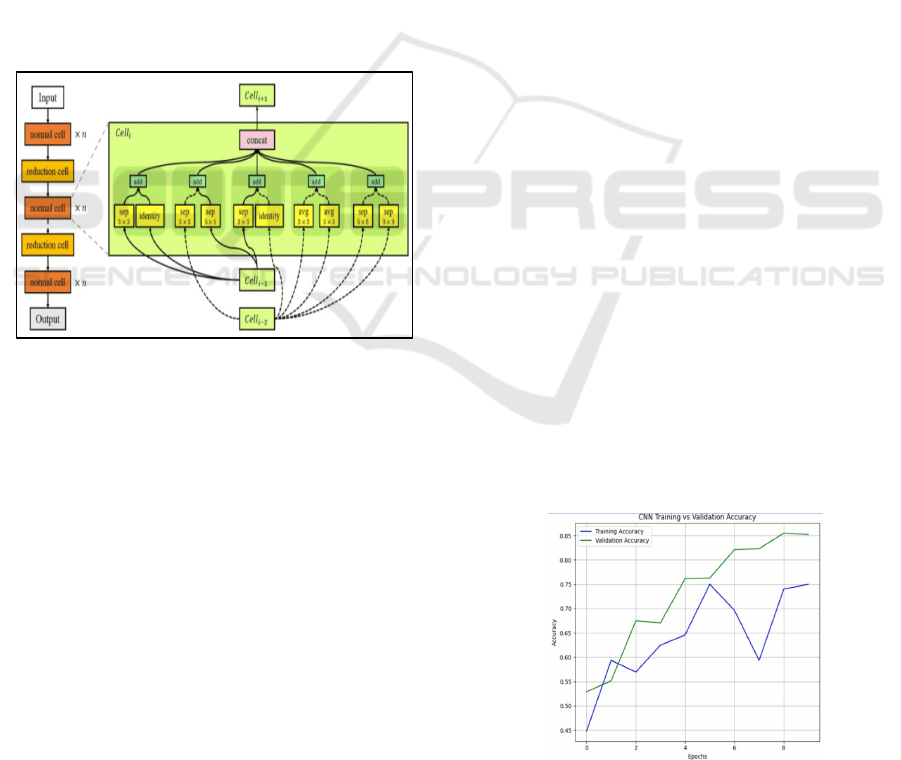

Figure 2: NASNet architecture diagram.

Figure 2 shows the architecture of NASNet, in which the layers and parameters of the network are optimized automatically to find the most efficient architecture. The search process looks for the best arrangement of convolutional layers, activation functions, pooling, and skip connections, producing a more robust architecture that can extract the important information from images and improve classification accuracy. NASNet learns hierarchical features of incoming images without human intervention. For skin cancer classification, it identifies essential features such as texture, colour patterns, and morphology that distinguish malignant lesions from benign lesions.

NASNet uses a modular architecture with several convolutional layers and cell blocks. The cells in NASNet are designed to capture both fine-grained and global information, which is crucial for differentiating various types of skin diseases. The architectural components comprise:

• Convolutional Layers for detecting edges, textures, and patterns.

• Max Pooling Layers for downsampling the image, preserving important features while reducing computational complexity.

• Batch Normalization for stabilizing and accelerating training.

• Dense Layers at the final stage to perform classification based on the learned features.

With transfer learning, the model reuses previously learned features (such as edges and textures) and adapts them to the specific task of detecting skin cancer. By training on the ISIC dataset, NASNet improves its ability to extract features and classify skin lesions. During training, NASNet minimizes a loss function (for example, categorical cross-entropy) and improves its performance through back-propagation and gradient descent.
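The training principle described here, minimizing categorical cross-entropy by gradient descent, can be illustrated on a small scale. The sketch below trains only a softmax classification layer on fixed feature vectors, which is roughly what happens to the final dense layer when a pre-trained backbone is kept frozen. It is not NASNet: the random features stand in for backbone outputs, and the names `train_head`, the learning rate, the number of epochs, and the three classes are all assumptions made for the example.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def categorical_cross_entropy(probs, one_hot):
    """Mean negative log-likelihood of the true classes."""
    return float(-np.mean(np.sum(one_hot * np.log(probs + 1e-12), axis=1)))

def train_head(features, one_hot, lr=0.05, epochs=300):
    """Fit a softmax layer with plain batch gradient descent."""
    n, d = features.shape
    weights = np.zeros((d, one_hot.shape[1]))
    bias = np.zeros(one_hot.shape[1])
    losses = []
    for _ in range(epochs):
        probs = softmax(features @ weights + bias)
        losses.append(categorical_cross_entropy(probs, one_hot))
        # Gradient of the mean cross-entropy with respect to the logits.
        grad_logits = (probs - one_hot) / n
        weights -= lr * features.T @ grad_logits
        bias -= lr * grad_logits.sum(axis=0)
    return weights, bias, losses

# Synthetic stand-in for backbone features: 150 samples, 8 features, 3 classes.
rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=150)
one_hot = np.eye(3)[labels]
features = rng.normal(size=(150, 8)) + one_hot @ rng.normal(size=(3, 8))

weights, bias, losses = train_head(features, one_hot)
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

With zero initial weights the first loss equals ln(3), the loss of a uniform guess over three classes, and it falls as the layer learns to separate the classes. In a full network, back-propagation extends the same gradient computation through every layer.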

4 EXPERIMENTAL RESULTS

The effectiveness of the proposed model is assessed using measures such as validation accuracy and loss.
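For reference, the sketch below shows how accuracy, together with the precision, recall, and F1-score mentioned in the abstract, can be computed from predicted and true labels in the binary case (1 for malignant, 0 for benign). The function name `classification_metrics` and the label vectors are made-up illustrations, not results from this study.

```python
import numpy as np

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))  # true positives
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))  # true negatives
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))  # false positives
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))  # false negatives
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Made-up labels for eight validation images (illustration only).
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this toy case there are three true positives, three true negatives, one false positive, and one false negative, so all four measures equal 0.75.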

4.1 Dataset Details

The ISIC dataset is a well-known benchmark for skin cancer classification and lesion segmentation. It consists of 25,000 annotated dermoscopic images, provided as high-resolution JPEG and PNG files. The images have been annotated by dermatologists and cover a wide range of skin conditions, including benign lesions and malignant types.

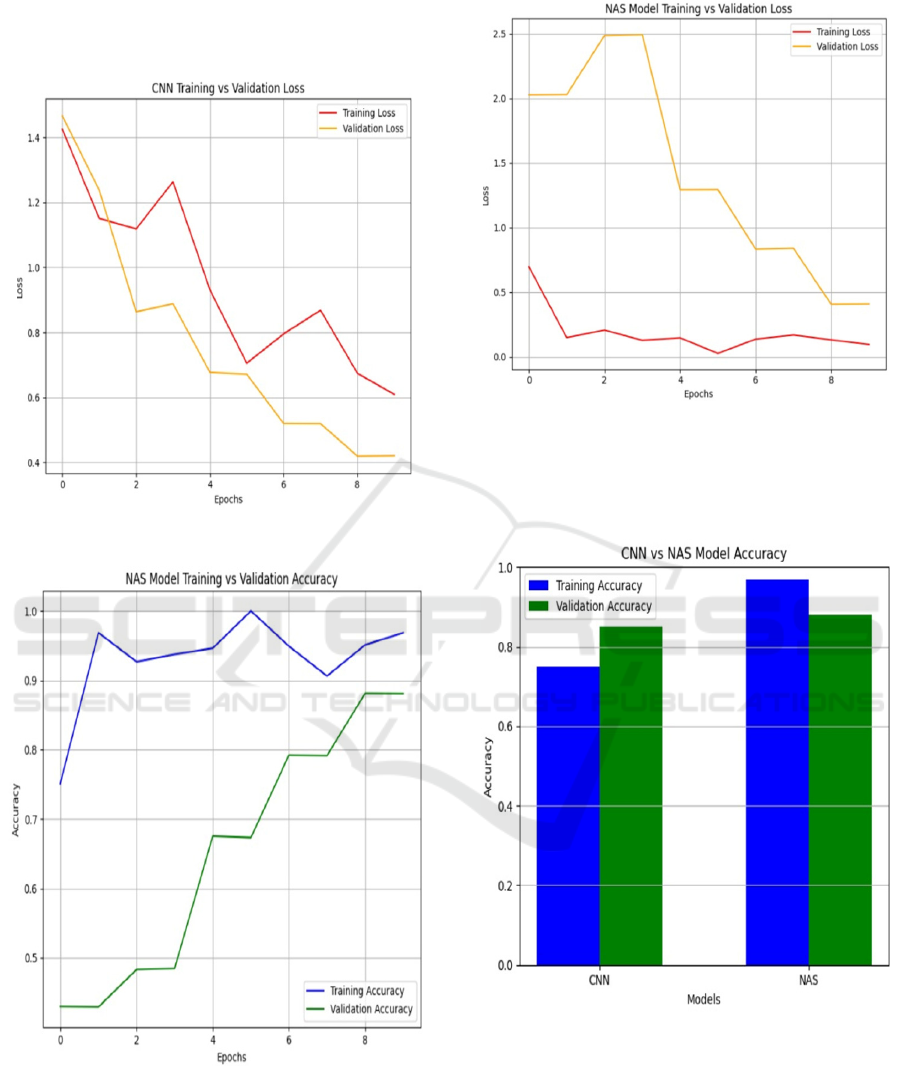

Figure 3: Accuracy of CNN training versus validation


Figure 3 presents the accuracy of CNN training versus validation, and Figure 4 shows the loss of CNN training versus validation.

Figure 4: Loss of CNN training versus validation.

Figure 5: Accuracy of NASNet training versus validation.

Figure 6: Loss of NASNet training versus validation.

Figure 5 presents the accuracy of NASNet training versus validation, and Figure 6 illustrates the loss of NASNet training versus validation.

Figure 7: Performance analysis – accuracy.

Figure 7 depicts the Performance Analysis that uses

Accuracy. NASNet is better than typical CNN models

since it automatically finds the ideal architecture for

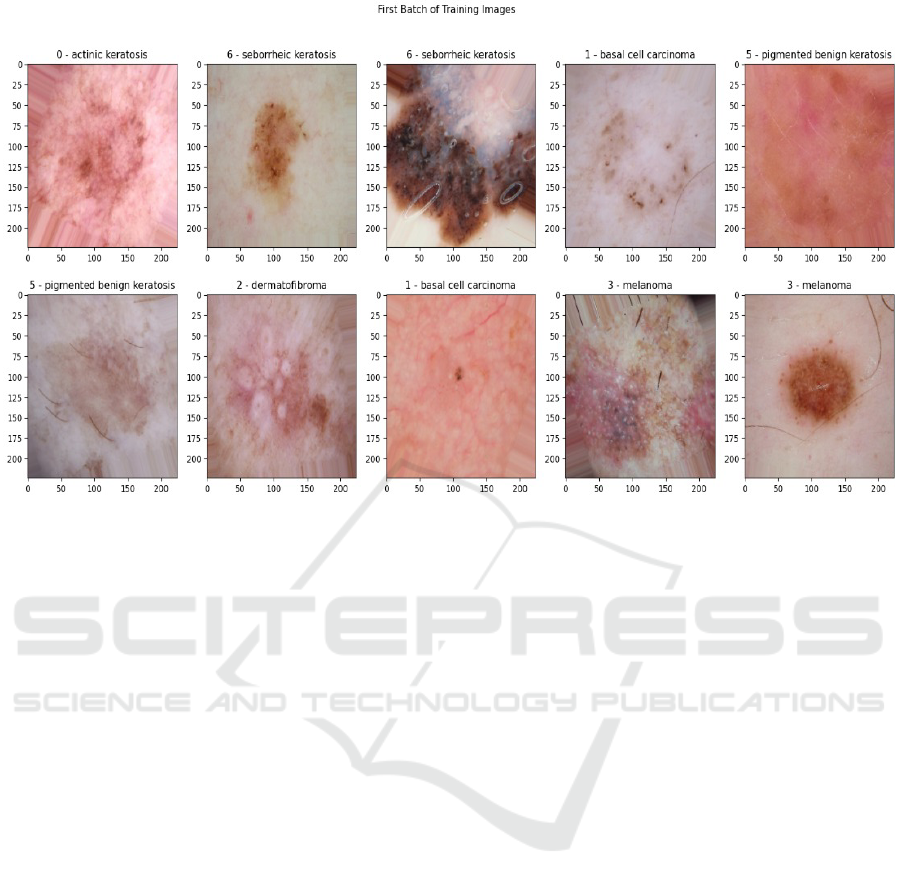

classifying skin cancer. Figure 8 gives Output skin

cancer classification image.


Figure 8: Sample output image.

5 CONCLUSIONS

This work highlights the efficacy of deep learning models in skin cancer classification, with NASNet's design delivering major improvements over standard CNNs. Owing to its automated search for the best design, it is highly effective for automated skin cancer detection, especially when trained on ISIC datasets. The study, which is based on validation accuracy and loss, shows that NASNet outperforms traditional CNN models in classification accuracy and efficiency. The automated architectural optimisation of NASNet improves its ability to capture complex features of skin lesions, leading to more accurate predictions and lower loss values during training. The findings show that NASNet is a suitable model for detecting skin cancer. It performs better and is more reliable than current CNN-based approaches, which can support better early diagnosis and patient outcomes.

REFERENCES

Ajmal, M., Khan, M. A., Akram, T., Alqahtani, A., Alhaisoni, M., Armghan, A., Althubiti, S. A. and Alenezi, F. (2023), ‘Bf2sknet: Best deep learning features fusion-assisted framework for multiclass skin lesion classification’, Neural Computing and Applications 35(30), 22115–22131.

Arivuselvam, B. et al. (2021), ‘Skin cancer detection and classification using svm classifier’, Turkish Journal of Computer and Mathematics Education (TURCOMAT) 12(13), 1863–1871.

Bassel, A., Abdulkareem, A. B., Alyasseri, Z. A. A., Sani, N. S. and Mohammed, H. J. (2022), ‘Automatic malignant and benign skin cancer classification using a hybrid deep learning approach’, Diagnostics 12(10), 2472.

Cassidy, B., Kendrick, C., Brodzicki, A., Jaworek-Korjakowska, J. and Yap, M. H. (2022), ‘Analysis of the isic image datasets: Usage, benchmarks and recommendations’, Medical image analysis 75, 102305.

Chu, X., Zhang, B. and Xu, R. (2021), Fairnas: Rethinking evaluation fairness of weight sharing neural architecture search, in ‘Proceedings of the IEEE/CVF International Conference on Computer Vision’, pp. 12239–12248.

Houssein, E. H., Saad, M. R., Hashim, F. A., Shaban, H. and Hassaballah, M. (2020), ‘Lévy flight distribution: A new metaheuristic algorithm for solving engineering optimization problems’, Engineering Applications of Artificial Intelligence 94, 103731.

Iqbal, I., Younus, M., Walayat, K., Kakar, M. U. and Ma, J. (2021), ‘Automated multi-class classification of skin lesions through deep convolutional neural network with dermoscopic images’, Computerized medical imaging and graphics 88, 101843.


Kaur, R. and Ranade, S. K. (2023), ‘Improving accuracy of convolutional neural network-based skin lesion segmentation using group normalization and combined loss function’, International Journal of Information Technology pp. 1–9.

Kavitha, P. and Jayalakshmi, V. (2020), Survey of skin cancer detection using various image processing techniques, in ‘Proceedings of the Third International Conference on Intelligent Sustainable Systems’, IEEE Xplore Part Number: CFP20M19-ART; ISBN: 978-1-7281-7089-3, pp. 1062–1069.

Khan, M. A., Akram, T., Zhang, Y.-D. and Sharif, M. (2021), ‘Attributes based skin lesion detection and recognition: A mask rcnn and transfer learning-based deep learning framework’, Pattern Recognition Letters 143, 58–66.

Khouloud, S., Ahlem, M., Fadel, T. and Amel, S. (2022), ‘W-net and inception residual network for skin lesion segmentation and classification’, Applied Intelligence pp. 1–19.

Li, X., Zheng, J., Li, M., Ma, W. and Hu, Y. (2022), ‘One-shot neural architecture search for fault diagnosis using vibration signals’, Expert Systems with Applications 190, 116027.

Dildar, M., Akram, S., Irfan, M., Khan, H. U., Ramzan, M., Mahmood, A. R., Alsaiari, S. A., Saeed, A. H. M. and Alraddadi, M. O. (2021), ‘Skin cancer detection: A review using deep learning techniques’, International Journal of Environmental Research and Public Health 18(1), 1–22.

Mohakud, R. and Dash, R. (2022), ‘Designing a grey wolf optimization based hyperparameter optimized convolutional neural network classifier for skin cancer detection’, Journal of King Saud University-Computer and Information Sciences 34(8), 6280–6291.

Nawaz, M., Mehmood, Z., Nazir, T., Naqvi, R. A., Rehman, A., Iqbal, M. and Saba, T. (2022), ‘Skin cancer detection from dermoscopic images using deep learning and fuzzy k-means clustering’, Microscopy research and technique 85(1), 339–351.

Ragab, M., Choudhry, H., Al-Rabia, M. W., Binyamin, S. S., Aldarmahi, A. A. and Mansour, R. F. (2022), ‘Early and accurate detection of melanoma skin cancer using hybrid level set approach’, Frontiers in Physiology 13, 2536.

Ragumadhavan, R., Aravind Britto, K. R. and Vimala, R. (2022), ‘Melanoma skin cancer detection using wavelet transform and local ternary pattern’, Journal of Medical Imaging and Health Informatics 12(1), 15–19, ISSN: 2156-7018.

Sangers, T., Reeder, S., Vander Vet, S., Jhingoer, S., Mooyaart, A., Siegel, D. M. and Wakkee, M. (2022), ‘Validation of a market-approved artificial intelligence mobile health app for skin cancer screening: A prospective multicenter diagnostic accuracy study’, Dermatology: Skin Cancer Research Article 11(8), 1–8.

Wu, Y. P., Parsons, B., Jo, Y., Chipman, J., Haaland, B., Nagelhout, E. S., Carrington, J., Wankier, A. P., Brady, H. and Grossman, D. (2022), ‘Outdoor activities and sunburn among urban and rural families in a western region of the us: Implications for skin cancer prevention’, Preventive Medicine Reports 29, 101914.

Zafar, K., Gilani, S. O., Waris, A., Ahmed, A., Jamil, M., Khan, M. N. and Sohail Kashif, A. (2020), ‘Skin lesion segmentation from dermoscopic images using convolutional neural network’, Sensors 20(6), 1601.
