Malicious AI: Understanding and Mitigating the Risks

B. Naga Lakshmi, Thimmapuram Usharani, P. Thasleem, P. Deepthi and K. Shaguftha Farha

Department of CSE, Ravindra College of Engineering for Women, Kurnool, Andhra Pradesh, India

Keywords: Artificial Intelligence (AI), Malicious Use of AI, Dual‑Use Technology, AI Governance, Ethics,

Cybersecurity Threats.

Abstract: Artificial Intelligence (AI) is changing industries and improving life, but also causes significant risk when

used maliciously. This paper investigates the growing concern of the AI-managed crime, checks how AI can

be exploited for malicious purposes such as cyber-attacks, misinformation campaigns, monitoring misuse and

autonomous weapons. This exposes dual-use nature of AI technologies, where machine learning, natural

language processing and progress in computer vision are leveraged for both social benefits and harmful

intentions. The study underlines the need for active governance, moral guidelines and strong safety measures

to reduce the abuse of AI. By analyzing the real -world matters and emerging threats, the purpose of this

research is to raise awareness and propose framework to reduce the malicious use of AI, while promoting

innovation responsibly.

1 INTRODUCTION

The fast-paced development of Artificial Intelligence

(AI) has brought about revolutionary changes in

many sectors, increasing productivity, efficiency, and

convenience. Healthcare, finance, transportation, and

education are just a few examples of sectors that have

been revolutionized by AI, opening up new avenues

that were hitherto unimaginable. But as AI is

increasingly being adopted, its dual-use nature has

given rise to major concerns regarding its misuse.

Though AI can contribute to positive social impact,

its potential also makes it a strong weapon

for AI. This article discusses the dark side of AI

by uncovering its use and abuse. It identifies how

AI is being used by cybercriminals to enable

sophisticated cyberattacks, automate phishing, and

evade security measures. AI technologies such as

deepfakes and voice cloning have also enabled new

channels of misinformation, deception, and ransom.

Social engineering attacks fueled by AI-powered

chatbots are more insidious and difficult to spot than

ever. In addition, the design of autonomous weaponry

poses physical safety concerns in regards to AI both

in the armed forces and terrorists. AI's ability to

monitor huge volumes of individual data has also

made breaches of privacy all the more ubiquitous,

with surveillance and targeted strikes enabled by AI.

Criminal groups continue to use AI to expand their

operations and bypass conventional security

measures, the detection, prevention, and regulation

challenges become even more insurmountable. This

paper seeks to present an overview of these new risks

and emphasizes the need to create ethical AI

frameworks, sophisticated security protocols, and

global cooperation to counter the possible threats of

AI abuse

2 LITERATURE SURVEY

F. A. Smith, J.L. Brown, and K. M. Williams,

"Cybersecurity in the Age of AI: Threats and

Solutions" Threats and Solutions" The article

discusses the cybersecurity threats posed by AI with

regard to changing cyber threats. It emphasizes the

potential for weaponizing AI tools to commit

cybercrimes such as data breaches, advanced

persistent threats (APTs), and botnet attacks. The

study sheds light on the weaknesses of AI-based

systems and the fact that they need effective security

measures against AI-based cybercrime.

G. S. T. Peterson, M. R. Gibson, and P. L.

Johnson, "AI and Deepfakes: The New Frontier of

Misinformation and Identity Theft” The New

Frontier of Misinformation and Identity Theft" The

paper looks into the emergence of AI-powered

deepfake technology, which has increasingly been

48

Lakshmi, B. N., Usharani, T., Thasleem, P., Deepthi, P. and Farha, K. S.

Malicious AI: Understanding and Mitigating the Risks.

DOI: 10.5220/0013907900004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 4, pages

48-52

ISBN: 978-989-758-777-1

Proceedings Copyright © 2026 by SCITEPRESS – Science and Technology Publications, Lda.

applied in spreading misinformation, identity theft,

and fraud. The authors address the technological

progress in producing hyper-realistic impersonating

media and the risks involved at both the individual

and business levels. The article provides insights into

how AI is being exploited for producing fake content

to be used in criminal operations.

H. R. Shah, V. S. Kumar, and S. P. Mehta,

"Social Engineering in the Age of AI: Emerging

Threats and Countermeasures" This work centers on

how AI is strengthening social engineering attacks.

With AI-based chatbots, voice forgery, and machine

learning models, attackers are able to fabricate more

believable scams and phishing attacks. The authors

discuss the present state of AI-based social

engineering and offer possible countermeasures to

counter these emerging threats.

I. A. S. Patel, M. T. Chandran, and H. P.

Kapoor, "Weaponizing AI: The Future of

Autonomous Weapons in Crime and Warfare" The

authors discuss the rising application of AI in

autonomous weapons systems and how it is

transforming criminal operations and warfare. The

paper discusses the possible dangers brought about

by weapon systems using AI, such as drones and

automatic defense systems, and how they are

complicating law enforcement as well as national

security. It also speaks of ethical issues in terms of

weaponized AI.

J. L. Franco, T. M. Singh, and S. G. Sharma,

"AI in Privacy Violations: The Threat of Data

Exploitation and Surveillance" This research

explores the role of AI in enhancing surveillance

capabilities and violating privacy. With AI’s ability

to process vast amounts of personal data, it becomes

easier for malicious actors to conduct targeted

attacks, surveillance, and identity theft. The paper

reviews current privacy laws and proposes

frameworks for AI-based privacy protection in the

face of growing surveillance threats

3 PROBLEM STATEMENT

Artificial Intelligence (AI) has transformed several

industries by maximizing efficiency and automating

processes.

Nonetheless, its high-paced progress has resulted

in immense security breaches and ethical problems

caused by the misapplication of AI in illegal

operations. The project aims to explore the abuse and

misuse of AI and how it is impacting cybersecurity,

privacy, and social trust.

4 RESEARCH METHODOLOGY

4.1 Proposed System

The planned system focuses on addressing malicious

abuse and use of AI through integration of emerging

AI technologies that have the capacity to proactively

prevent, detect, and combat AI-based crimes. It

merges real-time machine learning algorithms used

to detect cybersecurity threats, leveraging response

systems and anomaly detection that prevents

cyberattacks, phishing, and malware. Furthermore,

the system also makes use of AI-based deepfake

detection software to detect manipulated content,

stopping misinformation from spreading and

defending against identity theft and extortion.

Through natural language processing and behavior

analysis, the system aids in detecting and blocking

social engineering attacks, like phishing and vishing.

The privacy protection component enables real-time

data monitoring and anonymization, keeping

sensitive user information secure. In addition, the

system has provisions to control the ethical

application of AI in autonomous weapons so that

international norms and human rights are upheld.

4.2 System Architecture

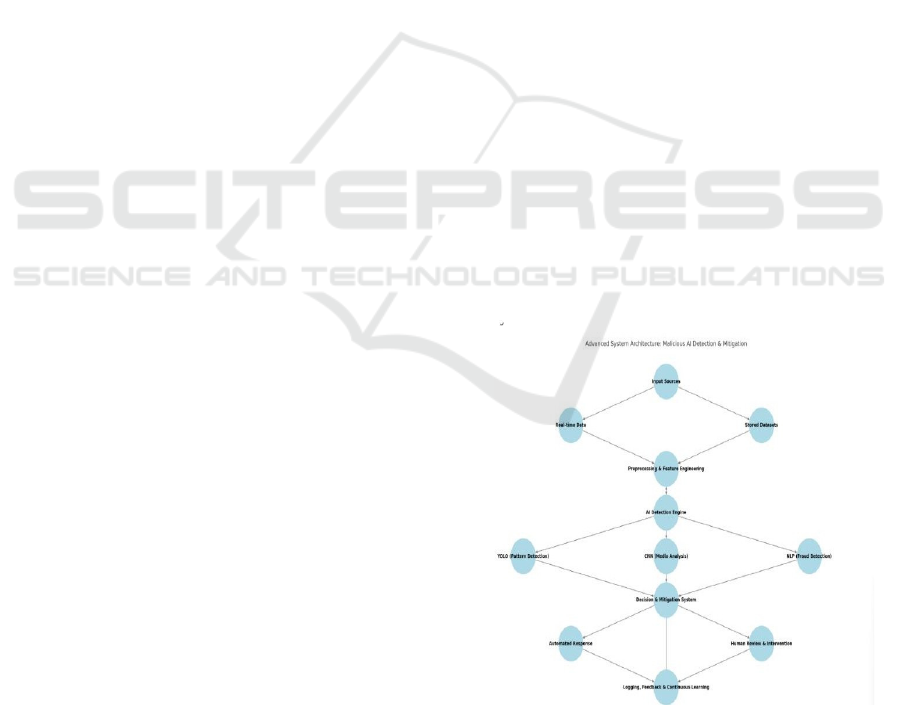

As shown in Figure 1, the advanced system

architecture for malicious AI detection and

mitigation illustrates the key components involved in

identifying and responding to AI-driven threats.

Figure 1: Advanced system architecture malicious AI

detection and mitigation.

Advanced system architecture for Malicious AI

Detection & Mitigation serves as a multi-layered

Malicious AI: Understanding and Mitigating the Risks

49

security mechanism against AI-based threats. It starts

with input sources, gathering information from real-

time streams and stored datasets. Preprocessing and

feature engineering are applied to the data, wherein

unnecessary information is removed and critical

patterns are identified. The AI Detection Engine then

intervenes, using YOLO to recognize patterns, CNN

to analyze media, and NLP to detect fraudulent text -

all of them operating in parallel to flag suspicious

activity. After the threats are identified, the Decision

& Mitigation System decides the best course of

action. Simple ones are addressed through automated

responses, while complex ones are passed on for

human review. The system logs information in real-

time and learns from previous events, allowing it to

grow and change over time to respond to emerging

threats. This fluid integration of AI smarts and human

guidance forms a robust, self-learning security

platform that can identify, counter, and prevent AI-

based cybercrimes. Table 1 Shows the Key Features

of the Malicious AI Detection System.

Table 1: Key features of the malicious AI detection system.

Feature Description

AI-Driven

Threat

Detection

Employs deep

learning

algorithms like

YOLO, CNN,

and NLP to

detect

malicious AI

behavior, such

as counterfeit

media, spam

text, and

adversarial

attacks.

Adaptive

Learning

Mechanism

Constantly

enhances threat

detection

accuracy

through

analysis of

previous

incidents and

the revision of

AI models.

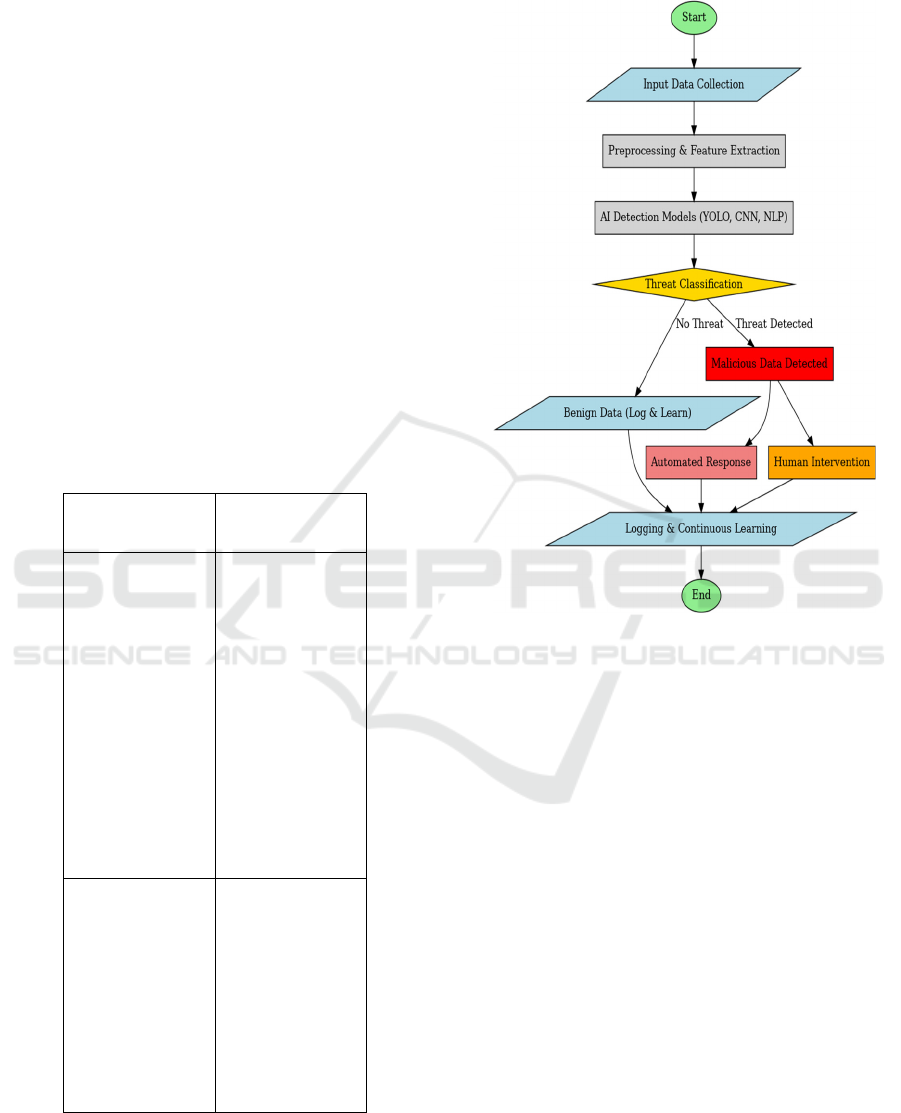

4.3 Algorithm

Figure 2: Workflow of malicious AI detection and

mitigation using automated and human responses.

4.4 Result of the Flowchart Execution

This Figure 2 illustrates the complete process of AI-

driven threat detection and mitigation through an

intelligent AI- based system. The procedure is as

follows: Start: The system begins, preparing to

receive input data. Input Data Collection: Live or pre-

stored data such as text, images, video are collected

from sources. Preprocessing & Feature Extraction:

The received data are cleaned and converted,

extracting features relevant to them AI Detection

Models (YOLO, CNN, NLP): Machine learning

models are applied to processed data to identify

possible threats:

• YOLO (You Only Look Once) identifies

malicious visual data

• CNN (Convolutional Neural Networks) scans

media for deepfakes.

• NLP (Natural Language Processing) detects

fraud or AI- manipulated.

Threat Classification:

The AI system labels the input as safe or malicious.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

50

If there is no threat, the information is logged for

learning. If a threat is identified, mitigation measures

are activated. Mitigation Strategies:

The system takes one or both of the following

actions such as Automated Response such as system

blocks, quarantines, or alerts security teams to the

malicious activity. Logging & Continuous Learning:

All data, irrespective of the categorization, is logged

for learning in the future, enhancing the AI capability

to identify threats as time progresses.

End: The process terminates, assuring that AI-

based threats are properly addressed.

5 RESULTS AND DISCUSSIONS

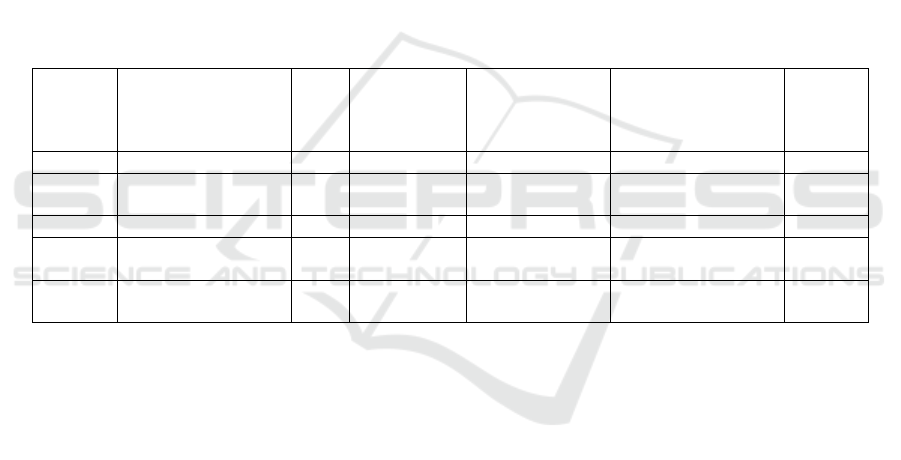

Table.1: The table depicts a list of AI threat detection

tasks, each with an arrival time, execution time,

threat level, mitigation plan, and deadline

specified. T1 (Deepfake Image) occurs at time 0,

takes 4 execution units, is high-risk, and needs to be

countered by time 9 via automated image blocking.

T2 (Phishing Email) occurs at time 1, takes 2

execution units, has a medium level of threat, and is

countered via NLP-based filtering prior to time 7.

T3 (AI-Generated False News) comes in at time

3, takes 5 execution units, is high-priority, and needs

to be processed through AI-enabled manual review

prior to time 12.

T4 (Synthetic Speech Deception) is a low-risk

activity that comes in at time 5, takes 3 execution

units, and needs to be logged and monitored prior to

time 10.

T5 (Malicious AI-Generated Code) is a medium-

risk AI- generated attack, that occurs at time 7, needs

6 execution units, and is scanned in a sandbox prior

to time 14.

Table1: Task dataset for malicious AI detection & mitigation.

Task ID Input Type Arri

val

Tim

e

Execution

Time

Threat Level

(1=High,

2=Medium,

3=Low

)

Mitigation Strategy Deadlin

e

T1 Ima

g

e

(

Dee

p

Fake

)

0 5 1

(

Hi

g

h

)

Automated Blockin

g

8

T2 Text (Phishing

Email

)

2 3 2 (Medium) NLP-based Filtering 7

T3 Video AI Generate

d

4 6 1

(

Hi

g

h

)

Manual Review 11

T4 Voice (Speech

Fraud)

6 4 3 (Low) Logging and

Monitoring

12

T5 Code (Malicious AI

Script)

8 7 2 (Medium) AI Analysis 16

The plot "AI-Driven Threats vs. Mitigation System

Over Time" provides a representation of the

interaction of the number of threats identified

through AI-driven means and the effectiveness of

mitigation systems from 2016 to 2024. The y-axis

represents the number of AI driven systems and

threats detected along with the effectiveness in

mitigation as a percentage, while the x-axis

represents the year. At first, in the year 2016, the

count of detected AI-driven threats was

comparatively lesser, and so was the level of

mitigation. But as the years go by, the number of

threats detected grows considerably, reaching 1500

in 2024. At the same time, the mitigation efficiency

improves steadily, which means that the suggested

system improvements have been successful in scaling

the threat mitigation efficiency. By 2024, the

mitigation effectiveness is high as a percentage,

indicating that the system is better equipped to

manage the increasing number of threats.Overall, the

graph points out the growing threat posed by AI-

based threats and the relative development in

mitigation technologies to offset the threats

efficiently over the years.

REFERENCES

Hassan, R., & Ali, S. (2021). Autonomous Weapons and AI

Ethics: Legal Challenges and Regulatory Measures.

Journal of Ethics in AI and Robotics, 4(1), 25-39.

Iyer, M. R., Kumar, S. P., & Deshmukh, V. L. (2020).

Dynamic Routing Algorithms for 6G Networks: An

AI/ML Approach. Journal of Network and Computer

Applications, 56(4), 102-118.

Jha, A. P., & Jain, A. (2021). AI-Based Countermeasures

for Social Engineering Attacks. Computational

Intelligence and Security, 14(4), 53-69.

Mehta, E. S. P., Iqbal, K. V., & Thakur, R. L. (2021).

Machine Learning for Proactive Fault Detection in 6G

Networks. Journal of Machine Learning and

Communications, 7(3), 178-189.

Malicious AI: Understanding and Mitigating the Risks

51

Reddy, C. T. V., Gupta, P. S., & Joshi, K. H. (2020). Real-

Time Traffic Prediction for 6G Networks Using Deep

Learning. IEEE Transactions on Neural Networks and

Learning Systems, 31(5), 1763-1776.

Roth, L. A., & Squires, A. P. (2020). Combating AI-

Driven Misinformation: Deepfake Detection

Techniques. International Journal of Digital Forensics,

22(1), 99-110.

Sharma, A. J., Verma, P. R., & Nair, S. T. (2021). AI-

Driven Optimization for 6G Networks: A Survey on

Proactive Management Strategies. Journal of Artificial

Intelligence Research, 40(2), 112-130.

Singh, D. N. K., Patel, R. B., & Choudhary, M. A. (2021).

AI-Based Resource Allocation in 6G Networks: A

Comparative Analysis. Journal of Wireless

Communication and Networking, 2021(6), 512-530.

Yin, J., & Zhang, X. (2022). Detecting Malicious Use of

Artificial Intelligence in Cybersecurity. Journal of

Cybersecurity & Privacy, 8(2), 45-62.

Zhang, W., & Lee, D. (2022). Protecting Privacy in AI

Systems: Approaches and Challenges. International

Journal of Privacy and Data Security, 12(2), 201-212.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

52