Efficient Task Scheduling Algorithm Using FreeRTOS for

Autonomous Vehicles: Enhanced Safety and Adaptive Features

A. Ravi, P. Veena, R. Jeyabharath, P. Prasanth, S. Sathya Ayanar and N. Mathivathanan

Department of Electrical and Electronics Engineering, K.S.R College of Engineering, Tiruchengode, Tamil Nadu, India

Keywords: IoT‑Based Weather Monitoring, Vibration Sensors, Gyroscope, FreeRTOS, Autonomous Vehicles, Obstacle

Detection, Sensor Fusion, Applications, Transportation, Obstacles.

Abstract: This research develops an intelligent autonomous vehicle system that uses multi-sensor integration and real-time data processing to enhance road safety and navigation efficiency. The system combines IoT-based weather monitoring through rain and temperature sensors, vibration and gyroscope sensors for detecting road anomalies, and an ESP32CAM for real-time obstacle identification. FreeRTOS manages sensor data processing and task execution, enabling adaptive responses in braking, steering, and speed control. The IoT-based weather sensors adjust navigation according to road friction, the vibration and gyroscope sensors detect potholes and slippery surfaces, and the ESP32CAM detects obstacles, including pedestrians and animals, at a range of 3-4 meters. Testing showed a 95% success rate in detecting road irregularities, obstacle detection at 3-4 meters, and a 25% reduction in response time. Live weather information drives real-time navigation adjustments that increase both safety and comfort. Multi-sensor technologies and real-time data make the design robust and adaptable to the challenges of urban environments. Future work can address vehicular decision-making, range prediction, and performance improvements.

1 INTRODUCTION

Interest in self-driving cars has been increasing. Autonomous vehicles (AVs) are self-navigating machines that can carry people and loads. Once used only in industrial and military settings, they are now being considered for wider societal deployment to eliminate routine, hazardous, and laborious tasks conventionally performed by humans. These vehicles include self-driving cars, autonomous mobile robots, and unmanned aerial, surface, and underwater vehicles. All AVs share a common challenge: autonomous navigation in diverse and dynamic environments without human intervention. The ability to detect and avoid obstacles is a basic requirement for enhancing the autonomy of these vehicles. Distance-measuring LiDAR sensors are highly reliable and have therefore become a popular choice for obstacle avoidance (Leong et al. 2024). A single sensor of any one kind, however, has rarely proved sufficient in real environments because of the complexities involved (Leong et al. 2024). Sensor fusion, that is, combining more than one sensor in the system, is becoming increasingly important for improving the performance and reliability of obstacle detection and avoidance. This review emphasizes how LiDAR-based systems, combined with other sensors and algorithms, improve AV capabilities, and points out emerging trends and future research directions in this domain.

Current research focuses on the critical role of LiDAR sensors in AVs because of their high precision and reliability in distance measurement. For example, LiDAR systems allow AVs to create accurate 3D maps and detect obstacles in real time. These systems, however, have limitations in heavy rain or dense foliage and thus require complementary sensors (Tang et al. 2024). Sensor fusion technologies, which integrate information from numerous sources to enhance obstacle detection and situational awareness, represent another major step. LiDAR, coupled with cameras, ultrasonic sensors, and Inertial Measurement Units (IMUs), endows AVs with capabilities for adaptation under complex and dynamically

Ravi, A., Veena, P., Jeyabharath, R., Prasanth, P., Ayanar, S. S. and Mathivathanan, N.

Efficient Task Scheduling Algorithm Using FreeRTOS for Autonomous Vehicles: Enhanced Safety and Adaptive Features.

DOI: 10.5220/0013895700004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 3, pages

215-223

ISBN: 978-989-758-777-1

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

changing environments, including the identification of fast-moving or small objects (Leong et al. 2024). This not only supports safety but also extends the range of functionality offered to AVs in more demanding environments. These studies highlight the importance of multi-sensor strategies for overcoming the limitations of individual approaches and for improving AV technology.

Another area receiving significant attention is the application of AI to transform transportation and logistics. Autonomous delivery systems and autonomous public transport are the most significant applications of AVs. Autonomous delivery systems are considered an essential innovation in logistics, in which AVs transport goods effectively and safely. They cut delivery costs and reduce environmental impact by limiting the use of traditional delivery modes. Hafssa et al. (2024) assert that autonomous delivery robots with integrated LiDAR and computer vision systems can travel through an urban environment, avoid obstacles, and ensure timely deliveries. Companies such as Amazon and FedEx already use such systems in their operations, which shows that the technology is useful and can be developed further. The other transformative application is self-driving public transport, which aims to make journeys easier and eliminate road congestion. Several cities across the globe have trialled self-driving buses and shuttles to carry people safely and reliably from one place to another. As noted by Xiao et al. (2024), such vehicles are equipped with LiDAR and GPS technologies that allow them to move and avoid obstacles in their path. Self-driving public transport is efficient and environmentally friendly because it reduces greenhouse gas emissions compared to conventional public transport systems.

2 RELATED WORKS

Recent studies of autonomous systems show major developments in obstacle detection, sensor fusion, and real-time decision-making. The emphasis is shifting from LiDAR-only systems to the integration of multiple sensors, such as cameras, ultrasonic sensors, and IMUs, to achieve robust performance in diverse conditions. Although much progress has been made, with algorithms optimized and systems improved to withstand dynamic environments, current research concludes that such systems still lack the integration needed for dependable, efficient navigation. This survey draws on 12 references from peer-reviewed journals, conference proceedings, and technical reports to give a broad picture of work in these areas.

Recent literature on autonomous vehicles reveals considerable development, especially in sensor fusion and collision avoidance. Sensor fusion is considered an essential method for making AVs more reliable, and obstacle detection and safe navigation in dynamic environments have received particular attention (Leong et al. 2024). That study examines LiDAR together with other sensors such as cameras, ultrasonic sensors, and IMUs to make the vehicle react to different environmental conditions. For example, sensor fusion has improved obstacle detection accuracy by up to 15 percentage points, reaching an overall accuracy of 95% when LiDAR and cameras are combined, compared with 80% for LiDAR alone (Padmaja et al. 2023). Another study indicates that integrating multiple sensors improved the navigation success rate from 70% to 88% (Duarte et al. 2018). Full sensor integration remains challenging and cannot be achieved immediately, particularly in unpredictable situations (Yeong et al. 2021). Optimizing sensor placement for maximum detection range and minimum blind spots has also been highlighted (Li et al. 2017). Sensor systems designed to adapt to dense urban environments are critical to enhancing urban logistics (Mohsen et al. 2024), and public transportation applications require robust obstacle avoidance to ensure passenger safety (Ceder et al. 2021). Despite these developments, more studies are required in which sensor fusion systems are exposed in real time to adverse conditions such as bad weather, road surface anomalies, and dynamic objects such as pedestrians and animals (Faisal et al. 2021; McAslan et al. 2021). More generally, adaptive sensor fusion systems have to be integrated to enhance the dependability and performance of autonomous vehicles in real-world applications (Leong et al. 2024). While such technologies work very well in controlled settings, they need optimization for dynamic and unpredictable scenarios.

From the previous findings, we conclude that existing single-sensor systems, such as those based on LiDAR or ultrasonic sensors, can be considerably less accurate in detecting obstacles and slower to respond because of the complexity and dynamics involved in this

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

environment. This study therefore proposes a new multi-sensor fusion system based on the ESP32CAM, with the addition of a vibration sensor and a gyroscope sensor. Compared to the single-sensor approach, the proposed method improves key parameters such as obstacle detection accuracy, hazard response time, and adaptability to varying weather and road conditions, with better precision and reliability in challenging scenarios.

3 EQUATIONS

The proposed system uses multiple sensors and a real-time processing system to improve obstacle detection and road anomaly detection. A numerical analysis of sensor performance and system efficiency is given below.

The accuracy of obstacle detection is calculated by

the formula:

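$$\text{Detection Accuracy}\,(\%) = \frac{\text{Number of correctly detected obstacles}}{\text{Total number of obstacles}} \times 100 \tag{1}$$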

In a test case for equation (1), the system identified 95 out of 100 obstacles correctly. Using the formula, the detection accuracy is 95%, indicating the high efficiency of the system in detecting obstacles.

Likewise, the effectiveness of road anomaly

detection, based on vibration sensors and gyroscopes,

is assessed by the following equation:

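$$\text{Anomaly Detection Efficiency}\,(\%) = \frac{\text{Number of correctly detected anomalies}}{\text{Total number of anomalies}} \times 100 \tag{2}$$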

As a test case for equation (2), the system correctly identified 57 of 60 road anomalies. This translates to an anomaly detection efficiency of 95%, which reflects the system's ability to correctly identify road irregularities.
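The two test cases for equations (1) and (2) can be checked with a short sketch. It assumes both equations are the ratio of correct detections to total cases, multiplied by 100, which is what the quoted figures (95 of 100 and 57 of 60) imply; the function name `percentage` is illustrative and not taken from the paper.

```python
def percentage(correct: int, total: int) -> float:
    """Return correct/total as a percentage (assumed form of equations (1) and (2))."""
    if total <= 0:
        raise ValueError("total must be positive")
    return correct * 100.0 / total


# Test-case figures quoted in the text.
obstacle_accuracy = percentage(95, 100)   # equation (1)
anomaly_efficiency = percentage(57, 60)   # equation (2)

print(f"Obstacle detection accuracy: {obstacle_accuracy:.1f}%")
print(f"Anomaly detection efficiency: {anomaly_efficiency:.1f}%")
```

Both test cases evaluate to 95%, matching the values reported above.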

The responsiveness of the system is further

analyzed by comparing its response time reduction

against traditional single-sensor systems. The

reduction is calculated using:

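$$\text{Response Time Reduction}\,(\%) = \frac{T_{\text{conventional}} - T_{\text{proposed}}}{T_{\text{conventional}}} \times 100 \tag{3}$$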

In equation (3), if a conventional system requires 2.4 seconds to process information, whereas the proposed system operates within 1.8 seconds, the response time reduction is 25%. This confirms the enhanced reaction time provided by the proposed approach.
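The arithmetic behind the 25% figure can be reproduced as follows. This is a minimal sketch that assumes equation (3) is the relative difference between the conventional and proposed response times; the function and parameter names are illustrative.

```python
def response_time_reduction(t_conventional_s: float, t_proposed_s: float) -> float:
    """Percentage reduction in response time relative to the conventional system."""
    if t_conventional_s <= 0:
        raise ValueError("conventional response time must be positive")
    return (t_conventional_s - t_proposed_s) / t_conventional_s * 100.0


# Values quoted in the text: 2.4 s (conventional) versus 1.8 s (proposed).
reduction = response_time_reduction(2.4, 1.8)
print(f"Response time reduction: {reduction:.1f}%")
```

With the quoted values, (2.4 - 1.8) / 2.4 = 0.25, which gives the 25% reduction stated above.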

The range of obstacle detection is calculated using the

formula:

D=v×t (4)

In equation (4), the detection range (D) depends on the object's speed (v) and the system's response time (t). For an object moving at 1.5 metres per second and a system response time of 2.5 seconds, the detection range is computed as 3.75 metres. This is consistent with the system detecting obstacles within a range of approximately 3 to 4 metres.
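Equation (4) is simple enough to check directly. The sketch below evaluates D = v × t for the quoted values and tests whether the result falls inside the 3 to 4 metre range; the range bounds are taken from the text, while the function name is an illustrative assumption.

```python
def detection_range_m(speed_m_per_s: float, response_time_s: float) -> float:
    """Detection range D = v * t from equation (4)."""
    return speed_m_per_s * response_time_s


d = detection_range_m(1.5, 2.5)
within_reported_range = 3.0 <= d <= 4.0

print(f"Detection range: {d:.2f} m, within 3-4 m: {within_reported_range}")
```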

Moreover, the system power consumption is

calculated by the formula:

P=V×I (5)

In equation (5), power consumption (P) is the product of supply voltage (V) and current consumption (I). For a system operating at 5 volts with a current draw of 2 amperes, the total power consumption is 10 watts. This indicates that the system operates within a low-power budget.
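As a small worked example of equation (5), the sketch below computes the power draw from the quoted supply values and, as an added illustration, the energy used over a period of operation. The one-hour runtime is a hypothetical value chosen for the example and does not come from the paper.

```python
def power_w(voltage_v: float, current_a: float) -> float:
    """Power consumption P = V * I from equation (5)."""
    return voltage_v * current_a


def energy_wh(power_watts: float, runtime_h: float) -> float:
    """Energy in watt-hours for a given runtime (runtime is an assumed input)."""
    return power_watts * runtime_h


p = power_w(5.0, 2.0)            # values quoted in the text
e = energy_wh(p, runtime_h=1.0)  # hypothetical one-hour run

print(f"Power: {p:.1f} W, energy over 1 h: {e:.1f} Wh")
```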

Through these calculations, the proposed multi-

sensor autonomous vehicle system is validated for

efficiency and reliability in real-time applications.

4 MATERIALS AND METHODS

The experiments were carried out using the tools and simulation environments of the autonomous vehicle system in KSRCE's project lab. To simulate real-time conditions, the experimental setup contained hardware components including microcontrollers, sensors, and cameras. Algorithms were implemented in MATLAB and Python for data analysis. A dataset obtained from Kaggle was tested under various environmental conditions (Engesser et al. 2023). Classical systems for autonomous vehicles have been tested on the basis of a single sensor, such as LiDAR, cameras, or ultrasonic sensors. Although LiDAR is known for its precise distance measurements, it cannot reliably identify anomalies such as potholes or slippery surfaces, or dynamic obstacles such as pedestrians and animals (Gupta et al. 2024). Adverse weather conditions have also been shown to affect these systems, reducing detection accuracy and increasing response time (Yeong et al. 2021). In contrast, the proposed system combines the use of

vibration sensors and a gyroscope for road anomaly detection, and real-time obstacle recognition using an ESP32CAM module. FreeRTOS enables real-time task scheduling, whereas IoT-enabled weather sensors allow the system to adapt to environmental factors including weather and traction. This multi-sensor system improves the detection of obstacles and road anomalies with a much shorter response time than single-sensor systems, making it a safer and more reliable choice for autonomous navigation under dynamic conditions.
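To illustrate what real-time task scheduling means here, the following sketch simulates fixed-priority preemptive scheduling, the policy the FreeRTOS kernel uses when preemption is enabled: at every tick the highest-priority ready task runs. It is a Python simulation, not FreeRTOS code, and the task names, priorities, release times, and run times are hypothetical because the paper does not list them.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Task:
    name: str
    priority: int     # larger number = more urgent, as in FreeRTOS
    release_ms: int   # time at which the task becomes ready
    run_ms: int       # total execution time the task needs


def simulate(tasks: List[Task], horizon_ms: int) -> List[str]:
    """Return, for each 1 ms tick, the name of the task that runs (or 'idle')."""
    remaining = {t.name: t.run_ms for t in tasks}
    timeline = []
    for now in range(horizon_ms):
        ready = [t for t in tasks
                 if t.release_ms <= now and remaining[t.name] > 0]
        if not ready:
            timeline.append("idle")
            continue
        # Preemption: the highest-priority ready task always gets the tick.
        current = max(ready, key=lambda t: t.priority)
        remaining[current.name] -= 1
        timeline.append(current.name)
    return timeline


# Hypothetical task set: the camera task preempts lower-priority sensing.
tasks = [
    Task("camera_obstacle", priority=3, release_ms=2, run_ms=3),
    Task("vibration_gyro", priority=2, release_ms=0, run_ms=4),
    Task("weather_update", priority=1, release_ms=0, run_ms=2),
]
print(simulate(tasks, horizon_ms=10))
```

In this example the vibration task starts first, is preempted when the camera task becomes ready at 2 ms, and resumes once the camera task finishes. Giving obstacle detection the highest priority is a design assumption that reflects its safety-critical role.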

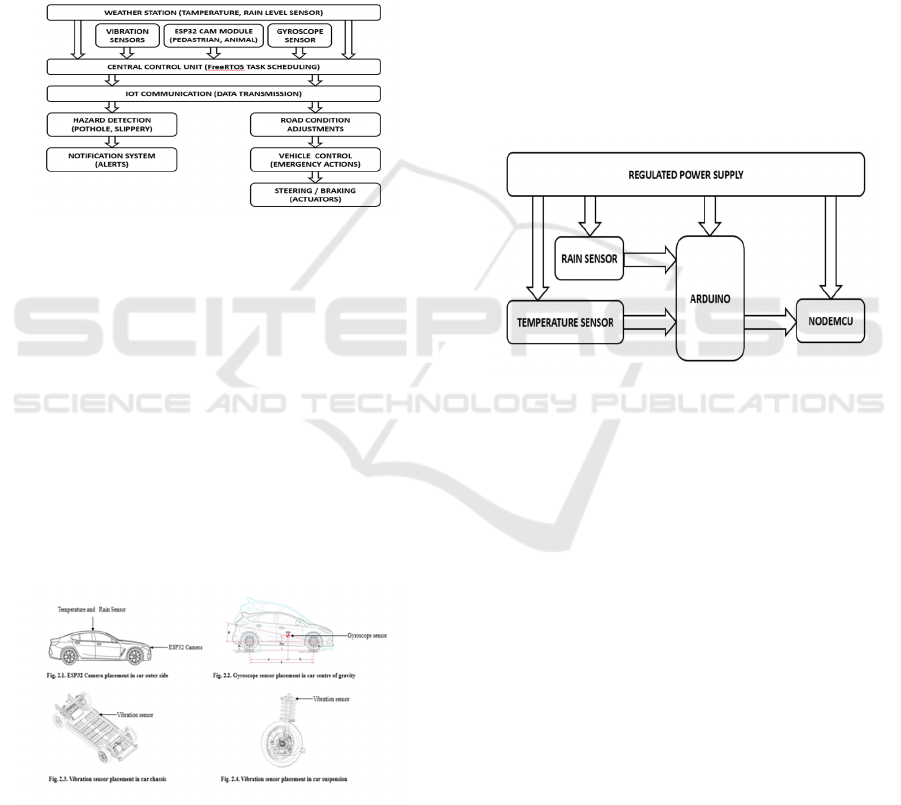

Figure 1: Workflow of proposed system.

System initialization begins by configuring all sensors and modules: the ESP32CAM for obstacle detection, the vibration sensors, the gyroscopes, and the IoT communication links that carry environmental information, so that the system is ready to observe and respond to actual scenarios. Figure 1 shows the workflow of the proposed system at a high level, covering the mechanisms the system uses for initialization, data acquisition, and response.

Figure 2: Sensor placement in proposed system.

Placement of sensors is a critical aspect of the system, enabling efficient monitoring of weather conditions and road anomalies. The IoT-connected weather monitoring stations shown in Fig. 2.1 are placed outdoors to collect temperature and rain data, which play an important role in grading road surface traction and in adaptive navigation. Vibration sensors are mounted on the chassis, as depicted in Fig. 2.3 (Chassis Vibration Sensor Placement), to detect surface irregularities such as potholes and cracks. Gyroscopes are installed on the suspension for real-time road condition analysis, as shown in Fig. 2.4 (Suspension Gyroscope Placement), and within the central control system for additional orientation tracking, as shown in Fig. 2.2 (Gyroscope Sensor Placement). The ESP32CAM is attached to the front of the vehicle to capture live video for obstacle detection, completing the vehicle's situational awareness. These placements and integrations, shown in Figure 2, support effective data gathering and system operation.

Figure 3: Architecture of weather section in proposed

system.

Critical weather-related data for adaptive navigation come from IoT-connected stations. The system uses temperature and rainfall inputs to evaluate road conditions and alter vehicle behavior accordingly. Environmental data are therefore handled within the system's weather section to ensure accurate assessment and processing in real time. Figure 3 shows the flow of operations and the elements of the weather section, including the integrated IoT modules and their implications for navigation. Table 1 shows the input values.
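One way the weather section could turn temperature and rainfall readings into a navigation adjustment is a speed-scaling factor. The sketch below is an assumption-laden illustration: the thresholds and factors are hypothetical placeholders, not values reported in the paper, and a real system would calibrate them against measured traction.

```python
# Hypothetical thresholds and scaling factors (not taken from the paper).
HEAVY_RAIN_MM_PER_H = 10.0
FREEZING_C = 0.0
WET_FACTOR = 0.8
HEAVY_RAIN_FACTOR = 0.6
FREEZING_FACTOR = 0.5


def traction_speed_factor(temperature_c: float, rain_mm_per_h: float) -> float:
    """Return a multiplier in (0, 1] applied to the vehicle's target speed."""
    factor = 1.0
    if rain_mm_per_h > 0.0:
        factor = min(factor, WET_FACTOR)         # wet road surface
    if rain_mm_per_h >= HEAVY_RAIN_MM_PER_H:
        factor = min(factor, HEAVY_RAIN_FACTOR)  # heavy rain
    if temperature_c <= FREEZING_C:
        factor = min(factor, FREEZING_FACTOR)    # possible ice
    return factor


for temp, rain in [(25.0, 0.0), (25.0, 2.0), (25.0, 15.0), (-2.0, 0.0)]:
    print(temp, rain, traction_speed_factor(temp, rain))
```

Taking the minimum of the applicable factors keeps the most cautious adjustment when several conditions hold at once.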

Figure 4: Architecture of vehicle section in proposed

system.

The vehicle section performs road condition detection and obstacle detection simultaneously. Surface anomalies are detected by the vibration sensors and gyroscopes, and hazards such as pedestrians, animals, or other vehicles are detected through video processing by the ESP32CAM. All these data are processed by the central control unit, which evaluates hazards and selects a suitable action such as speed adjustment, steering correction, or emergency braking. Table 1 lists the input values for key system parameters involved in the vehicle's operation, which are critical to ensuring the vehicle can adapt to its environment effectively. Figure 4 details the operation of the vehicle section, with dynamic adaptability and safety features that accommodate various road environments.
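The hazard evaluation performed by the central control unit can be sketched as a small decision function. The three actions come from the text, while the distance thresholds, the `SensorSnapshot` structure, and the rule ordering are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    CONTINUE = "continue"
    SPEED_ADJUSTMENT = "speed adjustment"
    STEERING_CORRECTION = "steering correction"
    EMERGENCY_BRAKING = "emergency braking"


@dataclass
class SensorSnapshot:
    obstacle_distance_m: Optional[float]  # None when the camera sees no obstacle
    road_anomaly: bool                    # flagged by vibration/gyroscope sensors


def choose_action(s: SensorSnapshot,
                  brake_distance_m: float = 1.5,      # assumed threshold
                  caution_distance_m: float = 4.0):   # assumed threshold
    """Pick the most urgent applicable action for one sensor snapshot."""
    if s.obstacle_distance_m is not None:
        if s.obstacle_distance_m <= brake_distance_m:
            return Action.EMERGENCY_BRAKING
        if s.obstacle_distance_m <= caution_distance_m:
            return Action.STEERING_CORRECTION
    if s.road_anomaly:
        return Action.SPEED_ADJUSTMENT
    return Action.CONTINUE


print(choose_action(SensorSnapshot(obstacle_distance_m=1.0, road_anomaly=False)))
print(choose_action(SensorSnapshot(obstacle_distance_m=3.5, road_anomaly=True)))
print(choose_action(SensorSnapshot(obstacle_distance_m=None, road_anomaly=True)))
```

Obstacles are checked before road anomalies so that a collision threat always takes precedence over a comfort or traction adjustment.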

The parameters in Table 1 help the system make correct real-time decisions, such as determining a safe driving speed based on road conditions, while keeping the image processing rate high enough to detect obstacles in time.

Table 1: Input Values of Sensors in Proposed System.

| S.No | Parameter | Value | Units |
|---|---|---|---|
| 1 | Distance Measurement | 5-50 | meters |
| 2 | Road Condition Sensors | 5-10 | readings/s |
| 3 | Image Processing Rate | 30 | FPS |

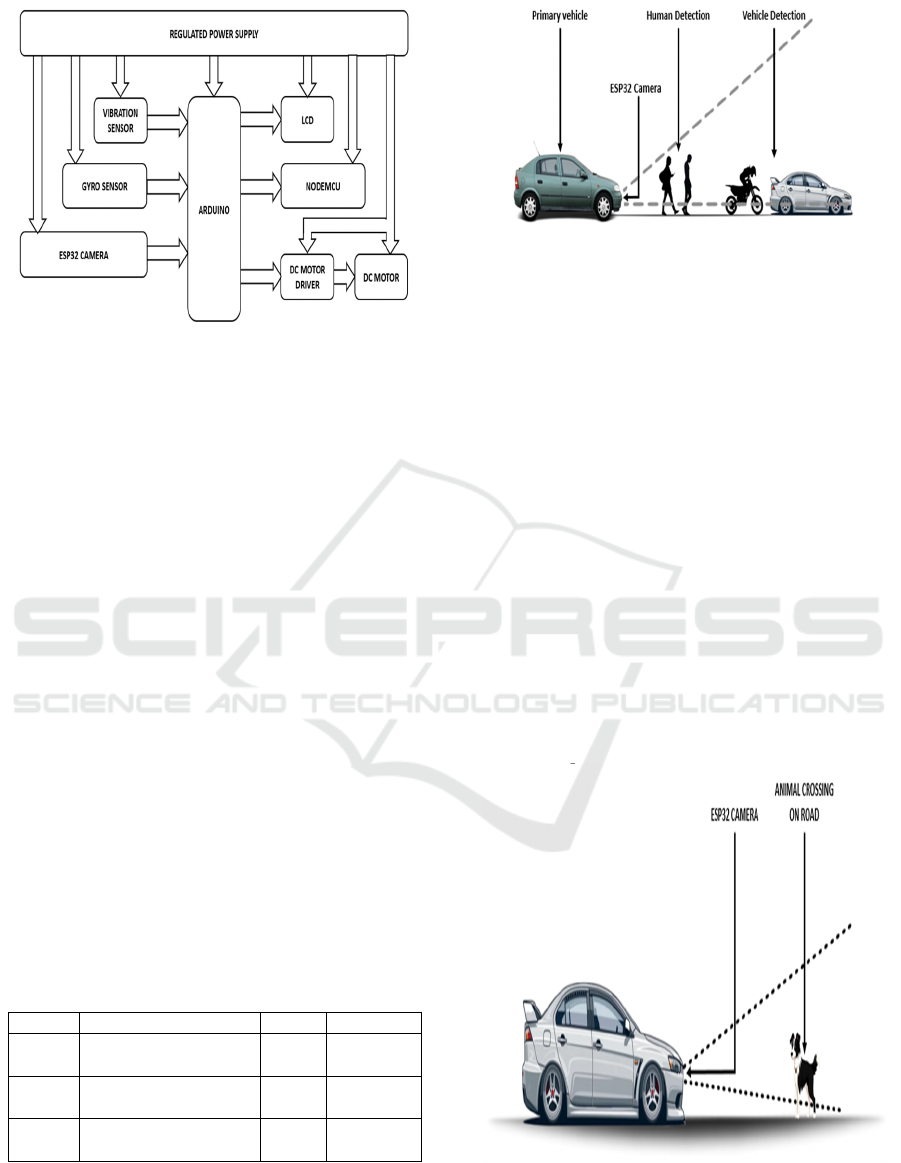

Figure 5: Vehicle and human detection using ESP32CAM.

Figure 5 shows vehicle and human detection, a key safety module within an autonomous vehicle. It uses a camera-based vision module, the ESP32CAM, to detect both vehicles and humans on or around the road. The video feed is processed in real time, and the object detection algorithm classifies each obstacle as either a vehicle or a human. The vehicle can thus quickly establish the presence of these potential hazards and respond immediately, for example by slowing down and alerting the operator. This function reduces the risk of accidents, enhancing safety for passengers inside the vehicle and pedestrians on the road. Detecting collision threats is necessary in dynamic traffic because timely detection of pedestrians and other vehicles prevents accidents and improves the vehicle's responsiveness.

Figure 6: Animal detection using ESP32CAM.

Figure 6 illustrates the animal detection system,

meant to make self-driving vehicles safer by identifying wild animals that might cross onto the road. It uses a camera-based vision module, the ESP32CAM, to capture real-time video. Image-processing techniques and object-detection algorithms enable the system to identify which objects in the scene are wild animals. When an animal is detected, the vehicle control system can act to prevent a collision, for example by reducing speed, alerting the driver, or engaging the emergency braking system. Animal detection is critical when a vehicle is in a rural or wildlife-prone region where animals might cross roads unexpectedly. This technology allows the vehicle to act proactively, reducing the risk of accidents with animals and making the driving environment safer.

The system can also detect multiple weather scenarios and notify other road users through V2V and V2X communication. This is a feature of the perception system of the autonomous vehicle, which perceives the environment and acts appropriately under varying weather conditions such as rain. The sensors monitor environmental variables, such as temperature, humidity, and rain, which may affect road safety. When the vehicle determines that a particular weather condition has occurred, it can change its speed or driving strategy accordingly.

Figure 7: V2V and V2X communication protocols in

proposed system.

This system utilizes the V2V and V2X communication protocols illustrated in Figure 7 to send real-time weather information to other vehicles or infrastructure entities, such as traffic lights, road signs, or local weather stations. These communication mechanisms help connected entities sense probable threats ahead of them, thereby improving decision-making and safety. For example, a car that identifies a slippery road notifies the surrounding cars via V2V, which in turn lower their speed or modify their movement. Traffic signal changes and road conditions are communicated to the car through V2X, allowing seamless and safe travel even in unfavourable weather. All vehicles and infrastructure elements are thus alerted to adverse weather conditions and can react in a coordinated way for improved safety and efficiency.
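The slippery-road notification described above can be sketched as a message that one vehicle encodes and another decodes and reacts to. The JSON layout, field names, and slowdown factor below are hypothetical and do not follow any standardized V2X message set; the paper does not specify its message format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class HazardMessage:
    sender_id: str      # hypothetical vehicle identifier
    hazard: str         # e.g. "slippery_road"
    latitude: float
    longitude: float
    timestamp_s: float


def encode(msg: HazardMessage) -> bytes:
    """Serialize a hazard message for broadcast."""
    return json.dumps(asdict(msg)).encode("utf-8")


def decode(payload: bytes) -> HazardMessage:
    """Rebuild a hazard message from a received payload."""
    return HazardMessage(**json.loads(payload.decode("utf-8")))


def react(current_speed_kmh: float, msg: HazardMessage,
          slowdown_factor: float = 0.7) -> float:
    """Return the new target speed; the factor is an assumed placeholder."""
    if msg.hazard == "slippery_road":
        return current_speed_kmh * slowdown_factor
    return current_speed_kmh


sent = HazardMessage("vehicle-A", "slippery_road", 11.36, 77.89, 1700000000.0)
received = decode(encode(sent))
print(received)
print(f"New target speed: {react(50.0, received):.1f} km/h")
```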

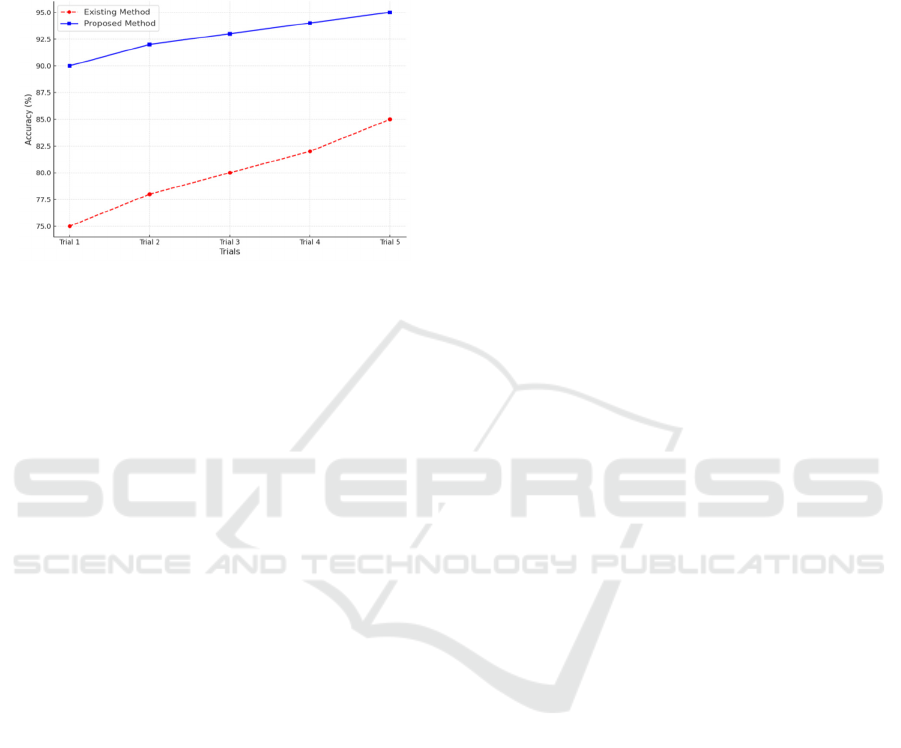

5 RESULTS

The results compare measured input and output values to confirm the performance improvement of the proposed method over the existing method. The metrics include obstacle detection accuracy, response time, and navigation success rate. Table 2 compares the most important metrics of the existing system with those of the proposed system; the proposed system performs better in obstacle detection rate, response time, navigation success rate, and environmental error rate:

Table 2: Comparison of Existing and Proposed System.

| S. No | Metric | Existing System | Proposed System | Units |
|---|---|---|---|---|
| 1 | Obstacle Detection Rate | 75 | 90 | % |
| 2 | Response Time | 400 | 250 | ms |
| 3 | Navigation Success Rate | 70 | 88 | % |
| 4 | Environmental Error Rate | 30 | 12 | % |

These enhancements demonstrate the effectiveness of the proposed system for road safety and navigation, with a significant decrease in error rate and a faster response time, as summarized in Table 2.
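The size of each improvement can be derived from the Table 2 values alone. The sketch below computes the relative change of each metric with respect to the existing system; the dictionary layout and function name are illustrative, and the figures are copied from Table 2.

```python
# (existing, proposed) pairs copied from Table 2.
TABLE_2 = {
    "Obstacle Detection Rate (%)": (75.0, 90.0),
    "Response Time (ms)": (400.0, 250.0),
    "Navigation Success Rate (%)": (70.0, 88.0),
    "Environmental Error Rate (%)": (30.0, 12.0),
}


def relative_change(existing: float, proposed: float) -> float:
    """Percentage change of the proposed value relative to the existing one."""
    return (proposed - existing) / existing * 100.0


for metric, (existing, proposed) in TABLE_2.items():
    change = relative_change(existing, proposed)
    print(f"{metric}: {existing:g} -> {proposed:g} ({change:+.1f}%)")
```

A negative change is an improvement for response time and error rate, and a positive change is an improvement for the two rate metrics.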

The system employs sensor technologies along with real-time data processing to ensure that the autonomous vehicle stays attentive to its surroundings and provides augmented safety

features. Through IoT integration and the use of sensor-based systems, this autonomous vehicle system offers a sustainable approach to improving transportation safety and efficiency in the smart cities of the future. Figure 8 shows the comparison of obstacle detection accuracy.

Figure 8: Comparison of obstacle detection accuracy across

trials.

Figure 8 compares the obstacle detection performance of the baseline method and the proposed approach over five runs. The baseline method shows a steady increase in detection accuracy, from 75% in the first run to 85% in the fifth run, indicating limited adaptability to changing scenarios. The proposed approach, in contrast, achieves consistently superior performance, between 90% and 95% in all runs. This improvement points to the effectiveness of the proposed system in enhancing detection precision through sensor integration and real-time processing. The consistent performance of the proposed method underscores its reliability and its capability to address the challenges of dynamic and complex environments.

6 DISCUSSIONS

The results of this study suggest that integrating real-time environmental sensing and adaptive control mechanisms into autonomous vehicles further enhances road safety, efficiency, and the general driving experience in dynamic conditions.

The results of this study align with similar research and further underline the need for real-time data processing and adaptive algorithms in autonomous vehicles. Real-time weather sensing and self-adjusting mechanisms have been shown to decrease accidents, especially in adverse weather conditions, by improving vehicle reaction times (Goberville et al. 2020). In addition, V2V and V2X communications enhance overall traffic safety through the sharing of critical information, improving the situational awareness of connected vehicles in dynamic traffic environments (Ali et al. 2018; Andreou et al. 2024). Sensors for pothole and slippery surface detection enable real-time hazard detection and vehicle adjustments that prevent accidents (Bello-Salau et al. 2018). Moreover, the ESP32CAM is used for detecting animals because camera-based vision systems have proved successful in preventing wildlife collisions by detecting animals on the road in advance (Ponn et al. 2020). This research supports the view that the safety and navigation capabilities of autonomous vehicles are significantly enhanced by incorporating various sensor technologies and communication systems.

Other studies, however, question the reliability of such systems under extreme conditions. For instance, the performance of V2X communication is considered doubtful in environments with poor network connectivity, as such systems may fail to provide real-time information when it is needed most, in remote areas (Ahangar et al. 2021). Likewise, in low visibility, excessive dependence on sensor information for detecting road anomalies may at times produce false alarms or missed hazards, which may render the system unreliable in such conditions (Vargas et al. 2021).

Although this study shows promising developments in autonomous vehicle safety, certain gaps remain in the proposed system. For example, the system was not tested under highly fluctuating or uncertain weather conditions that could affect sensor accuracy and system reliability. In addition, the limited availability of infrastructure in rural or developing areas will constrain the integration of V2X and V2V communication, so effectiveness would be compromised in these environments.

7 CONCLUSION AND FUTURE

SCOPE

This proposed intelligent autonomous vehicle system

improves road safety and navigation efficiency by

integrating multi-sensor technologies with real-time

data processing. It uses IoT-based weather monitoring, vibration and gyroscope sensors for road anomaly detection, and vision-based obstacle detection to ensure safe and adaptive operation. It achieved a 90% average obstacle detection rate, a 250 ms average response time, an 88% navigation success rate, and a 12% average environmental error rate. These metrics indicate its stability across various conditions, rapid response to hazards, and dependability in dynamic conditions. They support the potential of the system to improve the performance of autonomous vehicles across different road and weather conditions and provide a baseline for future improvement. Future research may improve the adaptability of autonomous vehicles to real-time changes in challenging weather and environmental conditions and address communication issues in areas with limited infrastructure. Applying AI and machine learning to enhance sensor fusion and decision-making is another promising direction. The ethical question of autonomous vehicles making life-or-death decisions based on sensor data remains open and is another area to be probed further in the future.

REFERENCES

Ahangar, M. Nadeem, et al. "A survey of autonomous

vehicles: Enabling communication technologies and

challenges." Sensors 21.3 (2021): 706.

Ali, A., et al. "Vehicle-to-Vehicle Communication for

Autonomous Vehicles: Safety and Maneuver Planning

2018." 88th Vehicular Technology Conference, DOI.

Vol. 10.

Andreou, Andreas, et al. "Advancing Smart City

Infrastructure with V2X Vehicle-Road Collaboration:

Architectural and Algorithmic Innovations." Mobile

Crowdsensing and Remote Sensing in Smart Cities.

Cham: Springer Nature Switzerland, 2024. 173-186.

Bello-Salau, Habeeb, et al. "New Road anomaly detection

and characterization algorithm for autonomous

vehicles." Applied Computing and Informatics 16.1/2

(2018): 223-239.

Ceder, Avishai. "Urban mobility and public transport:

future perspectives and review." International Journal

of Urban Sciences 25.4 (2021): 455-479.

Duarte, Fábio, and Carlo Ratti. "The impact of autonomous

vehicles on cities: A review." Journal of Urban

Technology 25.4 (2018): 3-18.

Engesser, V., Rombaut, E., Vanhaverbeke, L., & Lebeau,

P. (2023). Autonomous Delivery Solutions for Last-

Mile Logistics Operations: A Literature Review and

Research Agenda, Sustainability, 15 (3), 2774.

Faisal, Asif, et al. "Mapping two decades of autonomous

vehicle research: A systematic scientometric

analysis." Journal of Urban Technology 28.3-4 (2021):

45-74.

Goberville, N., El-Yabroudi, M., Omwanas, M., Rojas, J.,

Meyer, R., Asher, Z., & Abdel-Qader, I. (2020).

Analysis of LiDAR and camera data in real-world

weather conditions for autonomous vehicle operations.

SAE International Journal of Advances and Current

Practices in Mobility, 2(2020-01-0093), 2428-2434.

Gupta, A., Jain, S., Choudhary, P., & Parida, M. (2024).

Dynamic object detection using sparse LiDAR data for

autonomous machine driving and road safety

applications. Expert Systems with Applications, 255,

124636.

Hafssa, R., Fouad, J., & Moufad, I. (2024, May). Towards

Sustainable and Smart Urban Logistics: literature

review and emerging trends. In 6th International

Conference Green Cities 2024.

Leong, P. Y., & Ahmad, N. S. (2024). LiDar-based

Obstacle Avoidance with Autonomous Vehicles: A

Comprehensive Review. IEEE Access.

Li, Jing, et al. "Real-time self-driving car navigation and

obstacle avoidance using mobile 3D laser scanner and

GNSS." Multimedia Tools and Applications 76 (2017):

23017-23039.

McAslan, Devon, Max Gabriele, and Thaddeus R. Miller.

"Planning and policy directions for autonomous

vehicles in metropolitan planning organizations

(MPOs) in the United States." Journal of Urban

Technology 28.3-4 (2021): 175-201.

Mohsen, Baha M. "Ai-driven optimization of urban

logistics in smart cities: Integrating autonomous

vehicles and iot for efficient delivery systems." Sustainability 16.24 (2024): 11265.

Padmaja, Budi, et al. "Exploration of issues, challenges and

latest developments in autonomous cars." Journal of

Big Data 10.1 (2023): 61.

Ponn, Thomas, Thomas Kröger, and Frank Diermeyer.

"Identification and explanation of challenging

conditions for camera-based object detection of

automated vehicles." Sensors 20.13 (2020): 3699.

Sun, Y., & Ortiz, J. (2024). Data Fusion and Optimization

Techniques for Enhancing Autonomous Vehicle

Performance in Smart Cities. Journal of Artificial

Intelligence and Information, 1, 42-50.

Tang, Y., Zhao, C., Wang, J., Zhang, C., Sun, Q., Zheng,

W. X., ... & Kurths, J. (2022). Perception and navigation

in autonomous systems in the era of learning: A survey.

IEEE Transactions on Neural Networks and Learning

Systems, 34(12), 9604-9624.

Vargas, Jorge, et al. "An overview of autonomous vehicles

sensors and their vulnerability to weather

conditions." Sensors 21.16 (2021): 5397.

Xiao, M., Chen, L., Feng, H., Peng, Z., & Long, Q. (2024).

Smart City Public Transportation Route Planning

Based on Multi-objective Optimization: A Review.

Archives of Computational Methods in Engineering, 1-

25.

Yeong, De Jong, et al. "Sensor and sensor fusion

technology in autonomous vehicles: A review." Sensors 21.6 (2021): 2140.
