AI Driven Biointegrated Control Systems for Enhancing Driver Safety and Personalized Vehicle Adaptation

Raju Bhadrabasolara Revappa¹, Seema Vasudevan² and Jaideep Rukmangadan²

¹ Department of Automobile Engineering, The Oxford College of Engineering, Bangalore, Karnataka, India

² Department of Mechatronics Engineering, The Oxford College of Engineering, Bangalore, Karnataka, India

Keywords: AI Driven Systems, Biointegrated Control, Driver Monitoring, Real‑Time Driver Analysis.

Abstract: Rapid advances in AI are changing the automotive industry and enabling a new generation of driver-vehicle interaction. This article proposes AI-Driven Biointegrated Control Systems (AI-BICS), a platform that optimizes the interaction between vehicle and driver using real-time physiological and cognitive information from the driver. AI-BICS combines bio-sensing devices with deep learning to improve safety, ease of use and personalization. Bio-sensors embedded in the vehicle monitor physiological parameters such as heart rate, skin conductance and muscle activity. These signals are processed by a hybrid deep learning system composed of convolutional neural networks (CNNs) for image inputs and recurrent neural networks (RNNs) for time series. Transformer architectures then fuse the multi-modal data into a complete view of the driver’s state. The system adjusts vehicle settings such as acceleration and steering response on the fly and provides live feedback to help the driver stay in control and comfortable. The work makes several contributions. It goes beyond traditional driver monitoring by integrating real-time emotion detection, stress evaluation and fatigue detection to improve situational awareness. AI-BICS also supports adaptive control, moving smoothly between manual and autonomous driving, and tailors the driving experience by learning and responding to the user’s preferences. The intended outcomes are improved road safety and driver wellbeing. By addressing problems such as impaired driving and cognitive overload, the system aims to advance end-user vehicle technology. It can also be applied to fleet management and insurance schemes as part of safer and smarter transportation solutions.

1 INTRODUCTION

The application of artificial intelligence (AI) in the automobile industry has changed how vehicles work and interact with their occupants. AI has delivered major advances over the last decade, including autonomous driving, intelligent navigation and predictive maintenance. From early applications aimed at vehicle efficiency to sophisticated ones aimed at driver safety and comfort, AI is now an integral component of the automotive sector. These advances have not only changed driving but have also laid the foundation for human-centred systems that anticipate and accommodate driver requirements. They have also brought new challenges with the proliferation of semi-autonomous and self-driving cars. Safety and driver comfort remain major concerns, especially in transitional situations where human intervention is still needed. Existing systems, which can track simple driver actions, do not give a complete account of a driver’s physiological and mental state. Stress, fatigue and distraction are still leading causes of traffic accidents and call for improved monitoring and dynamic control systems. New technologies that go beyond surface data are needed to offer safe, personalized driving.

This study addresses an essential omission in current automotive technology: the timely integration of physiological and cognitive data to improve vehicle management and driver assistance. Modern driver monitors use cameras and rudimentary sensors to monitor behaviour, but they rarely deploy bio-sensing technologies that gather detailed data on a driver’s mental and emotional states. This constraint limits not only the system’s predictive response but also the development of truly user-centric vehicle experiences. To address this gap, we propose the AI-Driven Biointegrated Control System (AI-BICS). With advanced bio-sensing and recent AI models as its core components, AI-BICS aims to transform the driver-car interface. The system continuously adjusts vehicle dynamics in real time based on physiological and cognitive input to improve safety, comfort and personalization. Unlike traditional systems, AI-BICS does not just measure behaviour but provides a holistic view of the driver state that can guide smart decisions and interventions.

Revappa, R. B., Vasudevan, S. and Rukmangadan, J.
AI Driven Biointegrated Control Systems for Enhancing Driver Safety and Personalized Vehicle Adaptation.
DOI: 10.5220/0013888300004919
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 2, pages 669-675
ISBN: 978-989-758-777-1
Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

2 RELATED WORK

Driver monitoring systems have developed rapidly in recent years as artificial intelligence and sensors have been introduced (C.E. Okwudire, H.V. Madhyastha, 2021). Today’s driver monitoring systems (DMS) are mostly based on visual and behavioural signals, using in-cabin cameras to identify facial expressions, eye movements and head positions. These technologies aim to flag fatigue, sedation or sleepiness and to provide warnings or interventions to maintain safety (C. Chen, S. Ding, J. Wang, 2023). AI-based refinements of such systems have added some rudimentary predictive features, so that cars can predict risks from observable patterns (Y. Luo, M.R. Abidian, J.-H. Ahn, D. Akinwande, A.M. Andrews et al., 2023). Although they have made roads safer, these innovations have not been extended much beyond visual signals.

AI has also become a game-changer in vehicle control, in particular in autonomous driving and advanced driver-assistance systems (ADAS). These systems use ML algorithms for lane-keeping, adaptive cruise control and collision avoidance (J. Luo, W. Gao, Z.L. Wang, 2021). AI algorithms interpret information from LiDAR, radar and cameras to make decisions in real time, with the aim of reducing human error and improving driving performance. For all these improvements, however, such systems attend only to the external driving environment and not to the state of the driver inside the vehicle. These otherwise strong technologies are usually limited by a lack of fully integrated driver monitoring (C. Yang, B. Sun, G. Zhou, T. Guo, C. Ke et al., 2023).

Existing solutions, though innovative, have limitations. One major weakness is the absence of multi-modal integration to capture the driver’s physiological, cognitive and behavioural condition (G. Cao, P. Meng, J. Chen, H. Liu, R. Bian et al., 2021). The majority of systems are based on a single modality, such as eye movement, and ignore the data available from physiological measures (W. Huang, X. Xia, C. Zhu, P. Steichen, W. Quan et al., 2021) such as heart rate variability, skin conductance or EEG (X. Wang, H. Yang, E. Li, C. Cao, W. Zheng et al., 2023). This one-dimensional approach limits the system’s capacity to form an overall picture of the driver’s state. Further, existing mechanisms do not adapt dynamically in real time but depend on fixed thresholds and generic interventions. This constrains their ability to tailor interventions to the specific driver profile or the demands of the scenario, which means that they provide less support (M. Wang, T. Wang, Y. Luo, K. He, L. Pan et al., 2021).

AI-BICS is a proposed architecture that bridges these gaps by combining recent bio-sensing with current AI methods (S.H. Kwon et al., 2022). AI-BICS does not rely on inputs from a single modality but incorporates information from several (physiological signals, behavioural states, environment) to present a holistic picture of the state of the driver (Alagumalai et al., 2022). Its hybrid deep learning model combines CNNs, RNNs and a Transformer architecture to merge multiple sources of data into a unified decision stream in real time. Furthermore, AI-BICS can adapt to the driver’s state and provide individual-specific interventions according to the driver’s requirements and preferences. By linking analysis of the external environment with analysis of the driver’s internal state, AI-BICS aims to overcome key limitations of existing driver monitoring and control technologies.

3 PROPOSED FRAMEWORKS

AI-BICS (AI-Driven Biointegrated Control System) is a layered system that uses real-time physiological and behavioural information to adaptively improve driving behaviour and vehicle support. It consists of three layers (Bio-Sensing Layer, Data Processing Layer and AI Model), with the final layer including a decision process that modifies the behaviour of the vehicle according to the state of the driver. It provides a seamless connection between the driver and the vehicle for enhanced safety, convenience and customization through intelligent, adaptive control.


3.1 System Architecture

3.1.1 Bio-Sensing Layer

The bio-sensing layer is the foundation of AI-BICS and gathers a wide array of physiological and behavioural information from the driver. This layer consists of wearable and embedded sensors strategically located in the cabin of the car. Wristbands, EEG headsets, chest-based heart-rate monitors and similar devices record physiological data (heart rate, skin temperature, galvanic skin response, brain activity). Sensors embedded in the steering wheel, seats and seatbelts complement these signals by recording muscle tension, hand pressure and body posture. On-board emotion-recognition technology is used to detect emotions and stress levels: face-recognition cameras monitor micro-expressions, and EEG devices monitor brainwaves indicative of cognitive load, fatigue or stress. By combining these signals, the bio-sensing layer produces a continuous multi-modal feed that gives a detailed picture of the driver’s cognitive and physiological state. This granular, near-real-time understanding of the driver drives the AI-driven changes to the car.
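As an illustration of what a continuous multi-modal feed could look like in software, the following minimal sketch aligns independently sampled sensor streams to a common timestamp. The paper does not specify a data format, so the `Reading` type, the `build_frame` helper, the stream names and the `max_gap` tolerance are all hypothetical.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Reading:
    t: float      # timestamp in seconds
    value: float  # sensor value in the stream's own unit


def nearest(stream: List[Reading], t: float, max_gap: float) -> Optional[float]:
    """Return the value sampled closest to time t, or None if it is too far away."""
    if not stream:
        return None
    times = [r.t for r in stream]  # assumes the stream is sorted by time
    i = bisect_left(times, t)
    candidates = stream[max(i - 1, 0): i + 1]
    best = min(candidates, key=lambda r: abs(r.t - t))
    return best.value if abs(best.t - t) <= max_gap else None


def build_frame(t: float, streams: Dict[str, List[Reading]],
                max_gap: float = 0.5) -> Dict[str, Optional[float]]:
    """Assemble one multi-modal frame; missing modalities are reported as None."""
    return {name: nearest(stream, t, max_gap) for name, stream in streams.items()}


if __name__ == "__main__":
    streams = {
        "heart_rate_bpm": [Reading(0.0, 70), Reading(1.0, 72), Reading(2.0, 75)],
        "skin_conductance_uS": [Reading(0.2, 0.41), Reading(1.9, 0.47)],
    }
    print(build_frame(1.0, streams))
```

Reporting a missing modality as `None` rather than interpolating keeps the gap visible to later stages, which can then decide how to handle partial data.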

3.1.2 Data Processing Layer

The data processing layer handles the multi-modal, high-frequency data that the bio-sensing layer produces. Because the system operates in real time, edge computing is used for immediate preprocessing, filtering and feature extraction. Edge computing keeps latency low by processing the data locally in the car, which cuts the time needed to obtain a useful insight. For example, noise in EEG or heart-rate signals is smoothed out, and informative features such as signs of fatigue or stress are flagged for downstream analysis. For long-term training and optimization, the system also uses cloud-based deep learning platforms. Driver data collected over longer periods are anonymized and stored on cloud servers, where improved models are trained. Through this iterative mechanism, AI-BICS learns to adjust to different driver profiles and becomes more accurate in its predictions over time. Combining edge and cloud computing delivers both fast responses and long-term learning efficiently.
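The edge-side smoothing and feature extraction described above can be sketched as follows. This is only an illustration under stated assumptions: the paper does not name its filters or features, so the trailing moving average and the RMSSD heart-rate-variability feature (root mean square of successive differences between RR intervals) are stand-ins chosen because they are simple and widely used.

```python
from math import sqrt
from typing import List


def moving_average(signal: List[float], window: int) -> List[float]:
    """Trailing moving average; the first samples use a shorter window."""
    if window < 1:
        raise ValueError("window must be >= 1")
    out: List[float] = []
    running = 0.0
    for i, x in enumerate(signal):
        running += x
        if i >= window:
            running -= signal[i - window]  # drop the sample that left the window
        out.append(running / min(i + 1, window))
    return out


def rmssd(rr_ms: List[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    heart_rate = [71.0, 74.0, 69.0, 90.0, 72.0, 73.0]  # made-up samples with one spike
    print(moving_average(heart_rate, window=3))
    print(rmssd([800.0, 810.0, 790.0, 805.0]))
```

Both functions run in a single pass over the data, which suits the low-latency, in-vehicle processing the layer is meant to provide.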

3.1.3 AI Model

In AI-BICS, the hybrid deep learning model is the core component dedicated to handling the multi-modal data from the bio-sensing layer. It has three parts:

• Hybrid Deep Learning:
  • Convolutional Neural Networks (CNNs) learn from image data such as facial expressions recorded by in-cabin cameras. CNNs analyse images to spot small changes in expression that indicate tiredness, tension or other states.
  • Recurrent Neural Networks (RNNs) process time-series data such as heart rate, EEG waves and hand movements. RNNs are well suited to representing temporal dependencies and patterns that point to changes in cognitive or physiological states over time.

• Multi-Modal Data Fusion: To bring the various data flows together, Transformer-type architectures are used. Transformers make multi-modal fusion possible because they can track correlations between inputs from different modalities (e.g., EEG, face, heart rate) in parallel and with respect to their spatial order. By combining physiological, behavioural and environmental signals, Transformer models provide a single snapshot of the driver’s condition for prediction and intervention.

• Decision Layer: This layer converts the AI model’s outputs into automatic vehicle control actions and feedback loops in real time. Depending on the driver’s state ("alert", "fatigued" or "stressed"), the system adjusts the parameters of the car in real time. Steering feel, acceleration and suspension stiffness, for example, are all modified to compensate for impaired driving behaviour when the driver is tired.

AI-BICS further provides haptic and visual feedback that keeps the driver attentive. Haptic feedback, such as vibrations of the steering wheel or seat, alerts the driver when the system detects stress or distraction. Visual cues in dashboard notifications or head-up displays also support situational awareness and prompt corrective steps. Together, these elements provide an immediate, adaptive, personalised response focused on the safety and comfort of the driver while keeping the vehicle operating properly.
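The mapping from driver state to vehicle parameters and feedback can be illustrated with a small sketch. It assumes the AI model outputs a probability for each of the three states and blends per-state presets accordingly; the `VehicleParams` fields and every preset value below are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class VehicleParams:
    throttle_gain: float  # 1.0 = nominal accelerator response
    steering_gain: float  # 1.0 = nominal steering response
    haptic_alert: bool    # whether to vibrate the steering wheel or seat


# Hypothetical presets for each driver state (illustrative values only).
PRESETS: Dict[str, VehicleParams] = {
    "alert": VehicleParams(1.0, 1.0, False),
    "fatigued": VehicleParams(0.6, 0.7, True),
    "stressed": VehicleParams(0.8, 0.85, True),
}


def adapt(probs: Dict[str, float]) -> VehicleParams:
    """Blend presets by state probability; feedback follows the dominant state."""
    total = sum(probs.values())
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    weights = {state: p / total for state, p in probs.items()}
    throttle = sum(w * PRESETS[s].throttle_gain for s, w in weights.items())
    steering = sum(w * PRESETS[s].steering_gain for s, w in weights.items())
    dominant = max(weights, key=weights.get)
    return VehicleParams(round(throttle, 3), round(steering, 3),
                         PRESETS[dominant].haptic_alert)


if __name__ == "__main__":
    print(adapt({"alert": 0.2, "fatigued": 0.7, "stressed": 0.1}))
```

Blending by probability rather than switching on the most likely state avoids abrupt changes in steering or throttle response when the classifier is uncertain.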


Figure 1: Multi-Modal Data Fusion Engine for Driver State Recognition.

Figure 1 depicts the flow of information from the bio-sensors to vehicle control signals in the AI-BICS model. The upper layer shows inputs from EEG sensors, heart-rate monitors, wearables and embedded devices, all of which collect physiological and behavioural data in real time. These inputs are pre-processed at the Edge Computing Layer for low-latency data refinement and are also uploaded to the Cloud System for model consolidation over time. The middle section presents the Hybrid Deep Learning Model: CNNs and RNNs extract spatial and temporal features, and Transformer Multi-Modal Data Fusion combines the various inputs into a coherent representation. This combination enables robust driver state analysis. The lower portion of the figure represents the AI-BICS decision-making system, in which the Adaptive Vehicle Control System (AVCS) continuously monitors parameters such as speed and steering for enhanced driver safety, comfort and customization. The final output layer shows the resulting Enhanced Vehicle Performance.

The proposed AI-BICS would be a substantial upgrade over current driver monitoring platforms because it brings physiological, behavioural and environmental information into a single platform. The bio-sensing layer receives diverse inputs in real time, and the data processing layer provides feature extraction and model fitting. The hybrid deep learning model with CNNs, RNNs and Transformer architectures produces a prediction and decision for each driving scenario. AI-BICS offers a proactive, intelligent approach to filling key gaps in current automotive systems through dynamic vehicle parameter adjustments and feedback. Such a model could reshape the driver-car experience and set a benchmark in safety, customization and overall experience for next-generation vehicles.

3.2 AI Model Components

The AI-BICS platform is supported by AI models for accurate analysis and decision-making.

Data Input: The system processes a variety of physiological and behavioural data streams from embedded and wearable sensors. Physiological inputs such as heart-rate variability, skin conductance and EEG give an indication of the driver’s bodily state and mood. Behavioural information (eye tracking, facial micro-expressions and force applied to the steering wheel) complements the physiological information to provide insight into driver behaviour. With such a wide range of inputs, AI-BICS can recognize variations in cognitive load, fatigue and stress with high accuracy.

Multi-Modal Data Fusion: To process the multi-modal data streams efficiently, AI-BICS uses Transformer architectures. Transformers are especially suitable for merging multiple inputs because they can model the relationships between many variables simultaneously. This enables the system to fuse physiological and behavioural data in real time and to recognise patterns that indicate driver states. Through multi-modal data fusion, AI-BICS is intended to produce reliable insights even from partial or noisy data, with improved accuracy and reliability.
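The core idea behind attention-based fusion can be shown with a minimal sketch: a single query vector scores each modality embedding by scaled dot product, and the softmax of those scores weights the average. This is not the paper's Transformer, which would use learned projections, multiple heads and stacked layers; the `fuse` function, the embedding values and the handling of a missing modality as `None` are assumptions made for illustration.

```python
from math import exp, sqrt
from typing import Dict, List, Optional


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def fuse(query: List[float],
         embeddings: Dict[str, Optional[List[float]]]) -> List[float]:
    """Attention-weighted average of the modality embeddings that are present."""
    present = {k: v for k, v in embeddings.items() if v is not None}
    if not present:
        raise ValueError("no modality available")
    d = len(query)
    names = list(present)
    # Scaled dot-product score between the query and each modality embedding.
    scores = [sum(q * x for q, x in zip(query, present[n])) / sqrt(d) for n in names]
    weights = softmax(scores)
    return [sum(w * present[n][i] for w, n in zip(weights, names)) for i in range(d)]


if __name__ == "__main__":
    embeddings = {
        "eeg": [0.9, 0.1],
        "face": [0.2, 0.8],
        "heart_rate": None,  # sensor dropout: this modality is skipped
    }
    print(fuse([1.0, 0.0], embeddings))
```

Because the weights are renormalised over the modalities that are present, the fused vector stays well defined when a sensor drops out, which is one simple way to tolerate partial data.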

Prediction Layer: The prediction layer classifies the driver state into one of three categories: "alert", "fatigued" or "stressed". It uses a hybrid deep learning approach with Convolutional Neural Networks (CNNs) for image input (e.g., facial recognition) and Recurrent Neural Networks (RNNs) for time-series biometrics. The model is evaluated by its classification accuracy, recall and F1 score. AI-BICS maintains reliability by dynamically recalibrating predictions in real time with streaming data, so that the state classification remains correct across different driving scenarios.
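For reference, the evaluation metrics named above can be computed from true and predicted labels as in the following sketch. The label sequences are made up for illustration; precision is included because the F1 score is defined as the harmonic mean of precision and recall.

```python
from typing import Dict, List

STATES = ["alert", "fatigued", "stressed"]


def accuracy(y_true: List[str], y_pred: List[str]) -> float:
    """Fraction of samples whose predicted state matches the true state."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true: List[str], y_pred: List[str]) -> Dict[str, Dict[str, float]]:
    """Precision, recall and F1 for each driver state (one-vs-rest)."""
    metrics: Dict[str, Dict[str, float]] = {}
    for s in STATES:
        tp = sum(t == s and p == s for t, p in zip(y_true, y_pred))
        fp = sum(t != s and p == s for t, p in zip(y_true, y_pred))
        fn = sum(t == s and p != s for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[s] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


if __name__ == "__main__":
    y_true = ["alert", "alert", "fatigued", "stressed"]      # illustrative labels
    y_pred = ["alert", "fatigued", "fatigued", "stressed"]
    print(accuracy(y_true, y_pred))
    print(per_class_metrics(y_true, y_pred))
```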

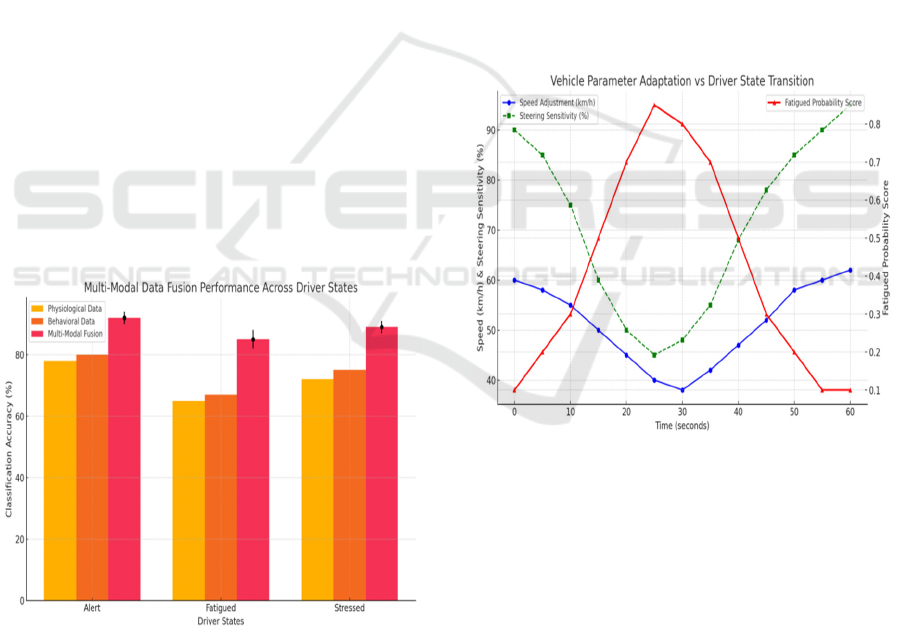

Figure 2: Accuracy of Multi-Modal Fusions for Different Driver States.

Decision Layer: In the decision layer, reinforcement learning (RL) approaches are applied to recommend appropriate interventions. In response to the detected driver state, the system automatically modifies vehicle parameters such as speed, steering and cabin environment. RL makes the interventions context-dependent, maximising safety and comfort while minimising interference with the driver. For example, when fatigue is detected, the system alternates between alerts and semi-autonomous control to improve driving comfort.
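The paper does not state which RL algorithm is used. As one possible reading, the following sketch applies tabular Q-learning with an epsilon-greedy policy to choose an intervention for each driver state. The action set, the hyperparameters and the reward signal (which would have to encode safety, comfort and driver interference) are all hypothetical.

```python
import random
from collections import defaultdict
from typing import DefaultDict, Tuple

ACTIONS = ["none", "haptic_alert", "reduce_speed", "semi_autonomous"]


class InterventionAgent:
    """Tabular Q-learning over (driver_state, intervention) pairs."""

    def __init__(self, alpha: float = 0.1, gamma: float = 0.9,
                 epsilon: float = 0.1, seed: int = 0) -> None:
        self.q: DefaultDict[Tuple[str, str], float] = defaultdict(float)
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.rng = random.Random(seed)

    def choose(self, state: str) -> str:
        """Epsilon-greedy choice of intervention for the given driver state."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def update(self, state: str, action: str, reward: float, next_state: str) -> None:
        """Standard Q-learning update towards reward + gamma * max_a Q(next_state, a)."""
        best_next = max(self.q[(next_state, a)] for a in ACTIONS)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


if __name__ == "__main__":
    agent = InterventionAgent(alpha=0.5, epsilon=0.0)
    # Hypothetical experience: a haptic alert brought a fatigued driver back to alert.
    agent.update("fatigued", "haptic_alert", reward=1.0, next_state="alert")
    print(agent.choose("fatigued"))
```

In a vehicle, exploration would need to be restricted to interventions already known to be safe; the epsilon-greedy policy here is only the simplest textbook choice.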

Figure 2 shows the classification accuracy for the driver states (Alert, Fatigued and Stressed) with single input modalities and with multi-modal data fusion. The figure demonstrates the performance gap between physiological data alone, behavioural data alone and integrated multi-modal fusion. Notably, multi-modal data fusion is more accurate in all states, with accuracy roughly 10 to 20 percentage points higher than that of the single-modality systems. This outcome shows why combining multiple data streams is crucial to a holistic analysis of driver condition. The error bars show the variation in the results and indicate the stability of AI-BICS in predicting driver states under various scenarios.

Figure 3: Vehicle Parameter Adaptation Vs. Driver State Transition.

Figure 3 shows how the parameters of the vehicle change in real time with the driver’s state. The graph indicates the relationship between the probability that the driver is fatigued and the subsequent modifications to the speed and steering controls. As the probability of the fatigued state increases, the system actively slows the vehicle and decreases steering response to improve stability and safety. When the driver returns to an alert state, both acceleration and steering gradually return to normal. This real-time adaptive behaviour demonstrates the responsiveness of the AI-BICS Adaptive Vehicle Control System in mitigating risk during impaired driving. The dual Y-axis display shows how the probabilities of driver states are matched to vehicle control changes over time, demonstrating the accuracy and usefulness of the system.

Table 1: Classification of Driver States Depending on Input Modes.

| Driver State | Physiological Data Accuracy (%) | Behavioral Data Accuracy (%) | Multi-Modal Fusion Accuracy (%) | Improvement Over Single Modality (%) |
|---|---|---|---|---|
| Alert | 78.5 | 80.2 | 92.3 | 13.8 |
| Fatigued | 65.4 | 67.8 | 85.5 | 17.7 |
| Stressed | 72.1 | 75.0 | 89.4 | 14.4 |

Table 1 presents the classification performance for each of the three driver states (Alert, Fatigued and Stressed) with three input configurations (physiological, behavioural and multi-modal fusion). The results show that multi-modal fusion performs considerably better than the individual modalities, with the highest classification accuracy across all states. For instance, physiological and behavioural data alone provide accuracies of 65–80%, whereas combining these modalities through multi-modal fusion yields 92.3% accuracy for "Alert", 85.5% for "Fatigued" and 89.4% for "Stressed". The reported accuracy improvement ranges from 13.8 to 17.7 percentage points, highlighting the value of integrating multiple data sources for a more accurate and valid measurement of driver state. These findings demonstrate the ability of the proposed AI-BICS model to detect driver states by capturing multidimensional physiological and behavioural patterns.
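The improvement figures can be checked with a short calculation from the accuracies in Table 1. The sketch below reads "improvement" as the difference in percentage points between the fusion accuracy and a single-modality accuracy; the paper does not state which single modality serves as the baseline, so both gaps are computed.

```python
from typing import Dict, Tuple

# (physiological, behavioural, multi-modal fusion) accuracies in %, from Table 1.
TABLE_1: Dict[str, Tuple[float, float, float]] = {
    "Alert": (78.5, 80.2, 92.3),
    "Fatigued": (65.4, 67.8, 85.5),
    "Stressed": (72.1, 75.0, 89.4),
}


def gains(physio: float, behav: float, fused: float) -> Tuple[float, float]:
    """Percentage-point gain of fusion over each single modality."""
    return round(fused - physio, 1), round(fused - behav, 1)


if __name__ == "__main__":
    for state, row in TABLE_1.items():
        over_physio, over_behav = gains(*row)
        print(f"{state}: +{over_physio} pp over physiological, "
              f"+{over_behav} pp over behavioural")
```

Under this reading, the reported 13.8 for "Alert" equals the gap over the physiological baseline, while the reported 17.7 and 14.4 for "Fatigued" and "Stressed" equal the gaps over the behavioural baseline.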

Table 2: System Latency and Response Time for Vehicle Parameter Adaptation.

| Driver State Transition | Speed Adaptation Latency (ms) | Steering Sensitivity Adjustment Latency (ms) | System Decision Time (ms) | Overall Response Time (ms) |
|---|---|---|---|---|
| Alert → Fatigued | 120 | 135 | 220 | 340 |
| Fatigued → Stressed | 140 | 155 | 250 | 370 |
| Stressed → Alert | 110 | 125 | 200 | 315 |

Table 2 shows the system’s responsiveness to driver state transitions in terms of latency and total response time for vehicle parameter changes. It covers three transitions (Alert to Fatigued, Fatigued to Stressed, and Stressed to Alert) and four response parameters: speed adaptation latency, steering sensitivity adjustment latency, system decision time and overall response time. The findings show that the system has low latency, with speed adaptation taking 110 to 140 ms and steering sensitivity adjustment taking 125 to 155 ms. System decision times are similarly short (200 to 250 ms), so that AI-BICS intervenes in time. The overall response time does not exceed 370 ms, which reflects how quickly the system reacts to driver state changes. These results illustrate the system’s suitability for real-time applications, where fast response is required for safety and driver assistance.
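To put these latencies in context, the following sketch finds the worst-case overall response time in Table 2 and converts it into the distance a vehicle covers before the adaptation takes effect. The speed of 100 km/h is an illustrative assumption and does not come from the paper.

```python
from typing import Dict

# Values transcribed from Table 2 (all in milliseconds).
TABLE_2: Dict[str, Dict[str, int]] = {
    "Alert -> Fatigued": {"speed": 120, "steering": 135, "decision": 220, "overall": 340},
    "Fatigued -> Stressed": {"speed": 140, "steering": 155, "decision": 250, "overall": 370},
    "Stressed -> Alert": {"speed": 110, "steering": 125, "decision": 200, "overall": 315},
}


def worst_case_ms(table: Dict[str, Dict[str, int]], key: str = "overall") -> int:
    """Largest value of the given latency column across all transitions."""
    return max(row[key] for row in table.values())


def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance covered at constant speed during the given latency."""
    return (speed_kmh / 3.6) * (latency_ms / 1000.0)


if __name__ == "__main__":
    worst = worst_case_ms(TABLE_2)
    print(f"worst-case overall response: {worst} ms")
    print(f"distance at 100 km/h: {distance_travelled_m(100.0, worst):.1f} m")
```

At the assumed 100 km/h, the worst-case 370 ms corresponds to roughly 10.3 m of travel.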

4 CONCLUSIONS

The AI-Driven Biointegrated Control System (AI-BICS) represents a next generation of automotive systems that combine real-time physiological and behavioural data with advanced AI. With its layered structure, AI-BICS improves road safety, personalization and the driving experience. Because the system can track driver conditions such as fatigue, stress and distraction, unsafe driving situations can be identified in advance and appropriate action taken to prevent them. By automatically controlling vehicle features, reconfiguring cabin settings and offering predictive accident-avoidance tools, AI-BICS sets a new bar for driver-car technology. The system’s ongoing learning also tailors every drive to the individual driver, with vehicle parameters and settings adapted accordingly. This research not only addresses the drawbacks of current driver monitoring technologies but also shows that multi-modal data fusion and real-time AI-powered decision support can be key to better vehicle performance and user safety. AI-BICS could have an impact beyond individual cars, in fleet management, autonomous vehicle operation and insurance models. As automotive technology evolves towards more intelligent, user-centric systems, AI-BICS is one innovation with the potential to change the paradigm of safety and personalization. It is scalable and flexible, which opens the door to future advancements in the merging of AI and human-centred technology.

REFERENCES

Alagumalai, W. Shou, O. Mahian, M. Aghbashlo, M. Tabatabaei et al., “Self-powered sensing systems with learning capability,” Joule, vol. 6, pp. 1475–1500, 2022. DOI: 10.1016/j.joule.2022.06.001.

B. Feng, T. Sun, W. Wang, Y. Xiao, J. Huo et al., “Venation-mimicking, ultrastretchable, room-temperature-attachable metal tapes for integrated electronic skins,” Adv. Mater., vol. 35, pp. 2208568, 2023. DOI: 10.1002/adma.202208568.

C. Chen, S. Ding, J. Wang, “Digital health for aging populations,” Nat. Med., vol. 29, pp. 1623–1630, 2023. DOI: 10.1038/s41591-023-02391-8.

C. Yang, B. Sun, G. Zhou, T. Guo, C. Ke et al., “Photoelectric memristor-based machine vision for artificial intelligence applications,” ACS Mater. Lett., vol. 5, pp. 504–526, 2023. DOI: 10.1021/acsmaterialslett.2c00911.

C.E. Okwudire, H.V. Madhyastha, “Distributed manufacturing for and by the masses,” Science, vol. 372, pp. 341–342, 2021. DOI: 10.1126/science.abg4924.

F. Sun, Q. Lu, S. Feng, T. Zhang, “Flexible artificial sensory systems based on neuromorphic devices,” ACS Nano, vol. 15, pp. 3875–3899, 2021. DOI: 10.1021/acsnano.0c10049.

G. Cao, P. Meng, J. Chen, H. Liu, R. Bian et al., “2D material based synaptic devices for neuromorphic computing,” Adv. Funct. Mater., vol. 31, pp. 2005443, 2021. DOI: 10.1002/adfm.202005443.

J. Luo, W. Gao, Z.L. Wang, “The triboelectric nanogenerator as an innovative technology toward intelligent sports,” Adv. Mater., vol. 33, pp. 2004178, 2021. DOI: 10.1002/adma.202004178.

M. Wang, Y. Luo, T. Wang, C. Wan, L. Pan et al., “Artificial skin perception,” Adv. Mater., vol. 33, pp. 2003014, 2021. DOI: 10.1002/adma.202003014.

M. Wang, T. Wang, Y. Luo, K. He, L. Pan et al., “Fusing stretchable sensing technology with machine learning for human–machine interfaces,” Adv. Funct. Mater., vol. 31, pp. 2008807, 2021. DOI: 10.1002/adfm.202008807.

S.H. Kwon, L. Dong, “Flexible sensors and machine learning for heart monitoring,” Nano Energy, vol. 102, pp. 107632, 2022. DOI: 10.1016/j.nanoen.2022.107632.

W. Huang, X. Xia, C. Zhu, P. Steichen, W. Quan et al., “Memristive artificial synapses for neuromorphic computing,” Nano-Micro Lett., vol. 13, pp. 85, 2021. DOI: 10.1007/s40820-021-00618-2.

X. Wang, H. Yang, E. Li, C. Cao, W. Zheng et al., “Stretchable transistor-structured artificial synapses for neuromorphic electronics,” Small, vol. 19, pp. 2205395, 2023. DOI: 10.1002/smll.202205395.

Y. Luo, M.R. Abidian, J.-H. Ahn, D. Akinwande, A.M. Andrews et al., “Technology roadmap for flexible sensors,” ACS Nano, vol. 17, pp. 5211–5295, 2023. DOI: 10.1021/acsnano.2c12606.

Y. Wang, M.L. Adam, Y. Zhao, W. Zheng, L. Gao et al., “Machine learning-enhanced flexible mechanical sensing,” Nano-Micro Lett., vol. 15, pp. 55, 2023. DOI: 10.1007/s40820-023-01013-9.
