AI-Enhanced Smart Helmet for Navigation, AR Integration and Road Safety

K. Karthika, Logeshwari A., Akkash Raj S. and Abinaya M. V.

Department of Electronics and Communication Engineering, KCG College of Technology, Chennai, Tamil Nadu, India

Keywords: Navigation, AI‑generation, AR Integration, Optimal Visibility, Road Security.

Abstract: The evolution of digital technology in the automotive sector has raised concerns about driver distraction caused by navigation screens. To address this challenge, a system using augmented reality is proposed that integrates navigation instructions directly into the driver’s line of sight, minimizing the need to look away. The system’s primary innovation involves gathering AI-generated navigation data, processing it through a main controller, and displaying it on an AR-based unit positioned for optimal visibility. This method allows navigation without diverting the driver’s focus from the road, thereby enhancing both safety and convenience. Additionally, an accident notification system is incorporated to further improve road security. By utilizing artificial intelligence, this project seeks to change how navigation information is presented, ultimately creating a more secure and efficient driving environment.

1 INTRODUCTION

As automakers embrace technological advancement, a new kind of system is nearly ready to reach the market: one that takes route guidance to the next level by combining augmented reality with AI-sourced data from the ChatGPT server. Real-time navigation prompts improve security and convenience. This AR navigation system includes a built-in accident detection feature and aims to raise safety standards in pursuit of a more aware and secure drive.

Prior work provides a comprehensive review of intelligent helmets for the systematic management of worker safety in construction areas. It describes how integrated sensors, communication protocols, and real-time tracking can help prevent accidents. A detailed description is given of an IoT-based smart helmet fitted with sensors to monitor environmental conditions and worker wellness. Various safety features, including fall detection, gas hazard identification, and live location tracking, are built into the platform to provide better on-site security. Researchers have also developed an ultra-low-power smart helmet for industrial safety, with sensors integrated into the helmet to monitor vital signs, detect risks, and inform workers and their supervisors. This article presents research on the integration of augmented reality (AR) into a smart helmet, with the aim of maximizing worker safety. Building on advancements in technology, the intelligent helmet provides real-time information overlays, helps identify hazards, and assists with navigation in the work environment. This paper proposes an AI smart helmet with real-time hazard detection and alerting systems. The helmet applies machine learning to sensor data to produce alerts and provide actionable safety guidance.

2 LITERATURE REVIEW

M. Li and A. I. Mourikis (2013) describe a Smart Helmet with a GPS module for location acquisition and a navigation system that displays the driving route on a screen. Users can stay focused on the road with step-by-step navigation and critical notifications, without having to look away. The helmet's built-in camera uses computer vision algorithms to enhance safety with obstacle recognition and collision warnings. The helmet is made of impact-resistant material. The visor works as a heads-up display (HUD), providing visual and audio cues to keep the driver safe at all times. The system continuously assesses user input and the surrounding environment to keep everyone safe while traveling. Additionally, other safety features are implemented to improve the protection of the rider.

Karthika, K., A., L., S., A. R. and V., A. M.
AI-Enhanced Smart Helmet for Navigation, AR Integration and Road Safety.
DOI: 10.5220/0013888100004919
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 2, pages 663-668
ISBN: 978-989-758-777-1
Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

L. Chang proposes a Smart Helmet designed with Bluetooth connectivity, enabling effortless synchronization with a rider’s smartphone. This functionality allows hands-free interactions, such as call management, receiving message alerts, and accessing mobile applications, eliminating the need to remove the helmet or use external devices. By integrating these capabilities, the project seeks to transform the riding experience, emphasizing both safety and convenience. The helmet enhances awareness and streamlines communication, ultimately improving the security and comfort of motorcyclists.

H. Min aims to develop an IoT-enabled smart helmet that enhances safety for motorcyclists. The proposed system includes features such as alcohol detection, pothole and speed breaker recognition, and fall detection. If the alcohol level exceeds the permissible limit, the ignition system prevents the rider from starting the bike. Using an MQ3 alcohol sensor, the system can detect alcohol levels from 0.05 mg/L to 10 mg/L. It also identifies potholes and speed bumps within a range of 2 cm to 400 cm. Additionally, the helmet utilizes GSM and GPS technology to notify a registered contact in case of an accident.

H. Ko proposes a Smart Helmet that integrates modern technologies to offer a holistic safety solution for motorcyclists. Key features include intelligent navigation support, easy call handling, real-time accident detection, alcohol level monitoring, fog elimination, and backlight indicators. These functionalities boost visibility, minimize risks, and promote adherence to safety standards. A built-in safety mechanism prevents the bike from starting unless the rider follows the necessary safety measures. Additionally, an LED strip in the visor aids in clearing fog, and a backlight at the rear improves visibility for other riders. Comprehensive testing validates the reliability and efficiency of the helmet in real-world conditions, while future improvements focus on advanced sensor integration, AI applications, better connectivity, and improved energy efficiency.

X. Chen proposes a helmet that enhances rider safety by providing alerts about potential road hazards, verifying helmet usage, and implementing wireless bike authentication to deter theft. Given the extensive use of motorcycles in India compared to cars, ensuring safety is paramount. This security mechanism aims to establish a robust vehicle protection system by modifying and integrating existing technologies. The system consists of three primary components: gas sensing, obstacle detection, and an anti-theft alarm, all linked to an ATmega16 microcontroller. The research outlines a Smart Helmet equipped with various safety and security features for enhanced rider protection.

J. Raquet's paper introduces a 3D point-cloud mapping approach for dynamic environments using a LiDAR sensor attached to a Smart Helmet worn by micro-mobility riders. The scan data obtained from the LiDAR is corrected by estimating the helmet’s 3D position and orientation using an NDT-based SLAM system combined with an IMU. The refined data is then projected onto an elevation map. The system separates scan data from static and dynamic objects using an occupancy grid method, ensuring that only static object data contributes to point-cloud mapping.

Z. M. Kassas's study presents an intelligent motorcycle helmet assistance system designed to provide riders with critical information. The system relays speed, navigation directions, and messages from smartphones to a heads-up display (HUD) that uses an OLED screen. Additionally, an inertial sensor monitors the rider’s posture, issuing an alert if prolonged downward head movement is detected. The system also detects traffic collisions through acceleration monitoring and triggers emergency notifications when needed. These enhancements help riders remain focused on the road, improving safety and the overall riding experience.

S.-H. Fang proposes a Smart Helmet system that extends its functionality by monitoring crucial vehicle parameters such as tire pressure and fuel levels. In case of an accident, a vibration sensor detects the impact and promptly activates an alert system through GSM communication, ensuring rapid emergency response. Wireless connectivity plays a key role, linking the helmet to an Arduino Mega via the ESP protocol for real-time data exchange. An LCD screen presents live status updates, and the GPS module allows real-time location sharing for improved navigation and security. This system prioritizes rider safety while also addressing authentication challenges, setting new standards for vehicle monitoring in modern transportation.

P. Thevenon et al. observe that advanced technologies enable rapid prototyping and testing of novel safety solutions, and that virtual and mixed reality environments help evaluate systems in hazardous conditions. Their paper explores a suite of motorcycle safety gadgets, including a Smart Helmet, a haptic jacket, and haptic gloves. The helmet features smart glasses and a communication headset, while the jacket incorporates vibration motors and LED signals. The gloves contain individual vibration motors to enhance sensory feedback. The system was tested in a simulated environment, and the findings demonstrate its potential to improve safety, comfort, and user experience for motorcyclists.

P. Thevenon et al. propose a system that employs a Smart Helmet as the primary user interface to gather motion and location data, transmitting it to a 3D visualization platform. The platform offers real-time tracking of user movement and positioning in three-dimensional space, delivering instant feedback. To refine sequential signal classification, the study proposes SFETNet, which enhances network learning by addressing limitations caused by simplistic feature sets. Compared to conventional classification networks, SFETNet delivers superior accuracy and performance. The system holds promise for applications in industries such as smart manufacturing and healthcare, where real-time movement tracking is essential.

3 EXISTING SYSTEM

Traditional vehicle navigation relies heavily on GPS technology, with most systems delivering guidance through in-car monitors or mobile devices that provide auditory and visual directions. While these solutions are widely used, they often require drivers to glance away from the road, increasing the risk of distractions and accidents. The current interface design does not always align with a driver’s line of sight, making it difficult to integrate navigation assistance naturally into the driving process. Constantly checking a separate device for directions can reduce focus on the road, elevating safety concerns. Additionally, conventional navigation systems may not respond efficiently to live traffic updates, sudden road hazards, or changes in route conditions. Many of these tools also feature user interfaces that are not intuitive, with cluttered menus and small displays that complicate interaction while driving.

4 PROPOSED SYSTEM

To reduce the risk of distractions, navigation guidance should be projected directly into the driver’s natural line of sight, removing the need to look away from the road. A streamlined, intuitive interface must be developed to align with the driver’s field of vision, ensuring smooth and immediate absorption of navigation cues. AI-powered data from the ChatGPT server will enable real-time traffic updates, road closure alerts, and adaptive routing for an optimized driving experience. Furthermore, the system should be designed for integration with existing vehicle infrastructure, ensuring broad compatibility across various makes and models.

5 ARCHITECTURE

The AI-Enhanced Smart Helmet is designed to

improve rider safety, streamline navigation, and

provide real-time hazard detection using Artificial

Intelligence (AI), Augmented Reality (AR), and

IoT-enabled sensors. The system consists of

multiple interconnected components that work

together to ensure a safer and more efficient riding

experience. At the core, the AI GEMINI Kit

functions as the brain, processing navigation

inputs, recognizing voice commands, and detecting

potential hazards through advanced AI algorithms.

The helmet is equipped with a transparent OLED

or AR- based display embedded into the visor,

which overlays turn- by-turn navigation, traffic

warnings, and road alerts directly onto the rider’s

line of sight, ensuring better focus and reducing

distractions. To enhance safety, the helmet

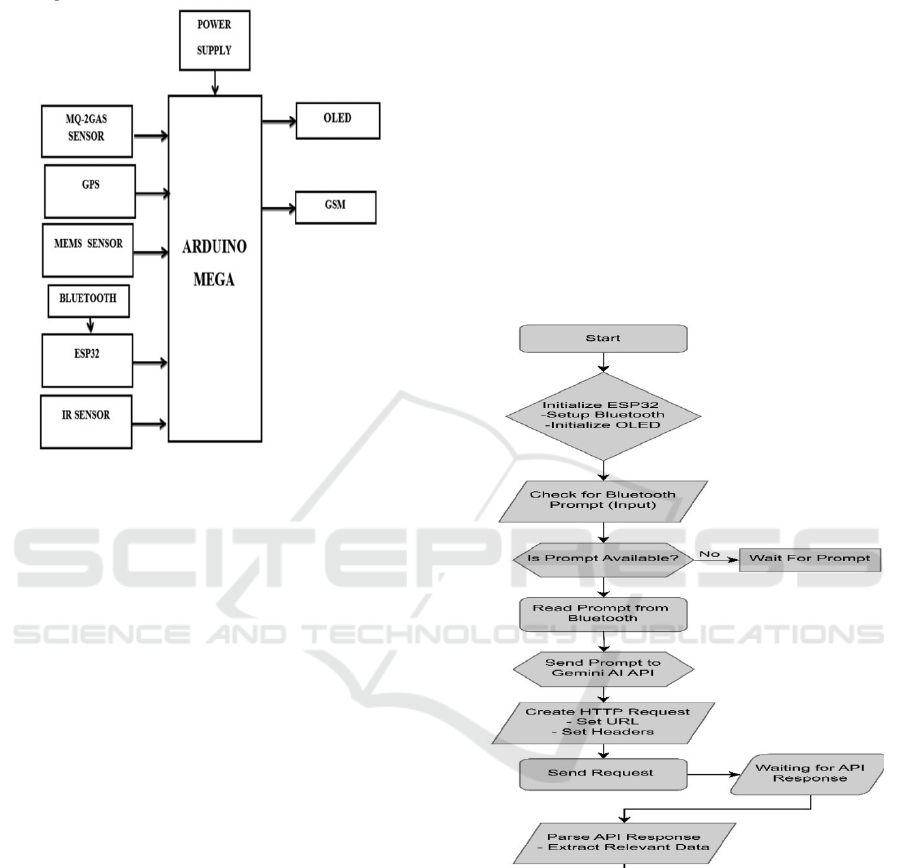

integrates a variety of sensors. Figure 1 shows the

system architecture.

A MEMS sensor detects sudden changes in

movement, falls, or collisions and immediately

activates emergency measures. The GPS module

continuously tracks real-time location data for

navigation and accident response, while the GSM

module instantly sends distress alerts to emergency

contacts when an accident is detected. An infrared

(IR) sensor ensures the helmet is worn correctly

before allowing the vehicle to start. Additionally,

an MQ-3 gas sensor monitors alcohol consumption

AI-Enhanced Smart Helmet for Navigation, AR Integration and Road Safety

665

by analyzing the rider’s breath, sending alerts if

intoxication is detected to discourage impaired

driving.

Figure 1: System Architecture.

The system also features Bluetooth for wireless smartphone connectivity, Wi-Fi for cloud-based AI communication, and V2X (Vehicle-to-Everything) integration to facilitate data exchange with smart traffic infrastructure and nearby vehicles, enabling proactive hazard avoidance.

The system operates through an automated workflow. When the rider puts on the helmet, the IR sensor verifies compliance and activates the system. The rider inputs the destination using voice commands or a mobile app, allowing AI GEMINI to retrieve live navigation data. The AR display then projects real-time directions onto the visor. Throughout the ride, the system continuously monitors safety parameters, issuing alerts for helmet non-compliance or alcohol detection. In the event of an accident, the MEMS sensor detects the impact, triggering the GPS module to acquire location coordinates and automatically send an emergency alert via the GSM module. The helmet also syncs with traffic networks for live updates and supports hands-free calls and navigation through Bluetooth. To optimize power usage, an automatic shutdown feature deactivates the system when the helmet is removed.

This AI-driven smart helmet architecture offers hands-free navigation, proactive hazard detection, intelligent connectivity with smart road systems, automated emergency response, and an intuitive AR-assisted interface. By integrating sensors, wireless communication, and artificial intelligence, the system aims to improve road safety by reducing accident risks and making two-wheeler travel safer and more efficient.
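The automated workflow described in this section can be read as a small state machine. The sketch below is illustrative only: the state names, the `Inputs` fields, and the `next_state` function are hypothetical and are not taken from the authors' firmware, and latching the emergency state until a manual reset is an assumption.

```python
from dataclasses import dataclass
from enum import Enum, auto


class State(Enum):
    OFF = auto()         # helmet removed, system shut down
    IDLE = auto()        # helmet worn, waiting for a destination
    NAVIGATING = auto()  # directions projected onto the visor
    EMERGENCY = auto()   # impact detected, alert being sent


@dataclass
class Inputs:
    helmet_worn: bool = False      # IR sensor reading
    destination_set: bool = False  # voice command or mobile app
    impact_detected: bool = False  # MEMS sensor reading


def next_state(state: State, inp: Inputs) -> State:
    """Return the next workflow state for the current sensor inputs."""
    if state is State.EMERGENCY:
        return State.EMERGENCY  # assumption: latched until manually reset
    if not inp.helmet_worn:
        return State.OFF        # automatic shutdown when helmet is removed
    if inp.impact_detected:
        return State.EMERGENCY  # GPS fix and GSM alert would start here
    if state is State.OFF:
        return State.IDLE       # IR sensor verified the helmet is worn
    if state is State.IDLE and inp.destination_set:
        return State.NAVIGATING
    return state


if __name__ == "__main__":
    state = State.OFF
    for inp in (
        Inputs(helmet_worn=True),
        Inputs(helmet_worn=True, destination_set=True),
        Inputs(helmet_worn=True, destination_set=True, impact_detected=True),
    ):
        state = next_state(state, inp)
        print(state.name)
```

Keeping the transition logic in a pure function makes the ordering of checks explicit; a real controller would attach the sensor reads and the GSM and display actions to these transitions.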

5.1 AI Gemini Server

Figure 2 shows an ESP32-based mechanism is

designed to interact with the Gemini AI API. The

process begins with setting up the ESP32,

activating Bluetooth, and turning on an OLED

display. The system listens for Bluetooth signals

and, upon detecting a prompt, captures the input,

forwards it to the Gemini AI API through an HTTP

request with appropriate headers and URL, and

awaits a response.

Figure 2: AI Gemini Sever.

After receiving the reply, it parses the data to

extract the required details. This cycle operates

continuously, ensuring real-time processing of

Bluetooth commands.

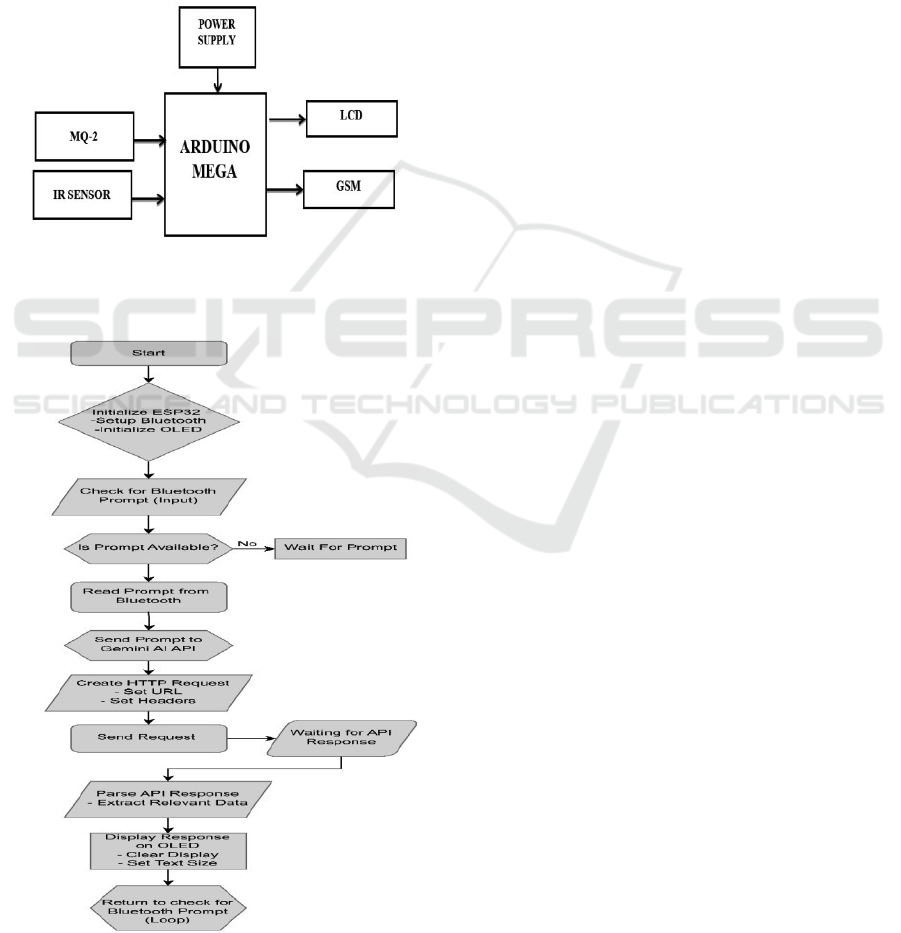
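The request and parsing steps can be sketched off-device in Python. This is a minimal illustration, not the authors' ESP32 code: it assumes the public `generateContent` REST endpoint of the Gemini API, and the model name, API key, and sample prompt are placeholders. The sketch only builds the request and parses a response; it does not send anything over the network.

```python
import json

# Assumption: the public Gemini REST endpoint. The model name is a placeholder
# and should be replaced with a currently available model.
GEMINI_URL = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    "{model}:generateContent"
)


def build_request(prompt: str, api_key: str, model: str = "gemini-1.5-flash"):
    """Return (url, headers, body) for a generateContent call."""
    url = GEMINI_URL.format(model=model)
    headers = {
        "Content-Type": "application/json",
        "x-goog-api-key": api_key,
    }
    body = json.dumps({"contents": [{"parts": [{"text": prompt}]}]})
    return url, headers, body.encode("utf-8")


def extract_text(response_json: str) -> str:
    """Extract the text of the first candidate, or '' if there is none."""
    data = json.loads(response_json)
    candidates = data.get("candidates") or []
    if not candidates:
        return ""
    parts = candidates[0].get("content", {}).get("parts", [])
    return "".join(part.get("text", "") for part in parts)


if __name__ == "__main__":
    # Hypothetical prompt received over Bluetooth.
    url, headers, body = build_request("Directions to the railway station", "API_KEY")
    print(url)
    # Hypothetical response, shaped like a generateContent reply.
    sample = '{"candidates": [{"content": {"parts": [{"text": "Turn left"}]}}]}'
    print(extract_text(sample))
```

On the device, the same JSON body would be posted by the ESP32's HTTP client and the reply parsed with an embedded JSON library; the structure of the request and response is the part this sketch is meant to show.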

5.2 AI Gemini Server to Display

The flowchart shows the working mechanism of an ESP32-powered system that communicates with the Gemini AI API. It begins by booting up the ESP32 microcontroller, setting up Bluetooth connections, and configuring an OLED display. The system continuously scans for Bluetooth signals and, upon detecting a prompt, reads its content and forwards it to the Gemini AI API. An HTTP request is generated with the necessary headers and URL, then sent to the API. After receiving the API’s response, the system processes the data, extracts the relevant details, and displays them on the OLED screen. The display is cleared and the text settings are adjusted before the system returns to scanning for new prompts, ensuring a continuous cycle of communication with the Gemini AI API. Figure 3 shows the flow from the AI Gemini server to the display.

Figure 3: AI Gemini Server to Display.
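Before the extracted text is drawn, it has to be broken into lines that fit the screen. The sketch below shows one way to do that. The display geometry is an assumption (a 128x64 OLED with a 6x8-pixel font, giving 21 columns and 8 rows), and `paginate` is a hypothetical helper, not part of the original system.

```python
import textwrap

# Assumed geometry: 128x64 pixels with a 6x8 font -> 21 columns, 8 rows.
COLS = 128 // 6
ROWS = 64 // 8


def paginate(text: str, cols: int = COLS, rows: int = ROWS):
    """Split text into screens, each a list of at most `rows` lines."""
    normalized = " ".join(text.split())  # collapse newlines and extra spaces
    lines = textwrap.wrap(normalized, width=cols)
    return [lines[i:i + rows] for i in range(0, len(lines), rows)]


if __name__ == "__main__":
    reply = "Head north for 200 m, then turn left at the signal and continue straight."
    for number, screen in enumerate(paginate(reply), start=1):
        print(f"screen {number}")
        for line in screen:
            print(line)
```

Each returned screen corresponds to one clear-and-redraw cycle of the display described above.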

5.3 Alcohol and Proximity Sensor Working

Built around an Arduino Mega microcontroller, this subsystem integrates sensors to promote safer driving conditions. The MQ-3 gas sensor identifies alcohol intake by the driver, while the IR sensor checks for proper helmet usage. If any safety violation occurs, the GSM module sends instant warnings to the user. This setup strengthens road safety by enforcing crucial driving regulations. Figure 4 shows the working of the alcohol and proximity sensors.

Figure 4: Alcohol and Proximity Sensor Working.
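The check performed on each sensor reading can be sketched as a simple rule. The threshold value below is a hypothetical placeholder, since the paper does not state the limit it uses, and `safety_alerts` is an illustrative name rather than the authors' function.

```python
from typing import List

# Hypothetical breath-alcohol threshold in mg/L; the paper does not give one.
ALCOHOL_LIMIT_MG_L = 0.25


def safety_alerts(
    alcohol_mg_l: float,
    helmet_worn: bool,
    limit: float = ALCOHOL_LIMIT_MG_L,
) -> List[str]:
    """Return the warning messages that the GSM module would send."""
    alerts = []
    if not helmet_worn:  # IR sensor reports no helmet
        alerts.append("Helmet not worn")
    if alcohol_mg_l > limit:  # alcohol sensor reading above the limit
        alerts.append(f"Alcohol detected: {alcohol_mg_l:.2f} mg/L")
    return alerts


if __name__ == "__main__":
    print(safety_alerts(0.0, True))
    print(safety_alerts(0.4, False))
```

Returning a list lets the caller send one message per violation or combine them into a single SMS.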

5.4 Accident Detection and Alert

An Arduino Mega-based system uses sensors, including a MEMS sensor, to identify accidents. Upon detection, the MEMS gyroscope signal prompts the system to acquire location data from the GPS module, and the Arduino relays an alert through GSM to emergency contacts. By enabling rapid response in critical situations, this technology enhances accident management and safety.
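A minimal sketch of the detect-and-alert path follows. It rests on several assumptions: the MEMS sensor is treated as a three-axis accelerometer reporting in units of g, the tilt and impact thresholds are hypothetical, the map link format is illustrative, and the phone number is a placeholder. The SMS is expressed as the standard text-mode AT command sequence (`AT+CMGF`, `AT+CMGS`) that GSM modems accept.

```python
import math

TILT_LIMIT_DEG = 60.0  # hypothetical tilt threshold
IMPACT_LIMIT_G = 3.0   # hypothetical impact threshold


def tilt_deg(ax: float, ay: float, az: float) -> float:
    """Angle between the sensor z-axis and the measured acceleration."""
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        return 0.0
    cosine = max(-1.0, min(1.0, az / norm))
    return math.degrees(math.acos(cosine))


def is_accident(ax: float, ay: float, az: float) -> bool:
    """Flag a strong impact or a large change in orientation."""
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    return magnitude > IMPACT_LIMIT_G or tilt_deg(ax, ay, az) > TILT_LIMIT_DEG


def sms_commands(number: str, lat: float, lon: float):
    """Build the AT command sequence for a text-mode SMS alert."""
    text = (
        "Accident detected. Location: "
        f"https://maps.google.com/?q={lat:.6f},{lon:.6f}"
    )
    return [
        "AT+CMGF=1\r",             # select SMS text mode
        f'AT+CMGS="{number}"\r',   # start a message to the contact
        text + "\x1a",             # message body, terminated by Ctrl-Z
    ]


if __name__ == "__main__":
    if is_accident(1.0, 0.0, 0.0):  # helmet lying on its side
        for command in sms_commands("+10000000000", 13.0827, 80.2707):
            print(repr(command))
```

In firmware, each string would be written to the GSM module's serial port, waiting for the modem's prompt between the second and third steps.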

6 WORKING PRINCIPLE

The Arduino Mega acts as the central controller of this project, coordinating key functions. The AI GEMINI kit processes navigation data and relays it to the main controller, which then wirelessly sends it to a designated display unit for the driver's convenience. A MEMS sensor linked to the main controller detects angular shifts and serves as a critical safety feature. When an accident occurs, the system recognizes the change in position and automatically retrieves GPS coordinates, sending them via GSM to emergency contacts for immediate assistance. To discourage impaired driving, an alcohol detection sensor is integrated. If alcohol consumption is identified, an automatic alert is dispatched to designated contacts via SMS. An IR sensor is also employed to check for helmet usage. If the driver is not wearing the helmet properly, the system promptly notifies them. The OLED display provides real-time updates, ensuring the user has constant access to necessary information. Through the fusion of sensor technologies, artificial intelligence, and wireless connectivity, this system aims to boost road safety, lower accident risks, and encourage responsible driving behavior.
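Retrieving GPS coordinates usually involves converting the module's output into decimal degrees before it can be placed in an alert. The sketch below assumes the GPS module emits NMEA 0183 `GGA` sentences, which the paper does not state; the sample sentence is made up for illustration, and the checksum is omitted and not validated.

```python
from typing import Optional, Tuple


def nmea_to_decimal(value: str, hemisphere: str) -> float:
    """Convert NMEA (d)ddmm.mmmm to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)        # leading digits are whole degrees
    minutes = raw - degrees * 100    # remainder is minutes
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence: str) -> Optional[Tuple[float, float]]:
    """Return (latitude, longitude) from a GGA sentence, or None if no fix."""
    fields = sentence.split(",")
    if len(fields) < 7 or not fields[0].endswith("GGA"):
        return None
    if fields[6] in ("", "0"):       # fix quality 0 means no position fix
        return None
    latitude = nmea_to_decimal(fields[2], fields[3])
    longitude = nmea_to_decimal(fields[4], fields[5])
    return latitude, longitude


if __name__ == "__main__":
    # Illustrative sentence only; checksum field left out for brevity.
    sample = "$GPGGA,123519,1304.9620,N,08016.2420,E,1,08,0.9,10.0,M,0.0,M,,"
    print(parse_gga(sample))
```

For example, a latitude field of `1304.9620` with hemisphere `N` means 13 degrees and 4.9620 minutes, which is 13 + 4.9620/60, or about 13.0827 degrees.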


7 RESULTS

Figure 5: Hardware Implementation.

Figure 5 shows the hardware implementation.

8 FUTURE SCOPE

By leveraging machine learning advancements, the system can refine its ability to analyze driver behavior, enabling a highly personalized and adaptive navigation experience. The integration of Vehicle-to-Everything (V2X) technology will allow vehicles to communicate with their surroundings, offering real-time traffic updates, hazard alerts, and collaboratively optimized routes for greater efficiency and safety. Future developments could also introduce holographic display technology or wearable AR devices, eliminating the need for fixed screens and reducing driver distractions. As autonomous vehicle technology continues to progress, the system could integrate with self-driving cars, providing an intuitive interface for passenger interaction. Additionally, collaboration with smart city infrastructure, such as AI-driven traffic signals, connected road signage, and automated parking systems, will further enhance navigation, road safety, and transportation efficiency, ultimately fostering a smarter and more connected mobility ecosystem.

9 CONCLUSIONS

The automotive industry is increasingly reliant on advanced digital technologies, yet navigation advancements have raised concerns about driver distraction. To address this issue, the project leverages augmented reality (AR) to provide real-time navigation assistance. The system gathers navigational inputs from the AI GEMINI server, processes them through the main controller, and displays relevant guidance within the driver’s immediate view. By integrating navigation overlays directly into the driver’s visual field, AR minimizes distractions and enhances road awareness. This improvement not only increases driving convenience but can also help reduce accident rates. Additionally, the inclusion of an accident alert system further strengthens road safety, changing how drivers interact with navigation for a more secure journey.

REFERENCES

H. Ko, B. Kim, and S.-H. Kong, “GNSS multipath-resistant cooperative navigation in urban vehicular network,” IEEE Trans. Veh. Technol., vol. 64, no. 12, pp. 5450–5463, 2015.

H. Min, X. Wu, C. Cheng, and X. Zhao, “Kinematic and dynamic vehicle model-assisted global positioning method for autonomous vehicles with low-cost GPS/camera/in-vehicle sensors,” IEEE Sensors J., vol. 19, no. 24, pp. 1–24, Jan. 2019.

J. Raquet and R. K. Martin, “Non-GNSS radio frequency navigation,” in Proc. IEEE Int. Conf. Acoust., Speech Signal Process., Mar. 2008, pp. 5308–5311.

L. Chang, X. Niu, T. Liu, J. Tang, and C. Qian, “GNSS/INS/LiDAR-SLAM integrated navigation system based on graph optimization,” Remote Sens., vol. 11, no. 9, p. 1009, Apr. 2019.

M. Li and A. I. Mourikis, “High-precision, consistent EKF-based visual-inertial odometry,” Int. J. Robot. Res., vol. 32, no. 6, pp. 690–711, May 2013.

P. Thevenon et al., “Positioning using mobile TV based on the DVB-SH standard,” Navigation, vol. 58, no. 2, pp. 71–90, Jun. 2011.

S.-H. Fang, J.-C. Chen, H.-R. Huang, and T.-N. Lin, “Is FM a RF-based positioning solution in a metropolitan-scale environment? A probabilistic approach with radio measurements analysis,” IEEE Trans. Broadcast., vol. 55, no. 3, pp. 577–588, Sep. 2009.

X. Chen and Y. Morton, “Iterative subspace alternating projection method for GNSS multipath DOA estimation,” IET Radar, Sonar Navigat., vol. 10, no. 7, pp. 1260–1269, Aug. 2016.

Z. M. Kassas, “Collaborative opportunistic navigation,” IEEE Aerosp. Electron. Syst. Mag., vol. 28, no. 6, pp. 38–41, Jun. 2013.

Z. M. Kassas, “Analysis and synthesis of collaborative opportunistic navigation systems,” Ph.D. dissertation, Dept. Elect. Comput. Eng., Univ. Texas Austin, Austin, TX, USA, 2014.
