Neem Leaf Disease Detection Using Hybrid Deep Learning Models

Jaldu Balasubramanyam Guptha¹, E Elakiya¹, K Ramesh² and E Anuja³

¹ School of Computer Science and Engineering, Vellore Institute of Technology, Chennai – 600127, Tamil Nadu, India

² Department of CSE, Sri Bharathi College for women, Pudkottai – 622303, Tamil Nadu, India

³ Department of Chemistry, University College of Engineering, Anna University, BIT Campus, Trichy – 620024, Tamil Nadu, India

Keywords: Neem leaf, Disease Detection, Deep Learning, Hybrid models, Smart Farming.

Abstract: Neem is well known for its medicinal value; however, neem yields are severely affected by various leaf diseases. Managing and controlling these diseases requires timely and accurate detection. This paper introduces a hybrid deep learning framework based on MobileNet and DenseNet that achieves an accuracy of 90.56%, the highest among the hybrid models compared. The proposed framework consists of image processing, feature extraction, and ensemble learning components to improve accuracy and robustness. The dataset includes 1,862 neem leaf images in six classes (five disease classes and healthy leaves); after augmentation, it was split 80-20 into training and testing sets. The proposed MobileNet-DenseNet framework improves on existing frameworks and illustrates the feature extraction and classification capabilities of the combined networks. Empirical results show that the model attains the highest accuracy and is an effective approach for neem leaf disease detection. This work provides precision agriculture with an automated framework for accurate neem leaf disease detection and timely disease management programs.

1 INTRODUCTION

Neem, one of the highly recognized medicinal trees,

possesses antibacterial, antifungal, and insecticidal

characteristics. Nevertheless, various leaf diseases

have a huge impact on its health and productivity,

hence restraining its growth capacity and medicinal

qualities. Some of the most prevalent neem leaf

diseases are Alternaria, Dieback, and Leaf Blight.

These diseases not only diminish the natural

resistance of the tree against insects but also decrease

the overall vigor of the tree, resulting in massive

agricultural and economic losses. A timely and

accurate diagnosis of these diseases is indispensable

for the proper implementation of control measures.

Traditional approaches for detecting neem leaf

diseases rely on manual inspection performed by

agricultural professionals. These methods are

subjective in nature, time-consuming, and not ideal

for large-scale surveillance. Moreover, most neem leaf diseases produce similar visual symptoms, which makes them difficult to differentiate through human observation. It is therefore crucial to devise an automated, accurate, and efficient method for classifying neem leaf infections.

Deep learning models have shown promising performance in plant disease diagnosis because they can extract complex features and classify images precisely. Architectures such as DenseNet, ResNet, MobileNet, AlexNet, and GoogleNet have all performed well in image classification. However, single models often struggle with intra-class variability and have limited feature extraction capacity, which reduces classification accuracy. To address these problems, hybrid models have become increasingly popular in recent work. This work introduces a hybrid deep learning model that combines the strengths of MobileNet and DenseNet for neem leaf disease classification (Elakiya et al., 2024). MobileNet, with its lightweight and computationally efficient architecture, enhances feature extraction, while DenseNet, with its dense connections and deep feature propagation, enhances classification accuracy. We compare the MobileNet-DenseNet model with other hybrid frameworks, namely DenseNet-AlexNet, DenseNet-ResNet, and DenseNet-GoogleNet, to determine the best architecture for classifying neem leaf diseases. To evaluate performance, a dataset of 1,862 images of neem leaves was used, classified into six classes: Alternaria, Dieback, Leaf Blight, Leaf Miners with Powdery Mildew, Powdery Mildew, and Healthy.

The contributions of this work are as follows. It proposes a hybrid deep learning model (MobileNet-DenseNet) for accurate neem leaf disease classification (Kanagaraj et al., 2023). It compares this model against three other hybrid models to select the optimal architecture. It demonstrates the practical application of the proposed model to the automatic diagnosis of neem leaf diseases, which benefits precision agriculture and sustainable neem tree cultivation.

Guptha, J. B., Elakiya, E., Ramesh, K. and Anuja, E. Neem Leaf Disease Detection Using Hybrid Deep Learning Models. DOI: 10.5220/0013887500004919. Paper published under CC license (CC BY-NC-ND 4.0). In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 2, pages 627-635. ISBN: 978-989-758-777-1. Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

2 RELATED WORKS

This section reviews hybrid deep learning architectures for plant disease detection, emphasizing their effectiveness in precision agriculture. One commonly used method combines EfficientNetB0 with MobileNetV2, both lightweight mobile architectures, and reaches an accuracy of 98.44%. This

hybrid system is more effective compared to other

conventional CNN-based architectures like ResNet

and AlexNet and, therefore, is a promising candidate

for plant disease diagnosis in real-time (Vamshi et al.,

2024). Another method involves combining Artificial

Neural Networks (ANNs) and Convolutional Neural

Networks (CNNs) for differentiation between

different types of plant diseases and achieves 98%

accuracy, 97% precision, and 96% recall (Vellela et

al., 2024).

A hybrid stacking learning approach that integrates pre-trained models with image processing has demonstrated improved performance. With ensemble CNNs trained on the PlantVillage dataset, which contains images of healthy and diseased leaves, this approach achieves classification accuracies ranging from 99.75% to 100% (Sheneamer, 2024). A hybrid approach integrating wavelet analysis, autoencoder denoising, and SVM classification has been reported to be effective for a range of plant species but does not specifically address neem leaf disease (Huddar et al., 2024). A hybrid model built on EfficientNetB7 enhances image segmentation and classification with an Adaptive and Attention-aided Mask R-CNN (AAM-RCNN), which is further optimized by the Boosted Random Parameter-based Golden Tortoise Beetle Optimizer (BRP-GTBO); this approach significantly improves plant disease detection and classification accuracy (Patil & Borse, 2025). Another hybrid model combining Convolutional Neural Networks (CNNs) and K-means clustering achieves 98.38% accuracy on a database of 7,771 leaf images, which suggests its suitability for automatic disease diagnosis (Mallma et al., 2021). A comparison of deep learning architectures such as VGG16, VGG19, and ResNet50 identified dataset limitations as a significant challenge, motivating hybrid models that combine deep learning and machine learning methods to improve classification performance (Kumar & Singh, 2023). Traditional image processing techniques such as histogram equalization, K-means clustering, and feature extraction via the Discrete Wavelet Transform (DWT), Principal Component Analysis (PCA), and Gray-Level Co-occurrence Matrix (GLCM) have also been tried, with CNNs performing consistently better than Support Vector Machine (SVM) and k-Nearest Neighbors (KNN) classifiers in disease identification (Kanabur et al., 2019).

A hybrid AlexNet+SVM model was reported to reach 99.9986% accuracy in large-scale classification of 38 leaf diseases across 12 crop species, though this approach is not designed specifically for neem leaf infections (Kawatra et al., 2020). A CNN-DenseNet hybrid model was employed in another study to enhance feature extraction, reaching an accuracy of 98.79%, and may be usable as a precision agriculture tool (Dari et al., 2023). Hybrid models that use K-means clustering to mark the diseased area and CNNs for classification had a mean accuracy of 92.6%, which is higher than conventional classification methods (Devi et al., 2021). The fusion of Vision Transformers (ViTs) and CNNs has also been employed for plant disease detection. A model using VGG16, Inception-V3, and DenseNet201 as CNN feature extractors attained 99.24% accuracy in apple leaf disease detection and 98% accuracy in the classification of corn leaf diseases, signifying the effectiveness of hybrid models in multi-scale feature extraction (Aboelenin et al., 2025). Transfer learning techniques combining DenseNet201 and VGG16 with SVM have also significantly enhanced disease classification, with high F-scores and improved performance over individual deep learning models (Sharma et al., 2023).


3 MATERIALS AND METHODS

3.1 Dataset Description

The dataset, which includes 1,862 neem leaf images, was collected from Mendeley Data; its class distribution is shown in Table 1. It is divided into six categories: Alternaria, Dieback, Leaf Blight, Leaf Miners with Powdery Mildew, Powdery Mildew, and Healthy leaves, with examples shown in Figures 1 and 2. Because of a considerable class imbalance, image augmentation techniques were used to obtain a uniform distribution across all classes, improving the model's capacity to generalize across different diseases. Several pre-processing steps (Elakiya & Rajkumar, 2017) were performed prior to training to ensure consistency and improve dataset quality. Each image was resized to fit the input dimensions of the CNN architectures. Pixel values were normalized to the 0 to 1 range to help with training stability and convergence. To sharpen the model's focus on key leaf properties, noise reduction techniques were used to reduce background interference.

3.2 Data Augmentation

Augmentation techniques were applied prior to dataset splitting to rectify class imbalance. A balanced dataset was achieved by augmenting each class to 565 images. The augmentation techniques included brightness adjustment, zooming (±20%), rotation (0° to 360°), horizontal and vertical flipping, and the addition of Gaussian noise. These transformations reduced the likelihood of overfitting while helping the models learn more robust and generalizable features under changes in scale, illumination, and orientation.
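The augmentation operations listed above could be sketched roughly as follows. This is a minimal illustration in plain Python, not the authors' pipeline: the rotation and zoom ranges come from the text, while the brightness range (0.8 to 1.2), the noise level (`noise_sigma = 0.02`), and the function names `sample_augmentation` and `apply_pixelwise` are assumptions made for the example. Only the flips, brightness scaling, and Gaussian noise are applied here; rotation and zoom are sampled but left to an image library, since they require interpolation.

```python
import random

def sample_augmentation(rng):
    """Draw one random set of augmentation parameters."""
    return {
        "rotation_deg": rng.uniform(0.0, 360.0),  # rotation range from the text
        "zoom": rng.uniform(0.8, 1.2),            # +/-20% zoom from the text
        "flip_horizontal": rng.random() < 0.5,
        "flip_vertical": rng.random() < 0.5,
        "brightness": rng.uniform(0.8, 1.2),      # assumed range, not from the paper
        "noise_sigma": 0.02,                      # assumed noise level, not from the paper
    }

def clip01(value):
    """Keep a pixel value inside the normalized [0, 1] range."""
    return min(1.0, max(0.0, value))

def apply_pixelwise(image, params, rng):
    """Apply flips, brightness scaling and Gaussian noise to a 2-D image in [0, 1]."""
    rows = [list(row) for row in image]
    if params["flip_horizontal"]:
        rows = [row[::-1] for row in rows]
    if params["flip_vertical"]:
        rows = rows[::-1]
    return [
        [clip01(p * params["brightness"] + rng.gauss(0.0, params["noise_sigma"]))
         for p in row]
        for row in rows
    ]

rng = random.Random(0)
image = [[0.1, 0.5], [0.9, 1.0]]  # tiny stand-in for a normalized leaf image
params = sample_augmentation(rng)
augmented = apply_pixelwise(image, params, rng)
```

Clipping after the brightness and noise steps keeps the augmented pixels in the same 0 to 1 range used for the original images.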

Table 1: Neem Leaf Disease Dataset.

| Disease | Number of images |
|---|---|
| Alternaria | 191 |
| Dieback | 174 |
| Leaf blight | 231 |
| Leaf miners’ Powdery mildew | 203 |
| Powdery mildew | 544 |
| Healthy | 519 |
| Total | 1862 |

Figure 1: (a) Alternaria (b) Dieback (c) Healthy.

Figure 2: (d) Leaf blight (e) Leaf miners’ Powdery mildew (f) Powdery mildew.

3.3 Data Splitting

The final dataset size after augmentation was 3,390 images (6 classes × 565 images), of which 80% were used for training (2,712 images) and 20% were used for testing (678 images).

In addition to preserving an independent test set for

unbiased evaluation, this ensured that the models had

enough data to train. The training set was utilized to

optimize model parameters, while the testing set gave

an objective evaluation of classification accuracy.
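The balancing and splitting arithmetic can be checked with a few lines of Python. The class counts are taken from Table 1 and the target of 565 images per class from Section 3.2; the variable names are illustrative only.

```python
# Original per-class image counts from Table 1.
original_counts = {
    "Alternaria": 191,
    "Dieback": 174,
    "Leaf blight": 231,
    "Leaf miners' Powdery mildew": 203,
    "Powdery mildew": 544,
    "Healthy": 519,
}
TARGET_PER_CLASS = 565  # balanced class size stated in Section 3.2
TRAIN_FRACTION = 0.8    # 80-20 split stated in Section 3.3

# Number of augmented images each class needs to reach the target.
extra_needed = {name: TARGET_PER_CLASS - n for name, n in original_counts.items()}

total_original = sum(original_counts.values())
total_balanced = TARGET_PER_CLASS * len(original_counts)
train_size = round(total_balanced * TRAIN_FRACTION)
test_size = total_balanced - train_size

print(total_original, total_balanced, train_size, test_size)
for name, extra in extra_needed.items():
    print(f"{name}: +{extra} augmented images")
```

The minority classes need far more synthetic images than the majority ones: Dieback gains 391 images, while Powdery mildew gains only 21.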

3.4 Architecture Design

The proposed hybrid deep learning model improves

neem leaf disease classification through a structured

pipeline that includes data preparation, augmentation,

model implementation, compilation, and training.

The dataset was preprocessed by scaling all images to

224×224 pixels for compliance with pretrained

models. Pixel normalization was the dataset was class

imbalance further, we apply data augmentation

techniques to the dataset where they are rotation,

flipping, zooming, brightness modifications, and

translations were used to avoid overfitting. The

hybrid model design is built on feature fusion, which

involves two deep learning models extracting distinct

feature representations that are then concatenated for

classification. The MobileNet+DenseNet hybrid

model, which performed best, combines

MobileNetV2's lightweight and efficient feature

extraction (Elakiya et al., 2024) with DenseNet121's

Neem Leaf Disease Detection Using Hybrid Deep Learning Models

629

hierarchical feature propagation. Additionally,

DenseNet+AlexNet, DenseNet+ResNet, and

DenseNet+GoogleNet hybrid models were created

for comparative analysis using a similar

methodology. Transfer learning was used in all

models, with ImageNet-pretrained weights to utilize

learnt feature representations while freezing the basic

model layers. Each model retrieved deep hierarchical

features from neem leaf images, then used Global

Average Pooling (GAP) to convert the feature maps

into one-dimensional vectors. The concatenation of

these feature vectors formed a compounded

representation that was used to increase classification

accuracy. The features went through a fully

connected dense layer of 128 neurons with ReLU

activation before the last SoftMax classification layer.

This layer allowed for the classification of input

images into six different neem leaf disease groups.

TensorFlow and Keras were used to create the

models, which were trained using an 80-20 train-

validation split across 60 epochs, with early pausing

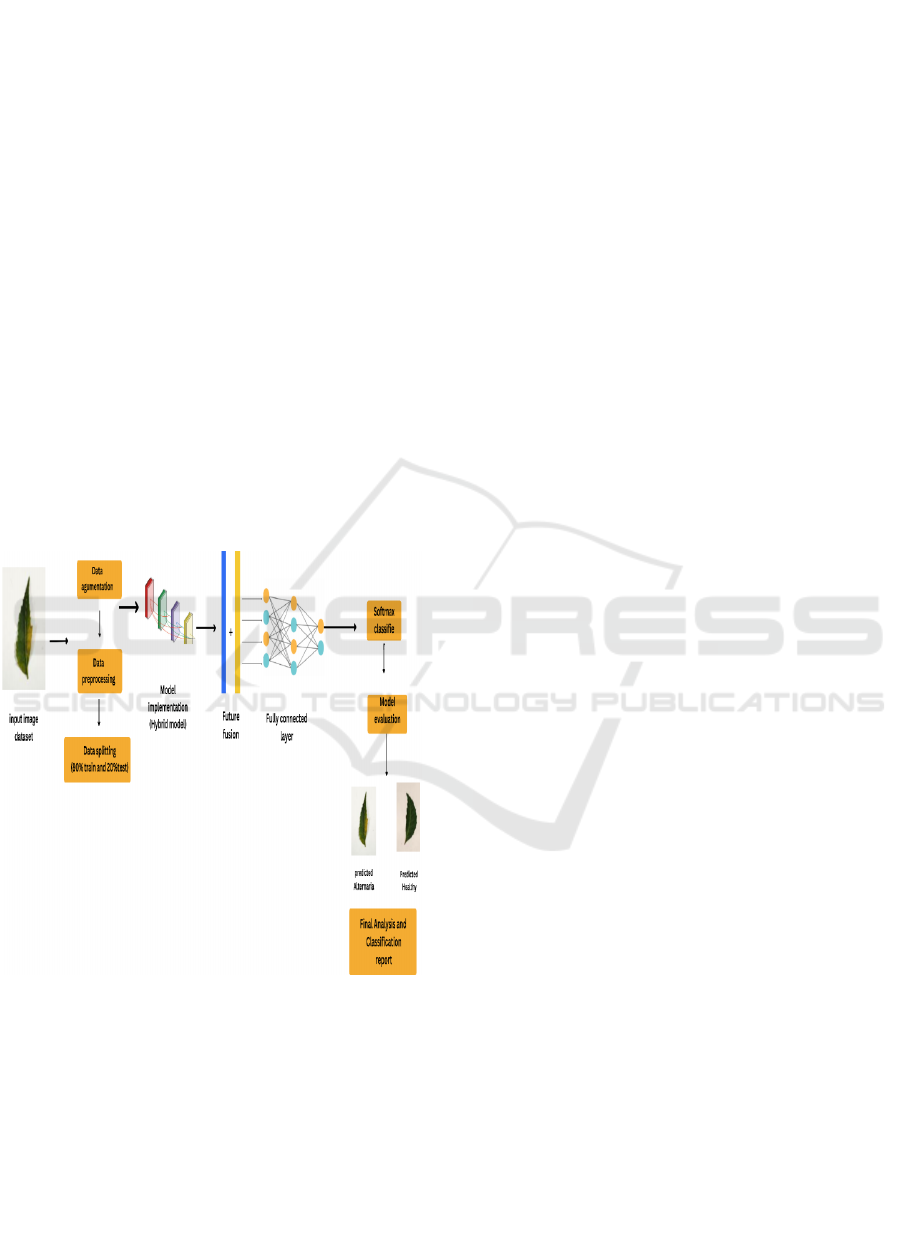

to prevent overfitting. Figure 3 illustrates the full

workflow. A full explanation of each model's

operating principles is given below.

Figure 3: Functional Diagram of the Proposed Model.
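The training setup in Section 3.4 stops training early, within a 60-epoch budget, to prevent overfitting, but the stopping criterion is not stated. The sketch below shows one common form, patience-based stopping on validation loss. The monitored quantity, the `patience` value, the function name `train_with_early_stopping`, and the loss values are all assumptions for illustration; the list of losses stands in for what a real training loop would compute each epoch.

```python
def train_with_early_stopping(val_losses, max_epochs=60, patience=5):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs, or when `max_epochs` is reached.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    stop_epoch = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        stop_epoch = epoch
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return stop_epoch, best_epoch

# Hypothetical validation losses: improve for four epochs, then drift upward.
losses = [0.90, 0.60, 0.40, 0.30, 0.31, 0.32, 0.33, 0.35, 0.36, 0.40]
stop_epoch, best_epoch = train_with_early_stopping(losses, patience=3)
print(stop_epoch, best_epoch)  # prints "7 4"
```

In practice the weights from `best_epoch` would be restored after stopping, so the extra epochs spent waiting do not degrade the final model.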

4 HYBRID MODELS

4.1 MobileNet-DenseNet

The MobileNet-DenseNet hybrid model combines the strengths of MobileNetV2, a lightweight and computationally efficient convolutional neural network, and DenseNet121, a deep network with improved feature propagation. MobileNetV2 is optimized for mobile and embedded vision tasks and offers a favorable trade-off between performance and computation. DenseNet121 reuses features efficiently, which improves performance while reducing the total number of parameters compared to conventional convolutional networks. The hybrid model accepts an input image of size 224×224×3 and passes it through MobileNetV2 and DenseNet121 in parallel, both pre-trained on the ImageNet database. To preserve the pre-learned representations, the base layers are frozen. After feature extraction, Global Average Pooling (GAP) layers transform the feature maps into one-dimensional vectors, reducing dimensionality while keeping a per-channel summary of the extracted features. The output feature vectors of both models are concatenated to produce a single representation that combines MobileNet's efficient spatial feature learning with DenseNet's hierarchical feature propagation. The concatenated feature vector is then fed into a fully connected dense layer of 128 units with ReLU activation followed by a softmax classifier, which classifies the image into one of the six neem leaf classes. Figure 3 shows the end-to-end process.
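To make the fusion head concrete, the following plain-Python sketch traces the shapes through GAP, concatenation, the 128-unit ReLU layer, and the six-way softmax for a single image. It is illustrative only: random numbers stand in for the frozen backbones' outputs and for the trained weights, and the channel counts (1280 for MobileNetV2, 1024 for DenseNet121) and the 7×7 spatial grid are those of the standard ImageNet configurations at 224×224 input, which the paper does not state explicitly.

```python
import math
import random

NUM_CLASSES = 6
MOBILENETV2_CHANNELS = 1280  # assumed: standard MobileNetV2 final feature depth
DENSENET121_CHANNELS = 1024  # assumed: standard DenseNet121 final feature depth

def global_average_pool(feature_map):
    """Average an H x W x C feature map over H and W, giving a length-C vector."""
    h, w, c = len(feature_map), len(feature_map[0]), len(feature_map[0][0])
    return [
        sum(feature_map[i][j][k] for i in range(h) for j in range(w)) / (h * w)
        for k in range(c)
    ]

def dense(vector, weights, biases, activation=None):
    """Fully connected layer; `weights` holds one row of input weights per output unit."""
    out = [sum(w * x for w, x in zip(row, vector)) + b
           for row, b in zip(weights, biases)]
    if activation == "relu":
        out = [max(0.0, v) for v in out]
    return out

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def random_map(rng, h, w, c):
    return [[[rng.random() for _ in range(c)] for _ in range(w)] for _ in range(h)]

def random_weights(rng, n_out, n_in):
    scale = 1.0 / math.sqrt(n_in)
    return [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]

rng = random.Random(0)
# Random stand-ins for the two backbones' outputs on one 224x224x3 image.
mobilenet_map = random_map(rng, 7, 7, MOBILENETV2_CHANNELS)
densenet_map = random_map(rng, 7, 7, DENSENET121_CHANNELS)

# List concatenation of the two pooled vectors is the feature fusion step.
fused = global_average_pool(mobilenet_map) + global_average_pool(densenet_map)
hidden = dense(fused, random_weights(rng, 128, len(fused)), [0.0] * 128, activation="relu")
probs = softmax(dense(hidden, random_weights(rng, NUM_CLASSES, 128), [0.0] * NUM_CLASSES))
print(len(fused), len(hidden), len(probs))  # prints "2304 128 6"
```

Under these assumptions the fused vector has 2,304 entries, so the only trainable parameters are those of the 128-unit layer and the softmax layer; the backbones contribute fixed features.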

4.2 DenseNet-AlexNet

DenseNet121 is the base deep feature extractor in the DenseNet-AlexNet hybrid model, with AlexNet offering supplemental feature learning through its plain but effective convolutional layers. AlexNet comprises five convolutional and three fully connected layers and extracts principal textures and low-level spatial information that complement DenseNet's deeper feature representations. As with the first model, both networks process an input image in parallel, and the feature maps generated by both networks are reduced to one-dimensional feature vectors using Global Average Pooling (GAP). The two feature vectors, each carrying the strengths of its network, are concatenated before moving through a fully connected layer. The final classification uses the softmax activation function. The combination of AlexNet's simplicity and DenseNet's dense connections yields a model that balances computation with deeper feature extraction.


4.3 DenseNet-ResNet50

The hybrid DenseNet-ResNet50 architecture integrates DenseNet's feature reuse mechanism with the residual connections of ResNet, which stabilize deep neural networks by mitigating the vanishing gradient problem. The ResNet50 deep residual learning architecture improves gradient flow between layers, resulting in better extraction of deep features. In this setup, DenseNet121 and ResNet50, both pre-trained on ImageNet, process an input image in parallel. After feature extraction, Global Average Pooling (GAP) transforms the output of both networks into one-dimensional feature vectors. These vectors are concatenated to form a combined feature representation that pairs ResNet's hierarchical learning benefits with DenseNet's dense connectivity. The merged feature vector then passes through a fully connected layer with 128 neurons, followed by classification through a softmax layer. This hybrid model benefits from ResNet's capacity to retain learned information in deep networks while exploiting DenseNet's efficient feature sharing between layers.

4.4 DenseNet-GoogleNet

The DenseNet-GoogleNet model combines GoogleNet's inception modules with DenseNet's densely connected layers to improve multi-scale feature extraction. GoogleNet (used here in its InceptionV3 form) is widely used for its parallel convolutional filters with different kernel sizes, which allow the model to collect information at different scales. This is very useful for detecting complex disease patterns. In this hybrid design, DenseNet121 and GoogleNet (InceptionV3) process the input image separately, so each network extracts features independently. GoogleNet's inception modules can recognize fine-grained features as well as larger structural information. The feature maps extracted from each network are then fed to Global Average Pooling (GAP) to obtain compact feature vectors. These feature vectors are concatenated, leveraging the strengths of both designs, before being passed to a fully connected dense layer followed by a final softmax classifier. The entire working process of the hybrid model is depicted in Figure 3. GoogleNet's capability of processing input at different scales complements DenseNet's hierarchical feature extraction, which makes this hybrid model well suited to recognizing neem leaf diseases of varying intensities.

Algorithm 1: DenseNet-MobileNet Hybrid Model.

Input: X → dataset; d → preprocessed dataset of resized images; l → corresponding labels for the images
Output: final classification performance on the test dataset

For each epoch:
    Feature extraction using MobileNetV2:
        For each convolution layer in MobileNetV2:
            For each input image in X:
                Extract feature map a_ij from the MobileNetV2 convolutional layers.
            End for
        End for
        Apply Global Average Pooling (GAP) to obtain a compact feature representation.
        Final MobileNetV2 feature vector: (1, num_filters)
    Feature extraction using DenseNet121:
        For each convolution layer in DenseNet121:
            For each input image in X:
                Extract feature map a_ij from the DenseNet121 convolutional layers.
            End for
        End for
        Apply Global Average Pooling (GAP) to obtain a compact feature representation.
        Final DenseNet121 feature vector: (1, num_filters)
    Hybrid feature fusion:
        Define feature set fet from dataset d.
        For each image in dataset:
            Preprocess the image before inputting it into the models.
        End for
        Split dataset into train_fet, test_fet, train_labels, test_labels.
    Train and evaluate MobileNetV2:
        M_MobileNet ← Train MobileNetV2 on train_fet, train_labels.
        Extract training features: MobileNet_train ← M_MobileNet.predict(train_fet).
        Extract testing features: MobileNet_test ← M_MobileNet.predict(test_fet).
    Train and evaluate DenseNet121:
        M_DenseNet ← Train DenseNet121 on train_fet, train_labels.
        Extract training features: DenseNet_train ← M_DenseNet.predict(train_fet).
        Extract testing features: DenseNet_test ← M_DenseNet.predict(test_fet).
    Hybrid model construction:
        Combine extracted features:
            model_train ← Concatenation of MobileNet_train and DenseNet_train.
            model_test ← Concatenation of MobileNet_test and DenseNet_test.
        Train a fully connected neural network on the merged feature set.
        Evaluate performance on test data.

5 RESULTS AND DISCUSSION

The performance of the proposed hybrid deep learning models was assessed using several metrics: accuracy, loss, precision, recall, F1-score, sensitivity, specificity, AUC (Area Under the Curve), and MCC (Matthews Correlation Coefficient), which are reported in Table 2.
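Most of these metrics (all except loss and AUC, which need the predicted probabilities) can be derived from a confusion matrix. The sketch below shows one way to do so for a multi-class problem. It assumes macro averaging over classes, which the paper does not state, and uses a small hypothetical 3-class matrix rather than the paper's results; the function name `multiclass_metrics` is illustrative.

```python
import math

def multiclass_metrics(cm):
    """Compute macro-averaged metrics from a square confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(cm[i]) for i in range(k)]                       # true counts
    col_sums = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted counts
    correct = sum(cm[i][i] for i in range(k))

    precisions, recalls, f1s, specificities = [], [], [], []
    for c in range(k):
        tp = cm[c][c]
        fp = col_sums[c] - tp
        fn = row_sums[c] - tp
        tn = total - tp - fp - fn
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
        specificities.append(tn / (tn + fp) if tn + fp else 0.0)

    # Multi-class Matthews correlation coefficient.
    num = correct * total - sum(row_sums[c] * col_sums[c] for c in range(k))
    den = math.sqrt((total ** 2 - sum(s * s for s in col_sums)) *
                    (total ** 2 - sum(s * s for s in row_sums)))
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,  # macro recall is the same quantity as sensitivity
        "f1": sum(f1s) / k,
        "specificity": sum(specificities) / k,
        "mcc": num / den if den else 0.0,
    }

# Hypothetical 3-class confusion matrix, for illustration only.
example = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 1, 9],
]
metrics = multiclass_metrics(example)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because sensitivity is recall under another name, the two rows in a results table coincide when both are macro-averaged. Specificity tends to be high in multi-class problems because each class's true negatives include the correct predictions for every other class.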

Table 2: Comparison of accuracy and error metrics for the evaluated models (all values in % except loss).

| Parameters | DenseNet-GoogleNet | DenseNet-AlexNet | DenseNet-ResNet50 | DenseNet-MobileNet |
|---|---|---|---|---|
| Accuracy | 88.20 | 88.50 | 88.79 | 90.56 |
| Precision | 87.68 | 87.82 | 87.97 | 89.71 |
| Recall | 87.09 | 87.43 | 86.96 | 89.36 |
| F1-score | 87.29 | 87.47 | 87.13 | 89.42 |
| Sensitivity | 87.09 | 87.43 | 86.96 | 89.36 |
| Specificity | 97.63 | 97.68 | 97.57 | 97.95 |
| MCC | 85.50 | 85.86 | 85.23 | 87.64 |
| AUC | 98.25 | 98.46 | 98.14 | 97.64 |
| Loss | 0.1 | 0.1 | 0.1 | 0.09 |

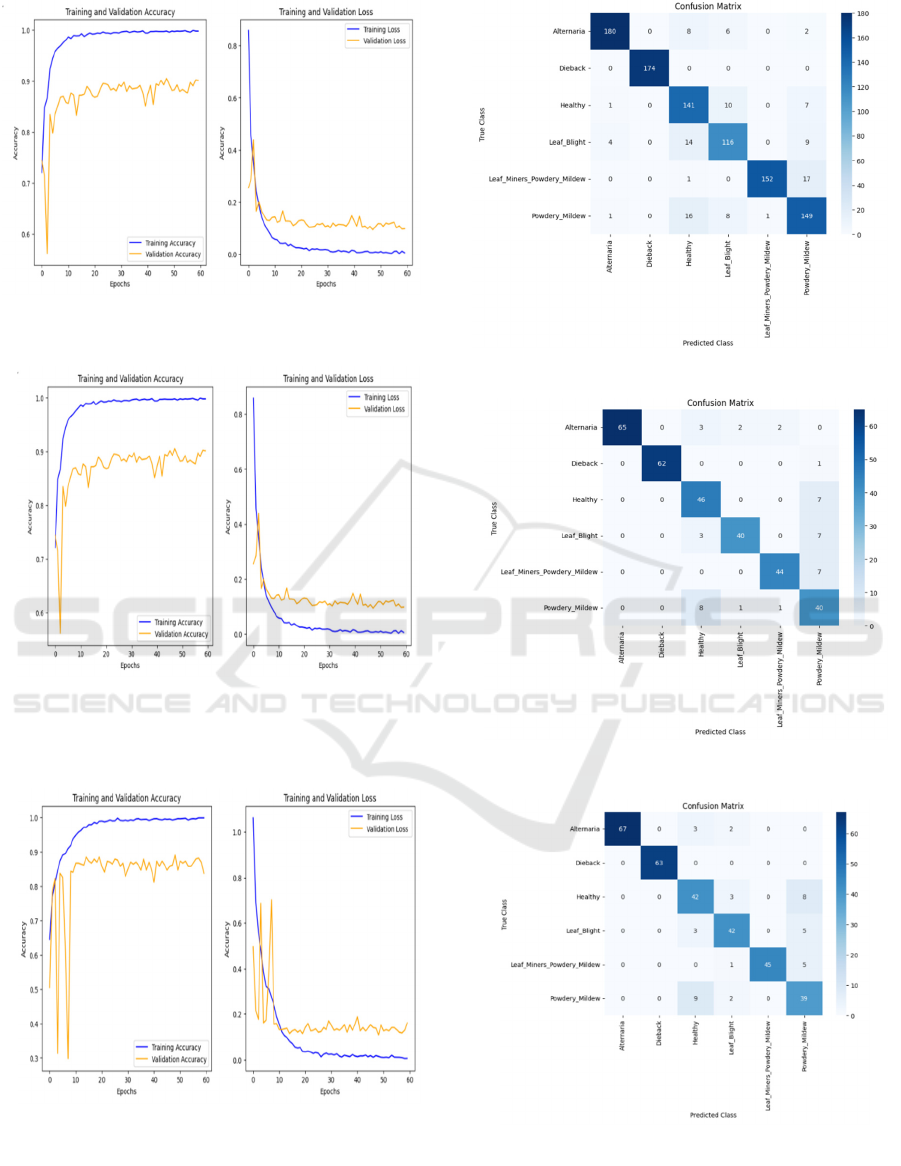

Among the evaluated models, DenseNet-

MobileNet achieved the highest performance,

attaining an accuracy of 90.56%., because of

MobileNet's fast feature extraction and DenseNet's

hierarchical connection. The DenseNet-ResNet

model followed with 88.79% accuracy, because of

residual learning, while DenseNet-AlexNet and

DenseNet-GoogleNet showed lower accuracy in Fig

12. Despite the fact that GoogleNet's inception

modules allow for multi-scale feature extraction, the

decreased accuracy shows feature redundancy in

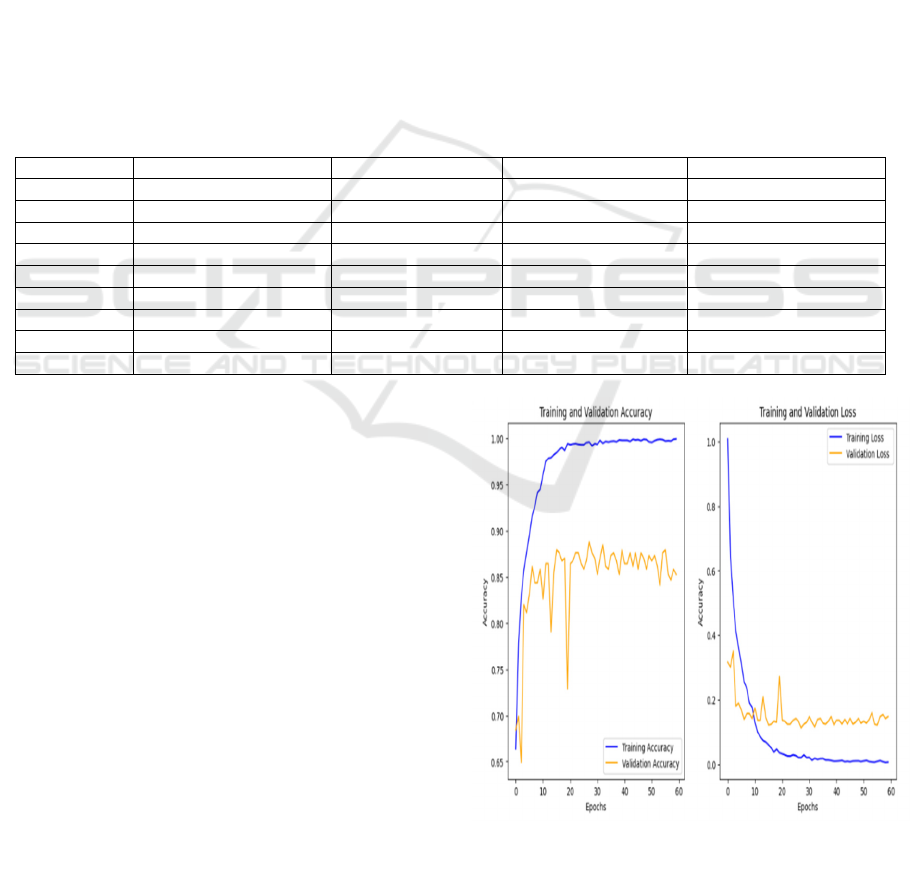

neem leaf disease diagnosis. The training and

validation accuracy and loss curves give a better

understanding of the performance of the model. The

accuracy graph indicates a steady increase with the

epochs, with DenseNet-MobileNet having the highest

stability level, while the loss graph indicates effective

convergence, which depicts decreased classification

errors. Figure 4 to 11 shows the Accuracy, Loss and

Confusion Matrix of various models.

Figure 4: Accuracy and Loss Graph for DenseNet-MobileNet.

Figure 5: Accuracy and Loss Graph for DenseNet-ResNet50.

Figure 6: Accuracy and Loss Graph for DenseNet-GoogleNet.

Figure 7: Accuracy and Loss Graph for DenseNet-AlexNet.

Figure 8: Confusion Matrix of DenseNet-MobileNet.

Figure 9: Confusion Matrix of DenseNet-ResNet50.

Figure 10: Confusion Matrix of DenseNet-GoogleNet.

Figure 11: Confusion Matrix of DenseNet-AlexNet.

Figure 12: Comparison of used Models.

6 CONCLUSIONS

This study proposed a hybrid deep learning approach for neem leaf disease detection by integrating DenseNet with MobileNet, ResNet, AlexNet, and GoogleNet. Among these models, DenseNet-MobileNet had the highest accuracy of 90.56%, making it the most effective for neem leaf disease classification. The other hybrid models, DenseNet-ResNet (88.79%), DenseNet-AlexNet (88.50%), and DenseNet-GoogleNet (88.20%), also performed well but were slightly less accurate. The analysis of model performance using criteria such as accuracy, loss, precision, recall, and AUC revealed that hybrid models outperform independent architectures. The accuracy and loss graphs showed stable training and convergence, confirming the reliability of the proposed models for automated disease prediction. In future work, tribrid models integrating three deep learning architectures will be explored to further enhance classification accuracy. In addition, a new neem disease dataset will be compiled to increase model generalization and robustness. To improve model performance, attention mechanisms, explainable AI approaches, and hyperparameter tuning will be incorporated. Furthermore, efforts will be made to create lightweight models for real-time disease identification in mobile and edge computing settings. These developments will help precision agriculture (Banerjee et al., 2024) by enabling early and efficient disease identification.

REFERENCES

Vellela, Sai Srinivas, et al. An Identification of Plant Leaf Disease Detection Using Hybrid Ann and Knn. 2024, pp. 149–58, doi:10.58532/v3baio8p5ch4.

Vamshi, J., Tanwar, S., Thatipudi, J. G., Ponsangeetha, A., Mukherjee, S., & Mandhare, J. B. (2024). Novel Plant Leaf Disease Detection Approach using Hybrid Deep Learning Strategy. 266–273. https://doi.org/10.1109/icicv62344.2024.00047

Sheneamer, Abdullah. “Early Detection of Plant Leaf Diseases Using Stacking Hybrid Learning.” PLOS ONE, vol. 19, no. 11, Nov. 2024, p. e0313607, doi:10.1371/journal.pone.0313607.

Huddar, Suma, et al. “Deep Autoencoder Based Image Enhancement Approach with Hybrid Feature Extraction for Plant Disease Detection Using Supervised Classification.” International Journal of Electrical and Computer Engineering, Aug. 2024, doi:10.11591/ijece.v14i4.pp3971-3985.

Patil, Manesh P., and Indrabhan S. Borse. “A Novel Hybrid Convolution and Multiscale Dilated EfficientNetB7-Based Plant Disease Detection and Classification with Adaptive Segmentation Procedures.” International Journal of Image and Graphics, Jan. 2025, doi:10.1142/s0219467827500203.

Mallma, J. B., Rodriguez, C., Pomachagua, Y., & Navarro, C. (2021). Leaf Disease Identification Using Model Hybrid Based on Convolutional Neuronal Networks and K-Means Algorithms. International Conference on Computational Intelligence and Communication Networks. https://doi.org/10.1109/CICN51697.2021.9574669

Kumar, S., & Singh, S. R. (2023). Plant Leaf Disease Detection: A Deep Hybrid Learning Approach. 625–630. https://doi.org/10.1109/mosicom59118.2023.10458748.

Kanabur, V., Harakannanavar, S. S., Purnikmath, V. I., Hullole, P., & Torse, D. A. (2019). Detection of Leaf Disease Using Hybrid Feature Extraction Techniques and CNN Classifier (pp. 1213–1220). Springer, Cham. https://doi.org/10.1007/978-3-030-37218-7_127.

Kawatra, M., Agarwal, S., & Kapur, R. (2020). Leaf Disease Detection using Neural Network Hybrid Models. International Conference on Computing, Communication and Automation. https://doi.org/10.1109/ICCCA49541.2020.9250885.

Dari, S. S., Tiwaskar, S., Kumar, J. R. R., Langote, V., Mohadikar, G. M., & Ashtagi, R. (2023). Enhancing Plant Leaf Disease Identification with a CNN and DenseNet Hybrid Model. 102:1-102:6. https://doi.org/10.1145/3647444.3647929.

Devi, N., et al. “Categorizing Diseases from Leaf Images Using a Hybrid Learning Model.” Symmetry, vol. 13, no. 11, Nov. 2021, p. 2073, https://doi.org/10.3390/SYM13112073.

Aboelenin, S., Elbasheer, F. A., Eltoukhy, M. M., Elhady, W. M., & Hosny, K. M. (2025). A hybrid Framework for plant leaf disease detection and classification using convolutional neural networks and vision transformer. Complex & Intelligent Systems, 11(2). https://doi.org/10.1007/s40747-024-01764-x.

Sharma, Pavan, et al. “Hybrid Models for Plant Disease Detection Using Transfer Learning Technique.” International Conference on Computing for Sustainable Global Development, Mar. 2023, pp. 712–18.

https://data.mendeley.com/dasets/sjtxmcv5d4/3#:~:text=This%20dataset%20consists%20of%202544,into%20further%20six%20disease%20classes.

Elakiya, E. and Sai Nikhil, Parasu and Sujithra@kanmani, R. and Christopher Columbus, C., Early-Stage Classification of Diabetic Retinopathy in Retinal Images using Efficient-DenseNet (November 15, 2024). Proceedings of the 3rd International Conference on Optimization Techniques in the Field of Engineering (ICOFE-2024), Available at http://dx.doi.org/10.2139/ssrn.5088869

DenseNet-121, MobileNetV2, and ResNet-50 in ImageCLEF 2024. Available at: https://ceur-ws.org/Vol-3740/paper-160.pdf

Architectural design of MobileNetV2 model. Available at: https://www.researchgate.net/figure/Architectural-design-of-MobileNetV2-model_fig2_358006784

S. Banerjee, P. Akhila, S. Kanmani R, E. Elakiya and B. Surendiran, "CropBot: A Chatbot for Agriculture Advancement," 2024 International Conference on Signal Processing, Computation, Electronics, Power and Telecommunication (IConSCEPT), Karaikal, India, 2024, pp. 16, doi: 10.1109/IConSCEPT61884.2024.10627848.

Elakiya, E., Paturu, T., Naik, K.C., Sai Tarun, V. (2024). Investigating Feature Extraction and Classification Algorithms for Effective disease Lung Disease Detection Using Chest X-Ray Images. In: Ranganathan, G., Papakostas, G.A., Shi, Y. (eds) Inventive Communication and Computational Technologies. ICICCT 2024. Lecture Notes in Networks and Systems, vol 23. Springer, Singapore. https://doi.org/10.1007/978-981-97-7710-5_33

R. Kanagaraj, E. Elakiya, A. Kumaresan, J. Selvakumar, J. Suganya and K. Shruthi, "Predictive Classification Model of Crop Yield Data Using Artificial Neural Network," 2023 5th International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 2023, pp. 747-751, doi: 10.1109/ICIRCA57980.2023.10220791.

E. Elakiya and N. Rajkumar, "Designing preprocessing framework (ERT) for text mining application," 2017 International Conference on IoT and Application (ICIOT), Nagapattinam, India, 2017, pp. 1-8, doi: 10.1109/ICIOTA.2017.8073613.
