Navigation Assistance for the Visual Handicapped Persons through

Mobile Computing

T. Venkata Naga Jayudu, M. Pallavi, S. Bindu Sai, P. Thrisha and G. Sai Meghana Reddy

Department of CSE, Srinivasa Ramanujan Institute of Technology, Rotarypuram Village, B K Samudram Mandal,

Anantapur - 515701, Andhra Pradesh, India

Keywords: Voice Command, Speech Recognition, Object Detection, Navigation Assistance, Google Maps Integration,

Accessibility, Visually Impaired Users, Hands‑Free Interaction, Mobile Computing, Inclusive Technology.

Abstract: This software provides voice-activated directions, object recognition, and communication for visually impaired users. Voice commands are interpreted more reliably when the user speaks clearly. Object detection forms the basis of the visual recognition system. To improve accessibility, users can place calls and send text messages with voice commands. The software integrates with the user's address book and supports voice-activated contact addition. Together, object recognition and voice-driven commands add a new dimension to how users engage with visual aids. The program shows how a simple, welcoming interface can serve several functions.

1 INTRODUCTION

Technology has significantly transformed the way

people interact with their surroundings, offering

innovative solutions to enhance accessibility for

individuals with disabilities. Among these

advancements, mobile computing has emerged as a

powerful tool to assist visually impaired individuals

in navigating their environment with ease. Traditional

navigation methods, such as guide dogs or walking

canes, provide assistance but come with limitations in

detecting obstacles and offering real-time directional

guidance. To address these challenges, this project

introduces a voice-driven mobile application that

integrates speech recognition, object detection, and

Google Maps navigation to facilitate hands-free

movement and communication. The proposed system

enables users to interact through voice commands,

eliminating the need for manual input. The application

listens to spoken words using Speech-to-Text (STT)

technology, extracts meaningful information, and

processes it to generate navigation routes. By

integrating with Google Maps, the system allows

users to receive step-by-step directions to their desired

location, supporting different travel modes such as

walking, public transport, and driving. Additionally,

the app extends its functionality by incorporating

voice-activated contact management and messaging,

allowing users to add new contacts and send SMS

without physical interaction. Beyond navigation, the

inclusion of object detection enhances safety and

situational awareness by identifying obstacles in the

user's path. This feature is particularly beneficial in

unfamiliar environments, where visually impaired

individuals might struggle with unexpected barriers.

The application can provide voice alerts or haptic

feedback to warn users about detected objects,

making navigation safer and more efficient. The

combination of these technologies not only improves

accessibility but also fosters independence, enabling

visually impaired individuals to move confidently in

their surroundings. With a user-friendly interface and

an emphasis on inclusivity, the proposed system aims

to bridge the gap between accessibility needs and

technological advancements. Future enhancements

may include multi-language support, offline

navigation, and integration with wearable devices to

further refine the user experience. By leveraging

artificial intelligence, speech processing, and mobile

computing, this project represents a significant step

towards creating a smarter, more inclusive world for

individuals with visual impairments.

Jayudu, T. V. N., Pallavi, M., Sai, S. B., Thrisha, P. and Reddy, G. S. M.

Navigation Assistance for the Visual Handicapped Persons through Mobile Computing.

DOI: 10.5220/0013884700004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 2, pages 457-460

ISBN: 978-989-758-777-1

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.


2 RELATED WORKS

This article describes voice-controlled home appliances built on the Android platform. The technology lets disabled and older people control appliances from the comfort of their own homes. Google recognises and analyses the voice commands spoken into the smartphone, and the article explains how Android records the commands and transmits them to an Arduino Uno. A light and a fan were switched on and off through the Arduino Uno's Bluetooth module. The system was designed to manage electrical appliances with a user-friendly interface and straightforward installation, and Bluetooth allows household appliances to be controlled from up to 20 meters away (Norhafizah bt Aripin et al., 2014).

Smartphones and other smart devices have become an indispensable part of daily life, and they can make everyday tasks easier for people who are visually impaired. This article examines an Android application that scans and identifies text and objects in real time. The app runs on the device without an external server and detects objects without requiring a snapshot. Voice feedback notifies the user of the object that has been found. For robust detection, the object is separated from its background using the TensorFlow machine learning API and many diagram cuts. The recognition problem is then treated as an instance retrieval task, and the user is told about the object via text-to-speech. The technology helps visually impaired people better comprehend their environment, and the app is compatible with budget smartphones (Md. Amanat Khan Shishir et al., 2019).

This paper introduces Voice Assistant, a personal assistant app for Android phones that supports Serbian. Its large-vocabulary continuous speech recognition system has a native UI and is built on Kaldi, an open-source speech recognition framework. A dataset of 70,000 utterances was used to train a variety of acoustic models under varying degrees of noise. Results are reported for a vocabulary of more than 14,000 words and a test database of 4,500 utterances (Branislav Popović et al., 2015).

People use portable devices extensively, and these technologies can help visually impaired people on a daily basis. The research proposes an Android smartphone app designed for these users. The application uses the microelectromechanical system (MEMS) sensors found in smartphones together with a few third-party sensor modules, and all of the parts work together to form a portable aid. Bluetooth and Wi-Fi connect the smartphone to the external modules. The app's UI is suited to visually impaired users, and the smartphone communicates with them through text-to-speech software. The modules handle indoor and outdoor navigation and manage incoming phone calls. According to the results, the assistant system is small, effective, portable, and inexpensive, and it needs only a few hours of training (Laviniu Ţepelea et al., 2017).

Smart assistants, which let people converse with and query machines, are widely useful today. Because they run on mobile phones, desktop computers, and similar devices, the technology is available to nearly everyone. A smart assistant recognises speech, understands text and voice input, and then reads search results aloud; examples include Google Assistant, Apple's Siri, and Amazon's Alexa. These assistants have trouble interacting and cannot identify sounds. Users may also find Google Assistant, which is available on Android smartphones, difficult to use because of its language restrictions and because it must be online, relying on Wi-Fi or another internet connection. The system proposed in that work does not need the Internet. It uses a Raspberry Pi for data loading and storage, so the voice assistant can give users access to data in several languages, including current apps, daily news, geolocation, and Wikipedia. Users of automation technology can also benefit from the voice assistance (Rajakumar P et al., 2022).

3 METHODOLOGY

3.1 Proposed System

The proposed system is a smartphone application for visually impaired users that identifies objects, supports communication, and accepts voice commands. It uses Speech-to-Text (STT) technology to process spoken commands. Once a destination is recognised, the system uses Google Maps to provide real-time, step-by-step directions for a variety of travel modes. The application is made more user-friendly by letting users add contacts and send SMS messages with voice commands. To further enhance safety, the system adds object detection to navigation and communication. The program alerts users to obstacles by voice or vibration, making their surroundings safer for walking. This feature gives visually impaired users the confidence they need to move


around in unfamiliar environments. Because it integrates speech recognition, object detection, and communication into a single platform, the application is especially accessible and inclusive for people with visual impairments. Potential enhancements include integration with wearable devices, offline navigation, and support for multiple languages.
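The route request described above can be illustrated with a minimal Python sketch. It assumes that the recognised destination is handed to Google Maps through the public Google Maps URLs scheme (`api=1`, `destination`, `travelmode`, `dir_action`); the paper does not state which integration mechanism the application uses, and the function name `build_directions_url` is hypothetical. The "public transport" mode mentioned in the text corresponds to `transit` in that scheme.

```python
from urllib.parse import urlencode

# Travel modes accepted by the Google Maps URLs directions action.
TRAVEL_MODES = {"walking", "transit", "driving", "bicycling"}


def build_directions_url(destination: str, mode: str = "walking") -> str:
    """Build a directions URL for a spoken destination (hypothetical helper).

    The origin is omitted on purpose so that Google Maps falls back to the
    device's current location when it is available.
    """
    if mode not in TRAVEL_MODES:
        raise ValueError(f"unsupported travel mode: {mode}")
    params = {
        "api": "1",
        "destination": destination.strip(),
        "travelmode": mode,
        "dir_action": "navigate",  # ask Maps to start turn-by-turn guidance
    }
    return "https://www.google.com/maps/dir/?" + urlencode(params)


if __name__ == "__main__":
    print(build_directions_url("Anantapur bus stand", "walking"))
```

On Android, a URL of this form would typically be opened with a view intent so that the Maps application takes over guidance; that step is outside the scope of this sketch.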

3.2 System Architecture

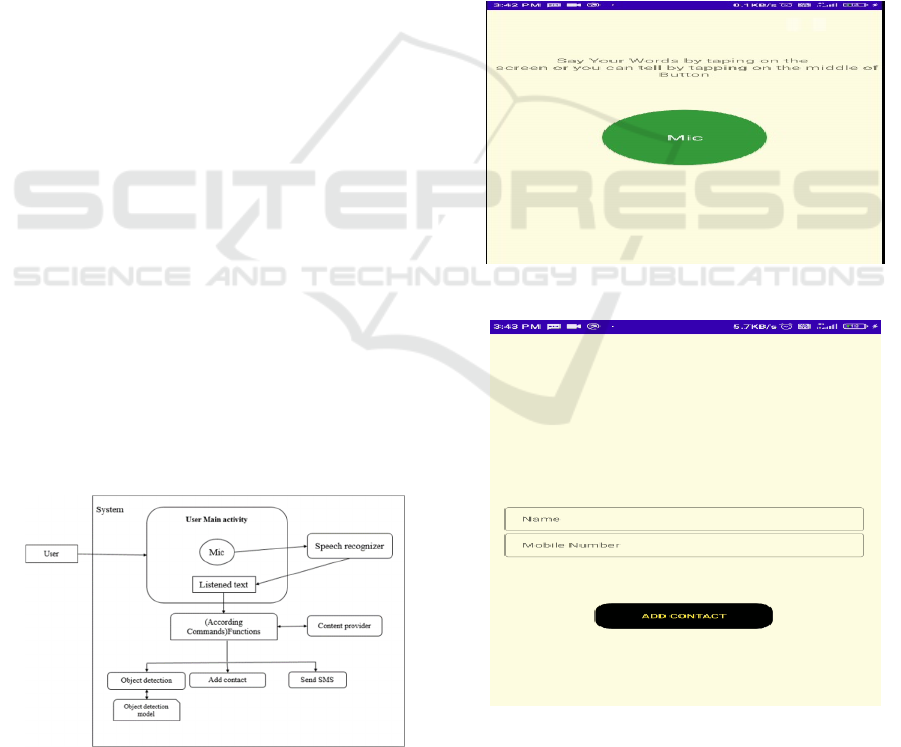

The system architecture, shown in Figure 1, is

designed to facilitate seamless voice-based

interaction for visually impaired users. The user

initiates communication through voice input, which is

captured by the microphone and processed by the

speech recognizer. The recognized speech is

converted into text, which is then analyzed to

determine the appropriate function. Based on the

extracted command, the system can perform various

tasks, including navigation assistance, contact

management, and message sending. The architecture

also integrates a content provider module, which

helps in retrieving and managing relevant data,

ensuring smooth execution of user commands. One of

the key components of the architecture is object

detection, which enhances user safety by identifying

obstacles in the environment. The system utilizes an

object detection model to recognize surrounding

objects and provide real-time feedback to the user.

Additionally, the application allows users to add new

contacts and send messages through voice

commands, reducing the need for manual input. By

combining speech recognition, object detection, and

communication functionalities into a unified system,

the architecture ensures an intuitive and accessible

experience for visually impaired users, making

navigation and communication more efficient and

user-friendly.

Figure 1: Architecture.
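The step in which recognised text is "analyzed to determine the appropriate function" can be sketched as a small rule-based dispatcher. This is only an illustration under stated assumptions: the phrase patterns, the action names, and the `parse_command` function are hypothetical, since the paper does not specify the command grammar or the matching technique actually used.

```python
import re
from typing import NamedTuple


class Command(NamedTuple):
    action: str  # which module should handle the request
    args: dict   # values extracted from the transcript


# Hypothetical phrase patterns, checked in order; not taken from the paper.
PATTERNS = [
    ("navigate", re.compile(r"^(?:navigate|take me|go) to (?P<destination>.+)$")),
    ("add_contact", re.compile(
        r"^add contact (?P<name>.+?) (?:number )?(?P<number>[\d ]+)$")),
    ("send_sms", re.compile(
        r"^(?:send )?(?:message|sms) to (?P<name>.+?) saying (?P<body>.+)$")),
    ("call", re.compile(r"^call (?P<name>.+)$")),
    ("detect_objects", re.compile(r"^(?:detect objects|what is in front of me)$")),
]


def parse_command(transcript: str) -> Command:
    """Map a speech-to-text transcript to an action and its arguments."""
    # Normalise case, whitespace, and trailing punctuation added by the recogniser.
    text = " ".join(transcript.lower().split()).rstrip(".?!")
    for action, pattern in PATTERNS:
        match = pattern.match(text)
        if match:
            return Command(action, match.groupdict())
    return Command("unknown", {"text": text})


if __name__ == "__main__":
    for phrase in ("Navigate to Anantapur railway station",
                   "Add contact Ravi number 98765 43210",
                   "Send message to Ravi saying I am on my way"):
        print(parse_command(phrase))
```

An unmatched transcript is returned as an `unknown` command rather than raising an error, so the application can ask the user to repeat the request.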

3.3 Modules

Users can send an SMS, make a call by speaking a name or number, run object detection, open Google Maps, and add a contact, all by voice. These hands-free features streamline device management and communication.

4 RESULTS AND ANALYSIS

The developed system successfully integrates voice-based commands, object detection, and communication features to enhance accessibility for visually impaired users. Through speech recognition, users can provide voice inputs to navigate, add contacts, and send messages seamlessly.

Figure 2: Application Interface.

Figure 3: Add Contact Page.

Figure 2 shows the application interface, and Figure 3 shows the Add Contact page.


The system converts spoken words into text and processes commands with high accuracy, ensuring smooth user interaction. Google Maps integration enables real-time navigation, allowing users to reach their destination hands-free. Additionally, the speech feedback mechanism confirms user commands before execution, reducing errors and improving usability. The object detection feature enhances safety by identifying obstacles in the user's path and providing timely alerts to assist in navigation. The hands-free communication system allows users to manage contacts and send messages effortlessly, making the application useful in daily activities. The proposed system improves on existing solutions by offering a unified and intuitive interface that caters specifically to the needs of visually impaired individuals. Overall, the results demonstrate the effectiveness of the application in improving independence and mobility for visually impaired users, showcasing its potential for real-world implementation.
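The way detected obstacles might be turned into timely spoken alerts can be sketched as follows. The sketch assumes that the detector returns a label, a confidence score, and a normalised horizontal box centre for each object; the `Detection` and `ObstacleAnnouncer` names, the 0.6 confidence threshold, and the 3-second cooldown are illustrative assumptions, not values reported in the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str         # class name reported by the detector
    confidence: float  # detector score in [0, 1]
    x_center: float    # horizontal box centre: 0.0 = left edge, 1.0 = right edge


def describe_position(x_center: float) -> str:
    """Split the camera frame into left, centre, and right thirds."""
    if x_center < 1 / 3:
        return "on your left"
    if x_center > 2 / 3:
        return "on your right"
    return "ahead"


class ObstacleAnnouncer:
    """Turn raw detections into short alert phrases without repeating them."""

    def __init__(self, min_confidence: float = 0.6, cooldown_s: float = 3.0):
        self.min_confidence = min_confidence
        self.cooldown_s = cooldown_s
        self._last_spoken = {}  # alert phrase -> time it was last announced

    def alerts(self, detections, now: float) -> list:
        messages = []
        # Announce the most confident detections first.
        for det in sorted(detections, key=lambda d: d.confidence, reverse=True):
            if det.confidence < self.min_confidence:
                continue  # too uncertain to interrupt the user
            message = f"{det.label} {describe_position(det.x_center)}"
            last = self._last_spoken.get(message)
            if last is not None and now - last < self.cooldown_s:
                continue  # same alert was spoken recently
            self._last_spoken[message] = now
            messages.append(message)
        return messages


if __name__ == "__main__":
    announcer = ObstacleAnnouncer()
    frame = [Detection("chair", 0.9, 0.5), Detection("car", 0.7, 0.9)]
    print(announcer.alerts(frame, now=0.0))
```

Each returned phrase would then be passed to the text-to-speech or vibration channel. The cooldown keeps the same obstacle from being announced on every camera frame, which would otherwise drown out navigation instructions.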

5 FUTURE WORK

The proposed system can be upgraded in several ways to enhance accessibility and user experience. Support for multiple languages would allow individuals from varied backgrounds to use it easily and would attract a wider range of users. Additional AI and ML techniques could improve object detection and speech recognition, making the system more adaptable and efficient. Offline functionality could be developed to assist visually impaired people who do not have internet access. The visual aid feature could be improved by combining it with newer technology such as augmented reality. Regular updates could add features such as improved voice commands and broader compatibility, further establishing the app as a versatile answer to a wide range of user needs.

6 CONCLUSIONS

Users with visual impairments can benefit from a unified application that supports spoken commands, object detection, and communication. Speech recognition provides a seamless user experience by enabling hands-free navigation, contact management, and messaging. Real-time obstacle alerts from object detection make movement safer and easier. The system's user-friendly and efficient interface for people with visual impairments makes it a significant improvement over prior solutions. According to the results, the software enhances user independence, communication, and navigation. To make the system more accessible and convenient in the real world, it could be extended with support for multiple languages and the ability to work offline.

REFERENCES

A. Stolcke, J. Zheng, W. Wang, and V. Abrash, “SRILM at Sixteen: Update and Outlook,” in Proc. IEEE Workshop on Automatic Speech Recognition and Understanding ASRU, Waikoloa, 2011.

B. Popović, E. Pakoci, S. Ostrogonac, D. Pekar, “Large Vocabulary Continuous Speech Recognition for Serbian Using the Kaldi Toolkit,” in Proc. 10th Digital Speech and Image Processing, DOGS, Novi Sad, 2014.

Branislav Popović; Edvin Pakoci; Nikša Jakovljević; Goran

Kočiš; Darko Pekar; Voice assistant application for the

Serbian language; 24-26 November 2015.

D. Povey and P. C. Woodland, “Minimum Phone Error and I-Smoothing for Improved Discriminative Training,” in Proc. 27th Int. Conf. on Acoustics, Speech and Signal Processing ICASSP, Orlando, 2002, pp. I-105-I-108.

D. Povey, D. Kanevsky, B. Kingsbury, B. Ramabhadran, G. Saon, and K. Visweswariah, “Boosted MMI for Model and Feature-Space Discriminative Training,” in Proc. 33rd Int. Conf. on Acoustics, Speech and Signal Processing ICASSP, Las Vegas, 2008, pp. 4057-4060.

Laviniu Ţepelea; Ioan Gavriluţ; Alexandru Gacsádi; Smartphone application to assist visually impaired people; 01-02 June 2017.

Md. Amanat Khan Shishir; Shahariar Rashid Fahim; Fairuz

Maesha Habib; Tanjila Farah; Eye Assistant: Using

mobile application to help the visually impaired; 03-05

May 2019.

Norhafizah bt Aripin; M. B. Othman; Voice control of

home appliances using Android; 27-28 August 2014.

pp. 31-34.

R. Kneser and H. Ney, “Improved Backing-Off for M-Gram Language Modeling,” in Proc. 20th Int. Conf. on Acoustics, Speech and Signal Processing ICASSP, Detroit, 1995, pp. 181-184.

Rajakumar P; K. Suresh; Boobalan M; M. Gokul; G.Darun

Kumar; Archana R; IoT Based Voice Assistant using

Raspberry Pi and Natural Language Processing; 08-09

December 2022.

S. Suzić, B. Popović, V. Delić, and D. Pekar, “Serbian

Mobile Speech Database Collection and Evaluation”, in

Proc. Int. Conf. on Electronics, Telecommunications,

Automation and Informatics ETAI, ETAI 1-1, Ohrid,

2015.
