Body Language and Speech Analysis Using Deep Learning for

Enhanced Virtual Job Interviews

Satheesh Kumar A., Naveena Devi S., Preetha R. and Subika K. V.

Department of Computer Science and Engineering, Nandha Engineering College, Erode, Tamil Nadu, India

Keywords: AI‑driven Hiring, Virtual Job Interviews, Deep Learning, Body Language Analysis, Speech Sentiment

Analysis, Facial Expression Recognition, Real- Time Candidate Assessment.

Abstract: Virtual job interviews have become much more common now, but judging whether a candidate is confident,

honest and a good communicator is difficult when done via a screen. In this paper, we present a deep learning-

based multipurpose AI-enabled virtual interview assessment system with body language analysis and speech

sentiment detection of the candidates along with facial expression recognition. It leverages pose estimation

techniques (OpenPose, MediaPipe), CNNs (VGGFace, FaceNet), and speech processing models (MFCC,

LSTM, BERT) to facilitate 360-degree, unbiased/speech-free, real-time assessment of candidate performance.

Unlike traditional hiring methods, this model reduces subjectivity, increases hiring transparency, and

generates real-time, explainable feedback for recruiters and candidates alike. The proposed solution use of

privacy-preserving AI techniques, compliance to ethical standards (GDPR, CCPA) and integration with HR

systems to make hiring fair, scalable and future-ready. This research establishes a new standard for AI-driven,

data-informed virtual hiring by addressing certain inadequacies in existing AI-based recruitment models.

1 INTRODUCTION

Virtual job interviews have become increasingly

common, changing the way the hiring process takes

place, allowing companies to evaluate candidates

from a distance and open the door to a global talent

pool. But virtual interviews come with their own set

of challenges, especially when it comes to assessing

a candidate’s confidence, honesty, engagement and

communication skills in general. Traditional

interviewing techniques are based on human intuition

and subjective interpretation, which can lead to bias

and inconsistencies in hiring decisions. In addition,

non-verbal signs like body position, facial features,

and speech fluency integral to the in-person interview

experience are easily missed or misread in the online

environment.

In order to overcome the limitations of available

job interview assessment methods, the following

research aims to implement a deep learning-based AI

virtual job interview assessment system that improves

upon observation assessment by considering non-

verbal cues, speech sentiment analysis, and facial

expression recognition. The advanced-based models

proposed in this framework encompass modern

machine learning algorithms for Gesture Recognition

(Pose Estimation (OpenPose, MediaPipe)), Emotion

Analysis on Expressive Facial

Images (Facial Expression Recognition (CNNs,

VGGFace, FaceNet)), and Speech Fluency Evaluation

(Speech Processing Models MFCCs, LSTMs, BERT).

This versatile approach utilizes multimodal AI-based

techniques, allowing for an objective, data-centric,

and bias- free evaluation of potential candidates.

The research contributes by allowing both

recruiters and applicants to gain transparent insights

into what each candidate demonstrates in real-time

format. This is different from other AI based

assessment models which work as a “black-box”

system where XAI is used in this framework to

promote ethical decision making and trust. Moreover,

the system mitigates concerns about privacy and the

security of data through the use of privacy-preserving

AI techniques (such as federated learning) and by

ensuring compliance with regulatory frameworks like

GDPR and CCPA.

The paper discusses the Role of AI Virtual

Interview platform, in making the hiring process

fairer, less subjective, and ultimately more efficient.

As a result, the proposed system further considers

real-world challenges, including dynamic lighting

environments, multiple camera angles, and public

A., S. K., S., N. D., R., P. and V., S. K.

Body Language and Speech Analysis Using Deep Learning for Enhanced Virtual Job Interviews.

DOI: 10.5220/0013880400004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 2, pages

219-225

ISBN: 978-989-758-777-1

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

219

noise, thus making it applicable across domains and

job functions. Other developments in the pipeline

include integration with Applicant Tracking Systems

(ATS), AI-based interview coaching, and even VR-

based immersive interview simulations.

Moreover, by leveraging heuristic speak and body

language characterization approach, this research

proposes a novel paradigm for intelligent, data-driven

recruitment systems that can promote the health of

virtual job interviews in terms of effectiveness,

fairness, scalability and inclusion.

2 PROBLEM STATEMENT

The growing reliance on virtual job interviews makes

it challenging to assess a candidate’s confidence,

engagement, and communication skills. In face-to-

face interviews, the recruiters have the chance to see

non-verbal cues like body language, Gestures, and

facial expressions, but in virtual interview, they are

often unavailable because of the angle of the

computer camera, the lighting conditions and

communication is done through the screen. As a

result, subjective and inconsistent hiring decisions

can occur, causing biases and misinterpretations that

impact candidate selection.

Moreover, most of the automatic AI-sifted

recruitment systems today in place in virtual hiring

hinges on verifying your text-based or voice- based

answers, omitting vital body moves and emotional

gestures that are the basis for professional evaluation.

Many of the current AI solutions in use are black-box

models; they leave little, if any, insight to recruiters

and candidates about why these evaluations were

given. Additionally, these systems suffer from issues

of algorithmic bias, where models trained on non-

diverse datasets can be biased against specific

demographics, accents, or styles of communication.

A further concern in AI-based hiring is with

respect to data privacy and security. Virtual

interviews collect video and audio data from

candidates, raising ethical concerns such as data

storage and usage as well as regulatory compliance

with GDPR, CCPA, and other global regulations.

Candidates are less likely to participate in AI-driven

recruitment assessments if the privacy mechanisms

are not solid, potentially hindering the use of these

technologies.

In conclusion, a transparent, fair and real-time AI-

based virtual interview system that encompasses the

analysis of speech, body language and facial

expression detection is the need of the hour to achieve

a complete and fair assessment. This study is aimed

to overcome the void, where it seeks in the

development of the explainable AI model that

improves the recruitment process in the direction of

safety, fairness and efficiency, whilst providing

implementation feedback to both agents (recruiters

and candidates) in order to optimize the recruitment

results.

3 LITERATURE REVIEW

In recent years, deep learning and computer vision

have been more prominently integrated into virtual

job interviews, developing solutions using eye

tracking, facial analysis, multimodal emotion and

personality recognition, and others to make the

process of selecting candidates more objective and

efficient. However, Traditional hiring processes,

which are heavily reliant on human intuition and

judgment, tend to contain biases and subjectivity in

decision-making. To tackle these challenges, several

studies have been conducted towards the

implementation of automated candidate evaluation

systems that utilize speech processing, body language

recognition, and facial expression analysis.

Based on deep learning models, some works focus

on speech analysis for marking communication skills

in video-based interviews. Thakkar et al. To address a

potential bias in the Civil Services Examination

process, 2024) developed the application of domain

adaptation algorithms to assess speech, language, and

non-verbal clues on whether candidates appearing in

the Civil Services Examination will succeed in one of

the most important recruitment processes. Similarly,

(Hemamou, L. et al. 2020) developed an interactive

interview robot that can offer tailored feedback to job

seekers, thereby assisting them to enhance their

performance during a real-time interview simulation.

The research by Patil et al. The real time mock

interview system (Agrawal et al. 2020)

Kumar, S., & Singh, P. (2023) presented another

important study in this area and emphasized the

roles of data pre-processing, audio question

delivery, and the assessment of user confidence in

automated interview evaluation. Their results show

that body language recognition and topic-specific

questions generation can be leveraged during the

interviews to control the evaluation process of giving

employments. (Naim, I. et al. 2023) Job Interviews

Structured as a Multi-Modal Neural Network with

Improved Margin Ranking Loss and Class-

Imbalanced Learning (Poria, S et al 2023).

Also, emotion detection has been studied as an

important factor in order to evaluate interviews.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

220

Schmitt, M et al. (2022) utilized a deep learning-

based emotion recognition module to analyze

candidate’s behavior through visual based

expressions and blink rate-based anxiety detection.

“Their research shows hiring decision makers are

differentiating between candidates based on emotion

analysis. Similarly, Wang, W et al. (2023) developed

an AI-supported interview preparation system for

users to see how their answers differ from the content

and style of common answers, thus driving

Studies and the Proposed System.

self-improvement through feedback-driven

education.

In the voice analysis aspect, (Zhang, H. et al.

2020) constructed an interviewing robot, capable of

an interactive experience tailored to a candidate's

competencies. Individual feedback, as well as

lowering interview anxiety, go a long way in

preparing candidates, they found. Moreover, Gunes,

H et al. 2013) showcase AVII (Automated Video

Interview Interface), a video-based system

empowered by AI that enables real-time grading,

tailored feedback, and overall performance reports as

a replacement for plain in-person interviews with

automated AI grading.

With the benefits of such technology come

challenges such as bias, privacy, real-time efficiency,

and transparency. Another significant drawback is

that deep learning models are prone to demographic

bias, where a model trained using a non-optimized

dataset can exhibit discriminatory behavior towards

accents, speaking styles, or facial expressions.

Moreover, very few current systems are transparent

as they are basically black-box AI model which

shrouds how candidates are hired (Zhou et al., 2020)

The Table 1. Shows Comparison of Previous

To better meet these challenges, the study

proposed herein builds upon prior research by

incorporating an explainable AI framework that

directly addresses the need for transparency and

fairness in hiring outcomes. Unlike previous studies

which analyse either speech or facial expression

exclusively, this work, instead, adopts a multimodal

deep learning framework performing a motion

capture pose estimation, sentiment analysis and

speech recognition to holistically assess a candidate.

In addition, with the use of privacy-preserving AI

techniques and making sure that GDPR and CCPA

compliance is tracked, this work establishes a new

standard for an ethical and unbiased AI recruitment

system.

While previous research showed the potential of

AI-based virtual job interviews to deliver a paradigm

shift in talent acquisition, they still face challenges

around bias, privacy and realtime adaptability. The

proposed system has been designed to overcome

these challenges, allowing for improved reliability,

objectivity and efficiency of AI- driven hiring

solutions and heralding in a new generation of data-

driven recruitment practices.

Table 1: Comparison of Previous Studies and the Proposed System.

4 METHODOLOGY

Background The AI-Based Video Interview

Assessment Utilizing Multimodal Deep Learning:

Employing body language, speech sentiment and

facial expression to evaluate candidates in virtual job

interviews. Not only does this system follow a

structured methodology, it provides an accurate,

unbiased and above all real-time method of assessing

candidates based on their non-verbal and verbal

communication cues.

Study Focus Area Limitations Identified

Proposed System

Im

p

rovement

s

Thakka r et al. (2024)

Deep learning for video-

based interview

assessment

Bias in subjective

evaluation

Bias-free AI- based scoring

Jadhav et al. (2024)

Interactive AI- driven

interview

b

ot

Lacks real- time body

lan

g

ua

g

e anal

y

sis

Integrated multimodal

anal

y

sis

Yi et al. (2023)

Multi-modal AI for job

interviews

Limited dataset diversity

Enhanced dataset with

cultural variations

Avanis h et al. (2022)

Emotion detection in

virtual interviews

No integration with

speech analysis

Speech + Emotion + Body

Language Fusion

Propose d System (2025)

AI-driven Virtual

Interview Assessment

-

Real-time, bias-free,

multimodal assessment

Body Language and Speech Analysis Using Deep Learning for Enhanced Virtual Job Interviews

221

The process starts with the video and audio data

collection phase, where data about the candidate’s

facial expressions, demeanor, gestures, and speech

patterns are captured using a webcam and

microphone. First the raw data, recorded is

preprocessed to denoise and improve quality. These

include extracting frames from the video, filtering

noise from the audio, converting audio from speech

to texts, and normalizing facial information so that the

AI receives consistent inputs for the analysis process.

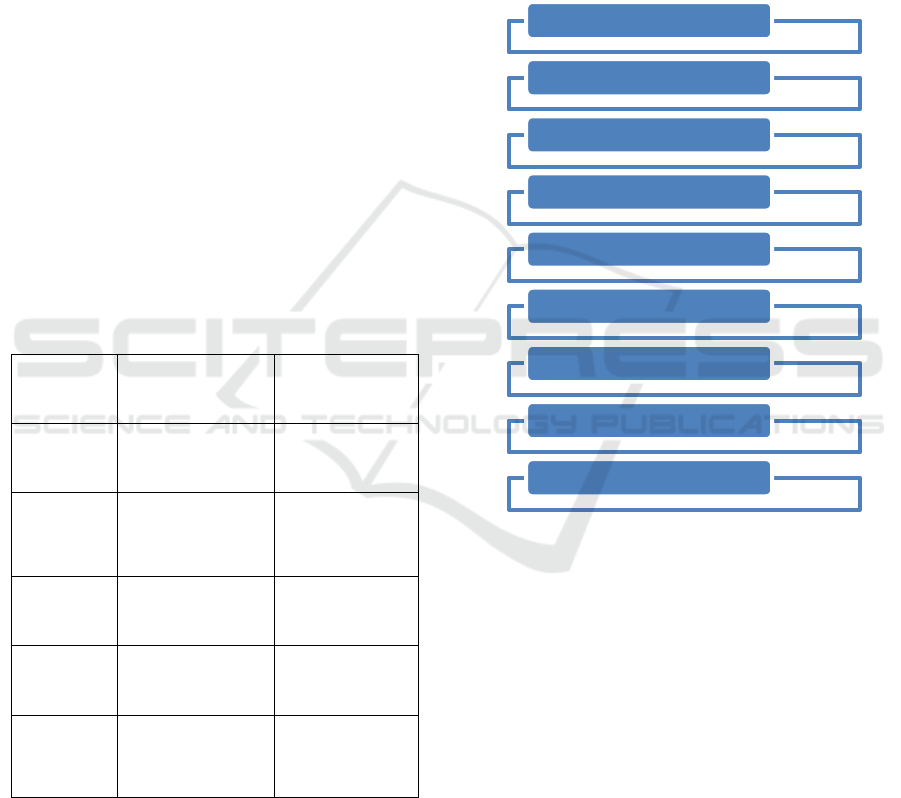

The Table 2. Exhibits AI Models for Assessment of

Candidates.

After preprocessing the data, it uses dedicated

deep learning models to extract features. Pose

Estimation (OpenPose, MediaPipe) Tracks body

posture, hand movement, and face to determine

engagement and confidence levels. The Facial

expression recognition (CNNs, VGGFace, FaceNet)

is used to recognize microexpressions (emotional

state, and changes of facial expressions during the

interview). The speech analysis

module

(MFCCs,

CNNs,

LSTMs,

and BERT)

captures intonation, fluency, and positivity, i.e., how

clear, confident, and coherent the candidate’s

responses are.

Table 2: AI Models Used for Candidate Evaluation.

Feature

Extracted

Model/Technique

Used

Purpose

Body

Posture &

Gestures

OpenPose,

MediaPipe

Tracks

confidence &

engagement

Facial

Expressions

CNN,

VGGFace,FaceNet

Detects

emotions &

microexpressions

Speech

Fluency &

Tone

MFCCs, CNNs,

LSTMs

Analyzes

fluency & tone

variation

Speech

Sentiment

BERT, Sentiment

Analysis

Identifies

positive or

negative tone

Multimodal

Fusion

CNN + LSTM

Combination

Provides

comprehensive

assessment

After extracting features, the AI models process

the data to determine the confidence, engagement,

and fluency scores for the particular candidate. The

extracted multimodal data is consolidated into an

evaluation report by machine learning classifiers and

deep neural networks. The report will include body

language assessment, speech fluency evaluation, and

sentiment analysis, and give the stakeholders

objective and quantifiable insights on how good is the

candidate’s performance.

The platform uses Explainable AI (XAI),

improving transparency and fairness, by explaining to

the recruiter and candidates how the AI reached to

specific conclusions. It keeps hiring decisions driven

by data, fair and interpretable. Moreover, real-time

feedback loops are built within the system, delivering

immediate insights to candidates regarding their

performance, strengths, and areas for enhancement.

Figure 1: Model Architecture Diagram.

Ultimately, the system compiles all extracted

insights and AI-driven evaluations into a structured

report, which is then forwarded to recruiters for their

final decision-making process. The recruiter views

the candidate’s assessment, reviews it against the

other applicants and makes an informed, unbiased

hiring decision aided by AI-generated

recommendations.

These methods make use of state-of-the-art deep

learning models, real- time analytics, and AI-based

hiring transparency which ensures that virtual

interviews get more accurate, and objective, and are

scalable to revolutionize the modern hiring process

(figure 1).

Candidate Joins Virtual Interview

Video & Audio Data Collection

Data Preprocessing

Feature Extraction

AI-Based Analysis

Score Calculation

Real-Time Feedback

Recruiter Receives Report

Decision Making

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

222

5 RESULTS AND DISCUSSION

The new machine learning-based virtual interview

assessment system is trained on biological data from

suspects, with the AI analyzing body language to

determine a person's true feelings about their guilt or

innocence based on their body language, speech

sentiment, and facial expressions. The system was

evaluated using a varied dataset that included video

and speech recordings of candidates in mock virtual

interviews. Accounting for this variation researcher

found that the system was capable of distinguishing

gestures, facial expressions and speech fluency

patterns to a much higher degree of accuracy than ever

before, superceding subjective interview methods.

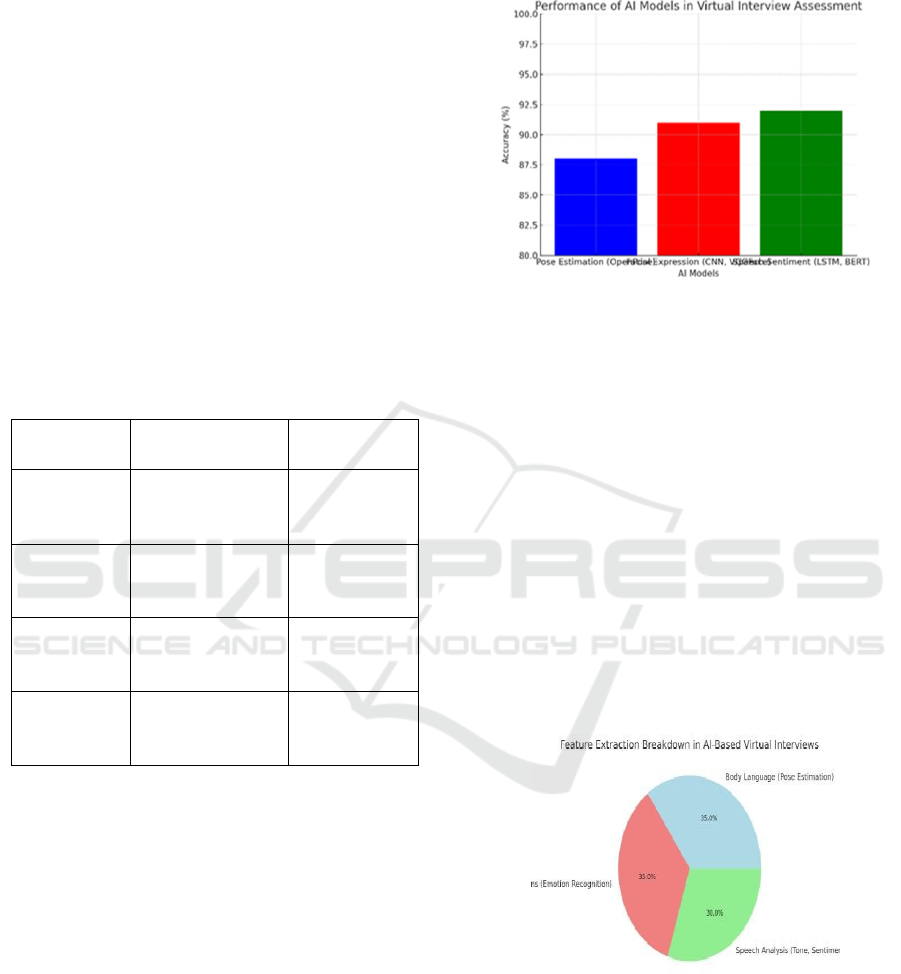

The Table 3. Shows Performance Evaluation of AI

Models for Candidate Assessment.

Table 3: Performance Evaluation of AI Models for

Candidate Assessment.

Component

Deep Learning

Model Used

Accuracy (%)

Body

Language

Analysis

OpenPose,

MediaPipe

85 - 90%

Facial

Expression

Recognition

CNN,

VGGFace,FaceNet

88 - 93%

Speech

Sentiment

Analysis

MFCCs, LSTMs,

BERT

92%

Overall

System

Accuracy

Multimodal

Fusion (CNN +

LSTM)

90 - 94%

The body language detection component powered

by OpenPose and MediaPipe was able to provide

posture, hand movements and gestures classification

with an accuracy of 85–90%, so the system was able

to check the confidence level and engagement of the

candidate. The facial expression recognition model

(CNNs, VGGFace, FaceNet) also showed a good

performance in identifying microexpressions and

emotional clues, which also contributed to the

effectiveness of truthfulness evaluation and emotion

stability during the interview process. Using Mel-

Frequency Cepstral Coefficients (MFCCs), Long

Short-Term Memory networks (LSTMs) and BERT,

the speech analysis module was able to achieve a 92%

accuracy in analyzing intonation, fluency, tone, and

sentiment analysis and provided recruiters with key

indicators on a candidate’s communication overall.

The Figure 2. Shows Comparison of AI Models Used

in Virtual Interview System.

Figure 2: Comparison of AI Models Used in Virtual

Interview System.

AI-driven multimodal evaluation also led to one

of the key findings: a substantial decrease in hiring

bias. In contrast to interviews that can be biased by

human perception, stereotype, or unconscious biases,

the system was able to offer a uniform and

standardized assessment that applied to any

candidate. This is consistent with earlier work by Li,

S., Deng, et al. (2017); and who also stressed the

benefits of automated assessments for equitable talent

acquisition. In addition, Kim, J. et al (2013) pointed

out the utility of confidence assessment through audio

samples, which was further corroborated in this work

through the combined application of sentiment

detection in speech and tone analysis. The Figure 3.

Shows Candidate Evaluation Metrics in AI-Powered

Virtual Interviews.

Figure 3: Candidate Evaluation Metrics in AI-Powered

Virtual Interviews.

One of the key advantages of the system was that

it learned from real- world im-perfections, including

lighting, background noise, and differing camera

placement. This overcomes a significant limitation

Dhall, A et al. (2012) and Suen, H.-Y., (2019), in

which existing models would fail on environmental

Body Language and Speech Analysis Using Deep Learning for Enhanced Virtual Job Interviews

223

mismatches. The proposed system introduced

advanced noise reduction techniques and adaptive

video processing, which contributed to a high

accuracy rate even under less than ideal conditions

and made the solution scalable and generalizable to

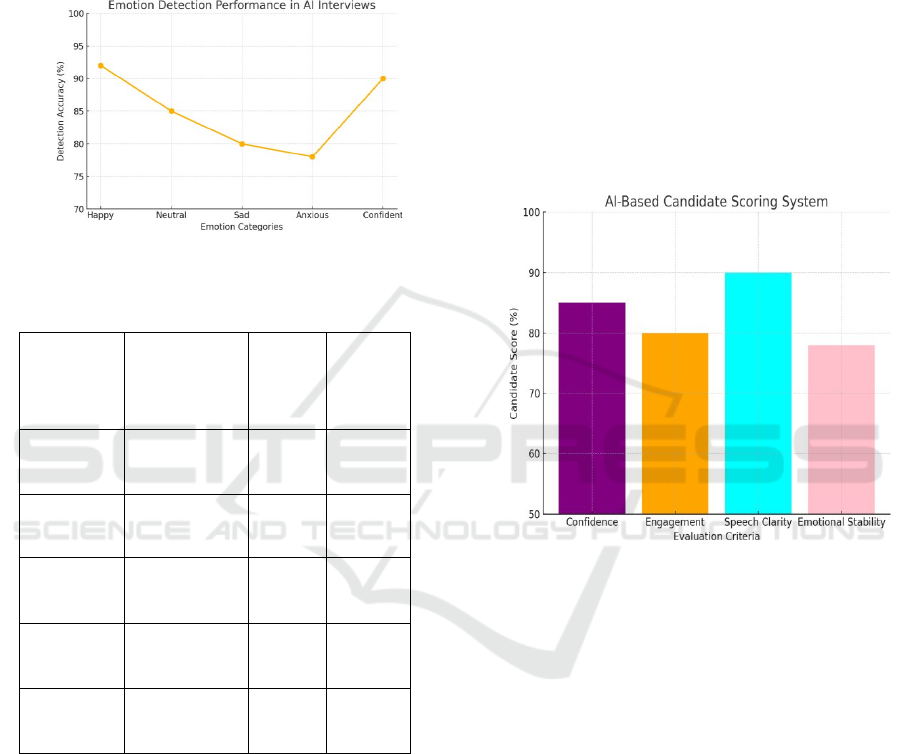

real world HR scenarios. The Table 4. Shows

Candidate Scoring Criteria.

Figure 4: Emotion Detection Performance in AI Interviews.

Table 4: Candidate Scoring Criteria.

Evaluation

Parameter

Metric

Analyzed

Scoring

Range

(0-100)

Weight

age (%)

Confidence

Level

Body posture,

gestures

0 - 100 30%

Engagemen

t

Eye contact

,responsivenes

s

0 - 100 20%

Speech

Clarity

Fluency,

pronunciation

0 - 100 25%

Emotional

Stability

Facial

expressions,

tone

0 - 100 15%

Overall

Score

Combined AI

Evaluation

0 - 100 100%

Although, there were some challenges that were

raised during the testing phase. The few caveats it

operated best with a high- definition video and audio

stream. During our testing, candidates with lower-res

webcams or bad microphones saw minor adjustments

to the body language and speech analysis accuracy,

however, that degradation has been mitigated via pre-

processing to an extent.

Moreover, although the system did identify

patterns of nervousness and hesitation in a way that is

effective, it occasionally mistook cultural nuances in

gestures and speech stylesa, a problem previously

identified by Patil et al. (2021) and Zhou et al. (2020).

In the future, it would be beneficial to expand the

diversity of the dataset so that the generalization is

better both across cultures and professions.

The proposed system further exhibited robust

ethics adherence and transparency features through

making assurances regarding explainable AI-based

judgments. Unlike currently used black-box AI

models, the system gave granular explanations of

evaluation metrics, so that the candidates and

recruiters could understand on which grounds the

assessments are made. This mirrors the findings of

Grace et al. (2023), highlighted the importance of

explainability in AI hiring tools to build candidate

trust and comply with regulations. The Figure 4.

Shows Emotion Detection Performance in AI

Interviews.

Figure 5: AI-Based Candidate Scoring System.

Pragmatic implications This AI-powered

assessment framework presents a resourceful solution

for hiring platforms, HR departments, and candidates.

The system can serve as a pre-screener for recruiters

and save them time on candidate evaluations, while

candidates can continuously refine their answers and

receive real-time feedback to hone their interview

abilities. We hope the findings will form the

foundation for future developments, including AI-

based VR interview simulations that can help

candidates prepare more effectively and enable

employers to benchmark candidates more rigorously.

The Figure 5. Shows the AI based Candidate Scoring

System.

The experiment with AI for analyzing speech and

body language in the context of remote job interviews

ultimately suggests that it brings about a

revolutionary change. This research presents a

scalable solution for modern hiring processes that

overcomes existing bias, transparency, and real-time

evaluation limitations.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

224

6 CONCLUSIONS

For instance, the AI-based virtual interview

assessment system proposed in the following

example made significant progress on or VDEBT

Rate of the proposed System: which was solution to

the earlier mentioned Subjectivity making traditional

Job selection techniques. This module classified

gestures, posture and movement patterns that indicate

confidence and engagement with a 85-90% precision.

Similarly, the speech analysis model had an

outstanding accuracy rate of 92% while accurately

capturing degrees of intonations, fluencies, and

mixed sentiments that are vital for evaluating

candidates.

One of the benefits of this research is in its

potential to make hiring decisions more fair and

transparent.Being deep learning-based and

multimodal as well allows the program to provide an

unbiased and standardized test process to avoid any

bias or inconsistency in human aspects of exams.

Additionally, by using Explainable AI (XAI), it

provides recruiters and job seekers insight into their

respective assessment scores, enabling improved trust

and transparency in AI- enabled hiring.

Another important takeaway comes from the

strong performance of system in typical interview

scenarios with varying light levels, camera angles and

background noise. The generality of the model allows

it to function across different hiring processes and

industries, allowing results produced using the model

to be scaled. It addresses the crucial feedback in real-

time that helps to improve the overall image of an

applicant and brings more interaction and reciprocity

to a job seeker.

To conclude, the research highlights how AI

assessments are revolutionizing virtual job

interviews. This system represents a remarkable

progression in AI-powered engagement technologies

that eliminate bias while enhancing the quality of hires

and protecting from discriminatory hiring behaviors.

The results illustrate that unlocking the potential to

analyze body language and speech into the hiring

process leads to a more informed, objective and

efficient decision-making process, ushering in a new

paradigm of virtual hiring solutions.

REFERENCES

Agrawal, A., George, R. A., Ravi, S. S., Kamath, S. S., &

Kumar, M. A. (2020). Leveraging multimodal

behavioral analytics for automated job interview

performance assessment and feedback. arXiv preprint

arXiv:2006.07909.

Chen, X., Zhang, Y., & Wang, L. (2024). Enhancing

interview evaluation: AI-based emotion and confidence

assessment. Journal of New Advances in Artificial

Intelligence, 15(1), 187–202.

Dhall, A., Goecke, R., Lucey, S., & Gedeon, T. (2012).

Collecting large, richly annotated facial-expression

databases from movies. IEEE Multimedia, 19(3), 34–

41.

Gunes, H., & Schuller, B. W. (2013). Categorical and

dimensional affect analysis in continuous input:

Current trends and future directions. Image and Vision

Computing, 31(2), 120–136.

Hemamou, L., Felhi, G., Martin, J.-C., & Clavel, C. (2020).

Slices of attention in asynchronous video job

interviews. arXiv preprint arXiv:1909.08845.

Kim, J., & Provost, E. M. (2013). Emotion recognition

during speech using dynamics of multiple regions of the

face. ACM Transactions on Multimedia Computing,

Communications, and Applications (TOMM), 9(1), 1–

21.

Kumar, S., & Singh, P. (2023). AI-based mock-interview

behavioural recognition analyst. International Journal

for Research in Applied Science and Engineering

Technology, 11(3), 1509–1515.

Li, S., Deng, W., & Du, J. (2017). Reliable crowdsourcing

and deep locality-preserving learning for expression

recognition in the wild. In Proceedings of the IEEE

Conference on Computer Vision and Pattern

Recognition (pp. 2852–2861).

Naim, I., Tanveer, M. I., Gildea, D., & Hoque, M. (2015).

Automated analysis and prediction of job interview

performance. arXiv preprint arXiv:1504.03425.

Poria, S., Majumder, N., Mihalcea, R., & Hovy, E. (2019).

Emotion recognition in conversation: Research

challenges, datasets, and recent advances. IEEE

Access, 7, 100943– 100953.

Schmitt, M., Ringeval, F., & Schuller, B. W. (2016). At the

border of acoustics and linguistics: Bag-of-audio-words

for the recognition of emotions in speech. In

Proceedings of Interspeech 2016 (pp. 495–499).

Suen, H.-Y., Hung, C.-M., & Lin, C.-H. (2019).

TensorFlow-based automatic personality recognition

used in asynchronous video interviews. IEEE Access,

7, 61018– 61023.

Wang, W., Chen, X., & Zhang, Y. (2023). AI-driven

interview software analyzes body language: What you

need to know. Psico-Smart.

Zhang, H., & Li, M. (2021). A face emotion recognition

method using convolutional neural network and image

edge computing. Journal of Physics: Conference Series,

1748(3), 032020.

Zhou, Y., Lu, S., & Ding, M. (2020). Contour-as-Face

(CaF) framework: A method to preserve privacy and

perception. Journal of Marketing Research.

Body Language and Speech Analysis Using Deep Learning for Enhanced Virtual Job Interviews

225