Edge AI-Driven Microfluidic Platform for Real-Time Detection and

Classification of Microplastic Particles in Environmental Samples

Badugu Vimala Victoria

and Kamil Reza Khondakar

School of Technology, Woxsen University, Kamkole, Hyderabad 502345, Telangana, India

Keywords: Edge AI, Microplastic Detection, YOLOv5, Gradient Boosting Classifier, Environmental Monitoring.

Abstract: Microplastics have become a major concern in daily life, affecting both the environment and human health, and therefore require advanced detection techniques. Existing methods of microplastic detection are manual and require sophisticated laboratories. This project addresses those problems by developing a real-time microplastic detection system based on Edge AI on an NVIDIA Jetson Nano. Computer vision is integrated with a new hybrid algorithm that combines YOLOv5 object detection with a Gradient Boosting Classifier (GBC), which classifies microplastic particles into different classes based on size, shape, color, and texture features. Detection accuracy is further improved with ensemble learning methods such as bagging, boosting, and stacking. For deployment at the edge, the hybrid model is optimized using TensorRT quantization, which enables real-time analysis on the Jetson Nano. The system is evaluated for accuracy, precision, recall, and F1 score against manual identification using a microscope. A comprehensive data logging and visualization interface is developed to track microplastic pollution in real time for broader environmental health purposes.

1 INTRODUCTION TO MICROPLASTIC POLLUTION AND DETECTION CHALLENGES

One study details the design of an AI camera that enables real-time microplastic detection with 97% accuracy in the lab and 96% in the field, applying YOLOv5 for detection and Deep SORT for tracking across different environments (Sarker et al., 2021). Another describes an AI-assisted nano-DIHM system for real-time nanoplastic detection in water, which identified 2% of particles from Lake Ontario and 1% from the Saint Lawrence River as nano/microplastics without sample preparation and provided thorough physicochemical characterization (Wang, Zi et al., 2024). A further study discusses the development of a sensor that integrates an estrogen receptor grafted onto a gold surface with an AI algorithm for identifying nano- and microplastics in water, achieving 90.3% success in differentiating the materials and dimensions of the particles (Li, Yuxing et al., 2024). A highly sensitive and reusable SERS-based approach for detecting micro/nanoplastics uses two-dimensional AuNP thin films, in contrast to both the imaging and AI systems presented in the other references (Dal et al., 2024). Deep learning techniques have also been applied to the detection of microplastics, obtaining a 100% success rate with lasers of varying wavelengths focused on polystyrene and melamine microparticles in water (Han, Jiyun Agnes et al., 2024). A nano-digital inline holographic microscope (nano-DIHM) has been created for real-time monitoring of micro/nanoplastics in freshwater, using deep learning to detect and track plastic particles in order to improve life cycle analysis of plastic waste in water (Wang, Xinjie et al., 2024). A novel low-cost and rapid method detects microplastics using Nile Red fluorescence for in-situ deployment in the ocean, enabling the much-needed real-time analysis of microplastics that current methods have not achieved (Ye, Haoxin et al., 2023).

A multi-technique analytical platform for the detection, characterization, and quantification of nanoplastics in water using AF4-MALS and Py-GC/MS advances the state of the art with detection limits of 0.01 ppm and filtration conditions for high recovery rates (Valentino, Maria Rita et al., 2023). The emphasis


Victoria, B. V. and Khondakar, K. R.

Edge AI-Driven Microfluidic Platform for Real-Time Detection and Classification of Microplastic Particles in Environmental Samples.

DOI: 10.5220/0013870800004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 1, pages

652-664

ISBN: 978-989-758-777-1

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

is placed on AI's synergy with microfluidics in the development of water-cleaning or sensing devices, including sophisticated methods for targets such as heavy metals or microalgae, together with the issues and prospects of these technologies for water quality monitoring (Zhang, Shouxin et al., 2024). Another work deals with microfluidic sensors for the detection of emerging contaminants and describes their benefits compared to conventional sensors, such as speed of analysis, small sample volumes, and suitability for field work, along with other detection methods and future problems in this discipline (Ajakwe, S. O. et al., 2023). A further work addresses on-site detection of water contamination using microfluidic technology, stressing the features of this technology in comparison with conventional ones. It presents results of investigations on microfluidic sensors for monitoring chemical and biological contaminants and considers the problems and prospects of automating water quality monitoring (Khurshid, Aleefia A. et al., 2024). One report centers on the incorporation of IoT wireless technologies and machine learning for the remaining processing steps, discussing technologies such as LPWAN, Wi-Fi, and Zigbee while looking into supervised and unsupervised ML for correct interpretation and to enable sensible decisions (Charalampides, Marios et al., 2023).

A review of advancements in microfluidic technology for water quality monitoring emphasizes its potential to enhance accuracy, portability, and affordability in addressing global water challenges. It highlights the development of innovative monitoring kits and the optimization of existing techniques (Arepalli, Peda Gopi, and Co-Author., 2024).

1.1 Research Gaps

Microplastics are increasingly regarded as an emerging environmental pollutant with potential implications for human health, marine ecosystems, and water quality. Despite this growing recognition, existing techniques for detecting and analyzing microplastics have major limitations. Traditional methods involving microscopy-based identification, spectroscopy (FTIR/Raman), and chemical analysis are highly accurate, but they often take a long time to generate results, require considerable human intervention, and depend on expensive laboratory equipment. Such methods are infeasible for large-scale environmental monitoring and offer little potential for real-time data analysis, particularly in remote or resource-constrained environments.

While recent progress in machine learning and computer vision has paved the way for automating microplastic detection, previous methods mostly rely on cloud processing or require high-performance computing hardware. This reliance on cloud resources introduces latency, requires an always-on internet connection, and may create privacy issues. In addition, current state-of-the-art deep learning-based microplastic detection models struggle to detect small objects efficiently, to cope with the high variability in particle size and shape, and to recognize particles with low texture and color heterogeneity. This highlights the absence of a robust real-time edge-based solution able to handle these problems, which is a considerable gap in the state of the art.

1.2 Problem Identification

The main issue this study tackles is the need for an edge-based microplastic solution that is both scalable and efficient in real-time detection and classification of microplastic particles. The key issues are as follows. Manual techniques such as microscopy and spectroscopy give accurate results, but they are not practical for real-time monitoring because their processing time is long and they are expensive to operate. These techniques are not suited to large-scale, continuous analysis of the environment, which makes them less realistic in actual use cases. Current AI-based detection systems mostly run in the cloud, which adds latency and requires a stable internet connection, a problem for edge or remote deployment. Deep learning models in current systems do not perform well in detecting microplastics with complex shapes, sizes, and textures, leading to a high rate of false positives or missed detections. Most detection algorithms are computationally expensive and require advanced hardware, which is impractical on a low-resource edge device like the NVIDIA Jetson Nano. Model optimization methods are therefore crucial for efficient real-time classification without losing accuracy.

1.3 Objective

1. Aim: To create a real-time microplastic detection system using Edge AI on the Jetson Nano, for easy and fast detection of microplastics in a water sample.


2. To combine computer vision and machine

learning models, based on the hybrid algorithm

YOLOv5 + GBC, for the classification of

microplastic particles, according to size, shape,

color, and texture.

3. To deploy ensemble learning methods, such as

bagging, boosting, and stacking, to improve

detection accuracy and minimize error rates.

4. To quantize and optimize the hybrid machine learning model with TensorRT so that it can be deployed on the Jetson Nano at the edge and serve HTTP requests with real-time performance.

5. To compare the performance of the system against manual microplastic identification under the microscope, and to evaluate accuracy, precision, recall, and F1 score.

6. To create a data logging and visualization interface for real-time monitoring and tracking of microplastic pollution levels, for broader applications in environmental health.
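The evaluation metrics named in the fifth objective can be illustrated with a minimal sketch. It assumes a binary "microplastic / not microplastic" comparison against microscope labels; the function name and the counts below are hypothetical and are not results from this work.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts: system detections compared with manual microscope labels.
metrics = classification_metrics(tp=90, fp=10, fn=5, tn=95)
print(metrics)
```

Precision penalizes false alarms (for example bubbles labelled as plastic), while recall penalizes missed particles; F1 balances the two.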

2 SYSTEM ARCHITECTURE - JETSON NANO AND EDGE AI IMPLEMENTATION

Microplastics are one of the main environmental hazards, so consistent detection methods are required. The aim of this work is to develop an original real-time detection system on the NVIDIA Jetson Nano using Edge AI, with cloud computing included for further analysis. The system architecture includes a high-resolution camera module that images water samples containing typical microplastics such as PE, PP, PS, and PVC. Microplastics differ in size, form, and texture and call for sensitive detection techniques. The captured images are preprocessed on the Jetson Nano with noise reduction and contrast correction to improve microplastic visibility. The enhanced images are then passed to YOLOv5 for object detection; by predicting bounding boxes and confidence scores, the model rapidly identifies microplastic particles. Following identification, the detections are sent to the cloud using the MQTT protocol to ensure quick communication. A hybrid model combining YOLOv5 and a Gradient Boosting Classifier (GBC) completes the cloud-based categorization. Using particle size, shape, color, and texture, the GBC can separate PE, PP, PS, and PVC microplastics. TensorRT optimizes real-time inference, while ensemble learning techniques including bagging, boosting, and stacking increase detection accuracy. Grafana, a free and open-source tool, provides real-time microplastic contamination dashboards and displays the final classification returned to the edge device. Together, edge artificial intelligence, cloud computing, and real-time visualization offer scalable, accurate microplastic detection and classification across many aquatic ecosystems. This approach also supports efforts to reduce plastic waste and to advance environmental health.
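The preprocessing step (noise reduction followed by contrast correction) can be sketched in plain Python on a small grayscale frame. This is an illustration only: the source does not state which filters are used, so the 3x3 mean filter and linear contrast stretch below are assumptions, and a deployed system would normally rely on an image-processing library rather than nested lists.

```python
def mean_filter(img):
    """3x3 mean filter; border pixels average over the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = round(sum(vals) / len(vals))
    return out


def stretch_contrast(img):
    """Linearly rescale pixel values to the full 0..255 range."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in img]
    scale = 255.0 / (hi - lo)
    return [[round((p - lo) * scale) for p in row] for row in img]


# Hypothetical 3x3 grayscale frame with one bright particle in the centre.
frame = [[100, 102, 101],
         [103, 150, 104],
         [101, 102, 100]]
enhanced = stretch_contrast(mean_filter(frame))
print(enhanced)
```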

Edge AI on the NVIDIA Jetson Nano detects microplastics in real time. The Jetson Nano was selected for its capable GPU, its edge device features, and its computational efficiency. The components of the architecture are as follows. With its quad-core ARM Cortex-A57 CPU and 128-core Maxwell GPU, the Jetson Nano is a small but capable platform for running deep learning models in real time. A high-resolution camera records live video of the water samples.

Figure 1: Automated Microplastic Detection and

Monitoring System Using Edge Computing and Cloud-

Based Deep Learning.

Figure 1 shows the automated microplastic detection and monitoring system, which combines edge hardware with cloud-based deep learning. The approach addresses the growing problem of microplastic contamination in aquatic environments and its environmental effects. The pipeline comprises sample collection, data processing, real-time analysis, and cloud computing. Microplastic samples from various environmental water sources are imaged with a USB camera. The high-resolution images of the samples are inspected locally on an NVIDIA Jetson edge device, which uses its GPU to extract features from the images. The system detects microplastics in the images in real time using computer vision. After capture, the images are preprocessed to accentuate microplastics by reducing noise and raising contrast. On the Jetson Nano, an optimized machine learning model processes the local video feed. Running detection locally removes the dependence on cloud-based processing for this step and thereby lowers latency. During active inference, the computer vision model finds, classifies, and counts microplastic particles in the video stream in real time. Users interact with the system through a GUI that displays real-time visuals, statistical analyses, and microplastic detection results.

The system captures images of water samples on the microfluidic substrate using a high-resolution camera module. The camera is positioned to maximize the visibility of microplastics, including particles around one millimeter in size. Captured images are transferred directly to the NVIDIA Jetson Nano for local preprocessing and analysis. LED or dark-field illumination can be added to the camera system to increase the visibility of microplastic particles. On the Jetson Nano, raw camera images are processed using edge detection, noise reduction, and contrast correction. These techniques improve the detection of microplastics that organic debris or water turbidity might otherwise obscure. An initial inference model (YOLOv5) then performs object detection on the enhanced images to identify microplastic particles in real time. This step reduces the amount of data, and hence the bandwidth and latency, before transmission to the cloud.

The technique distinguishes several kinds of aquatic microplastics: lightweight polymers used in consumer products and packaging, which are usually transparent or semi-translucent and irregular in shape; spherical microplastics used as fillers in cosmetics, which have a smooth surface and are either white or vividly coloured; and a denser plastic used in construction and pipelines, identified from variations in color and texture.

Once particles are detected on the Jetson Nano, the enhanced image data is sent to the cloud over MQTT for additional processing. There, the YOLOv5 + Gradient Boosting Classifier (GBC) hybrid technique allows the deep learning model hosted on DigitalOcean to perform a more comprehensive classification. To identify PE, PP, PS, and PVC microplastics, the model uses size, shape, color, and texture. In the cloud model, bagging, boosting, and stacking help to raise detection accuracy. The hybrid approach combines GBC's broad feature analysis with YOLOv5's quick detection. Both models use TensorRT optimization for efficient cloud inference while retaining strong detection accuracy. Grafana, an open-source monitoring tool, displays the classification results returned from the cloud to the Jetson Nano. Grafana dashboards show real-time microplastic counts, size distributions, and classifications. Tracking contaminants and identifying trends helps users support environmental assessments and decision-making.
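As an illustration of the data reduction before cloud transmission, the sketch below serializes per-frame detections into a compact JSON message of the kind that could be published over MQTT. The message fields, the topic name, and the function name are assumptions made for this example; the source does not specify the payload format, and the actual publish call (through an MQTT client library) is deliberately left out.

```python
import json


def build_detection_payload(frame_id, detections):
    """Serialize YOLO-style detections (centre x, y, width, height) to compact JSON."""
    message = {
        "frame_id": frame_id,
        "count": len(detections),
        "detections": [
            {"bbox": [round(v, 1) for v in d["bbox"]], "conf": round(d["conf"], 3)}
            for d in detections
        ],
    }
    # Compact separators keep the message small for low-bandwidth links.
    return json.dumps(message, separators=(",", ":"))


TOPIC = "microplastics/detections"  # hypothetical topic name
payload = build_detection_payload(
    7, [{"bbox": [120.4, 88.0, 14.2, 11.9], "conf": 0.9137}]
)
print(TOPIC, payload)
```

Sending only bounding boxes and scores, or cropped regions, rather than full frames is one way to keep bandwidth and latency low on the edge-to-cloud link.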

Grafana dashboards show the types and concentrations of microplastics, along with a real-time display of water quality, microplastic levels, and health impacts. Using this visualization tool, environmental authorities and researchers can monitor trends and pinpoint pollution hotspots, which facilitates faster policy decisions and actions. Edge computing and cloud-based deep learning together underpin a complete microplastic pollution monitoring system. Local preprocessing on the NVIDIA Jetson lowers latency and the cloud computing load. A strong training set lets the deep learning network raise detection accuracy. Real-time visualization in Grafana gives stakeholders quick access to important data, facilitating more thorough environmental health assessments. This approach provides a scalable, dependable, and efficient means of addressing microplastic contamination globally. It also makes better environmental monitoring and other mitigation strategies more feasible.

3 MODEL DEVELOPMENT: YOLOV5 + GRADIENT BOOSTING CLASSIFIER (GBC) HYBRID ALGORITHM FOR MICROPLASTIC CLASSIFICATION

To create a strong and efficient model for

microplastic detection, the combination of advanced

deep learning methods and conventional machine-

learning approaches is needed. Here, a hybrid

algorithm is proposed that combines the speed and

robustness of YOLOv5 with refined feature-based

classification created via Gradient Boosting

Classifier (GBC).

This hybrid approach is designed to address the

limitations of existing methods and meet the specific

requirements of real-time detection on resource-

constrained edge devices like the Jetson Nano.


3.1 Dataset Preparation

Building a reliable detection system starts with a large, varied dataset. The dataset consists of high-resolution images of water samples containing different microplastics. The images are captured by a camera module mounted on the microfluidic device, which keeps data collection consistently high in quality. The images show microplastics in many shapes, colors, textures, and sizes. Organic debris and bubbles in the images could cause false alarms. Every image is methodically annotated to build the model-training set. Labeling microplastics involves manually drawing bounding boxes and assigning labels based on their appearance.

3.2 YOLOv5: Real-Time Object Detection

The hybrid approach begins with YOLOv5, a fast and efficient object detection model. YOLOv5 performs object detection in a single pass through the network, which makes it well suited to this application and keeps inference fast. This single-stage approach lets the model predict bounding boxes and class probabilities directly from the input image, avoiding the complexity and delay of two-stage detectors such as Faster R-CNN.

One main advantage of YOLOv5 for microplastic identification is its ability to detect small objects. Standard detection methods find microplastics difficult because of their small scale. For this reason the lightweight variant of YOLOv5 (YOLOv5s) was selected: it runs efficiently on edge devices like the Jetson Nano while balancing speed and accuracy. Using a CNN backbone, the model extracts relevant features from input images, which allows it to spot microplastics. The YOLOv5 + Gradient Boosting Classifier (GBC) hybrid technique combines deep learning and traditional machine learning for microplastic identification and classification. The main equations of the hybrid model, covering object detection, feature extraction, and ensemble learning, are reported in this work. YOLOv5 generates a bounding box for every object with four parameters: x and y are the center coordinates, and w and h are the width and height of the bounding box.

The objectness score $P_o$ is calculated as:

$$P_o = \sigma(s_o) \tag{1}$$

The class probabilities $P_c$ are calculated using softmax activation:

$$P_c = \mathrm{Softmax}(s_c) \tag{2}$$

The final confidence score $C$ is:

$$C = P_o \times \max(P_c) \tag{3}$$
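Equations (1) to (3) can be followed step by step in a short sketch. It takes one raw objectness logit and one vector of raw class logits for a single predicted box; the logit values and the class order are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(x):
    """Logistic function used for the objectness score, Eq. (1)."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(scores):
    """Numerically stable softmax over class logits, Eq. (2)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def confidence(obj_logit, class_logits):
    """Return (best class index, confidence C = P_o * max(P_c)), Eq. (3)."""
    p_obj = sigmoid(obj_logit)
    p_cls = softmax(class_logits)
    best = max(range(len(p_cls)), key=p_cls.__getitem__)
    return best, p_obj * p_cls[best]


CLASSES = ["PE", "PP", "PS", "PVC"]  # assumed class order
idx, conf = confidence(2.0, [0.5, 2.5, 0.1, -1.0])  # hypothetical logits
print(CLASSES[idx], round(conf, 3))
```

A detection is typically kept only if this confidence exceeds a chosen threshold, so a box with a strong class score but a weak objectness score is still rejected.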

The Complete Intersection over Union (CIoU) measure for bounding box regression is given by:

$$\mathrm{CIoU} = \mathrm{IoU} - \frac{\rho^2(b, b^{gt})}{c^2} - \alpha v, \qquad \alpha = \frac{v}{(1 - \mathrm{IoU}) + v} \tag{4}$$

where IoU is the Intersection over Union of the predicted box $b$ and the ground truth box $b^{gt}$; $\rho$ is the Euclidean distance between the centers of the predicted and ground truth boxes; $c$ is the diagonal length of the smallest box enclosing both; $\alpha$ is a weight parameter; and $v$ is a measure of aspect ratio similarity. The corresponding loss is $1 - \mathrm{CIoU}$. The use of CIoU helps the model learn better bounding box predictions by accounting for both spatial and aspect ratio differences.

YOLOv5 is trained on the prepared dataset, with the model learning to detect microplastics from the labeled bounding boxes. During training, several optimization techniques are employed, including learning rate scheduling to adjust the learning pace and early stopping to prevent overfitting. Loss functions such as Binary Cross-Entropy loss for classification and CIoU loss for bounding box regression help the model make precise predictions.
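The CIoU terms can be computed directly for a pair of boxes. The sketch below assumes boxes in (center x, center y, width, height) form with positive width and height, and uses the standard CIoU definition of the aspect-ratio term, $v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2$, which the text above does not spell out.

```python
import math


def ciou(box, gt):
    """CIoU of two boxes given as (cx, cy, w, h) with positive w and h."""
    (x1, y1, w1, h1), (x2, y2, w2, h2) = box, gt
    # Corner coordinates of both boxes.
    ax1, ay1, ax2, ay2 = x1 - w1 / 2, y1 - h1 / 2, x1 + w1 / 2, y1 + h1 / 2
    bx1, by1, bx2, by2 = x2 - w2 / 2, y2 - h2 / 2, x2 + w2 / 2, y2 + h2 / 2
    # Plain IoU.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = w1 * h1 + w2 * h2 - inter
    iou = inter / union if union > 0 else 0.0
    # Squared centre distance over squared diagonal of the enclosing box.
    rho2 = (x1 - x2) ** 2 + (y1 - y2) ** 2
    c2 = (max(ax2, bx2) - min(ax1, bx1)) ** 2 + (max(ay2, by2) - min(ay1, by1)) ** 2
    # Aspect-ratio consistency term and its weight.
    v = (4 / math.pi ** 2) * (math.atan(w2 / h2) - math.atan(w1 / h1)) ** 2
    denom = (1 - iou) + v
    alpha = v / denom if denom > 0 else 0.0
    return iou - rho2 / c2 - alpha * v


def ciou_loss(box, gt):
    """Regression loss: 0 for a perfect match, larger for worse boxes."""
    return 1.0 - ciou(box, gt)


print(ciou_loss((10, 10, 4, 4), (10, 10, 4, 4)))  # identical boxes
print(ciou_loss((0, 0, 2, 2), (10, 10, 2, 2)))    # disjoint boxes
```

Unlike plain IoU, the value still changes for non-overlapping boxes because of the center-distance term, which gives the optimizer a useful signal for small particles that are initially missed.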

3.3 Feature Extraction for Gradient Boosting Classifier (GBC)

Although YOLOv5 can identify microplastics, it may overlook subtle characteristics required for classification, which matter most when particles look nearly identical. To address this, features are extracted from the regions detected by YOLOv5 before the Gradient Boosting Classifier is applied. This stage allows the model to distinguish microplastics made from different materials. Features are extracted by analyzing particle characteristics. Geometric features such as the particle's area, perimeter, and diameter help set microplastics apart. Shape descriptors such as aspect ratio, eccentricity, and circularity characterize particle form and distinguish spherical microbeads. The mean and standard deviation of the RGB color channels define the color profile of the particle, which is especially useful for microplastics of different colors. GLCM (gray-level co-occurrence matrix) and LBP (local binary patterns) are used for texture analysis; the surface characteristics obtained by these techniques allow microplastics to be discriminated by their distinctive textures. The geometric features include Area (A), Perimeter (P) and Equivalent Diameter (D):

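$$A = w \times h \tag{5}$$

$$P = 2(w + h) \tag{6}$$

$$D = \sqrt{\frac{4A}{\pi}} \tag{7}$$

Shape features: Aspect Ratio (AR) and Circularity (C):

$$AR = \frac{w}{h} \tag{8}$$

$$C = \frac{4\pi A}{P^2} \tag{9}$$

The mean ($\mu$) and standard deviation ($\sigma$) of the RGB channels are calculated as:

$$\mu = \frac{1}{N} \sum_{i=1}^{N} x_i \tag{10}$$

$$\sigma = \sqrt{\frac{1}{N-1} \sum_{i=1}^{N} (x_i - \mu)^2} \tag{11}$$

Texture features such as Contrast and Correlation using GLCM are calculated as:

$$\mathrm{Contrast} = \sum_{i,j} (i - j)^2 \, p(i, j) \tag{12}$$

$$\mathrm{Correlation} = \sum_{i,j} \frac{(i - \mu_i)(j - \mu_j)\, p(i, j)}{\sigma_i \sigma_j} \tag{13}$$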
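The geometric, shape, and color features of Eqs. (5) to (11) can be computed from a bounding box and the pixels inside it, as in the sketch below. The function names and sample values are hypothetical. Because area and perimeter here come from the bounding box rather than the particle contour, a square box yields a circularity of $\pi/4$ rather than 1.

```python
import math
from statistics import mean, stdev


def shape_features(w, h):
    """Bounding-box based geometric and shape features, Eqs. (5)-(9)."""
    area = w * h
    perimeter = 2 * (w + h)
    return {
        "area": area,
        "perimeter": perimeter,
        "equiv_diameter": math.sqrt(4 * area / math.pi),
        "aspect_ratio": w / h,
        "circularity": 4 * math.pi * area / perimeter ** 2,
    }


def colour_features(pixels):
    """Per-channel mean and sample standard deviation, Eqs. (10)-(11).

    pixels: list of (R, G, B) tuples with at least two entries.
    """
    channels = list(zip(*pixels))
    return {
        "mean": [mean(c) for c in channels],
        "std": [stdev(c) for c in channels],  # N-1 denominator, as in Eq. (11)
    }


# Hypothetical detection: a 14 x 12 pixel box and a few pixels sampled inside it.
geom = shape_features(w=14, h=12)
colour = colour_features([(200, 180, 60), (210, 175, 58), (190, 185, 66)])
feature_vector = list(geom.values()) + colour["mean"] + colour["std"]
print(feature_vector)
```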

These features provide a comprehensive

representation of each detected microplastic particle,

enabling the Gradient Boosting Classifier to perform

refined classification.
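The GLCM texture features of Eqs. (12) and (13) can be illustrated on a tiny image. The sketch makes several assumptions: pixel values are already quantized to integers below `levels`, only the horizontal neighbor offset is used, and the matrix is not symmetrized. Returning 0.0 for a perfectly uniform patch, where the correlation is undefined, is a convention chosen for this example.

```python
import math


def glcm(img, levels):
    """Normalized co-occurrence matrix p(i, j) for the horizontal offset (0, 1)."""
    counts = [[0] * levels for _ in range(levels)]
    for row in img:
        for a, b in zip(row, row[1:]):
            counts[a][b] += 1
    total = sum(map(sum, counts))
    return [[c / total for c in r] for r in counts]


def contrast(p):
    """Eq. (12): sum of (i - j)^2 * p(i, j)."""
    n = len(p)
    return sum((i - j) ** 2 * p[i][j] for i in range(n) for j in range(n))


def correlation(p):
    """Eq. (13); returns 0.0 when a marginal has zero variance (uniform patch)."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    sd_i = math.sqrt(sum((i - mu_i) ** 2 * v for i, _, v in cells))
    sd_j = math.sqrt(sum((j - mu_j) ** 2 * v for _, j, v in cells))
    if sd_i == 0 or sd_j == 0:
        return 0.0
    return sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells) / (sd_i * sd_j)


# Hypothetical 4-level patch cropped from a detected particle.
patch = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
p = glcm(patch, levels=4)
print(contrast(p), correlation(p))
```

Smooth particle surfaces give low contrast and high correlation, while rough or speckled surfaces push contrast up.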

3.4 Gradient Boosting Classifier (GBC): Enhanced Classification

The extracted features are then input into a Gradient Boosting Classifier (GBC), which serves as the second stage of the hybrid model. GBC is an ensemble learning method that builds multiple decision trees to create a powerful predictive model. It constructs these trees sequentially, with each tree compensating for the mistakes of the earlier ones. Boosting in this way lets GBC learn more complex patterns from the data, which makes it one of the most effective methods for the subtle task of classifying microplastic particles based on minute differences in size, shape, and texture.

The GBC is trained on features extracted from the dataset, with hyperparameters such as learning_rate, n_estimators, and max_depth tuned through grid search. This fine-tuning process ensures good calibration of the classifier, which accounts for variability in the characteristics of the microplastics and thus enhances classification accuracy.

The final prediction $F(x)$ from GBC is given by:

$$F(x) = F_0 + \sum_{m} \gamma_m h_m(x) \tag{14}$$

The residual for the $i$-th data point at the $m$-th iteration is:

$$r_{i,m} = -\frac{\partial L(y_i, F_{m-1}(x_i))}{\partial F_{m-1}(x_i)} \tag{15}$$

where $F_0$ is the initial model prediction (mean of the target variable), $h_m(x)$ is the prediction from the $m$-th weak learner (decision tree), and $\gamma_m$ is the learning rate controlling the contribution of each tree.
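The additive update in Eqs. (14) and (15) can be sketched with a toy implementation. To keep it short, the sketch makes simplifying assumptions: a single numeric feature, squared-error loss (for which the negative gradient is simply $y_i - F_{m-1}(x_i)$), one-split regression stumps as weak learners, and a constant learning rate. A real classifier would use a classification loss and deeper trees.

```python
def fit_stump(xs, residuals):
    """Best single-threshold regression stump under squared error.

    Assumes xs contains at least two distinct values.
    """
    best = None
    for t in sorted(set(xs))[:-1]:  # candidate thresholds; left side is x <= t
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Return a model F(x) = F0 + learning_rate * sum of stump predictions."""
    f0 = sum(ys) / len(ys)  # initial prediction: mean of the target
    learners = []
    preds = [f0] * len(xs)
    for _ in range(n_rounds):
        # Negative gradient of L = (y - F)^2 / 2 is the plain residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        h = fit_stump(xs, residuals)
        learners.append(h)
        preds = [p + learning_rate * h(x) for p, x in zip(preds, xs)]
    return lambda x: f0 + learning_rate * sum(h(x) for h in learners)


# Hypothetical data: one feature (e.g. equivalent diameter), binary target.
xs = [1, 2, 3, 4, 5, 6]
ys = [0, 0, 0, 1, 1, 1]
model = gradient_boost(xs, ys)
print(model(2), model(5))
```

Each round fits the next stump to what the current ensemble still gets wrong, so the prediction error on this toy data shrinks geometrically with the number of rounds.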

3.5 Hybrid Ensemble Voting

The final prediction probability $P_{final}$ using weighted voting is:

$$P_{final} = w_1 P_{YOLOv5} + w_2 P_{GBC} \tag{16}$$

where $P_{final}$ is the final prediction probability, $P_{YOLOv5}$ and $P_{GBC}$ are the predictions from YOLOv5 and GBC, respectively, and $w_1$ and $w_2$ are the weights assigned to each model's prediction, optimized during training. These equations summarize the key components and mathematical foundations of the YOLOv5 + GBC hybrid algorithm. YOLOv5 focuses on efficient and accurate object detection, while GBC enhances the classification by analyzing additional features extracted from the detected particles. This integration creates a balanced model capable of real-time, high-accuracy microplastic detection on edge devices like the Jetson Nano.
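Equation (16) amounts to a per-class weighted average of the two probability vectors. In the sketch below the weights and probabilities are hypothetical placeholders (the paper states that the weights are optimized during training but does not report their values), and the weights are assumed to sum to one so that the result remains a probability distribution.

```python
def weighted_vote(p_yolo, p_gbc, w_yolo=0.4, w_gbc=0.6):
    """Combine two class-probability vectors as in Eq. (16)."""
    if len(p_yolo) != len(p_gbc):
        raise ValueError("probability vectors must have the same length")
    if abs(w_yolo + w_gbc - 1.0) > 1e-9:
        raise ValueError("weights are assumed to sum to 1")
    return [w_yolo * a + w_gbc * b for a, b in zip(p_yolo, p_gbc)]


CLASSES = ["PE", "PP", "PS", "PVC"]      # assumed class order
p_yolo = [0.50, 0.30, 0.15, 0.05]        # hypothetical YOLOv5 class probabilities
p_gbc = [0.20, 0.60, 0.15, 0.05]         # hypothetical GBC class probabilities
p_final = weighted_vote(p_yolo, p_gbc)
label = CLASSES[max(range(len(p_final)), key=p_final.__getitem__)]
print(label, p_final)
```

In this example YOLOv5 alone would choose PE, but the more heavily weighted GBC shifts the final decision to PP.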

3.6 Optimization for Edge Deployment

Several optimization techniques are combined to make the hybrid model run in real time on the Jetson Nano. NVIDIA TensorRT accelerates YOLOv5 inference. Quantization reduces model size and speeds up inference, at the cost of only a small loss in accuracy. To reduce computational requirements, model pruning removes superfluous layers and parameters. Batch inference processes several frames in parallel, which boosts system throughput. The evaluation metrics are compared against manual, microscope-based identification to confirm the model's correctness and the analysis time it saves.
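The trade-off behind quantization can be seen in a small numeric sketch. It shows symmetric per-tensor 8-bit quantization of a handful of weights; this illustrates the general principle only and is not TensorRT's calibration procedure. The paper does not state which numeric precision is used on the Jetson Nano, so the choice of 8-bit integers and the weight values are assumptions for illustration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [v * scale for v in q]


weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored in one byte instead of four, and the rounding error per weight is bounded by half the scale, which is why accuracy typically drops only slightly.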


Combining GBC with YOLOv5 offers a complete solution for microplastic identification and classification. YOLOv5 detects particles quickly and precisely, while the GBC refines the predictions, improving categorization and lowering false positives through thorough feature analysis. Optimization ensures that the model performs well on Jetson Nano edge devices, making it well suited to real-time use. This scalable and thorough approach closes most of the main research gaps and offers a useful instrument for environmental monitoring and for preventing microplastic contamination.

3.7 Enhancing Accuracy: Ensemble Learning Techniques

A robust real-time microplastic detection system needs to be accurate and to keep errors to a minimum, because microplastic particles are generally small and irregular in shape. Since a single model cannot achieve the desired classification accuracy, ensemble methods are used to improve overall performance. Ensemble learning divides the classification problem among several classifiers and then combines their predictions. Three popular ensemble learning techniques are used in this project: bagging (bootstrap aggregating), boosting, and stacking.

3.8 Bagging - Bootstrap Aggregating

Bagging (bootstrap aggregating) is one of the simplest and most powerful ensemble methods for controlling overfitting and improving the robustness of a model. The basic idea is to train multiple instances of a model on different subsets of the training data and aggregate their predictions. Bootstrap sampling: random sampling with replacement creates multiple subsets of the dataset; each subset is called a bootstrap sample and is used to train a separate instance of the Gradient Boosting Classifier. Inference: the predictions of all trained models are combined, usually by majority voting for classification tasks.

In this project, bagging is used to stabilize the predictions of the GBC and to reduce the influence of outliers and noisy data points. It decreases the variance of the model and therefore stabilizes the predictions. It reduces overfitting, especially for complex datasets with varied microplastic characteristics. It also enhances the overall robustness of the classifier, improving its resistance to variations in environmental conditions such as lighting or water turbidity. In the hybrid model, bagging is combined with the Gradient Boosting Classifier (GBC) component. Because each GBC instance is trained on a different subset of the data, the resulting microplastic classifications are more stable and reliable.
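The two bagging steps, bootstrap sampling and majority voting, are sketched below. To stay self-contained, a trivial one-feature threshold classifier stands in for the Gradient Boosting Classifier; that substitution, the data, and the function names are assumptions made only for illustration.

```python
import random
from collections import Counter


def bootstrap_sample(data, rng):
    """Draw len(data) items with replacement."""
    return [rng.choice(data) for _ in data]


def train_threshold_classifier(sample):
    """Toy base learner: threshold halfway between the two class means."""
    pos = [x for x, y in sample if y == 1]
    neg = [x for x, y in sample if y == 0]
    if not pos or not neg:  # degenerate sample: predict the only class seen
        only = 1 if pos else 0
        return lambda x: only
    mean_pos, mean_neg = sum(pos) / len(pos), sum(neg) / len(neg)
    t = (mean_pos + mean_neg) / 2
    high = 1 if mean_pos > mean_neg else 0  # class found above the threshold
    return lambda x: high if x > t else 1 - high


def bagging_predict(models, x):
    """Majority vote over all base models."""
    votes = Counter(m(x) for m in models)
    return votes.most_common(1)[0][0]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
# Hypothetical (feature, label) pairs: label 1 = microplastic, 0 = other debris.
data = [(0.8, 0), (1.1, 0), (1.3, 0), (0.9, 0),
        (3.9, 1), (4.2, 1), (4.0, 1), (3.7, 1)]
models = [train_threshold_classifier(bootstrap_sample(data, rng)) for _ in range(15)]
print(bagging_predict(models, 1.0), bagging_predict(models, 4.1))
```

Because every base model sees a slightly different resample, an outlier influences only some of them, and the vote averages that influence away.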

3.9 Boosting

Boosting increases accuracy by training models in sequence, so that each model corrects the mistakes made by earlier ones. It differs from bagging, in which many models are trained independently of each other. This approach helps the model handle difficult cases, such as particles that are close together or microplastics with intricate surface characteristics. Boosting first trains a shallow decision tree or other weak learner on the entire dataset. It then identifies the errors made by the previous model and builds another model, adjusting the weights of the data so that more difficult cases are emphasized. Each model uses its predecessor's errors to improve performance over a fixed number of iterations. Final predictions are typically determined by weighted majority voting or by averaging all model outputs. Classification accuracy increases for complex and high-dimensional data. By more accurately identifying the forms, sizes, and textures of microplastics, this method reduces the occurrence of false positives and false negatives. Tuned boosting models such as XGBoost (Extreme Gradient Boosting) are scalable and efficient and thus suitable for edge deployment. In the hybrid technique, the Gradient Boosting Classifier itself provides the boosting step. AdaBoost and XGBoost help improve the sensitivity of the model to minute differences between microplastic particles and non-microplastic debris.
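The reweighting idea described above can be sketched with a compact AdaBoost implementation using one-feature decision stumps. The data are hypothetical and chosen so that no single threshold separates the classes; labels are encoded as +1 and -1, and the function names are illustrative.

```python
import math


def best_stump(xs, ys, weights):
    """Return (weighted error, threshold, polarity) of the best stump.

    A stump predicts `polarity` for x > threshold and `-polarity` otherwise.
    """
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            preds = [polarity if x > t else -polarity for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best


def adaboost(xs, ys, rounds=3):
    """Train a list of (alpha, threshold, polarity) weighted stumps."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        err, t, pol = best_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid division by zero
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, t, pol))
        # Misclassified samples get larger weights for the next round.
        weights = [w * math.exp(-alpha * y * (pol if x > t else -pol))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Weighted majority vote of all stumps."""
    score = sum(a * (pol if x > t else -pol) for a, t, pol in ensemble)
    return 1 if score >= 0 else -1


# Hypothetical 1D data that no single threshold can separate.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1, 1, -1, -1, -1, 1, 1, 1]
ensemble = adaboost(xs, ys, rounds=3)
print([predict(ensemble, x) for x in xs])
```

The first stump misclassifies the two leftmost points; their weights then grow, so later stumps are pushed to handle exactly those hard cases.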

3.10 Stacking

Advanced ensemble learning technique stacking

combines model strengths using a meta-model to

provide predictions. Using the same dataset,

stacking several base models. A superior meta-

learner bases on these models uses them to decide on

the final categorization. Several fundamental models


are trained over the whole dataset. This work could make use of logistic regression, SVM, the gradient boosting classifier (GBC), and YOLOv5 (for detection). The meta-learner, usually a Random Forest or Logistic Regression model, takes the predictions of these base models as its input features and produces the final classification from them. By drawing on multiple models, this approach increases generalization and accuracy, and the meta-learner lowers mistakes by correcting misclassifications made by the base models. The framework supports many model types, which enables comprehensive feature analysis. Here, stacking combines the predictions of the YOLOv5 deep learning detector with those of the conventional GBC classifier. By refining the predictions of these two models, the meta-learner improves microplastic particle classification.

Ensemble learning techniques, including bagging, boosting, and stacking, are helpful for microplastic identification for several reasons. Combining many models lowers classification error. Bagging stabilizes model predictions, whereas boosting decreases bias, giving a balanced and strong model. The many sizes, forms, and textures of microplastic particles make it challenging to produce a single model that spans all the variations. Ensemble learning adds diversity, which strengthens the capacity of the model to manage challenging situations. For edge deployment, the selected ensemble algorithms have been tuned to keep the hybrid model efficient and suitable for real-time processing on devices such as the Jetson Nano. While addressing the difficulties of real-time microplastic particle identification, ensemble learning methods increase the accuracy and robustness of the hybrid model. Bagging contributes stability, boosting contributes error correction, and stacking contributes model variety, and together they create a robust and scalable system. This overall approach increases edge device performance and system dependability. It is thus a useful tool for the evaluation of microplastics and for environmental monitoring.
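As a rough sketch of the stacking idea, the following example trains a logistic regression meta-learner on the outputs of two base models. The two input columns stand in for a detector confidence and a GBC class probability. The numeric values are illustrative placeholders, not results from this work, and all function names are hypothetical. In practice the meta-features would normally be out-of-fold predictions of the base models.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_meta_learner(meta_features, labels, lr=0.5, epochs=5000):
    """Batch gradient descent for logistic regression on base-model outputs."""
    n_features = len(meta_features[0])
    n = len(labels)
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(meta_features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def meta_predict(w, b, x):
    """Final class: 1 = microplastic, 0 = other particle."""
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0

# Each row: (detector confidence, GBC probability); values are hypothetical.
meta_features = [(0.9, 0.8), (0.9, 0.4), (0.45, 0.9),
                 (0.2, 0.1), (0.55, 0.1), (0.2, 0.5)]
labels = [1, 1, 1, 0, 0, 0]

w, b = train_meta_learner(meta_features, labels)
print([meta_predict(w, b, x) for x in meta_features])
```

The second and third rows are cases where one base model is confident and the other is not. The meta-learner learns how much weight to give each source instead of trusting either one alone.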

3.11 Machine Learning Hybrid

Algorithm

With numerous machine learning models now available, a hybrid approach that combines multiple models can be used to improve accuracy, efficiency, and performance in complex situations. Hybrid algorithms combine neural networks, decision trees, support vector machines, or ensemble techniques to make the best use of their respective capabilities. This leads to better generalization, non-linear decision boundaries, and powerful feature extraction. Microplastics can be recognized and classified better by combining a gradient boosting classifier with detection models such as YOLOv5. This approach can handle many complex datasets. Hybrid models can accommodate varied inputs and requirements, which makes them well suited to real-time systems. Application-specific modules can be built by pairing advanced detectors such as EfficientDet with interpretable classifiers such as Decision Trees. Hybrid models can therefore offer a favourable combination of speed, accuracy, and scalability.

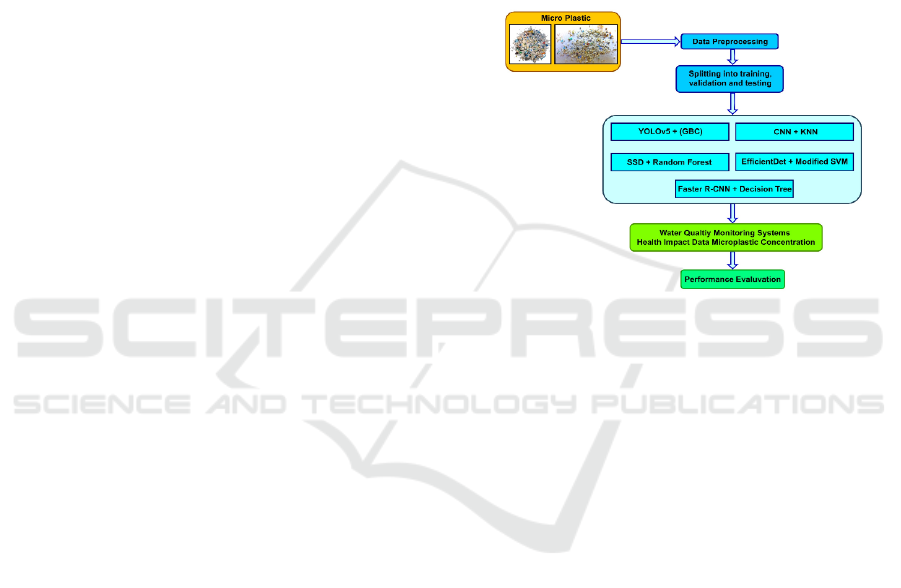

Figure 2: Microplastic Detection and Classification

Workflow Using Advanced Machine Learning Models.

Figure 2 presents a comprehensive workflow for microplastic detection and classification using current machine learning methods. Data pre-processing: the microplastic samples collected in the initial experiment are raw, which means that they require cleaning, resizing, and normalization to form a unified dataset for analysis. This process ensures that characteristics such as particle size, shape, and colour are correctly represented in the dataset.

The dataset is split into training, validation, and testing sets after preprocessing. These subsets are used for model training, performance validation, and accuracy evaluation on unseen data, respectively. The pipeline evaluates multiple hybrid models: YOLOv5 + GBC, SSD + RF, EfficientDet + Modified SVM, CNN + KNN, and Faster R-CNN + Decision Tree. Each combination pairs the efficient feature extraction of an object detection model with the accurate object classification of a conventional classifier.
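A minimal sketch of the three-way split follows. The 70/15/15 ratio, the fixed seed, and the file names are assumptions chosen for illustration, since the split proportions are not stated here.

```python
import random

def split_dataset(items, train=0.70, val=0.15, seed=42):
    """Shuffle once, then cut into train / validation / test partitions."""
    items = list(items)
    random.Random(seed).shuffle(items)   # seeded for a reproducible split
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])

# Hypothetical image identifiers standing in for preprocessed samples.
samples = [f"img_{i:03d}.png" for i in range(100)]
train_set, val_set, test_set = split_dataset(samples)
print(len(train_set), len(val_set), len(test_set))
```

Shuffling before cutting keeps each partition representative, and the test partition is held back until the final performance estimation step.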

The models are used to study microplastic

concentration in water, and support water quality


monitoring systems. The outputs provide information on microplastic types, concentrations, and potential health impacts. The results from the different algorithms are then collected to support a robust meta-analysis of microplastic detection.

Finally, the workflow ends with performance

estimation, comparing the accuracy, speed, and

resource efficiency of all models to choose the best

model for a specific application. The framework addresses a global environmental challenge by improving microplastic detection in water resources and by supporting decision-making for environmental management and public health policy.

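3.12 YOLOv5 + Gradient Boosting

Classifier (GBC)

YOLOv5 + GBC combines the fast, single-stage object detection of YOLOv5 with a GBC that enhances classification performance. YOLOv5 predicts each bounding box with four values: x, y, w, and h.

Confidence Score: $C = P_o \times \max(P_c)$ (17)

CIoU Loss: $\mathrm{CIoU} = \mathrm{IoU} - \rho^2 / c^2 - \alpha v$, with $\alpha = v / ((1 - \mathrm{IoU}) + v)$ (18)

GBC Prediction: $F(x) = F_0 + \sum_{m} \gamma_m h_m(x)$ (19)

Here $P_o$ is the objectness probability, $P_c$ the class probabilities, $\rho$ the distance between the centres of the predicted and ground-truth boxes, $c$ the diagonal of the smallest box enclosing both, and $v$ the aspect-ratio consistency term.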
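Equations (17) and (18) can be evaluated directly. The sketch below uses the standard CIoU definition, in which the aspect-ratio term v is weighted by α = v / ((1 − IoU) + v). Boxes are given as (cx, cy, w, h). The function names and the sample boxes are hypothetical and serve only as an illustration.

```python
import math

def to_corners(box):
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2

def ciou(pred, gt):
    """Complete IoU between a predicted and a ground-truth box."""
    px1, py1, px2, py2 = to_corners(pred)
    gx1, gy1, gx2, gy2 = to_corners(gt)
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    iou = inter / union
    # Squared centre distance and squared diagonal of the enclosing box.
    rho2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    c2 = ((max(px2, gx2) - min(px1, gx1)) ** 2
          + (max(py2, gy2) - min(py1, gy1)) ** 2)
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(gt[2] / gt[3])
                              - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0
    return iou - rho2 / c2 - alpha * v

def confidence(p_obj, class_probs):
    """Equation (17): objectness times the best class probability."""
    return p_obj * max(class_probs)

print(ciou((0, 0, 2, 2), (1, 0, 2, 2)))
print(confidence(0.9, [0.2, 0.7, 0.1]))
```

The training loss is then 1 − CIoU, so a perfect match gives zero loss, while centre offset and aspect-ratio mismatch are penalized even when the overlap is unchanged.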

The hybrid YOLOv5 + GBC algorithm aims to combine the advantages of deep learning and classical machine learning. YOLOv5, one of the most popular object detection models, detects objects with high accuracy in real time and can also run on edge devices such as the Jetson Nano. YOLOv5 is a single-stage detector that predicts bounding box coordinates and classes in a single pass. However, deep learning models can perform poorly on fine-grained feature analysis, as in the case of microplastics with minor texture, colour, or shape differences.

To overcome this limitation, a Gradient Boosting Classifier (GBC) is used to enhance the output of YOLOv5. GBC is a boosting ensemble learning technique that builds a sequence of decision trees, with each new tree aiming to correct the error of the previous one. The additional features allow finer discrimination and are used to refine the bounding box predictions from YOLOv5 for better classification accuracy.
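The additive form F(x) = F₀ + Σ γ_m h_m(x) can be illustrated with a very small gradient boosting loop. This sketch makes several simplifying assumptions: it uses squared-error loss (so each tree fits the residuals), one scalar feature, depth-one trees, and a fixed step γ. A real GBC uses a classification loss and many features. All names and data are hypothetical.

```python
def fit_residual_stump(xs, residuals):
    """Depth-one regression tree: best threshold by squared error."""
    best = None
    for thr in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x < thr]
        right = [r for x, r in zip(xs, residuals) if x >= thr]
        if not left:
            continue
        lmean = sum(left) / len(left)
        rmean = sum(right) / len(right)
        sse = (sum((r - lmean) ** 2 for r in left)
               + sum((r - rmean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, thr, lmean, rmean)
    _, thr, lmean, rmean = best
    return lambda x: lmean if x < thr else rmean

def gradient_boost(xs, ys, n_trees=20, gamma=0.5):
    """Return F(x) = F0 + sum(gamma * h_m(x)) fitted to (xs, ys)."""
    f0 = sum(ys) / len(ys)            # initial constant prediction F0
    trees = []
    preds = [f0] * len(xs)
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]
        h = fit_residual_stump(xs, residuals)   # each tree corrects the last
        trees.append(h)
        preds = [p + gamma * h(x) for p, x in zip(preds, xs)]
    return lambda x: f0 + sum(gamma * h(x) for h in trees)

def mse(model, xs, ys):
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [1, 2, 3, 4, 5, 6]            # hypothetical scalar feature
ys = [0, 0, 1, 1, 0, 0]            # 1 = microplastic, 0 = other
model = gradient_boost(xs, ys)
print(mse(model, xs, ys))
```

Each added tree reduces the remaining training error, which is the sense in which every new decision tree corrects the error of the former one.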

3.13 SSD (Single Shot Multibox

Detector) + Random Forest

The SSD + Random Forest model combines the fast single-shot detection of SSD with the ensemble classification of a Random Forest. In a single forward pass over an image, SSD finds the locations of objects, and the Random Forest then classifies them using the features extracted from each detection.

Bounding Box Loss (Smooth L1 Loss): $L_{bbox} = \sum_i \mathrm{SmoothL1}(b_i - \hat{b}_i)$ (20)

Random Forest Prediction: $P_{RF} = \frac{1}{N} \sum_{i=1}^{N} h_i(x)$ (21)
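Equations (20) and (21) can be sketched as follows. The Smooth L1 form with a transition point β = 1 is the commonly used definition and is assumed here. The three "trees" are hypothetical hand-written rules standing in for trained decision trees, and the feature names are invented for the example.

```python
def smooth_l1(diff, beta=1.0):
    """Quadratic near zero, linear for large errors."""
    d = abs(diff)
    return 0.5 * d * d / beta if d < beta else d - 0.5 * beta

def bbox_loss(pred, target):
    """Equation (20): sum of Smooth L1 over the box coordinates."""
    return sum(smooth_l1(p - t) for p, t in zip(pred, target))

def forest_predict(trees, x):
    """Equation (21): average of the individual tree outputs."""
    return sum(tree(x) for tree in trees) / len(trees)

# Hypothetical stand-ins for trained trees; each returns 1.0 for "microplastic".
trees = [
    lambda f: 1.0 if f["area"] < 50 else 0.0,
    lambda f: 1.0 if f["circularity"] > 0.6 else 0.0,
    lambda f: 1.0 if f["mean_intensity"] > 100 else 0.0,
]
features = {"area": 30, "circularity": 0.8, "mean_intensity": 90}

print(bbox_loss((0.0, 0.0, 2.0, 2.0), (0.5, 0.0, 4.0, 2.0)))
print(forest_predict(trees, features))
```

The averaged output can be read as the fraction of trees voting for the class, so a threshold of 0.5 corresponds to majority voting.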

The SSD + Random Forest hybrid model uses SSD for fast, single-shot detection and then a Random Forest to classify the predicted bounding boxes. The SSD model predicts bounding boxes and class labels in a single pass, making it efficient for real-time applications. By processing multi-scale feature maps, it is capable of detecting even small microplastic objects.

SSD's initial predictions leave room for false positives, as microplastics vary in contour and texture. The random forest classifier refines these predictions using additional extracted features. As an ensemble model, the random forest aggregates the predictions of many decision trees to classify each detection.

3.14 EfficientDet + Support Vector

Machine (SVM)

EfficientDet uses a compound scaling method and an EfficientNet backbone with a feature pyramid network for powerful object detection. The SVM classifier then finds the optimal hyperplane for separating the detections into classes.

IoU Loss: $\mathrm{IoU} = \dfrac{\text{Area of Overlap}}{\text{Area of Union}}$ (22)

SVM Decision Function: $f(x) = w \cdot x + b$ (23)
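A short sketch of Equations (22) and (23) follows. Boxes are given as (x1, y1, x2, y2) corners. The weight vector, bias, and feature vector are hypothetical values, since a trained SVM would supply them.

```python
def iou_xyxy(a, b):
    """Equation (22): area of overlap divided by area of union."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

def svm_decision(w, b, x):
    """Equation (23): signed score of x relative to the hyperplane."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def svm_classify(w, b, x):
    """Positive side of the hyperplane is class +1, otherwise -1."""
    return 1 if svm_decision(w, b, x) >= 0 else -1

print(iou_xyxy((0, 0, 2, 2), (1, 1, 3, 3)))
print(svm_decision([2.0, -1.0], 0.5, [1.0, 1.0]))
```

The magnitude of the decision value reflects how far a sample lies from the separating hyperplane, which is why samples close to the margin are the uncertain ones.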

The EfficientDet + SVM hybrid model is designed for high accuracy and scalability. EfficientDet is an object detection model with state-of-the-art results that uses an EfficientNet backbone and a feature pyramid network (FPN). This allows it to extract rich features at various scales,


which is valuable for detecting small and intricate objects such as microplastics.

After EfficientDet, an SVM is used to further improve classification quality. The support vector machine (SVM) is a supervised learning model that finds the hyperplane that best separates two classes in feature space. Because it works by maximizing the margin between the classes, it is most effective when that margin is well defined.

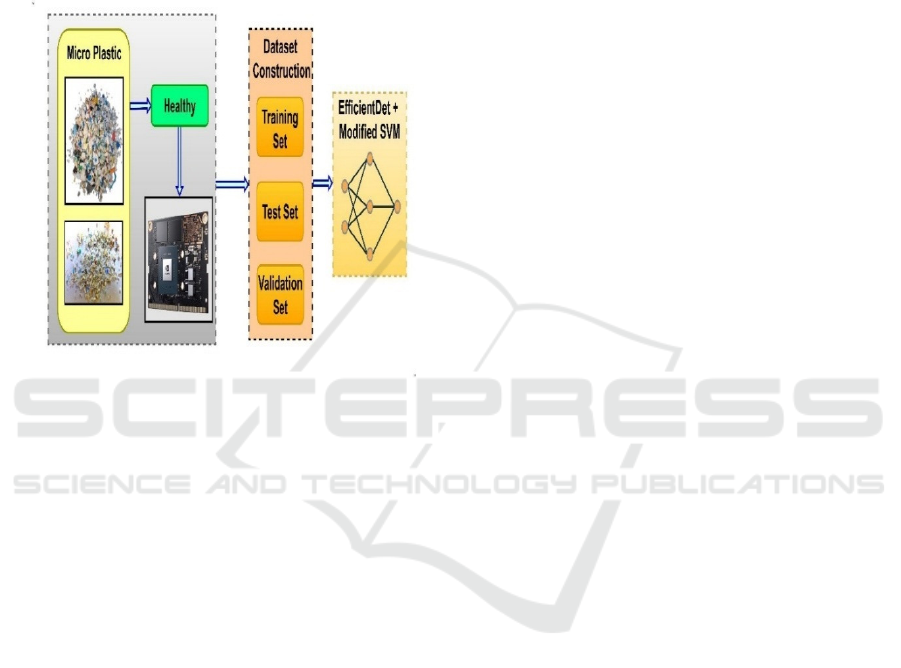

Figure 3: Microplastic Classification Using EfficientDet

and Modified SVM Framework.

The illustration depicts an improved method for detecting and classifying microplastic particles based on EfficientDet and a modified SVM model. The workflow begins with the collection of microplastic samples, illustrated with particles of various sizes and colours. These samples are then fed into imaging or preprocessing modules that form part of a high-performance computational unit (for example, an NVIDIA-based system).

After preprocessing comes dataset construction, where the data is divided into training, testing, and validation sets. Such a well-organized, structured dataset is a prerequisite for training the machine learning model as well as for evaluating its performance. As a feature extractor, the model uses EfficientDet, which was chosen for its efficiency and its strong performance in detecting objects of various sizes, such as microplastics. The extracted features are processed by an optimized SVM, modified to classify particles according to the desired classes, which can be size, shape, polymer, etc. Figure 3 shows the microplastic classification framework using EfficientDet and the modified SVM.

Combining the real-time detection capability of EfficientDet with the strong classification performance of SVM, this hybrid system suits applications in environmental monitoring and contamination assessment. It is a flexible, scalable, and resource-efficient method that can be used in the field as well as for laboratory analysis of microplastics in environmental samples.

3.15 CNN (Convolutional Neural

Network) + K-Nearest Neighbors

(KNN)

The CNN + KNN hybrid model uses a CNN for deep feature extraction and KNN as an instance-based classifier. The CNN extracts high-level features, and KNN then classifies the samples based on their neighbourhood in feature space.

Convolution Operation: $h(x) = (x * w) + b$ (24)

KNN Distance: $d(x, y) = \sqrt{\sum_i (x_i - y_i)^2}$ (25)
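The KNN stage can be sketched with the Euclidean distance of Equation (25) and a majority vote among the k nearest training samples. The two-dimensional feature vectors and the class labels below are hypothetical placeholders for CNN embeddings and particle classes.

```python
import math
from collections import Counter

def euclidean(x, y):
    """Equation (25): straight-line distance in feature space."""
    return math.sqrt(sum((xi - yi) ** 2 for xi, yi in zip(x, y)))

def knn_classify(train, query, k=3):
    """Majority label among the k training samples closest to `query`."""
    nearest = sorted(train, key=lambda item: euclidean(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# (feature vector, label) pairs; values are illustrative only.
train = [
    ((0.10, 0.20), "fiber"), ((0.20, 0.10), "fiber"), ((0.15, 0.15), "fiber"),
    ((0.90, 0.80), "fragment"), ((0.80, 0.90), "fragment"),
    ((0.85, 0.85), "fragment"),
]

print(knn_classify(train, (0.2, 0.2)))
print(knn_classify(train, (0.8, 0.8)))
```

KNN has no training phase of its own, so its cost falls on inference, where every query is compared with the stored samples. This matters when choosing k and the size of the reference set for an edge device.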

The CNN + KNN hybrid model combines a deep learning feature extractor with a non-parametric, instance-based learning model. The CNN uses its hierarchical pattern recognition capabilities to identify and extract abstract, high-level features from the input images (e.g. edges, textures, spatial arrangement) that are relevant for differentiating microplastics from surrounding particles.

These features are then used by the KNN classifier to classify the detected particles after feature extraction.

3.16 Faster R-CNN + Decision Tree

Two-stage detection models, such as Faster R-CNN, rely on a Region Proposal Network (RPN) to generate region proposals, followed by classification using a CNN. After the features have been extracted, a Decision Tree performs the final classification using them.

RPN Prediction: $P_{object} = \sigma(w \cdot x + b)$ (26)

Gini Impurity: $I = 1 - \sum_i p_i^2$ (27)
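Both expressions are simple to compute. The sketch below evaluates the sigmoid objectness score of Equation (26) and the Gini impurity of Equation (27), which a decision tree uses to choose its splits. The weights, inputs, and labels are hypothetical.

```python
import math
from collections import Counter

def objectness(w, x, b):
    """Equation (26): sigmoid of a linear score, read as P(object)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def gini(labels):
    """Equation (27): 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def split_impurity(left, right):
    """Size-weighted impurity of a candidate split."""
    n = len(left) + len(right)
    return len(left) / n * gini(left) + len(right) / n * gini(right)

labels = ["fiber", "fiber", "fragment", "fragment"]
print(gini(labels))                                 # mixed node
print(split_impurity(labels[:2], labels[2:]))       # perfectly separating split
print(objectness([0.0, 0.0], [1.0, 2.0], 0.0))
```

A decision tree selects, at each node, the split with the lowest weighted impurity. A pure node has impurity 0, and a two-class node split evenly has impurity 0.5.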

The preceding subsections provide the theoretical background and equations of five hybrid algorithms frequently used in microplastic detection tasks. Each model has its own strengths, and its suitability in terms of detection speed, accuracy, and computational efficiency depends on the use


case. The Faster R-CNN + Decision Tree hybrid model integrates an advanced two-stage object detection approach and a rule-based classifier. Faster R-CNN generates region proposals before running a CNN to classify the regions. Two-stage detectors of this kind achieve high detection accuracy, although YOLO networks are more efficient when multiple objects are present in complex scenes. After retrieving the objects in the Faster R-CNN detection stage, we apply one more classifier, a Decision Tree, to additional extracted features in order to further refine precision. Decision Trees are interpretable: they split the data according to learned rules and thus provide rule-based classification.

4 HARDWARE RESULTS AND

DISCUSSION

4.1 Real-Time Data Logging and

Visualization Interface

A comprehensive data logging and visualization interface is developed to monitor microplastic pollution levels in real-time. Key features include:

Data Logging: Detected microplastic data (size, count, and type) is logged into a database with timestamped entries, allowing for historical analysis and trend identification.

Interactive Dashboard: A user-friendly dashboard displays real-time statistics such as the number of detected particles, particle size distribution, and concentration levels. The interface provides visual alerts when pollution levels exceed predefined thresholds.

Visualization Tools: The system includes various visualization tools like time-series graphs, histograms, and heatmaps to illustrate microplastic trends over time. The visual insights help users understand the extent of pollution and identify potential sources.
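The logging and alerting behaviour described above could look roughly like the following sketch. It uses SQLite from the Python standard library. The table name, column names, and threshold value are assumptions for illustration, as the actual database and schema are not specified here.

```python
import sqlite3
from datetime import datetime, timezone

def open_log(path=":memory:"):
    """Open (or create) the detection log; schema is hypothetical."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS detections ("
        "ts TEXT, particle_type TEXT, size_um REAL, count INTEGER)"
    )
    return conn

def log_detection(conn, particle_type, size_um, count, ts=None):
    """Store one timestamped detection record."""
    ts = ts or datetime.now(timezone.utc).isoformat()
    conn.execute(
        "INSERT INTO detections VALUES (?, ?, ?, ?)",
        (ts, particle_type, size_um, count),
    )
    conn.commit()

def total_count(conn):
    """Total number of particles logged so far."""
    row = conn.execute(
        "SELECT COALESCE(SUM(count), 0) FROM detections"
    ).fetchone()
    return row[0]

def exceeds_threshold(conn, threshold):
    """True when the logged particle count is above the alert threshold."""
    return total_count(conn) > threshold

conn = open_log()
log_detection(conn, "fiber", 120.0, 5)
log_detection(conn, "fragment", 80.5, 7)
print(total_count(conn), exceeds_threshold(conn, 10))
```

Keeping the timestamp in every row is what makes the time-series graphs and historical trend analysis possible. A dashboard would query this table periodically and raise the visual alert when the threshold check returns true.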

4.2 Performance Evaluation and

Validation

The approach is tested extensively against manual, microscope-based identification. The evaluation metrics are as follows. Accuracy is the proportion of particles that are correctly identified among all particles examined. Precision compares the true positive detections with all particles that the model flagged as microplastics, and so measures the dependability of the model. Recall (sensitivity) is the ratio of true positives to the actual number of microplastics. The F1 score, the harmonic mean of precision and recall, offers a balanced evaluation of model performance. Manual microscopy counts are matched with the AI detection data to confirm the dependability and accuracy of the model, and the automated system is compared with conventional approaches to assess its time efficiency and consistency.

Analyzing deep learning classifiers for microplastic detection provides insight into their efficiency and applicability. Among the models, CNN-GRU-LSTM outperforms the others, with an accuracy of 98.30%, a precision of 97.5%, and an F1-score of 96.6%. The higher performance comes from the CNN's spatial feature extraction, the GRU's handling of sequential dependencies, and the LSTM's capture of long-term trends. Standalone LSTM and GRU models perform reasonably well, although they have lower recall and hence more false negatives. In hybrid models such as CNN-LSTM and CNN-BI-LSTM, the CNN improves feature extraction and thereby classification accuracy. CNN-BI-LSTM is bidirectional, which improves recall by including forward and backward dependencies. CNN-GRU-LSTM, however, strikes a better balance between computational economy and accuracy than any other model, which makes it well suited to real-time microplastic detection. For public health assessment and environmental monitoring, high recall is important because it lowers false negatives. This work shows the importance of integrating spatial and temporal analysis in order to raise classification accuracy. This method allows scalable and efficient detection of microplastic pollution in water supplies. Table 1 shows the microplastic performance evaluation.

Table 1: Micro Plastic Performance Evaluation Table.

| Classifier | Accuracy (%) | Precision (%) |
|---|---|---|
| LSTM | 90.47 | 95.80 |
| GRU | 93.85 | 94.80 |
| CNN-LSTM | 96.15 | 93.30 |
| CNN-BI-LSTM | 96.46 | 94.30 |
| CNN-GRU-LSTM | 98.30 | 97.50 |
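For reference, the four evaluation metrics used in this section can be computed from confusion-matrix counts as in the sketch below. The counts in the example are hypothetical and are not the data behind Table 1.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical counts: the model's detections compared with microscope labels.
metrics = classification_metrics(tp=90, fp=10, fn=10, tn=90)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Here a false negative is a microplastic particle that the microscope reference found but the model missed, which is why recall is the metric most closely tied to under-reporting of pollution.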


4.3 Computational Complexity

Assessment

Deep learning classifiers for real-time microplastic detection have to be computationally efficient. The trade-off between model complexity and performance can be assessed by measuring model execution time and memory consumption. Table 2 shows the microplastic computational complexity assessment.

CNN-GRU-LSTM was the fastest classifier, executing in 4.12 seconds while using just 463 MB of RAM. Because a GRU has fewer parameters than an LSTM, it allows quicker training and inference without loss of accuracy, and this difference accounts for the efficiency. The CNN lowers the input size of the sequential model, and with it the memory use, by pre-extracting spatial features. Because of its complicated gating mechanisms, which need more computations per time step than the other algorithms, the standalone LSTM takes 5.23 seconds and uses 520 MB of memory. With its reduced architecture, the GRU gains a small improvement in memory usage (498 MB) and execution time (5.12 seconds). By extracting spatial information prior to sequence processing, the CNN-LSTM model makes better use of resources, bringing memory use to 493 MB and execution time to 4.96 seconds. The CNN-BI-LSTM model enhances accuracy through bidirectional processing, which adds computation per step. It executes in 4.84 seconds using 478 MB of memory.

Because CNN-GRU-LSTM balances performance and computational economy, it is the most suitable method for real-time microplastic monitoring. It reduces memory use and execution time while maintaining accuracy, which also qualifies it for low-resource edge computing devices.
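Execution time and memory use of the kind reported in this section can be measured with a small profiling helper. The sketch below uses only the Python standard library and is an illustration, not the measurement procedure used for Table 2. Note that tracemalloc tracks Python-level allocations only, so memory held by a GPU or by native inference runtimes would need a different tool. The workload function is a hypothetical stand-in for model inference.

```python
import time
import tracemalloc

def profile(fn, *args):
    """Run fn(*args); return (result, seconds elapsed, peak memory in MB)."""
    tracemalloc.start()
    start = time.perf_counter()
    result = fn(*args)
    elapsed = time.perf_counter() - start
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return result, elapsed, peak_bytes / 1e6

def dummy_inference(n):
    """Placeholder workload standing in for a model forward pass."""
    return sum(i * i for i in range(n))

result, seconds, peak_mb = profile(dummy_inference, 1000)
print(f"time: {seconds:.6f} s, peak memory: {peak_mb:.3f} MB")
```

Averaging over repeated runs and discarding the first (warm-up) run generally gives more stable timings than a single measurement.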

Table 2: Micro Plastic Computational Complexity

Assessment

| Classifier | Execution Time (s) | Memory Occupancy (MB) |
|---|---|---|
| LSTM | 5.23 | 520 |
| GRU | 5.12 | 498 |
| CNN-LSTM | 4.96 | 493 |
| CNN-BI-LSTM | 4.84 | 478 |
| CNN-GRU-LSTM | 4.12 | 463 |

5 CONCLUSIONS AND FUTURE

DIRECTIONS

Edge artificial intelligence can address environmental monitoring challenges such as real-time microplastic detection. The hybrid approach balances computational economy, speed, and accuracy by combining a Gradient Boosting Classifier with object detection based on YOLOv5. Ensemble learning increases the dependability of microplastic identification and lowers classification errors. TensorRT quantization optimizes the model for the Jetson Nano, which qualifies it for real-time field deployment. The validation results suggest that the system performs as well as manual microscopy while cutting analysis time. The actionable insights from the real-time data logging and visualization interface make it possible to monitor microplastic contamination and evaluate environmental health. The method might be developed further using Internet of Things networks for environmental uses and to detect more microplastics. This paper proposes a scalable, reasonably priced, and practical real-time microplastic detection device that supports ecosystem preservation and monitoring.

Several directions remain for future work. Chemical analysis by spectroscopy would help identify the material of the detected microplastics. The detection system could be connected to cloud platforms and to Internet of Things water-body monitoring sensors for real-time environmental assessment. The system could be extended to lakes, rivers, and seas while accounting for light and turbidity. Investigating transformer-based deep learning models could help to enhance microplastic feature extraction and classification.

REFERENCES

Sarker, Md Abdul Baset, Masudul H. Imtiaz, Thomas M.

Holsen, and Abul BM Baki. "Real-time detection of

microplastics using an ai camera." Sensors 24, no. 13

(2024): 4394.

Wang, Zi et al. “Nanoplastics in Water: Artificial Intelligence-Assisted

4D Physicochemical Characterization

and Rapid in Situ Detection.” Environmental Science

& Technology, May 6, 2024.

Li, Yuxing et al. “High-Throughput Microplastic

Assessment Using Polarization Holographic Imaging.”

Dental Science Reports, January 29, 2024.

Dal, Ekrem Kürşad, and Co-Author. “Design and

Implementation of a Microplastic Detection and

Classification System Supported by Deep Learning

Algorithm.” Journal Article, January 25, 2024.


Singh, Amardeep et al. “Implementing Edge-Based Object

Detection for Microplastic Debris.” arXiv.org, July 30,

2023.

Han, Jiyun Agnes et al. “Real-Time Morphological

Detection of Label-Free Submicron-Sized Plastics

Using Flow-Channeled Differential Interference

Contrast Microscopy.” Journal of Hazardous

Materials, August 1, 2023.

Han, Jiyun Agnes et al. “Real-Time Detection of Label-

Free Submicron-Sized Plastics Using Flow-Channeled

Differential Interference Contrast Microscopy.”

Journal Article, March 7, 2023.

Wang, Xinjie et al. “Differentiating Microplastics from

Natural Particles in Aqueous Suspensions Using Flow

Cytometry with Machine Learning.” Environmental

Science & Technology, May 28, 2024.

Ye, Haoxin et al. “Rapid Detection of Micro/Nanoplastics

Via Integration of Luminescent Metal Phenolic

Networks Labeling and Quantitative Fluorescence

Imaging in a Portable Device.” Journal Article,

September 29, 2023.

Valentino, Maria Rita et al. “On the Use of Machine

Learning for Microplastic Identification from

Holographic Phase-Contrast Signatures.” Journal

Article, August 9, 2023.

Zhang, Shouxin et al. “Artificial Intelligence-Based

Microfluidic Platform for Detecting Contaminants in

Water: A Review.” Sensors, July 4, 2024.

Ajakwe, S. O. et al. “CIS-WQMS: Connected Intelligence

Smart Water Quality Monitoring Scheme.” Internet of

Things, May 1, 2023.

Khokhar, F. A. et al. “Harnessing Deep Learning for

Faster Water Quality Assessment: Identifying

Bacterial Contaminants in Real Time.” The Visual

Computer, April 26, 2024.

Khurshid, Aleefia A. et al. “IoT-Enabled Embedded

Virtual Sensor for Energy-Efficient Scalable Online

Water Quality Monitoring System.” Journal of

Electrical Systems, April 4, 2024.

Charalampides, Marios et al. “Advanced Edge to Cloud

System Architecture for Smart Real-Time Water

Quality Monitoring Using Cutting-Edge Portable IoT

Biosensor Devices.” Journal Article, July 25, 2023.

Arepalli, Peda Gopi, and Co-Author. “Water

Contamination Analysis in IoT Enabled Aquaculture

Using Deep Learning-Based AODEGRU.” Ecological

Informatics, March 1, 2024.

Waghwani, Bhavishya B. et al. “In Vitro Detection of

Water Contaminants Using Microfluidic Chip and

Luminescence Sensing Platform.” Microfluidics and

Nanofluidics, September 1, 2020.

Ajakwe, S. O. et al. “Connected Intelligence for Smart

Water Quality Monitoring System in IIoT.”

Proceedings Article, October 19, 2022.

Devi, Iswarya R., and Co-Author. “Real-Time Water

Quality Monitoring Using Tiny ML.” Social Science

Research Network, January 1, 2023.
