Facial Recognition and Feature Mapping with Machine Learning

G. Prathibha Priyadarshini, G. Utejitha, D. Tejaswini, K. Swathi and A. Renuka

Department of CSE, Ravindra College of Engineering for Women, Kurnool, Andhra Pradesh, India

Keywords: Facial Recognition, Feature Mapping, Deep Learning, Convolutional Neural Networks, Biometric

Authentication.

Abstract: Facial recognition and machine learning-based feature mapping have become key security, authentication,

and human-computer interaction technologies. This research discusses the application of deep learning-based

facial recognition systems that map and analyze facial features to perform robust identification and

verification. The system utilizes convolutional neural networks (CNNs) to extract and classify features for

enhanced accuracy and the ability to counter light, pose, and occlusion variations. Feature mapping methods

like key point detection and embedding generation facilitate effective face matching and identification. The

approach improves security, reduces and embedding generation facilitate effective face matching and

identification. The approach improves security, reduces false positives, and offers a scalable solution for real-

time surveillance, biometric, and personalized user experience applications. Experimental results prove the

model's effectiveness in delivering high recognition accuracy with optimized computational efficiency.

1 INTRODUCTION

Facial recognition and feature mapping are now

central to contemporary security, authentication, and

human-computer interaction systems. Facial

recognition systems have greatly in terms of real-time

capability, robustness, and accuracy due to advances

in machine learning and deep learning. The older

techniques were based on handcrafted features and

statistical models, whereas new techniques utilize

convolutional neural networks (CNNs) and deep

learning methods for automatic feature extraction and

classification. Facial recognition is the detection,

analysis, and identification of a person's face by

extracting distinguishing facial features like positions

of eyes, nose, and mouth. Feature mapping refines

this by generating organized representations of facial

features to facilitate efficient identification in varying

lighting, poses, and occlusions.

This study aims at creating a facial recognition

system using machine learning that involves feature

mapping methods for higher accuracy and scalability.

The system employs deep learning models to learn

meaningful facial embeddings with high precision in

verifying identities. The proposed method has usage

in multiple applications such as surveillance,

biometric authentication, access control, and

personalized user interfaces.

The rest of the paper explains methodology, dataset,

model structure, performance measurement, and

possible uses of the system with its advantages and

disadvantages.

2 RESEARCH METHODOLOGY

2.1 Research Area

Personalized face feature mapping and facial

recognition systems completely rely on machine

learning, especially the most sophisticated deep

learning techniques, into heightening speed and

accuracy. The entire process is complete with data

collection, preprocessing, model selection, training,

testing, and deployment.

Data Collection.

• It collects all the dataset of face images from

an archive of public databases as well as

captures every single day.

• Robustness added over these images is

created with lights, poses, expressions and

occlusions.

478

Priyadarshini, G., Utejitha, G., Tejaswini, D., Swathi, K. and Renuka, A.

Facial Recognition and Feature Mapping with Machine Learning.

DOI: 10.5220/0013867900004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 1, pages

478-484

ISBN: 978-989-758-777-1

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

Data Preprocessing.

• asl's methodology offers a solution for face

detection by two algorithms: MTCNNs

(multi-task cascaded deep convolutional

networks) and haar cascades.

• Histogram equalization and affine

transformations are used to align and

normalize the faces present into maximum

uniformity as in features.

• Model generalizes using some different

methods like n-data augmentation based on

rotation, flipping, or brightness adjustment.

Feature Extraction & Mapping.

• Face embedding extraction through

Convolutional Neural networks (i.e.,

VGGFace, FaceNet, or ResNet)

• Dimensionality reduction provides much

closer approximation to given features as

PCA or t-SNE does.

• Assign facial key points with geometric

relationships of features improve

recognition.

Model Training & Optimization.

• First, the face features are extracted from a

user and then they are going to be matched

against the ML classifiers such as SVM, k-

NN, or Softmax classifiers for deep learning

models.

• Adam and RMSprop, for example, with

batch normalization will be utilized to

facilitate convergence.

• Hyper-parameter tuning by use of dropout

and transfer-learning to improve the

efficiency of the mode.

Performance Evaluation.

• The performance of the model measures by

standard values like accuracy, precision,

recall, F1-score, and confusion matrix.

• Strength of the real-world test concerning

variations in pose, illumination, and

occlusion.

• As an enrichment comparison with current

models in the facial recognition field.

System Deployment.

• Real-world deployment of trained models

today happens through edge computing,

cloud computing, or now embedded models

in systems.

• Also, profusely studies are underway on

integrating these into security systems,

mobile apps, or biometric verification.

2.2 Research Area

This research involves the related fields of computer

vision, machine learning, artificial intelligence, and

biometrics with the following common objectives:

• Deep Learning in Facial Recognition:

Application of the method to the actual question

of face recognition was tackled using a very deep

architecture of convolutional neural networks.

• Feature Mapping Techniques: The methods of

feature mapping include geometric and

embedded methods that carry the power to

achieve enhanced accuracy in the domain of

facial recognition.

• Biometric Authentication: Innovations to

provide secure and reliable verification based on

face biometrics.

• Real-time Image Processing: Lowering the

algorithm's overhead for deployment in real-time

applications such as surveillance, security, and

personalized AI systems.

• Edge Computing and IoT Integration:

Application of facial recognition models in

embedded platforms for smart surveillance and

authentication solutions.

3 LITERATURE REVIEW

3.1 Parkhi, O: M., Vedaldi, A., &

Zisserman, A - (2015)

Title: Deep Face Recognition.

Abstract: Here in, the research is interested in the

process of deep learning for face recognition through

a very strong deep convolutional neural network that

makes use of a very large dataset for the training

process. In fact, the model very quickly learns high-

dimensional feature embeddings for discriminating

between individuals with great accuracy from

millions of face images. So, the paper demonstrates

the importance of feature representation and shows

the significance of the model over any previous

classical methods. The authors also mention different

loss functions and optimization methods for

calculating the performance of the face verification.

The effectiveness of deep learning for facial

Facial Recognition and Feature Mapping with Machine Learning

479

recognition is validated by high benchmark datasets

equivalent like LFW that show very high accuracy for

recognition experiments.

3.2 Schroff, F., Kalenichenko, D., &

Philbin, J: (2015)

Title: FaceNet: A Unified Embedding for Face

Recognition and Clustering.

Abstract: Nowadays, FaceNet introduces a pure end-

to-end deep learning model which maps a given

person's face images directly to a compact Euclidean

space in which distances correspond to how similar

the faces seem to be. Instead of a classifier, FaceNet

learns to project all facial images into the feature

space through a triplet loss function to ensure that

photos of the same person's face appear closer while

having maximum distance with the different ones. It

achieves very high accuracy in face verification and

clustering tasks, where it overcomes many of the

traditional methods which were feature-based and

hand-crafted. The research demonstrated a scalable

and efficient face recognition of real-world

applications with deep neural networks.

3.3 Taigman, Y., Yang, M., Ranzato,

M., & Wolf, L: (2014)

Title: DeepFace: Closing the Gap to Human-Level

Performance in Face Verification.

Abstract: DeepFace introduces a deep learning-

driven facial recognition model that comes close to

human-level accuracy. The authors use a deep neural

network that is trained on a massive set of labeled face

images to enhance facial feature extraction and

representation. Using 3D face alignment methods and

deep network structures, the system greatly enhances

the robustness of recognition in different poses and

illumination conditions. The model exceeds 97%

accuracy on benchmark data and poses a new

standard for deep learning-based biometrics. The

work highlights the efficacy of deep learning in

closing the gap between the facial recognition

capability of machines and humans.

3.4 He, K., Zhang, X., Ren, S., & Sun,

J: (2016)

Title: Deep Residual Learning for Image

Recognition (ResNet).

Abstract: As the present paper does not restrict itself

to face recognition, it introduces the structure of

ResNet that is almost entirely based on a deep

learning model and scales image classification tasks.

Deep residual learning is suggested by the authors to

assist with the issue of the vanishing gradient and to

allow training deep networks at an extremely high

level. This factor of skip connection, induced by

ResNet, improves feature extraction and

generalization, thus becoming one of the most widely

preferred backbones among the latest face

recognition models. This paper encompasses various

ways in which deep residual networks improve

accuracy and efficiency on complex vision tasks in

areas such as face recognition and feature mapping.

3.5 Cao, Q., Shen, L., Xie, W., Parkhi,

O: M., & Zisserman, A - (2018)

Title: VGGFace2: A Dataset for Recognizing

Faces across Age, Pose, and Illumination.

Abstract: Presented is a large dataset for facial

recognition against variation in age, pose, and

illumination. The principal idea is to strengthen deep-

learning face-recognition models by introducing

large binomial variations in pose, age, and lighting

conditions. The authors train a CNN-based face-

recognition model on the dataset in an attempt to

achieve improved generalization on real applications.

The work demonstrates the importance of having

such robust datasets in constructing efficient face-

verification systems. Further, the paper ends with

benchmark analyses and comparisons among several

CNN architectures, strengthening the need for high-

quality training data for pattern recognition.

4 EXISTING SYSTEM

Facial recognition systems have evolved throughout

the ages, utilizing classical image-processing

methods but now mostly favoring deep-learning

paradigms. The existent methods of facial recognition

deal with several aspects relating to feature

extraction, classification, and matching of images to

identify a particular individual.

4.1 Traditional Methods

The older facial recognition systems relied on

handcrafted feature-extraction techniques such as

Eigenfaces, Fisher faces, and LBP1. They aimed at

statistically analyzing the facial structure but suffered

from variations in lighting, posing, and occlusions,

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

480

leading to degraded performance of recognition

systems in terms of accuracy.

4.2 Machine Learning-Based

Approaches

The accuracy of support vector machine (SVM), K-

nearest neighbor (KNN), and principal component

analysis (PCA) models was improved because of

improvements in machine-learning technology. Yet,

these models were unable to overcome the different

limitations they possessed. They also need extensive

preprocessing to handle the intricate facial variations

present within their range of analysis.

4.3 Deep Learning-Based Systems

Current face recognition methods tend to involve

deep learning architecture (particularly convolutional

neural network) like DeepFace, FaceNet, and

VGGFace. They rely on models learned from many

features of high-dimensionality drawn directly from

the images and consequently achieve significantly

increased accuracy and insensitivity compared to

conventional models. They are expensive in terms of

very large databases, immense processing power

required during training, and hi-end computing

machines to apply in real time.

4.4 Limitations of Existing Systems

• High Computational Cost: Deep learning

programs are rampantly run on high-end

GPUs and require enormous processing

power.

• Data Privacy Concerns: Storage and

processing facial data also mean security

and privacy risks.

• Vulnerability to Spoofing: Most systems

can be spoofed with the help of images,

videos, or 3D masks posing a security threat.

• Challenges with Variations: Though AI

has advanced, issues like lighting, pose, or

occlusion remain pertinent.

5 PROPOSED SYSTEM

The proposed next-generation facial feature mapping

and recognition system enhances match accuracy,

security, and efficiency through the use of real-time

processing with state-of-the-art deep learning

methods. In contrast to older approaches, the new

system offers a deep learning-based model, such as

FaceNet or VGGFace2, directly mapping facial

features into an embedding space for further

recognition under varying conditions. A newly

introduced hybrid feature mapping technique

combines deep-learning methods with statistical

methods such as Principal Component Analysis

(PCA) for optimal performance under adverse

conditions such as low-light or occlusion and Local

Binary Patterns (LBP).

Enabling its real-time recognitions using Edge

AIs, running simple neural networks on embedded

hardware like NVIDIA Jetson Nano, or Google Coral

TPU. This tremendously saves on computation cost

and latencies, as opposed to cloud-dependent systems

which enable the facial matching procedure to be

faster and efficient. Advanced anti-spoofing

mechanisms-deep sensors, blink detection, micro-

expression analysis, and infrared imaging are

designed to make the system spoof resistance against

photo, video, or 3D mask attacks.

Data privacy is one of the major issues in

biometric systems and is addressed by the proposed

system through on-device processing to keep facial

data from being analyzed off devices. Private

biometric data should be stored as encrypted

embeddings rather than raw images. This prevents

unauthorized access through the secure hashing and

encryption of biometric data. By thus keeping private

information out of reach of unauthorized users, it

increases trust by users among other conditions for

compliance with data protection standards while

maintaining a high-security level.

5.1 Architect

This system enables a large-scale application by

connecting it to a secure cloud-based facial

recognition service. This makes it possible to have a

centralized database and remote access, along with

scalability, on encrypted communication for data

security. The proposed system, therefore, combines

deep learning, real-time processing, advanced

security measures, and privacy-preserving techniques

to greatly enhance accuracy, efficiency, and

reliability in real-world scenarios for facial

recognition.

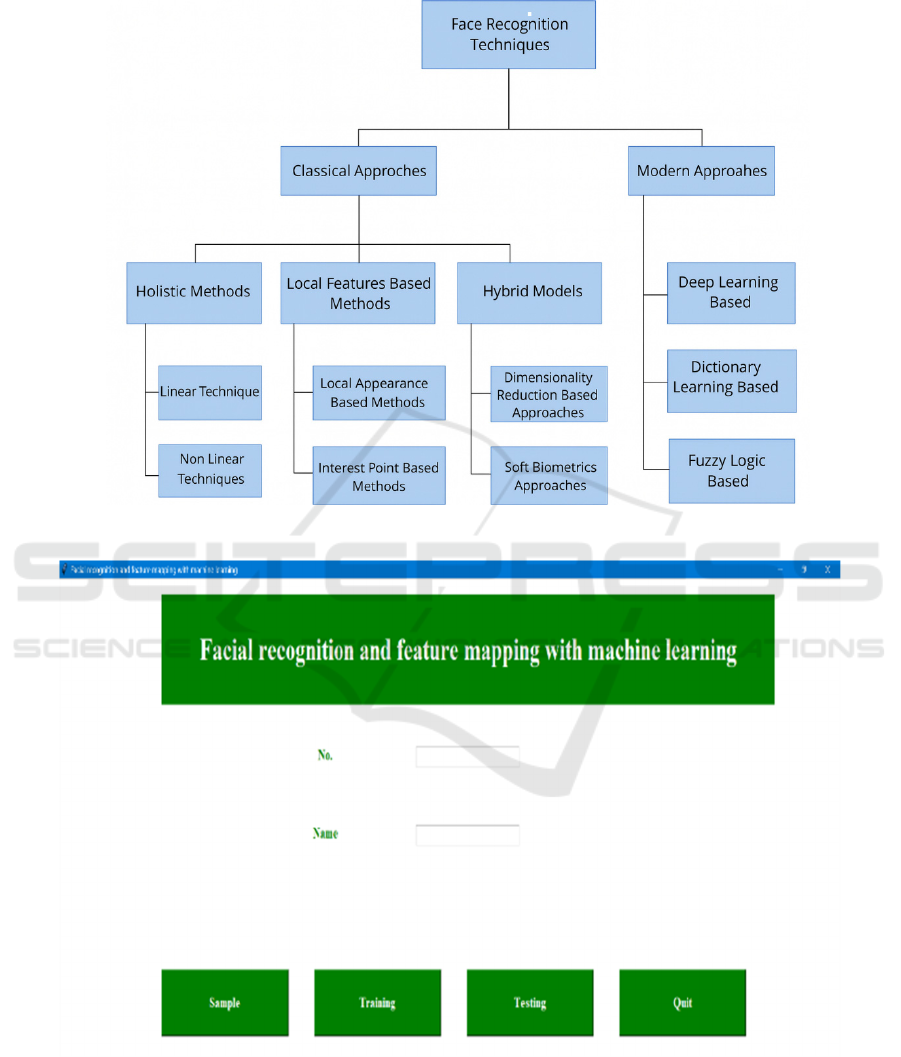

Figure 1 shows the Face Rec Taxonomy.

Figure 2 shows the Facial Recognition GUI. Figure 3.

Shows the Facial Recognition Training Console.

Facial Recognition and Feature Mapping with Machine Learning

481

6 RESULTS

Figure 1: Face Rec Taxonomy.

Figure 2: Facial Recognition Gui.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

482

Figure 3: Facial Recognition Training Console.

7 CONCLUSIONS

Facial recognition and feature mapping have certainly

evolved remarkably with all the difficulties

associated with accuracy, safety, and real-time

processing. The developed system features well

optimally deep learning models, well advanced

hybrid-feature extractions, and Edge AIs for facial

recognition at the minimal cost. Moreover, it uses

deep learning-based embedding and statistical feature

mapping techniques that improve recognition with

dynamic conditions such as low-light, occlusions, and

pose changes.

The incorporation of real-time processing through

Edge AI will cater to fast execution of functions,

along with reduced dependency on clouds for making

the system cost-effective and scalable. Also, the anti-

spoofing, depth sensing, and micro-expressions

analyses further provide the security of the system

against fraud access. Thus, this model becomes more

robust in its applications in the real world-from

surveillance security to high-end biometric

authentication.

The critical aspect of the biometric systems is

privacy and data security, which are addressed in the

proposed technique through device processing and

encryption of biometric data storage. Moreover,

assuring that the facial data is stored in no raw images

but processing securely, the system minimizes any

unauthorized access and breach risks. Additionally,

this increases trust and regulatory compliance making

the system a more reliable candidate for large-scale

roll-out.

In summary, this brings a paradigm shift in the

entire ecosystem of today in so far as facial

recognition systems are concerned. It will be

characterized by high accuracy, real-time

performance measures, strong security mechanisms,

and best-in-class privacy protection. Deep learning

and hybrid feature mapping would be a fundamental

reason for Edge AI's wide popularity in applications

such as surveillance, identity verification, and access

control as it speeds and scales the leap to secure,

intelligent, and biometrically recognized

technologies.

REFERENCES

Abate, A. F., Nappi, M., Riccio, D., & Sabatino, G. (2007).

2D and 3D face recognition: A survey. Pattern

Recognition Letters, 28(14), 1885–1906.

Ahonen, T., Hadid, A., & Pietikainen, M. (2006). Face

recognition with local binary patterns. IEEE

Transactions on Pattern Analysis and Machine

Intelligence, 28(12), 2037–2041.

Computer Vision and Pattern Recognition (CVPR), 815–

823.

Conference on Computer Vision and Pattern Recognition

(CVPR), 770–778.

Dutta, A., Chattopadhyay, A., & Chaudhuri, S. (2019).

Liveness detection in facial recognition using deep

learning. Journal of Visual Communication and Image

Representation, 60, 532–543.

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual

learning for image recognition. Proceedings of the

IEEE

Facial Recognition and Feature Mapping with Machine Learning

483

Li, H., Lin, Z., Shen, X., Brandt, J., & Hua, G. (2015). A

convolutional neural network cascade for face

detection. Proceedings of the IEEE Conference on

Computer Vision and Pattern Recognition (CVPR),

5325–5334.

Masi, I., Wu, Y., Hassner, T., & Natarajan, P. (2019). Deep

face recognition: A survey. Proceedings of the IEEE

International Conference on Automatic Face & Gesture

Recognition (FG), 1–8.

Parkhi, O. M., Vedaldi, A., & Zisserman, A. (2015). Deep

face recognition. British Machine Vision Conference

(BMVC), 1–12.

Phillips, P. J., Grother, P., Micheals, R. J., Blackburn, D.

M., Tabassi, E., & Bone, M. (2003). Face recognition

vendor test 2002: Evaluation report. NIST Technical

Report, 1–37.

Schroff, F., Kalenichenko, D., & Philbin, J. (2015).

FaceNet: A unified embedding for face recognition and

clustering. Proceedings of the IEEE Conference on

Sun, Y., Wang, X., & Tang, X. (2014). Deep learning face

representation from predicting 10,000 classes.

Proceedings of the IEEE Conference on Computer

Vision and Pattern Recognition (CVPR), 1891–1898.

Taigman, Y., Yang, M., Ranzato, M., & Wolf, L. (2014).

DeepFace: Closing the gap to human-level performance

in face verification. Proceedings of the IEEE

Conference on Computer Vision and Pattern

Recognition (CVPR), 1701–1708.

Turk, M., & Pentland, A. (1991). Eigenfaces for

recognition. Journal of Cognitive Neuroscience, 3(1),

71–86.

Viola, P., & Jones, M. (2001). Rapid object detection using

a boosted cascade of simple features. Proceedings of the

IEEE Conference on Computer Vision and Pattern

Recognition (CVPR), 511–518.

Wang, M., Deng, W. (2020). Deep face recognition: A

survey. Neurocomputing, 429, 215–244.

Zhang, K., Zhang, Z., Li, Z., & Qiao, Y. (2016). Joint face

detection and alignment using multi-task cascaded

convolutional networks. IEEE Signal Processing

Letters, 23(10), 1499–1503.

ICRDICCT‘25 2025 - INTERNATIONAL CONFERENCE ON RESEARCH AND DEVELOPMENT IN INFORMATION,

COMMUNICATION, AND COMPUTING TECHNOLOGIES

484