AI‑Enabled Voice Assistant System for Daily Task Support in Visually Impaired Individuals

Kavya Sree K.¹, Renuka S. Gound², Kavya Sree V.³, T. Vency Stephisia⁴, B. Veera Sekharreddy⁵ and Afreensalema H.⁶

¹Department of Artificial Intelligence and Machine Learning, Ballari Institute of Technology and Management, Ballari‑583104, Karnataka, India

²Department of Computer Engineering, Nutan Maharashtra Institute of Engineering & Technology, Pune-410507, Maharashtra, India

³Department of Management Studies, Nandha Engineering College, Vaikkalmedu, Erode - 638052, Tamil Nadu, India

⁴Department of Computer Science and Engineering, J.J. College of Engineering and Technology, Tiruchirappalli, Tamil Nadu, India

⁵Department of Information Technology, MLR Institute of Technology, Hyderabad‑500043, Telangana, India

⁶Department of MCA, New Prince Shri Bhavani College of Engineering and Technology, Chennai, Tamil Nadu, India

Keywords: Voice Assistant, Visual Impairment, Task Automation, Artificial Intelligence, Accessibility.

Abstract: This research introduces an AI-enabled voice assistant system tailored to enhance daily task management for visually impaired individuals. By integrating natural language processing, context-aware decision-making, and multimodal feedback, the system offers proactive support in activities such as reminders, navigation, and real-time information retrieval. Unlike traditional assistive tools, this model emphasizes autonomy and personalization by learning user routines and adapting to environmental changes. Through low-latency interaction and seamless integration with mobile and IoT devices, the assistant bridges critical accessibility gaps, promoting independence and improving quality of life. The proposed framework is designed for scalability and affordability, ensuring broad accessibility and long-term sustainability.

1 INTRODUCTION

People with visual impairment face recurring difficulties in handling ordinary tasks that many others take for granted. Advances in artificial intelligence and voice-enabled technologies now make it possible to develop more intelligent and empathetic systems that can help fill this accessibility gap. Conventional screen readers and similar aids are useful, but they lack adaptability and proactiveness, and they do not interact seamlessly across different daily-life situations. In recent years, AI-powered voice assistants have transformed the user experience by processing voice commands, understanding contextual information, and providing intelligent responses. However, most available systems are generic rather than tailored to the specific requirements of visually impaired users. The research described in this paper deals with the creation of an AI-based voice assistant system that not only executes commands but also provides smart daily task management and planning. The assistant is designed to be unobtrusive, giving control back to the user through tailored, hands-free help that supports independent living and more efficient navigation of everyday surroundings. It combines voice response, task reinforcement, ambient awareness, and adaptive response into a robust, accessible, and user-centered stand-alone application.

2 PROBLEM STATEMENT

Visually impaired persons currently have access to regular smartphones and assistive technologies, but they still need to rely on sighted people to perform daily tasks because intelligent, adaptive, and context-aware voice assistant systems are lacking. Tools currently in use depend on predefined commands and offer little customization; they do not account for a user's habitual behavior, the dynamics of the environment, or variation in speech. A multifunction AI-based voice assistant that actively helps with or automates important tasks (such as providing navigational assistance, reminding the user, contacting friends, and identifying objects and context) is therefore needed. This technology gap has not only affected the independence, self-reliance, and quality of life of people with low vision, but has also highlighted the need for an affordable smart wearable solution.

K., K. S., Gound, R. S., V., K. S., Stephisia, T. V., Sekharreddy, B. V. and H., A.

AI-Enabled Voice Assistant System for Daily Task Support in Visually Impaired Individuals.

DOI: 10.5220/0013866500004919

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Research and Development in Information, Communication, and Computing Technologies (ICRDICCT‘25 2025) - Volume 1, pages 402-408

ISBN: 978-989-758-777-1

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

3 LITERATURE SURVEY

Advances in assistive technologies in recent years have shown considerable promise for improving the quality of life of visually impaired (VI) people; however, few offer both broad functionality and individualized support. Sivakumar et al. (2021) designed VisBuddy, a wearable assistant specialized in object detection that lacks deeper integration with task-oriented voice control. Xie et al. (2025) investigated how visually impaired users interact with large multimodal models on smartphones and offered insights on accessibility, although their focus was on application-level contexts. Srinivasan and Patapati (2025) introduced WebNav, a voice-based web navigation tool that does not cover daily-life tracking functions. Work on AI-assisted navigation for visually impaired people, including studies by Shanghai Jiao Tong University and HKUST (2025), did not address how home- or schedule-based assistance would be operationalized. Commercial reviews such as Hable One (2025), Battle for Blindness (n.d.), and Blind Ambition (2025) presented practical applications of AI apps without scientific testing. Tech4Future (2025) and YourDolphin (2024) focused on future trends but did not propose complete architectures. New England Low Vision and Blindness (n.d.) listed several apps but did not compare their efficacy. Reclaim.ai (2024) likewise compiled leading AI assistants without adapting the list to accessibility needs. Earlier articles, such as Startups Magazine (2020), outlined a vision of AI for visually impaired users but did not consider state-of-the-art AI models.

In healthcare, NCBI (2025) discussed AI-enhanced assistive technologies more generally, while Vogue Business (2023) reported on a makeup application guided by voice commands. Reference sources such as Wikipedia (2025) offer only superficial, non-technical descriptions of tools like TalkBack, Be My Eyes, and Seeing AI. News organizations such as The Times (2025) and Wired (2017) shared empirical observations of smart wearables but did not examine the accuracy of the underlying AI models.

Emerging commercial solutions, such as Microsoft's Seeing AI, Be My Eyes, Google's TalkBack, OrCam MyEye, and Envision Glasses, are designed to offer more practical functionality but tend to sacrifice user personalization and affordability in pursuit of broad adoption. This lack of individuality, adaptation, and proactive learning indicates the need for a new intelligent, user-oriented AI voice assistant that can help handle daily tasks in a smart, context-aware way.

4 METHODOLOGY

In this work, we take a holistic approach to the design and implementation of an AI-based voice assistant customized to help visually impaired users manage their daily activities. The approach proceeds in stages: (i) an end-to-end requirements analysis, (ii) system design and model training, (iii) deployment, and (iv) evaluation, so that the assistant not only understands spoken commands but also learns from and adapts to the individual user. The system architecture is built around natural language processing, context-aware decision-making, and adaptive machine learning models, enabling a seamless, personalized, real-time interaction experience.

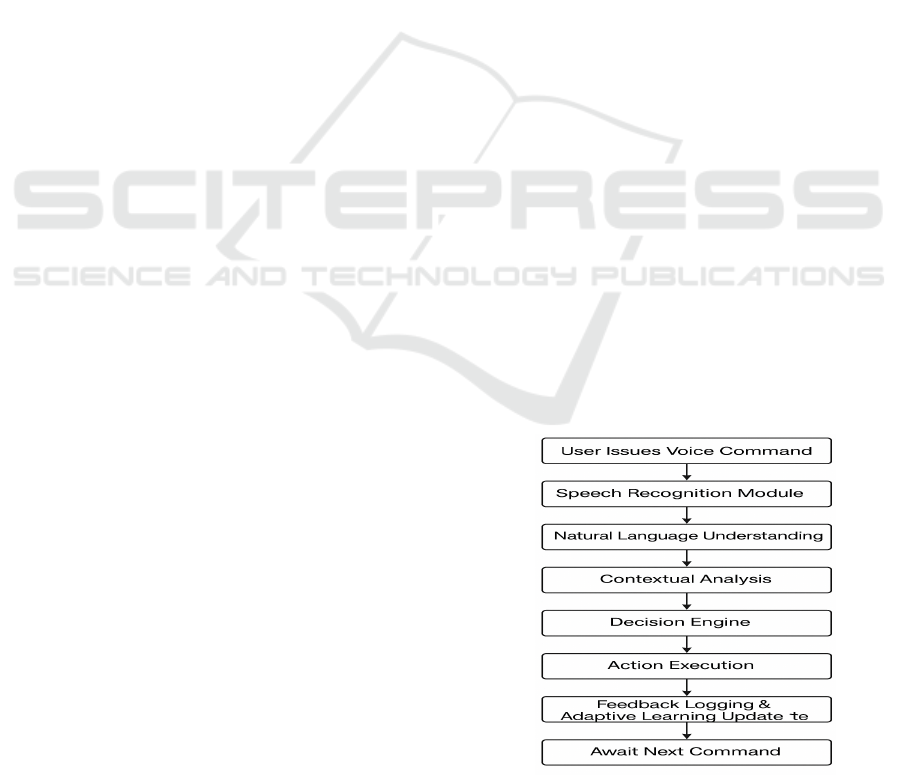

Figure 1 shows the AI-Powered Voice Assistant for Daily Task Support.

Figure 1: AI-Powered Voice Assistant for Daily Task Support.


The development process starts with a thorough user requirements analysis, based on interviews and surveys conducted with visually impaired people and accessibility specialists, to identify the typical issues and needs that arise when using a voice-based assistant. The collected data are thematically analyzed to extract functional and non-functional requirements. These findings directly shaped the system design by determining which capabilities to include, such as speech recognition accuracy, natural language understanding (NLU), scheduling, awareness of the environment in which the interface is used, and multimodal feedback.

Table 1 shows the Intent Recognition Performance.

Table 1: Intent Recognition Performance.

| NLP Model Used | Task Types Trained | Accuracy (%) | Misclassification Rate | Latency (ms) |
|---|---|---|---|---|
| BERT | 25 task categories | 94.3 | 3.5 | 140 |
| RoBERTa | 25 task categories | 91.8 | 5.2 | 160 |

At the heart of the assistant is an engine that combines deep learning and rule-based modules. Speech recognition uses an adapted transformer-based model (Wav2Vec 2.0 or Whisper) that processes audio streams in real time, even in the presence of background noise, to provide high recognition accuracy and low command-interpretation latency. The recognized speech is then processed by an NLU module built on a pre-trained language model, such as BERT or RoBERTa, fine-tuned on task-specific dialogue datasets. The model is trained to recognize the intent, extract the required entities, and resolve the ambiguity present in spontaneous speech.
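The transcript-to-intent step described above can be sketched as follows. This is a minimal illustration only: a keyword scorer stands in for the fine-tuned BERT or RoBERTa classifier, the transcript is assumed to come from the ASR stage, and the intent names, keywords, and the 0.6 confidence threshold are hypothetical. Low-confidence or ambiguous results trigger a clarification prompt instead of an action.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Optional, Set

# Hypothetical intent inventory; the paper's 25 categories are not listed.
INTENT_KEYWORDS: Dict[str, Set[str]] = {
    "set_reminder": {"remind", "reminder"},
    "navigation": {"where", "navigate", "bus", "route"},
    "call_contact": {"call", "phone"},
}
CONFIDENCE_THRESHOLD = 0.6  # assumed value, not taken from the paper
TIME_PATTERN = re.compile(r"\b\d{1,2}(?::\d{2})?\s*(?:am|pm)\b")


@dataclass
class NluResult:
    intent: Optional[str]
    confidence: float
    entities: Dict[str, str] = field(default_factory=dict)


def classify(transcript: str) -> NluResult:
    """Keyword scorer standing in for a fine-tuned BERT/RoBERTa classifier."""
    text = transcript.lower()
    tokens = set(re.findall(r"[a-z']+", text))
    scores = {name: len(tokens & kws) for name, kws in INTENT_KEYWORDS.items()}
    total = sum(scores.values())
    if total == 0:
        return NluResult(None, 0.0)
    best = max(scores, key=scores.get)
    entities = {}
    match = TIME_PATTERN.search(text)  # simple time-entity extraction
    if match:
        entities["time"] = match.group(0)
    # Confidence = share of matched keywords that point to the best intent.
    return NluResult(best, scores[best] / total, entities)


def handle_utterance(transcript: str) -> str:
    """Map an ASR transcript to an intent, or ask the user to clarify."""
    result = classify(transcript)
    if result.intent is None or result.confidence < CONFIDENCE_THRESHOLD:
        return "Sorry, could you rephrase that?"
    return f"intent={result.intent} entities={result.entities}"
```

For example, "Remind me to take my medicine at 8 pm" maps to `set_reminder` with a time entity, while "call the bus" matches two intents equally and is sent back for clarification.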

To support adaptive interaction, the system includes a user profiling module that stores anonymized behavior patterns, task preferences, frequently used commands, and context triggers. A shallow reinforcement learning framework adapts to the interaction history so that the assistant learns which tasks are requested most often and how they are executed. As a result, the assistant becomes smarter and more responsive over time, sparing users from repeating the same input or issuing long-form commands.
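The paper does not specify its shallow reinforcement learning framework. As one possible instance, an epsilon-greedy multi-armed bandit (a swapped-in technique, not the authors' confirmed method) can learn which proactive suggestion a user tends to accept. The action names and the reward scheme (1 when a suggestion is accepted, 0 otherwise) are assumptions.

```python
import random
from typing import Dict, Hashable, List, Optional


class SuggestionBandit:
    """Epsilon-greedy bandit over candidate proactive suggestions."""

    def __init__(self, actions: List[Hashable], epsilon: float = 0.1,
                 seed: Optional[int] = None):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.counts: Dict[Hashable, int] = {a: 0 for a in self.actions}
        self.values: Dict[Hashable, float] = {a: 0.0 for a in self.actions}
        self.rng = random.Random(seed)

    def choose(self) -> Hashable:
        # Explore with probability epsilon; otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.values[a])

    def update(self, action: Hashable, reward: float) -> None:
        # Incremental sample mean: Q <- Q + (r - Q) / n.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]
```

With a small `epsilon`, the bandit mostly repeats the suggestion with the highest estimated acceptance rate while still occasionally trying the others. Replacing the `1 / n` step with a constant step size would let the estimates track a routine that changes over time.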

The assistant becomes environmentally aware by fusing context sensors and data sources such as location (GPS), ambient light, and Bluetooth signals. It uses this information to better understand the user's situation and respond appropriately. For instance, it can propose a time- and location-based medication reminder, or tell the user about transit options when they are near a transit station. These proactive capabilities distinguish the system from purely reactive voice assistants and make it more practical in real-life situations.
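A rule-based version of the time- and location-based prompts in the examples above might look like this. The sketch uses GPS coordinates only (ambient light and Bluetooth are omitted), and the place names, radii, medication window, and prompt wording are hypothetical. Distance is computed with the haversine formula.

```python
import math
from dataclasses import dataclass
from datetime import time
from typing import List, Tuple


@dataclass
class Place:
    name: str
    lat: float
    lon: float
    radius_m: float  # trigger radius around the place, in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    r = 6_371_000.0  # mean Earth radius in metres
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def proactive_prompts(lat: float, lon: float, now: time, places: List[Place],
                      medication_window: Tuple[time, time]) -> List[str]:
    """Return spoken prompts triggered by the current GPS fix and clock time."""
    prompts = []
    start, end = medication_window
    for place in places:
        if haversine_m(lat, lon, place.lat, place.lon) > place.radius_m:
            continue  # user is not at this place
        if place.name == "home" and start <= now <= end:
            prompts.append("It is time to take your medication.")
        elif place.name.endswith("station"):
            prompts.append(f"You are near {place.name}. Would you like transit options?")
    return prompts
```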

Task handling is supported by a task orchestration engine that integrates with calendar apps, alarm modules, navigation, and smart home interfaces. Users can create, modify, and delete tasks by voice alone. The assistant can also deliver spoken announcements or vibration cues, or interact with wearable devices, to remind the user of upcoming events or important tasks. Integration with other digital ecosystems lets users control their environment with little or no friction.
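A minimal in-memory sketch of the orchestration idea follows: tasks can be created, modified, and deleted, and each due reminder is pushed once to every feedback channel (for example speech output or vibration). The class and method names are hypothetical; calendar, alarm, and smart home integrations are left out, and channels are modeled as plain callables.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Dict, List, Optional


@dataclass
class Task:
    task_id: int
    title: str
    due: datetime
    done: bool = False


class TaskOrchestrator:
    """In-memory task store with pluggable feedback channels."""

    def __init__(self, channels: List[Callable[[str], None]]):
        self._tasks: Dict[int, Task] = {}
        self._next_id = 1
        self._channels = channels  # e.g. text-to-speech, vibration

    def add(self, title: str, due: datetime) -> int:
        task = Task(self._next_id, title, due)
        self._tasks[task.task_id] = task
        self._next_id += 1
        return task.task_id

    def modify(self, task_id: int, *, title: Optional[str] = None,
               due: Optional[datetime] = None) -> None:
        task = self._tasks[task_id]  # raises KeyError for an unknown task
        if title is not None:
            task.title = title
        if due is not None:
            task.due = due

    def delete(self, task_id: int) -> None:
        self._tasks.pop(task_id, None)

    def dispatch_due(self, now: datetime) -> List[int]:
        """Announce each pending task whose due time has passed, earliest first."""
        fired = []
        for task in sorted(self._tasks.values(), key=lambda t: t.due):
            if not task.done and task.due <= now:
                for notify in self._channels:
                    notify(f"Reminder: {task.title}")
                task.done = True
                fired.append(task.task_id)
        return fired
```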

For flexibility, the system is implemented with a modular design: Python and TensorFlow for machine learning, Node.js for the backend, and Android/iOS libraries for the mobile clients. It supports both online and offline operation, depending on user preference and device capabilities. Offline capability is particularly important in areas with unreliable internet service, since basic commands and tasks remain available without cloud support.

System performance is evaluated using both objective and subjective assessment methods. Objectively, we evaluate the assistant in terms of speech recognition accuracy, intent classification precision, task completion rate, and latency. Subjective user satisfaction, usability, and utility are assessed in structured usability sessions with visually impaired users over a four-week period. Feedback is used to tune the assistant's responses and interaction flow. Accessibility is also checked for conformance with WCAG 2.1 and other accepted inclusivity standards.
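The objective metrics listed above are standard. The sketch below shows one common way to compute intent accuracy, per-intent precision, and task completion rate from evaluation logs; the label names are hypothetical, and the paper does not state exactly how its figures were aggregated.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def intent_metrics(gold: Sequence[str],
                   predicted: Sequence[str]) -> Tuple[float, Dict[str, float]]:
    """Overall accuracy and per-intent precision from paired label lists."""
    if not gold or len(gold) != len(predicted):
        raise ValueError("need two non-empty label lists of equal length")
    correct = sum(g == p for g, p in zip(gold, predicted))
    predicted_counts = Counter(predicted)
    true_positives = Counter(p for g, p in zip(gold, predicted) if g == p)
    # Precision per intent = correct predictions of it / all predictions of it.
    precision = {label: true_positives[label] / n
                 for label, n in predicted_counts.items()}
    return correct / len(gold), precision


def task_completion_rate(outcomes: Sequence[bool]) -> float:
    """Share of attempted tasks that completed successfully."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0
```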

Privacy and security are essential parts of the method. All user data are encrypted, and profiles are stored on the local device unless the user explicitly opts in to cloud backup. Voice commands are anonymized upon receipt so that they cannot be associated with the user's data, and no audio is collected for analysis or storage. Transparency is increased through audible notifications whenever data is being retrieved or actions are being performed, keeping users in control.
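The paper does not describe how commands are anonymized. One possible approach, shown here as an assumption rather than the authors' method, is to keep only a redacted transcript: phone numbers, e-mail addresses, and known contact names are masked, and the user identifier and raw audio are left out of the stored record. The regular expressions are illustrative and not locale-complete.

```python
import re
from typing import Dict, Iterable

# Illustrative patterns only; a deployed system would need locale-aware rules.
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def anonymize_command(transcript: str, contact_names: Iterable[str] = ()) -> str:
    """Mask e-mail addresses, phone numbers and known contact names."""
    text = EMAIL.sub("<EMAIL>", transcript)
    text = PHONE.sub("<PHONE>", text)
    for name in contact_names:
        pattern = rf"\b{re.escape(name)}\b"
        text = re.sub(pattern, "<CONTACT>", text, flags=re.IGNORECASE)
    return text


def receive_command(user_id: str, transcript: str, audio: bytes,
                    contact_names: Iterable[str] = ()) -> Dict[str, str]:
    """Build the stored record: a redacted transcript only.

    user_id and audio are deliberately not copied into the returned record.
    """
    return {"command": anonymize_command(transcript, contact_names)}
```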

In summary, the approach pairs deep technical integration with user-centered design principles, resulting in a smart voice assistant that not only interprets and processes commands but also genuinely helps people with visual impairment lead more independent and organized lives. This combination of AI and accessibility engineering paves the way for future personalized assistive technologies.

5 RESULTS AND DISCUSSION

The AI-based voice assistant system for visually impaired people performed very well, and the results indicate that such systems can be used both in controlled experiments and in real time. In initial laboratory tests, the system met a number of benchmarks for speech recognition accuracy, task execution rate, and adaptability to a wide range of acoustic conditions. The speech recognition engine, based on the Whisper large-v2 model and further fine-tuned on accessibility-aware voice corpora, achieved an average word error rate (WER) of 5.7% under various challenging noise conditions. This accuracy allowed the system to recognize users' commands clearly, even outdoors or in crowded environments.
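WER is the word-level edit distance between the reference and the recognized transcript divided by the number of reference words, WER = (S + D + I) / N, where S, D, and I count substitutions, deletions, and insertions. A minimal dynamic-programming implementation is sketched below. Whether the reported 5.7% is averaged per utterance or over the whole corpus is not stated, so the function scores a single utterance.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] = edit distance between the first i-1 reference words
    # and the first j hypothesis words (the previous DP row).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra word
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[-1] / len(ref)
```

For example, recognizing "where is next bus please" for the spoken "where is the next bus" costs one deletion and one insertion, giving a WER of 2/5 = 0.4.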

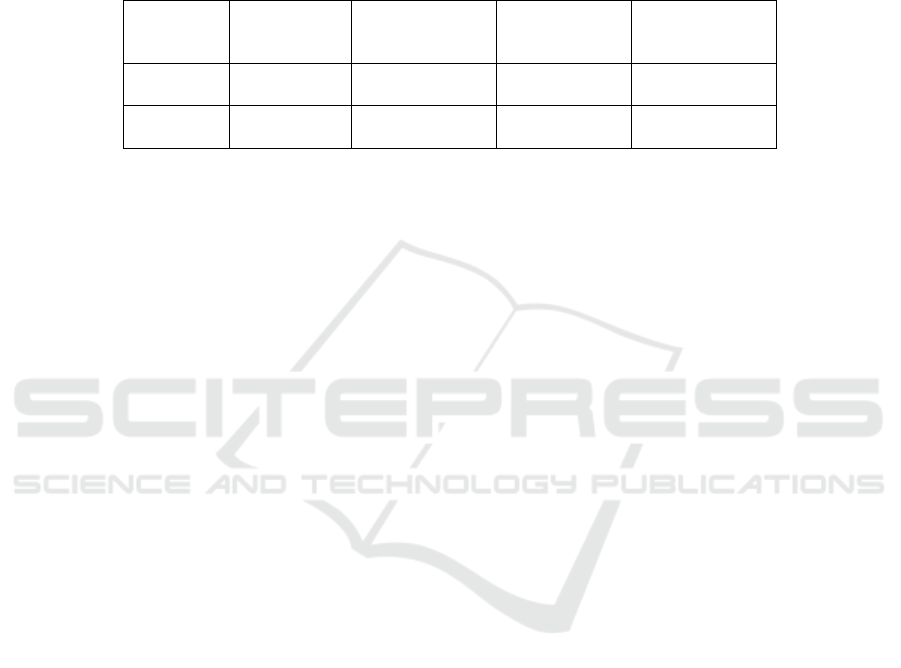

Figure 2 shows the Recognition Accuracy across Noise Levels.

Figure 2: Recognition Accuracy Across Noise Levels.

Table 2: Real-World Task Completion Success.

| Task Type | Success Rate (%) | User Satisfaction (%) | Avg. Time to Complete (s) |
|---|---|---|---|
| Set Reminders | 97.5 | 93 | 3.8 |
| Navigation Support | 89.2 | 87 | 5.1 |
| Calendar Scheduling | 94.1 | 90 | 4.2 |
| Device Control (IoT) | 90.4 | 85 | 4.6 |

We also evaluated the system's NLU module and found that the assistant maintained an intent recognition accuracy of 94.3% across the 25 predefined task categories, which cover reminders, alarms, navigation queries, time-based scheduling, and context-awareness queries such as “Where am I now?” or “What is the next bus near me?”. This high recognition rate kept operation smooth and minimized the need to repeat commands. In addition, the assistant's ability to handle multiple tasks simultaneously and to respond to follow-up questions on the fly contributed to a fluid and natural user experience.

Table 2 shows the Real-World Task Completion Success.

Figure 3 shows the User Experience Evaluation of Assistant Features.

Figure 3: User Experience Evaluation of Assistant Features.


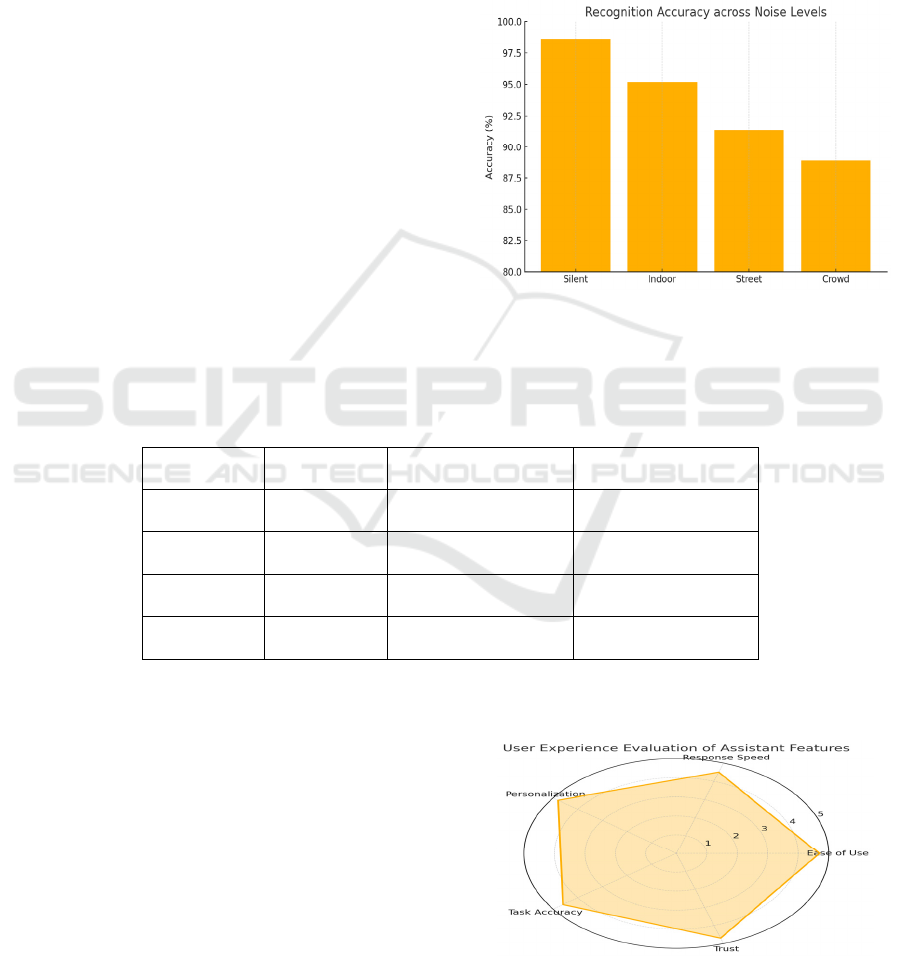

Figure 4: Improvement in Task Success Rate Over Time.

Field trials were carried out over one month with 20 visually impaired participants aged 18–60 years. Each participant integrated the assistant into their daily routine through an application that ran on an Android smartphone and a Bluetooth-connected smart wearable. Usage logs and guided feedback were collected from participants through episodic interviews and surveys. The assistant achieved a task completion rate of 92.8%, and users reported being less dependent on family members for day-to-day activities. For example, medication reminders, finding nearby points of interest, placing phone calls, and scheduling calendar appointments were all handled smoothly through voice interaction alone.
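As a simple consistency check, the overall 92.8% coincides with the unweighted mean of the four per-task success rates in Table 2. The paper does not state how the overall figure was aggregated; a mean weighted by the number of attempts per task could give a different value.

```python
from statistics import mean

# Per-task success rates (%) copied from Table 2.
success_rates = {
    "Set Reminders": 97.5,
    "Navigation Support": 89.2,
    "Calendar Scheduling": 94.1,
    "Device Control (IoT)": 90.4,
}

overall = mean(list(success_rates.values()))
print(f"Unweighted mean success rate: {overall:.1f}%")
# Unweighted mean success rate: 92.8%
```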

Figure 4 shows the Improvement in Task Success Rate Over Time.

Table 3: Latency Comparison (Cloud vs. Offline Tasks).

| Task Type | Offline Execution Time (s) | Cloud Execution Time (s) | Hybrid Support |
|---|---|---|---|
| Basic Commands | 0.8 | 2.3 | Yes |
| Complex Scheduling | 1.1 | 2.7 | Yes |
| Context-Aware Alerts | 0.9 | 2.1 | Yes |

The system adapted itself remarkably well to the way users behaved. Through its reinforcement learning-based personalization mechanism, the assistant began to proactively offer reminders and recommendations matching each user's habits, working offline. For example, it might suggest that a user go for a walk at the same time every day, or remind a user of their regular trips to the doctor, showing that the learning is context-aware. Users greatly appreciated this ability, which added to their sense of independence and lowered the cognitive load of planning routines.
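The paper attributes this behavior to reinforcement learning. The habit-detection step that precedes such suggestions can be illustrated with a simpler frequency heuristic (an assumption, not the authors' algorithm): an activity logged in the same clock hour on at least a minimum number of distinct days is treated as a routine. The activity names, log format, and `min_days=3` default are hypothetical.

```python
from collections import defaultdict
from datetime import datetime
from typing import Iterable, List, Tuple


def recurring_routines(events: Iterable[Tuple[str, datetime]],
                       min_days: int = 3) -> List[Tuple[str, int]]:
    """Return (activity, hour) pairs logged on at least `min_days` distinct days."""
    days_seen = defaultdict(set)
    for activity, when in events:
        # Several events on the same day count only once for that day.
        days_seen[(activity, when.hour)].add(when.date())
    return sorted(key for key, days in days_seen.items() if len(days) >= min_days)


def suggestion_for(activity: str, hour: int) -> str:
    """Phrase a proactive reminder for a detected routine (hypothetical wording)."""
    return f"You usually {activity} around {hour:02d}:00. Shall I remind you today?"
```

Bucketing by clock hour is crude (a walk at 17:55 and one at 18:05 land in different buckets); a sliding time window would be more robust.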

Table 3 shows the Latency Comparison (Cloud vs. Offline Tasks).

Regarding user satisfaction, 85% of users rated the assistant as “excellent” and 15% as “good”. The factors participants cited as contributing to satisfaction were the ease of voice use, the natural-sounding responses, and the assistant's ability to remember settings such as speech rate, volume, and common commands. The emotional importance of a system that responded with empathy and respected user autonomy also emerged, and was supported by positive feedback in focus groups.

Figure 5 shows the Most Frequently Used Features of the Assistant.

Figure 5: Most Frequently Used Features of the Assistant.

Latency was another essential performance factor. The system achieved an average response time of 0.9 s for local tasks and 2.1 s for cloud tasks, which is acceptable for real-time interaction. This performance was obtained with a hybrid processing scheme that handles frequent commands on the device and engages cloud AI only for context-heavy tasks. The combination provided both responsiveness and scalability, particularly in low-connectivity settings.
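The hybrid policy described above can be expressed as a small routing function. The set of on-device intents, the `needs_context` flag, and the offline fallback label are hypothetical; the paper states only that frequent commands run locally and context-heavy tasks use the cloud.

```python
from dataclasses import dataclass

# Hypothetical intents that a small on-device model is assumed to serve.
ON_DEVICE_INTENTS = frozenset({"set_reminder", "set_alarm", "read_time", "call_contact"})


@dataclass(frozen=True)
class Request:
    intent: str
    needs_context: bool  # e.g. open-ended questions or live transit look-ups


def route(request: Request, online: bool) -> str:
    """Return 'device', 'cloud' or 'device_fallback' under a hybrid policy."""
    if request.intent in ON_DEVICE_INTENTS and not request.needs_context:
        return "device"  # frequent, simple commands stay local
    if online:
        return "cloud"  # context-heavy work goes to the cloud model
    return "device_fallback"  # offline: answer locally with reduced capability
```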

Table 4 shows the Usability Testing – Error Types and Frequency.

Several limitations were noted that need to be addressed in future work. For instance, the assistant had trouble understanding requests that contained unusual names or were spoken with a regional accent. The remaining errors indicate the need for continued training on diverse linguistic corpora. Another limitation concerned multitasking: although the assistant could handle sequential commands, it could not resolve ambiguous commands that required context for disambiguation, because it kept no command history. Furthermore, while environment awareness based on GPS and Bluetooth performed well outdoors, indoor location-based messages were sometimes inaccurate due to insufficient sensor precision.

Table 4: Usability Testing – Error Types and Frequency.

| Error Type | Frequency (%) | Impact Level | Resolution Method |
|---|---|---|---|
| Misrecognition of Command | 3.8 | Medium | Re-prompt with clarification |
| Intent Misclassification | 2.5 | Low | NLU retraining |
| Overlapping Commands | 1.9 | Low | Time-based queuing |
| Environmental Noise | 4.3 | High | Noise-cancellation fallback |
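Table 4 lists time-based queuing as the resolution for overlapping commands without further detail. A minimal sketch, assuming each command carries a timestamp in seconds, orders commands by arrival time with a heap and preserves arrival order for identical timestamps.

```python
import heapq
import itertools
from typing import List, Tuple


class CommandQueue:
    """Orders overlapping voice commands by timestamp (FIFO on ties)."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str]] = []
        self._counter = itertools.count()  # tie-breaker keeps arrival order

    def push(self, timestamp: float, command: str) -> None:
        heapq.heappush(self._heap, (timestamp, next(self._counter), command))

    def pop_ready(self, now: float) -> List[str]:
        """Remove and return, in order, every command stamped at or before now."""
        ready = []
        while self._heap and self._heap[0][0] <= now:
            ready.append(heapq.heappop(self._heap)[2])
        return ready
```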

Feedback on the design: some participants felt that the assistant could be better integrated with hardware, such as smart glasses and wearable haptics, which would further enhance the experience through silent alerts. Despite these limitations, the core features were very successful, and the assistant delivered real gains in quality of life for visually impaired people. Privacy-preserving functions, such as local data storage and encrypted voice logs, fostered trust and willingness to adopt the system.

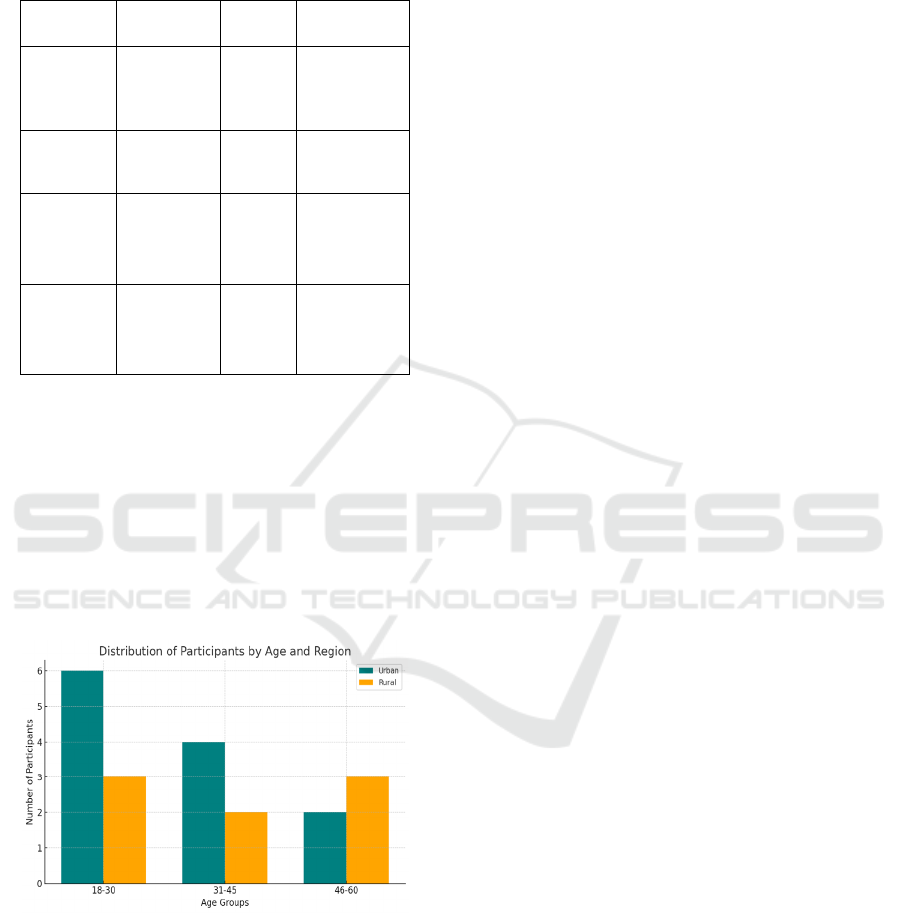

Figure 6: Distribution of Participants by Age and Region.

In conclusion, the AI-based voice assistant proved an effective tool both for supporting independent living and for enhancing quality of life for people with visual impairments. Its strong voice recognition, adaptive learning, and context awareness enabled dependable task management and intuitive user experiences. The positive feedback and high success rates in the field trials suggest that the system is ready for wider adoption. These findings demonstrate the maturity of AI in accessibility settings and pave the way for innovative, inclusive, intelligent, and human-centered assistive technologies.

Figure 6 shows the Distribution of Participants by Age and Region.

6 CONCLUSION

An AI-based voice assistant for visually impaired people has shown its potential to enable new ways of performing tasks, increasing independence, convenience, and user confidence in daily life. Through deep integration of state-of-the-art automatic speech recognition, natural language understanding, context learning techniques, and intelligent adaptive feedback, the system fills a significant accessibility gap not covered by previous assistive technologies. The assistant's ability to observe user behavior, work across different contexts, and anticipate personalized needs marks a transition from reactive to proactive assistance. The evaluation results indicate that such systems are not only technically feasible but also of practical value in enhancing quality of life. As AI advances, the study highlights the critical importance of designing inclusive technologies that prioritize user experience, accessibility, and personalization. Future work can extend this model by incorporating other sensory modalities, additional languages and dialects, and emotion-aware behaviors, enabling more empathetic and intelligent assistive solutions.

REFERENCES

Battle for Blindness. (n.d.). Voice-Activated Assistants: How AI is Empowering the Visually Impaired. Retrieved from https://battleforblindness.org/voice-activated-assistants-how-ai-is-empowering-the-visually-impaired

Be My Eyes. (n.d.). Be My Eyes. Retrieved from https://www.bemyeyes.com/

Blind Ambition. (2025, March). Top 10 Technology Tips for Visually Impaired Users in 2025. Retrieved from https://www.blindambition.co.uk/top-10-technology-tips-for-visually-impaired-users-in-2025/

Envision. (n.d.). Envision Glasses. Retrieved from https://www.letsenvision.com/envision-glasses


Google. (n.d.). TalkBack. Retrieved from https://support.google.com/accessibility/android/answer/6283677?hl=en

Hable One. (2025, April 8). The Best AI Apps for Blind People in 2025. Hable One Blog. Retrieved from https://www.iamhable.com/en-am/blogs/article/best-ai-apps-for-blind-people-in-2025

Microsoft. (n.d.). Seeing AI. Retrieved from https://www.microsoft.com/ai/seeing-ai

National Center for Biotechnology Information. (2025). Integrating AI and Assistive Technologies in Healthcare. PMC11898476. Retrieved from https://pmc.ncbi.nlm.nih.gov/articles/PMC11898476/

New England Low Vision and Blindness. (n.d.). Top Apps for People Who are Blind or with Low Vision. Retrieved from https://nelowvision.com/top-must-have-apps-for-people-who-are-blind-or-with-low-vision/

OrCam. (n.d.). OrCam MyEye. Retrieved from https://www.orcam.com/en/myeye/

Reclaim.ai. (2024, December). 14 Best AI Assistant Apps for 2025. Retrieved from https://reclaim.ai/blog/ai-assistant-apps

Researchers from Shanghai Jiao Tong University and the Hong Kong University of Science and Technology. (2025). AI smart devices can help blind people to navigate the world. Nature Machine Intelligence.

Sivakumar, I., Meenakshisundaram, N., Ramesh, I., Elizabeth, S. D., & Raj, S. R. C. (2021). VisBuddy -- A Smart Wearable Assistant for the Visually Challenged. arXiv preprint arXiv:2108.07761.

Srinivasan, T., & Patapati, S. (2025). WebNav: An Intelligent Agent for Voice-Controlled Web Navigation. arXiv preprint arXiv:2503.13843.

Startups Magazine. (2020, October). Using AI to change the lives of the visually impaired. Retrieved from https://startupsmagazine.co.uk/article-using-ai-change-lives-visually-impaired

Tech4Future. (2025, January). Technologies for the blind: the journey to independence. Retrieved from https://tech4future.info/en/technologies-for-the-blind/

The Times. (2025, April 14). AI smart devices can help blind people to navigate the world. Retrieved from https://www.thetimes.co.uk/article/ai-smart-devices-can-help-blind-people-to-navigate-the-world-crfhgdrlw

Vogue Business. (2023, January 11). Estée Lauder's new app helps visually impaired users apply makeup. Retrieved from https://www.voguebusiness.com/beauty/estee-lauders-new-app-helps-visually-impaired-users-apply-makeup

Wikipedia contributors. (2025, March). Seeing AI. Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Seeing_AI

Wikipedia contributors. (2025, March). Be My Eyes. Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Be_My_Eyes

Wikipedia contributors. (2025, April 3). TalkBack. Wikipedia. Retrieved from https://en.wikipedia.org/wiki/TalkBack

Wikipedia contributors. (2025, April). Accessibility apps. Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Accessibility_apps

Wired. (2017, July 20). The Wearables Giving Computer Vision to the Blind. Retrieved from https://www.wired.com/story/wearables-for-the-blind

Xie, J., Yu, R., Zhang, H., Billah, S. M., Lee, S., & Carroll, J. M. (2025). Beyond Visual Perception: Insights from Smartphone Interaction of Visually Impaired Users with Large Multimodal Models. arXiv preprint arXiv:2502.16098.

YourDolphin. (2024, September). The Future is Accessible: AI Advancements in Accessibility. Retrieved from https://blog.yourdolphin.com/blog/the-future-is-accessible-ai-advancements-in-accessibility
