Fire-Resistant Wall-Climbing UAV for Victim Detection in Urban Search and Rescue Missions

Vedant Hambire*, Harsh Yadav, Dipshikha Hazari and Satyam Singh

National Institute of Technology, Agartala, India

Keywords: Wall-Climbing UAV, Fireproof Drone, Urban Search and Rescue (USAR), YOLOv8, Optical Flow,

MediaPipe, Tilt-Rotor Mechanism, CFD, FEA, ROS, Alive Human Detection.

Abstract: Unmanned Aerial Vehicles (UAVs) have become invaluable in high-stakes search and rescue operations in fire-prone and fire-damaged environments because they can locate victims and assess the situation. This paper describes the design, simulation, and realization of a fire-resistant, wall-climbing UAV with an AI-powered alive human detection system. The UAV uses a custom-designed H-frame made of PLA, with thrust-vectoring EDFs attached to a tilt-rotor system that permits hovering and traversal on vertical surfaces. Structural and aerodynamic aspects were verified with FEA and CFD simulations performed on SimScale. To allow for autonomous victim detection, the UAV system includes a real-time human detector based on YOLOv8, combined with optical flow and MediaPipe-based eye tracking, to classify people as conscious, unconscious, dead, or obstructed. The UAV's mission computer, a Raspberry Pi running ROS, records detection status and location and outputs tagged geo-coordinates for mission planning in real time. Simulation and ground testing are intended to confirm the system’s viability in heat-intensive, debris-laden environments, advancing the development of autonomous aerial platforms for disaster response, firefighting, and urban search and rescue (USAR) operations.

1 INTRODUCTION

Unmanned Aerial Vehicles (UAVs) have emerged as

crucial instruments in a variety of fields over the past

ten years, such as search and rescue, surveillance, and

disaster response (Mulgaonkar et al., 2016), (Dudek

& Jenkin, 2010). UAVs that can withstand high-risk

situations like industrial settings, collapsed

structures, and fire-prone areas are becoming more

and more necessary for these applications.

Despite their proficiency in aerial reconnaissance,

traditional multirotor platforms are constrained by

their poor wall traversal capabilities, heat sensitivity,

and incapacity to identify incapacitated victims

(Murphy, 2004). Researchers have developed

fireproof UAVs with thermal insulation layers, such

as Nomex, ceramic coatings, or silica aerogels, to get

around these limitations. These layers protect

electronic systems from high ambient temperatures

(Zhang et al., 2021), (Lee et al., 2020), (Tan et al.,

2021). Wall-climbing UAVs that draw inspiration from biological systems like geckos, insects, and bats have been developed as a result of parallel developments in surface adhesion and locomotion (Kim et al., 2018), (Li et al., 2017). These systems scale vertical or inverted surfaces using suction mechanisms (Sun et al., 2021), electro-adhesion (Spenko et al., 2012), or magnet-based gripping (Wang et al., 2021). Although effective in confined spaces, most are less effective in multi-hazard environments because they lack integration with fire survivability or robust perception systems.

* Corresponding author: vedanthambire568@email.com

UAVs can now accurately identify human targets

on their own thanks to recent advancements in real-

time computer vision, especially in object detection

using deep learning models like YOLO (You Only

Look Once) (Redmon et al., 2018). But YOLO by

itself is unable to distinguish between people who are

conscious, unconscious, or deceased. In order to

close this gap, scientists have integrated optical flow

to identify subtle thoracic movements that are

suggestive of breathing (Akhloufi et al., 2020),

(Sharma & Mahapatra, 2021). In our work, we evaluate breathing using Farneback dense optical flow computed between consecutive grayscale frames, which enables the system to separate breathing victims from non-breathing ones.

Hambire, V., Yadav, H., Hazari, D. and Singh, S.

Fire-Resistant Wall-Climbing UAV for Victim Detection in Urban Search and Rescue Missions.

DOI: 10.5220/0013838500003982

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 22nd International Conference on Informatics in Control, Automation and Robotics (ICINCO 2025) - Volume 2, pages 417-424

ISBN: 978-989-758-770-2; ISSN: 2184-2809

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.


We use MediaPipe FaceMesh, a landmark

detection framework created by Google, to further

evaluate consciousness. This framework allows for

accurate measurement of Eye Aspect Ratio (EAR),

which infers blink rates and eye closure (Zhang et al.,

2020). A complete "alive detection" pipeline that can

classify data in real time into four different states—

Alive & Conscious, Alive but Unconscious, Dead,

and Obstructed/Not Visible—is made possible by the

combination of YOLOv8, optical flow, and

MediaPipe. This system would be powered by a small

Raspberry Pi 4B onboard computer that runs ROS

and connects via MAVLink to a flight controller that

is compatible with Pixhawk. The UAV would be

equipped with an MTF-01P optical flow sensor for

indoor navigation and Intel RealSense cameras for

depth and odometry, making it ready for both

controlled and unpredictably changing field

conditions.

To ensure stable control during wall contact,

transition, and flight, we would implement a Model

Predictive Control (MPC) architecture. This control

strategy adapts across three behavioural modes—

sticking, tilting, and climbing—with specific

optimisations for wheel torque, gear actuation, and

thrust modulation. The UAV’s dynamic model is

simulated using ROS2 and Gazebo, ensuring that the

control algorithm respects physical constraints and

delivers energy-efficient performance.

Our work therefore unifies fireproofing, surface

adhesion, advanced vision-based perception, and

predictive control into a single hybrid UAV platform,

addressing multiple gaps in current USAR (Urban

Search and Rescue) technologies. The integration of

resilient materials, sensor-rich electronics, and deep

learning allows this system to operate autonomously

in highly dynamic and hazardous environments.

2 DESIGN STRATEGY

The proposed UAV is inspired by the tilt-rotor wall-

climbing concept introduced by Myeong and Myung

(Myeong & Myung, 2020), where thrust vectoring

and surface traction enable stable vertical attachment.

This design incorporates fire-resistant structural

elements and an AI-based alive detection system

tailored for disaster zones. The UAV uses a modified

H-frame made of PLA and carbon fibre rods for

optimal strength-to-weight performance. Developed

in SolidWorks and analysed in ANSYS, the frame

withstands perching and wall-impact loads. Four

EDFs (two front, two rear) are mounted on servo-

actuated tilting arms, enabling transitions between

horizontal flight and vertical climbing. MG995 servos

drive a 1:1 gear system for smooth tilt control, with a

selected angle of 59.2° optimised for wall adhesion

(friction coefficient = 0.5). Passive wheels assist

vertical mobility post-attachment. CFD simulations

in SimScale confirm stable aerodynamics near walls;

FEA validates joint and strut integrity. For victim

detection, a Raspberry Pi runs YOLOv8 with optical

flow analysis to monitor thoracic motion,

distinguishing live victims. On detection, GPS

coordinates are transmitted to responders. This builds

on approaches by Patel et al. (Patel et al., 2020) and

Li et al. (Li et al., 2021), highlighting deep learning's

role in disaster-zone reconnaissance.

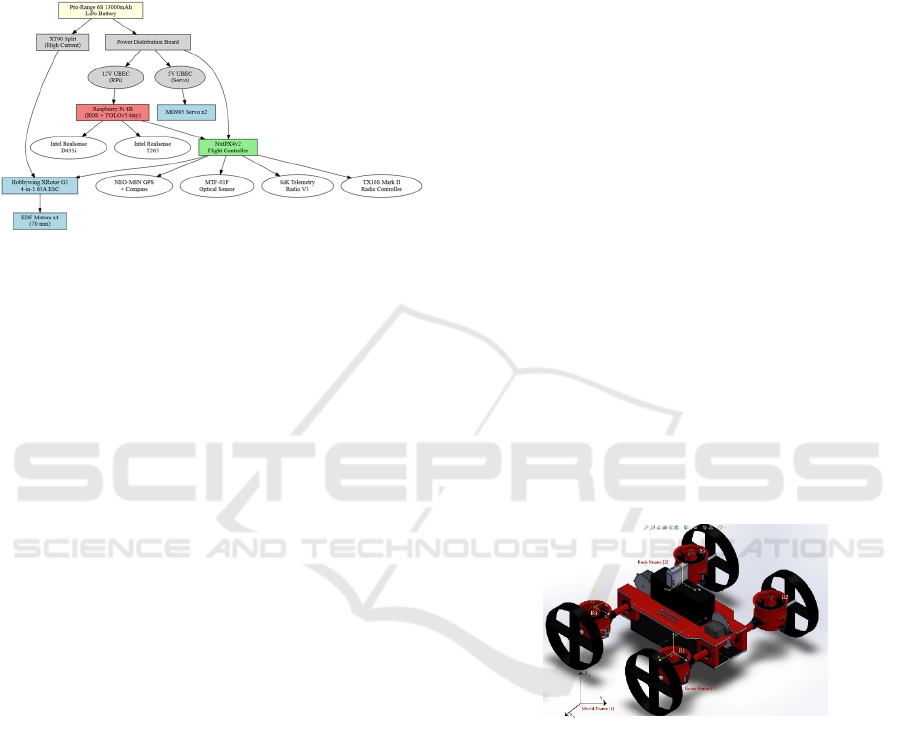

Figure 1: Final SolidWorks model of the wall-climbing

UAV; isometric view of the UAV showing the tilt-rotor H-

frame layout with EDFs and passive wall-contact wheels;

Front view illustrating vertical alignment of ducted fans and

the symmetric gear-driven shaft for tilt control.

2.1 Drone Frame

The UAV uses a compact H-frame designed in

SolidWorks, optimised for structural rigidity and

balanced load distribution. The main frame is 3D

printed in PLA and laterally reinforced with carbon

fibre rods supporting the rotating EDF modules. A

165 mm-wide central platform includes precise

cutouts for mounting the servo-tilt system,

NxtPX4V2 flight controller (sandwiched for

vibration damping), Raspberry Pi, battery, and

passive wheels aiding wall climbing and stability.


Figure 2: SolidWorks sketch of the PLA frame with key

dimensions and cut-outs for mounting components. Top

view of the UAV assembly showing component placement

and symmetric EDF-wheel layout.

2.2 Tilt Rotor Mechanism

The UAV employs four EDF motors (two front, two

rear) housed in custom PLA cases that function as

both enclosures and supports for the wall-climbing

mechanism. These cases are mounted on a 16 mm

carbon fibre shaft driven by a 1:1 spur gear system

coupled to an MG995 high-torque servo, enabling

symmetric tilting of the EDFs. Following the tilt-rotor

approach of Myeong and Myung (Myeong & Myung,

2020), this design allows smooth transitions between

horizontal flight and wall adhesion. Each EDF case

also integrates a secondary mount for wall-contact

wheels, redesigned with ball bearings for low-friction

movement along vertical surfaces. The wheels,

fabricated from ABS for impact resistance and low

weight, support wall climbing, while the required tilt

angle (θ) is determined using equation (1).

tan(θ) = mg/μT (1)

where m is the UAV mass, g is the gravitational acceleration, T is the thrust per motor, and μ is the friction coefficient. For a UAV mass of 2 kg, an EDF thrust of 1.25 kgf per unit, and μ = 0.5, the calculated optimal tilt angle is approximately 59.2°, consistent with previous research findings

(Myeong & Myung, 2020). This mechanism allows

the UAV to redirect thrust perpendicularly during

perching and revert for free flight, enabling hybrid

aerial-wall locomotion.
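As a rough numerical aid for equation (1), the sketch below evaluates θ = arctan(mg / (μT)). Reading the right-hand side as mg/(μT), expressing thrust in kgf so that g cancels, and the function name `tilt_angle_deg` are assumptions of this sketch rather than details given in the text. The result is sensitive to whether T is taken for a single EDF or summed over several, so the example evaluates both readings instead of asserting one value.

```python
import math


def tilt_angle_deg(mass_kg: float, thrust_kgf: float, mu: float) -> float:
    """Evaluate equation (1), tan(theta) = m*g / (mu*T), returning theta in degrees.

    Thrust is supplied in kgf, so m*g / T reduces to mass_kg / thrust_kgf.
    """
    if thrust_kgf <= 0 or mu <= 0:
        raise ValueError("thrust and friction coefficient must be positive")
    return math.degrees(math.atan(mass_kg / (mu * thrust_kgf)))


# Values quoted in the text: m = 2 kg, T = 1.25 kgf per EDF, mu = 0.5.
single_edf = tilt_angle_deg(2.0, 1.25, 0.5)
four_edfs = tilt_angle_deg(2.0, 4 * 1.25, 0.5)  # assumption: T summed over all four EDFs
print(f"T taken as one EDF:   {single_edf:.1f} deg")
print(f"T taken as four EDFs: {four_edfs:.1f} deg")
```

Sweeping `mu` or `thrust_kgf` with this helper gives a quick sense of how strongly the required tilt depends on wall friction and available thrust.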

Figure 3: Top view of the servo-driven spur gear

mechanism enabling symmetric EDF tilt via a carbon fibre

shaft. Isometric view of the tilt-rotor gear assembly

integrated into the UAV frame.

2.3 Electronics

The UAV is equipped with electronics tailored for

high-thrust propulsion, autonomous wall-climbing,

and onboard human detection. At its core is the

NxtPX4V2 flight controller, handling motor control,

sensor fusion, and stabilisation. Propulsion is

delivered via four Powerfun 70 mm EDF motors

(2.2 kgf thrust each), driven by a Hobbywing XRotor

G2 4-in-1 65A ESC with 6S input and DShot1200

protocol for low-latency response. A Pro-Range

13000mAh 6S 25C LiPo battery (1.56 kg) powers the

system, offering ~8.5 minutes of flight and a 1.67

thrust-to-weight ratio, enabling stable lift and

climbing. Power is split via XT90 connectors, with

two UBECs (5V and 12V) providing isolated supply

to MG995 servos (for tilt-rotor actuation) and the

onboard compute unit.

Flight Time (minutes) = ( C × V × η / P ) × 60

Using the values:

(Battery Capacity) C = 13 Ah, (Battery Voltage) V =

22.2 V, (Discharge Efficiency) η = 0.85, (Average

Power Consumption) P = 1450 W

Flight Time ≈ 8.5 minutes

This estimated value is based on moderate hover and

cruise conditions. Final validation will be done using

telemetry logs and stopwatch measurements during

prototype testing. For perception and positioning, the

UAV uses the MTF-01P optical flow and range

sensor, NEO-M8N GPS with compass, and SiK

Telemetry Radio V3 for long-range communication.

Two Intel RealSense cameras, the D435i (depth) and the T265 (odometry), enable indoor navigation and visual

detection. These feed into a Raspberry Pi 4B running

ROS for onboard AI inference, including a YOLOv5-

tiny model for real-time human detection and optical

flow-based breathing analysis. The Pi communicates

with the flight controller via MAVLink for decision-

making. The system is manually operable through a

TX16S Mark II radio with fail-safe support via SiK

link.
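The flight-time expression given earlier in this section can be evaluated with a few lines of code. The sketch below is a minimal illustration with a hypothetical function name; it applies the expression literally to the listed values. Evaluated this way, the result comes out at roughly 10 minutes, so the ~8.5 minute figure quoted in the text presumably reflects a reserve margin or a higher average draw. That interpretation is an assumption of this sketch, not something the text states.

```python
def flight_time_minutes(capacity_ah: float, voltage_v: float,
                        efficiency: float, avg_power_w: float) -> float:
    """Flight time (minutes) = (C * V * eta / P) * 60."""
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    usable_energy_wh = capacity_ah * voltage_v * efficiency  # watt-hours available
    return usable_energy_wh / avg_power_w * 60.0


# Values listed in the text: C = 13 Ah, V = 22.2 V, eta = 0.85, P = 1450 W.
estimate = flight_time_minutes(13.0, 22.2, 0.85, 1450.0)
print(f"Estimated flight time: {estimate:.1f} min")
```

Telemetry-logged average power from prototype testing can be substituted for `avg_power_w` to refine the estimate.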

To meet power and endurance requirements

within a 1.5 kg battery limit, a Tattu 6S 12000 mAh

25C LiPo battery was selected. Delivering 22.2 V

nominal voltage and up to 300 A continuous

discharge, it comfortably supports the combined

260 A draw of four EDFs rated at 65 A each.

Weighing ~1.43 kg, the battery keeps the UAV’s all-

up weight (AUW) at 4.5 kg, as confirmed by the

integrated mass breakdown. Flight time is estimated

at ~8.5 minutes under high-load conditions. The four


EDFs generate a total of 8.8 kgf static thrust. At a 60° tilt, the vertical thrust component reaches ~7.5 kgf, yielding a thrust-to-weight ratio of 1.67, which ensures stable vertical lift and wall-climbing. The horizontal thrust (~4.6 kgf) supports wall traction and transition control.
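The thrust split described above can be checked with a short calculation. The sketch assumes the tilt angle is measured so that the vertical component is T·sin θ and the horizontal component is T·cos θ; this convention and the function names are assumptions, not taken from the text. Under that convention, a 60° tilt gives values close to, though not identical with, the rounded figures quoted above.

```python
import math


def thrust_components(total_thrust_kgf: float, tilt_deg: float) -> tuple:
    """Return (vertical, horizontal) thrust in kgf for a given tilt angle."""
    tilt = math.radians(tilt_deg)
    return total_thrust_kgf * math.sin(tilt), total_thrust_kgf * math.cos(tilt)


def thrust_to_weight(vertical_kgf: float, auw_kg: float) -> float:
    """Ratio of vertical thrust (kgf) to all-up weight (kg)."""
    return vertical_kgf / auw_kg


vertical, horizontal = thrust_components(8.8, 60.0)
print(f"vertical: {vertical:.2f} kgf, horizontal: {horizontal:.2f} kgf")
print(f"T/W using the quoted 7.5 kgf and 4.5 kg AUW: {thrust_to_weight(7.5, 4.5):.2f}")
```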

Figure 4: Power and control architecture of the UAV,

illustrating the integration of the propulsion, perception,

computation, and communication subsystems

2.4 Fireproofing

To enhance survivability in fire-prone environments,

the UAV’s base frame is 3D printed in lightweight

PLA, ideal for rapid prototyping. However, due to

PLA’s low heat resistance (it softens near ~60 °C), it is coated with Nomex, a flame-retardant aramid fibre known for

high thermal stability and low weight. Widely used in

aerospace and firefighting gear, Nomex withstands

temperatures up to 370 °C without melting or

dripping, while maintaining structural integrity. With

a density of just 1.38 g/cm³, it adds minimal weight to

the drone.

2.5 Mathematical Model

The system is defined using three coordinate frames:

the world frame, body frame, and individual rotor

frames. The world frame (Xw, Yw, Zw) is a fixed

inertial reference, while the body frame (Xb, Yb, Zb)

is centered at the UAV’s center of gravity and used

for dynamic modelling and control. Each rotor (R1–

R4) has a local frame initially aligned with the body

frame but can rotate around the yaw axis and tilt as

actuated. The UAV uses four Electric Ducted Fan

(EDF) rotors, each capable of dual-axis tilting (pitch

and roll). Two servomotors per side enable paired

tilting: R1 with R4, and R2 with R3.

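Thrust Vector in Rotor Frame

$$\vec{T}_{\text{rotor}} = \begin{bmatrix} 0 \\ 0 \\ T \end{bmatrix}$$

(Thrust acts along the rotor's local Z-axis)

Rotation Due to Dual-Axis Tilt

$$R_{\text{tilt}} = R_x(\alpha) \cdot R_y(\beta)$$

$$R_x(\alpha) = \begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\alpha & -\sin\alpha \\ 0 & \sin\alpha & \cos\alpha \end{bmatrix}, \qquad R_y(\beta) = \begin{bmatrix} \cos\beta & 0 & \sin\beta \\ 0 & 1 & 0 \\ -\sin\beta & 0 & \cos\beta \end{bmatrix}$$

$$\vec{T}_{\text{tilted}} = R_x(\alpha) \cdot R_y(\beta) \cdot \vec{T}_{\text{rotor}}$$

Rotor Orientation w.r.t. Body Frame (Yaw Offset):

$$R_z(\psi_i) = \begin{bmatrix} \cos\psi_i & -\sin\psi_i & 0 \\ \sin\psi_i & \cos\psi_i & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

Thrust in Body Frame:

$$\vec{T}_{\text{body}} = R_z(\psi_i) \cdot R_x(\alpha) \cdot R_y(\beta) \cdot \begin{bmatrix} 0 \\ 0 \\ T \end{bmatrix}$$

UAV Orientation in World Frame (Euler ZYX)

$$R = R_z(\psi) \cdot R_y(\theta) \cdot R_x(\phi)$$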

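The rotation matrix from the body frame to the world frame using the ZYX Euler angle convention (yaw $\psi$, pitch $\theta$, roll $\phi$) is given by:

$$R^{\text{world}}_{\text{body}} = R_z(\psi) \cdot R_y(\theta) \cdot R_x(\phi)$$

$$R = \begin{bmatrix} c_\psi c_\theta & c_\psi s_\theta s_\phi - s_\psi c_\phi & c_\psi s_\theta c_\phi + s_\psi s_\phi \\ s_\psi c_\theta & s_\psi s_\theta s_\phi + c_\psi c_\phi & s_\psi s_\theta c_\phi - c_\psi s_\phi \\ -s_\theta & c_\theta s_\phi & c_\theta c_\phi \end{bmatrix}$$

where $c$ and $s$ abbreviate the cosine and sine of the subscripted angle.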

Figure 5: Reference frames of the UAV. The world frame

(Xw,Yw,Zw) is a fixed inertial frame. The body frame

(Xb,Yb,Zb) is attached to the UAV’s center of gravity

(CG).

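Final Thrust Vector in World Frame is:

$$\vec{T}_{\text{world}} = R^{\text{world}}_{\text{body}} \cdot \vec{T}_{\text{body}}$$

$$\vec{T}_{\text{world}} = R_z(\psi) \cdot R_y(\theta) \cdot R_x(\phi) \cdot R_z(\psi_i) \cdot R_x(\alpha) \cdot R_y(\beta) \cdot \begin{bmatrix} 0 \\ 0 \\ T \end{bmatrix}$$

Where:

$\psi_i$: Yaw position of the rotor relative to the UAV body, $\alpha, \beta$: Rotor tilt angles (dual-axis), $T$: Thrust magnitude (in rotor frame)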
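The chain of rotations above can be sketched in plain Python to check the frame conventions numerically. This is a minimal illustration, assuming the rotor tilt is applied as a rotation about X by α followed by a rotation about Y by β, the rotor yaw offset as a rotation about Z, and the body attitude as ZYX Euler angles; the helper names are hypothetical.

```python
import math


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(b):
    c, s = math.cos(b), math.sin(b)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(p):
    c, s = math.cos(p), math.sin(p)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """3x3 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matvec(m, v):
    """3x3 matrix times 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def thrust_world(thrust, alpha, beta, psi_rotor, yaw, pitch, roll):
    """Map a thrust of magnitude `thrust` along the rotor Z-axis into the world frame.

    All angles are in radians.
    """
    t_rotor = [0.0, 0.0, thrust]
    # Rotor frame -> body frame: yaw offset, then dual-axis tilt.
    r_body_rotor = matmul(rot_z(psi_rotor), matmul(rot_x(alpha), rot_y(beta)))
    # Body frame -> world frame: ZYX Euler angles.
    r_world_body = matmul(rot_z(yaw), matmul(rot_y(pitch), rot_x(roll)))
    return matvec(matmul(r_world_body, r_body_rotor), t_rotor)


# With no tilt and a level body, thrust stays along the world Z-axis.
print(thrust_world(1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0))
```

Summing `thrust_world` over the four rotors, each with its own yaw offset, would give the net force used in a dynamic model.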


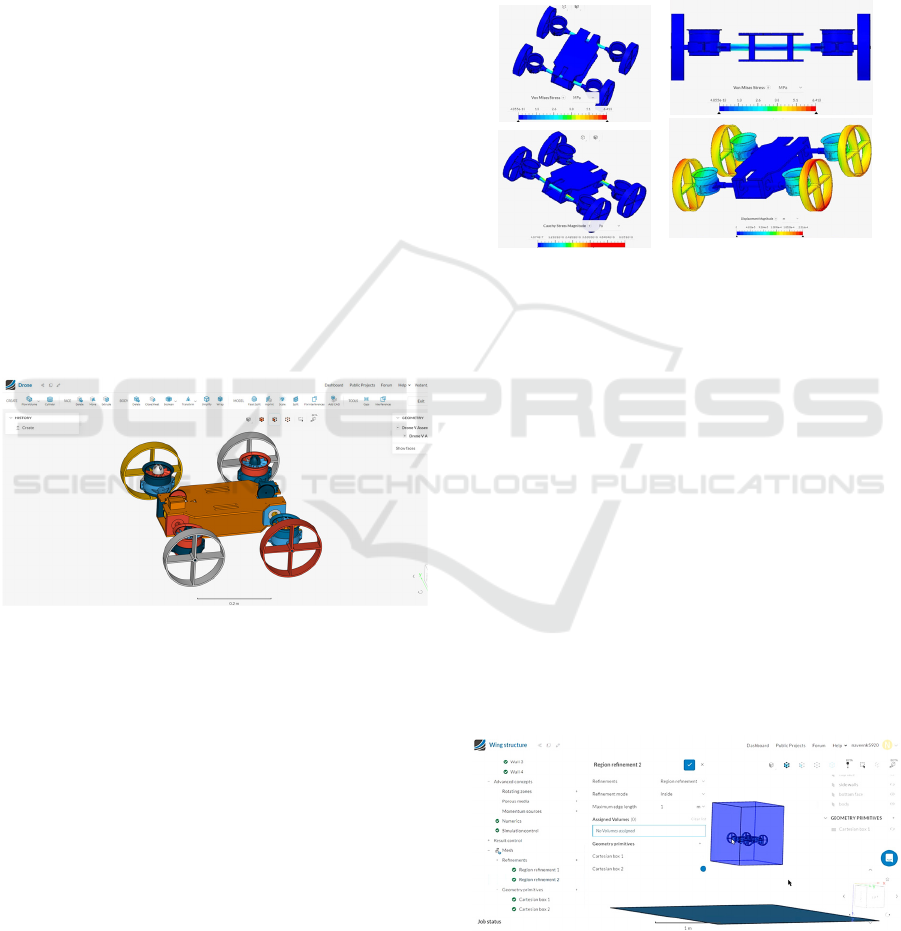

3 ANALYSIS

To ensure structural robustness, aerodynamic

efficiency, and fire resilience, the UAV was

simulated using SimScale. The frame was modelled

with Polylactic Acid (PLA), while 16 mm carbon

fibre rods reinforced the tilt mechanism and motor

shafts. Structural integrity was assessed via Finite

Element Analysis (FEA) to identify stress

concentrations under load. Computational Fluid

Dynamics (CFD) simulations examined airflow

during flight and wall climbing. The results informed

key design choices.

3.1 Frame Analysis

To evaluate the structural integrity of the UAV frame

under operational loading conditions, a static

structural analysis was performed using the Finite

Element Analysis (FEA) module in SimScale. The

frame model, derived from the SolidWorks assembly,

consists of PLA. A fine mesh was applied with

maximum edge length of 0.005 m and minimum of

0.001 m, generating approximately 944,000 nodes for

accurate stress resolution.

Figure 6: Meshing setup for structural FEA in SimScale.

The material properties used for PLA were:

Young’s Modulus of 3.5 GPa, Poisson’s ratio of 0.36,

and density of 1.24 g/cm³, while carbon fibre rods

were assumed to be rigid due to their high stiffness.

Boundary conditions included fixed supports at the

motor mount joints and load application points

corresponding to the EDF thrust force. A gravity

vector of 9.81 m/s² was applied globally to simulate

self-weight. Contacts between components were

defined as bonded, and the analysis assumes linear

elastic behavior with no material plasticity.

The simulation results revealed that the maximum

von Mises stress occurred at the junctions of the

carbon fibre rods and the PLA plates, especially

around the motor mount zones, with a peak stress of

approximately 48.3 MPa, which is below PLA’s typical yield strength (~60 MPa), leaving a positive stress margin. The displacement contour showed a

maximum deflection of around 1.83 mm. The Cauchy

stress distribution confirmed that stresses were

concentrated around bolt holes and load-bearing

corners, validating the structural importance of

reinforcement via carbon fibre rods. The factor of

safety was maintained above 1.25 throughout critical

regions.
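The stress margin can be expressed as a simple ratio. The sketch below assumes the factor of safety is defined as yield strength divided by peak von Mises stress, which is a common convention but not one the text states explicitly; the function name is hypothetical. With the nominal 60 MPa yield value, the ratio at the peak-stress location sits very close to the 1.25 threshold, so the outcome depends on the actual yield strength of the printed PLA.

```python
def factor_of_safety(yield_strength_mpa: float, peak_stress_mpa: float) -> float:
    """Factor of safety as yield strength over peak von Mises stress."""
    if peak_stress_mpa <= 0:
        raise ValueError("peak stress must be positive")
    return yield_strength_mpa / peak_stress_mpa


# Values from the FEA discussion: ~60 MPa yield, 48.3 MPa peak von Mises stress.
fos = factor_of_safety(60.0, 48.3)
print(f"Factor of safety at the peak-stress location: {fos:.2f}")
```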

Figure 7: FEA results showing von Mises stress

distribution, stress concentration near carbon fibre shafts,

Cauchy stress zones around load paths, and total

displacement.

3.2 CFD Analysis

To assess the aerodynamic performance of the UAV

during both horizontal flight and inclined wall-

climbing phases, a steady-state incompressible

turbulent flow simulation was performed using the k-

omega SST model in SimScale. An inlet velocity of

15 m/s was specified at the front face, while the outlet

was set to zero relative pressure, replicating realistic

cruise conditions. All solid surfaces were treated as

no-slip walls, and the far-field boundaries were

defined with slip conditions. The mesh comprised

over 3.2 million cells, ensuring sufficient resolution

for boundary layer development and wake

interaction.

Figure 8: CFD domain setup in SimScale with refined mesh

regions enclosing the UAV to capture detailed boundary

layer behavior and wake dynamics under 15 m/s inlet flow.


Viscous forces along the X-axis peaked at ~0.6 N,

reflecting aerodynamic drag opposing forward

motion, before stabilising in steady flow. Lateral and

vertical viscous forces were negligible, supporting

directional stability. Pressure moments were

strongest about the Y-axis (~1.2 N·m), indicating

possible yaw imbalance from asymmetric flow near

the EDF ducts, while X and Z-axis moments

remained negligible, confirming pitch–roll stability.

Porous moment analysis showed consistent Z-axis

resistance (~2 units), highlighting the need for yaw

compensation. Pressure forces were dominated by a

thrust-aligned X-axis component (~5 units), with

minimal fluctuations elsewhere.

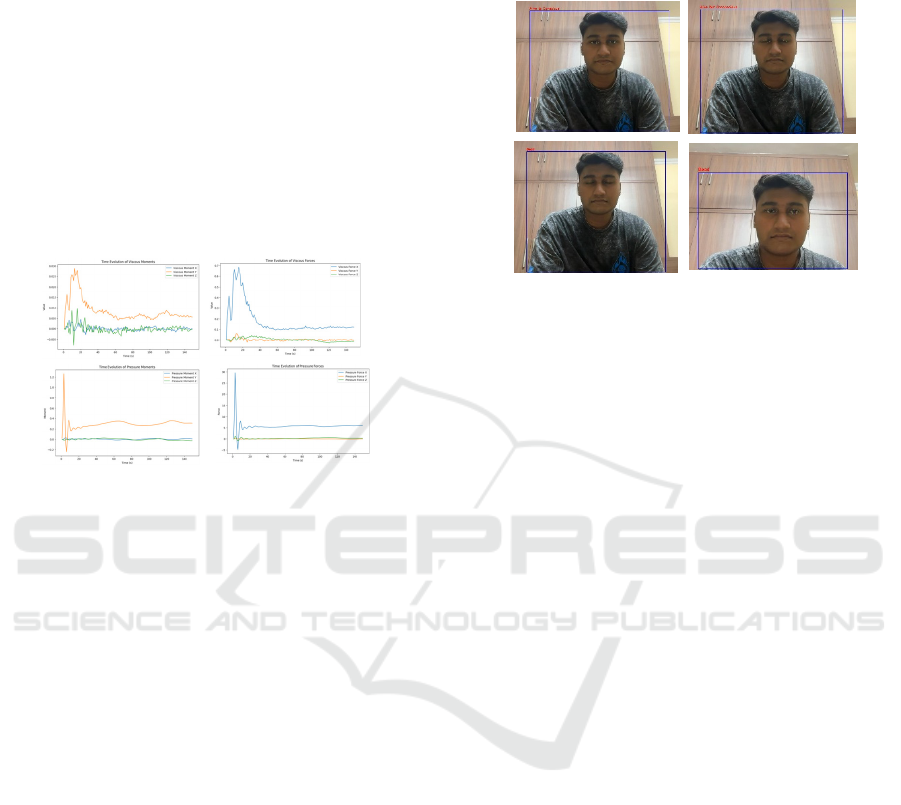

Figure 9: Time evolution plots of viscous and pressure

forces and moments from CFD simulation.

4 ALIVE DETECTION SYSTEM

In the domain of Urban Search and Rescue (USAR),

the ability to identify the presence and status of

human victims in obstructed or hazardous

environments remains a critical challenge. Past

approaches, as detailed in studies such as Ingle &

Chunekar (Ingle & Chunekar, 2016) and Kalaboina et

al. (Kalaboina et al., 2018), have relied on ultrasonic,

PIR, and low-resolution camera systems for motion

and heat-based human detection.

Traditional sensing methods struggle with static

or unconscious individuals and are prone to

environmental noise. To overcome this, we

implemented a computer vision-based alive detection

pipeline, simulated on a MacBook Air using its

webcam. The system integrates three modules: (i)

YOLOv8 for person detection, (ii) MediaPipe

FaceMesh to assess consciousness via Eye Aspect

Ratio (EAR), and (iii) Farneback optical flow to

detect breathing through pixel-level thoracic motion

over a temporal buffer. Each subject is classified as

Alive and Conscious (eyes open, breathing), Alive

and Unconscious (eyes closed, breathing), Dead (no

motion or eye activity), or Obstructed/Not Visible.

Outputs include timestamped detection logs, GPS

coordinates (simulated), and status saved in JSON

format for integration with geo-tagged rescue

interfaces.
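The decision logic described above can be sketched as follows. This is a minimal illustration, not the implemented pipeline: the six-landmark EAR formula is the commonly used definition, while the thresholds `ear_open` and `motion_min`, the scalar `breathing_motion` input (for example, a mean optical-flow magnitude over the temporal buffer), and the handling of "eyes open but no breathing detected" are all assumptions introduced here.

```python
import math
from enum import Enum


class VictimState(Enum):
    ALIVE_CONSCIOUS = "Alive & Conscious"
    ALIVE_UNCONSCIOUS = "Alive but Unconscious"
    DEAD = "Dead"
    OBSTRUCTED = "Obstructed/Not Visible"


def eye_aspect_ratio(eye):
    """EAR from six (x, y) eye landmarks p1..p6.

    p1 and p4 are the horizontal eye corners; (p2, p6) and (p3, p5)
    are the two vertical landmark pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def classify(person_visible, ear, breathing_motion,
             ear_open=0.2, motion_min=0.5):
    """Map detector outputs to one of the four states.

    ear_open and motion_min are placeholder thresholds (assumptions).
    ear may be None when no face landmarks were found.
    """
    if not person_visible:
        return VictimState.OBSTRUCTED
    eyes_open = ear is not None and ear > ear_open
    breathing = breathing_motion > motion_min
    if eyes_open:
        # Assumption: open eyes count as eye activity even if the
        # breathing signal is weak, so the subject is not marked Dead.
        return VictimState.ALIVE_CONSCIOUS
    if breathing:
        return VictimState.ALIVE_UNCONSCIOUS
    return VictimState.DEAD


open_eye = [(0, 0), (1, 1), (3, 1), (4, 0), (3, -1), (1, -1)]
print(classify(True, eye_aspect_ratio(open_eye), breathing_motion=1.0).value)
```

In practice the thresholds would need calibration against recorded footage, since EAR and flow magnitude both vary with camera distance and resolution.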

Figure 10: Output states from the simulated alive detection

system showing classification into "Alive & Conscious",

"Alive but Unconscious", and "Dead" based on eye and

breathing activity.

We propose embedding the detection pipeline into the

wall-climbing UAV using Intel RealSense D435i

(depth/obstacle detection), RealSense T265 (pose

estimation), and an MTF-01P optical flow sensor,

managed through a Raspberry Pi running ROS. The

alive_detector.py node will publish real-time human

status, confidence, and obstruction flags, with depth

discontinuity aiding occlusion detection when YOLO

fails.
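One possible shape for the status record that such a node could log or publish is sketched below. The field names, the `build_status_record` helper, and the example coordinates are hypothetical placeholders; the text specifies only that status, confidence, obstruction flags, timestamps, and GPS coordinates are recorded in JSON.

```python
import json
from datetime import datetime, timezone


def build_status_record(status, confidence, obstructed, lat, lon, timestamp=None):
    """Assemble one detection record as a JSON-serialisable dict."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    ts = timestamp if timestamp is not None else datetime.now(timezone.utc)
    return {
        "timestamp": ts.isoformat(),
        "status": status,
        "confidence": round(confidence, 3),
        "obstructed": bool(obstructed),
        "gps": {"lat": lat, "lon": lon},  # simulated coordinates in this sketch
    }


record = build_status_record("Alive but Unconscious", 0.87, False, 23.83, 91.28)
print(json.dumps(record, indent=2))
```

Keeping the record a plain dictionary makes it straightforward to write to a log file or to wrap in a ROS message later.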

5 CONTROL SYSTEM

Model Predictive Control (MPC) governs the UAV’s

behaviour across three stages: sticking, tilting, and

thrust-based climbing. In the sticking phase, MPC

controls wheel torque to ensure zero wall-plane

velocity. During tilting, it regulates EDF gear rotation

for smooth, constrained motion. In the thrust phase,

MPC coordinates EDF thrust and wheel torque to

stabilise climbing while maintaining roll, pitch, and

tilt limits. The UAV model, built using URDF/Xacro,

includes structural and inertial details, focusing on

EDF and tilt joints. The MPC state vector includes

position, velocity, orientation, tilt angle, and thrust,

with inputs as thrust vectors and torques. Stage-

specific cost functions and constraints (e.g., torque

bounds, joint limits) ensure energy-efficient, stable

tracking.

For perception, the UAV uses an Intel RealSense

camera indoors to capture RGB-depth data for alive

detection via optical flow and pose estimation

through odometry. Outdoors, GPS is used for


mapping victim locations, enabling robust operation

across environments. To validate the control

framework, initial testing was conducted on a

standard quadcopter using PX4’s MPC Multirotor

Rate Model with body rate and thrust inputs. A

figure-eight reference trajectory was tracked at

constant altitude by discretizing parametric

equations, confirming smooth, accurate tracking and thus establishing a baseline for extending MPC to the more complex tilt-rotor UAV platform.

$$x(t) = r\cos(\omega t), \qquad y(t) = r\sin(2\omega t), \qquad z(t) = z_0$$

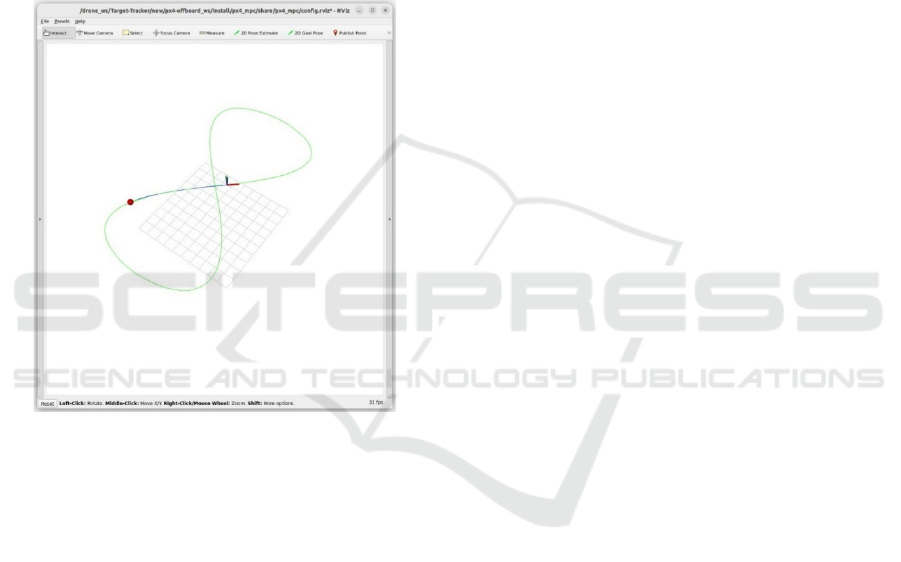

Figure 11: MPC-based quadcopter simulation in RViz

showing tracking of a figure-eight trajectory.
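The discretised figure-eight reference can be generated as in the sketch below, which samples x(t) = r cos(ωt), y(t) = r sin(2ωt), z(t) = z0 at a fixed timestep. The function names and the numeric values for r, ω, z0, and the timestep are illustrative assumptions.

```python
import math


def figure_eight(r, omega, z0, t):
    """Reference position (x, y, z) at time t."""
    return (r * math.cos(omega * t), r * math.sin(2.0 * omega * t), z0)


def sample_reference(r, omega, z0, dt, n):
    """Sample n reference waypoints spaced dt seconds apart, starting at t = 0."""
    return [figure_eight(r, omega, z0, k * dt) for k in range(n)]


waypoints = sample_reference(r=2.0, omega=0.5, z0=1.5, dt=0.1, n=5)
for wp in waypoints:
    print(tuple(round(c, 3) for c in wp))
```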

To ensure real-time tracking, the reference

trajectory was interpolated between sampled

waypoints so the MPC solver always received a

continuous setpoint. At each timestep, the control

loop retrieved the UAV’s state, computed tracking

error, constructed the MPC state vector and reference

horizon, and solved for optimal control inputs, which

were published as VehicleRatesSetpoint messages.

Visualisation in RViz was achieved using helper

functions that converted predicted state vectors into

PoseStamped messages and markers for intuitive path

comparison. Simulations demonstrated that the

quadcopter successfully tracked a figure-eight path

with smooth transitions and minimal deviation,

validating the MPC design. The green curve

(reference), red marker (current setpoint), and blue

path (predicted trajectory) in RViz confirmed

effective error minimisation and stability.
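The interpolation step mentioned above can be sketched as follows. Linear interpolation between uniformly spaced waypoints is an assumption of this sketch, since the text does not state which scheme was used; the function name is hypothetical.

```python
def interpolate_setpoint(waypoints, dt, t):
    """Linearly interpolate a setpoint at time t from waypoints spaced dt apart.

    Times before the first or after the last waypoint are clamped.
    """
    if not waypoints:
        raise ValueError("at least one waypoint is required")
    if t <= 0.0:
        return tuple(waypoints[0])
    idx = int(t // dt)
    if idx >= len(waypoints) - 1:
        return tuple(waypoints[-1])
    frac = (t - idx * dt) / dt  # position between waypoint idx and idx + 1
    a, b = waypoints[idx], waypoints[idx + 1]
    return tuple(pa + frac * (pb - pa) for pa, pb in zip(a, b))


reference = [(0.0, 0.0, 1.0), (2.0, 4.0, 1.0), (4.0, 0.0, 1.0)]
print(interpolate_setpoint(reference, dt=1.0, t=0.5))
```

Clamping at the ends keeps the solver supplied with a valid setpoint even if the control loop runs slightly past the last sampled waypoint.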

The MPC cost function minimized the error

between the predicted state and the figure-eight

reference while penalizing control effort:

$$\min_{u} \; \lVert x - x_{\text{ref}} \rVert^2 + \lVert u \rVert^2$$
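To make the structure of this objective concrete, the sketch below evaluates a tracking cost over a short horizon. It only computes the cost for given trajectories and does not solve the optimisation. Squared Euclidean norms, summation over the horizon, and the scalar weights `q` and `r` are assumptions of this sketch; the weights actually used are not given in the text.

```python
def tracking_cost(states, references, inputs, q=1.0, r=0.1):
    """Sum of weighted squared tracking error and squared control effort.

    states, references: sequences of equal-length state tuples over the horizon.
    inputs: sequence of control-input tuples over the horizon.
    q, r: scalar weights (placeholders for the MPC weighting matrices).
    """
    if len(states) != len(references):
        raise ValueError("states and references must cover the same horizon")
    error_term = sum(
        q * sum((x - x_ref) ** 2 for x, x_ref in zip(state, ref))
        for state, ref in zip(states, references)
    )
    effort_term = sum(r * sum(u * u for u in control) for control in inputs)
    return error_term + effort_term


predicted = [(0.0, 0.0), (0.9, 0.1)]
reference = [(0.0, 0.0), (1.0, 0.0)]
controls = [(0.5,), (0.2,)]
print(tracking_cost(predicted, reference, controls))
```

Raising `r` relative to `q` trades tracking accuracy for smoother, lower-effort control, which is the balance the stage-specific cost functions are meant to tune.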

6 CONCLUSION

The design and simulation of a wall-climbing UAV

specifically suited for search and rescue missions in

dangerous, fire-prone areas are presented in this

paper. The UAV's servo-driven tilt-rotor mechanism,

which has been verified by structural and

aerodynamic analyses with SimScale, allows it to

transition between flight and vertical surface

adhesion. Strength and maneuverability are provided by key elements such as high-thrust EDFs, a PLA frame, and carbon fibre reinforcements, while thermal resilience relies on the Nomex coating. Real-time sensors, a ROS-based

compute unit, and a specially designed alive detection

pipeline that can recognize human life signs using

YOLOv8, MediaPipe eye tracking, and optical flow-

based breathing detection are all integrated into the

UAV's electronics.

The detection system, successfully simulated on a

laptop, classifies individuals into four states: Alive &

Conscious, Alive but Unconscious, Dead, and

Obstructed. It logs results with GPS metadata for

mapping and rescue coordination. Future work will

involve full hardware integration, real-time testing

with ROS nodes, and validation of control strategies

through Model Predictive Control (MPC) in Gazebo.

The UAV will be fire-hardened using Nomex

insulation and tested in indoor and outdoor scenarios

to enable autonomous operation in real-world disaster

environments.

7 FUTURE WORK

In the next phase, we will focus on physical

prototyping and validation. A fully integrated UAV

will be fabricated using a PLA frame coated with

Nomex to improve fire resistance, with thermal

resilience assessed through heat flux and flame

exposure simulations. Indoor and outdoor trials will

evaluate surface stability, wall-climbing

performance, and autonomous navigation under

challenging conditions, including strong ambient

light where Intel RealSense sensors may

underperform. Real-time alive-detection inference

will be optimised on Raspberry Pi or NVIDIA Jetson


modules, with endurance testing incorporating power

consumption measurements. Limitations in EDF

efficiency and flight time will be addressed through

revised propulsion strategies and adaptive energy

management.

REFERENCES

Akhloufi, T., et al. (2020). Optical flow-based motion analysis for search and rescue. Computer Vision and Image Understanding, 190, 102–118. https://doi.org/10.1016/j.cviu.2019.102847

Dudek, J., & Jenkin, M. (2010). Computational principles of mobile robotics. Cambridge University Press.

Ingle, P., & Chunekar, R. (2016). Human detection in disaster zones using PIR and ultrasonic sensors. International Journal of Advanced Research in Electronics and Communication Engineering, 5(7), 1201–1205.

Joseph, L. (2018). Learning robotics using Python (2nd ed.). Packt Publishing.

Kalaboina, K., Mandala, S., & Kumar, M. S. V. (2018). Smart human detection robot using PIR and ultrasonic sensors. International Journal of Engineering Research & Technology, 6(13), 141–145.

Kim, S., et al. (2018). Biologically inspired wall-climbing robots. Annual Review of Control, Robotics, and Autonomous Systems, 1, 365–391. https://doi.org/10.1146/annurev-control-060117-105058

Lee, Y., et al. (2020). Fireproofing of UAV airframes using ceramic insulation. Journal of Intelligent & Robotic Systems, 97(2), 123–134. https://doi.org/10.1007/s10846-019-01076-7

Li, C., et al. (2017). Gecko-inspired climbing UAVs with adhesive microstructures. Bioinspiration & Biomimetics, 12(4). https://doi.org/10.1088/1748-3190/aa7e59

Li, Y., Gao, J., & Zhang, H. (2021). AI-driven UAV reconnaissance for post-disaster damage assessment. IEEE Transactions on Humanitarian Technology, 9(3), 145–152. https://doi.org/10.1109/THT.2021.3076548

Mishra, N. (n.d.). Emergency response and search and rescue. Retrieved from https://www.nikhileshmishra.com/emergency-response-and-search-and-rescue/

Mulgaonkar, C., et al. (2016). Power and weight optimization for micro aerial vehicles. IEEE Robotics & Automation Magazine, 23(4), 78–89. https://doi.org/10.1109/MRA.2016.2601932

Murphy, R. (2004). Trial by fire: Lessons from rescue robotics. IEEE Robotics & Automation Magazine, 11(3), 50–61. https://doi.org/10.1109/MRA.2004.1337848

Myeong, H., & Myung, H. (2020). A bio-inspired quadrotor with tiltable rotors for improved wall-perching and climbing. IEEE/ASME Transactions on Mechatronics, 25(4), 1809–1819. https://doi.org/10.1109/TMECH.2020.2969117

Patel, A., Deshmukh, R., & Srinivasan, M. (2020). Deep learning-enabled UAVs for victim detection in disaster zones. In International Conference on Intelligent Systems (pp. 231–238). IEEE.

Redmon, J., et al. (2018). YOLOv3: An incremental improvement. arXiv:1804.02767. https://arxiv.org/abs/1804.02767

Siegwart, R., Nourbakhsh, I. R., & Scaramuzza, D. (2011). Introduction to autonomous mobile robots (2nd ed.). MIT Press.

Sharma, A., & Mahapatra, A. (2021). Breath detection in disaster victims using vision-based optical flow. Procedia Computer Science, 186, 89–96. https://doi.org/10.1016/j.procs.2021.04.103

Spenko, M., et al. (2012). Electroadhesion technology for robotic perching. IEEE Transactions on Robotics, 28(5), 1079–1089. https://doi.org/10.1109/TRO.2012.2208773

Sun, L., et al. (2021). Quadcopters with active suction mechanisms for wall traversal. IEEE Access, 9, 11341–11352. https://doi.org/10.1109/ACCESS.2021.3050234

Tan, R., et al. (2021). Thermal survivability of UAVs in industrial fires. Sensors, 21(18), 1–14. https://doi.org/10.3390/s21186102

TheTechArtist. (n.d.). Robots for hazardous environments. Retrieved from https://thetechartist.com/robots-for-hazardous-environments/

Wang, H., et al. (2021). Magnetic wall-climbing drones for structural inspection. Automation in Construction, 133, 103–122. https://doi.org/10.1016/j.autcon.2021.103982

Zhang, F., et al. (2020). MediaPipe face mesh: A real-time face landmark system. Google AI Blog. https://ai.googleblog.com/2020/08/mediapipe-facemesh.html

Zhang, W., et al. (2021). Fire-resistant UAV design with silica-based aerogels. Materials Today: Proceedings, 36, 82–88. https://doi.org/10.1016/j.matpr.2020.04.373
