Leveraging ROS to Support LLM-Based Human-Robot Interaction

Walleed Khan

1 a

, Deeksha Chandola

1 b

, Enas AlTarawenah

1 c

,

Baran Parsai

1 d

, Ishan Mangrota

2 e

and Michael Jenkin

1 f

1

Electrical Engineering and Computer Science, Lassonde School of Engineering, York University, Toronto, Canada

2

Information Technology, Netaji Subhas University of Technology, New Delhi, India

Keywords:

Robot Control, Avatar-Based Interface.

Abstract:

Large Language Model (LLM)-based systems have found wide application in providing an interface between

complex systems and human users. It is thus not surprising to see interfaces between autonomous robots also

adopting this strategy. Many modern robot systems utilize ROS as a middleware between hardware devices,

standard software tools, and the higher level system requirements. Here we describe efforts to leverage LLM

and ROS to provide not only this traditional middleware infrastructure but also to provide the audio- and text-

based interface that users are beginning to expect from intelligent systems. A proof of concept implementation

is described as well as an available set of tools to support the deployment of LLM-based interfaces to ROS-

enabled robots and stationary interactive systems.

1 INTRODUCTION

As robots move out of the lab and into the world

there is an increasing need to focus on developing

robots that humans are willing to engage and inter-

act with. Supporting this interaction may involve de-

veloping robots that provide a face, either realistic or

cartoonish, to provide a focus for interaction. Adding

a face to a robot can be beneficial (Altarawneh et al.,

2020) but incorporating a visual display such as that

shown on the robot in Figure 1 requires providing a

software infrastructure that supports the integration

of the visual appearance (an avatar) with the robot.

Adding such a display also introduces the need to an-

imate the avatar and provide mechanisms to drive the

avatar with realistic speech. Early efforts, such as the

one shown in Figure 1 relied on pattern-based chat-

bot technology to provide responses to queries of the

robot. The development of Large Language models

(LLMs) provides a more effective mechanism to drive

the interaction.

There have been a number of efforts to leverage

the capabilities of Large Language and Foundational

a

https://orcid.org/0000-0002-8945-4329

b

https://orcid.org/0000-0003-3654-0351

c

https://orcid.org/0009-0004-8744-1139

d

https://orcid.org/0009-0004-1329-7429

e

https://orcid.org/0009-0008-0766-8966

f

https://orcid.org/0000-0002-2969-0012

Figure 1: Interacting with a robot equipped with a visual

avatar. Developing a system that provides such interaction

mechanisms requires a software infrastructure to support

generation and interaction with the embedded visual dis-

play.

Models to support the process of developing useful

and user friendly software for robot control. For ex-

ample, the ROScribe package (Technologies, 2005)

can be used to assist in the development of novel ROS

packages while Mower et al. (2024) describes a sys-

tem in which an LLM constructs plans from a set of

atomic actions and standard mechanisms to assem-

ble them. But LLMs also find application in terms

of transforming standard user interaction mechanisms

402

Khan, W., Chandola, D., AlTarawenah, E., Parsai, B., Mangrota, I. and Jenkin, M.

Leveraging ROS to Support LLM-Based Human-Robot Interaction.

DOI: 10.5220/0013831200003982

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 22nd International Conference on Informatics in Control, Automation and Robotics (ICINCO 2025) - Volume 2, pages 402-409

ISBN: 978-989-758-770-2; ISSN: 2184-2809

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

Figure 2: LLM-based interaction technology can be used to

support a stationary user interface display as shown here.

The software shown here supports multiple visual avatars.

User input is via visual and audio channels and the user can

choose between different animated avatars.

into specific robot actions. For example, Wang et al.

(2024) describes a system that monitors the user and

then uses an LLM to transduce multi-modal commu-

nication into commands to the robot. Here we are

interested in leveraging an LLM to provide a more

natural interface to a user interacting with a mobile

robot or with a stationary avatar-based system such as

the one shown in Figure 2.

Merging LLM-based systems with robot control

software involves integrating two very different mid-

dleware software architectures. A common strat-

egy in LLM-based systems is the use of a retrieval

augmented generation (RAG) approach (Gao et al.,

2024). In contrast, many modern robot systems uti-

lize ROS (Macenski et al., 2022) as a middleware to

structure the software infrastructure. ROS (the cur-

rent common version is ROS 2), is a message passing

paradigm in which messages are strongly typed and

individual nodes operate in parallel. Integrating these

two architectures can be challenging. ROS involves

a parallel message passing architecture while RAG-

informed LLM systems can be modelled as a query-

response architecture. Here we explore the integra-

tion of these two architectures to develop a system

that enables a robot or an avatar system using ROS to

leverage advances in LLM-based interaction.

The remainder of this paper is organized as fol-

lows. Section 2 describes previous efforts that inte-

grate LLMs in robot systems, with particular empha-

sis on leveraging LLMs to support human-robot inter-

action (HRI). Section 3 describes how ROS and RAG-

LLM systems can be integrated together. Section 4

provides a simple example of how this combined ar-

chitecture supports personalized HRI while retaining

a standard ROS environment for robot control. Fi-

nally, Section 5 summarizes the work and describes

ongoing work on RAG-LLM-ROS integration. The

Avatar2 software package described here is available

on GitHub at https://github.com/YorkCFR/Avatar2.

2 PREVIOUS WORK

There have been a number of efforts to leverage LLMs

to support robot-related tasks from path-planning to

learning from demonstration. See Wang et al. (2025)

and Jeong et al. (2024) for recent reviews. Here we

concentrate on the use of LLMs to support human-

machine interaction and human-robot interaction in

particular.

Perhaps the most commonly encountered use of

LLMs for human-machine interaction is via a chat-

bot, a program designed to simulate conversation with

a human. Very early chatbots (e.g., Eliza – Weizen-

baum 1966) were based on simple pattern matching.

However, since the introduction of LLMs, LLMs have

found wide application in the development of chat-

bots (see Dam et al., 2024 for a review). Fundamen-

tally, LLMs are trained on an extremely large cor-

pus of textual data and develop a model that given

a portion of a text stream can predict the next tex-

tual token that should appear. Starting with an ini-

tial text prompt, this process can be applied recur-

sively to generate a response to a given prompt. In-

ternally, LLMs utilize a transformer architecture and

an attention mechanism. The resulting trained archi-

tecture has typically been trained on a large and gen-

eral corpus of data. This results in an effective text-

based chatbot that can be used to generate a realistic

response in a conversation or provide an answer to a

given question. It is critical to recognize the limita-

tions of the approach, however. An LLM trained on

a general corpus of data, e.g., by scraping the inter-

net, will not necessarily contain only truths, and the

process of generalizing a response to a given token

sequence can result in hallucination in the response.

Detecting and dealing with hallucinations can be a

challenging task. See Luo et al. (2024) for details.

Given their ability to engage in conversations,

Leveraging ROS to Support LLM-Based Human-Robot Interaction

403

Embedding

Generation

Query LLM Response

Information

Vector

Database

Prompt+Relevant

embeddings

Figure 3: RAG-based interaction.

LLM-based systems have found application in sys-

tems that wish to engage the user in conversation. For

example, Shoa and Friedman (2025) leverages LLM

to deploy virtual humans in XR environments. In

terms of robots, Kim et al. (2024) explores the im-

pact of LLM-powered robots to engage in conversa-

tion with users, and reports that LLM-powered sys-

tems can enhance the user expectations in terms of

robot communication strategies. While Ghamati et

al. (2025) explore the use of personalized LLM-based

communication with robots using EEG data.

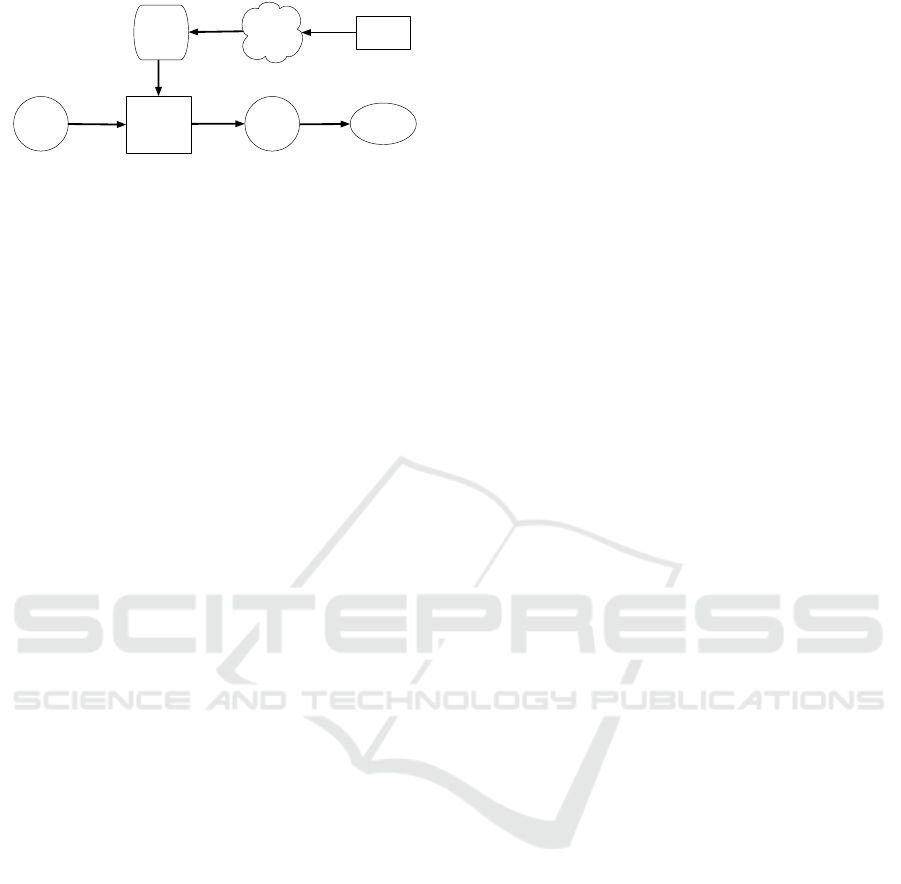

2.1 Retrieval-Augmented Generation

(RAG)

Tuning an LLM to a specific task can be accom-

plished in a number of different ways. Fine tuning

the network on data specific to a given domain is

a popular approach, however it can be difficult and

computationally very expensive to perform this fine

tuning. Another, less computationally expensive ap-

proach, is to provide within the prompt given to the

LLM specific textual information that is relevant to

the query. The basic concept is sketched in Figure 3.

Prior to interaction with the chatbot local informa-

tion is embedded in some representation and stored

in a vector database. This process typically requires

the local documentation to be chunked into manage-

able pieces related to the chatbot’s expected response

length. When a query is received by the chatbot,

the vector database is searched and relevant chunks

are retried from the database and integrated into the

LLM query. This focuses the LLM’s response on the

chunks recovered from the database. A number of dif-

ferent software libraries, including LangChain, have

been developed to support this process and provide

tools to encode and recover data chunks from the vec-

tor database.

3 INTEGRATING LLMS WITHIN

THE ROS ECOSYSTEM

A key difference between the RAG-LLM and ROS ar-

chitectures is the asynchronous message-passing ap-

proach of ROS and the synchronous query-response

nature of RAG-LLMs. Furthermore, LLMs are

known for their latency in generating a response.

Most commercial remote (cloud-based) LLMs must

deal with communication latency and potential com-

putational delays. Locally hosted LLMs avoid this

communication latency but must deal with more se-

vere local computational issues. In either case, deal-

ing with this latency involves structuring LLM query

responses within the ROS framework to retain liveli-

ness in the ROS environment.

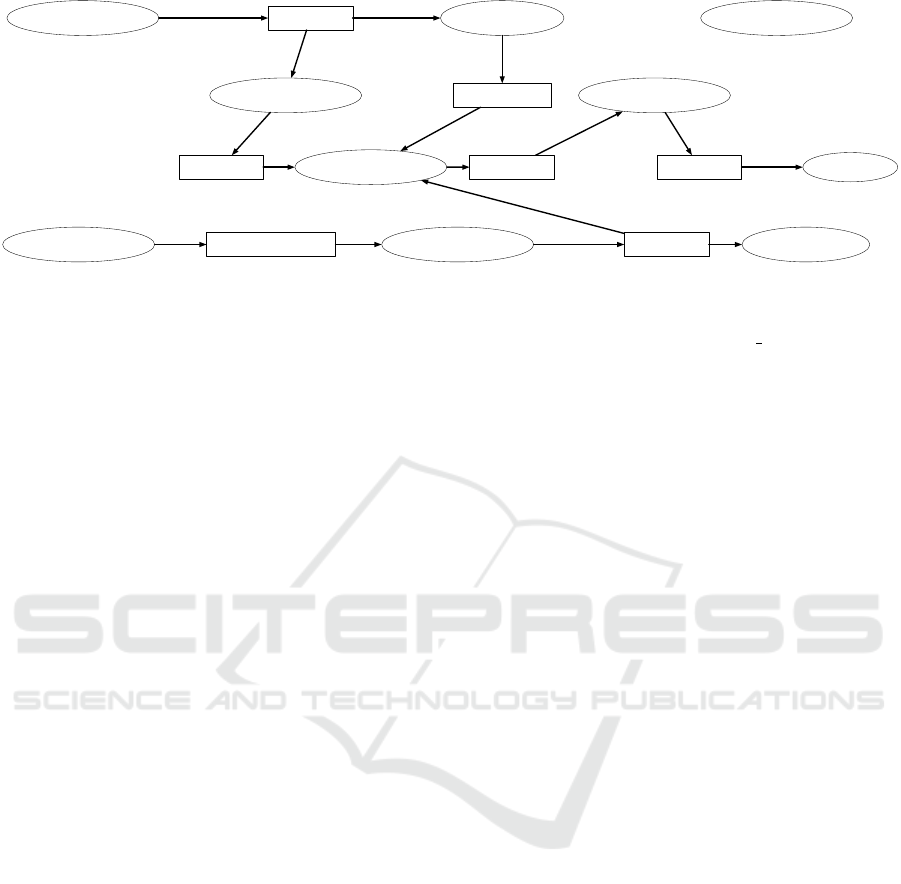

The basic structure of the approach is shown in

the ROS computation graph in Figure 4. This graph

shows only those nodes and messages related to HRI.

The core process takes input from the user, includ-

ing audio and visual information. This information

is processed asynchronously to monitor the user’s in-

teraction with the system. (Here we assume only a

single user communicates with the robot at a time.)

This information is then processed by a RAG-LLM

implemented in LangChain. Output from the RAG-

LLM’s is then used to provide textual output which is

converted to an audio signal which is rendered to the

user. While this rendering is taking place, the audio

input process is suppressed so that the robot does not

respond to its own utterances.

The prompt for the LLM is informed by a RAG

system that is tuned by the user to whom the system

is communicating as well as being informed by the

information contained within the ROS messaging sys-

tem.

3.1 Tailoring the Response to the

Individual

The system employs facial recognition capabilities to

identify known individuals and personalize interac-

tions based on their profiles. See Figure 5. This pro-

cess also provides information about the user interact-

ing with the robot that can be used to enhance the in-

teraction process, and even to assist in ignoring users

who are at some distance from the robot or avatar.

A standard face recognition system (dlib) based

on HOG and SVM is used to recognize faces. This

approach, introduced in Dalal and Triggs (2005) for

body detection has been successfully adapted to face

detection. (See Singh et al. 2020 for a review.) The

largest identified face is then compared against previ-

ously captured snapshots of participant faces associ-

ICINCO 2025 - 22nd International Conference on Informatics in Control, Automation and Robotics

404

/avatar2/rosbridge_websocket/avatar2/sound_capture

/avatar2/avatar_camera

/avatar2/in_raw_audio

/avatar2/avatar_camera/image_raw

/avatar2/analysis

/avatar2/sound_to_text

/avatar2/head_detect

/avatar2/sentiment_analysis

/avatar2/in_message

/avatar2/speaker_info

/avatar2/llm_engine

/avatar2/user_tracker

/avatar2/out_message

/avatar2/text_to_sound

/avatar2/out_raw_audio /ros_avatar

Figure 4: Basic structure of the approach. Standard ROS nodes are used to capture audio and visual interactions with the

robot and either a 3d avatar (as shown in Figure 6) or 2d animation (as shown in Figure 7) is used to present audio responses

and visual cues to the user. Shown here is a simple version of the approach using a 2D animation for presentation. The 3d

version uses rosbridge to connect to the Unity-based avatar which also has access to the output of the user tracker node which

provides information to the animation system so that the avatar can focus on the individual who is interacting with the robot.

ated with this task to enable the identification of the

individual who is communicating with the robot or

avatar. Identified faces which are not identified as be-

ing members of this set of faces are labelled as ‘un-

known’.

As the bounding box of the face is known, it is

possible to estimate a number of properties related

to the user’s visual interaction with the robot, includ-

ing an estimate of the distance from the robot to the

user. Based on the estimated distance, the nature of

the interaction is defined in terms of proxemics (Hall,

1966). Specifically, the distance to the user is char-

acterized as one of intimate, personal, social or pub-

lic. Face tracking data is aggregated across time to

characterize the current visual interaction between the

robot/avatar and the user as being one of starting,

continuing, or terminated. Conversations can be dis-

rupted (another individual has been identified as the

current speaker), idle (there is no one in the field of

view of the camera), or looking (the robot was com-

municating with an individual who has not been de-

tected for a short period of time). This information

enables the LLM to incorporate information about the

speaker (is this an ongoing conversation, for example)

when formatting the LLM prompt.

3.2 Dealing with User Sentiment

A wide range of methods exist for identifying a

speaker’s emotional state, including those based on

visual cues, textual analysis, and audio signals, as

well as more recent approaches that combine multi-

ple data types. Prior studies, such as Soleymani et

al. (2017) and Tripathi et al. (2019), provide exten-

sive reviews of these techniques. The basic approach

here is to assign a one-hot encoded vector over a

set of emotion labels – (Sadness, Excitement, Anger,

Neutral, Happy, Fear, or Surprise) – to each user ut-

terance. Although it would be possible to integrate

multiple cues to the perceived emotional content of

an utterance, for example, to combine text-based and

audio-based emotion detection, here we concentrate

on an audio signal-only approach that is based on the

work of Tripathi et al. (2019). Audio signals are de-

composed into a collection of audio features includ-

ing Fourier frequencies and Med-frequency Cepstral

Coefficients. These features are used within a deep

neural network involving stacked LSTMs to map the

audio signal onto the one-hot vector described above.

The audio-only system in Tripathi et al. (2019) re-

lied on the IEMOCAP dataset (Busso et al., 2008) for

training and a smaller set of sentiment classes. For

the system used here, we expand the set of sentiment

classes to the seven provided above and used both the

IEMOCAP and MELD (Poria et al., 2018) datasets to

increase the size of the training dataset.

3.3 Monitoring the Robot System’s

State

Having the LLM monitor the robot system is straight-

forward as the RAG infrastructure has complete ac-

cess to the ROS ecosystem. To take but one simple

example, to expose the current pose of the robot to

the LLM it is straightforward to add a statement such

as

The robot is currently at location

x=3.0m and y=2.0m.

to the prompt. This can be done either automatically

or more efficiently to only include such information if

the user’s query appears to contain key words such as

‘location’ or sequences like ‘where are you’. As the

RAG process is also aware of the user with whom it

Leveraging ROS to Support LLM-Based Human-Robot Interaction

405

Figure 5: Facial recognition system recognizing the indi-

vidual interacting with the system. The system is able to

retrieve the user information and pass it on in the ROS net-

work with their id, name, and their role.

is conversing, the nature of the response can be tuned

so that a response that is appropriate for a system de-

veloper (such as based on the property above) can be

replaced with a property such as

The robot is currently near the

kitchen.

assuming that the location (3.0,2.0) in the global co-

ordinate frame is near the kitchen.

3.4 Giving a Face to the Interaction

The avatar architecture described here draws on the

Extensible Cloud-based Avatar framework described

in Altaraweneh et al. (2021). This system serves as

a puppetry toolkit compatible with the Robot Oper-

ating System (ROS). An overview of the avatar sys-

tem architecture is depicted in Figure 4. In response

to human interaction, the avatar system integrates the

generated response into the avatar display. The ren-

dering system, as described in Altarawneh and Jenkin

(2020), combines speech audio with synchronized

lip motion and expressive facial animations to pro-

duce coherent avatar responses, which are integrated

into the display using idle loop animations. Rather

than defaulting to a static avatar pose between re-

sponses, the system employs a dynamic idle anima-

tion to maintain an animated presence and to assist in

masking any latency associated with responding to a

user query.

Early implementations of this avatar rendering re-

quired an in-house computational and rendering clus-

ter composed of multicore CPU servers equipped with

GPUs and ample memory and storage, capable of sup-

porting intensive animation tasks. The current imple-

mentation leverages standard video game assets that

support non-player-characters (NPCs) and the Unity

Figure 6: Sample avatars. Constructing and rigging avatars

is simplified by the existence of a number of standard

toolsets that enable construction. These toolsets also sup-

port lip/mouth synchronization with audio utterances and

the introduction of animations that mimic human (and other

avatar) mannerisms to provide a feeling of naturalness to

the agent being simulated.

Game Engine to render the avatar. These libraries

also simplify the deployment of the delay loops de-

scribed in Altaraweneh et al. (2021). Communica-

tion between the ROS and Unity spaces is provided by

the UnityRos library described in Codd-Downey et al.

(2014). Individual animated avatars are built using the

Ready Player Me

1

toolkit, and idle animations are

generated using Mixamo

2

. A view of a sample avatars

created in this manner is given in Figure 6.

The rendering process has complete access to the

ROS environment, including sentiment and visual in-

formation captured of the user. This would enable, for

example, the avatar to direct its gaze at the user inter-

acting with the robot and its avatar. It is important

to observe that the display does not have to resem-

ble a human (or even biological) entity. Figure 7, for

example, shows a alternative ‘avatar’ that consists of

a simple animated textured sphere whose appearance

changes with the intensity of the audio signal being

uttered.

4 AN EXAMPLE INTERACTION

To demonstrate the integrated system’s capabilities

in a real-world deployment, we present an interac-

tion with an avatar assistant operating at the welcome

desk at the ACME Hearing Clinic. The system shown

here is operating using a Llama-3.1-8B model de-

ployed on a dedicated RTX 4090 GPU-powered sys-

tem. While this smaller model provides near real-time

1

See readyplayer.me

2

See mixamo.com.

ICINCO 2025 - 22nd International Conference on Informatics in Control, Automation and Robotics

406

Figure 7: An alternative display ‘face’. Rather than utilizing

a 3d rendered avatar, other options are possible. Here an

animated sphere is used to focus the user’s attention. Four

frames of the animation are shown.

performance, larger language models introduce more

substantial delays in response generation, highlight-

ing the critical trade-off between model sophistication

and conversational responsiveness.

The system implements basic query caching dur-

ing ongoing interactions, storing queries until a pre-

defined cache size is full, to further reduce response

times for subsequent queries. Upon response gener-

ation, the LLM output is broadcast as a ROS topic

across the network infrastructure. The Unity-powered

avatar system, described in Section 3.4, subscribes to

these topic messages through the rosbridge websocket

connection.

When the Unity system receives an incoming

topic message containing the generated response, it

initiates synchronized lip movements and contextu-

ally appropriate gestures throughout the duration of

the utterance. These movements during speech repre-

sent a deliberate implementation of human conversa-

tional mannerisms, designed to enhance the perceived

authenticity of the interaction. Upon completion of

the spoken response, the avatar transitions to attentive

listening behaviors, including subtle head movements

mirroring the natural behavioral patterns observed in

human-to-human conversation.

The following transcript demonstrates these inte-

grated capabilities in action:

Mary (AI Assistant): Welcome to the

ACME Hearing Clinic! I’m Mary, one

of our friendly and knowledgeable staff

members. How can we help you today?

Do you have an appointment scheduled or

would you like to schedule one?

Walk-in Patient: “I don’t have an appointment

there, but I wanted to ask you about your clinic

hours and who will make it I might be able to see.”

Mary (AI Assistant): Hello! I’m

Mary, a helpful assistant at the

ACME Hearing Clinic. We’re open

from Monday to Friday at 9am till

5 pm. If you’d like to schedule an

appointment, please feel free to call

us at (555) 555-5555 or email us at

ACMEhearingclinic@nowhere.na. We’ll

be happy to accommodate your needs!

Please note that we may have limited

availability on evenings and weekends,

but we can try to work with you to find

a time that suits you best.

Walk-in Patient: “Can you tell me about who I

might be able to see?”

Mary (AI Assistant): Welcome to the

ACME Hearing Clinic! I’m Mary, and

I’ll be happy to help you. As for

who you might see here, we have a

team of experienced audiologists

and hearing specialists. They will

assess your hearing and provide

personalized recommendations for

treatment. You may also meet with our

speech-language pathologist (SLP) if

you’re experiencing difficulties with

communication or understanding speech

in noisy environments. We’ll do our

best to make sure you feel comfortable

and supported throughout the process.

Walk-in Patient: “Can you tell me about the resi-

dent doctor and the clinic?”

Mary (AI Assistant): I’m Mary, a helpful

assistant at the ACME Hearing Clinic.

I’d be happy to tell you about our

clinic and staff. Our clinic is

located on the main floor of Life

Labs at 99 Nowhere Road. We’re a

state-of-the-art facility with a team

of experienced audiologists and hearing

aid specialists. One of our resident

doctors, Dr. Patel, is an expert in

audiology and hearing health. She’s

been working with us for several years

and has helped countless patients

improve their hearing and quality of

life. We also have a team of friendly

and knowledgeable staff who are always

happy to help answer any questions

you may have about your hearing or our

services.

The system maintains consistent persona through-

out the exchange, with Mary appropriately greet-

ing the patient and maintaining a professional

yet welcoming tone. The RAG system retrieves

domain-specific information including precise oper-

ating hours, contact details, staff qualifications, and

facility location, demonstrating knowledge base inte-

gration. However, the conversation also reveals ar-

Leveraging ROS to Support LLM-Based Human-Robot Interaction

407

eas for improvement in discourse management. The

avatar exhibits redundant greeting behaviors, partic-

ularly noticeable in the second and third responses

where Mary re-introduces herself despite already hav-

ing established her identity. Currently, work is ad-

dressing this exact issue to reduce the redundant ex-

change and enhance the experience with a more so-

phisticated dialogue state tracking to maintain con-

versational coherence across multiple exchanges.

The response latency achieved through local GPU

processing enables natural conversational flow, while

the cached query system ensures that common in-

quiries about clinic hours and services are delivered

with minimal computational overhead. Throughout

each response, the Unity-powered avatar system syn-

chronizes speech with appropriate gestures and main-

tains attentive listening behaviors between utterances,

creating a compelling demonstration of naturalistic

human-robot interaction.

5 ONGOING WORK

The RAG-LLM-ROS system has been deployed for a

collection of different applications, including as mod-

elling a welcoming avatar for a medical clinic. The

use of ROS as a middleware enables the straightfor-

ward integration of visual and other sensor cues to

the chatbot, enabling a high level of personalization

without requiring a significant investment in software

to process sensor data.

Current development efforts are focused on lever-

aging the system’s facial recognition capabilities to

implement role-based access control and personal-

ized interaction management. Figure 5 showcases

the recognition capabilities of the system. The ex-

isting user identification system, which successfully

recognizes known individuals and characterizes inter-

action dynamics through proxemics analysis, is being

extended to support hierarchical user privileges and

administrative functions.

The system described here assumes that the

avatar/robot does not have to respond to commands

through executed actions. As a consequence the cur-

rent system assumes that the output of the LLM is a

text string that includes only the text to be presented

through the animated interface. Ongoing work is ex-

ploring including basic robot actions (e.g., move to a

given location) through the use of a structured LLM

response, e.g., by having the LLM respond using a

json structure that includes both motion commands as

well as text to respond with).

Using LLMs to power natural and responsive

Human-Robot Interaction (HRI) systems involves

managing the inherent latency of Large Language

Models (LLMs). The system described here utilizes

ROS to create a robust and asynchronous architec-

ture, yet delays arising from LLM processing, net-

work communication, or local computation remain in-

evitable. Such delays disrupt the flow of interaction

and negatively impact user experience as mentioned

in Schoenberg et al. (2014) and Zhang et al. (2024).

Current work on addressing this challenge seeks to

manage the user’s perception of latency by lever-

aging avatar behaviors that mimic human conversa-

tional cues during cognitive processing. Research has

demonstrated that conversational fillers and accompa-

nying gestures can significantly improve human-robot

interaction by making responses appear more natural

and reducing perceived delays (Wigdor et al., 2016).

Ongoing research is developing and validates tech-

niques that make unavoidable waiting periods feel

like a natural part of the interaction, thereby enhanc-

ing the avatar’s perceived responsiveness and natural-

ness.

ACKNOWLEDGEMENTS

The financial support the NSERC Canadian Robotic

Network, Mitacs Globalink and the IDEaS SentryNet

project are gratefully acknowledged.

REFERENCES

Altarawneh, E. and jenkin, M. (2020). System and method

for rendering of an animated avatar. US Patent

10580187B2.

Altarawneh, E., M., M. J., and MacKenzie, I. S. (2020).

Is putting a face on a robot worthwhile? In Proc.

Workshop on Active Vision and Perception in Human(-

Robot) Collaboration. Held in conjunction with the

29th IEEE Int. Conf. on Robot and Human Interactive

Communication.

Busso, C., Bulut, M., Lee, C.-C., Kazemzadeh, A., Mower,

E., Kim, S., Chang, J. N., Lee, S., and Narayanan,

S. S. (2008). IEMOCAP: Interactive emotional dyadic

motion capture database. Language Resources and

Evaluation, 42:335.

Codd-Downey, R., Forooshani, P. M., Speers, A., Wang, H.,

and Jenkin, M. (2014). From ROS to Unity: Lever-

aging robot and virtual environment middleware for

immersive teleoperation. In IEEE International Con-

ference on Information and Automation (ICIA), pages

932–936, Hailar, China.

Dalal, N. and Triggs, B. (2005). Histograms of oriented

gradients for human detection. In Proc. IEEE Inter-

national Conference on Computer Vision and Pattern

Recognition (CVPR), pages 886–893, San Diego, CA.

ICINCO 2025 - 22nd International Conference on Informatics in Control, Automation and Robotics

408

Dam, S. K., Hong, C. S., Qiao, Y., and Zhang, C. (2024).

A complete survey on LLM-based AI Chatbots. arXiv

2406.16937.

E. Altarawneh, E., Jenkin, M., and Scott MacKenzie, I.

(2021). An extensible cloud based avatar: Imple-

mentation and evaluation. In Brooks, A. L., Brah-

man, S., Kapralos, B., Nakajima, A., Tyerman, J., and

Jain, L. C., editors, Recent Advances in Technologies

for Inclusive Well-Being: Virtual Patients, Gamifica-

tion and Simulation, pages 503–522. Springer Inter-

national Publishing, Cham.

Gao, Y., Xiong, Y., Gao, X., Jia, K., Pan, J., Bi, Y., Dai, Y.,

Sun, J., Wang, M., and Wang, H. (2024). Retrieval-

augmented generation for large language models: A

survey. arXiv 2312.10997.

Ghamati, K., Banitalebi Dehkordi, M., and Zaraki, A.

(2025). Towards AI-powered applications: The de-

velopment of a personalised LLM for HRI and HCI.

Sensors, 25.

Hall, E. T. (1966). The Hidden Dimension. Anchor Books.

Jeong, H., Lee, H., Kim, C., and Shin, S. (2024). A sur-

vey of robot intelligence with large language models.

Appl. Sci., 14:8868.

Kim, C. Y., Lee, C. P., and Mutlu, B. (2024). Understanding

large-language model LLM-powered Human-Robot

Interaction. In Proc. ACM/IEEE International Con-

ference on Human-Robot Interaction (HRI), page

371–380.

Luo, J., Li, T., Wu, D., Jenkin, M., Liu, S., and Dudek,

G. (2024). Hallucination detection and hallucination

mitigation: An investigation. arXIV 2401.08358.

Macenski, S., Foote, T., Gerkey, B., Lalancette, C., and

Woodall, W. (2022). Robot operating system 2: De-

sign, architecture, and uses in the wild. Science

Robotics, 7:eabm6074.

Mower, C. E., Wan, Y., Yu, H., Grosnit, A., Gonzalez-

Billandon, J., Zimmer, M., Wang, J., Zhang, X., Zhao,

Y., Zhai, A., Liu, P., Palenicek, D., Tateo, D., Cadena,

C., Hutter, M., Peters, J., Tian, G., Zhuang, Y., Shao,

K., Quan, X., Hao, J., Wang, J., and Bou-Ammar, H.

(2024). ROS-LLM: A ROS framework for embod-

ied AI with task feedback and structured reasoning.

arXiv:2406.19741.

Poria, S., Hazarika, D., Majumder, N., Naik, G., Cambria,

E., and Mihalcea, R. (2018). Meld: A multimodal

multi-party dataset for emotion recognition in conver-

sations. arXiv preprint arXiv:1810.02508.

Schoenenberg, K., Raake, A., and Koeppe, J. (2014). Why

are you so slow? – misattribution of transmission de-

lay to attributes of the conversation partner at the far-

end. International Journal of Human-Computer Stud-

ies, 72(5):477–487.

Shoa, A. and Friedman, D. (2025). Milo: an LLM-based

virtual human open-source platform for extended re-

ality. Frontiers in Virtual Reality, 6.

Singh, S., Singh, D., and Yadav, V. (2020). Face recog-

nition using HOG feature extraciton and SVM classi-

fiere. Int. J. of Emerging Trends in Engineering Re-

search, 8.

Soleymani, M., Garcia, D., Jou, B., Schuller, B., Chang, S.-

F., and Pantic, M. (2017). A survey of multimodal sen-

timent analysis. Image and Vision Computing, 65:3–

14.

Technologies, R. C. (2005). Roscribe:

Create ROS packages using LLMs.

https://github.com/RoboCoachTechnologies/ROScribe,

accessed Jun-30-2025.

Tripathi, S., Tripathi, S., and Beigi, H. (2019). Multi-modal

emotion recognition on IEMOCAP dataset using deep

learning. arXiv 1804.05788.

Wang, C., Hasler, S., Tanneberg, D., Ocker, F., F. Jou-

blin, F., Ceravola, A., Deigmoeller, J., and Gienger,

M. (2024). LaMI: Large Language Models for Multi-

Modal Human-Robot Interaction. In Extended Ab-

stracts of the CHI Conference on Human Factors in

Computing Systems, page 1–10. ACM.

Wang, J., Shi, E., Hu, H., Ma, C., Liu, Y., Wang, X., Yao, Y.,

Liu, X., Ge, B., and Zhang, S. (2025). Large language

models for robotics: Opportunities, challenges, and

perspectives. Journal of Automation and Intelligence,

4:52–64.

Weizenbaum, J. (1966). Eliza—a computer program for

the study of natural language communication between

man and machine. Commun. ACM, page 36–45.

Wigdor, N., de Greeff, J., Looije, R., and Neerincx, M. A.

(2016). How to improve human-robot interaction with

conversational fillers. In 2016 25th IEEE Interna-

tional Symposium on Robot and Human Interactive

Communication (RO-MAN), pages 219–224.

Zhang, Z., Tsiakas, K., and Schneegass, C. (2024). Explain-

ing the wait: How justifying chatbot response delays

impact user trust. ACM Conversational User Inter-

faces 2024, page 1–16.

Leveraging ROS to Support LLM-Based Human-Robot Interaction

409