HOI-LCD: Leveraging Humans as Dynamic Landmarks Toward Thermal Loop Closing Even in Complete Darkness

Tatsuro Sakai, Yanshuo Bai, Kanji Tanaka, Wuhao Xie, Jonathan Tay Yu Liang and Daiki Iwata

University of Fukui, 3-9-1 Bunkyo, Fukui City, Fukui 910-0017, Japan

Keywords:

Thermal Loop Closing, Human as Landmark, HOI Features.

Abstract:

Visual SLAM (Simultaneous Localization and Mapping) is a foundational technology for autonomous navigation, enabling a robot to estimate its own pose while mapping diverse indoor and outdoor environments. Among its components, loop closure plays a vital role in maintaining global map consistency by recognizing revisited locations and correcting accumulated localization errors. Conventional SLAM methods have primarily relied on RGB cameras, leveraging feature-based matching and graph optimization to achieve high-precision loop detection. Despite their success, these methods are inherently sensitive to illumination and often fail in low-light or high-contrast scenes. Recently, thermal infrared cameras have gained attention as a robust alternative, particularly in dark or visually degraded environments. While various thermal-inertial SLAM approaches have been proposed, they still depend heavily on static structures and visual features, limiting their effectiveness in textureless or dynamic environments. To address this limitation, we propose a novel loop closure method that uses Human-Object Interaction (HOI) as dynamic-static composite landmarks in thermal imagery. Although humans are conventionally considered unsuitable as landmarks because they move, our approach overcomes this by introducing HOI feature points as landmarks. These feature points have both a human attribute, namely stable detection across the RGB and thermal domains via person tracking, and a static-object attribute, namely contact with visually consistent, semantically meaningful objects. This duality enables robust loop closure even in dynamic, low-texture, and dark environments, where traditional methods typically fail.

1 INTRODUCTION

Visual SLAM (Simultaneous Localization and Mapping) plays an essential role in both indoor and outdoor robotic navigation by enabling robots to simultaneously estimate their own position and construct a map of the surrounding environment. Within Visual SLAM, loop closing is a critically important function (Klein and Murray, 2007; Cummins and Newman, 2008; Mur-Artal et al., 2015a; Ali et al., 2022; Adlakha et al., 2020). It allows the system to recognize previously visited locations and integrate current observations with past map information, thereby mitigating the accumulation of localization errors and maintaining global map consistency. However, real-world environments are dynamic and pose significant challenges to loop closure: lighting changes, viewpoint variations, occlusions, and differences in image features can all significantly degrade the accuracy and robustness of the process.

Conventional loop closure methods in Visual SLAM have primarily been developed under the assumption of RGB cameras (visible-light sensors). These approaches typically extract image features such as SIFT (Lowe, 2004) or ORB (Rublee et al., 2011) and estimate camera motion and environmental structure from the geometric relationships among these features. Over time, the field has progressed from early filter-based techniques (Montemerlo et al., 2002) to more advanced graph optimization-based methods, such as ORB-SLAM (Mur-Artal et al., 2015b) and LSD-SLAM (Engel et al., 2014), achieving high-precision localization, robust mapping, and real-time performance. Nonetheless, RGB-based methods are fundamentally limited by their dependence on lighting conditions. In environments with low illumination or high dynamic range, extracting sufficient image features becomes difficult, leading to substantial degradation in overall system performance (Saputra et al., 2022).

To overcome the limitations of RGB cameras, thermal infrared (IR) cameras have attracted increasing attention for loop closure applications (Saputra et al., 2022; Shin and Kim, 2019; van de Molengraft et al., 2023; Li et al., 2025; Xu et al., 2025). Because they do not require visible light, thermal cameras offer a promising way to maintain localization and map consistency under visually degraded conditions such as darkness, smoke, or dust. Several studies have explored this direction, including Graph-Based Thermal-Inertial SLAM (Saputra et al., 2022), which combines thermal imagery with IMU data, and FirebotSLAM (van de Molengraft et al., 2023), which targets disaster scenarios with poor visibility due to smoke. Other approaches include WTI-SLAM (Li et al., 2025), designed for weakly textured thermal images, and SLAM in the Dark (Xu et al., 2025), which employs self-supervised learning to achieve accurate loop closure. Additionally, Sparse Depth Enhanced SLAM (Shin and Kim, 2019) leverages sparse depth from external sensors to improve localization accuracy. While these works demonstrate the potential of thermal-based approaches for loop closure in harsh conditions, many of them still rely heavily on static structures and feature points in the environment. Their robustness therefore remains limited in weakly textured or feature-sparse scenes.

Sakai, T., Bai, Y., Tanaka, K., Xie, W., Liang, J. T. Y. and Iwata, D.

HOI-LCD: Leveraging Humans as Dynamic Landmarks Toward Thermal Loop Closing Even in Complete Darkness.

DOI: 10.5220/0013775900003982

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 22nd International Conference on Informatics in Control, Automation and Robotics (ICINCO 2025) - Volume 2, pages 105-115

ISBN: 978-989-758-770-2; ISSN: 2184-2809

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

Figure 1: Humans are typically unsuitable as traditional landmarks due to their dynamic nature. However, this study focuses on Human-Object Interaction (HOI) with static objects, demonstrating their effectiveness as landmarks.

To address these challenges, this study proposes a novel loop closure method that uses humans, which belong to a small set of object categories commonly present in human environments, as dynamic landmarks (Fig. 1). While humans are inherently dynamic and may seem unsuitable as conventional landmarks, this work focuses on human-object interactions (HOIs) (Antoun and Asmar, 2023), leveraging the static objects involved in such interactions as reliable cues. In thermal imagery, human features are especially salient (Teixeira et al., 2010), which makes them highly effective for detecting HOIs. The proposed method operates in three main steps. First, a thermal-domain-specific human tracker is trained to accurately localize human regions within thermal images. Second, HOI features are extracted from the detected human regions. Third, loop closure is performed by matching these HOI features between query and reference images using RANSAC (Random Sample Consensus) (Chum et al., 2003). This approach aims to enable stable loop closure even in challenging environments with limited static features or with dynamic elements, where conventional feature-based methods often struggle.

2 RELATED WORK

Loop closing has developed into one of the core challenges of SLAM in robotics. In the early stages, Klein and Murray introduced PTAM (Parallel Tracking and Mapping), which separated real-time camera tracking from mapping, enabling high-precision mapping in small-scale environments (Klein and Murray, 2007). Subsequently, FAB-MAP, proposed by Cummins and Newman (Cummins and Newman, 2008), adopted a Bayesian approach based on the co-occurrence probability of visual features, significantly improving the reliability of loop detection. FAB-MAP 2.0 extended this framework to enable loop closure based solely on visual appearance in large-scale environments (Cummins and Newman, 2010). In 2015, ORB-SLAM (Mur-Artal et al., 2015a) gained widespread adoption by employing the lightweight and high-precision ORB descriptor, demonstrating robust performance in real-world applications. More recently, advances in deep learning have produced approaches that are learning-based from the feature extraction stage onward, as seen in NetVLAD (Arandjelovic et al., 2018), a CNN-based place recognition method. Furthermore, Bi-directional Loop Closure (Ali et al., 2022) considers temporal context in both the forward and backward directions, improving robustness. To ensure performance where visible light is unavailable, such as in darkness or smoke-filled environments, studies like DeepTIO (Adlakha et al., 2020) have explored integrating thermal imagery with inertial measurements, suggesting that multi-modal approaches adapted to specific sensing domains will be crucial in the future.

Recent loop closure techniques have advanced rapidly through progress in deep learning, neural rendering, semantics, and self-supervised learning. For instance, GLC-SLAM (Chen et al., 2024) integrates loop closure with a 3D scene representation based on Gaussian Splatting, achieving both photorealistic rendering and precise localization. SGLC (Wang et al., 2024) targets loop closure in LiDAR SLAM by introducing a coarse-fine-refine strategy using semantic graphs, enabling accurate pose correction and map consistency in large-scale environments. DK-SLAM (Qu et al., 2024) presents a unified deep learning-based pipeline from keypoint detection to loop closure, significantly improving localization accuracy and generalization with monocular cameras. Loopy-SLAM (Liso et al., 2024) incorporates loop closure into dense NeRF-based scene representations, allowing relocalization and map consistency without relying on voxel grids. A novel trend is introduced by AutoLoop (Lahiany and Gal, 2025), which automates the fine-tuning of existing SLAM models via agent-based curriculum learning, enabling fast and autonomous adaptation for loop closure. Additionally, 2GO (Lim et al., 2025) proposes an extremely efficient approach capable of detecting loops from just two viewpoints, dramatically reducing the computational cost of SLAM systems through lightweight multi-view inference. These cutting-edge studies enhance the robustness, scalability, and autonomy of loop closure, each leveraging different sensors, representations, and learning strategies.

Loop closure based on thermal infrared cameras has recently gained attention as a robust solution for maintaining localization and map consistency in dark, smoky, or dusty environments where visible light cannot be used. Graph-Based Thermal-Inertial SLAM (Saputra et al., 2022) integrates thermal imagery with IMU data and applies probabilistic neural pose graph optimization to achieve both accuracy and robustness, demonstrating effectiveness across various scenarios, including indoor and outdoor handheld cameras and SubT tunnels. Sparse Depth Enhanced SLAM (Shin and Kim, 2019) improves loop consistency by supplementing direct thermal SLAM with sparse depth measurements from external sensors, demonstrating the effectiveness of multimodal fusion. FirebotSLAM (van de Molengraft et al., 2023), designed for smoke-obscured disaster environments, significantly improves situational awareness by generating maps and closing loops using thermal imagery alone under zero visibility. WTI-SLAM (Li et al., 2025) addresses the difficulty of loop detection in weakly textured thermal images by introducing a specialized feature extraction and tracking algorithm, enabling loop closure where traditional visible-light SLAM methods fail. SLAM in the Dark (Xu et al., 2025) introduces a unified deep model that learns pose, depth, and loop closure entirely from thermal imagery in a self-supervised manner, achieving high-accuracy loop closure using only thermal sensing. These studies point to new directions for robust loop closure in extreme environments through sensor fusion and learning-based approaches grounded in thermal infrared imaging.

3 PROBLEM FORMULATION

Following prior work, we formulate loop closure as an image retrieval problem. Given a query image captured at the current location, the objective is to find an image in a database of previously observed images that corresponds to the same physical location. This formulation casts loop closure as a place recognition task, in which a successful match indicates that a loop has been detected. This perspective enables the integration of image retrieval techniques, such as feature extraction, similarity computation, and geometric verification, into the SLAM pipeline to achieve robust loop detection. Under this formulation, loop closure performance is typically assessed using precision and recall. Precision is the proportion of correctly identified loop closures among all retrieved results, reflecting the system's ability to minimize false positives. Recall is the proportion of actual loop closures that are successfully detected, reflecting the system's ability to avoid false negatives. These metrics often trade off against each other, and the retrieval system must balance them according to the characteristics of the target environment and the requirements of the task. To capture both precision and recall characteristics in a single metric, we adopt a ranking-based evaluation criterion, the Mean Reciprocal Rank (MRR), in our experiments. For each query, MRR takes the reciprocal of the rank at which the first correct match appears in the retrieval results and averages this value over all queries, thereby providing a balanced view of detection accuracy and reliability.
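As a concrete reference for the metric, the following minimal Python sketch computes MRR from per-query rankings. It assumes that ranks start at 1, that any database frame in a query's ground-truth set counts as a correct match, and that a query whose list contains no correct match contributes 0; the paper does not spell out these conventions, and the function name is illustrative.

```python
def mean_reciprocal_rank(ranked_lists, relevant_sets):
    """Mean Reciprocal Rank over a batch of queries.

    ranked_lists:  for each query, database IDs ordered from best to worst.
    relevant_sets: for each query, the set of ground-truth matching IDs.
    A query with no correct match anywhere in its list contributes 0.
    """
    if len(ranked_lists) != len(relevant_sets):
        raise ValueError("need exactly one ground-truth set per query")
    if not ranked_lists:
        return 0.0
    total = 0.0
    for ranking, relevant in zip(ranked_lists, relevant_sets):
        for rank, db_id in enumerate(ranking, start=1):  # rank 1 = top result
            if db_id in relevant:
                total += 1.0 / rank  # only the first correct match counts
                break
    return total / len(ranked_lists)


# Correct matches at ranks 1 and 4, plus one miss: (1 + 0.25 + 0) / 3
print(mean_reciprocal_rank([[3, 1, 2], [5, 6, 7, 8], [9]], [{3}, {8}, {1}]))
```

Because only the first correct hit counts, MRR rewards placing a true revisit at the very top of the list, which is what matters when the top-ranked candidate is passed on as the loop closure.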

It should be noted, however, that loop closure differs from general image retrieval in several ways. First, in practice, loop closure is not triggered for every incoming image. Instead, a pre-processing step ensures that only sufficiently feature-rich query images are selected, avoiding loop detection attempts on low-texture or ambiguous inputs. Second, a post-processing step often evaluates the confidence of the retrieved result; if the confidence is low, the result is discarded and not used in the subsequent SLAM optimization. These pre- and post-processing steps are crucial for maintaining the stability and reliability of SLAM in real-world conditions. For simplicity, the proposed method in this work does not explicitly include such pre- or post-processing steps. We assume that a query image is already suitable for loop detection and that the retrieved results are used directly for matching and verification.

Traditional loop closure approaches have primarily used static objects in the environment as landmarks. This strategy benefits from the fixed nature of such landmarks, which allows consistent and reliable feature extraction. Additionally, decades of research have contributed to the maturity and robustness of this approach. However, it also has several limitations. Variations in lighting conditions, viewpoints, seasons, and time of day can significantly alter the visual appearance of scenes, introducing ambiguity and leading to false positives or missed detections. Moreover, in appearance-based SLAM systems that lack absolute distance measurements, aligning local maps built at different scales becomes difficult due to scale ambiguity. In large-scale environments, the growing size of the map increases computational costs, and moving objects or temporally varying structures can further destabilize the system and lead to incorrect loop closures.

To address these limitations, we propose a novel loop closure method that employs dynamic entities, specifically humans, as landmarks. One notable advantage of this approach is that humans exhibit clear thermal signatures in thermal images, making them relatively easy to distinguish from their surroundings. This opens up the possibility of effective loop detection in dark environments, where humans are prominent landmarks. Using humans as landmarks also improves the adaptability of SLAM systems to dynamic environments, which are challenging for traditional static-object-based methods. Furthermore, human motion patterns and behaviors may offer additional cues for more complex scene understanding and localization.

However, this approach also presents significant challenges. Thermal images are often noisy, and human appearance can vary substantially with posture, clothing, and carried items, making detection and re-identification difficult. Humans constantly move, appear, and disappear, introducing high variability and instability when used as landmarks. As a result, achieving high-precision loop detection remains extremely challenging, and a single misidentification can severely compromise the entire system. Limitations of thermal cameras further complicate the problem. Thermal imagery lacks color information and often provides limited shape or semantic detail, making it harder to extract meaningful features than from RGB imagery. Additionally, variations in body temperature can alter thermal signatures over time, hindering consistent feature extraction.

4 APPROACH

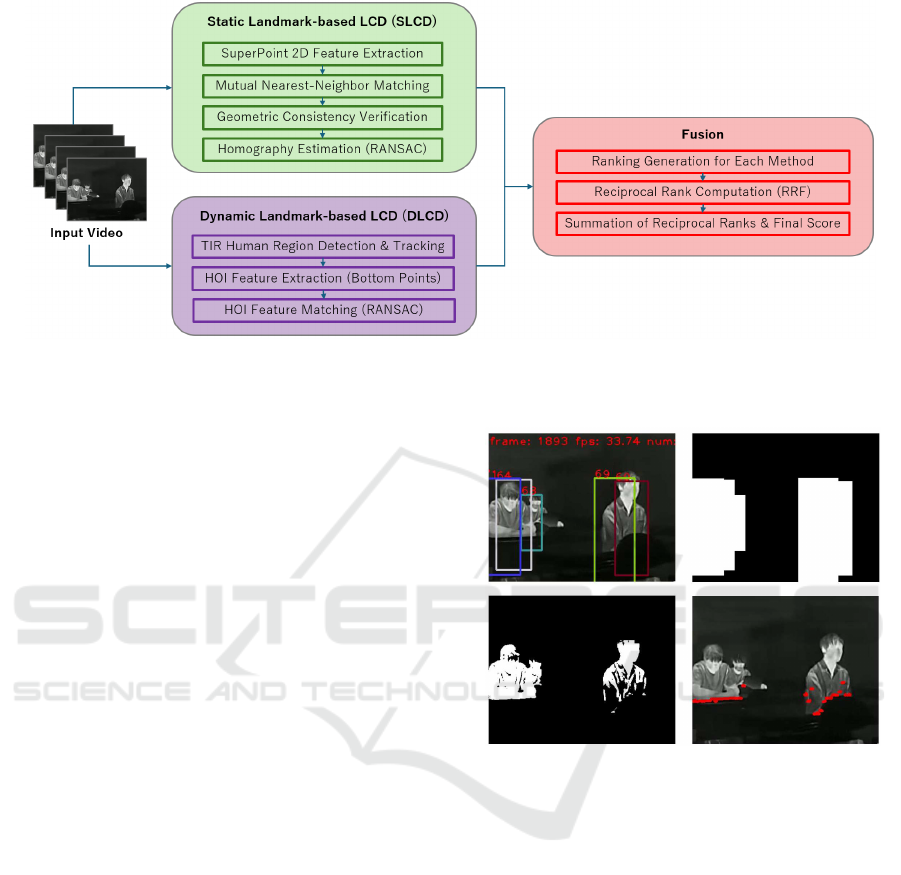

Figure 2 illustrates the system architecture of the proposed method. The system consists of three main components: static landmark-based loop closure detection (SLCD), dynamic landmark-based loop closure detection (DLCD), and an information fusion module that integrates the outputs of these two LCD modules.

The SLCD component can employ any existing method, such as conventional thermal SLAM. In this study, as described in Section 4.1, we use a classical approach based on SuperPoint matching. The DLCD component is our newly introduced dynamic landmark-based LCD, detailed in Section 4.2. The information fusion module fuses the outputs of the two LCD modules; while it is not the main focus of this study, we provide a simple implementation example in Section 4.3.

4.1 Static Landmark-Based LCD

As a conventional static landmark-based LCD (SLCD) method, this study adopts a feature matching approach that uses only the 2D keypoint coordinates extracted by the SuperPoint feature extractor (DeTone et al., 2018). The procedure is as follows.

4.1.1 Mutual Nearest-Neighbor Matching

For each feature point in the query image, the nearest feature point in the reference image is found, and vice versa. Only feature point pairs that are mutually nearest in both directions are retained as matches, which effectively reduces false matches.
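The mutual check can be sketched in plain Python as follows. Because Section 4.1 states that only 2D coordinates are used, this sketch assumes that "nearest" means Euclidean distance between keypoint positions; the function name is hypothetical, and the brute-force search is quadratic, so a spatial index such as a k-d tree would be the usual choice for large keypoint sets.

```python
import math


def mutual_nearest_neighbors(query_pts, ref_pts):
    """Return (i, j) index pairs that are mutual nearest neighbours.

    query_pts, ref_pts: sequences of (x, y) keypoint coordinates.
    Distance is Euclidean in image coordinates (an assumption, see text).
    """
    if not query_pts or not ref_pts:
        return []

    def nearest(p, candidates):
        # Index of the candidate closest to p; the first one wins on ties.
        return min(range(len(candidates)), key=lambda k: math.dist(p, candidates[k]))

    q_to_r = [nearest(p, ref_pts) for p in query_pts]
    r_to_q = [nearest(p, query_pts) for p in ref_pts]
    # Keep a pair only if each point is the other's nearest neighbour.
    return [(i, j) for i, j in enumerate(q_to_r) if r_to_q[j] == i]


query = [(0, 0), (10, 0), (20, 20)]
ref = [(1, 0), (11, 1), (100, 100)]
print(mutual_nearest_neighbors(query, ref))  # (20, 20) -> (11, 1) is one-sided, so dropped
```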

4.1.2 Geometric Consistency Verification

Matches are filtered by requiring the difference between their vertical coordinates to stay within a predefined threshold set relative to the image height, which enhances the geometric validity of the matching results.
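A minimal sketch of this filter follows, assuming the threshold is a fraction of the image height: the paper only says the threshold is predefined and based on the image height, so the `ratio` value below is a hypothetical placeholder. A filter of this kind is plausible for a ground robot with a fixed camera mount, where a revisited structure should reappear at a similar image row.

```python
def filter_vertical_consistency(matches, query_pts, ref_pts, image_height, ratio=0.1):
    """Keep matches whose vertical offset is small relative to the image height.

    matches: (i, j) index pairs into query_pts / ref_pts, each point (x, y).
    ratio:   hypothetical fraction of the image height used as the threshold.
    """
    max_dy = ratio * image_height
    return [(i, j) for i, j in matches
            if abs(query_pts[i][1] - ref_pts[j][1]) <= max_dy]


# With a 192-pixel-high thermal image and ratio 0.1, offsets up to 19.2 px pass.
print(filter_vertical_consistency([(0, 0), (1, 1)], [(0, 10), (5, 100)],
                                  [(3, 12), (5, 150)], image_height=192))
```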

Figure 2: The SLCD module performs loop closure detection using static landmarks based on conventional methods like SuperPoint matching. The DLCD module, newly proposed in this research, performs loop closure detection using dynamic landmarks. The Information Fusion module integrates the results from both the SLCD and DLCD modules.

4.1.3 Homography Estimation via RANSAC

Random samples of matched feature pairs are selected to estimate a homography (planar projective transform). All matched points are then projected by this homography, and points whose reprojection error is below a threshold are considered inliers. This process is repeated multiple times, and the homography with the largest inlier set is chosen as the final model.
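One iteration of this procedure, estimating a homography from sampled correspondences with the Direct Linear Transform (DLT, the estimator named in Section 5.2) and counting inliers by reprojection error, might look like the NumPy sketch below. The function names, the rank and determinant checks for degenerate input, and the 5.0-pixel default (taken from Section 4.2.2) are illustrative choices; the coordinates are also not normalized, as Hartley-style DLT implementations usually do.

```python
import numpy as np


def homography_dlt(src, dst):
    """Estimate H (3x3) such that dst ~ H @ [x, y, 1] from >= 4 point pairs.

    Returns None when the correspondences do not determine a valid homography.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or len(src) < 4:
        return None
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    A = np.array(rows)
    if np.linalg.matrix_rank(A) < 8:
        return None  # degenerate configuration, e.g. collinear points
    # (Least-squares) null vector: right singular vector of the smallest singular value.
    H = np.linalg.svd(A)[2][-1].reshape(3, 3)
    if abs(H[2, 2]) < 1e-12:
        return None
    H = H / H[2, 2]
    if abs(np.linalg.det(H)) < 1e-12:
        return None  # singular homography
    return H


def count_inliers(H, src, dst, threshold=5.0):
    """Number of pairs whose reprojection error under H is below threshold (px)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    proj = np.hstack([src, np.ones((len(src), 1))]) @ H.T
    with np.errstate(divide="ignore", invalid="ignore"):
        err = np.linalg.norm(proj[:, :2] / proj[:, 2:3] - dst, axis=1)
        return int(np.sum(err < threshold))
```

Repeating these two calls on random four-point samples and keeping the hypothesis with the most inliers gives the RANSAC loop described above.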

4.2 Dynamic Landmark-Based LCD

To overcome the limitations discussed previously, several prior works offer important insights. In the domain of loop closure and image change detection (LCD-ICD), studies show that if the relative position between landmark A and object B remains invariant, the pair can be considered “pseudo-static,” allowing its use as a feature in dynamic environments over short SLAM durations. Research on Human-Only SLAM (Tanaka, 2002) treats occlusion boundaries between humans and occluding objects as landmarks, mitigating false negatives by exploiting the intermediate properties between dynamic and static objects. Building on these ideas, HO3-SLAM (Human-Object Occlusion Ordering SLAM) (Liang and Tanaka, 2024) effectively uses occlusion boundary points as keypoints for loop closure (see Fig. 1). HO3-SLAM notably exploits the stability of human attributes across the RGB and thermal (T) domains and the reliability of static object attributes in appearance, shape, and semantics, providing valuable guidance for robust loop closure in dynamic environments.

4.2.1 HOI Feature Extraction

The proposed dynamic landmark-based loop closure detection proceeds as follows. In each image frame, human regions are detected in the thermal image. Detected bounding boxes are tracked and assigned unique human IDs. A pixel-wise AND operation between the bounding boxes and the human regions then identifies precise human areas with their IDs.
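The AND step can be sketched with NumPy as below. It assumes the thermal frame is an 8-bit image in which people are bright (as with the 20-30 °C display range in Section 5.1) and that the tracker outputs `(track_id, x1, y1, x2, y2)` boxes; the box format, the `human_regions` name, and the threshold of 200 are illustrative assumptions, not values from the paper. In this sketch, pixels covered by overlapping boxes end up in both masks.

```python
import numpy as np


def human_regions(thermal, tracks, intensity_threshold=200):
    """Per-ID human masks: tracked bounding box AND thresholded hot pixels.

    thermal: 2D uint8 array (one 8-bit thermal frame).
    tracks:  iterable of (track_id, x1, y1, x2, y2) with x2, y2 exclusive.
    intensity_threshold: hypothetical value; the paper gives no number.
    """
    hot = np.asarray(thermal) >= intensity_threshold  # temperature thresholding
    height, width = hot.shape
    regions = {}
    for track_id, x1, y1, x2, y2 in tracks:
        # Clip the box to the image before rasterizing it.
        x1, x2 = max(0, int(x1)), min(width, int(x2))
        y1, y2 = max(0, int(y1)), min(height, int(y2))
        box = np.zeros_like(hot)
        box[y1:y2, x1:x2] = True
        regions[track_id] = np.logical_and(box, hot)  # pixel-wise AND
    return regions
```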

Figure 3: As a simple example of an HOI feature, this experiment utilizes the bottom-most point formed at the boundary between the human and the object. Top-left: Human tracking result; Top-right: Temperature thresholding; Bottom-left: AND operation; Bottom-right: HOI feature point.

For each human ID, at every horizontal pixel coordinate x within the bounding box, the pixel belonging to the human region with the largest vertical coordinate y (closest to the image bottom) is selected (Fig. 3). These “bottom points” serve as Human-Object Interaction (HOI) feature points, which have two key properties: (1) human attributes, being stably detectable via tracking in both the RGB and thermal domains, and (2) static-object attributes, being invariant in appearance, shape, and semantics, so that they function as reliable landmarks. The set of HOI feature points is defined as landmarks and recorded in the map along with frame IDs. The landmarks extracted from the current frame are matched against previously recorded landmarks. A matching score is computed between the landmark sets of the current and past frames using RANSAC (Section 4.2.2) to evaluate matching reliability, and loop closure candidates are ranked by these scores.
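The bottom-point rule can be written compactly with NumPy. This sketch takes one per-ID boolean mask, returns the lowest human pixel in each occupied column, and wraps the points of all tracked people in a per-frame record; the `frame_landmarks` record layout is a hypothetical stand-in for the map entry the paper describes, and matching and scoring are left to the RANSAC step.

```python
import numpy as np


def bottom_points(region):
    """HOI feature points of one human mask: lowest human pixel per column.

    region: 2D boolean mask (True = human pixel), e.g. one per-ID region.
    Returns a list of (x, y) with y growing toward the image bottom.
    """
    region = np.asarray(region, dtype=bool)
    n_rows = region.shape[0]
    points = []
    for x in np.flatnonzero(region.any(axis=0)):  # columns containing the person
        # argmax over the flipped column finds the last (lowest) True pixel.
        y = n_rows - 1 - int(np.argmax(region[::-1, x]))
        points.append((int(x), y))
    return points


def frame_landmarks(frame_id, regions):
    """Hypothetical map record: bottom points of every tracked person in a frame."""
    points = [p for track_id in sorted(regions) for p in bottom_points(regions[track_id])]
    return {"frame_id": frame_id, "points": points}
```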

4.2.2 RANSAC Feature Matching

Because outliers are inevitable in feature matching, we apply the RANSAC algorithm to increase the reliability of the estimated geometric transform. The algorithm starts by randomly selecting four non-repeating correspondence pairs (the minimum required to estimate a homography) from the initial matching set between the query and database images. A tentative homography matrix is computed from these samples; if the computation is numerically degenerate (e.g., the system is singular), the trial is discarded. The homography model projects points from the reference image to the query image, and correspondences with a reprojection error below the inlier threshold ($T_{\text{inlier}} = 5.0$ pixels) are considered inliers. The process iterates up to a maximum number of trials ($N_{\text{trials}} = 100$) to find the homography with the largest inlier count. If fewer than four initial matches exist, homography estimation is aborted and RANSAC terminates.
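The procedure above can be sketched with NumPy as follows, using the stated parameters ($T_{\text{inlier}} = 5.0$ px, $N_{\text{trials}} = 100$). This is an illustrative reimplementation, not the authors' code: the function names, the rank and determinant tests used to detect singular hypotheses, and the optional `seed` for reproducibility are assumptions. The returned inlier count is the kind of matching score by which loop closure candidates are ranked.

```python
import numpy as np


def _dlt_homography(src, dst):
    """Homography from >= 4 pairs via DLT; None if the sample is degenerate."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    A = np.array(rows)
    if np.linalg.matrix_rank(A) < 8:
        return None
    H = np.linalg.svd(A)[2][-1].reshape(3, 3)
    if abs(H[2, 2]) < 1e-12:
        return None
    H = H / H[2, 2]
    return None if abs(np.linalg.det(H)) < 1e-12 else H


def ransac_homography(ref_pts, query_pts, t_inlier=5.0, n_trials=100, seed=None):
    """Fit H mapping reference points to query points; return (H, inlier_count).

    ref_pts[i] <-> query_pts[i] is the i-th tentative match. Returns (None, 0)
    when fewer than four matches exist (the early abort described above).
    """
    ref = np.asarray(ref_pts, dtype=float).reshape(-1, 2)
    qry = np.asarray(query_pts, dtype=float).reshape(-1, 2)
    n = len(ref)
    if n < 4 or len(qry) != n:
        return None, 0
    rng = np.random.default_rng(seed)
    ref_h = np.hstack([ref, np.ones((n, 1))])
    best_h, best_count = None, 0
    for _ in range(n_trials):
        sample = rng.choice(n, size=4, replace=False)  # four distinct pairs
        H = _dlt_homography(ref[sample], qry[sample])
        if H is None:
            continue  # singular hypothesis: this trial is invalid
        proj = ref_h @ H.T
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(proj[:, :2] / proj[:, 2:3] - qry, axis=1)
            count = int(np.sum(err < t_inlier))
        if count > best_count:
            best_h, best_count = H, count
    return best_h, best_count
```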

4.2.3 Training Thermal Infrared (TIR) Human Tracker

Tracking humans in darkness is critical for applications including surveillance, security, and disaster rescue. Conventional RGB cameras fail under low illumination, necessitating thermal infrared (TIR) cameras. Developing a TIR human tracker requires extensive annotated data, but manual labeling is costly. We propose a training method that reduces this burden by synchronously capturing RGB and TIR videos from co-located cameras. A pretrained high-performance RGB tracker automatically generates bounding boxes and human IDs on the RGB videos, creating pseudo-labels. These pseudo-labels, paired with the corresponding TIR frames, are used to train the TIR tracker, enabling robust human tracking in darkness without manual annotation. Both the RGB and TIR trackers use ByteTrack (Zhang et al., 2022) as their backbone.
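The pseudo-labelling step amounts to pairing each TIR frame with the RGB frame closest in time and copying the RGB tracker's boxes and IDs. The sketch below assumes the two cameras are already geometrically aligned up to a per-axis scale; the `max_dt` tolerance, the `scale` factors, and the function name are hypothetical, and the ByteTrack training itself is not shown.

```python
from bisect import bisect_left


def transfer_pseudo_labels(rgb_tracks, rgb_times, tir_times, max_dt=0.02, scale=(1.0, 1.0)):
    """Attach RGB-tracker outputs to the nearest-in-time TIR frames as pseudo-labels.

    rgb_tracks: per RGB frame, a list of (track_id, x1, y1, x2, y2) boxes.
    rgb_times, tir_times: ascending timestamps in seconds.
    max_dt: hypothetical tolerance; TIR frames with no RGB frame this close
            are skipped rather than being labelled "no person".
    scale:  hypothetical (sx, sy) factors mapping RGB pixels to TIR pixels.
    Returns {tir_frame_index: [boxes]}.
    """
    sx, sy = scale
    labels = {}
    for i, t in enumerate(tir_times):
        k = bisect_left(rgb_times, t)
        # The nearest RGB timestamp is at the insertion point or just before it.
        candidates = [c for c in (k - 1, k) if 0 <= c < len(rgb_times)]
        if not candidates:
            continue
        best = min(candidates, key=lambda c: abs(rgb_times[c] - t))
        if abs(rgb_times[best] - t) > max_dt:
            continue
        labels[i] = [(tid, x1 * sx, y1 * sy, x2 * sx, y2 * sy)
                     for tid, x1, y1, x2, y2 in rgb_tracks[best]]
    return labels
```

Skipping poorly synchronized frames, instead of labelling them as empty, avoids teaching the TIR tracker that a visible person is background.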

4.3 Information Fusion

To leverage complementary information and improve robustness in image retrieval, this study integrates the baseline static landmark-based LCD (Section 4.1) and the proposed dynamic landmark-based LCD (Section 4.2). This integration aims to combine the strengths of both methods and improve retrieval accuracy.

To fuse the two retrieval results, we adopt Reciprocal Rank Fusion (RRF) (Cormack et al., 2009), a widely used technique for combining rankings from heterogeneous sources. RRF balances the contributions of each method's top results, improving overall performance.

The fusion process proceeds as follows.

4.3.1 Ranking Generation for Each Method

SLCD and DLCD produce separate lists of database images, ranked in descending order of matching score for a given query.

4.3.2 Reciprocal Rank Computation

For each database image, the reciprocal rank for its rank $r$ in each method (with $r = 1$ for the top-ranked image) is calculated as

$$\mathrm{RRF} = \frac{1}{k + r},$$

where $k$ is a constant parameter. Based on preliminary experiments, we set $k = 0$ in this study. Ranks closer to the top (smaller $r$) yield higher reciprocal rank values, giving more weight to more relevant images.

4.3.3 Summation of Reciprocal Ranks and Final Score

For each database image, the reciprocal ranks from SLCD and DLCD are summed to obtain the final fusion score:

$$\text{Fusion Score} = \mathrm{RRF}_{\mathrm{SLCD}} + \mathrm{RRF}_{\mathrm{DLCD}}.$$

Sorting the database images by these fusion scores produces the final retrieval ranking.

This reciprocal rank fusion ensures that images ranked highly by either method are properly emphasized, thus enhancing overall retrieval performance.
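The three fusion steps fit in a short Python function. This is a sketch of standard RRF with the paper's choice of $k = 0$; the function name and the handling of images missing from a list are illustrative assumptions.

```python
def reciprocal_rank_fusion(rankings, k=0):
    """Fuse several ranked lists of database IDs with Reciprocal Rank Fusion.

    rankings: iterable of lists, each ordered from best to worst (rank 1 first).
    k: the RRF constant; k = 0 (as in the paper) is safe because r >= 1.
    Returns (db_id, fused_score) pairs sorted by descending score.
    An ID absent from a list simply receives no contribution from that list.
    """
    scores = {}
    for ranking in rankings:
        for r, db_id in enumerate(ranking, start=1):
            scores[db_id] = scores.get(db_id, 0.0) + 1.0 / (k + r)
    # sorted() is stable, so ties keep the order in which IDs were first seen.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


slcd_ranking = ["a", "b", "c"]
dlcd_ranking = ["c", "a", "b"]
print(reciprocal_rank_fusion([slcd_ranking, dlcd_ranking]))
```

With $k = 0$ the top position dominates: an image ranked first by one method and third by the other scores $1 + 1/3 \approx 1.33$, more than an image ranked second by both ($1/2 + 1/2 = 1$). Larger values of $k$, such as the commonly used $k = 60$, flatten this difference.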

5 EXPERIMENTS

The primary objective of this experiment is to evaluate the loop closing performance of a mobile robot operating in low-light indoor environments. Specifically, we assess the effectiveness of the proposed method on thermal images captured by a thermal camera in the presence of dynamic elements such as humans.

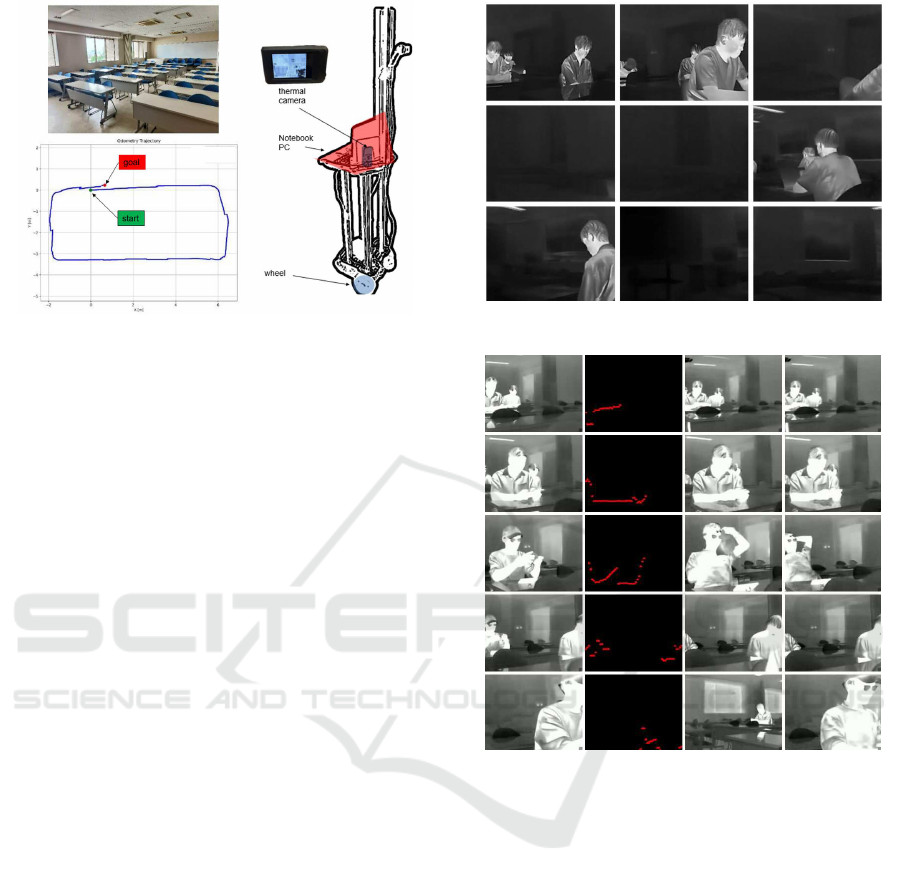

Figure 4: Experimental environment, robot, and robot trajectory.

5.1 Setup

The experimental platform is a tricycle-drive mobile robot equipped with an onboard computer. A monocular thermal camera (HIKMICRO Pocket2) is mounted on the front of the robot. This camera has a resolution of 256×192 pixels, and thermal images are recorded continuously during motion. The data are collected in video mode, with an empirically measured average frame rate of approximately 25 Hz. Each frame is timestamped and synchronized with odometry data (position: x, y; orientation: θ) estimated from the robot's encoders. These data are used to construct the ground-truth dataset for loop closure evaluation. The temperature range of the thermal camera is configured to 20-30 °C to enhance the contrast between human bodies and the background. As a result, human subjects (with body temperatures of approximately 36 °C) appear as high-intensity regions, enabling clear identification as dynamic landmarks.
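To see why people stand out, assume the camera renders the 20-30 °C window with a clipped linear ramp onto 8-bit intensities; the real HIKMICRO palette and processing may differ, so the function below is only an illustration. Under this assumption anything at or above 30 °C, including a person at about 36 °C, saturates at the maximum value, which also means any other object warmer than 30 °C would appear equally bright.

```python
def temperature_to_intensity(temp_c, t_min=20.0, t_max=30.0):
    """Map a temperature (°C) to an 8-bit value with a clipped linear ramp.

    The 20-30 °C window follows Section 5.1; the linear, clipped rendering
    is an assumption about the camera, not documented behaviour.
    """
    frac = (temp_c - t_min) / (t_max - t_min)
    frac = min(max(frac, 0.0), 1.0)  # saturate outside the display window
    return round(255 * frac)


for t in (18.0, 22.0, 25.0, 36.0):
    print(t, temperature_to_intensity(t))
```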

Experiments were conducted in an indoor environment measuring approximately 7.0 m by 3.0 m (Fig. 4). Six rectangular tables were arranged throughout the space, with four individuals randomly seated around each table. This layout was designed to reproduce conditions in which humans appear as prominent high-temperature regions in thermal imagery. The robot autonomously navigated a clockwise path around the room while continuously recording thermal images, in order to evaluate loop closing performance in this dynamic environment. Figure 5 shows thermal images captured by the robot's onboard camera.

The collected dataset (1996 frames) includes seated individuals who appear as dynamic high-intensity regions. Odometry and timestamps were recorded alongside each frame to serve as input for loop closure evaluation.

Loop closing performance was evaluated using the

number of inliers from homography estimation as the

key metric. Specifically, the m ean reciprocal rank

(MRR) sco re was calcu late d based on the ranking

Figure 5: Input images.

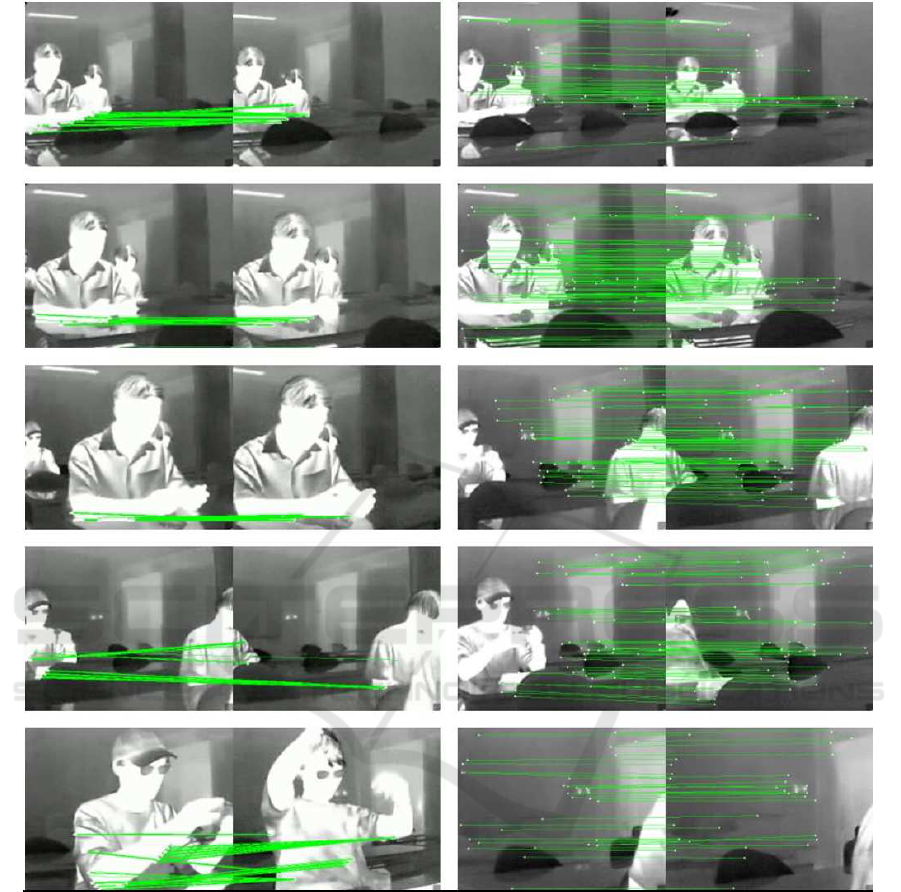

Figure 6: Image matching process. For each row, fr om left

to right, the images represent the following: Input Image,

Feature Map, Top-1 Ranked Reference Image, and Ground-

Tr uth Reference Image.

of ground-truth loop-closure pairs using RANSAC-

based inlier counts. In addition, the average process-

ing time per qu e ry frame was measured to assess com-

putational efficiency.

All thermal images were synchronized with the odometry data via linear interpolation based on acquisition timestamps. High-intensity regions in the lower part of each image were extracted via thresholding to obtain bottom-edge feature points, which serve as dynamic landmarks. Ground-truth loop closures were determined by identifying database frames within 0.2 m of the query frame's odometric position.
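The synchronization and ground-truth rule can be sketched with NumPy. The sketch interpolates only the position (x, y), since the ground-truth test uses position alone and interpolating the heading θ would require handling angle wrap-around. Whether temporally adjacent frames are excluded from the candidate set is not stated in the paper, so this sketch does not exclude them; the function names are hypothetical.

```python
import numpy as np


def interpolate_odometry(frame_times, odom_times, odom_xy):
    """Linearly interpolate odometry position (x, y) at each thermal-frame timestamp."""
    odom_xy = np.asarray(odom_xy, dtype=float)
    x = np.interp(frame_times, odom_times, odom_xy[:, 0])
    y = np.interp(frame_times, odom_times, odom_xy[:, 1])
    return np.stack([x, y], axis=1)


def ground_truth_matches(query_xy, db_xy, radius=0.2):
    """Indices of database frames within `radius` metres of the query position."""
    d = np.linalg.norm(np.asarray(db_xy, dtype=float) - np.asarray(query_xy, dtype=float), axis=1)
    return set(np.flatnonzero(d <= radius).tolist())
```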

Figure 6 shows the image matching process in the

proposed method, DLCD.


Table 1: Performance results (MRR; higher is better).

| Method | MRR |
|---|---|
| DLCD | 0.222 |
| SLCD | 0.124 |
| DLCD+SLCD | 0.224 |

5.2 Baseline and Proposed Methods

The evaluated methods detect loop closures between thermal images using feature point matching followed by homography estimation via RANSAC. Image features were precomputed and loaded as sets of 2D coordinates. Homographies were estimated using the Direct Linear Transform (DLT) algorithm applied to homogeneous coordinates, with RANSAC employed for outlier rejection. In each RANSAC iteration, four randomly selected correspondences were used to compute a homography hypothesis. Inliers were determined as correspondences with projection errors below a 5.0-pixel threshold. The model with the maximum number of inliers over 100 trials was selected as the final result. If fewer than four correspondences were available, homography estimation was skipped.

We compare the conventional SLCD, the proposed

DLCD, and their fusion as described in Section 4.3.

Each method is evaluated to assess its performance

and robustness in a dynamic environment.

5.3 Results

The experimental results report loop closing accuracy as the mean reciprocal rank (MRR) of each method, summarized in Table 1. The average processing time per query frame, including homography estimation and inlier computation, was also measured as described in Section 5.1. These results characterize the loop closing performance of the proposed approach in a dynamic environment. Figure 7 shows matching results for both the SLCD and DLCD methods.

As Table 1 shows, the proposed DLCD method (MRR 0.222) clearly outperforms the baseline SLCD method (MRR 0.124), an MRR roughly 1.8 times higher. In addition, the fusion DLCD+SLCD (MRR 0.224) performs slightly better than DLCD alone, suggesting that fusing the two methods is beneficial, although the margin is small.

6 CONCLUSIONS & FUTURE WORK

This study introduced a novel loop closure method for Visual SLAM that leverages human-object interactions (HOIs) as dynamic landmarks in challenging environments. Unlike conventional approaches that rely on static features or are limited by illumination conditions, our method exploits the salient appearance of humans in thermal imagery to detect HOIs, treating the static objects involved in these interactions as reliable cues for loop closure. The proposed pipeline comprises a thermal-domain-specific human tracker, HOI feature extraction from detected human regions, and RANSAC-based matching for robust loop closure. Our approach aims to enhance the stability of loop closure, particularly in scenarios with limited static features or significant environmental dynamics, where traditional methods often fail.

Building upon the insights and methodology presented in this study, future research will explore several promising directions:

• Quantitative Evaluation in Diverse Real-World Scenarios: While the theoretical framework for utilizing HOIs has been established, comprehensive quantitative evaluation in a wider array of real-world environments is crucial. This includes datasets with varying degrees of human activity, diverse object categories, and more complex environmental changes (e.g., severe occlusions, extreme temperature variations).

• Integration with Existing SLAM Frameworks: Our current work focuses on the loop closure module. Integrating this HOI-based loop closure method into a full-fledged Visual SLAM system (e.g., ORB-SLAM, LSD-SLAM) and evaluating its end-to-end performance would be a significant next step. This would involve assessing the impact on global map consistency, localization accuracy, and real-time performance.

• Robustness to Ambiguous HOI Detections: The accuracy of HOI detection is paramount for the effectiveness of our method. Future work will investigate techniques to improve the robustness of HOI detection, especially in cases of partial occlusions, unusual human poses, or ambiguous interactions. This could involve exploring more advanced deep learning architectures or incorporating temporal reasoning.

• Scalability for Large-Scale Environments: For applications in large-scale environments, managing and matching a potentially vast number of HOI features could become computationally intensive. Research into efficient data structures, indexing methods, and feature aggregation techniques will be necessary to ensure scalability.

• Extension to Other Dynamic Elements: While this study focuses on humans, the concept of leveraging dynamic entities interacting with static objects could be extended to other categories, such as vehicles or other mobile robots. Investigating the applicability and benefits of such extensions would broaden the scope of this approach.

• Fusion with Complementary Sensors: Combining thermal imagery with data from other sensor modalities (e.g., event cameras for high dynamic range, LiDAR for precise 3D geometry) could further enhance the robustness and accuracy of HOI-based loop closure, especially in highly challenging conditions.

Figure 7: Image matching results. Left: DLCD. Right: SLCD.

ICINCO 2025 - 22nd International Conference on Informatics in Control, Automation and Robotics

7 LIMITATIONS

While the proposed method demonstrates promising results, it is important to acknowledge several limitations:

• Robustness of HOI Detection: The accuracy of human-object interaction (HOI) detection directly impacts the performance of our method. Detecting HOIs can be challenging under complex poses, partial occlusions, or extreme lighting conditions. Although human features are salient in thermal images, these challenges still exist.

• Nature of Dynamic Landmarks: Due to the dynamic nature of humans, the reliability of loop closure might be affected if HOIs are transient. While our focus is on interactions with static objects, the duration and stability of the interaction itself can influence the overall robustness of the system.

• Sparsity of Features: While our method is effective in environments with few static features, there might be situations where HOIs themselves are very rare, leading to an insufficient number of landmarks. This becomes particularly evident in environments with minimal human presence or infrequent interactions with specific objects.

• Computational Cost: Detecting and tracking HOIs, and subsequently performing feature matching based on them, can be computationally more intensive than traditional static feature-point-based methods. Efficient algorithms and optimization will be crucial to maintain real-time performance.

• Dataset Diversity: Current evaluations may depend on specific datasets. A comprehensive quantitative evaluation across a wider range of real-world scenarios, especially those involving diverse human activities, object categories, and complex environmental changes (e.g., severe occlusions, extreme temperature variations), is necessary.

REFERENCES

Adlakha, D. et al. (2020). DeepTIO: A deep thermal-inertial odometry with visual hallucination. arXiv.

Ali, I., Peltonen, S., and Gotchev, A. (2022). Bi-directional loop closure for visual SLAM. arXiv.

Antoun, M. and Asmar, D. (2023). Human object interaction detection: Design and survey. Image and Vision Computing, 130:104617.

Arandjelovic, R., Gronat, P., Torii, A., Pajdla, T., and Sivic, J. (2018). NetVLAD: CNN architecture for weakly supervised place recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(4):1168–1181.

Chen, Z., Song, Z., Pang, Z.-J., Liu, Y., Chen, Z., Han, X.-F., Zuo, Y.-W., and Shen, S.-J. (2024). GLC-SLAM: Gaussian splatting SLAM with efficient loop closure.

Chum, O., Matas, J., and Kittler, J. (2003). Locally optimized RANSAC. In Michaelis, B. and Krell, G., editors, Pattern Recognition, pages 236–243, Berlin, Heidelberg. Springer Berlin Heidelberg.

Cormack, G. V., Clarke, C. L. A., and Buettcher, S. (2009). Reciprocal rank fusion. In Proceedings of the 32nd International ACM SIGIR Conference on Research and Development in Information Retrieval, pages 528–529. ACM.

Cummins, M. and Newman, P. (2008). FAB-MAP: Probabilistic localization and mapping in the space of appearance. The International Journal of Robotics Research, 27(6):647–665.

Cummins, M. and Newman, P. (2010). Appearance-only SLAM at large scale with FAB-MAP 2.0. The International Journal of Robotics Research, 29(8):943–959.

DeTone, D., Malisiewicz, T., and Rabinovich, A. (2018). SuperPoint: Self-supervised interest point detection and description. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pages 337–33712.

Engel, J., Schöps, T., and Cremers, D. (2014). LSD-SLAM: Large-scale direct monocular SLAM. In European Conference on Computer Vision, pages 834–849. Springer.

Klein, G. and Murray, D. (2007). Parallel tracking and mapping for small AR workspaces. In Proceedings of ISMAR 2007. IEEE.

Lahiany, A. and Gal, O. (2025). AutoLoop: Fast visual SLAM fine-tuning through agentic curriculum learning.

Li, S., Ma, X., He, R., Shen, Y., Guan, H., Liu, H., and Li, F. (2025). WTI-SLAM: A novel thermal infrared visual SLAM algorithm for weak texture thermal infrared images. The Journal of Engineering.

Liang, J. T. Y. and Tanaka, K. (2024). Robot traversability prediction: Towards third-person-view extension of Walk2Map with photometric and physical constraints. In IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS 2024, pages 11602–11609. IEEE.

Lim, T. Y., Sun, B., Pollefeys, M., and Blum, H. (2025). 2go: Loop closure from two views.

Liso, L., Sandstrom, E., Yugay, V., Gool, L. V., and Oswald, M. R. (2024). Loopy-SLAM: Dense neural SLAM with loop closures.

Lowe, D. G. (2004). Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis., 60(2):91–110.

Montemerlo, M., Thrun, S., Koller, D., Wegbreit, B., et al. (2002). FastSLAM: A factored solution to the simultaneous localization and mapping problem. In AAAI/IAAI, pages 593–598.

Mur-Artal, R., Montiel, J. M. M., and Tardos, J. D. (2015a). ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Transactions on Robotics, 31(5):1147–1163.

Mur-Artal, R., Montiel, J. M. M., and Tardos, J. D. (2015b). ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Transactions on Robotics, 31(5):1147–1163.

Qu, H., Tang, X., Liu, C.-T., Chen, X.-Y., Li, Y.-Z., Zhang, W.-K., Zhang, H.-T., and Li, J.-H. (2024). DK-SLAM: Monocular visual SLAM with deep keypoint learning, tracking and loop-closing.

Rublee, E., Rabaud, V., Konolige, K., and Bradski, G. (2011). ORB: An efficient alternative to SIFT or SURF. In 2011 International Conference on Computer Vision, pages 2564–2571.

Saputra, M. R. U., Lu, C. X., de Gusmao, P. P., Wang, B., Markham, A., and Trigoni, N. (2022). Graph-based thermal–inertial SLAM with probabilistic neural networks. IEEE Transactions on Robotics, 38(3):1875–1893.

Shin, Y.-S. and Kim, A. (2019). Sparse depth enhanced direct thermal-infrared SLAM beyond the visible spectrum.

Tanaka, K. (2002). Detecting collision-free paths by observing walking people. In IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 55–60. IEEE.

Teixeira, T., Dublon, G., and Savvides, A. (2010). A survey of human-sensing: Methods for detecting presence, count, location, track, and identity. ACM Computing Surveys, 5(1):59–69.

van de Molengraft, S., Ferranti, L., and Dubbelman, G. (2023). FirebotSLAM: Thermal SLAM to increase situational awareness in smoke-filled environments. Sensors, 23(17):7611.

Wang, N., Chen, X., Shi, C., Zheng, Z., Yu, H., and Lu, H. (2024). SGLC: Semantic graph-guided coarse-fine-refine full loop closing for LiDAR SLAM.

Xu, Y., Hao, Q., Zhang, L., Mao, J., He, X., Wu, W., and Chen, C. (2025). SLAM in the dark: Self-supervised learning of pose, depth and loop-closure from thermal images.

Zhang, Y., Sun, P., Jiang, Y., Yu, D., Weng, F., Yuan, Z., Luo, P., Liu, W., and Wang, X. (2022). ByteTrack: Multi-object tracking by associating every detection box. In European Conference on Computer Vision, pages 1–21. Springer.
