Guiding Improvement in Data Science: An Analysis of Maturity Models

Christian Haertel (a), Tom Engelmann, Abdulrahman Nahhas (b), Christian Daase (c) and Klaus Turowski (d)

Magdeburg Research and Competence Cluster VLBA, Otto-von-Guericke-University, Magdeburg, Germany

Keywords:

Maturity Models, Data Science, Data Science Lifecycle, Literature Review.

Abstract:

Maturity models (MMs) can help organizations to evaluate and improve the value of emerging capabilities and technologies by assessing strengths and weaknesses. Since the field of Data Science (DS), with its rising importance, struggles with successful project completion because of diverse technical and managerial challenges, it could benefit from the application of MMs. Accordingly, this paper reports on a structured literature review to identify and analyze MMs in DS and related fields. In particular, 18 MMs were retrieved, and their contribution toward the individual stages of the DS lifecycle and common DS challenges was assessed. Based on the outlined gaps, the development of a meta-maturity model for DS can be pursued in the future.

1 INTRODUCTION

The growth in the amount of generated data has been accelerated by the COVID-19 pandemic and is projected to continue (Business Wire, 2021). Data Science (DS) aims to improve organizational performance (Chen et al., 2012) by (semi-)automatically generating insights from complex data (Schulz et al., 2020). However, reports indicate that over 80% of DS projects fail (VentureBeat, 2019) and do not yield any business value (Gartner, 2019). Typical challenges in DS include low process maturity, a lack of team coordination, and the absence of reproducibility (Martinez et al., 2021). Therefore, new approaches for handling the managerial and technical challenges of DS projects are needed (Saltz and Krasteva, 2022).

Maturity models (MMs) can be seen as support tools to evaluate and improve an organization’s practices in a specific field (Pereira and Serrano, 2020). Furthermore, they assist enterprises in assessing and realizing the value of emerging capabilities and technologies (Hüner et al., 2009). Thus, enterprises

are enabled to grade their existing strengths and

weaknesses and consequently guide improvement

endeavors. DS is a discipline that can benefit from

(a) https://orcid.org/0009-0001-4904-5643

(b) https://orcid.org/0000-0002-1019-3569

(c) https://orcid.org/0000-0003-4662-7055

(d) https://orcid.org/0000-0002-4388-8914

the employment of MMs because of the high project

failure rates and the potential increases in return

on investments (Gökalp et al., 2021). To assess the

contribution of contemporary DS MMs toward this

objective, this paper reports on a systematic literature

review (SLR) that identifies and analyzes existing

MMs in DS in two dimensions. First, the MMs are

evaluated regarding their consideration of the typical

stages in a DS undertaking, which is captured by the

first research question (RQ):

RQ 1: Which stages of the Data Science lifecycle are

addressed by the maturity models in the literature?

According to Haertel et al. (2022), a DS project

can be commonly subdivided into six abstract phases.

To fully assess an organization’s maturity in this

discipline, all activities within the DS lifecycle

should be taken into account. Secondly, throughout

the individual stages in a DS undertaking, various

challenges related to project, team, and data and

information management are encountered (Martinez

et al., 2021). Therefore, mitigating the obstacles in

DS project execution is required to progress toward

reducing the number of unsuccessful endeavors.

Accordingly, for the second RQ, the coverage of DS

challenges by the MMs is investigated:

RQ 2: Which common challenges of Data Science

projects are assessed by the maturity models?


Haertel, C., Engelmann, T., Nahhas, A., Daase, C. and Turowski, K.

Guiding Improvement in Data Science: An Analysis of Maturity Models.

DOI: 10.5220/0013739300004000

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2025) - Volume 2: KEOD and KMIS, pages 450-460

ISBN: 978-989-758-769-6; ISSN: 2184-3228

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

As a result, this work offers valuable contributions

for theory and practice. An overview of existing MMs

in the academic literature is provided. For practition-

ers, the discussion of the RQs can facilitate the se-

lection of suitable MMs. Moreover, the analysis un-

veils potential gaps and weaknesses in the MMs to

guide future research endeavors to progress the state

of MMs in DS.

The remainder of the article is structured as fol-

lows. First, a background discussion on DS and MMs

is given. Then, the SLR process is outlined, which

follows the PRISMA guidelines (Page et al., 2021).

Subsequently, an overview and descriptive analysis

of the identified MMs are provided. In the fifth sec-

tion, the two RQs are discussed. Finally, the study is

concluded with a synthesis of the findings and recom-

mendations for future research.

2 THEORETICAL BACKGROUND

The theoretical background discusses the central con-

cepts around DS and MMs to establish the necessary

fundamentals for the topic. DS is characterized as the “methodology for the synthesis of useful knowledge directly from data through a process of discovery or of hypothesis formulation and hypothesis testing” (Chang and Grady, 2019). Extracting value from data

often involves advanced analytical methods, such as

Machine Learning (ML) (Rahlmeier and Hopf, 2024).

ML describes algorithms that can learn from data

to detect complex patterns without explicit program-

ming (Janiesch et al., 2021; Bishop, 2006). Since DS

projects usually involve datasets that can be classified as Big Data (BD), this connection led to the term “Big Data Science” (Chang and Grady, 2019). Furthermore, Data Mining (DM) is closely linked to DS and emerged as a specialization of Data Analytics in the 1990s. Briefly, it is defined as a set of algorithms for “extracting patterns from data” (Chang and Grady, 2019). The tight liaison between these fields is underscored through the uniform application of methodical approaches (e.g., process models).

Based on the work of Haertel et al. (2022), a

DS undertaking is typically structured in six phases,

namely 1) Business Understanding, 2) Data Collec-

tion, Exploration and Preparation, 3) Analysis, 4)

Evaluation, 5) Deployment, and 6) Utilization. Busi-

ness Understanding marks the starting point in the

DS lifecycle and includes activities such as the defini-

tion of business and associated DS objectives, as well

as the establishment of a project plan (Haertel et al.,

2022). The following stage involves data-related tasks

like the identification of data sources, data collection,

exploration, and preparation. Analysis contains ac-

tivities such as model selection, development, and as-

sessment. During the Evaluation phase, the analytical

models are reviewed concerning the business objec-

tives. In case of a decision to progress the project with

the Deployment, the commissioning of the models is

prepared and executed. Finally, Utilization includes

the use of the models in production and monitoring

and maintenance tasks (Haertel et al., 2022). The execution of a DS project is a complex socio-technical endeavor (Sharma et al., 2014; Thiess and Müller, 2018) that is subject to various technical and managerial challenges. The literature highlights aspects such as a low level of process maturity, unclear objectives, a lack of team communication and collaboration, and the difficulty of developing and deploying models (Martinez et al., 2021). Addressing these typical obstacles is needed to improve DS project success rates (Haertel et al., 2023; Martinez et al., 2021).

On this note, MMs might be helpful support tools for organizations to evaluate and improve their practices in a particular field (Pereira and Serrano, 2020). The assessment of strengths and weaknesses can consequently guide improvement endeavors, including those in DS. The MM concept arose in the early 1970s, and since then, a variety of MMs have been developed for numerous disciplines (Carvalho et al., 2019). A notable example is the Capability Maturity Model (CMM), which was built to evaluate the maturity of software processes (Paulk et al., 1993). In general, an MM features a set of maturity levels for “a class of objects” (Becker et al., 2009) (e.g., dimensions, capabilities). MMs define criteria and characteristics that must be met to achieve a certain maturity level (Becker et al., 2009). After the assessment of the maturity level in a given field, measures for improvement can be derived to increase the degree of maturity (IT Governance Institute, 2007). In DS, an exemplary MM is the Data science capability maturity model (DSCMM), which was constructed based on the MM development method of Becker et al. (2009) to guide improvement in DS, including technical, managerial, and organizational aspects (Gökalp et al., 2022b). In this work, we aim to obtain a comprehensive view of existing MMs for DS and analyze their impact on the DS lifecycle (RQ 1) and typical DS challenges (RQ 2).

3 METHODOLOGY

The guidelines of PRISMA (Page et al., 2021) were

followed to ensure a standardized and peer-accepted


process for conducting the SLR. Sufficient source

coverage is established through the selection of five

renowned scientific databases, namely AIS eLibrary

(AISeL), Scopus, IEEE Xplore (IEEE), ScienceDirect

(SD), and ACM Digital Library (ACM DL). To

guarantee consistency, a unified search term was

applied across all databases to identify MMs in DS.

Accordingly, the search query is composed of DS and

similar fields as well as terms related to MM:

("maturity model" OR "capability model" OR "maturity level") AND ("data mining" OR "data science" OR "machine learning" OR "big data")
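As a minimal sketch, assuming Python, the unified search string can be assembled from its two term groups so that the identical query is reused for every database. The function names are illustrative and not part of the original study, and the database-specific search spaces are not modelled here.

```python
# Term groups taken from the search string reported above.
MATURITY_TERMS = ["maturity model", "capability model", "maturity level"]
FIELD_TERMS = ["data mining", "data science", "machine learning", "big data"]


def or_group(terms):
    """Quote each term, join the terms with OR, and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups):
    """Combine several OR-groups with AND (hypothetical helper)."""
    return " AND ".join(or_group(group) for group in groups)


query = build_query(MATURITY_TERMS, FIELD_TERMS)
print(query)
```

Keeping the term groups as separate lists makes it straightforward to document later changes to either group without editing the query by hand.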

In total, the initial database search retrieved 870

articles that were published in 2019 and later. For

transparency, the distribution of results per database

is given in Table 1. Before the initial content screen-

ing, 108 duplicates were removed, leaving 762 publi-

cations.

Table 1: Initial search results as of 25th March 2025.

| Database | Search Space | Results |
|---|---|---|
| Scopus | Title, abstract, keywords | 384 |
| SD | Title, abstract, keywords | 76 |
| IEEE | All metadata | 202 |
| ACM DL | Title, abstract | 4 |
| AISeL | All fields | 204 |

The three filtering stages of title assessment, ab-

stract screening, and full-text examination, prescribed

by Brocke et al. (2009), were applied to identify the

relevant contributions for the research endeavor. For

this purpose, suitable inclusion and exclusion criteria

need to be defined. The criteria for this SLR are pre-

sented in Table 2 and discussed in the following. Pub-

lications should comprise at least six pages to ensure

sufficient depth in the discourse of an MM. Literature

reviews on MMs are not considered if they do not re-

sult in the construction of a new MM. Only MMs with

the typical structure of dimensions and maturity lev-

els (Becker et al., 2009) are considered. Addition-

ally, because of this article’s focus on DS projects

in general, MMs that are only applicable to a spe-

cific domain are excluded. If an MM has multiple

versions (e.g., revisions) across different publications,

only the most recent implementation is included. Due

to the COVID-19 pandemic’s role in accelerating dig-

ital transformation at an unprecedented pace (Shah,

2022) and the significant impact on DS, only articles

from 2019 onward are considered. Further inclusion criteria concern the language (English or German) and the article type (journal article or conference paper). An article advances to the next filtering stage only if all inclusion criteria are fulfilled. Conversely, a match with any one of the exclusion criteria leads to the dismissal of a reviewed item.

Table 2: Inclusion and exclusion criteria.

| Inclusion criteria | Exclusion criteria |
|---|---|
| The publication discusses an MM in DS or a related field. | The publication has fewer than six pages. |
| The publication is written in English or German. | The MM does not prescribe dimensions and maturity levels. |
| The publication was published in 2019 or later. | The publication is solely a literature review on MMs. |
| The publication is a journal article or conference paper. | The MM is domain-specific and irrelevant to DS projects in general. |
| | The MM has a revised or updated version. |
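The decision rule behind Table 2 (all inclusion criteria must hold, and no exclusion criterion may match) can be sketched as follows. This is an illustration only: the `Record` class and its field names are hypothetical, and in the review itself these judgments were made manually during title, abstract, and full-text screening.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical screening record; field names are illustrative only."""
    discusses_mm_in_ds: bool
    language: str                  # e.g. "English" or "German"
    year: int
    article_type: str              # e.g. "journal" or "conference"
    pages: int
    has_dimensions_and_levels: bool
    is_pure_literature_review: bool
    is_domain_specific: bool
    superseded_by_newer_version: bool


def passes_screening(r: Record) -> bool:
    # Every inclusion criterion must be fulfilled.
    include = (
        r.discusses_mm_in_ds
        and r.language in {"English", "German"}
        and r.year >= 2019
        and r.article_type in {"journal", "conference"}
    )
    # A single matching exclusion criterion dismisses the record.
    exclude = (
        r.pages < 6
        or not r.has_dimensions_and_levels
        or r.is_pure_literature_review
        or r.is_domain_specific
        or r.superseded_by_newer_version
    )
    return include and not exclude


candidate = Record(True, "English", 2022, "journal", 12, True, False, False, False)
print(passes_screening(candidate))
```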

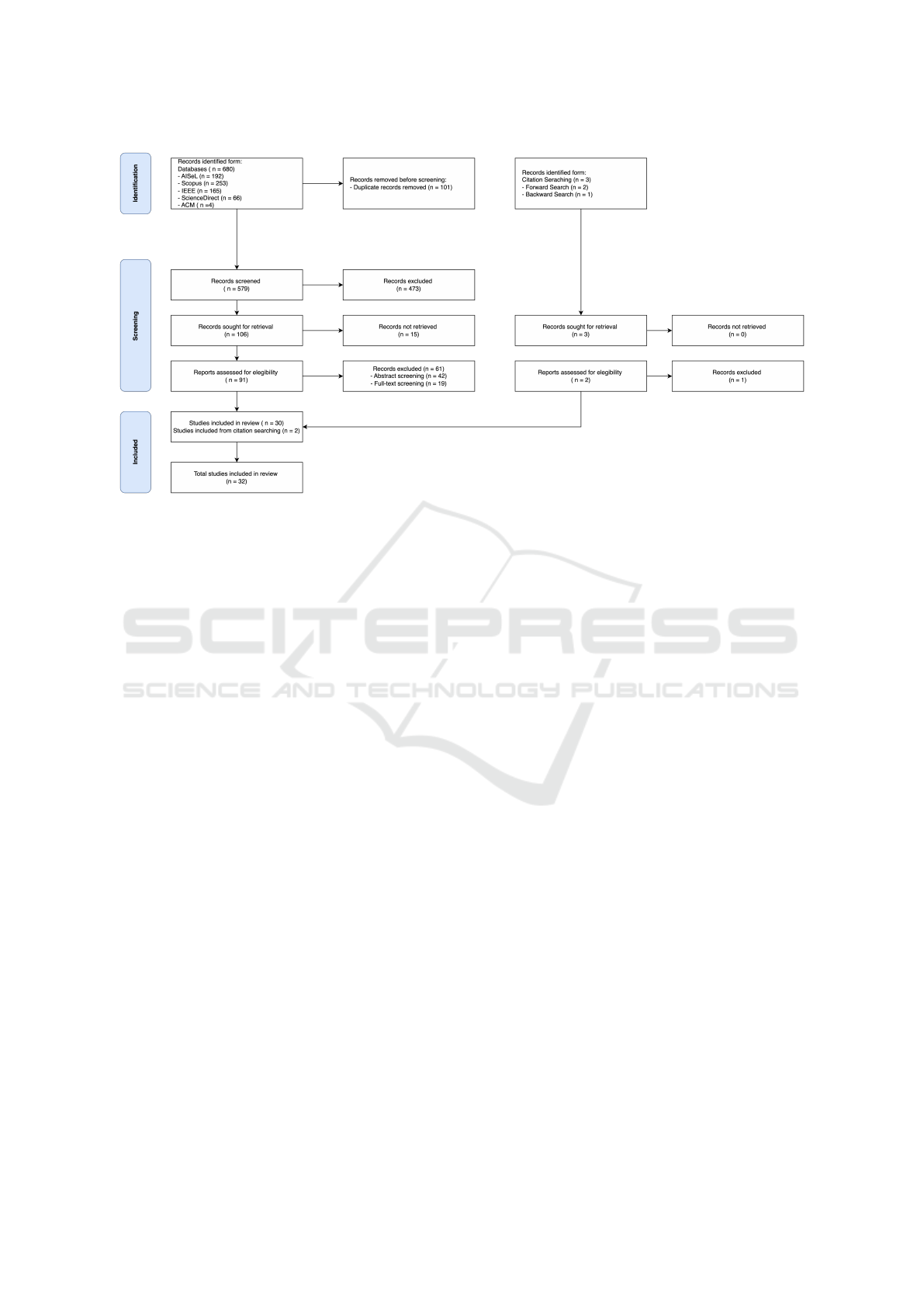

The 762 remaining records after duplicate re-

moval were then subject to the title screening, which

led to the elimination of 649 articles. This significant reduction is attributed to the use of rather generalized search keywords such as “maturity level”. Consequently, a large portion of the publications had a

deviating research context (e.g., articles focusing on

agricultural maturity). Afterward, the 97 papers with

full-text access were assessed for eligibility in two

stages. First, the abstract evaluation removed another

44 articles. Accordingly, 53 contributions were subject to the full-text examination, leaving 18 studies. Finally, a forward and backward search on these items

was performed to identify additional relevant stud-

ies and mitigate potential bias from the search string

(Webster and Watson, 2002). While six candidate pa-

pers were identified using the citation search, none

of them passed the filtering process. Therefore, the

search and filtering process resulted in a total of 18

publications. Figure 1 visually summarizes this en-

tire process, following the PRISMA guidelines (Page

et al., 2021).
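The reported counts can be checked with a short arithmetic sketch. All input figures come from Table 1 and the text above; the two quantities marked as derived (records without full-text access and records excluded at full-text examination) are computed here and are not stated explicitly in the text.

```python
# Results per database as reported in Table 1.
results_per_database = {
    "Scopus": 384,
    "SD": 76,
    "IEEE": 202,
    "ACM DL": 4,
    "AISeL": 204,
}

identified = sum(results_per_database.values())        # 870
after_deduplication = identified - 108                 # 762
after_title_screening = after_deduplication - 649      # 113
full_text_accessible = 97                              # as reported in the text
after_abstract_screening = full_text_accessible - 44   # 53
included = 18                                          # final set of MMs

# Derived values, not stated explicitly in the text.
without_full_text = after_title_screening - full_text_accessible    # 16
excluded_at_full_text = after_abstract_screening - included         # 35

print(identified, after_deduplication, after_title_screening,
      after_abstract_screening, included)
```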

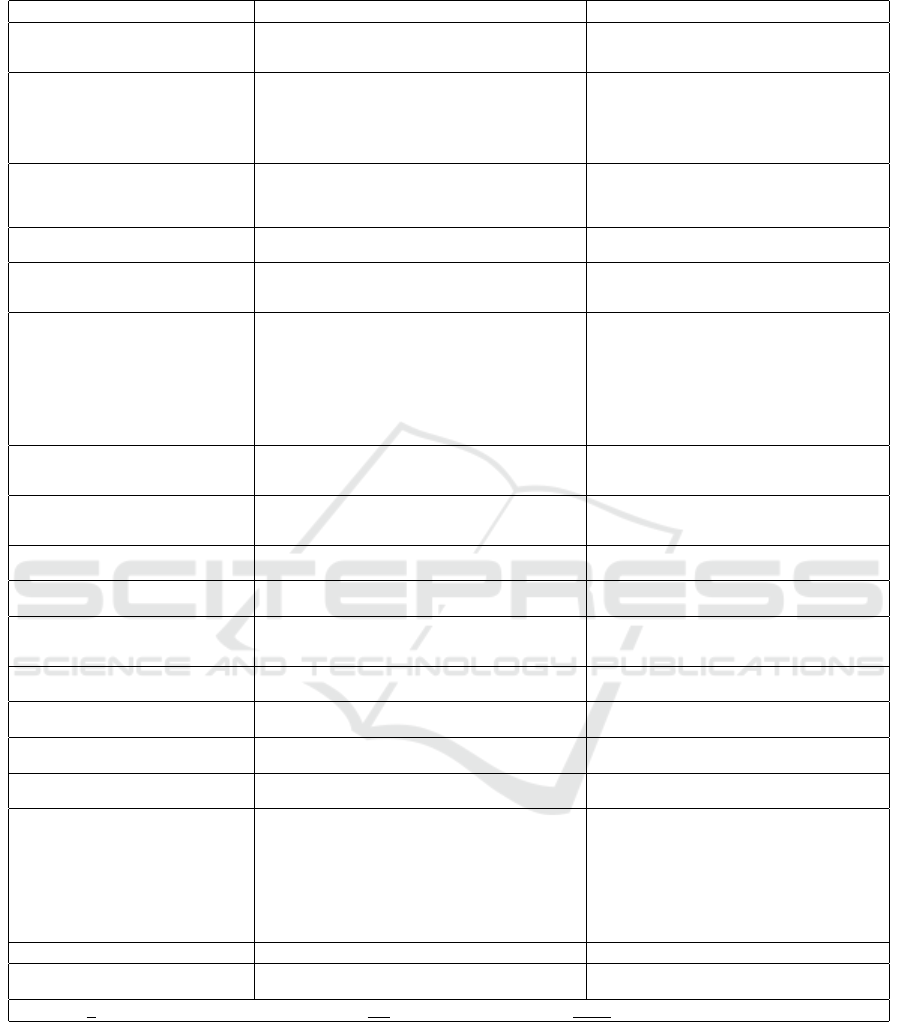

4 DESCRIPTIVE ANALYSIS

In this SLR, a primarily qualitative analysis is con-

ducted. Table 3 displays the concept matrix to support

this undertaking. It lists all identified MMs of this

SLR sorted by year of publication and author names,

KMIS 2025 - 17th International Conference on Knowledge Management and Information Systems


Figure 1: Literature search and filtering process.

assigns an ID to the respective publication, and de-

notes the focus of the MM. Additionally, it contains

the DS lifecycle stages as proposed by Haertel et al.

(2022) as units of analysis (RQ 1). Before focusing

on this aspect in the next chapter, the retrieved MMs

will be introduced.

4.1 Overview of Maturity Models in

Data Science

Out of the 18 reviewed MMs, only two are designed for DS in general, based on the authors’ statements. Cavique et al. (2024) propose a DS MM, which combines business experimentation and causality concepts, featuring four criteria. In this regard, the DSCMM of Gökalp et al. (2022b) is more elaborate, with five process areas and associated processes. The model is grounded in an ISO/IEC standard. The remaining MMs address related fields or subdisciplines of DS. Four MMs focus on (BD) Analytics, including the capability maturity model for advanced data analytics (ADA-CMM) that was developed via a Delphi study (Korsten et al., 2024). A maturity framework to guide analytics growth is proposed by Menukhin et al. (2019), constructed through action design research. The BD Analytics MM of Pour et al. (2023) is the result of an SLR to detect BD Analytics practices and their subsequent assessment through expert surveys. The process assessment model for BD Analytics of Gökalp et al. (2022a) has similar origins to the DSCMM. The key distinction is that its process dimensions are closely aligned with the DS lifecycle stages.

Two articles propose MMs for BD. Helal et al. (2023) present an MM for the effect of BD on processes based on COBIT 5. On the other hand, Corallo et al. (2023) provide an MM for evaluating the maturity level of BD management and Analytics in industrial companies. Five articles discuss MMs for ML or Artificial Intelligence (AI). While Yablonsky (2021) focuses on AI-driven platforms, Fukas (2022) proposes a general AI MM, also relying on the MM development model of Becker et al. (2009). Tackling the issues of defining business cases for AI, Akkiraju et al. (2020) suggest an MM for ML model lifecycle management that is built on the CMM. Schreckenberg and Moroff (2021) developed a maturity-based workflow to assess the capability of an organization to realize ML applications. In a recent article, Fornasiero et al. (2025) present an MM for AI and BD, mainly applicable to the process industry. Another MM related to ML aims to adopt, assess, and advance ML Operations (MLOps) and was developed based on case studies of multiple companies (John et al., 2025). The remaining MMs have a more niche focus. Following a design science approach, Desouza et al. (2021) propose an MM for cognitive computing systems, which is interpreted as an umbrella term for technologies like AI, BD Analytics, and ML. In contrast, Zitoun et al. (2021) have a narrower focus with their MM for data management. Similarly, Raj M et al. (2023) concentrate on the maturity of industrial data pipelines. Finally, Hijriani and Comuzzi (2024) cover a subdomain of DS, process mining, with their MM.


Table 3: Concept Matrix for DS MMs.

# Articles BU DA AN EV DM Util Focus

1 Menukhin et al. (2019) X X X X Analytics

2 Akkiraju et al. (2020) X X X X X X Machine Learning

3 Desouza et al. (2021) X X X X Cognitive Computing Systems

4 Schreckenberg and Moroff (2021) X X Machine Learning

5 Yablonsky (2021) X X X Artificial Intelligence

6 Zitoun et al. (2021) X X X X Data Management

7 Fukas (2022) X X X Artificial Intelligence

8 Gökalp et al. (2022a) X X X X X X Big Data Analytics

9 Gökalp et al. (2022b) X X X X X X Data Science

10 Corallo et al. (2023) X X Big Data

11 Helal et al. (2023) X X X X X X Big Data

12 Raj M et al. (2023) X X X Data Pipelines

13 Pour et al. (2023) X X X X X X Big Data Analytics

14 Cavique et al. (2024) X X X Data Science

15 Hijriani and Comuzzi (2024) X X Process Mining

16 Korsten et al. (2024) X X X X X Data Analytics

17 Fornasiero et al. (2025) X X AI & Big Data

18 John et al. (2025) X X X X MLOps

#: Article ID, BU: Business Understanding, DA: Data Collection, Exploration and Preparation, AN: Analysis, EV: Evaluation, DM: Deployment, Util: Utilization

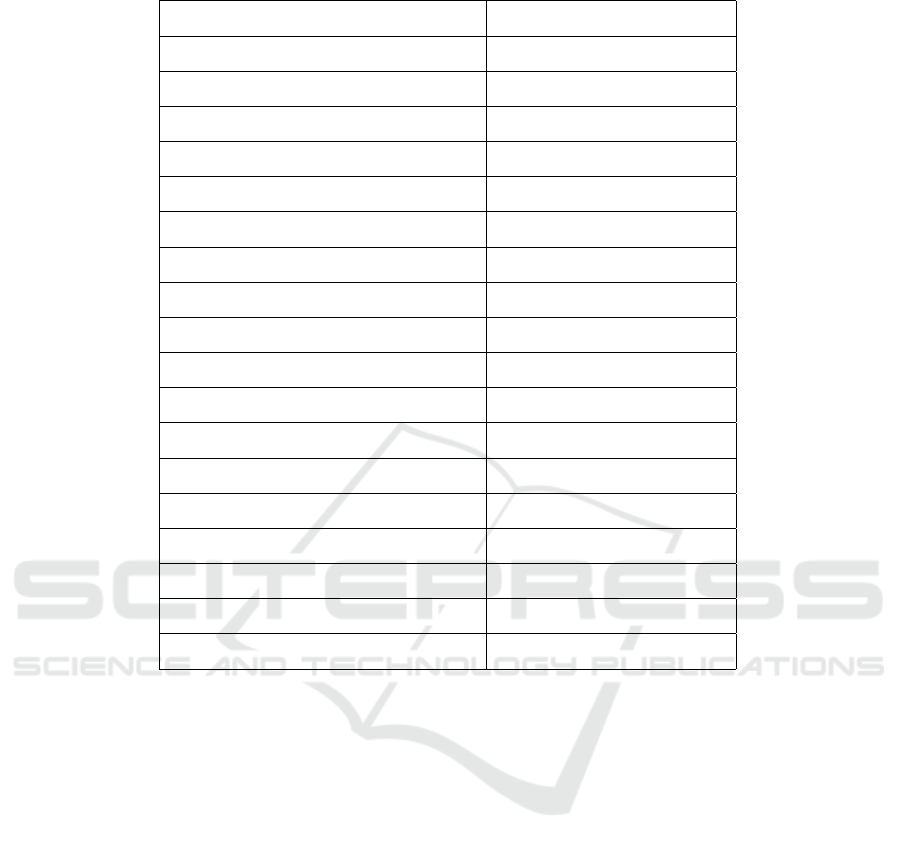

4.2 Dimensions and Maturity Levels

After an overview of the MMs and their context, their contents are analyzed. For this purpose, Table 4 displays the dimensions and maturity levels of each MM. The maturity levels constitute the desired or anticipated state for “a class of objects” (Becker et al., 2009). Hence, they can be used to evaluate the current state of maturity in an organization for a particular field by determining a maturity level for each dimension in an MM. According to Becker et al. (2009), the lowest level stands for the initial stage of an organization, while the highest level represents a conception of full maturity based on the model. On this basis, recommendations for improvement measures can be derived and prioritized to reach higher maturity levels (IT Governance Institute, 2007). The number of maturity levels in the reviewed MMs varies between four and six. The MMs also partly differ in the terminology used to describe these levels. Despite these differences, they all share a common framework: an initial level, which constitutes the starting point, and a highest level, which signifies an optimized state. For example, the MMs proposed by Gökalp et al. (2022a,b) are based on an ISO/IEC standard, which leads to uniform maturity levels in these frameworks. In contrast, the maturity levels of Menukhin et al. (2019) and Akkiraju et al. (2020) have been largely adopted from the CMM (Paulk et al., 1993), essentially consisting of five levels ranging from “Initial” to “Optimized”. Raj M et al. (2023), Pour et al. (2023), Cavique et al. (2024), and Fornasiero et al. (2025) use numeric levels to describe the degree of maturity. Notably, the process mining MM of Hijriani and Comuzzi (2024) prescribes unique maturity levels for each of the seven dimensions.
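To illustrate how a per-dimension assessment can guide improvement, the following sketch uses the level names and dimensions listed for the DSCMM of Gökalp et al. (2022b) in Table 4. The rated levels are invented for the example, and ranking dimensions by their distance to the highest level is an assumption made here, not a rule prescribed by any of the reviewed MMs.

```python
# Ordered maturity levels, lowest to highest (as listed in Table 4 for the DSCMM).
LEVELS = ["Incomplete", "Performed", "Managed", "Established", "Predictable", "Innovating"]

# Hypothetical assessment result: one rated level per dimension.
assessment = {
    "Organization": "Managed",
    "Strategy Management": "Performed",
    "Data Analytics": "Established",
    "Data Governance": "Performed",
    "Technology Management": "Managed",
}


def gaps_to_full_maturity(assessment, levels):
    """Number of levels each dimension is below the highest level."""
    top = len(levels) - 1
    return {dim: top - levels.index(level) for dim, level in assessment.items()}


def improvement_priorities(assessment, levels):
    """Dimensions ordered by largest gap first (assumed prioritization rule)."""
    gaps = gaps_to_full_maturity(assessment, levels)
    return sorted(gaps, key=lambda dim: (-gaps[dim], dim))


print(gaps_to_full_maturity(assessment, LEVELS))
print(improvement_priorities(assessment, LEVELS))
```

In practice, an organization would likely weight dimensions by strategic relevance rather than by gap size alone.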

Overall, the proposed dimensions differ across the publications due to the varying focus


Table 4: Dimensions and maturity levels.

| # | Articles | #D | Dimensions | #ML | Maturity Levels |
|---|---|---|---|---|---|
| 1 | Menukhin et al. (2019) | 6 | Organisation, IT&A Infrastructure, Analytics Processes, Skills, Governance, Data & Analytics Technologies | 5 | Initial, Committed, Focused, Managed, Optimised |
| 2 | Akkiraju et al. (2020) | 9 | AI Model Goal Setting, Data Pipeline Management, Feature Preparation Pipeline, Train Pipeline Management, Test Pipeline Management; Model Quality Performance and Model Management; Model Error Analysis, Model Fairness & Trust, Model Transparency | 5 | Initial, Repeatable, Defined, Managed, Optimizing |
| 3 | Desouza et al. (2021) | 2 | Technical Elements (Big Data, Computational Systems, Analytical Capacity), Organizational Elements (Innovation Climate, Governance and Ethical Frameworks, Strategic Visioning) | 5 | Ad-Hoc, Experimentation, Planning and Deployment, Scaling and Learning, Enterprise-wide transformation |
| 4 | Schreckenberg and Moroff (2021) | 5 | Process, Data, Technology, Orga. & Staff, Strategy | 4 | AI-new, AI-enabled, AI-experienced, AI-advanced |
| 5 | Yablonsky (2021) | 5 | AI Awareness, Adjustment of AI, Measurement of AI, AI Reporting & Interpretation, AI Decision-making | 5 | Human Led; Human Led, Machine Supported; Machine Led, Human Supported; Machine Led, Human Governed; Machine |
| 6 | Zitoun et al. (2021) | 4 | Enterprise & Intent (Business Strategy, Culture & People), Data Management (Data Collection & Availability, Metadata Management & Data Quality, Data Storage & Preservation, Data Distribution & Consumption, Data Analytics/Processing/Transformation, Data Governance, Data Monitoring & Logging), Systems (Architecture & Infrastructure, Data Integration, Security), Data Operations (Processes, Data Deployment & Delivery) | 6 | No capability, Initial, Developing Awareness & Pre-Adoption, Defined, Managed, Optimized |
| 7 | Fukas (2022) | 8 | Technologies, Data, People & Competences, Organization & Processes, Strategy & Management, Budget, Products & Services, Ethics & Regulations | 5 | Initial, Assessing, Determined, Managed, Optimized |
| 8 | Gökalp et al. (2022a) | 6 | Business Understanding, Data Understanding, Data Preparation, Model Building, Evaluation, Deployment and Use | 6 | Incomplete, Performed, Managed, Established, Predictable, Innovating |
| 9 | Gökalp et al. (2022b) | 5 | Organization, Strategy Management, Data Analytics, Data Governance, Technology Management | 6 | Incomplete, Performed, Managed, Established, Predictable, Innovating |
| 10 | Corallo et al. (2023) | 5 | Organisation, Data Management, Data Analytics, Infrastructure, Governance & Security | 4 | Unaware, Aware, Action, Mature |
| 11 | Helal et al. (2023) | 13 | Collection, Planning, Management, Integration, Filtering, Enrichment, Analysis, Visualization, Storage, Destruction, Archiving, Quality, Security | 5 | Ad-hoc, Defined, Early adoption, Optimizing, Strategic |
| 12 | Raj M et al. (2023) | 5 | Security, Scalability, Resiliency, Robustness, Dependability | 5 | Level 1 - 5 |
| 13 | Pour et al. (2023) | 4 | Strategic Capabilities, Managerial Capabilities, People Capabilities, Technological Capabilities | 5 | Level 1 - 5 |
| 14 | Cavique et al. (2024) | 4 | Information system, approach to planning, guidance, function | 4 | Level 0 - 3 |
| 15 | Hijriani and Comuzzi (2024) | 7 | Technology, Pipeline, Data, People, Culture, Governance, Strategic Alignment | 4-5 | Varies by dimension; Technology has 4 levels, the rest 5 |
| 16 | Korsten et al. (2024) | 5 | Strategy (ADA Strategy, Strategic Alignment), Data & Governance (Data Architecture, Automation, Data Integration, Data Governance, Data Analytics Tools), People & Culture (Knowledge, Commitment, Team Diversity, Usage), Process Design & Collaboration (Competence & Skills Development, Communication, Portfolio Management, Organizational Collaboration), Performance & Value (Performance Metrics, Innovation Processes) | 4 | Low, Moderate, High, Top |
| 17 | Fornasiero et al. (2025) | 5 | Strategy, Organisation, People, Technology, Data | 5 | Level 0 - 4 |
| 18 | John et al. (2025) | 5 | Data, Model, Deployment, Operations & Infrastructure, Organisation | 5 | Ad Hoc, DataOps, Manual MLOps, Automated MLOps, Kaizen MLOps |

#: Article ID based on concept matrix, #D: Number of dimensions, #ML: Number of maturity levels

of the MMs. Dimensions are characterized as different aspects of organizational capabilities or functions (Gökşen and Gökşen, 2021). These dimensions collectively indicate the general maturity state. The number of top-level dimensions across the MMs varies between two and 13 (Table 4), and almost half of the publications employ five dimensions. For instance, the MM for Data Analytics of Korsten et al. (2024) contains the dimensions Strategy, Data & Governance, People & Culture, Process Design & Collaboration, and Performance & Value. The largest number of main dimensions (13) is featured in the BD MM of Helal et al. (2023). However, other authors assign multiple subcapabilities to a dimension, as can be seen in the data management MM of Zitoun et al. (2021). An organizational dimension is prescribed in nine separate articles. However, its scope can differ. Gökalp et al. (2022a) and Corallo et al. (2023) include the degree of communication and collaboration among DS teams and efficient leadership. Menukhin et al. (2019) tightly couple the organizational dimension to the question of whether analytics is used in everyday decision-making in the organization. The related Strategy dimension is also quite common among the general DS and analytics-focused MMs, often assessing the availability of an overall strategy for analytics (Korsten et al., 2024). Business units, such as the management or other stakeholders, must support DS activities to further invest in them (Gökalp et al., 2022b).
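The spread of dimension counts can be recomputed from the #D column of Table 4, as in the following sketch. It counts only top-level dimensions as listed in the table, so Desouza et al. (2021) enters with two dimensions even though six sub-elements are named. The variable names are illustrative.

```python
from collections import Counter

# "#D" column of Table 4, keyed by article ID.
num_dimensions = {
    1: 6, 2: 9, 3: 2, 4: 5, 5: 5, 6: 4, 7: 8, 8: 6, 9: 5,
    10: 5, 11: 13, 12: 5, 13: 4, 14: 4, 15: 7, 16: 5, 17: 5, 18: 5,
}

# How many MMs use each number of dimensions.
distribution = Counter(num_dimensions.values())
most_common_count, n_models = distribution.most_common(1)[0]

print(min(num_dimensions.values()), max(num_dimensions.values()))
print(f"{n_models} of {len(num_dimensions)} MMs use {most_common_count} dimensions")
```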

Furthermore, Data Governance occurs frequently as a dimension, evaluating the degree to which companies address data security, privacy, and quality problems (Gökalp et al., 2022b). According to Corallo et al. (2023) and Korsten et al. (2024), access management and secure management of data fall under this capability, but not activities such as data processing or data storage. Data and Data Analytics are other typical dimensions. For the latter, Corallo et al. (2023) and Menukhin et al. (2019) both connect it to the existence of data and analytics processes and how the organization manages them. In the work of Hijriani and Comuzzi (2024), the Data dimension provides a rating of the state and availability of the data for analytics but also refers to aspects of data governance. Gökalp et al. (2022b) combine the former activities into their Data Analytics dimension. The MM for MLOps of John et al. (2025) unites data governance, data management, data versioning, and the feature store under Data. Consequently, the dimensions around data differ significantly in their manifestations across the MMs.

Another common dimension is Technology. While Schreckenberg and Moroff (2021) focus here on the use of data warehouses and cross-divisional enterprise resource planning systems, Gökalp et al. (2022b) refer to the degree of consideration of scalable and distributed technologies to efficiently manage the characteristics of BD. Therefore, hardware, software, configuration management, and deployment processes are taken into consideration. Notably, Pour et al. (2023) combine the sub-dimensions Analytical Infrastructure, Data Management, and Analytic tools/Techniques into the technological dimension. Ultimately, Organization, Strategy, Technology, Data, and Analytics can be counted as the main dimensions of the MMs in DS. Nevertheless, MMs that address a particular area of DS (e.g., ML, data pipelines) predominantly propose dimensions that are closely related to their respective focus area.

5 RESEARCH AGENDA

After completion of the descriptive analysis of the

MMs, including the detailed synopsis of dimensions

and maturity levels, this section concentrates on dis-

cussing the two RQs.

5.1 Incorporation of Data Science

Lifecycle Stages

In addition to listing all included papers of the SLR

for transparency purposes, the concept matrix in Table

3 helps to conceptually structure the publications ob-

jectively (Klopper and Lubbe, 2007). This approach

supports a traceable discussion of the findings and

aligns with the principles of the PRISMA guidelines

(Page et al., 2021). The concept matrix indicates

which stages of the DS lifecycle, outlined in Section

2, are addressed by the MMs. Accordingly, when a

corresponding column is marked (X), the MM can be

used to evaluate the maturity of an organization for

some or all activities of the respective DS lifecycle

stage. Thus, this analysis allows readers to compre-

hend which MMs are potentially relevant to the as-

sessment of end-to-end DS maturity.

The concept matrix shows that all of the MMs

consider tasks of Data Collection, Exploration and

Preparation, reinforcing the notion of the pivotal role

of data in these undertakings. Apart from four MMs,

Business Understanding is consistently taken into ac-

count with the common organizational and strategic

dimensions, covering aspects such as planning, skills,

and culture. Analysis and Deployment are also incor-

porated by many MMs. On the other hand, Evalua-

tion and Utilization are addressed the least, with the

former finding a mention in only six MMs. Since

this stage involves the assessment of the analytical

models concerning the business objectives, a reason

for this observation could be found in the strongly

context-dependent (e.g., project characteristics, ana-

lytics type, application) manifestation of this phase.

Five of the reviewed MMs consider aspects of all DS lifecycle stages. For practitioners in search of a holistic MM regarding the DS lifecycle, a look into these models might be useful. One candidate is the ML maturity framework of Akkiraju et al. (2020), which places only limited emphasis on organizational aspects but includes dimensions like model fairness and transparency. In the case of the BD Analytics MM,


Table 5: Coverage of typical DS challenges by MMs, based on Martinez et al. (2021).

| Data Science Challenge | Maturity Models (#) |
|---|---|
| Alignment of objectives | 1-4, 6-10, 13, 15, 17-18 |
| Data governance & management | 1, 3, 5-11, 13, 15-16, 18 |
| IT infrastructure | 1, 3-4, 6-10, 13-14, 18 |
| Analytical model development | 1-2, 5-6, 8-11, 13-14, 16, 18 |
| Skills & training | 1, 3-4, 6-7, 13, 15-17 |
| Data quality | 3, 5-6, 8-9, 11, 15, 17 |
| Data availability | 4-6, 8-9, 11, 15, 17 |
| Data security & privacy | 6-7, 9-12, 15, 17 |
| Deployment approach | 3, 5-6, 8-9, 18 |
| Clear objectives & scope | 1-2, 7-8, 11, 16 |
| DS roles & responsibilities | 1, 9, 10, 16, 18 |
| Emphasis on Business Understanding | 8-9, 11, 14, 16 |
| Knowledge retention | 1-2, 4, 9, 12 |
| Setup for reproducibility | 1-2, 5, 6, 18 |
| Process maturity | 3, 6, 8-9 |
| Result evaluation | 2, 8, 11, 16 |
| Team communication & collaboration | 8, 10, 16, 18 |
| Control processes | 9 |

the dimensions almost exactly align with the DS lifecycle of Haertel et al. (2022) (Gökalp et al., 2022a).

The DSCMM integrates these capabilities as processes across the different dimensions ("process areas") (Gökalp et al., 2022b). While Helal et al. (2023) touch on all stages, too, the model is mainly aligned with the lifecycle of data (including archiving and destruction) and gives lower priority to analytics and its evaluation. In conclusion, it has to be noted that the MMs of Gökalp et al. (2022a) and Gökalp et al. (2022b) achieve the highest coverage of the stages and activities of the DS lifecycle. However, as Utilization is considered together with Deployment, the monitoring and maintenance of analytical models appear to be of lower importance. Hence, developing a meta-MM for DS could constitute a worthwhile contribution.

5.2 Assessment of Data Science Challenges

A crucial purpose of MMs is to guide improvement

(Pereira and Serrano, 2020). In DS, many projects

fail to reach a successful conclusion. Since addressing the challenges in DS undertakings is argued to be necessary to mitigate this problem (Haertel et al., 2023; Martinez et al., 2021), this section analyzes whether the MMs consider common obstacles (RQ 2). Consequently, a valuable

perspective toward improving DS project execution is

covered. Table 5 presents the result of this analysis.

It lists the challenges in DS identified by Martinez

et al. (2021) in the left column. The right column

contains the IDs of all MMs (based on Table 4) that

consider the respective issue in their maturity assess-

ment. The table is sorted in descending order based

on the number of MMs that address a given obstacle.
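The counting behind Table 5 can be retraced with a short script. The following is a minimal Python sketch and not part of the original study: it takes the ID strings from the right column of Table 5 as input, and the names TABLE_5, expand_ids, challenge_counts, and mm_counts are illustrative choices made here.

```python
from collections import Counter

# Right column of Table 5: MM IDs per challenge, kept as the printed range strings.
TABLE_5 = {
    "Alignment of objectives": "1-4, 6-10, 13, 15, 17-18",
    "Data governance & management": "1, 3, 5-11, 13, 15-16, 18",
    "IT infrastructure": "1, 3-4, 6-10, 13-14, 18",
    "Analytical model development": "1-2, 5-6, 8-11, 13-14, 16, 18",
    "Skills & training": "1, 3-4, 6-7, 13, 15-17",
    "Data quality": "3, 5-6, 8-9, 11, 15, 17",
    "Data availability": "4-6, 8-9, 11, 15, 17",
    "Data security & privacy": "6-7, 9-12, 15, 17",
    "Deployment approach": "3, 5-6, 8-9, 18",
    "Clear objectives & scope": "1-2, 7-8, 11, 16",
    "DS roles & responsibilities": "1, 9, 10, 16, 18",
    "Emphasis on Business Understanding": "8-9, 11, 14, 16",
    "Knowledge retention": "1-2, 4, 9, 12",
    "Setup for reproducibility": "1-2, 5, 6, 18",
    "Process maturity": "3, 6, 8-9",
    "Result evaluation": "2, 8, 11, 16",
    "Team communication & collaboration": "8, 10, 16, 18",
    "Control processes": "9",
}


def expand_ids(spec):
    """Expand a string such as '1-4, 6, 9-10' into a set of integer MM IDs."""
    ids = set()
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            first, last = part.split("-")
            ids.update(range(int(first), int(last) + 1))
        else:
            ids.add(int(part))
    return ids


# Challenge -> set of MM IDs that address it.
coverage = {challenge: expand_ids(spec) for challenge, spec in TABLE_5.items()}

# Number of MMs that address each challenge.
challenge_counts = {challenge: len(ids) for challenge, ids in coverage.items()}

# Number of listed challenges under which each MM ID appears.
mm_counts = Counter(mm_id for ids in coverage.values() for mm_id in ids)

if __name__ == "__main__":
    for challenge, count in sorted(challenge_counts.items(), key=lambda kv: -kv[1]):
        print(f"{count:2d}  {challenge}")
    for mm_id, count in sorted(mm_counts.items()):
        print(f"MM {mm_id:2d} appears under {count} challenges")
```

Here, challenge_counts gives the number of MMs per challenge, while mm_counts shows under how many of the listed challenges each MM ID appears, which allows a quick check of whether any single model covers every challenge.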

Given the numerous MMs that feature strategic, organizational, and data governance dimensions, it is not surprising that the challenges alignment of business objectives and DS goals and appropriate data governance and management are commonly addressed. This also applies to the IT infrastructure, which has high significance, especially in BD environments, and the difficulty of developing analytical models with suitable technologies. Additionally, the


staff skill and training aspect and ensuring sufficient

data quality are valued by many MMs. Among the

data-specific obstacles, data availability and data se-

curity are considered often, too.

The remaining challenges identified by Martinez et al. (2021) are evaluated by only one-third or fewer of the MMs. This includes especially managerial intricacies (e.g., lacking emphasis on Business Understanding, clear project scope, suitable approaches for Deployment). Notably, a clear structure of roles and responsibilities and measures to ensure effective communication and collaboration in a DS team seem less important to many MMs compared to the availability and advancement of employee competencies. Moreover, tackling the low level of process maturity in many DS projects by relying on an established DS workflow is taken up by merely four MMs and only plays a significant role in the publications of Gökalp et al. (2022a) and Gökalp et al. (2022b). In line with the subpar coverage of the Evaluation stage of the DS lifecycle, evaluation methods for analytical results are rarely considered in the MMs. Another important issue in DS is insufficient reproducibility and knowledge retention. These aspects include data, models, experiments, and lessons learned (Martinez et al., 2021) and are not of predominant significance in the analyzed MMs. One exception is the MLOps MM proposed by John et al. (2025), since MLOps aims to reduce the difficulty in ML development through feature stores and experiment and model (metadata) tracking. Finally, it is hard to establish realistic timelines for DS undertakings, motivating the need for control processes for DS activities (Martinez et al., 2021). However, this requirement is exclusively reviewed in the article of Gökalp et al. (2022b).

While all of the DS challenges under review were

considered at least once and some even played a role

in the maturity assessment in a majority of the MMs,

none of the models under examination included all

considered obstacles. To strengthen the impact of a DS MM toward its intended purpose, that is, helping to improve the DS project success rate, high coverage of the typical challenges should be pursued. Therefore, this further underscores the relevance of a meta-MM for DS, constructed based on the findings of this SLR.

6 CONCLUSION

MMs enable organizations to assess and realize the current value of emerging capabilities and technologies (Hüner et al., 2009). Determining the maturity level of an enterprise across the different dimensions of an MM can foster the identification of recommendations toward improvement measures (Becker et al., 2009). As DS struggles with a high share of uncompleted projects (VentureBeat, 2019), MMs can be considered useful in this domain. In this paper, we retrieved 18 MMs for DS from the literature and analyzed their coverage of the DS lifecycle stages (Haertel et al., 2022) and major DS project challenges.

While some MMs address all phases of a DS under-

taking, gaps and weaknesses can still be found (RQ

1). Additionally, no MM considers all reviewed DS

issues for its maturity assessment (RQ 2). Based on

our analysis and the inputs from the existing models,

the development and evaluation of a DS meta-MM

constitutes a future research stream. For this objec-

tive, the knowledge base could be extended through

the inclusion of additional literature databases or the

expansion of the search query with related keywords

(e.g., Industry 4.0, AI).

REFERENCES

Akkiraju, R., Sinha, V., Xu, A., Mahmud, J., Gundecha, P.,

Liu, Z., Liu, X., and Schumacher, J. (2020). Char-

acterizing machine learning processes: A maturity

framework. Lecture Notes in Computer Science (in-

cluding subseries Lecture Notes in Artificial Intelli-

gence and Lecture Notes in Bioinformatics), 12168

LNCS:17 – 31.

Becker, J., Knackstedt, R., and Pöppelbuß, J. (2009). Developing Maturity Models for IT Management. Business & Information Systems Engineering, 1(3):213–222.

Bishop, C. M. (2006). Pattern Recognition and Machine

Learning.

Brocke, J. v., Simons, A., Niehaves, B., Riemer, K., Plat-

tfaut, R., and Cleven, A. (2009). Reconstructing the

Giant: On the Importance of Rigour in Documenting

the Literature Search Process.

Business Wire (2021). Data Creation and Replication Will

Grow at a Faster Rate Than Installed Storage Ca-

pacity, According to the IDC Global DataSphere and

StorageSphere Forecasts. Accessed: 2025-03-31.

Carvalho, J. V., Rocha, Á., Vasconcelos, J., and Abreu, A. (2019). A health data analytics maturity model for hospitals information systems. International Journal of Information Management, 46:278–285.

Cavique, L., Pinheiro, P., and Mendes, A. (2024). Data

Science Maturity Model: From Raw Data to Pearl’s

Causality Hierarchy. Lecture Notes in Networks and

Systems, 801:326 – 335.

Chang, W. L. and Grady, N. (2019). NIST Big Data Inter-

operability Framework: Volume 1, Definitions.

Chen, H., Chiang, R. H. L., and Storey, V. C. (2012). Busi-

ness Intelligence and Analytics: From Big Data to Big

Impact. MIS Quarterly, 36(4):1165–1188.


Corallo, A., Crespino, A. M., Vecchio, V. D., Gervasi, M.,

Lazoi, M., and Marra, M. (2023). Evaluating maturity

level of big data management and analytics in indus-

trial companies. Technological Forecasting and Social

Change, 196:122826.

Desouza, K., Götz, F., and Dawson, G. S. (2021). Maturity Model for Cognitive Computing Systems in the Public Sector.

Fornasiero, R., Kiebler, L., Falsafi, M., and Sardesai, S.

(2025). Proposing a maturity model for assessing Ar-

tificial Intelligence and Big data in the process indus-

try. International Journal of Production Research,

63(4):1235–1255.

Fukas, P. (2022). The Management of Artificial Intelli-

gence: Developing a Framework Based on the Arti-

ficial Intelligence Maturity Principle. In CEUR Work-

shop Proceedings, volume 3139, pages 19 – 27.

Gartner (2019). Our Top Data and Analytics Predicts for

2019.

Gökalp, M. O., Gökalp, E., Kayabay, K., Gökalp, S., Koçyiğit, A., and Eren, P. E. (2022a). A process assessment model for big data analytics. Computer Standards & Interfaces, 80:103585.

Gökalp, M. O., Gökalp, E., Kayabay, K., Koçyiğit, A., and Eren, P. E. (2021). Data-driven manufacturing: An assessment model for data science maturity. Journal of Manufacturing Systems, 60:527–546.

Gökalp, M. O., Gökalp, E., Kayabay, K., Koçyiğit, A., and Eren, P. E. (2022b). The development of the data science capability maturity model: a survey-based research. Online Information Review, 46(3):547–567.

Gökşen, H. and Gökşen, Y. (2021). A Review of Maturity Models Perspective of Level and Dimension. Proceedings, 74(1).

Haertel, C., Pohl, M., Nahhas, A., Staegemann, D., and Tur-

owski, K. (2022). Toward A Lifecycle for Data Sci-

ence: A Literature Review of Data Science Process

Models. In PACIS 2022 Proceedings.

Haertel, C., Staegemann, D., Daase, C., Pohl, M., Nahhas,

A., and Turowski, K. (2023). MLOps in Data Sci-

ence Projects: A Review. In 2023 IEEE International

Conference on Big Data (BigData), pages 2396–2404.

IEEE.

Helal, I. M. A., Elsayed, H. T., and Mazen, S. A.

(2023). Assessing and Auditing Organization’s Big

Data Based on COBIT 5 Controls: COVID-19 Ef-

fects. Lecture Notes in Networks and Systems, 753

LNNS:119 – 153.

Hijriani, A. and Comuzzi, M. (2024). Towards a Maturity

Model of Process Mining as an Analytic Capability.

Hüner, K. M., Ofner, M., and Otto, B. (2009). Towards a maturity model for corporate data quality management. In Proceedings of the ACM Symposium on Applied Computing, pages 231–238.

IT Governance Institute (2007). COBIT 4.1. IT Governance

Institute.

Janiesch, C., Zschech, P., and Heinrich, K. (2021). Ma-

chine learning and deep learning. Electronic Markets,

31(3):685–695.

John, M. M., Olsson, H. H., and Bosch, J. (2025). An em-

pirical guide to MLOps adoption: Framework, matu-

rity model and taxonomy. Information and Software

Technology, page 107725.

Klopper, R. and Lubbe, S. (2007). The Matrix Method of

Literature Review. Alternation, 14.

Korsten, G., Aysolmaz, B., Ozkan, B., and Turetken, O.

(2024). A Capability Maturity Model for Developing

and Improving Advanced Data Analytics Capabilities.

PAJAIS Preprints (Forthcoming).

Martinez, I., Viles, E., and Olaizola, I. G. (2021). Data Sci-

ence Methodologies: Current Challenges and Future

Approaches. Big Data Research 24.

Menukhin, O., Mandungu, C., Shahgholian, A., and

Mehandjiev, N. (2019). Now and Next: A Maturity

Model to Guide Analytics Growth. UK Academy for

Information Systems Conference Proceedings 2019.

Page, M., Moher, D., Bossuyt, P., Boutron, I., Hoffmann, T., Mulrow, C., Shamseer, L., Tetzlaff, J., Akl, E., Brennan, S., Chou, R., Glanville, J., Grimshaw, J., Hróbjartsson, A., Lalu, M., Li, T., Loder, E., Mayo-Wilson, E., McDonald, S., and McKenzie, J. (2021). PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ, 372:n160.

Paulk, M., Curtis, B., Chrissis, M., and Weber, C. (1993).

Capability maturity model, version 1.1. IEEE Soft-

ware, 10(4):18–27.

Pereira, R. and Serrano, J. (2020). A review of methods

used on it maturity models development: A systematic

literature review and a critical analysis. Journal of

Information Technology, 35(2):161–178.

Pour, M. J., Abbasi, F., and Sohrabi, B. (2023). Toward a

Maturity Model for Big Data Analytics: A Roadmap

for Complex Data Processing. International Jour-

nal of Information Technology and Decision Making,

22(1):377 – 419.

Rahlmeier, N. and Hopf, K. (2024). Bridging Fields of Prac-

tice: How Boundary Objects Enable Collaboration in

Data Science Initiatives. Wirtschaftsinformatik 2024

Proceedings, 55.

Raj M, A., Bosch, J., and Olsson, H. H. (2023). Maturity

Assessment Model for Industrial Data Pipelines. In

2023 30th Asia-Pacific Software Engineering Confer-

ence (APSEC), pages 503–513.

Saltz, J. S. and Krasteva, I. (2022). Current approaches for

executing big data science projects - a systematic lit-

erature review. PeerJ Computer Science, 8(e862).

Schreckenberg, F. and Moroff, N. U. (2021). Developing

a maturity-based workflow for the implementation of

ML-applications using the example of a demand fore-

cast. Procedia Manufacturing, 54:31–38.

Schulz, M., Neuhaus, U., Kaufmann, J., Badura, D.,

Kuehnel, S., Badewitz, W., Dann, D., Kloker, S.,

Alekozai, E. M., and Lanquillon, C. (2020). Intro-

ducing DASC-PM: A Data Science Process Model. In

Proceedings of ACIS 2020. Association for Informa-

tion Systems.

Shah, T. R. (2022). Can big data analytics help organisations achieve sustainable competitive advantage? A developmental enquiry. Technology in Society, 68.

Sharma, R., Mithas, S., and Kankanhalli, A. (2014).

Transforming decision-making processes: a research

agenda for understanding the impact of business ana-

lytics on organisations. European Journal of Informa-

tion Systems, 23(4):433–441.

Thiess, T. and Müller, O. (2018). Towards design principles for data-driven decision making - an action design research project in the maritime industry. ECIS 2018 Proceedings.

VentureBeat (2019). Why do 87% of data science projects

never make it into production? [Online: accessed

2024-11-14].

Webster, J. and Watson, R. T. (2002). Analyzing the Past to

Prepare for the Future: Writing a Literature Review.

MIS Quarterly, (26):13–23.

Yablonsky, S. (2021). AI-driven platform enterprise matu-

rity: from human led to machine governed. Kyber-

netes, 50(10):2753 – 2789.

Zitoun, C., Belghith, O., Ferjaoui, S., and Gabouje, S. S. D.

(2021). DMMM: Data Management Maturity Model.

In 2021 International Conference on Advanced Enter-

prise Information System (AEIS), pages 33–39.
