AI Virtual Mouse System: Revolutionizing Human-Computer Interaction with Gesture-Based Control

Kona Siva Naga Malleswara Rao, R. Thanga Selvi and T. Kujani

Department of Computer Science and Engineering, Vel Tech Rangarajan Dr Sagunthala R&D Institute of Science and Technology, Chennai, India

Keywords: Gesture Recognition, Computer Vision, Human-Computer Interaction (HCI), Machine Learning, Virtual Input, Gesture-Based Control, Hand Tracking, Image Processing.

Abstract: The AI virtual mouse system is an advance in Human-Computer Interaction (HCI) technology that reimagines traditional mouse input. Using computer vision through webcams or built-in cameras, the system lets users control their computers with intuitive hand gestures and precise fingertip detection. The project is especially valuable for individuals with disabilities and for those seeking a more natural interaction with their devices. It focuses on essential mouse functions such as left-click, right-click, and cursor movement, and it eliminates the need for a physical mouse. Because no external components such as batteries or dongles are required, interaction is simpler and more intuitive. The system's core functionality relies on real-time image processing: frames captured continuously from the webcam are filtered and converted before analysis. This supports accurate interpretation of hand gestures, and color recognition adds precision for discerning subtle movements. Because processing happens in real time, the system delivers a dynamic and responsive experience that enhances user engagement during computer interactions.

1 INTRODUCTION

In a computer system, a mouse is a pointing device that detects two-dimensional motion relative to a surface. This motion is converted into movement of a cursor on a display, which lets the user manipulate the graphical user interface (GUI). It is difficult to imagine modern life without computers, which are among the greatest innovations ever made by humans. For people of all ages, using a computer has become a necessity in practically every aspect of daily life, and we rely on computers to facilitate our work. However, no matter how precise a mouse is, it still has physical and technical constraints that must be considered.

Since the release of mobile devices with touch-screen technology, people have begun to expect the same technology on all other devices, including desktop computers. Although touch-screen technology for desktop computers already exists, its cost can be prohibitive. An alternative to the touch screen is therefore a virtual human-computer interaction device that uses a webcam or other image-capturing device to replace the physical mouse and keyboard. A software program continuously uses the webcam to track the user's gestures, processes them, and translates them into the motion of a pointer, much like a physical mouse. In this project, a finger-tracking-based virtual mouse application is designed and implemented with a regular webcam. It is built on the object-tracking concept of Artificial Intelligence and the OpenCV module of Python.
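The translation from a tracked fingertip to pointer motion described above can be sketched as follows. This is a minimal Python sketch, not the authors' code. It assumes a hypothetical `margin` that defines an inner "active" rectangle of the camera frame, plus an optional `mirror` flag for front-facing cameras. The function name and default values are illustrative.

```python
from typing import Tuple


def camera_to_screen(
    x: float,
    y: float,
    frame_size: Tuple[int, int],
    screen_size: Tuple[int, int],
    margin: int = 100,
    mirror: bool = False,
) -> Tuple[float, float]:
    """Map a fingertip pixel position in the camera frame to a cursor position.

    Only an inner rectangle of the frame (inset by the hypothetical `margin`)
    is used, so the user can reach the screen edges while the hand stays in view.
    """
    frame_w, frame_h = frame_size
    screen_w, screen_h = screen_size
    if frame_w <= 2 * margin or frame_h <= 2 * margin:
        raise ValueError("margin too large for this frame size")

    def interp(v: float, lo: float, hi: float, out_max: float) -> float:
        v = min(max(v, lo), hi)  # clamp so the cursor never leaves the screen
        return (v - lo) / (hi - lo) * out_max

    sx = interp(x, margin, frame_w - margin, screen_w - 1)
    sy = interp(y, margin, frame_h - margin, screen_h - 1)
    if mirror:  # undo the left/right flip of a front-facing camera
        sx = (screen_w - 1) - sx
    return sx, sy
```

A cursor library (for example `pyautogui.moveTo`) would then receive the returned coordinates; the paper does not say which library the authors used. Clamping to an inset rectangle trades a little camera field of view for full screen reach.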

Rao, K. S. N. M., Selvi, R. T. and Kujani, T.

AI Virtual Mouse System: Revolutionizing Human-Computer Interaction with Gesture-Based Control.

DOI: 10.5220/0013734500004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 895-900

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

2 LITERATURE REVIEW

Chen-Chiung Hsieh, Dung-Hua Liou et al. (Hsieh, Liou, et al. 2021) implemented adaptive skin color models and a motion history image-based technique for detecting the direction of hand movement. The approach achieved an average accuracy of 94.1%, with a processing time of 3.81 milliseconds per frame. Its primary limitation is difficulty recognizing more complex hand gestures in a working environment.
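Hsieh et al. build their direction detector on a motion history image (MHI). The sketch below is a simplified illustration under stated assumptions, not their implementation. Pixels flagged as moving in the current frame are set to a maximum value `tau`, and all other pixels decay by one per frame. The motion direction is then estimated as the vector from the centroid of older motion to the centroid of the newest motion. The binary motion masks, the one-per-frame decay, and the centroid comparison are all assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Grid = List[List[int]]
Point = Tuple[float, float]


def update_mhi(mhi: Grid, motion: Sequence[Sequence[bool]], tau: int) -> Grid:
    """One MHI step: moving pixels are set to tau, the rest decay by 1 (floor 0)."""
    return [
        [tau if moved else max(0, h - 1) for h, moved in zip(row_h, row_m)]
        for row_h, row_m in zip(mhi, motion)
    ]


def _centroid(points: List[Tuple[int, int]]) -> Optional[Point]:
    if not points:
        return None
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def motion_direction(mhi: Grid, tau: int) -> Optional[Point]:
    """Vector from the centroid of older motion to the centroid of the newest motion."""
    newest = [(x, y) for y, row in enumerate(mhi) for x, h in enumerate(row) if h == tau]
    older = [(x, y) for y, row in enumerate(mhi) for x, h in enumerate(row) if 0 < h < tau]
    c_new, c_old = _centroid(newest), _centroid(older)
    if c_new is None or c_old is None:
        return None  # not enough motion history to estimate a direction
    return (c_new[0] - c_old[0], c_new[1] - c_old[1])
```

For example, a blob that moves one pixel to the right on each of three frames leaves a rising ramp in the MHI. The estimated direction then has a positive x component.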

Chang-Yi Kao and Chin-Shyurng Fahn applied vision-based hand gesture identification to an HCI interface for control purposes. According to their experimental findings, the face tracking rate is over 97% under typical conditions and over 94% when the face is temporarily occluded. High-configuration computers are required for accurate results. The primary goal of this research was to create a real-time hand gesture detection system based on skin color. Hand gestures can readily communicate thoughts and actions. Different hand shapes, once detected by the gesture recognition system and processed into related events, therefore have the potential to give computer vision systems a more natural interface.
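Skin-color detection, used by Kao and Fahn and, in adaptive form, by Hsieh et al., is often performed in the YCrCb color space. There, skin tones cluster in a compact Cr/Cb region that is largely independent of brightness. The sketch below uses the RGB-to-YCrCb coefficients that OpenCV documents for 8-bit images, together with a fixed Cr/Cb box. The box limits are a commonly quoted choice adopted here as an assumption; an adaptive model would update them at runtime.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def rgb_to_ycrcb(r: int, g: int, b: int) -> Tuple[float, float, float]:
    """8-bit RGB to YCrCb using the coefficients OpenCV documents for this conversion."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + 128
    cb = (b - y) * 0.564 + 128
    return y, cr, cb


def is_skin(pixel: RGB, cr_range=(133, 173), cb_range=(77, 127)) -> bool:
    """Fixed-box skin test on Cr/Cb; luminance Y is ignored to tolerate lighting changes."""
    _, cr, cb = rgb_to_ycrcb(*pixel)
    return cr_range[0] <= cr <= cr_range[1] and cb_range[0] <= cb <= cb_range[1]


def skin_mask(image: List[List[RGB]]) -> List[List[bool]]:
    """Binary mask marking candidate skin pixels in a row-major RGB image."""
    return [[is_skin(px) for px in row] for row in image]
```

A fixed box is fast but fragile under colored lighting. That fragility is the motivation for the adaptive skin models mentioned above.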

Ashwini M. et al. (Mhetar, Sriroop, et al. 2014) described a machine-user interface that performs hand gesture recognition using multimedia techniques and basic computer vision. They identified a significant limitation: hand segmentation and skin-pixel detection must be completed on the stored frames before the gesture comparison algorithms can be applied. In their project, a camera captured hand motions using color detection methods, and the web camera is the essential component of the technique. The authors aimed to construct a virtual human-computer interface device cost-effectively. The work had some restrictions, such as the need for a light background in the operating environment and the absence of vividly colored objects. It also functions well only on computers with a specific high configuration.

K. S. Varun et al. (Varun, Puneeth et al. 2020) developed models based on color detection, in which mouse movement follows highlighted colors provided by the user. A two-finger input creates two rectangles, and the average point of the two acts as the mouse pointer. At runtime, the pointer follows this point as it moves, which implements mouse movement. The position of predetermined colored caps in a mask created for system comprehension determines how the mouse pointer is updated. To detect the predetermined colored objects that drive mouse movement, the image is converted from RGB to a black-and-white mask; this approach achieved 84% accuracy. If the colored caps blend in with the background, they are not detected and no mouse movement is possible.
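The two-rectangle pointer described by Varun et al. reduces to averaging the centers of the two detected caps. Below is a minimal sketch with hypothetical function names. It assumes each cap is given as an `(x, y, w, h)` rectangle, which is the form OpenCV's `cv2.boundingRect` returns.

```python
from typing import Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, w, h): top-left corner plus size
Point = Tuple[float, float]


def rect_center(rect: Rect) -> Point:
    x, y, w, h = rect
    return (x + w / 2, y + h / 2)


def pointer_from_two_caps(cap_a: Optional[Rect], cap_b: Optional[Rect]) -> Optional[Point]:
    """Average the centers of two colored-cap rectangles; None if either cap is missing."""
    if cap_a is None or cap_b is None:
        return None  # a cap that blends into the background yields no pointer
    (ax, ay), (bx, by) = rect_center(cap_a), rect_center(cap_b)
    return ((ax + bx) / 2, (ay + by) / 2)
```

Returning `None` when a cap is missing mirrors the failure mode noted above: with no rectangle to average, the cursor cannot move.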

G. Sai Mahitha et al. (Gupta, Jain et al. 2020) proposed a model in which users control the mouse cursor by placing their fingers in front of the computer's webcam. The finger gestures are captured and processed with a webcam-based color detection technique. Users move the pointer with fingers wearing colored tapes or caps, and actions such as dragging files and left-clicking are performed by making specific finger gestures. The system also handles file transfers between two PCs connected to the same network. It uses only a low-resolution webcam as a sensor to track the user's hands in two dimensions. The reported accuracy is 92%. However, the cursor cannot be moved if the colored caps blend in with the background, because they are then not detected.

Vijay Kumar Sharma et al. (Sharma, Kumar et al. 2020) described a system implemented with Python and OpenCV. The camera output is displayed on the system's screen so that the user can adjust it further, and NumPy, math, and mouse are used as Python dependencies. The goal of the work was to make the machine more interactive and responsive to human behavior. The paper also aimed to provide a system that is portable, inexpensive, and compatible with any common operating system. The system controls the mouse pointer by identifying the user's hand and moving the pointer in that hand's direction. The program handles basic mouse actions, including left-clicking, dragging, and cursor movement.

Prachi Agarwal et al. (Agarwal, Sharma et al. 2020) proposed using a real-time camera to control cursor movement. The required software is OpenCV and Python, with a webcam as the input device. The camera's output may be shown on the system's display screen, and the Python dependencies are NumPy, math, and mouse. The authors applied computer vision and HCI (Human-Computer Interaction) techniques with the aim of contributing to future vision-based human-machine interaction.

The proposed article addresses the use of hand gestures to control mouse functions. Its primary actions are mouse movement, left- and right-button taps, double taps, and up- and down-scrolling. Users can select a color from a limited set of defined color bands, choosing the band that best matches the backdrop and lighting conditions; the available bands may change depending on the background. For instance, when the system is first started, it lets the user select one of several hues (green, yellow, red, and blue).
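Letting the user pick one of a few predefined color bands amounts to thresholding hue. The sketch below uses only the Python standard library. It builds a black-and-white mask for a chosen band and returns the marker's centroid. The hue windows and the saturation and brightness floors are hypothetical values, because the reviewed article does not list its ranges; in practice they would be tuned to the background and lighting.

```python
import colorsys
from typing import Dict, List, Optional, Tuple

RGB = Tuple[int, int, int]

# Hypothetical hue windows in degrees for the four selectable bands.
COLOR_BANDS: Dict[str, List[Tuple[float, float]]] = {
    "Red": [(0.0, 15.0), (345.0, 360.0)],  # red wraps around 0 degrees
    "Yellow": [(45.0, 70.0)],
    "Green": [(90.0, 150.0)],
    "Blue": [(200.0, 250.0)],
}
MIN_SATURATION = 0.4  # assumed: reject greyish pixels
MIN_VALUE = 0.3       # assumed: reject very dark pixels


def in_band(pixel: RGB, band: str) -> bool:
    r, g, b = (c / 255.0 for c in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # h, s, v are all in [0, 1]
    if s < MIN_SATURATION or v < MIN_VALUE:
        return False
    hue = h * 360.0
    return any(lo <= hue < hi for lo, hi in COLOR_BANDS[band])


def band_mask(image: List[List[RGB]], band: str) -> List[List[bool]]:
    """Black-and-white mask: True where a pixel falls inside the chosen band."""
    return [[in_band(px, band) for px in row] for row in image]


def mask_centroid(mask: List[List[bool]]) -> Optional[Tuple[float, float]]:
    pts = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not pts:
        return None  # marker not visible, e.g. it blends into the background
    return (sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts))
```

Offering several bands lets the user pick a color that is absent from the current background. This is the same failure mode that limits fixed-color approaches.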

3 EXISTING SYSTEM

Several AI virtual mouse systems already exist, each taking a different approach to enhancing user interaction. These systems commonly use computer vision, machine learning, and sensor technologies to interpret gestures and control a virtual cursor. One notable example uses head movements as the primary input mechanism for controlling mouse events: the user's head movement is tracked and interpreted as commands that move the cursor on the screen.

Existing System: AI Virtual Mouse Using Head Movement

In the existing system, head movements are the primary input mechanism for controlling mouse events. Tracking is typically performed with a webcam or built-in camera that captures the user's head gestures in real time. Computer vision algorithms then analyze the video feed to detect and interpret these movements. As the user moves their head, the system translates the movements into corresponding cursor movements, allowing the user to navigate and interact with the digital interface.

While this approach provides a hands-free alternative to traditional mouse devices, it has certain limitations. Prolonged head movement can cause discomfort, and control precision can be affected by factors such as lighting conditions and camera quality.
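The text does not specify how head displacement becomes cursor motion. One common scheme, assumed here purely for illustration, treats the head's offset from a calibrated neutral position like a joystick. Offsets inside a dead zone are ignored, and larger offsets move the cursor proportionally on each frame. `dead_zone` and `gain` are hypothetical parameters.

```python
from typing import Tuple


def head_to_cursor_step(offset_x: float, offset_y: float,
                        dead_zone: float = 5.0, gain: float = 0.5) -> Tuple[float, float]:
    """Turn a head offset from a neutral pose (camera pixels) into a per-frame cursor step."""

    def axis(d: float) -> float:
        if abs(d) <= dead_zone:
            return 0.0  # small involuntary movements leave the cursor still
        sign = 1.0 if d > 0 else -1.0
        return sign * (abs(d) - dead_zone) * gain

    return axis(offset_x), axis(offset_y)
```

The dead zone lets the user rest the head without the cursor drifting. However, steering still requires holding the head away from neutral, which relates to the discomfort described above.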

The disadvantages of the existing system include problems with accuracy and reliability, complexity in multitasking, limited adaptability, less intuitive interaction, a limited gesture vocabulary, and weaker security and privacy.

4 PROPOSED SYSTEM

The proposed AI virtual mouse system takes a new approach to human-computer interaction by using hand and finger gestures for mouse control. It aims to address the limitations of the existing head-movement-based approach, offering users a more intuitive, versatile, and comfortable way of interacting with digital interfaces. The proposed system supports a broad range of actions, including cursor movement, left-clicking, right-clicking, and scrolling. This versatility is achieved by mapping different hand and finger gestures to specific mouse events, giving users a comprehensive set of controls. By combining these capabilities, the proposed system seeks to offer a reliable and innovative solution for gesture-based human-computer interaction.
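Mapping gestures to mouse events can be kept as a lookup table from finger states to event names. The sketch below is hypothetical. The paper does not specify which finger combinations trigger which events, so the table entries are illustrative only.

```python
from typing import Dict, Optional, Sequence, Tuple

# Finger states in the order (thumb, index, middle, ring, pinky); True = raised.
FingerState = Tuple[bool, bool, bool, bool, bool]

# Hypothetical gesture table, not taken from the paper.
GESTURE_TO_EVENT: Dict[FingerState, str] = {
    (False, True, False, False, False): "move_cursor",
    (False, True, True, False, False): "left_click",
    (True, True, False, False, False): "right_click",
    (False, True, True, True, True): "scroll",
}


def gesture_to_event(fingers: Sequence[bool]) -> Optional[str]:
    """Return the mouse event for a finger-state pattern, or None if it is unmapped."""
    if len(fingers) != 5:
        raise ValueError("expected five finger states")
    return GESTURE_TO_EVENT.get(tuple(bool(f) for f in fingers))
```

Keeping the mapping as data means a gesture can be added or reassigned without changing the recognition code. It would also make per-user personalization a matter of swapping tables.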

The advantages of the proposed system include adaptive learning and personalization, enhanced accuracy and precision, innovative human-computer interaction, increased accessibility and user-friendliness, robust performance in diverse environments, security, and versatility through multimodal interaction.

The proposed system introduces a more intuitive method by recognizing hand and finger gestures with computer vision. This shift gives users a touch-free, natural way to interact with the virtual mouse. In terms of user experience, the existing system can cause discomfort because it requires prolonged head movement. The proposed system addresses this with a more dynamic and adaptable interaction model, in which users control the virtual mouse with subtle hand and finger gestures.

Furthermore, advanced computer vision algorithms allow the proposed system to achieve higher precision in detecting and interpreting user gestures. The system can recognize a diverse range of hand movements, enabling more nuanced control over the virtual mouse, including intricate actions such as scrolling and precise pointing.


5 RESULTS AND DISCUSSIONS

The proposed AI virtual mouse system offers an efficient alternative to conventional human-computer interaction. By using computer vision through webcams or built-in cameras, the system achieves real-time hand tracking and dynamic hand gesture interpretation. This approach eliminates the need for traditional input devices and gives users an intuitive, touch-free means of controlling computer interfaces.

The system's efficiency is particularly evident in its responsiveness: it accurately captures users' hand movements and translates them into precise digital commands. The MediaPipe library contributes to this efficiency by extracting hand landmarks quickly and accurately.

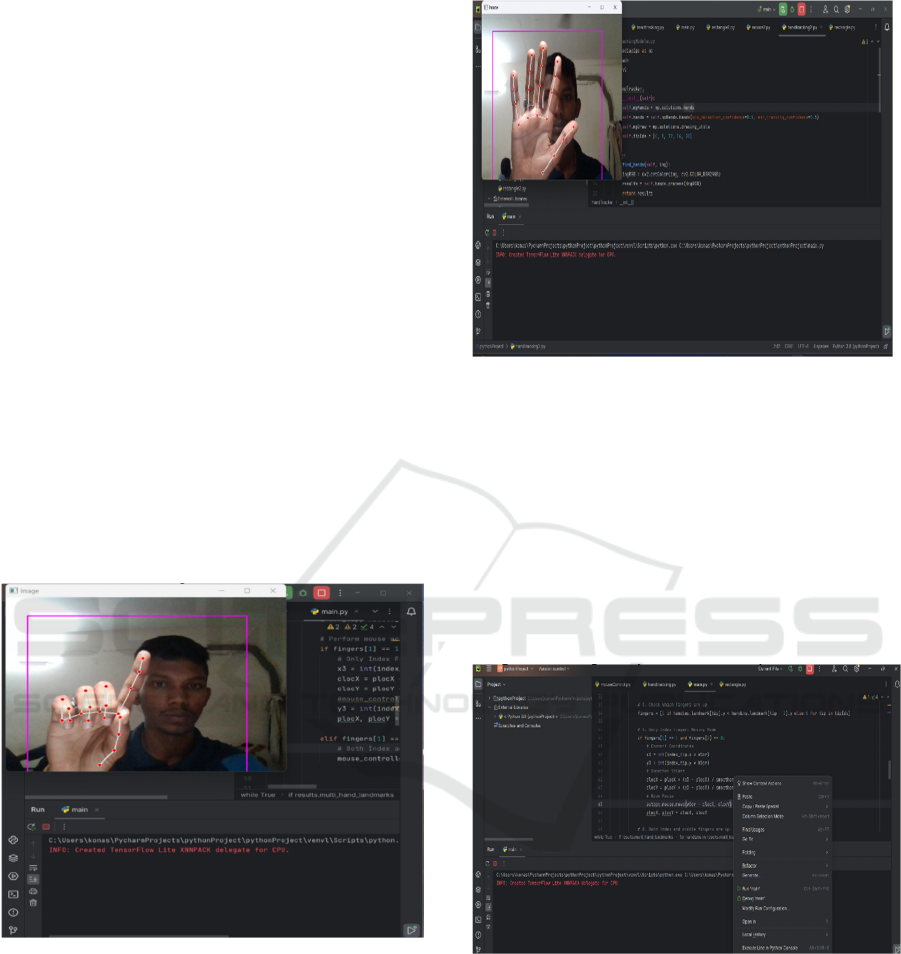

Figure 1 demonstrates the identification of hand gestures using computer vision in the AI virtual mouse system. Figure 2 shows the recognition of specific gestures that control cursor movement. Figure 3 shows how hand gestures are translated into the corresponding mouse events, such as clicks.

Figure 1: Hand Gesture Identification

Figure 1 illustrates the system's ability to identify hand gestures using computer vision. Frames are captured continuously from the webcam, and the system processes the user's hand movements in real time. The MediaPipe library handles hand tracking, extracting key landmarks from the hand to pinpoint precise gestures. This step forms the basis for interpreting which gesture is being performed, whether for cursor control, clicks, or scrolling.

Figure 2: Hand Gesture Recognition

Figure 2 shows the system's recognition capabilities, focusing on how the gestures identified in Figure 1 are translated into meaningful actions. For example, the system can recognize when the user makes a pointing gesture, which directs the mouse pointer across the screen. The hand's dynamic movements are recognized with high accuracy, resulting in smooth cursor movement and responsive action mapping. This ability is key to the system's real-time interaction, ensuring that gestures are processed quickly and accurately.
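The paper reports smooth cursor movement but does not state how frame-to-frame jitter is suppressed. A common option, assumed here, is an exponential moving average over successive cursor positions. `alpha` is a hypothetical tuning parameter: smaller values smooth more but make the cursor lag further behind the hand.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]


class CursorSmoother:
    """Exponential moving average over cursor positions (assumed smoothing method)."""

    def __init__(self, alpha: float = 0.3) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._pos: Optional[Point] = None

    def update(self, x: float, y: float) -> Point:
        """Blend the raw position into the running estimate and return the smoothed one."""
        if self._pos is None:
            self._pos = (float(x), float(y))  # first sample: nothing to smooth yet
        else:
            px, py = self._pos
            self._pos = (px + self.alpha * (x - px), py + self.alpha * (y - py))
        return self._pos
```

With `alpha = 1.0` the smoother passes raw positions through unchanged. This makes it easy to compare smoothed and raw cursor traces.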

Figure 3: Enabling Mouse Event for Hand Gesture

Figure 3 shows the final stage, in which recognized gestures trigger specific mouse events. For example, when the user raises a specific finger, the system detects the gesture and performs a left-click. The figure also shows how the system maps other gestures to corresponding mouse events, such as right-clicking or dragging, providing a touch-free way to interact with the computer. The system's accuracy in translating gestures into commands is crucial for user satisfaction and an intuitive experience.
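Gestures are recognized on every frame, so a click gesture held for half a second would otherwise fire many clicks. The following minimal sketch fires an event once, after a gesture has been held for a few frames, and re-arms only after the gesture ends. It assumes a hypothetical `hold_frames` parameter; the class name is illustrative.

```python
class GestureTrigger:
    """Fire once per gesture: after `hold_frames` consecutive active frames, re-arm on release."""

    def __init__(self, hold_frames: int = 2) -> None:
        if hold_frames < 1:
            raise ValueError("hold_frames must be at least 1")
        self.hold_frames = hold_frames
        self._active_frames = 0
        self._fired = False

    def update(self, gesture_active: bool) -> bool:
        """Feed one frame's recognition result; return True only on the firing frame."""
        if not gesture_active:
            self._active_frames = 0
            self._fired = False  # gesture released: allow the next event
            return False
        self._active_frames += 1
        if not self._fired and self._active_frames >= self.hold_frames:
            self._fired = True
            return True
        return False
```

Requiring a short hold also filters out single-frame misrecognitions before they become clicks.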

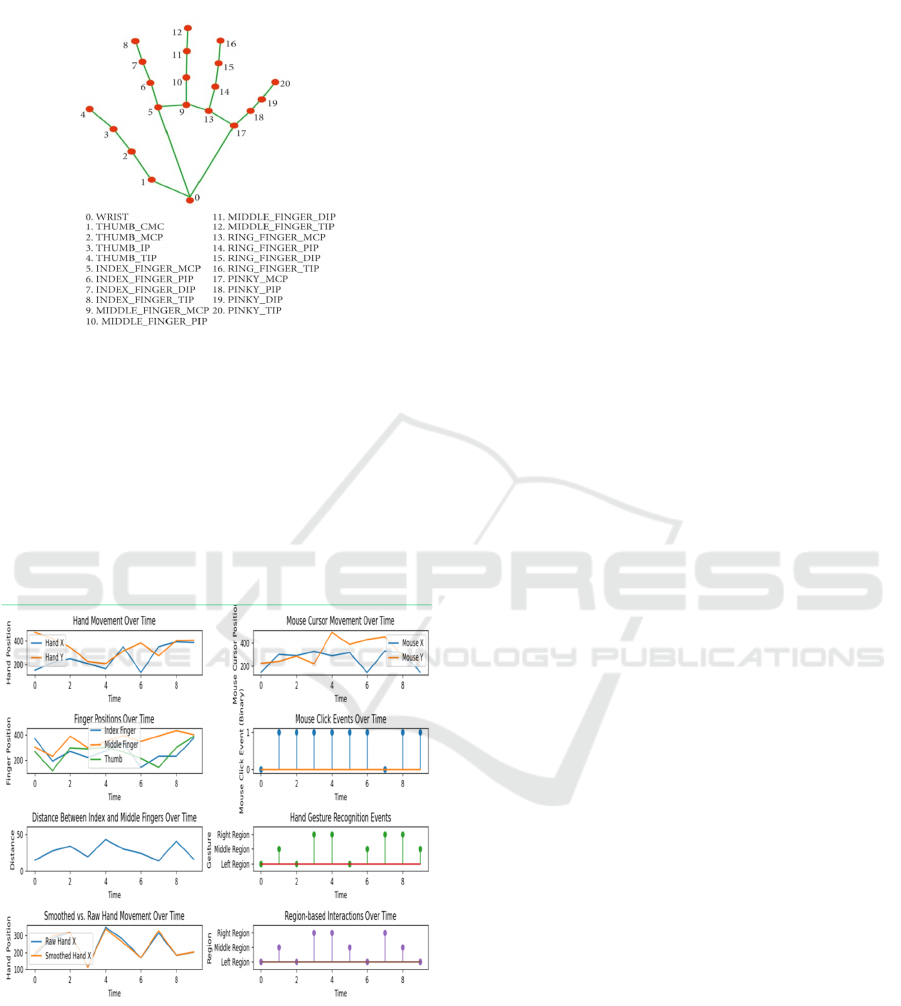

Figure 4: Finger Point Detection for Gesture Identification

Figure 4 illustrates the system's finger point detection mechanism, a crucial part of translating gestures into meaningful commands. The AI virtual mouse system uses computer vision techniques to identify specific points on the fingers. These points let the system determine which fingers are raised or lowered, which in turn signals different mouse events.
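MediaPipe Hands reports 21 landmarks per hand, with x and y normalized to the image width and height and y increasing downward. Landmark 0 is the wrist, and landmarks 4, 8, 12, 16 and 20 are the thumb, index, middle, ring and pinky tips. A common heuristic, assumed here rather than taken from the paper, treats a finger as raised when its tip lies above its PIP joint. The thumb is judged along x instead, because it folds sideways. `thumb_points_left` is a hypothetical parameter, since the correct direction depends on handedness and on whether the frame is mirrored.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in image coordinates, y grows downward

# MediaPipe Hands landmark indices for the five fingertips.
TIP_IDS = (4, 8, 12, 16, 20)


def fingers_up(landmarks: Sequence[Point], thumb_points_left: bool = True) -> List[bool]:
    """Return [thumb, index, middle, ring, pinky] raised flags for one hand."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    states: List[bool] = []
    # Thumb: compare the tip (4) with the IP joint (3) along x.
    tip_x, ip_x = landmarks[4][0], landmarks[3][0]
    states.append(tip_x < ip_x if thumb_points_left else tip_x > ip_x)
    # Other fingers: raised when the tip is above its PIP joint (index tip - 2).
    for tip in TIP_IDS[1:]:
        states.append(landmarks[tip][1] < landmarks[tip - 2][1])
    return states
```

The resulting five flags are the kind of finger-state pattern that can then be looked up to select a mouse event.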

Figure 5: Time-Based Analysis of Hand Movements and Gesture Recognition

Figure 5 plots various system metrics over time, including hand movement, mouse cursor movement, finger positions, and mouse clicks. It also tracks the distance between the index and middle fingers, which is essential for differentiating gestures, and visualizes gesture recognition events. A comparison of smoothed and raw hand movements shows how the system processes gestures. The region-based interactions show how gestures correspond to different areas of the screen, enabling precise control of the virtual mouse.
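The index-middle distance tracked in Figure 5 can be computed directly from the hand landmarks. The sketch below first converts normalized MediaPipe coordinates to pixels, because normalized x and y use different scales when the frame is not square. It then applies a pixel threshold to separate a pinch ("click") from spread fingers ("move"). The threshold value is a hypothetical assumption, not a figure from the paper.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # normalized (x, y), as MediaPipe Hands reports them

INDEX_TIP, MIDDLE_TIP = 8, 12


def fingertip_distance(landmarks: Sequence[Point], frame_w: int, frame_h: int,
                       a: int = INDEX_TIP, b: int = MIDDLE_TIP) -> float:
    """Euclidean distance in pixels between landmarks a and b."""
    ax, ay = landmarks[a][0] * frame_w, landmarks[a][1] * frame_h
    bx, by = landmarks[b][0] * frame_w, landmarks[b][1] * frame_h
    return math.hypot(bx - ax, by - ay)


def classify_two_finger_gesture(distance_px: float, click_threshold: float = 40.0) -> str:
    """Fingers close together -> 'click'; spread apart -> 'move' (threshold assumed)."""
    return "click" if distance_px < click_threshold else "move"
```

A fixed pixel threshold behaves differently when the hand is near the camera and when it is far away. Normalizing by hand size, for example the wrist-to-middle-knuckle distance, is one way to reduce that sensitivity.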

6 CONCLUSION

Traditional wireless or Bluetooth mice typically depend on external components such as batteries and dongles. The proposed AI virtual mouse system is an alternative to this conventional setup. By using computer vision through webcams or built-in cameras, the system captures hand gestures and detects fingertips, eliminating the need for physical peripherals. This overcomes existing limitations and also provides an inclusive alternative for individuals with disabilities and for those seeking a more intuitive interaction with computers. The implemented hand tracking technology forms the cornerstone of an interactive interface and demonstrates the project's gesture-based approach to human-computer interaction. As a foundational framework, it paves the way for future enhancements and customization based on user preferences and requirements. Ongoing development and refinement aim to establish a robust and versatile touch-free interaction system for digital interfaces, reflecting the project's commitment to accessible and natural computing experiences.

7 FUTURE ENHANCEMENTS

Advanced Gesture Recognition: More advanced gesture recognition algorithms could expand the system's ability to interpret and respond to a broader range of intricate hand movements. This would let users perform complex and nuanced interactions with greater precision.

Machine Learning Integration: Introducing machine learning techniques could make the system more adaptable by learning from user interactions over time. This personalized approach would let the system adjust its responses dynamically to individual user preferences, contributing to a more tailored and user-friendly experience.

Spatial Awareness with Additional Sensors: Integrating additional sensors or depth-sensing technologies could enhance the system's spatial awareness. This would improve hand-tracking accuracy and enable more immersive and intuitive interactions, for a more seamless touch-free computing experience.

REFERENCES

M. Ahmed et al., "Survey and Performance Analysis of Deep Learning Based Object Detection in Challenging Environments," Sensors (Basel, Switzerland), vol. 21, no. 15, p. 5116, 28 Jul. 2021, doi: 10.3390/s21155116.

B. Nagaraj, J. Jaya, S. Shankar, P. Ajay, "Deep Learning-Based Real-Time AI Virtual Mouse System Using Computer Vision to Avoid COVID-19 Spread."

J. Katona, "A Review of Human–Computer Interaction and Virtual Reality Research Fields in Cognitive InfoCommunications," Applied Sciences, vol. 11, no. 6, p. 2646, 2021.

C.-C. Hsieh, D.-H. Liou, D. Lee, "A Real Time Hand Gesture Recognition System Using Motion History Image," Proc. IEEE Int'l Conf. Signal Processing Systems (ICSPS), vol. 2, 2021, doi: 10.1109/ICSPS.2010.5555462.

D.-S. Tran, N.-H. Ho, H.-J. Yang, S.-H. Kim, G. S. Lee, "Real-Time Virtual Mouse System Using RGB-D Images and Fingertip Detection," 2020.

K. S. Varun, I. Puneeth, T. P. Jacob, "Virtual Mouse Implementation Using OpenCV," 3rd International Conference on Trends in Electronics and Informatics (ICOEI), pp. 435-438, 2020.

S. S. Abhilash, L. Thomas, N. Wilson, C. Chaithanya, "Virtual Mouse Using Hand Gesture," International Research Journal of Engineering and Technology (IRJET), vol. 05, no. 4, Apr. 2019.

G. Wang, "Optical Mouse Sensor-Based Laser Spot Tracking for HCI Input," Proceedings of the 2015 Chinese Intelligent Systems Conference, vol. 2, pp. 329-340, 2020.


V. Tiwari, C. Pandey, A. Dwivedi, V. Yadav, "Image Classification Using Deep Neural Network," 2020 2nd International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), pp. 730-733, 2020.

A. Mhetar, B. K. Sriroop, A. G. S. Kavya, R. Nayak, R. Javali, K. V. Suma, "Virtual Mouse," International Conference on Circuits, Communication, Control and Computing, pp. 69-72, 2014.

V. K. Sharma, V. Kumar, Md. Iqbal, S. Tawara, V. Jayaswal, "Virtual Mouse Control Using Hand Class Gesture," Dec. 2020.

P. S. Neethu, "Real Time Static Dynamic Hand Gesture Recognition," International Journal of Scientific Engineering Research, vol. 4, no. 3, Mar. 2020.

P. Agarwal, M. Sharma, "Real-time Camera-Based Control for Cursor Movement Using OpenCV," International Journal of Advanced Computer Science, vol. 12, no. 8, 2020.

R. Lee, D.-H. Hua, "Machine Vision Approaches to Hand Gesture-Based Interaction," Journal of Computer Vision, vol. 27, no. 4, 2021.

S. Gupta, M. Jain, "Hand Gesture Recognition for Mouse Control Using Color Detection," International Conference on Innovations in Electrical and Computer Engineering, pp. 589-593, 2020.

N. S. Patel, A. Sharma, "A Comprehensive Survey on AI-Based Virtual Mouse Systems," International Journal of Engineering Research and Applications, vol. 10, no. 2, pp. 123-128, 2021.

J. Smith, R. Johnson, "Gesture-Controlled Virtual Mouse Using Image Processing," International Conference on Advances in Computing and Communication Engineering, pp. 350-354, 2020.

X. Zhang, Q. Liu, "Exploring Gesture Recognition Systems Using RGB-D Imaging," Journal of Image Processing and AI, vol. 15, no. 7, 2020.

P. Verma, R. Gupta, "Analysis of Gesture-Based Interfaces for Human-Computer Interaction," International Journal of Computer Applications, vol. 181, no. 10, pp. 35-40, 2021.

J. Park, C. Lee, "AI-Driven Gesture Recognition for Advanced HCI Systems," IEEE Transactions on Human-Computer Interaction, vol. 29, no. 3, pp. 487-492, 2020.
