Toward Semantic Explainable AI in Livestock: MoonCAB Enrichment for O-XAI to Sheep BCS Prediction

Nourelhouda Hammouda¹ᵃ, Mariem Mahfoudh²ᵇ and Khouloud Boukadi³ᶜ

¹ University of Sfax, MIRACL Laboratory, National School of Electronics and Telecommunications, Sfax, Tunisia

² University of Sfax, MIRACL Laboratory, Institute of Computer Science and Multimedia, Sfax, Tunisia

³ University of Sfax, MIRACL Laboratory, Faculty of Economics and Management, Sfax, Tunisia

Keywords: Ontology, O-XAI, MoonCAB, SWRL, Body Condition Score, Computer Vision.

Abstract:

Body Condition Score (BCS) is a key metric for monitoring the health, productivity, and welfare of livestock, and it plays a crucial role in supporting farmers and experts in effective herd management. Despite advancements in BCS prediction for cows and goats, no computer vision-based methods exist for sheep due to their complex body features. This absence, coupled with the lack of interpretability in existing AI models, hinders real-world adoption in sheep farming. To address this, we take the first step toward an interpretable AI framework for sheep BCS prediction that leverages ontology-based knowledge representation. In this paper, we enrich the ontology MoonCAB, which models livestock behavior in pasture systems, with BCS-related knowledge to prepare it for future integration into explainable AI (XAI) systems. Our methodology involves enhancing the “Herd” module of MoonCAB with domain-specific concepts and 200 SWRL rules to support logical inference. The enriched ontology is evaluated using Pellet, SPARQL, and the MoOnEV tool. As a result, MoonCAB now enables reasoning-based support for BCS-related decision-making in precision sheep farming, laying the groundwork for future developments in ontology-based explainable AI (O-XAI).

1 INTRODUCTION

The Body Condition Score (BCS) is a critical indicator used to monitor animal health, productivity, and welfare. It enables both domain experts and farmers to make informed, cost-effective, and ethically sound decisions in animal management (Hamza and Bourabah, 2024).

While several studies have developed AI-based tools to predict BCS in animals such as cows and goats using computer vision techniques, including deep learning (Rodríguez Alvarez et al., 2019; Çevik, 2020) and machine learning algorithms (Vázquez-Martínez et al., 2023), no such work exists for sheep. This research gap is primarily due to the anatomical and visual complexities of sheep, including thick wool, variable fleece color, and less pronounced body contours, which make visual assessment and feature extraction far more challenging. As a result, BCS prediction in sheep remains a largely unexplored task, particularly in large-scale settings where regular manual evaluations are impractical.

ᵃ https://orcid.org/0009-0005-5185-0325

ᵇ https://orcid.org/0000-0001-7860-8604

ᶜ https://orcid.org/0000-0002-6744-711X

This lack of automation is critical, given that a sheep’s BCS can vary rapidly with changes in nutritional management (Corner-Thomas et al., 2020). Without frequent and reliable assessments, breeders risk compromising animal health, reproductive performance, and overall productivity. There is therefore a pressing need for intelligent, automated, and interpretable solutions that can assess BCS in sheep at both the individual and herd levels.

Moreover, beyond prediction accuracy, explainability is crucial in agricultural settings. Farmers and domain experts require not only accurate predictions but also transparent explanations to support their decisions. In this regard, unlike XAI techniques such as Gradient-weighted Class Activation Mapping (Grad-CAM) (Selvaraju et al., 2017) and SHapley Additive exPlanations (SHAP) (Lundberg and Lee, 2017), which explain predictions through saliency maps or feature attributions, ontology-based semantic explainable AI (O-XAI) offers a promising direction by integrating symbolic knowledge through ontologies and reasoning rules, thereby making AI decisions more interpretable and actionable.


Hammouda, N., Mahfoudh, M. and Boukadi, K.

Toward Semantic Explainable AI in Livestock: MoonCAB Enrichment for O-XAI to Sheep BCS Prediction.

DOI: 10.5220/0013717500004000

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2025) - Volume 2: KEOD and KMIS, pages 52-63

ISBN: 978-989-758-769-6; ISSN: 2184-3228

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

In this paper, we take the first step toward building an interpretable AI framework for sheep BCS prediction. Our approach is based on the enrichment of the Modular ontology for Computational analysis of Animal Behavior (MoonCAB) (Hammouda et al., 2023), which models the behavior of livestock animals (sheep and goats) in pasture environments. MoonCAB currently comprises 154 classes, 156 properties, 234 individuals, and 14,653 axioms, structured into three main modules: M_Pasture, M_Herd, and M_Behavior.

Our contribution focuses on integrating the relevant BCS concepts and their implications into the “Herd” module, along with formal axioms and reasoning capabilities based on SWRL (Semantic Web Rule Language) (Horrocks et al., 2004). Although MoonCAB already provides structured representations, it lacks inferential capacity. By embedding semantic rules, we enhance its ability to derive implicit knowledge and support informed decision-making in livestock management. In addition to this structural enrichment, we define and integrate 200 SWRL rules that enable inferential reasoning about BCS conditions, behavioral patterns, and their impact on management decisions, so that the ontology can provide actionable insights for precision livestock farming.

To ensure semantic consistency, structural soundness, and practical utility, we conducted a rigorous evaluation of the enriched MoonCAB ontology. We relied on several established tools, including OOPS! (Poveda-Villalón et al., 2014), Delta-T (Kondylakis et al., 2021), and XDTesting (Ciroku and Presutti, 2022), each addressing complementary aspects of ontology quality. For in-depth modular validation, we employed the MoOnEV tool, selected for its compatibility with the OMEVA framework (Gobin-Rahimbux, 2022), which encompasses 35 evaluation metrics across eight quality dimensions. Its support for modular analysis and detailed diagnostics makes it particularly suited to assessing the robustness of complex ontologies such as MoonCAB.

This paper is organized as follows: Section 2 reviews related work on XAI and animal ontologies. Section 3 presents our MoonCAB-XAI framework, detailing the enrichment of the ontology with BCS-related concepts and SWRL rules. Section 4 describes the evaluation using Pellet, SPARQL, and the MoOnEV tool. Section 5 discusses the results, and Section 6 concludes with future perspectives.

2 RELATED WORK

2.1 Explainable Artificial Intelligence (XAI)

Explainable Artificial Intelligence (XAI) seeks to enhance transparency and trust in AI systems by revealing how decisions are made, particularly in complex or black-box models (Altukhi et al., 2025).

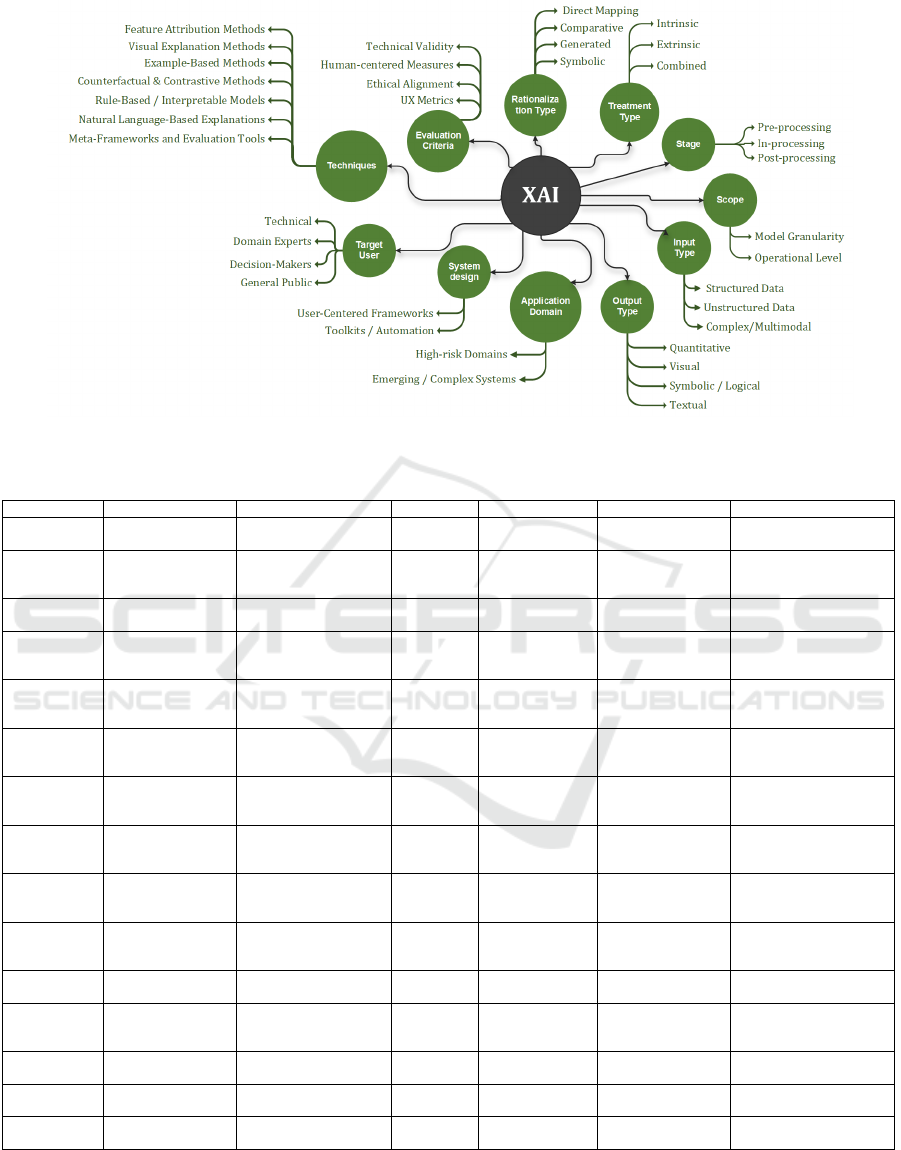

As illustrated in Figure 1, XAI frameworks vary across multiple dimensions, including the explanation stage (ante-hoc, in-process, post-hoc), scope (local, global, system-wide), and target audience (developers, experts, or general users) (Brdnik and Šumak, 2024). XAI methods also differ in input type (structured, unstructured, multimodal) and produce outputs in various forms: visual, quantitative, symbolic, or linguistic (Kong et al., 2024). They incorporate different rationalization strategies (contrastive, counterfactual, extractive) and treatment models (inherently interpretable or post-hoc explanations) (Brdnik and Šumak, 2024). Evaluation metrics such as fidelity, clarity, fairness, and usefulness help assess the quality and reliability of explanations (Retzlaff et al., 2024).

To address these diverse dimensions, XAI leverages a broad range of techniques, including SHAP (Lundberg and Lee, 2017), LIME (Ribeiro et al., 2016), Grad-CAM (Selvaraju et al., 2017), Anchors (Ribeiro et al., 2018), and meta-frameworks such as AutoXAI (Cugny et al., 2022). These approaches are applied in critical domains such as healthcare, cybersecurity, finance, smart cities, and agriculture. Effective XAI system design is supported by structured tools such as ODCM and REXAI, alongside user-centred and low-code development interfaces (Aslam et al., 2023). Increasingly, semantic approaches to XAI are being explored that incorporate ontologies and structured knowledge for deeper, more human-aligned explanations. Together, these efforts contribute to more ethical, interpretable, and reliable AI systems.

2.2 Semantic eXplainable Artificial Intelligence (S-XAI)

The integration of semantics into Explainable Artificial Intelligence (XAI) has become essential to enhance the clarity and relevance of machine-generated explanations. Traditional XAI methods often rely on attribution techniques or statistical saliency, which, though informative, lack alignment with human cognition and domain semantics. In contrast, semantic XAI leverages structured, interpretable representations such as ontologies, logical rules, and conceptual traits to bridge the gap between model reasoning and human understanding. These elements can be applied at various stages of the AI pipeline.

Figure 1: Overview of the key conceptual components of Explainable AI (XAI).

Table 1: Comparative overview of recent works employing semantic-based approaches in XAI.

| Paper | Scope | Semantic Technique | Stage | Input | Output | Evaluation Criteria |
|---|---|---|---|---|---|---|
| (Dong et al., 2017) | Image classification (CNNs) | Semantic concepts + interpretable neurons | Ante-hoc | Visual images | Concept attention maps | Clarity, alignment with concepts |
| (Garcez et al., 2019) | Cross-domain reasoning | Neural-symbolic integration | In-process | Structured and unstructured data | Symbolic justifications + logical inference | Logical completeness, scalability |
| (Marcos et al., 2019) | Image recognition with explanation | Semantic Interpretable Activation Maps | Ante-hoc | CNN activations + image inputs | Tripartite semantic maps | Map interpretability, semantic decomposition |
| (Donadello and Dragoni, 2020) | Image classification | Ontological predicates, fuzzy logic | Ante-hoc + Post-hoc | Images, concept labels | Logical rules + fuzzy predicates | Faithfulness, interpretability |
| (Ngo et al., 2022) | Smart agriculture knowledge formalization | AgriComO + OAK4XAI | Post-hoc | Data mining results, concept definitions | Structured explanations (no interface) | No UI for end users, lacks feature attribution |
| (Dragoni and Donadello, 2022) | Generic AI decision justification | Explanation Graph | Post-hoc | Structured knowledge, logic rules | Graph-structured semantic explanation | No interaction interface, only conceptual structure |
| (Chhetri et al., 2023) | Cassava disease detection | Ontology + SWRL + DL | Post-hoc | Images + sensor data | Weighted decision combining CNN and SWRL rules | CNN opacity, rule-based model lacks scalability |
| (Sajitha et al., 2023) | Banana quality classification | Knowledge Graph + KEGCNN | Post-hoc | Banana images | Knowledge-embedded GCN output | High complexity, opaque embedding layer |
| (Corbucci et al., 2023) | Sequential diagnosis prediction | Ontology mapping (ICD-9 to SNOMED-CT) | Post-hoc | ICD codes, clinical notes | Ontology-linked text highlights | F1-score (text match), human validation |
| (Sun et al., 2024) | Image classification and OOD detection | Self-supervised concept extraction (PCA, SVD) | Post-hoc | Images | Concept prototypes and semantic labels | User trust, semantic clarity, interpretability |
| (Kosov et al., 2024b) | Credit risk assessment | Ontology + SWRL for tabular data | Post-hoc | Tabular records (credit attributes) | Symbolic explanation via rules | Explanation coverage, clarity |
| (Kosov et al., 2024a) | Images (Fashion-MNIST), generalizable | Generalized explanatory properties | In-process | Visual features | Ontology-based JSON explanation | Faithfulness, reusability |
| (Sharma and Jain, 2024) | Medical diagnosis (Dengue) | Ontology + SWRL + ChatGPT | Post-hoc | Clinical data | Natural language explanation | Accuracy, clarity, precision vs. ML |
| (Doh et al., 2025) | Face verification | Face regions + LIME/SHAP + LLMs | Post-hoc | Face image pairs | Similarity map + textual rationale | User preference, faithfulness, LLM clarity |
| (Chandra et al., 2025) | Disease diagnosis & treatment | BFO + PCD Ontology + SWRL + GPT | Post-hoc | Clinical data, test results (OCR) | SPARQL results + XAI suggestions | Precision, recall, batch event timing |

KEOD 2025 - 17th International Conference on Knowledge Engineering and Ontology Development

Several works illustrate this semantic shift, as summarized in Table 1. Foundational contributions include (Dong et al., 2017), (Garcez et al., 2019), and (Marcos et al., 2019), which embed semantic meaning directly into neural models. (Dong et al., 2017) aligns CNN filters with semantic concepts via neuron regularization, enabling concept-level attention maps. (Garcez et al., 2019) promotes neural-symbolic integration, combining logic-based reasoning with deep learning. (Marcos et al., 2019) introduces the What-Where-How framework, which decomposes CNN decisions into interpretable spatial and conceptual components and is especially useful for image classification.

Building on these foundations, several notable contributions have proposed diverse semantic XAI systems. (Donadello and Dragoni, 2020) introduced SeXAI, which uses fuzzy logic and ontological constraints to guide DNNs and produces rule-based explanations. (Sun et al., 2024) proposed AS-XAI, a self-supervised method that extracts concept prototypes via PCA and SVD, enabling global, annotation-free interpretation. (Doh et al., 2025) developed a semantic multimodal XAI system for face verification that combines structured face-region semantics, similarity maps, and LLM-generated explanations tailored to users.

In agriculture, where interpretability is critical for non-experts (Grati et al., 2025), (Ngo et al., 2022) used AgriComO in OAK4XAI to organize and explain model outcomes through domain knowledge. (Chhetri et al., 2023) combined CNNs with SWRL and ontology reasoning for cassava disease detection, while (Sajitha et al., 2023) proposed KEGCNN for banana grading, which embeds knowledge-graph information into a graph convolutional network (GCN).

In healthcare, (Corbucci et al., 2023) linked clinical notes and model predictions to SNOMED-CT via ontology-based highlights. (Sharma and Jain, 2024) introduced OntoXAI, which uses SWRL and ChatGPT to provide human-readable explanations for dengue diagnosis. (Chandra et al., 2025) extended this approach with GPT-driven logic and SWRL rules over the BFO and PCD ontologies for diagnostic support.

Other representative efforts have extended semantic XAI to further domains: (Kosov et al., 2024b) proposed ontology-driven rule explanations for credit assessment, while (Kosov et al., 2024a) extended this work to cross-modal reasoning with JSON-structured symbolic outputs.

These contributions mark the evolution of semantic XAI from embedding meaning in models to developing modular frameworks that combine ontological reasoning with visual and textual explanations. Ontologies are now key to structuring knowledge, ensuring traceability, and aligning AI with domain expectations. They support the integration of SWRL rules, rule-based reasoning, and cross-domain adaptation. Challenges nevertheless remain in ontology engineering, scalability, and integration with deep learning. Despite these challenges, ontologies anchor semantic XAI as a bridge between opaque computation and intelligible, trustworthy AI.

2.3 Animal Ontologies

Ontology languages such as OWL allow the formal representation of domain knowledge, but they are limited in expressing complex logic. The Semantic Web Rule Language (SWRL) extends OWL by allowing logical rules to be integrated directly into the ontology structure (Horrocks et al., 2004). SWRL rules support reasoning by establishing if-then (antecedent-consequent) relationships, enabling the inference of new knowledge from existing facts (Amith et al., 2021). As a result, SWRL enhances ontology expressiveness, supports dynamic decision-making, and improves the semantic richness of knowledge-based systems.
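To make this inference mechanism concrete, here is a minimal sketch, in plain Python, of the naive forward chaining that underlies rule-based inference: a rule fires for every variable binding that satisfies all of its body atoms and its built-in test, and its head is added as a new fact until nothing changes. It is an illustration under simplifying assumptions, not a SWRL engine: facts are (subject, predicate, object) triples, the built-in is an ordinary Python function standing in for `swrlb:lessThan`, class atoms and DL-safety restrictions are ignored, and the property names `hasBody`, `hasNumericScore`, and `isUnderConditioned` as well as the 2.0 threshold are hypothetical.

```python
from typing import Callable, Dict, List, Set, Tuple

Triple = Tuple[str, str, object]
Binding = Dict[str, object]
# A rule = (body atoms, built-in test over the bindings, head atom).
Rule = Tuple[List[Triple], Callable[[Binding], bool], Triple]


def is_var(term: object) -> bool:
    """Variables are written with a leading '?', as in SWRL."""
    return isinstance(term, str) and term.startswith("?")


def match(atoms: List[Triple], facts: Set[Triple], binding: Binding) -> List[Binding]:
    """Return every extension of `binding` under which all atoms occur in `facts`."""
    if not atoms:
        return [binding]
    pattern, rest = atoms[0], atoms[1:]
    results: List[Binding] = []
    for fact in facts:
        candidate = dict(binding)
        for term, value in zip(pattern, fact):
            if is_var(term):
                if candidate.setdefault(term, value) != value:
                    break  # variable already bound to a different value
            elif term != value:
                break  # constant in the pattern does not match the fact
        else:
            results.extend(match(rest, facts, candidate))
    return results


def forward_chain(facts: Set[Triple], rules: List[Rule]) -> Set[Triple]:
    """Apply the rules repeatedly until no new fact can be inferred (a fixpoint)."""
    inferred = set(facts)
    changed = True
    while changed:
        changed = False
        for body, test, head in rules:
            for binding in match(body, inferred, {}):
                if not test(binding):
                    continue
                new_fact = tuple(binding[t] if is_var(t) else t for t in head)
                if new_fact not in inferred:
                    inferred.add(new_fact)
                    changed = True
    return inferred


# Hypothetical facts and rule: flag an animal whose body score is below 2.0.
facts = {
    ("ewe1", "hasBody", "body1"), ("body1", "hasNumericScore", 1.5),
    ("ewe2", "hasBody", "body2"), ("body2", "hasNumericScore", 3.0),
}
low_score_rule: Rule = (
    [("?x", "hasBody", "?b"), ("?b", "hasNumericScore", "?v")],
    lambda b: b["?v"] < 2.0,  # plays the role of swrlb:lessThan
    ("?x", "isUnderConditioned", True),
)
inferred = forward_chain(facts, [low_score_rule])
```

Because rules of this kind only ever add facts built from existing terms and the constants in rule heads, the loop reaches a fixpoint and terminates over any finite set of facts.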

In this context, several ontologies have been developed to semantically model knowledge related to animals and their environments. However, despite the potential of SWRL to enrich these ontologies, only a few of them incorporate rule-based reasoning mechanisms. MBO (Beck et al., 2009) focuses on mammalian species but does not integrate SWRL rules. NBO (Gkoutos et al., 2012) includes 23 SWRL rules to infer regulatory relationships in animal-related studies. AHSO (Dóreas et al., 2017) addresses animal health surveillance and was developed using the eXtreme Design (XD) methodology. ATOL (Golik et al., 2012) provides extensive terminology for livestock traits without implementing formal rules. GEOBIA (Gu and et al, 2017) deals with environmental concepts extracted from remote sensing and employs SWRL to enhance semantic interpretation. MoonCAB (Hammouda et al., 2023) follows the MOMo methodology to describe animals and pasture systems semantically.

Several ontology engineering methodologies focus on reusing existing ontologies and adopting modular design principles to enhance clarity and scalability. Approaches such as Enterprise (Uschold and King, 1995), XD (Blomqvist et al., 2016), and MOMo (Shimizu et al., 2021) encourage the creation of reusable components. MOMo specifically supports systematic reuse and modularity through diagram-first modeling, pattern-based instantiation, and collaborative tools such as CoModIDE (Shimizu et al., 2021).


3 MOONCAB ENRICHMENT FOR SHEEP BCS PREDICTION EXPLANATION

MoonCAB is a modular ontology designed to semantically represent knowledge about sheep and goats in pasture (Hammouda et al., 2023). It was developed in collaboration with INRAT to support smart farming through the semantic annotation of observational data. The ontology adheres to the MOMo methodology (Shimizu et al., 2021), ensuring modularity, reusability, and expert validation. Existing resources such as ATOL (Golik et al., 2012) and GEOBIA (Gu and et al, 2017) were reused to enhance semantic coverage. MoonCAB integrates standardized traits and environmental concepts relevant to livestock systems.

It includes 143 classes and 106 properties distributed across three modules: Herd, Pasture, and Production. These rules support dynamic inference and automated decision-making. Logical consistency was checked using FaCT++, and SPARQL queries validated the defined competency questions. The ontology was further assessed with the MoOnEV tool (Hammouda et al., 2024) across 36 evaluation criteria, and the results confirmed its quality, modularity, and reasoning capabilities.

In this work, we apply the MOMo methodology to guide the enrichment of the MoonCAB ontology. This choice is motivated by its support for reusability and modularity, which suits an extension that focuses specifically on the Herd module.

3.1 Enrichment of Existing MoonCAB Modules

We contribute to the enrichment of the “Herd” module within the MoonCAB ontology by integrating structured knowledge related to the Body Condition Score (BCS) of sheep, following the MOMo (Modular Ontology Modeling) methodology. Evaluating body condition is a key method for determining the overall health and nutritional status of sheep. This evaluation is based on the BCS scale, which ranges from 1 (very thin) to 5 (obese).

To support ontology enrichment and reasoning in

BCS, it is essential to formalize the process by which

sheep are scored. This includes identifying the rele-

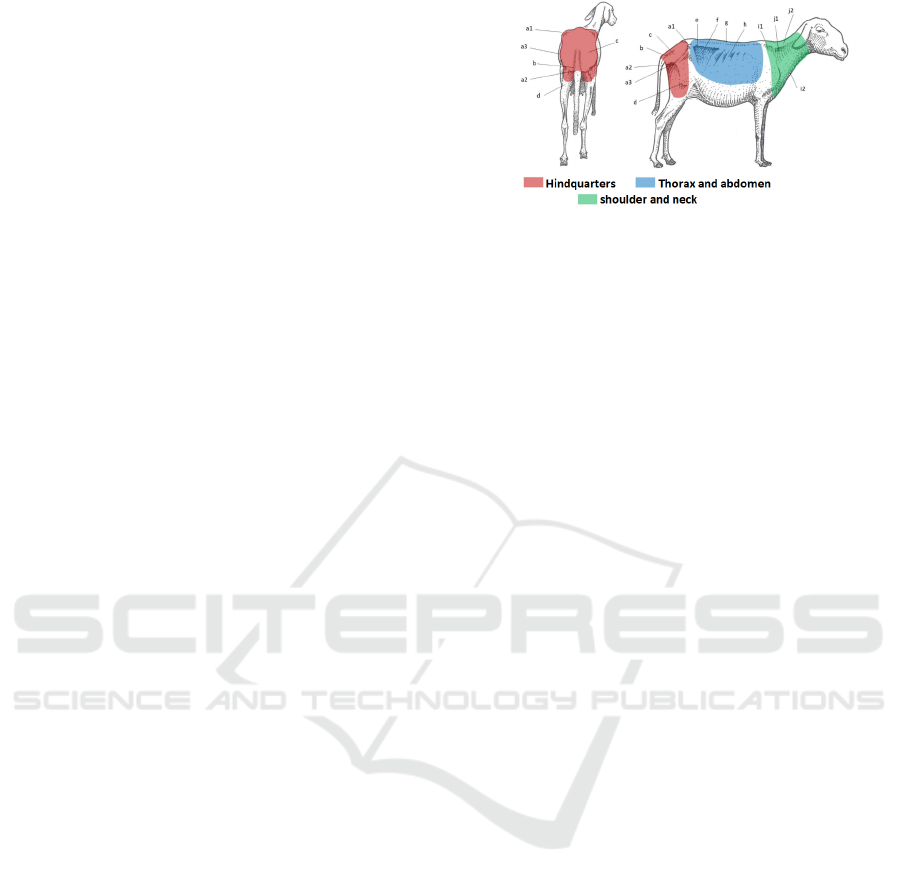

vant anatomical parts. As illustrated in Figure 2, BCS

in sheep involves evaluating three major anatomical

regions.

• Hindquarters — shown in red: includes the iliac

spines (a1), ischial spines (a2), coxo-femoral joint (a3),

Figure 2: Main anatomical zones used for BCS in sheep

(Vall, 2020).

the base of the tail and caudal vertebrae (b), pelvic cov-

erage (c), and the thighs (d).

• Thorax and Abdomen — shown in blue: covers the

transverse processes of the lumbar vertebrae (e), flank

depression (f), dorsal vertebrae spinous processes (g),

and the ribs (h).

• Shoulder and Neck — shown in green: includes the

scapula (i1), humerus joint (i2), neck hollow (j1), and

the occiput (j2).
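In a future vision pipeline, the zone codes above could serve as keys for per-zone observations. The minimal Python sketch below is our own illustration, not part of MoonCAB: it records the region each zone belongs to and groups observations by region. The observation format (a zone code mapped to a free-text description) and the names `Region`, `ZONES`, and `group_by_region` are assumptions.

```python
from enum import Enum
from typing import Dict, List, Tuple


class Region(Enum):
    """The three anatomical regions examined when scoring a sheep (Figure 2)."""
    HINDQUARTERS = "Hindquarters"
    THORAX_AND_ABDOMEN = "Thorax and Abdomen"
    SHOULDER_AND_NECK = "Shoulder and Neck"


# Zone code -> (region, anatomical zone), transcribed from the list above.
ZONES: Dict[str, Tuple[Region, str]] = {
    "a1": (Region.HINDQUARTERS, "iliac spines"),
    "a2": (Region.HINDQUARTERS, "ischial spines"),
    "a3": (Region.HINDQUARTERS, "coxo-femoral joint"),
    "b": (Region.HINDQUARTERS, "base of the tail and caudal vertebrae"),
    "c": (Region.HINDQUARTERS, "pelvic coverage"),
    "d": (Region.HINDQUARTERS, "thighs"),
    "e": (Region.THORAX_AND_ABDOMEN, "transverse processes of the lumbar vertebrae"),
    "f": (Region.THORAX_AND_ABDOMEN, "flank depression"),
    "g": (Region.THORAX_AND_ABDOMEN, "dorsal vertebrae spinous processes"),
    "h": (Region.THORAX_AND_ABDOMEN, "ribs"),
    "i1": (Region.SHOULDER_AND_NECK, "scapula"),
    "i2": (Region.SHOULDER_AND_NECK, "humerus joint"),
    "j1": (Region.SHOULDER_AND_NECK, "neck hollow"),
    "j2": (Region.SHOULDER_AND_NECK, "occiput"),
}


def zones_of(region: Region) -> List[str]:
    """Return the zone codes belonging to one region, sorted by code."""
    return sorted(code for code, (r, _) in ZONES.items() if r is region)


def group_by_region(observations: Dict[str, str]) -> Dict[Region, Dict[str, str]]:
    """Group per-zone observations (zone code -> description) by anatomical region.

    An unknown zone code raises KeyError, so typos in the input are caught early.
    """
    grouped: Dict[Region, Dict[str, str]] = {region: {} for region in Region}
    for code, description in observations.items():
        region, _ = ZONES[code]
        grouped[region][code] = description
    return grouped
```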

3.1.1 Use Cases and Data Sources

To guide the development of our ontology according to the MOMo methodology, we describe below five representative use cases. They are based on documents selected by the domain expert at the INRAT laboratory, such as (Brugère-Picoux, 2004; Vall, 2020), which address the body condition scoring of sheep.

• BCS-Based Nutritional Diagnosis. Develop an ontology module to diagnose undernutrition in sheep by representing BCS scores alongside physiological states (e.g., gestation, lactation). The ontology should support semantic rules to flag under-conditioned individuals and generate intervention alerts. It must be extensible to integrate nutritional thresholds, herd averages, and seasonal feeding plans.

• Reproduction Management Based on BCS. Create an ontology module to guide mating decisions based on pre-breeding BCS scores. It will encode rules such as “BCS ≤ 3 is required for optimal conception” and generate warnings if these criteria are not met. The module should be extensible to support breed-specific thresholds, fertility indicators, and reproductive history.

• Climate Impact on Body Condition. Model the relationship between climate events (e.g., drought, heatwaves) and BCS trends using region-linked data. The ontology will represent BCS measurements over time, tied to environmental conditions, enabling risk alerts. It should be extensible to include pasture quality data, seasonal climate profiles, and grazing system types.

• Maternal BCS and Lamb Survival Risk. Design a module to assess lamb mortality risk based on maternal BCS at lambing. BCS values will be linked to birth events and lamb outcomes to support causal inference. The model should allow extension with lamb weight, birth season, and maternal feeding data.

• Anatomical Justification of BCS Scoring. Build an ontology module to represent the anatomical zones (e.g., spine, ribs, pelvis) used in BCS evaluation. Scoring decisions will be justified through linked observations and evaluator metadata. The module can be extended to include visual markers and palpation techniques.

3.1.2 Competency Questions

Based on the five use cases above, Table 2 lists the competency questions aligned with each use case, together with the key concepts involved. Competency questions are natural language queries that define the scope and objectives of the ontology by illustrating the kinds of questions it should be able to answer once developed.

Table 2: Competency questions and key concepts for the Body Condition Score (BCS) ontology.

| Competency Questions | Key Concepts |
|---|---|
| Which sheep currently show signs of undernutrition based on BCS? | BCS, Undernutrition Threshold, Physiological Status |
| Which ewes should receive additional feeding due to low BCS during lactation? | Lactation Status, Feeding Plan, BCS Trend |
| Which sheep have shown a significant drop in BCS during a recent climate event? | Climate Event, BCS Over Time, Region, Date |
| What is the lamb mortality risk based on the mother’s BCS at parturition? | Maternal BCS, Lamb Survival, Parturition, Risk Factor |
| Which anatomical indicators justify the BCS score assigned to a specific sheep? | Hindquarters, Thorax and Abdomen, Shoulder and Neck, Criteria |

3.1.3 Identification of Existing Ontology Design Patterns (ODPs)

We rely on the Modular Ontology Design Library (MODL) (Shimizu et al., 2019), which provides well-curated and consistently documented ODPs.

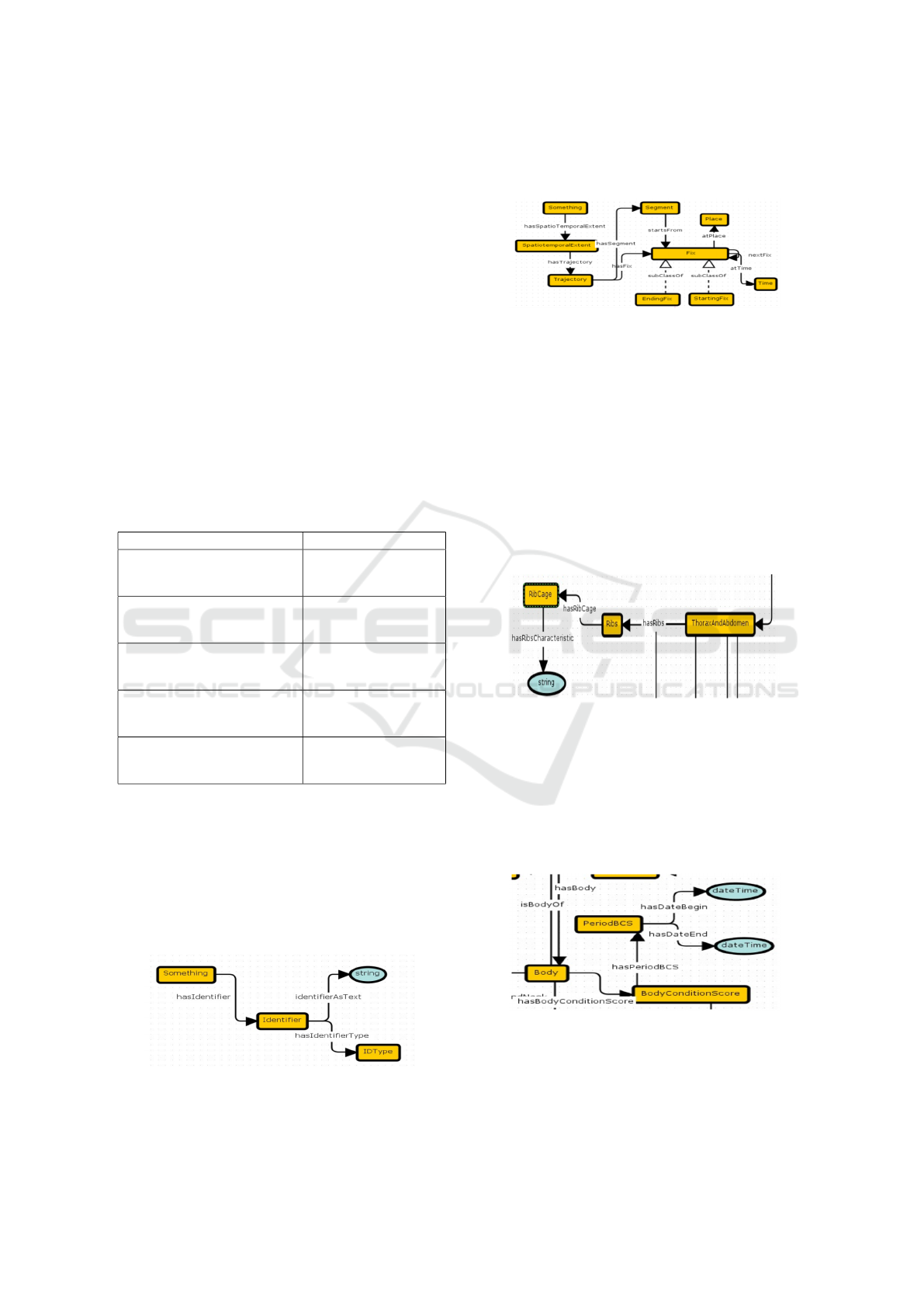

Our ontology integrates the “Identifier” pattern (see Figure 3). Additionally, the “Spatiotemporal Extent” pattern (see Figure 4) is adapted to enhance the “Herd” module.

Figure 3: Schematic diagram for the MODL identifier model.

Figure 4: Schematic diagram for the MODL spatiotemporal extent model.

3.1.4 Schema Diagrams for the Enriched “Herd” Module

Figure 5 illustrates the ontological schema for the “Identifier” model, featuring three main entities: Thorax and Abdomen, Ribs, and Rib Cage. These entities are linked hierarchically: ThoraxAndAbdomen connects to Ribs via the hasRibs property, and Ribs connects to RibCage via hasRibCage. The RibCage entity has a hasRibsCharacteristic property of type string.

Figure 5: Schematic diagram for the identifier model.
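The chain in Figure 5 can be mirrored with plain Python dataclasses, for example to prototype how an image-analysis component might populate it. This is a hypothetical sketch: the snake_case field names are our own mapping of `hasRibs`, `hasRibCage`, and `hasRibsCharacteristic`, and the characteristic value `"HardToFeel"` in the example comment is illustrative, not a MoonCAB value.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RibCage:
    ribs_characteristic: str            # hasRibsCharacteristic (xsd:string)


@dataclass
class Ribs:
    rib_cage: Optional[RibCage] = None  # hasRibCage


@dataclass
class ThoraxAndAbdomen:
    ribs: Optional[Ribs] = None         # hasRibs


def ribs_characteristic(region: ThoraxAndAbdomen) -> Optional[str]:
    """Follow hasRibs -> hasRibCage -> hasRibsCharacteristic.

    Returns None when a link is absent, in keeping with the open-world
    reading of OWL: a missing value means "unknown", not "false".
    """
    if region.ribs is None or region.ribs.rib_cage is None:
        return None
    return region.ribs.rib_cage.ribs_characteristic


# Example: ribs_characteristic(ThoraxAndAbdomen(Ribs(RibCage("HardToFeel")))) returns "HardToFeel".
```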

Figure 6 depicts a schema based on the “Spatiotemporal Extent” pattern, modeling the body condition assessment. The main entity, Body, must have a BodyConditionScore, which is associated with PeriodBCS instances that include hasDateBegin and hasDateEnd (both of type dateTime). This model allows body condition to be tracked across different periods.

Figure 6: Schematic diagram for the spatiotemporal model.
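The following Python sketch shows how PeriodBCS lets BCS observations be tracked over time, which is what the competency questions about BCS trends and drops require. It is an illustration only: class and field names are our own snake_case mapping of Figure 6, and the `numeric_score` field is an assumption that mirrors the `hasNumericScore` property used in the SWRL rules of Section 3.2.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class PeriodBCS:
    date_begin: datetime  # hasDateBegin (xsd:dateTime)
    date_end: datetime    # hasDateEnd (xsd:dateTime)

    def __post_init__(self) -> None:
        if self.date_end < self.date_begin:
            raise ValueError("hasDateEnd must not precede hasDateBegin")

    def contains(self, moment: datetime) -> bool:
        return self.date_begin <= moment <= self.date_end


@dataclass
class BodyConditionScore:
    numeric_score: float  # assumed field, cf. hasNumericScore
    period: PeriodBCS


@dataclass
class Body:
    scores: List[BodyConditionScore]

    def __post_init__(self) -> None:
        # Mirrors "Body must have a BodyConditionScore" (at least one).
        if not self.scores:
            raise ValueError("a Body needs at least one BodyConditionScore")


def score_at(body: Body, moment: datetime) -> Optional[float]:
    """Return the score whose period covers `moment` (first match if periods overlap)."""
    for score in body.scores:
        if score.period.contains(moment):
            return score.numeric_score
    return None


def score_change(body: Body, before: datetime, after: datetime) -> Optional[float]:
    """Score difference between two dates; a negative value means condition was lost."""
    start, end = score_at(body, before), score_at(body, after)
    if start is None or end is None:
        return None
    return end - start
```

Overlapping periods are not rejected here; a real module would need an explicit policy for them.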


3.1.5 Document Modules and Axioms

Documentation is a key step in ontology development, enabling communication with domain experts and facilitating the validation of knowledge models. Following MOMo guidelines, we present here the formal axioms that structure the BCS concepts.

• Animal ⊑ ∃hasBody.Body

• Body ⊑ (≥ 1 hasBodyConditionScore.BodyConditionScore)

• PeriodBCS ⊑ (= 1 DateBegin :xsd:dateTime) ⊓ (= 1 DateEnd :xsd:dateTime)

• Body ⊑ ∃hasHindquarters.Hindquarters ⊓ ∃hasTailBase.TailBase ⊓ ∃hasThoraxAndAbdomen.ThoraxAndAbdomen

• Hindquarters ⊑ (= 1 hasPelvicBonesAndHip.PelvicBonesAndHip) ⊓ (= 1 hasBaseOfTail.TailBase) ⊓ (= 1 hasPelvis.Pelvis) ⊓ (= 1 hasThighs.Thighs)

• Pelvis ⊑ ∃hasPelvisCharacteristic :xsd:string

• Pelvis ⊑ ∃hasBaseOfTailCharacteristic :xsd:string
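Because OWL uses open-world semantics, a DL reasoner does not report a Hindquarters individual with no asserted hasThighs value as inconsistent; it simply treats the value as unknown, and it flags an exceeded maximum only when the extra values are known to be distinct (OWL makes no unique name assumption). When the module is populated with observation data, a closed-world completeness check can therefore complement reasoning. The Python sketch below is our own illustration of such a check, similar in spirit to SHACL cardinality constraints but not SHACL itself; the bounds are transcribed from the axioms above, while the input format (a type map plus a list of triples) is an assumption.

```python
from collections import Counter
from typing import Dict, Iterable, List, Optional, Tuple

Triple = Tuple[str, str, str]

# class -> property -> (minimum, maximum); None means no upper bound.
CARDINALITY: Dict[str, Dict[str, Tuple[int, Optional[int]]]] = {
    "Body": {"hasBodyConditionScore": (1, None)},
    "PeriodBCS": {"DateBegin": (1, 1), "DateEnd": (1, 1)},
    "Hindquarters": {
        "hasPelvicBonesAndHip": (1, 1),
        "hasBaseOfTail": (1, 1),
        "hasPelvis": (1, 1),
        "hasThighs": (1, 1),
    },
}


def cardinality_violations(types: Dict[str, str], triples: Iterable[Triple]) -> List[str]:
    """Closed-world check: count asserted values per individual and property."""
    counts = Counter((subject, prop) for subject, prop, _ in triples)
    problems: List[str] = []
    for individual, cls in sorted(types.items()):
        for prop, (low, high) in CARDINALITY.get(cls, {}).items():
            n = counts[(individual, prop)]
            if n < low or (high is not None and n > high):
                upper = "*" if high is None else str(high)
                problems.append(f"{individual}: {prop} has {n} value(s), allowed [{low}, {upper}]")
    return problems
```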

3.1.6 Create Ontology Diagram

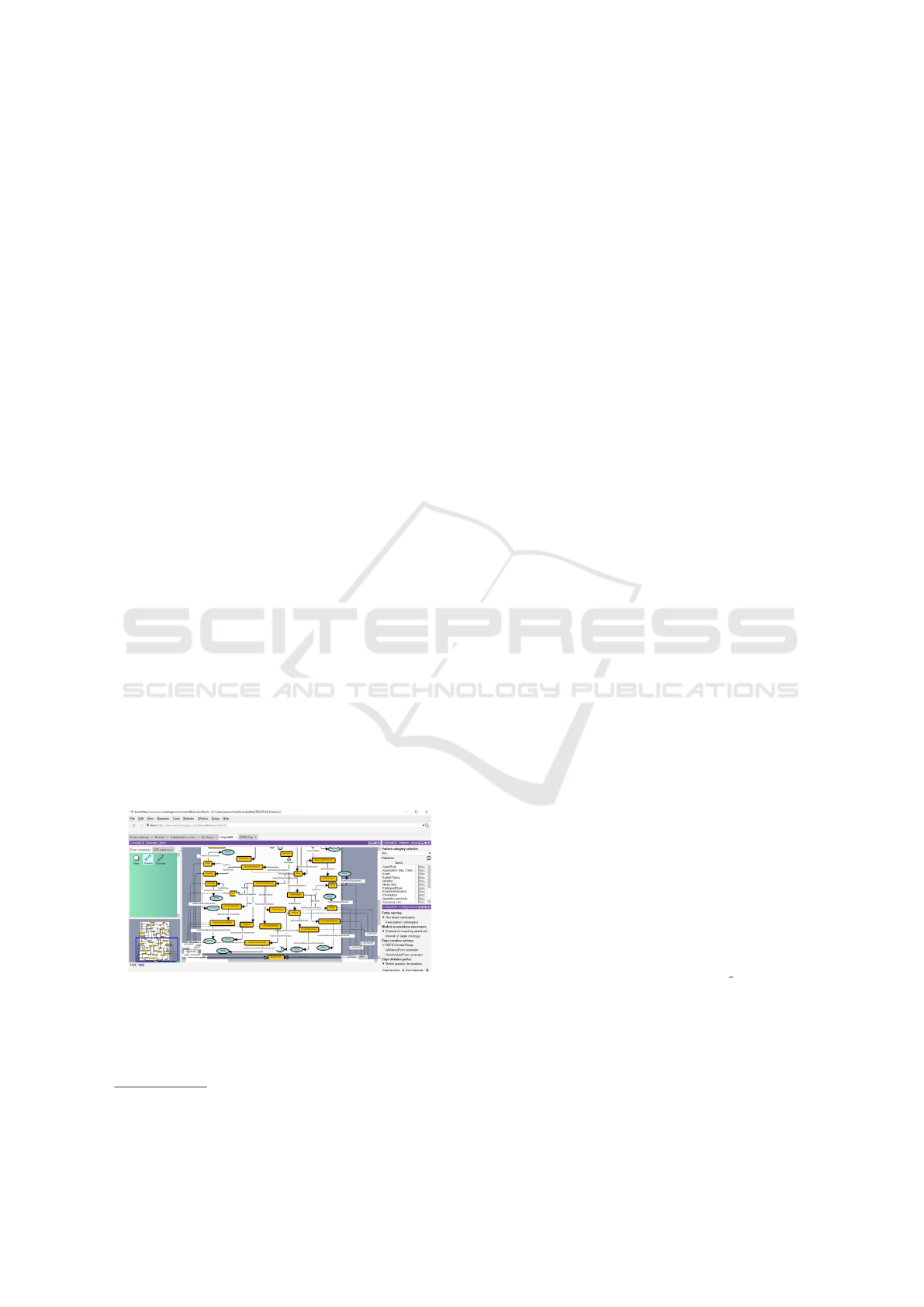

This diagram (Figure 7) presents the enriched version of the Herd module, created using the CoModIDE plugin¹. The module integrates the key concepts identified in the previous use case and competency question phases. It is now ready to be reintegrated into the global MoonCAB ontology and will serve as the foundation for the next modeling step: establishing inter-module and intra-module relations. Specifically, links will be defined between the Herd module and other modules such as Pasture and Behavior, ensuring semantic consistency across all BCS-related components.

Figure 7: An enriched view of the “Herd” module, visualized using the CoModIDE plugin.

¹ CoModIDE is a Protégé plugin for ODP-based modular ontology engineering: https://comodide.com/

3.2 Enrichment of MoonCAB by SWRL Rules

To enable automated reasoning and explainable BCS classification within MoonCAB, we enriched the ontology with a set of 200 SWRL rules derived from the anatomical features of the sheep body. These rules build on the structural axioms defined above, which describe the anatomical composition of an animal: some rules infer Body Condition Score (BCS) levels from observed body-part characteristics, while others link BCS values to management decisions, as the following examples show.

• BCS = 5: This rule identifies sheep with a very convex pelvis, fat accumulation at the tail base, invisible flank hollows, and a thick fat cover over the shoulder as over-conditioned, and assigns them a BCS of 5.

Herd:Animal(?x) ∧ Herd:hasCategory(?x,Herd:Sheep) ∧ Herd:hasBody(?x,?b)
∧ Herd:hasPartHindquarters(?b,?hq) ∧ Herd:hasPelvicAndHipBones(?hq,?phb) ∧ Herd:hasPelvicBonesAndHipCharacteristic(?phb,"CoveredByFat")
∧ Herd:hasPelvis(?hq,?p) ∧ Herd:hasPelvisCharacteristic(?p,"VeryConvex")
∧ Herd:hasThighs(?hq,?t) ∧ Herd:hasThighsCharacteristic(?t,"Bulging")
∧ Herd:hasTailBase(?hq,?tb) ∧ Herd:hasCaudalVertebrae(?hq,?ctb) ∧ Herd:hasCaudalVertebraeCharacteristic(?ctb,"FatAccumulation")
∧ Herd:hasPartThoraxAndAbdomen(?b,?ta)
∧ Herd:hasDorsalLine(?ta,?dl) ∧ Herd:hasDorsalLineCharacteristic(?dl,"Invisible")
∧ Herd:hasFlankHollows(?ta,?fh) ∧ Herd:hasFlankHollowsCharacteristic(?fh,"Invisible")
∧ Herd:hasLumbarPalpation(?ta,?lp) ∧ Herd:hasMammillaryProcesses(?lp,?mp) ∧ Herd:hasMammillaryProcessesCharacteristic(?mp,"BarelyPalpable")
∧ Herd:hasLumbarVertebrae(?ta,?lv) ∧ Herd:hasVertebralAchCharacteristic(?lv,"BarelyPalpable")
∧ Herd:hasTransverseProcesses(?ta,?tp) ∧ Herd:hasTransverseProcessCharacteristic(?tp,"CannotBeFelt")
∧ Herd:hasPartShoulderAndNeck(?b,?sn)
∧ Herd:hasShoulder(?sn,?s) ∧ Herd:hasShoulderCharacteristic(?s,"ThickFatCover")
∧ Herd:hasNeck(?sn,?n) ∧ Herd:hasNeckCharacteristic(?n,"Muscular")
∧ Herd:hasBodyConditionScore(?b,?scoreObj)
→ Herd:hasScore(?scoreObj,"Score 5")

• Feed Supplementation During Lactation for Low BCS Ewes: This rule triggers a feeding adjustment for lactating ewes whose BCS falls below 2.5, indicating the need for increased nutritional support during this energy-demanding phase.

Herd:Animal(?x) ∧ Herd:hasCategory(?x,Herd:Sheep) ∧ Herd:hasBody(?x,?b)
∧ Herd:hasBodyConditionScore(?b,?s) ∧ Herd:hasNumericScore(?s,?scoreValue)
∧ swrlb:lessThan(?scoreValue,2.5) ∧ Herd:hasPhysiologicalStatus(?x,Herd:Lactating)
→ Herd:requiresFeedingAdjustment(?x,true)

• Predict Lamb Mortality Risk Based on Maternal BCS: This rule predicts a high risk of lamb mortality if the ewe’s BCS at parturition is below 2.0, highlighting the importance of maternal condition for neonatal survival.

Herd:Animal(?m) ∧ Herd:hasCategory(?m,Herd:Sheep)
∧ Herd:hasBodyConditionScore(?m,?s) ∧ Herd:hasNumericScore(?s,?scoreValue)
∧ swrlb:lessThan(?scoreValue,2.0)
∧ Herd:hasChild(?m,?lamb) ∧ Herd:hasBirthEvent(?lamb,?b) ∧ Herd:hasBirthType(?b,"Parturition")
→ Herd:hasLambMortalityRisk(?lamb,"High")
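The logic of these three rules can be prototyped outside the ontology, for instance to unit-test thresholds before encoding them in SWRL. The following Python sketch is our own illustration, not the MoonCAB implementation: anatomical part names drop the `Herd:hasXCharacteristic` wrapping of the SWRL atoms, and the `Ewe` record is a hypothetical flattening of the underlying triples. The list returned by `unmet_conditions` also hints at how rule atoms can double as an explanation, since it names the observations that prevented a score of 5.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Observed characteristic per anatomical part required by the "BCS = 5" rule.
SCORE_5_PATTERN: Dict[str, str] = {
    "PelvicAndHipBones": "CoveredByFat",
    "Pelvis": "VeryConvex",
    "Thighs": "Bulging",
    "CaudalVertebrae": "FatAccumulation",
    "DorsalLine": "Invisible",
    "FlankHollows": "Invisible",
    "MammillaryProcesses": "BarelyPalpable",
    "LumbarVertebrae": "BarelyPalpable",
    "TransverseProcesses": "CannotBeFelt",
    "Shoulder": "ThickFatCover",
    "Neck": "Muscular",
}


def unmet_conditions(observations: Dict[str, str]) -> List[str]:
    """Parts whose observed characteristic is missing or differs from the pattern."""
    return [part for part, expected in SCORE_5_PATTERN.items()
            if observations.get(part) != expected]


def infers_score_5(observations: Dict[str, str]) -> bool:
    # A conjunctive rule fires only if every body atom is satisfied.
    return not unmet_conditions(observations)


@dataclass
class Ewe:
    name: str
    numeric_score: float                            # hasNumericScore
    physiological_status: Optional[str] = None      # hasPhysiologicalStatus
    lambs: List[str] = field(default_factory=list)  # hasChild, birth type "Parturition"


def requires_feeding_adjustment(ewe: Ewe) -> bool:
    # score < 2.5 and status Lactating -> requiresFeedingAdjustment(true).
    # False here only means the rule does not fire; SWRL would assert nothing.
    return ewe.numeric_score < 2.5 and ewe.physiological_status == "Lactating"


def lamb_mortality_risk(ewe: Ewe) -> Dict[str, str]:
    # score < 2.0 -> hasLambMortalityRisk(lamb, "High") for each lamb.
    # As in SWRL, nothing is asserted when the condition fails (no "Low" value).
    if ewe.numeric_score < 2.0:
        return {lamb: "High" for lamb in ewe.lambs}
    return {}
```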

4 EVALUATION AND VALIDATION OF THE NEW MOONCAB VERSION

4.1 Evaluation Using the Pellet Reasoner

To verify the consistency of the ontology and detect any potential logical conflicts, we used the Pellet² reasoner, which is designed for reasoning over Description Logics (DL). It accepts OWL files as input and processes concepts, properties, and instances; in particular, it verifies the ontology’s coherence and detects logical inconsistencies. The reasoning phase completed successfully, and the reasoner detected no inconsistencies.

4.2 Evaluation Using SPARQL Query Language

To verify the accuracy and coverage of the ontology, we reformulated the competency questions as SPARQL queries. Table 3 shows examples of competency questions (listed in Table 2), their corresponding SPARQL queries, and the results of executing these queries on our ontology.
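The queries themselves are executed by a SPARQL engine. To show what such an engine does with the graph pattern of CQ01 in Table 3, the Python sketch below joins the triple patterns left to right with a naive nested loop (not how an optimized triple store evaluates queries) and then applies the FILTER. The data are hypothetical, and all terms are plain strings, so typed-literal comparison is not modeled.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]
Binding = Dict[str, str]


def is_var(term: str) -> bool:
    return term.startswith("?")


def extend(binding: Binding, pattern: Triple, triple: Triple) -> Optional[Binding]:
    """Extend binding so that pattern matches triple, or return None on a conflict."""
    result = dict(binding)
    for p_term, t_term in zip(pattern, triple):
        if is_var(p_term):
            if result.setdefault(p_term, t_term) != t_term:
                return None  # variable already bound to a different term
        elif p_term != t_term:
            return None  # constant does not match
    return result


def evaluate_bgp(patterns: List[Triple], triples: List[Triple]) -> List[Binding]:
    """Evaluate a basic graph pattern by joining its triple patterns in order."""
    solutions: List[Binding] = [{}]
    for pattern in patterns:
        next_solutions = []
        for binding in solutions:
            for triple in triples:
                extended = extend(binding, pattern, triple)
                if extended is not None:
                    next_solutions.append(extended)
        solutions = next_solutions
    return solutions


CQ01 = [
    ("?animal", "h:hasBody", "?body"),
    ("?body", "h:hasBodyConditionScore", "?bcs"),
    ("?bcs", "h:hasPeriodBCS", "?bcsp"),
    ("?bcsp", "h:hasDateBegin", "?p"),
    ("?bcs", "h:hasScore", "?score"),
]

# Hypothetical data
data = [
    ("h:H01Sh01", "h:hasBody", "h:Body01"),
    ("h:Body01", "h:hasBodyConditionScore", "h:BCS01"),
    ("h:BCS01", "h:hasPeriodBCS", "h:P01"),
    ("h:P01", "h:hasDateBegin", "2024-07-01T00:00:00"),
    ("h:BCS01", "h:hasScore", "Score 3"),
    ("h:H01Sh02", "h:hasBody", "h:Body02"),
    ("h:Body02", "h:hasBodyConditionScore", "h:BCS02"),
    ("h:BCS02", "h:hasPeriodBCS", "h:P01"),
    ("h:BCS02", "h:hasScore", "Score 2"),
]

# FILTER(?score = "Score 1" || ?score = "Score 2"), then project ?animal ?p
rows = [
    (b["?animal"], b["?p"])
    for b in evaluate_bgp(CQ01, data)
    if b["?score"] in ("Score 1", "Score 2")
]
print(rows)  # [('h:H01Sh02', '2024-07-01T00:00:00')]
```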

² https://owl.cs.manchester.ac.uk/tools/fact

4.3 Evaluation Using the MoOnEV Tool

Evaluating an ontology is crucial to ensuring its effectiveness for information exchange and knowledge management. This involves analyzing its clarity, structure, validity, modularity, and decision-enabling capacity. Several tools and methodologies have been developed for this purpose, such as Delta (Kondylakis et al., 2021), which evaluates specific metrics; OMEVA (Gobin-Rahimbux, 2022), which assesses ontology modules against 35 metrics in eight categories; and OOPS! (Poveda-Villalón et al., 2014), which identifies 40 common ontology pitfalls. XDTesting (Ciroku and Presutti, 2022) introduces unit testing of ontology modules. MoOnEV (Hammouda et al., 2024) is a modular ontology evaluation and validation tool that stands out from these tools through its comprehensive coverage of metrics. It supports performance testing of ontologies through use cases and produces detailed evaluation reports that help correct and improve the ontology.

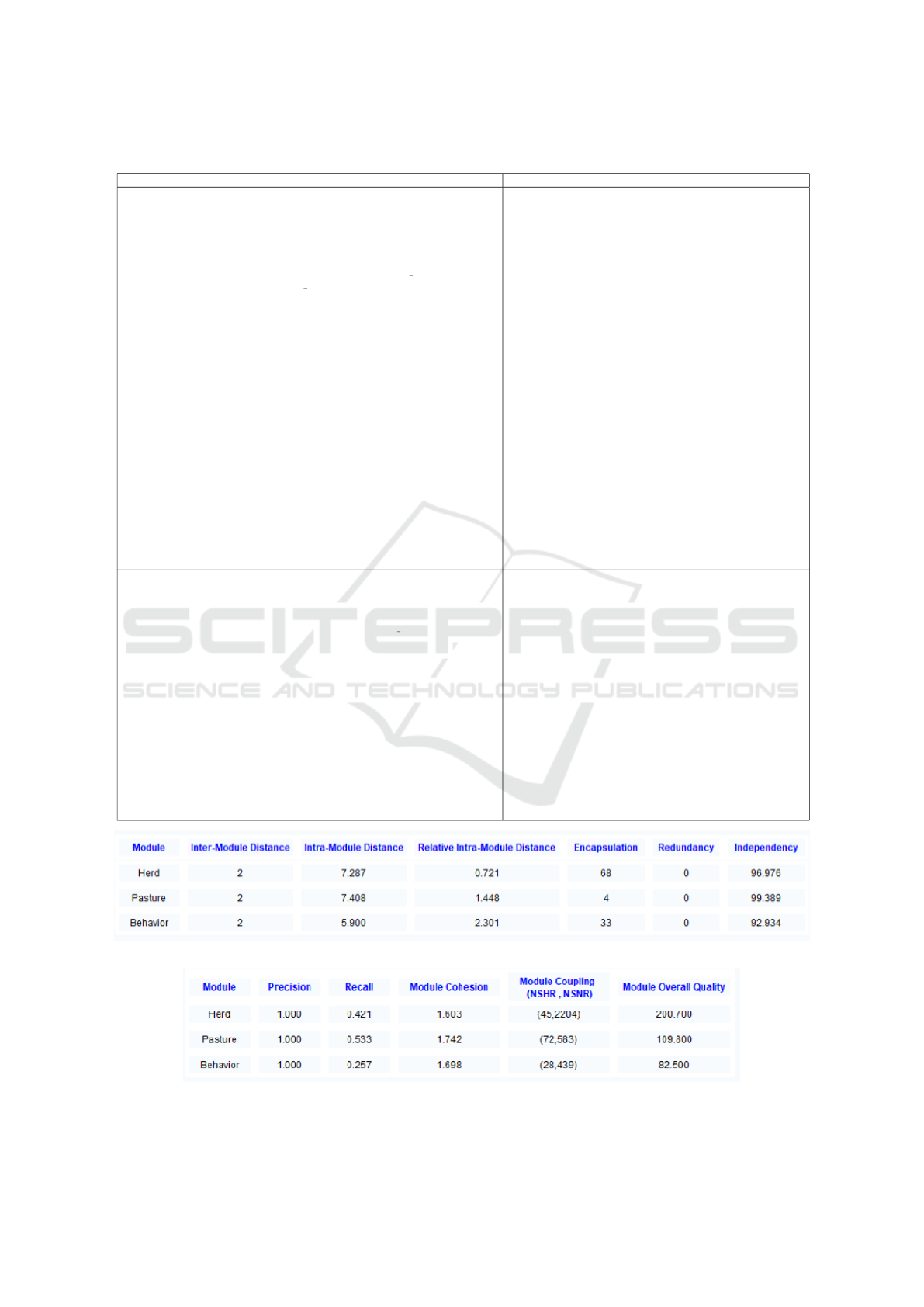

Figure 8 presents the evaluation of the MoonCAB modules based on relatedness metrics. All modules show high independence (above 92%) and zero redundancy, confirming good modular isolation. The Herd module stands out with strong encapsulation (68) and the lowest relative intra-module distance (0.721), indicating high internal cohesion.

Figure 9 shows the results of the metrics in the modules' Quality category: precision, recall, cohesion, coupling, and overall quality score. All modules achieve perfect precision (1.000), but recall varies; the Herd module achieves the highest overall quality (200.700), whereas the Behavior module has the lowest recall (0.257) and overall quality (82.500), indicating room for structural improvement.

5 DISCUSSION

Among the ontologies presented in Section 2.3, those that lack SWRL rules, or incorporate only limited rule sets, remain confined to delivering explicit, surface-level information: they cannot perform rule-based inference or capture complex semantic relationships. In contrast, the enriched MoonCAB ontology comprises 14,653 axioms, 154 classes, 97 object properties, 62 data properties, and 234 individuals. Most importantly, it integrates 200 SWRL rules, which take it beyond static representation and support advanced reasoning. This allows for the inference of


Table 3: Ontology evaluation.

Competency Question SPARQL Query Result

CQ01: Which sheep currently show signs of undernutrition based on BCS?

Query:
SELECT ?animal ?p WHERE {
  ?animal h:hasBody ?body .
  ?body h:hasBodyConditionScore ?bcs .
  ?bcs h:hasPeriodBCS ?bcsp .
  ?bcsp h:hasDateBegin ?p .
  ?bcs h:hasScore ?score .
  FILTER(?score = "Score 1" || ?score = "Score 2") }

Result:
{ "head":{"vars":["animal","p"]}, "results":{"bindings":[ { "animal":{"type":"URI", "value":".../AnimalBehavior/Herd/H01Sh02"}, "p":{"type":"literal","datatype":"...#dateTime", "value":"2024-07-01T00:00:00"} } ] } }

CQ03: Which sheep have shown a significant drop in BCS during climate event X?

Query:
SELECT ?animal ?score0 ?score1 WHERE {
  ?event h:hasClimateEventName "Dry Rain" .
  ?event h:hasPeriodClimateEvent ?pe .
  ?pe h:hasPeriodClimateEventBegin ?peB .
  ?pe h:hasPeriodClimateEventEnd ?peE .
  ?animal h:hasBody ?body1 .
  ?body1 h:hasBodyConditionScore ?bcs1 .
  ?bcs1 h:hasNumericScore ?score1 .
  ?bcs1 h:hasPeriodBCS ?bcsp .
  ?bcsp h:hasDateBegin ?peB .
  ?bcsp h:hasDateEnd ?peE .
  ?bcsp h:hasIDPeriodBCS ?id .
  ?animal h:hasBody ?body0 .
  ?body0 h:hasBodyConditionScore ?bcs0 .
  ?bcs0 h:hasPeriodBCS ?bcsp0 .
  ?bcsp0 h:hasIDPeriodBCS ?id0 .
  ?bcs0 h:hasNumericScore ?score0 .
  BIND(xsd:integer(?id) - 1 AS ?prevID)
  FILTER(?score0 - ?score1 >= 1 && xsd:integer(?id0) = ?prevID) }

Result:
{ "head":{"vars":["animal","score0","score1"]}, "results":{"bindings":[ { "animal":{"type":"URI", "value":".../Animal-Behavior/Herd/H01Sh02"}, "score0":{"type":"literal", "datatype":"...#decimal", "value":"3.0"}, "score1":{"type":"literal", "datatype":"...#decimal", "value":"2.0"} } ] } }

CQ04: Which anatomical indicators justify the BCS assigned to a specific sheep?

Query:
SELECT ?partclass ?property ?value WHERE {
  ?animal h:hasBody ?body .
  ?body h:hasBodyConditionScore ?bcs .
  ?bcs h:hasScore "Score 3" .
  ?body ?hasPart ?part .
  FILTER(STRSTARTS(STR(?hasPart), STR(h:hasPart)))
  ?part rdf:type ?partclass .
  FILTER(STRSTARTS(STR(?partclass), STR(h:)))
  OPTIONAL { ?part ?property ?rawValue .
    FILTER(STRSTARTS(STR(?property), STR(h:)))
    OPTIONAL { FILTER(isLiteral(?rawValue)) BIND(?rawValue AS ?value) }
    OPTIONAL { FILTER(isIRI(?rawValue)) ?rawValue ?subProp ?value . FILTER(isLiteral(?value)) } } }

Result:
{ "head":{"vars":["partclass","property","value"]}, "results":{"bindings":[ { "partclass":{"type":"URI", "value":".../Herd/Hindquarters"}, "property":{"type":"URI", "value":".../Herd/hasPelvis"}, "value":{"type":"literal", "value":"Flat to convex"} }, ... ] } }
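The core logic of CQ03 can be restated procedurally: find the BCS period that coincides exactly with the climate event, look up the same animal's score for the period with the preceding ID, and keep drops of at least one point. The Python sketch below assumes a hypothetical flattened `BcsRecord` per animal and period, with consecutive integer period IDs as the query's `?id - 1` arithmetic implies; the second animal and all dates are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class BcsRecord:
    """Hypothetical flattened view of one BCS observation."""
    animal: str
    period_id: int    # stands in for h:hasIDPeriodBCS
    begin: datetime   # stands in for h:hasDateBegin
    end: datetime     # stands in for h:hasDateEnd
    score: float      # stands in for h:hasNumericScore


def significant_drops(
    records: List[BcsRecord],
    event_begin: datetime,
    event_end: datetime,
    min_drop: float = 1.0,
) -> List[Tuple[str, float, float]]:
    """Return (animal, previous score, score during the event) for drops >= min_drop."""
    by_key: Dict[Tuple[str, int], BcsRecord] = {
        (r.animal, r.period_id): r for r in records
    }
    hits = []
    for r in records:
        # As in the query, the BCS period must match the event window exactly.
        if r.begin != event_begin or r.end != event_end:
            continue
        previous = by_key.get((r.animal, r.period_id - 1))
        if previous is not None and previous.score - r.score >= min_drop:
            hits.append((r.animal, previous.score, r.score))
    return hits


records = [
    BcsRecord("H01Sh02", 1, datetime(2024, 5, 1), datetime(2024, 6, 30), 3.0),
    BcsRecord("H01Sh02", 2, datetime(2024, 7, 1), datetime(2024, 8, 31), 2.0),
    BcsRecord("H01Sh05", 1, datetime(2024, 5, 1), datetime(2024, 6, 30), 3.0),
    BcsRecord("H01Sh05", 2, datetime(2024, 7, 1), datetime(2024, 8, 31), 2.5),
]
print(significant_drops(records, datetime(2024, 7, 1), datetime(2024, 8, 31)))
# [('H01Sh02', 3.0, 2.0)]
```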

Figure 8: MoOnEV: modules' relatedness metrics results.

Figure 9: MoOnEV: modules' quality metrics results.


implicit knowledge, unveiling hidden patterns and relationships within agricultural datasets, an essential feature for smart agriculture systems that require intelligent decision support and semantic awareness.

Our ongoing research aims to contribute substantially to the management of livestock breeding and the sustainable use of agricultural resources. This objective is grounded in the design, semantic enrichment, and quality evaluation of a domain ontology intended to support decision-making in agricultural applications. We have developed a mobile application that predicts the Body Condition Score (BCS) of sheep using deep learning models, as shown in Figure 10. The next step is to integrate the enriched MoonCAB ontology into this application, where it will play a critical role in explaining the AI-generated predictions and enhancing their interpretability.

Figure 10: Mobile application interfaces for startup, video selection, and BCS classification results.

Figure 11 presents a comparative analysis of the XAI methods Grad-CAM++, Score-CAM, SHAP, and LIME, as well as their hybrid combinations. This evaluation enabled us to identify the key body regions the model relies on for its predictions by visualizing the discriminative features involved in classifying BCS levels, features that aim to replicate the tactile assessment typically performed by human palpation under real-world conditions. These insights guided us to prioritize information and image collection on critical anatomical regions (e.g., back, rump, tail base) to improve model accuracy. However, because no single method met all evaluation criteria, we transitioned to a semantic-based approach.

Moreover, because our system targets both agricultural experts and farmers, who vary in their levels of technical expertise, it must provide explanations that are both semantically structured and accessible. Simple XAI methods alone cannot meet these demands; we therefore move toward Ontology-based Explainable AI (O-XAI). The ontology will fulfill this role by delivering multi-level justifications: clear and intuitive for farmers, yet scientifically rigorous for domain experts.

Figure 11: Attention regions generated by diverse XAI methods (Hammouda et al., 2025).

Furthermore, it will support evidence-based decision-making aligned with animal body condition assessment, thereby enhancing the efficiency, transparency, and sustainability of livestock management in smart agriculture.
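As a rough illustration of what such multi-level justifications might look like, the Python sketch below turns a predicted score and the anatomical indicators retrieved for it (for example through a query such as CQ04) into one farmer-oriented and one expert-oriented explanation. The `Indicator` type, the wording templates, and the audience labels are hypothetical; MoonCAB-XAI is future work and its actual design may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Indicator:
    """Hypothetical evidence item, e.g. one row returned by a CQ04-style query."""
    body_part: str          # class of the anatomical part, e.g. Hindquarters
    ontology_property: str  # matched property, e.g. hasPelvis
    value: str              # literal characteristic, e.g. "Flat to convex"


def explain(score: str, indicators: List[Indicator], audience: str) -> str:
    """Render the same ontology evidence at two levels of detail."""
    if not indicators:
        return f"Predicted {score}; no supporting anatomical indicators were found."
    if audience == "farmer":
        # Plain language: only name the body regions involved.
        parts = ", ".join(sorted({i.body_part.lower() for i in indicators}))
        return f"Predicted {score}, mainly judged from the {parts}."
    if audience == "expert":
        # Full provenance: list each ontology assertion used as evidence.
        evidence = "; ".join(
            f"{i.body_part}.{i.ontology_property} = {i.value!r}" for i in indicators
        )
        return f"Predicted {score}. Supporting ontology assertions: {evidence}."
    raise ValueError(f"unknown audience: {audience}")


evidence = [Indicator("Hindquarters", "hasPelvis", "Flat to convex")]
print(explain("Score 3", evidence, "farmer"))
# Predicted Score 3, mainly judged from the hindquarters.
print(explain("Score 3", evidence, "expert"))
# Predicted Score 3. Supporting ontology assertions: Hindquarters.hasPelvis = 'Flat to convex'.
```

Keeping a single evidence list and varying only the rendering means both audiences receive justifications grounded in the same ontology assertions.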

6 CONCLUSION AND FUTURE WORK

With the support of INRAT and based on expert-validated scientific documentation, we enriched the MoonCAB ontology to represent comprehensively the Body Condition Score (BCS) evaluation process in sheep. The enriched ontology now contains 14,653 axioms, 154 classes, 97 object properties, 62 data properties, and 234 individuals. It also integrates 200 SWRL rules, enabling semantic reasoning to answer complex questions about BCS levels, their indicators, and the corresponding decision-making scenarios. To assess the quality, effectiveness, and accuracy of the ontology, we conducted a multi-method evaluation using the Pellet reasoner, SPARQL queries, and the MoOnEV tool.

This enriched version of MoonCAB is designed to enhance semantic explainability and provide deeper insights into BCS detection, both at the individual animal level and across the entire herd. To the best of our knowledge, this is the first work to address BCS prediction in sheep using computer vision. This contribution opens new opportunities for automating sheep monitoring in a transparent and interpretable way.

In future work, we aim to apply Ontology-based eXplainable Artificial Intelligence (O-XAI) through MoonCAB-XAI to enable transparent and interpretable BCS prediction. This leads to a broader research question: to what extent, and how deeply, can ontology-based models such as MoonCAB be integrated into computational animal behavior analysis (CABA) systems and, more generally, into smart agriculture systems?


ACKNOWLEDGEMENTS

We would like to express our sincere gratitude to our collaborators, Dr Samir Smetti and Prof. Moukhtar Mahouchi, from the Institut National de Recherche Agronomique de Tunis (INRAT), for their valuable support and for facilitating access to the experimental farm of the University of Jendouba at the Higher School of Agriculture of Kef; this access made available the scientific documents used to enrich our MoonCAB ontology.

REFERENCES

Altukhi, Z. M., Pradhan, S., and Aljohani, N. (2025). A systematic literature review of the latest advancements in XAI. Technologies, 13(3):93.

Amith, M., Harris, M. R., Stansbury, C., Ford, K., Manion, F. J., and Tao, C. (2021). Expressing and executing informed consent permissions using SWRL: the All of Us use case. In American Medical Informatics Association Annual (AMIA), volume 2021, page 197. American Medical Informatics Association.

Aslam, M., Segura-Velandia, D., and Goh, Y. M. (2023). A conceptual model framework for XAI requirement elicitation of application domain system. IEEE Access, 11:108080–108091.

Beck, T., Hancock, J. M., and Mallon, A.-M. (2009). Developing a mammalian behaviour ontology. International Conference on Biomedical Ontology (ICBO), page 159.

Blomqvist, E., Hammar, K., and Presutti, V. (2016). Engineering ontologies with patterns: the eXtreme Design methodology. Ontology Engineering with Ontology Design Patterns, (25):23–50.

Brdnik, S. and Šumak, B. (2024). Current trends, challenges and techniques in XAI field; a tertiary study of XAI research. In 2024 47th MIPRO ICT and Electronics Convention (MIPRO), pages 2032–2038. IEEE.

Brugère-Picoux, J. (2004). Maladies des moutons. France Agricole Editions.

Çevik, K. K. (2020). Deep learning based real-time body condition score classification system. IEEE Access, 8:213950–213957.

Chandra, R., Tiwari, S., Rastogi, S., and Agarwal, S. (2025). A diagnosis and treatment of liver diseases: integrating batch processing, rule-based event detection and explainable artificial intelligence. Evolving Systems, 16(2):1–26.

Chhetri, T. R., Hohenegger, A., Fensel, A., Kasali, M. A., and Adekunle, A. A. (2023). Towards improving prediction accuracy and user-level explainability using deep learning and knowledge graphs: A study on cassava disease. Expert Systems with Applications, 233:120955.

Ciroku, F. and Presutti, V. (2022). Providing tool support for unit testing in eXtreme Design. In Proceedings of the 1st Workshop on Modular Knowledge (PWMK).

Corbucci, L., Monreale, A., Panigutti, C., Natilli, M., Smiraglio, S., and Pedreschi, D. (2023). Semantic enrichment of explanations of AI models for healthcare. In International Conference on Discovery Science, pages 216–229. Springer.

Corner-Thomas, R., Sewell, A., Kemp, P., Wood, B., Gray, D., Morris, S., Blair, H., and Kenyon, P. (2020). Body condition scoring of sheep: intra- and inter-observer variability.

Cugny, R., Aligon, J., Chevalier, M., Roman Jimenez, G., and Teste, O. (2022). AutoXAI: A framework to automatically select the most adapted XAI solution. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, pages 315–324.

Doh, M., Rodrigues, C. M., Boutry, N., Najman, L., Mancas, M., and Gosselin, B. (2025). Found in translation: semantic approaches for enhancing AI interpretability in face verification. arXiv preprint arXiv:2501.05471.

Donadello, I. and Dragoni, M. (2020). SeXAI: introducing concepts into black boxes for explainable artificial intelligence. In Proceedings of the Italian Workshop on Explainable Artificial Intelligence co-located with 19th International Conference of the Italian Association for Artificial Intelligence, XAI.it@AIxIA 2020, Online Event, November 25-26, 2020, volume 2742, pages 41–54. CEUR-WS.

Dong, Y., Su, H., Zhu, J., and Zhang, B. (2017). Improving interpretability of deep neural networks with semantic information. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4306–4314.

Dóreas, F., Vial, F., Hammar, K., Lindberg, A., Blomqvist, E., and Revie, C. (2017). Development of an animal health surveillance ontology. Proceedings of the 3rd International Conference of Animal Health Surveillance (ICAHS), pages 97–99.

Dragoni, M. and Donadello, I. (2022). A knowledge-based strategy for XAI: The explanation graph. Semantic Web Journal.

Garcez, A. d., Gori, M., Lamb, L. C., Serafini, L., Spranger, M., and Tran, S. N. (2019). Neural-symbolic computing: An effective methodology for principled integration of machine learning and reasoning. arXiv preprint arXiv:1905.06088.

Gkoutos, G. V., Schofield, P. N., and Hoehndorf, R. (2012). The neurobehavior ontology: an ontology for annotation and integration of behavior and behavioral phenotypes. International Review of Neurobiology (IRN), 103:69–87.

Gobin-Rahimbux, B. (2022). Evaluation metrics for ontology modules. In International Conference on Data Science and Information System (ICDSIS), pages 1–6. IEEE.

Golik, W., Dameron, O., Bugeon, J., Fatet, A., Hue, I., Hurtaud, C., Reichstadt, M., Salaün, M.-C., Vernet, J., Joret, L., et al. (2012). ATOL: the multi-species livestock trait ontology. In 6th Metadata and Semantics Research Conference (MTSR), pages 289–300. Springer.


Grati, R., Fattouch, N., and Boukadi, K. (2025). Ontologies for smart agriculture: A path toward explainable AI–a systematic literature review. IEEE Access.

Gu, H. et al. (2017). An object-based semantic classification method for high resolution remote sensing imagery using ontology. Remote Sensing (RS), 9(4):329.

Hammouda, N., Mahfoudh, M., and Boukadi, K. (2023). MoonCAB: a modular ontology for computational analysis of animal behavior. In 2023 20th ACS/IEEE International Conference on Computer Systems and Applications (AICCSA), pages 1–8. IEEE.

Hammouda, N., Mahfoudh, M., and Boukadi, K. (2024). MoOnEV: Modular ontology evaluation and validation tool. Procedia Computer Science, 246:3532–3541.

Hammouda, N., Mahfoudh, M., Grati, R., and Boukadi, K. (2025). Predicting sheep body condition scores via explainable deep learning model. International CAIP, 30.

Hamza, M. C. and Bourabah, A. (2024). Exploratory study on the relationship between age, reproductive stage, body condition score, and liver biochemical profiles in Rembi breed ewes. Iranian Journal of Veterinary Medicine, 18(2).

Horrocks, I., Patel-Schneider, P. F., Boley, H., Tabet, S., Grosof, B., Dean, M., et al. (2004). SWRL: A semantic web rule language combining OWL and RuleML. W3C Member submission, 21(79):1–31.

Kondylakis, H., Nikolaos, A., Dimitra, P., Anastasios, K., Emmanouel, K., Kyriakos, K., Iraklis, S., Stylianos, K., and Papadakis, N. (2021). Delta: a modular ontology evaluation system. Information, 12(8):301.

Kong, X., Liu, S., and Zhu, L. (2024). Toward human-centered XAI in practice: A survey. Machine Intelligence Research, 21(4):740–770.

Kosov, P., El Kadhi, N., Zanni-Merk, C., and Gardashova, L. (2024a). Advancing XAI: new properties to broaden semantic-based explanations of black-box learning models. Procedia Computer Science, 246:2292–2301.

Kosov, P., El Kadhi, N., Zanni-Merk, C., and Gardashova, L. (2024b). Semantic-based XAI: Leveraging ontology properties to enhance explainability. In 2024 International Conference on Decision Aid Sciences and Applications (DASA), pages 1–5. IEEE.

Lundberg, S. M. and Lee, S.-I. (2017). A unified approach to interpreting model predictions. Advances in Neural Information Processing Systems, 30.

Marcos, D., Lobry, S., and Tuia, D. (2019). Semantically interpretable activation maps: what-where-how explanations within CNNs. In 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), pages 4207–4215. IEEE.

Ngo, Q. H., Kechadi, T., and Le-Khac, N.-A. (2022). OAK4XAI: model towards out-of-box explainable artificial intelligence for digital agriculture. In International Conference on Innovative Techniques and Applications of Artificial Intelligence, pages 238–251. Springer.

Poveda-Villalón, M., Gómez-Pérez, A., and Suárez-Figueroa, M. C. (2014). OOPS! (OntOlogy Pitfall Scanner!): An on-line tool for ontology evaluation. International Journal on Semantic Web and Information Systems (IJSWIS), 10(2):7–34.

Retzlaff, C. O., Angerschmid, A., Saranti, A., Schneeberger, D., Roettger, R., Mueller, H., and Holzinger, A. (2024). Post-hoc vs ante-hoc explanations: XAI design guidelines for data scientists. Cognitive Systems Research, 86:101243.

Ribeiro, M. T., Singh, S., and Guestrin, C. (2016). "Why should I trust you?" Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 1135–1144.

Ribeiro, M. T., Singh, S., and Guestrin, C. (2018). Anchors: High-precision model-agnostic explanations. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 32.

Rodríguez Alvarez, J., Arroqui, M., Mangudo, P., Toloza, J., Jatip, D., Rodriguez, J. M., Teyseyre, A., Sanz, C., Zunino, A., Machado, C., et al. (2019). Estimating body condition score in dairy cows from depth images using convolutional neural networks, transfer learning and model ensembling techniques. Agronomy, 9(2):90.

Sajitha, P., Andrushia, A. D., Mostafa, N., Shdefat, A. Y., Suni, S., and Anand, N. (2023). Smart farming application using knowledge embedded-graph convolutional neural network (KEGCNN) for banana quality detection. Journal of Agriculture and Food Research, 14:100767.

Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., and Batra, D. (2017). Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, pages 618–626.

Sharma, S. and Jain, S. (2024). OntoXAI: a semantic web rule language approach for explainable artificial intelligence. Cluster Computing, 27(10):14951–14975.

Shimizu, C., Hammar, K., and Hitzler, P. (2021). Modular ontology modeling. Semantic Web (SW), (Preprint):1–31.

Shimizu, C., Hirt, Q., and Hitzler, P. (2019). MODL: a modular ontology design library. arXiv preprint arXiv:1904.05405.

Sun, C., Xu, H., Chen, Y., and Zhang, D. (2024). AS-XAI: Self-supervised automatic semantic interpretation for CNN. Advanced Intelligent Systems, 6(12):2400359.

Uschold, M. and King, M. (1995). Towards a methodology for building ontologies. Citeseer.

Vall, E. (2020). Guide harmonisé de notation de l'état corporel (NEC) pour les animaux de ferme du Sahel: ruminants de grande taille (bovins, camelins) et de petite taille (ovins, caprins) et équidés (asins et équins).

Vázquez-Martínez, I., Tırınk, C., Salazar-Cuytun, R., Mezo-Solis, J. A., Garcia Herrera, R. A., Orzuna-Orzuna, J. F., and Chay-Canul, A. J. (2023). Predicting body weight through biometric measurements in growing hair sheep using data mining and machine learning algorithms. Tropical Animal Health and Production, 55(5):307.
