Towards Scalable and Fast UAV Deployment

Tim Felix Lakemann^a and Martin Saska^b

Department of Cybernetics, Czech Technical University, Karlovo namesti 13, Prague, Czech Republic

Keywords:

Object Detection, Segmentation and Categorization, Omnidirectional Vision, Multi-Robot Systems, Computer Vision for Automation.

Abstract:

This work presents a scalable and fast method for deploying Uncrewed Aerial Vehicle (UAV) swarms. Decentralized large-scale aerial swarms rely on onboard sensing to achieve reliable relative localization in real-world conditions. In heterogeneous research and industrial platforms, the adaptability of the individual UAVs enables rapid deployment in diverse mission scenarios. However, frequent platform reconfiguration often requires time-consuming sensor calibration and validation, which introduces significant delays and operational overhead. To overcome this, we propose a lightweight, training-free method that automatically detects and masks the UAV's own frame in onboard camera images, enabling rapid deployment and calibration of vision-based UAV swarms in real-world environments.

1 INTRODUCTION

Collaborative multi-UAV systems improve robustness and operational efficiency across a wide range of applications, from search and rescue to environmental monitoring (Bartolomei et al., 2023). Accurate relative localization of team members is essential for safe navigation, collision avoidance, and coordinated task execution (Chung et al., 2018; Chen et al., 2022). Global Navigation Satellite Systems (GNSS) alone are often insufficient for these tasks due to their limited precision, their unavailability in GNSS-denied environments, and their vulnerability to interference (Xu et al., 2020; Zhou et al., 2022). Although alternatives such as Real-Time Kinematic (RTK) GNSS and motion capture systems provide high accuracy, they require external infrastructure or connectivity, making them unsuitable for many real-world scenarios (Chung et al., 2018).

In swarm applications, onboard vision-based relative localization systems offer scalable, cost-effective, and decentralized solutions for detecting and tracking other UAVs, as shown in recent studies (Li et al., 2023; Zhao et al., 2025).

From our experience deploying large-scale swarms with diverse robot configurations and sensors, we identified sensor calibration as a primary bottleneck for fast real-world deployment. In both industrial and research settings, for tasks such as object detection, terrain analysis, or communication-aware navigation, sensor changes are common and often necessitate recalibration.

Although recent deep-learning-based vision methods (Schilling et al., 2021; Xu et al., 2020; Oh et al., 2023) have shown promise, Convolutional Neural Networks (CNNs) are typically computationally intensive, require large annotated datasets, tend to overfit to specific platforms, and struggle to generalize to unseen conditions such as sun reflections (Funahashi et al., 2021). Additionally, visible parts of the UAV, such as the rotor arms, landing gear, or camera mount, can introduce artifacts, degrade algorithm performance, or require manual pre-processing. Such artifacts can lead to false detections or misclassifications, causing onboard systems to make incorrect decisions. In the worst case, this can result in collisions with obstacles or other agents in a collaborating swarm. One possible mitigation strategy is to mount the camera so that no part of the UAV frame appears in its Field of View (FOV); however, this is often impractical for small aerial vehicles or when omnidirectional vision is required. In addition, off-center mounting can shift the center of mass and compromise flight stability, which is particularly critical for agile or tightly constrained UAV platforms.

Figure 1: Overview of collaborating UAVs using masks previously auto-generated with our method for safe collaboration.

a https://orcid.org/0009-0000-4863-3235

b https://orcid.org/0000-0001-7106-3816

Lakemann, T. F. and Saska, M.

Towards Scalable and Fast UAV Deployment.

DOI: 10.5220/0013713500003982

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 22nd International Conference on Informatics in Control, Automation and Robotics (ICINCO 2025) - Volume 1, pages 243-250

ISBN: 978-989-758-770-2; ISSN: 2184-2809

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

An effective way to mitigate reflections and other artifacts caused by the UAV frame in camera images is to generate a mask that excludes these regions. However, manually annotating such frame regions is a significant bottleneck that hinders scalability and delays the deployment of perception pipelines across different UAV platforms. To the best of the authors' knowledge, the problem of automatically identifying and masking the visible UAV frame has received limited attention in the literature; in practice, it is either neglected entirely, risking the misclassification of structural elements of the observing UAV, or handled manually.

In this work, we propose a novel approach for the automatic detection and masking of the UAV frame in onboard imagery. This method serves as an enabling technology for the rapid preparation and deployment of large-scale aerial swarms (Fig. 1). It is lightweight, does not require prior training, and is adaptable to various camera models and UAV configurations. The approach supports user interaction and validation, producing high-quality masks that effectively exclude visible UAV structures, thereby facilitating faster deployment while improving the safety and reliability of swarm operations.

The proposed approach is open-source and available at https://github.com/ctu-mrs/uvdar_core/blob/master/scripts/extract_mask.py.

2 STATE OF THE ART

To the best of our knowledge, automatic mask generation for extracting the UAV's own frame has not been addressed in the current literature. However, object detection algorithms have been employed to identify and mask unwanted elements in UAV imagery. For example, in (Pargieła, 2022), the authors used YOLOv3 to detect and mask vehicles in images acquired from UAVs, thereby enhancing the quality of digital elevation models and orthophotos.

CNNs have been widely adopted for semantic segmentation. These models assign a class label to each pixel, facilitating a detailed understanding of the scene. For example, in (Soltani et al., 2024), the authors demonstrated the efficacy of CNN-based segmentation of UAV orthoimagery for the classification of plant species. They used a two-step approach. First, they trained a CNN-based image classification model using simple labels and applied it in a moving-window fashion over UAV orthoimagery to create segmentation masks. In the second step, these segmentation masks were used to train state-of-the-art CNN-based image segmentation models with an encoder-decoder structure.

However, training such models requires labeled datasets, which are often scarce in UAV applications due to the labour-intensive nature of manual annotation. To mitigate this, researchers have explored the use of synthetic data. In (Hinniger and Rüter, 2023), the authors generated synthetic training data using game engines to simulate UAV perspectives, thereby augmenting real datasets and improving model performance. The recent work of (Maxey et al., 2024) introduces a simulation platform for UAV perception tasks using Neural Radiance Fields (NeRF) (Mildenhall et al., 2021). While its focus is on generating synthetic datasets for tasks such as obstacle avoidance and navigation, a key contribution is the explicit 3D modeling of the UAV structure and camera perspectives, which inherently involves managing occlusions and self-visibility. Mask-guided techniques have also been explored in generative tasks. For instance, in (Zhou et al., 2024), the authors used semantic masks to control UAV-based scene synthesis, showcasing the broader applicability of mask-based conditioning in UAV imagery.

Recent advancements have seen the integration of transformer architectures into UAV image segmentation. Models such as the Aerial Referring Transformer (AeroReformer) (Li and Zhao, 2025) and the Pseudo Multi-Perspective Transformer (PPTFormer) (Ji et al., 2024) have been proposed to address the unique challenges posed by UAV imagery, such as varying perspectives and scales. AeroReformer leverages vision-language cross-attention mechanisms to enhance segmentation accuracy, while PPTFormer introduces pseudo-multi-perspective learning to simulate diverse UAV viewpoints.

2.1 Contributions

While existing works primarily focus on the detection and segmentation of external objects, we address the less-explored task of automatically detecting and masking the UAV frame within onboard imagery. Accurate extraction of the UAV frame enables more reliable downstream tasks such as object detection, tracking, and relative localization by preventing false detections on the UAV's own structure. The main contributions of our work are as follows:

1. We propose a novel method for the automatic detection and masking of the UAV frame in onboard UAV imagery.

2. Our approach incorporates camera-specific heuristics and leverages spatial relationships via a k-d tree structure, resulting in a lightweight and adaptable solution that generalizes across different UAV platforms and camera configurations.

3. The proposed user-interactive mask generation pipeline does not require prior training or labeled datasets, enabling rapid deployment and customization without the need for supervised learning.

4. Automation of this process facilitates the efficient preparation and deployment of large-scale UAV swarms, independent of the UAV platform.

3 METHOD

The method presented in this paper provides a user-interactive mask generation pipeline that extracts the UAV frame from camera images, which both speeds up the deployment of UAV swarms and improves their safety. The goal is to automatically detect and mask out the UAV frame without any manual editing. Our method is not limited to a specific system; it applies to any system that requires masks of reflective (shining) parts, which usually belong to the UAV carrying the camera.

3.1 Image Acquisition

To achieve consistent results, the exposure time and gain settings are fixed, although they can easily be adjusted. A dark floor and a bright UAV frame are beneficial but not strictly required. Furthermore, a dominant light source should be placed above the UAV to ensure that the UAV frame is visible.

3.2 Automated Mask Generation

Automated mask generation is the core of the proposed method. If the input image is not grayscale, it is first converted to grayscale. The resulting grayscale image is denoted by

$$I : \{0, \dots, H-1\} \times \{0, \dots, W-1\} \to \{0, \dots, 255\}, \tag{1}$$

with $H$ denoting the height and $W$ the width of the image, where $I(y, x)$ denotes the intensity of the pixel at row $y \in \{0, \dots, H-1\}$ and column $x \in \{0, \dots, W-1\}$. Each candidate pixel is checked against camera-specific constraints to suppress irrelevant regions and reduce false-positive detections. These heuristics depend on the physical location of the camera on the UAV (e.g., left, right, or rear) and aim to eliminate known areas with ambient reflections or artifacts.

To reduce false positives due to ambient reflections, we define a camera-specific geometric heuristic:

$$H_{\mathrm{cam}} \subseteq \{0, \dots, H-1\} \times \{0, \dots, W-1\}, \tag{2}$$

which encodes regions of the image known to produce spurious reflections. We define the filtered domain as:

$$D' = \left(\{0, \dots, H-1\} \times \{0, \dots, W-1\}\right) \setminus H_{\mathrm{cam}}, \tag{3}$$

and restrict the image I to this domain:

$$I' = I|_{D'}. \tag{4}$$
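A minimal NumPy sketch of the grayscale conversion and of equations (2) to (4) follows. It assumes, purely for illustration, that $H_{\mathrm{cam}}$ is supplied as a list of axis-aligned rectangles; the paper does not specify how the camera-specific heuristics are encoded, and the function names and rectangle format below are hypothetical. Rather than cropping $I$, the sketch represents $D'$ as a boolean array so that later steps can simply ignore pixels outside it.

```python
import numpy as np


def to_grayscale(image: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 BGR image to uint8 grayscale; 2-D input is passed through."""
    if image.ndim == 2:
        return image.astype(np.uint8)
    # ITU-R BT.601 luma weights in B, G, R order (the same weights OpenCV uses).
    weights = np.array([0.114, 0.587, 0.299])
    gray = image[..., :3].astype(np.float64) @ weights
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def filtered_domain(shape, excluded_boxes) -> np.ndarray:
    """Boolean array that is True on D' (the pixel grid minus H_cam), eq. (3).

    excluded_boxes is a hypothetical encoding of H_cam as half-open
    (y0, y1, x0, x1) rectangles known to produce spurious reflections.
    """
    height, width = shape
    valid = np.ones((height, width), dtype=bool)
    for y0, y1, x0, x1 in excluded_boxes:
        valid[y0:y1, x0:x1] = False
    return valid


def restrict(gray: np.ndarray, valid: np.ndarray) -> np.ndarray:
    """I' = I restricted to D', eq. (4); pixels outside D' are zeroed so they
    can never exceed a non-negative threshold sigma."""
    return np.where(valid, gray, 0).astype(gray.dtype)
```

Zeroing excluded pixels instead of dropping them keeps the image shape intact, which makes the later thresholding and rasterization steps simple array operations.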

Our mask generation algorithm is therefore applied only to $I'$. Pixels in $I'$ whose values exceed a threshold $\sigma$ are considered potential regions of the UAV frame and are collected in the set

$$P = \{(y, x) \in D' \mid I'(y, x) > \sigma\}. \tag{5}$$

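Furthermore, the condition

$$\eta < |P| \tag{6}$$

must be satisfied, where $\eta$ denotes the minimum number of detected points in the camera image. Images that violate this condition are considered too dark and are discarded, which prevents the creation of potentially faulty masks.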
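Equations (5) and (6) amount to a threshold followed by a point-count check. The sketch below, a hypothetical helper rather than the released code, returns $P$ as an array of $(y, x)$ coordinates, or `None` when the image is too dark; the defaults are the values from Table 1 ($\sigma = 120$, $\eta = 20$).

```python
import numpy as np


def candidate_points(gray_restricted: np.ndarray, valid: np.ndarray,
                     sigma: int = 120, eta: int = 20):
    """Return P (eq. 5) as an (N, 2) int array of (y, x) pixels, or None if eq. (6) fails."""
    # Eq. (5): keep pixels of D' whose intensity exceeds sigma.
    ys, xs = np.nonzero((gray_restricted > sigma) & valid)
    points = np.column_stack((ys, xs))
    # Eq. (6): require eta < |P|; otherwise the image is treated as too dark.
    if len(points) <= eta:
        return None
    return points
```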

To improve computational efficiency, the points in $P$ are stored in a k-d tree $T$ with leaf size $l$. The tree $T$ is then used to find the $k$ nearest neighbors of each point in $P$. For each point $p \in P$, the Euclidean distance to each of its $k$ nearest neighbors $q \in N_k(p)$ is computed as

$$d(p, q) = \lVert p - q \rVert_2, \tag{7}$$

where $N_k(p)$ denotes the set of $k$ nearest neighbors of $p$. For each $q \in N_k(p)$, if

$$d(p, q) < \tau, \tag{8}$$

then the pixels $q$ and $p$ are considered to belong to the same mask region, and $q$ is added to the mask set $M_p$ associated with $p$. The collection of all such mask sets is denoted by

$$M = \{M_p \mid p \in P\}. \tag{9}$$

The regions covered by the sets in $M$ are filled with black.
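The neighbour grouping of equations (7) to (9) can be sketched with SciPy's `cKDTree`, used here as a stand-in for whichever k-d tree implementation the authors use; its `leafsize` argument plays the role of $l$. Querying a k-d tree with its own points returns each point as its own nearest neighbour at distance 0, so the sketch requests $k + 1$ neighbours and thereby includes $p$ in $M_p$; that $p$ belongs to $M_p$ is an assumption. The defaults follow Table 1 ($k = 20$, $\tau = 50$, $l = 10$).

```python
import numpy as np
from scipy.spatial import cKDTree


def neighbour_groups(points: np.ndarray, k: int = 20, tau: float = 50.0,
                     leaf_size: int = 10) -> list:
    """Return one group M_p per point p in P (eqs. 7-9).

    points: (N, 2) array of (y, x) pixel coordinates (the set P).
    Each group holds p and those of its k nearest neighbours q with d(p, q) < tau.
    """
    tree = cKDTree(points, leafsize=leaf_size)
    # Ask for k + 1 neighbours because the first hit is p itself at distance 0.
    n_query = min(k + 1, len(points))
    dists, idx = tree.query(points, k=n_query)  # Euclidean distances, eq. (7)
    # With n_query == 1 SciPy returns 1-D arrays; force shape (N, n_query).
    dists = np.asarray(dists).reshape(len(points), -1)
    idx = np.asarray(idx).reshape(len(points), -1)
    groups = []
    for row_d, row_i in zip(dists, idx):
        keep = row_i[row_d < tau]  # eq. (8): neighbours closer than tau
        groups.append(points[keep])
    return groups
```

Keeping one group per point mirrors the definition of $M$ literally; for large $|P|$ this is memory-heavy, and the paper does not say whether the authors deduplicate groups.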

Figure 2: The UVDAR system (UV camera and UV LEDs labelled) attached to a UAV and used in the experiments (Licea et al., 2023).

To approximate spatial groupings of bright points, each set $M_p$ is used to construct a polygon. The points in $M_p$ are not explicitly ordered, which may lead to self-intersecting polygons. These polygons are rasterized to produce a binary mask in which each region is filled with black. Overlaps between different sets $M_p$ are not explicitly handled; intersecting areas may therefore be filled multiple times, but because every fill writes the same value, the result remains a binary mask. Once all regions have been filled, the contours are extracted from the resulting binary mask. These contours are then drawn on the same mask image with a specified line thickness $\kappa$, reinforcing the region boundaries. The rasterized and contoured region obtained from $M_p$ is denoted by $\mathrm{poly}(M_p)$, and the final binary mask image $I_{\mathrm{masked}}$, which is black on the union of these regions, is defined as:

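$$I_{\mathrm{masked}}(y, x) = \begin{cases} \text{black}, & \text{if } (y, x) \in \bigcup_{p \in P} \mathrm{poly}(M_p), \\ \text{white}, & \text{otherwise}. \end{cases} \tag{10}$$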

3.3 Interactive Mask Generation

Since validating the masks after creation is essential, a lightweight user interface is provided to support real-time inspection. The user monitors the live stream from the camera and, when mask creation is activated, the generated binary mask $I_{\mathrm{masked}}$, the original image $I$, and their overlay $I_{\mathrm{overlay}}$ are displayed. The overlay image $I_{\mathrm{overlay}}$ is computed as a visual combination of the original image and the binary mask:

$$I_{\mathrm{overlay}} = \mathrm{overlay}(I, I_{\mathrm{masked}}), \tag{11}$$

where $\mathrm{overlay}$ denotes a pixel-wise operation that highlights masked regions on top of the original image for visualization. This allows the user to immediately assess whether the mask is satisfactory or whether it should be discarded and regenerated.
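The text leaves the exact overlay operation of equation (11) open. One plausible choice, sketched below with NumPy, is alpha blending a colour tint onto the pixels that the mask marks as black; the red tint and $\alpha = 0.5$ are arbitrary assumptions, not values from the paper.

```python
import numpy as np


def overlay(gray: np.ndarray, mask: np.ndarray, alpha: float = 0.5,
            tint=(0, 0, 255)) -> np.ndarray:
    """Return a BGR image in which masked (black) pixels of `mask` are tinted over `gray`."""
    bgr = np.repeat(gray[:, :, None], 3, axis=2).astype(np.float32)
    masked = mask == 0
    # Blend only the masked pixels; unmasked pixels keep their original intensity.
    bgr[masked] = (1.0 - alpha) * bgr[masked] + alpha * np.asarray(tint, dtype=np.float32)
    return np.clip(bgr, 0, 255).astype(np.uint8)
```

Blending rather than painting the masked area solid keeps the underlying frame visible, so the user can judge whether the mask boundary actually follows the structure.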

4 EVALUATION

To evaluate our proposed mask generation system,

we used the UltraViolet Direction And Ranging

(UVDAR) system, a mutual relative localization

framework for UAV swarms that operates in the Ul-

tra Violet (UV) spectrum (Fig. 2) (Walter et al.,

2019; Horyna et al., 2024; Walter et al., 2018). In

(a) (b)

Figure 3: (a) Side view of the UAV frame, and (b) top view

of the UAV frame used in the experiments Two cameras

with approximately a FOV of 180 degrees.

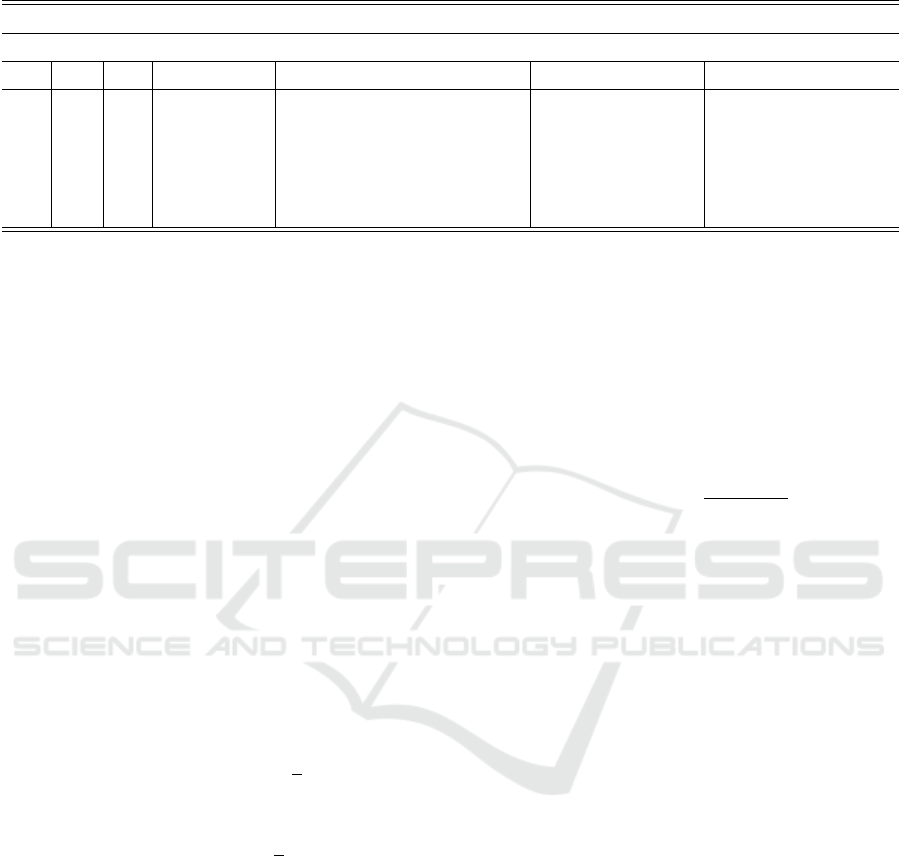

Table 1: Parameter settings used during the experiments.

Parameter Value Description

σ 120 Binarization threshold

η 20 Min. number of detected points

l 10 Leaf size of T

κ 50 Border Thickness

k 20 nearest neighbors

τ 50 max. distance between neighbors

the UVDAR system, cameras are equipped with UV

bandpass filters (Walter et al., 2018), which signifi-

cantly attenuate visible light and allow only UV light

to pass through. The swarm members are equipped

with UV-Light Emitting Diodes (LEDs) that blink in

predefined sequences, enabling the unique identifica-

tion of each UAV within the swarm. However, the

UV light emitted by these LEDs can reflect off the

structure of the observing UAV, potentially causing

false detections in the camera image. As a result, ac-

curately masking the own structure of the UAV be-

comes a critical requirement for reliable operation of

the UVDAR system. In typical indoor environments

such as offices–where ambient UV illumination is

minimal–the captured images appear predominantly

dark. Therefore, to ensure proper scene visibility, the

user was holding a strong UV light source above the

observing UAV, as described in Section 3.1, thereby

illuminating the environment for effective mask gen-

eration (see Figs. 4a and 4b). Grayscale cameras op-

erating under adequate ambient lighting conditions do

not require such additional UV illumination.

The evaluation was conducted on two UAV frames

using different exposure settings, as detailed in Tab. 2.

The high rotational speed of the propellers prevents

them from generating noticeable reflections in the

camera image. Therefore, they were removed during

our mask-generation process. Fig. 3 shows a UAV

ICINCO 2025 - 22nd International Conference on Informatics in Control, Automation and Robotics

246

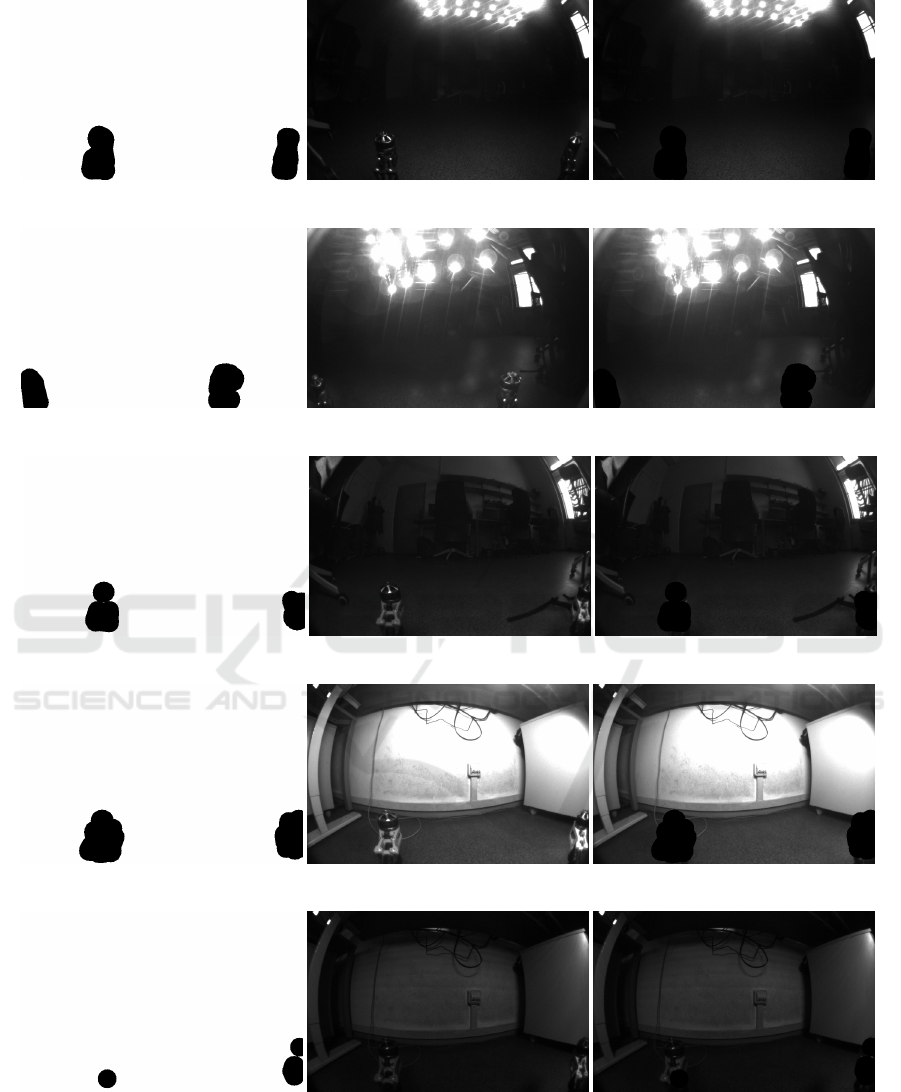

(a) Exp. 1: Sample from UAV1, right camera, with exposure setting: 5000 µs.

(b) Exp. 2: Sample from UAV1, left camera, with exposure setting: 5000 µs.

(c) Exp. 3: Sample from UAV2 right camera, with exposure setting: 25000 µs.

(d) Exp. 4: Sample from UAV2, right camera, with exposure setting: 5000 µs.

(e) Exp. 5: Sample from UAV2, right camera, with exposure setting: 5000 µs.

Figure 4: Qualitative analysis of the generated segmentation masks from different experimental trials. Left to right: generated

mask (I

masked

), input image (I), and overlay image (I

overlay

). Figures 4a–4d demonstrate successful mask extraction, while

Fig.4e shows a failure case under identical conditions to Fig.4d, caused by the removal of the external light source.

Towards Scalable and Fast UAV Deployment

247

Table 2: Average Area, Perimeter, and Compactness with standard deviation for each camera (left or right), exposure time

(µs), and UAV for each mask in the image (left and right).

Area [px

2

] Perimeter [px] Compactness

left right left right left right

Exp. UAV Cam Exposure [µs] µ ± σ µ ± σ µ ± σ µ ± σ µ ± σ µ ± σ

1 1 right 5000 8199 ± 619 11477 ± 1042 400 ± 29 471 ± 30 19.6 ± 2.3 19.3 ± 0.9

2 1 left 5000 5901 ± 438 8931 ± 568 317 ± 16 401 ± 13 17.1 ± 0.8 18.0 ± 1.2

3 2 right 25000 11268 ± 2808 7013 ± 1019 458 ± 74 342 ± 26 18.9 ± 2.1 16.8 ± 0.5

4

2 right 2500 9147 ± 3172 5479 ± 1567 392 ± 88 293 ± 49 17.4 ± 1.6 16.0 ± 0.6

5 2 right 5000 7486 ± 2206 6992 ± 1943 393 ± 69 383 ± 88 21.8 ± 4.4 21.4 ± 5.0

frame used for evaluation, equipped with two cam-

eras oriented 140 degrees apart, each with a FOV of

180 degrees. In total, we tested our approach on three

BlueFOX-USB cameras mounted on the two UAVs.

The evaluation is divided into two parts: quantitative

and qualitative analysis.

The quantitative analysis focuses on the consistency and variability of the segmentation masks generated across different cameras and exposure settings. This includes analyzing geometric properties such as the area, perimeter, and compactness of the segmented regions. Qualitative analysis, on the other hand, evaluates the visual quality of the masks and their ability to accurately exclude the UAV frame.

In the context of the UVDAR system, a horizontal cut is applied to each frame to separate the upper and lower image regions. This is necessary because the upper part of the image may contain parts of the light source illuminating the scene, which should be excluded from the analysis. We define the top and bottom regions of the image as follows:

$$I_{\mathrm{top}}(y, x) = I(y, x), \quad (y, x) \in D', \; 0 \le y \le \tfrac{3}{4}H - 1, \tag{12}$$

$$I_{\mathrm{bottom}}(y, x) = I(y, x), \quad (y, x) \in D', \; \tfrac{3}{4}H \le y \le H - 1. \tag{13}$$
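The horizontal cut of equations (12) and (13) is a plain row split at $\tfrac{3}{4}H$. The hypothetical helper below sketches it under two assumptions not stated in the text: pixels outside $D'$ are zeroed, and $\tfrac{3}{4}H$ is rounded down when $H$ is not divisible by 4.

```python
import numpy as np


def split_top_bottom(gray: np.ndarray, valid: np.ndarray):
    """Split I into I_top (rows 0 .. 3H/4 - 1) and I_bottom (rows 3H/4 .. H - 1), eqs. (12)-(13).

    valid marks D'; pixels outside it are zeroed. Rounding 3H/4 down is an assumption.
    """
    cut = (3 * gray.shape[0]) // 4
    restricted = np.where(valid, gray, 0).astype(gray.dtype)
    return restricted[:cut], restricted[cut:]
```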

The segmentation process is designed to run offboard, on a computer with graphical output capabilities, since such an interactive setup is usually infeasible or cumbersome on lightweight onboard computers such as the NVIDIA Jetson or Intel NUC platforms commonly used on UAVs.

4.1 Mask Shape Analysis via Compactness Metrics

To quantitatively assess the consistency and quality of the generated segmentation masks, we analyzed their geometric properties under varying exposure settings, cameras, and UAVs. In total, we evaluated 70 masks generated under the conditions listed in Table 2.

We applied connected component analysis to each binary mask after filtering out the UAV frame, identifying valid contiguous regions. For each component, we computed three key shape descriptors: area, perimeter, and compactness, where compactness is defined as:

$$\text{compactness} = \frac{\text{perimeter}^2}{\text{area}}. \tag{14}$$

This metric captures the shape regularity of a component: lower values indicate more compact, circular shapes (in the continuous case, the minimum value $4\pi \approx 12.57$ is attained by a disk), whereas higher values reflect more elongated or irregular regions.
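The shape descriptors and the per-category statistics can be sketched with NumPy and SciPy as below. The paper does not state how the perimeter is measured, so the sketch assumes a crack perimeter (the number of pixel edges separating a component from its surroundings) and 4-connected components; a contour-based length such as OpenCV's `arcLength` would give smaller perimeters and hence smaller compactness values for the same shape. As a sanity check, a 10 × 10 px square has area 100 and crack perimeter 40, so its compactness is 40²/100 = 16, and for Exp. 1 (left) in Table 2 the mean values give 400²/8199 ≈ 19.5, close to the reported 19.6 (a mean of per-mask ratios need not equal the ratio of the means). Using the sample standard deviation (`ddof=1`) is likewise an assumption.

```python
import numpy as np
from scipy import ndimage


def component_shape_stats(mask: np.ndarray) -> list:
    """Area, perimeter and compactness (eq. 14) of each black connected component of `mask`."""
    labels, n_components = ndimage.label(mask == 0)  # 4-connectivity by default
    stats = []
    for label in range(1, n_components + 1):
        component = np.pad(labels == label, 1)  # pad so border pixels get an outer edge
        area = int(component.sum())
        # Crack perimeter: number of pixel edges separating the component from the rest.
        perimeter = int(np.count_nonzero(component[1:, :] != component[:-1, :])
                        + np.count_nonzero(component[:, 1:] != component[:, :-1]))
        stats.append({"area": area, "perimeter": perimeter,
                      "compactness": perimeter ** 2 / area})
    return stats


def summarize(values):
    """Mean and sample standard deviation of one descriptor over a category."""
    arr = np.asarray(values, dtype=float)
    return arr.mean(), arr.std(ddof=1)
```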

Masks were categorized by UAV, camera orientation (left or right), and exposure time. Since each UAV included masked regions for both the left and right arms, we evaluated these regions separately. For each category, we computed the mean and standard deviation of the area, perimeter, and compactness over all valid components, as shown in Table 2.

We observed that masks generated under low exposure settings exhibited higher standard deviations, particularly in Experiment 4 (UAV 2, right camera, 2500 µs exposure time). This increased variability indicates a greater sensitivity to lighting conditions, where reduced exposure results in noisier and less consistent segmentations. In contrast, masks captured with exposure times of 5000 µs and 25000 µs showed lower variation and more stable compactness values, suggesting improved reliability in mask generation. Among these, an exposure time of 5000 µs provides a favorable balance between noise suppression and segmentation quality.

4.2 Qualitative Analysis

The qualitative analysis focused on evaluating the visual quality of the generated segmentation masks.


Figure 4 presents five examples of mask images ($I_{\mathrm{masked}}$) under varying exposure settings, environmental conditions (background), and camera configurations, along with the corresponding input images ($I$) and overlay images ($I_{\mathrm{overlay}}$). With adequate illumination (Figs. 4a–4d), our approach successfully extracted the UAV frame in all tested scenarios. As illustrated in Fig. 4e, however, mask generation failed in the absence of the artificial light source, highlighting the sensitivity of the method to scene illumination. As shown in Fig. 4d, the algorithm successfully extracted the UAV frame using the same camera and setup, the only difference being the positioning of the light source. This underscores the importance of maintaining consistent and adequate lighting conditions during mask generation to ensure reliable results.

4.3 Limitations

The generated segmentation masks are generally of good quality, with relatively few false positives and false negatives, although some noise or artifacts may still be present. To further validate its robustness, the algorithm should be evaluated across a wider range of frame types and color variations. In low-light conditions, detection of the UAV frame becomes more challenging, which can lead to missed detections (see Fig. 4e).

4.4 Future Work

The proposed algorithm successfully generated masks for the UAV frame in all adequately illuminated scenarios evaluated. For future work, we plan to explore the use of an unsupervised Vision Transformer (ViT) to learn the structural characteristics of different UAV frames and enable automatic mask generation. Specifically, we aim to employ a self-supervised learning framework based on a student-teacher architecture, which can learn robust representations of the frame structure without the need for labeled data. This would further improve the adaptability and scalability of the masking process across varying UAV configurations.

5 CONCLUSIONS

This work introduced a novel method for automatically detecting and masking the frame of a UAV in onboard camera images. By excluding the UAV's own structure from these images, the method reduces the risk of misclassification, preventing parts of the UAV from being interpreted as obstacles or other agents in multi-UAV systems. This automation significantly enhances the scalability and efficiency of swarm deployment, addressing a task that is otherwise labour-intensive and does not scale when done manually. Leveraging camera-specific geometric heuristics and a k-d tree structure, the proposed algorithm achieves accurate and efficient frame detection across varying UAV designs and camera configurations. Quantitative and qualitative results on two UAV platforms with three cameras demonstrate the adaptability and robustness of the method under various operating conditions, provided that the scene is adequately illuminated. Overall, the proposed approach improves the safety of swarm navigation and onboard vision systems, while also streamlining the preparation and deployment of multi-UAV systems.

ACKNOWLEDGEMENTS

This work was funded by CTU grant no. SGS23/177/OHK3/3T/13, by the Czech Science Foundation (GAČR) under research project no. 23-07517S, and by the European Union under the project Robotics and advanced industrial production (reg. no. CZ.02.01.01/00/22_008/0004590).

REFERENCES

Bartolomei, L., Teixeira, L., and Chli, M. (2023). Fast multi-UAV decentralized exploration of forests. IEEE Robotics and Automation Letters, 8(9):5576–5583.

Chen, S., Yin, D., and Niu, Y. (2022). A survey of robot swarms' relative localization method. Sensors, 22(12).

Chung, S.-J., Paranjape, A. A., Dames, P., Shen, S., and Kumar, V. (2018). A survey on aerial swarm robotics. IEEE Transactions on Robotics, 34(4):837–855.

Funahashi, I., Yamashita, N., Yoshida, T., and Ikehara, M. (2021). High reflection removal using CNN with detection and estimation. In 2021 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), pages 1381–1385.

Hinniger, C. and Rüter, J. (2023). Synthetic training data for semantic segmentation of the environment from UAV perspective. Aerospace, 10(7).

Horyna, J., Krátký, V., Pritzl, V., Báča, T., Ferrante, E., and Saska, M. (2024). Fast swarming of UAVs in GNSS-denied feature-poor environments without explicit communication. IEEE Robotics and Automation Letters, 9(6):5284–5291.

Ji, D., Jin, W., Lu, H., and Zhao, F. (2024). PPTFormer: Pseudo multi-perspective transformer for UAV segmentation.

Li, H., Cai, Y., Hong, J., Xu, P., Cheng, H., Zhu, X., Hu, B., Hao, Z., and Fan, Z. (2023). VG-Swarm: A vision-based gene regulation network for UAVs swarm behavior emergence. IEEE Robotics and Automation Letters, 8(3):1175–1182.

Li, R. and Zhao, X. (2025). AeroReformer: Aerial referring transformer for UAV-based referring image segmentation.

Licea, D. B., Walter, V., Ghogho, M., and Saska, M. (2023). Optical communication-based identification for multi-UAV systems: theory and practice.

Maxey, C., Choi, J., Lee, H., Manocha, D., and Kwon, H. (2024). UAV-Sim: NeRF-based synthetic data generation for UAV-based perception. In 2024 IEEE International Conference on Robotics and Automation (ICRA), pages 5323–5329.

Mildenhall, B., Srinivasan, P. P., Tancik, M., Barron, J. T., Ramamoorthi, R., and Ng, R. (2021). NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM, 65(1):99–106.

Oh, X., Lim, R., Foong, S., and Tan, U.-X. (2023). Marker-based localization system using an active PTZ camera and CNN-based ellipse detection. IEEE/ASME Transactions on Mechatronics, 28(4):1984–1992.

Pargieła, K. (2022). Vehicle detection and masking in UAV images using YOLO to improve photogrammetric products. Reports on Geodesy and Geoinformatics, 114:15–23.

Schilling, F., Schiano, F., and Floreano, D. (2021). Vision-based drone flocking in outdoor environments. IEEE Robotics and Automation Letters, 6(2):2954–2961.

Soltani, S., Ferlian, O., Eisenhauer, N., Feilhauer, H., and Kattenborn, T. (2024). From simple labels to semantic image segmentation: leveraging citizen science plant photographs for tree species mapping in drone imagery. Biogeosciences, 21:2909–2935.

Walter, V., Saska, M., and Franchi, A. (2018). Fast mutual relative localization of UAVs using ultraviolet LED markers. In 2018 International Conference on Unmanned Aircraft Systems (ICUAS 2018).

Walter, V., Staub, N., Franchi, A., and Saska, M. (2019). UVDAR system for visual relative localization with application to leader–follower formations of multirotor UAVs. IEEE Robotics and Automation Letters, 4(3):2637–2644.

Xu, H., Wang, L., Zhang, Y., Qiu, K., and Shen, S. (2020). Decentralized visual-inertial-UWB fusion for relative state estimation of aerial swarm. In 2020 IEEE International Conference on Robotics and Automation (ICRA), pages 8776–8782.

Zhao, J., Li, Q., Chi, P., and Wang, Y. (2025). Active vision-based UAV swarm with limited FOV flocking in communication-denied scenarios. IEEE Transactions on Instrumentation and Measurement, pages 1–1.

Zhou, W., Zheng, N., and Wang, C. (2024). Synthesizing realistic traffic events from UAV perspectives: A mask-guided generative approach based on style-modulated transformer. IEEE Transactions on Intelligent Vehicles, pages 1–16.

Zhou, X., Wen, X., Wang, Z., Gao, Y., Li, H., Wang, Q., Yang, T., Lu, H., Cao, Y., Xu, C., and Gao, F. (2022). Swarm of micro flying robots in the wild. Science Robotics, 7(66):eabm5954.
