A Method to Construct Dynamic, Adaptable Maturity Models for

Digital Transformation

Jan M. Pawlowski

Institute Computer Science, Hochschule Ruhr West University of Applied Sciences, Lützowstr. 5, 46236 Bottrop, Germany

Keywords: Dynamic Adaptable Maturity Model Method, Maturity Model Development, Digital Transformation.

Abstract: Maturity models are a well-established method to assess and improve Digital Transformation processes in

organizations. However, many models lack theoretical foundations and specificity for a branch or sector. The

Dynamic Adaptable Maturity Model development method (DA3M) provides the steps to develop

scientifically sound, specific and relevant maturity models to be used to improve organizational performance.

The method was successfully validated in different branches. In this paper, the method is used to develop a

maturity model with a focus on human aspects and to create an adaptation for Higher Education Institutions.

1 INTRODUCTION

Digital Transformation (DT) is crucial in the age of

digitalization with emerging trends like Artificial

Intelligence (AI), as it enables organizations to

leverage advanced technologies to enhance

efficiency, innovation, and customer experience.

However, organizations face several challenges in

this process. One significant challenge is the

integration of emerging technologies which can be

complex and resource-intensive (Vial, 2019).

Measuring the success of digital transformation

efforts can be challenging, making it difficult to

justify the expenditure (Kane et al., 2015). For this

purpose, maturity models are an important and well-

established method to assess the status of digital

transformation in organizations by providing a

structured framework to evaluate current capabilities

and identify areas for growth (Thordsen & Bick,

2023). These models help organizations to measure

their digital maturity, in some cases compared to

industry standards (Proença & Borbinha, 2016).

Some models also offer a roadmap for digital

transformation, guiding organizations through

various stages of development (Berghaus & Back,

2016). By identifying gaps in digital capabilities,

maturity models enable organizations to prioritize

investments and allocate resources effectively

(Chanias & Hess, 2016). Furthermore, they assist in

aligning digital transformation initiatives with

strategic business goals, ensuring coherence and

focus (Matt, Hess, & Benlian, 2015). Maturity models

also promote continuous improvement by providing

metrics to measure progress and success (Westerman,

Bonnet, & McAfee, 2014). Additionally, they help in

managing change by fostering a culture of innovation

and adaptability (Kane et al., 2017). Finally, maturity

models support risk management by identifying

potential challenges and mitigating risks associated

with digital transformation (Vial, 2019). However,

maturity models are often criticized – Thordsen &

Bick (2023) identify main challenges and problems of

maturity models, amongst them the lack of empirical

validations, neglecting the dynamic nature of digital

transformation maturity and the complexity of

integration into organizations’ operations.

Based on these challenges, the aim is to develop

a method to construct maturity models which are 1)

theoretically sound, 2) based on empirical evidence,

3) adaptable to specific domains, and 4) dynamic to

emerging trends. The starting point is an analysis of a

variety of maturity models to identify their theoretical

foundation and construction method. Based on this

analysis, a new method for maturity model

construction was developed and applied in different

scenarios.

2 BACKGROUND: DEVELOPING

MATURITY MODELS

As a starting point, I have examined frameworks that

enable organizations to assess their current status in

the digital transformation process. The focus is the

Pawlowski, J. M.

A Method to Construct Dynamic, Adaptable Maturity Models for Digital Transformation.

DOI: 10.5220/0013708000004000

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2025) - Volume 2: KEOD and KMIS, pages

385-392

ISBN: 978-989-758-769-6; ISSN: 2184-3228

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.


use of maturity models (Wagner et al., 2023; Hein-

Pensel et al., 2023) which aim to enhance

organizational performance (Thordsen & Bick,

2023).

Numerous maturity models have been scientifically developed and evaluated (Aras & Büyüközkan, 2023; Ochoa-Urrego & Peña-Reyes, 2021; Proença & Borbinha, 2016; Santos-Neto & Costa, 2019). These models vary in terms of abstraction level, methodology, and scope (Williams et al., 2019). The scope of a model can be generic, such as the capability maturity model for digital transformation (Gökalp & Martinez, 2022), while other models focus on specific industries such as the IT industry (Gollhardt et al, 2020; Proença & Borbinha, 2016). Further models focus on specific aspects of DT such as strategy, value creation, structural changes and barriers (Vial, 2019), or on topics like participation and inclusion (Pawlowski et al, 2025).

Pöppelbuss et al (2011) have analyzed the theoretical

foundation of maturity models in the Information

Systems domain, finding that about half of the models

have a focus on conceptual work, in contrast to

empirical evidence. Also, there is no well-established method for constructing maturity models. Several reviews (Pereira & Serrano, 2020; Wendler,

2012) identified a broad variety of methods with a

focus on (systematic) literature reviews but also

constructive methods like Design Science Research

including experts in the field. Very few methods are

based on community recognition and empirical

evidence (Pereira & Serrano, 2020). Finally, the

evaluation methods of maturity models include a

wide variety of methods (Helgesson et al, 2012) such

as qualitative expert interviews (Salah et al, 2014),

case studies and surveys (Wendler, 2012).

Based on the methodological weaknesses, several

meta-methods for maturity model development have

been developed (Lasrado et al, 2015), defining the generic steps of model development from scope / problem definition to evaluation and

improvement of the model.

As a summary, it can be stated that an enormous

number of maturity models for digital transformation

exist. However, the successful use of these models is

not ensured due to a lack of theoretical foundation and

methodological weaknesses. Therefore, it is

necessary to develop a methodology for model

development including adaptation and continuous

improvement.

3 THE DYNAMIC, ADAPTABLE

MATURITY MODEL METHOD

(DA3M)

In the following, I will describe the main steps of the

method construction leading to the Dynamic

Adaptable Maturity Model Method (DA3M). As a

kernel theory, I use the (lifecycle) process theory

(Hernes, 2014, van de Ven & Poole, 1995). In

general, process theory is a framework that explains

how entities change and develop over time. The

theory explains that change follows a predetermined

sequence of stages, in this case in a lifecycle. This

corresponds to the main idea of maturity models,

understanding and initiating change in organizations.

A maturity model is used as part of this change

process to assess the current situation and initiate

change, in this case towards digital transformation.

The method itself was constructed using Action Design Research (Sein et al, 2011). Action Design Research (ADR) is a methodology for creating artefacts to

solve complex organizational problems in a scientific,

rigorous way. Additionally, ADR is used as part of

and as a guiding structure for the DA3M method, so

that the model construction phases are also aligned to

a well-established research method. The phases of

ADR are as follows: 1) Problem Formulation (identifying and understanding the problem context), 2) Building, Intervention, and Evaluation (BIE; developing, implementing and evaluating the design artefact), 3) Reflection and Learning (analyzing the outcomes of the BIE phase), 4) Formalization of Learning (documenting and disseminating the findings and contributions of the research).

ADR is in this case used to construct the maturity

model development method as the main artefact.

Secondly, it is used in the development process to

assure that specific maturity models are developed in

a rigorous way.

As a second step, principles were defined for the

maturity model development method based on the

weaknesses and challenges shown in the analysis

above.

• Principle 1 Theoretical Foundation: A

maturity model should have a theoretical

foundation. Process theory can be used as a

generic basis; however, in other contexts or focus areas, other theories might be more appropriate.

• Principle 2 Evidence base: A maturity model (and its contents) should be based on scientific, empirical evidence.

• Principle 3 Adaptability: A model should take

KMIS 2025 - 17th International Conference on Knowledge Management and Information Systems

386

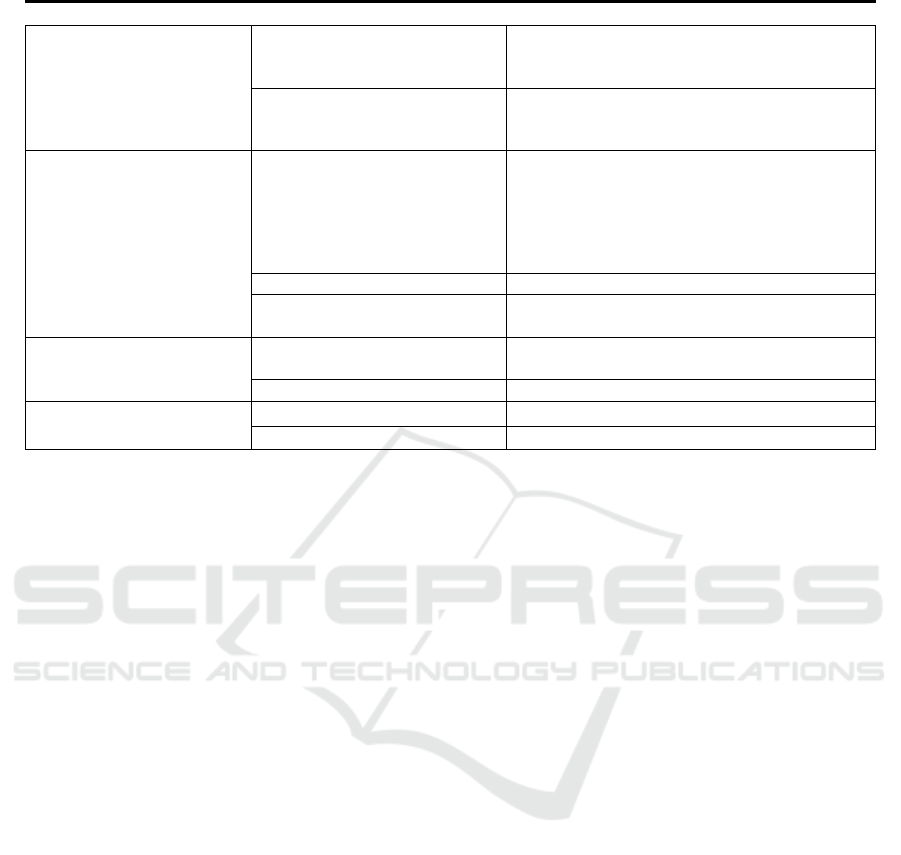

Table 1: Main phases of DA3M.

ADR Phase DA3M Phase Explanation / Activities

Problem Formulation P1.1 Problem and Scope

Definition

Defining the research problem and scope

Determining theoretical foundation

Definin

g

a model develo

p

ment

p

ro

j

ect

P1.2 Comparison of existing

models

Defining comparison criteria

Comparing relevant

existing models

Pro

p

osin

g

classes and factors

Building Intervention,

Evaluation

P2.1 Model development

Select initial factors

Analyze trends

Analyze domain

Identify additional factors

Provide evidence for factors

Collect interventions

P2.2 Model implementation Use model

P2.3 Model evaluation Evaluate model / factors

Su

gg

est im

p

rovements

Reflection and Learning P3.1 Model transfer Adapt model

Use model in different domains

P3.2 Im

p

act evaluation Evaluate im

p

act in different domains

Formalization of Learning P4.1 Model dissemination Communicate and disseminate model

P4.2 Model evolution Continuously improve model

the specific needs, requirements of a sector,

branch or focus issue into account.

• Principle 4 Dynamic model evolution: New

trends and technologies emerge in shorter

periods. A model should be updated and

improved regularly.

• Principle 5 Practical guidance: A maturity

model should not solely assess the current status

but also provide guidance on potential

interventions to initiate changes.

The method is based on the methods of Becker et al

(2009) and Thordsen & Bick (2020). The following

table summarizes the main steps.

•
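The phase structure in Table 1 can also be written down as a small data structure, which may help when a model development project is tracked in tooling. The following Python sketch is illustrative only: the names `SubPhase`, `DA3M_PHASES` and `walk` are assumptions introduced here and are not part of DA3M. The phase codes and activities are transcribed from Table 1, and the optional repetition of the Building, Intervention, Evaluation phase reflects the statement that the second phase can be repeated multiple times.

```python
from dataclasses import dataclass

BIE = "Building, Intervention, Evaluation"


@dataclass(frozen=True)
class SubPhase:
    code: str
    name: str
    activities: tuple[str, ...]


# ADR phase -> DA3M sub-phases, transcribed from Table 1.
DA3M_PHASES: dict[str, tuple[SubPhase, ...]] = {
    "Problem Formulation": (
        SubPhase("P1.1", "Problem and Scope Definition", (
            "Defining the research problem and scope",
            "Determining theoretical foundation",
            "Defining a model development project",
        )),
        SubPhase("P1.2", "Comparison of existing models", (
            "Defining comparison criteria",
            "Comparing relevant existing models",
            "Proposing classes and factors",
        )),
    ),
    BIE: (
        SubPhase("P2.1", "Model development", (
            "Select initial factors",
            "Analyze trends",
            "Analyze domain",
            "Identify additional factors",
            "Provide evidence for factors",
            "Collect interventions",
        )),
        SubPhase("P2.2", "Model implementation", ("Use model",)),
        SubPhase("P2.3", "Model evaluation", (
            "Evaluate model / factors",
            "Suggest improvements",
        )),
    ),
    "Reflection and Learning": (
        SubPhase("P3.1", "Model transfer", (
            "Adapt model",
            "Use model in different domains",
        )),
        SubPhase("P3.2", "Impact evaluation", (
            "Evaluate impact in different domains",
        )),
    ),
    "Formalization of Learning": (
        SubPhase("P4.1", "Model dissemination", (
            "Communicate and disseminate model",
        )),
        SubPhase("P4.2", "Model evolution", ("Continuously improve model",)),
    ),
}


def walk(bie_cycles: int = 1) -> list[str]:
    """Return sub-phase codes in order, repeating the BIE phase bie_cycles times."""
    if bie_cycles < 1:
        raise ValueError("at least one BIE cycle is required")
    order: list[str] = []
    for adr_phase, sub_phases in DA3M_PHASES.items():
        repeats = bie_cycles if adr_phase == BIE else 1
        for _ in range(repeats):
            order.extend(sub.code for sub in sub_phases)
    return order


print(walk(bie_cycles=2))
```

Keeping the activities next to the phase codes makes it straightforward to generate checklists per phase; whether such tooling is useful depends on the project.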

The initial phase in developing a maturity model

involves a comprehensive problem and scope

definition. This phase begins with clearly defining

the research problem and the scope of the study. This includes the description of strategic objectives (e.g.

improving competitiveness, improving employee

competences and motivation), the branch / sector (e.g.

IT industry, building and construction industry) as

well as potential focus topics (e.g. integration of AI

techniques and tools). It is essential to establish a

solid theoretical foundation (e.g. process theory) that

will guide the development of the model.

Additionally, this phase includes outlining a detailed

project plan for the model's development, ensuring

that all necessary steps and resources are identified

and allocated. This also includes the involvement of

stakeholders and experts for the model development.

Following the problem and scope definition, the next

phase involves a systematic comparison of existing

maturity models. This phase starts with defining the

criteria for comparison, which may include factors

such as the models' applicability for the above

selected sector, comprehensiveness, and theoretical

and methodological underpinnings. Relevant existing

models are then compared based on these criteria. The

outcome of this comparison is the proposal of classes

(e.g. strategy, processes, competences; Pawlowski et al, 2025) and factors that will form the basis of the

new maturity model. This structured approach

ensures that the new model is built on a thorough

understanding of existing frameworks.

The second phase in developing a maturity model

focuses on model development, implementation,

and evaluation: The Model Development phase

begins with the selection of initial factors that will

form the foundation of the maturity model. To

incorporate current developments, it is necessary to

analyze current trends for certain technology

developments (e.g. use of AI data analysis tools) and

for the specific domain (e.g. organizational and

technological innovations). It is essential that evidence is provided for each factor to be included in the model (e.g. evidence that the use of AI-based coding tools improves the productivity of a programmer). Only factors supported by such evidence should be incorporated in the model. It is also recommended to collect potential

interventions (“how to improve the maturity level of

a certain factor?”), as it helps in understanding the

practical applications and impacts of the model. Last

but not least, the different levels need to be described.

Here it should be decided if maturity levels are


defined generically (e.g. using a Likert scale from 1 =

no activities to 5 = continuously improved) or

specifically (one statement per factor and maturity

level). It also needs to be decided how to include

weights for specific factors as well as rating

mechanisms. These activities form the initial version

of the maturity model.
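The decisions described in this phase (evidence per factor, generic maturity levels, weights and a rating mechanism) can be illustrated with a short sketch. It is a minimal example under stated assumptions, not the rating mechanism of DA3M: the class `Factor`, the functions `admissible` and `maturity_score`, the example factors and the placeholder evidence strings are all hypothetical, and a weighted arithmetic mean over generic levels from 1 to 5 is only one possible choice.

```python
from dataclasses import dataclass, field


@dataclass
class Factor:
    name: str
    category: str
    weight: float = 1.0
    evidence: list[str] = field(default_factory=list)       # supporting sources
    interventions: list[str] = field(default_factory=list)  # how to improve


def admissible(factors: list[Factor]) -> list[Factor]:
    """Keep only factors for which at least one piece of evidence is recorded."""
    return [f for f in factors if f.evidence]


def maturity_score(factors: list[Factor], ratings: dict[str, int]) -> float:
    """Weighted mean of generic maturity levels (1 = no activities, 5 = continuously improved)."""
    total_weight = sum(f.weight for f in factors)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    for f in factors:
        if not 1 <= ratings[f.name] <= 5:
            raise ValueError(f"rating for {f.name!r} must be between 1 and 5")
    return sum(f.weight * ratings[f.name] for f in factors) / total_weight


# Hypothetical factors; the evidence entries are placeholders, not real references.
candidates = [
    Factor("AI-based coding tools", "Technology", weight=2.0,
           evidence=["placeholder: empirical study"],
           interventions=["placeholder: pilot project"]),
    Factor("Digital competencies", "Competences", weight=1.0,
           evidence=["placeholder: empirical study"]),
    Factor("Unverified idea", "Strategy"),  # no evidence, so it is excluded
]

model = admissible(candidates)
print([f.name for f in model])
print(maturity_score(model, {"AI-based coding tools": 4, "Digital competencies": 1}))  # 3.0
```

If maturity levels are defined specifically (one statement per factor and level), the integer rating would instead index into a per-factor list of level descriptions; the aggregation step stays the same.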

The Model Implementation follows. The (adapted) model is implemented within an

organizational context. This involves using the model

to assess the current maturity level and identify areas

for improvement. The implementation phase is

critical for testing the model's applicability and

effectiveness in real-world scenarios. This phase is

accompanied by the Model Evaluation. This phase

involves the evaluation of the overall model and its

factors (e.g. using expert interviews, or a Delphi

study). This includes assessing the model's quality

(e.g. understandability, comprehensiveness,

applicability), identifying any shortcomings, and

suggesting improvements. The evaluation phase

ensures that the model is continuously refined and updated based on feedback and empirical evidence,

enhancing its reliability and utility. The second phase

can be repeated multiple times – the phases defined

ensure a systematic approach to developing,

implementing, evaluating and improving a maturity

model.

The third phase in developing a maturity model

involves model transfer and impact evaluation.

The Model Transfer focuses on adapting the maturity

model to different contexts and domains. It involves

modifying the model to ensure its relevance and

applicability across various organizational settings.

The adapted model is then used in these different

domains to assess their maturity levels and identify

areas for improvement. This step is crucial for testing

the model's versatility and ensuring it can be

effectively applied in diverse environments.

Following the transfer, the impact of the model is

evaluated in the different domains where it has been

implemented. This involves assessing the outcomes

and benefits of using the model, such as

improvements in organizational processes,

performance, and overall maturity. The evaluation

helps in understanding the model's effectiveness and

provides insights into any further adaptations or

refinements needed to enhance its impact. These

phases ensure that the maturity model is not only

versatile and adaptable but also effective in driving

improvements across various organizational contexts.

The fourth phase in developing a maturity model

involves model dissemination and continuous

improvement. The Model Dissemination focuses on

communicating and disseminating the maturity

model to the target audience such as academic experts

and professionals. It involves publishing the model in

academic journals or at conferences, and sharing it

through various professional networks. The goal is to

ensure that the model reaches a wide audience,

including researchers, practitioners, and

organizations as well as receiving further feedback

and stimulating the academic discourse.

As organizational and technological advancements occur in shorter and shorter cycles, it is necessary to update the model regularly. Model Evolution involves regularly updating and refining the model based on feedback from its users and new developments in the field. Continuous improvement ensures that the model remains relevant and up to date in addressing the evolving needs of

organizations. It also involves monitoring the model's

performance and making necessary adjustments.

As a summary, DA3M defines the main phases of

maturity model development, leading to theoretically

sound, evidence-based, adapted models as a basis for

assessing and improving digital transformation in

organizations.

4 CASE STUDY: USING DA3M

In this section, the use of the DA3M for the

development of the Co-Digitalization Maturity

Model (Pawlowski et al, 2025) and the first

adaptation which was done for the Higher Education

context is outlined. The method was used to design 1)

a participatory maturity model which focuses on

human aspects (such as motivation, competencies,

participation) in digital transformation processes and

2) adaptations for different branches including the

specific needs and characteristics of a branch (here:

Higher Education Institutions).

In a first step (P1.1), the focus of the initial model was set. Based on the initial analysis, it was clear that most maturity models in Digital Transformation focus on technological aspects and neglect human aspects. Therefore, the research scope was defined to incorporate and focus on human aspects in maturity models. As the theoretical foundation, three theories are used for guidance: for the change aspect, the model is based on Process Theory (Hernes, 2014); for the human aspects, Self Determination Theory (Deci et al, 2017) and the Competence-Based View of the Firm (Freiling, 2004) are used to explain the role of

employees in organizations. Finally, an iterative

approach according to Action Design Research (Sein

et al, 2011) is applied to build, use and improve the


model. To validate the model, multiple case studies

(Yin, 2017) were run in different sectors and

domains. In this paper, one case is used to show the

applicability and usage of the model.

The second step (P1.2) started with an initial

comparison of existing models to identify potential

factors. These included general factors for DT but a

focus was put on identification of human factors (e.g.

how are employees trained for new DT

competencies). By this, a first classification and

initial set of factors was determined.

Table 2 summarizes the main steps and outcomes of the first phase. It also became clear that most models do not cover the focus of this maturity model, the human aspects.

The second phase started with the extension of the

initial model with a focus on 1) human aspects and 2)

technological trends which are not included in current

maturity models. Sample human aspects identified

and included were openness, participation, and digital

competencies. Additionally, technological trends

were identified and included such as autonomous

processes, data-based decision making, and artificial

intelligence.

As a third step, specific aspects for the first adaptation

of the model (Higher Education) were identified.

Three types of adaptation were made:

• Additional factors: Factors which are not part of

the generic model as they are only relevant for a

specific branch / sector. Examples for Higher

Education are specific capabilities such as

pedagogical capabilities or student services.

• Modified / more specific factors: Some generic

factors need to be described in a more specific

way. As an example, the generic model includes

a factor on how processes are automated. In the

Higher Education context, it should be distinguished how learning/research and administrative

processes are technology-supported.

• Irrelevant factors: In the adaptation, some factors

might not be relevant. As an example, the factor

of “use of robotics for automation” is not relevant

for HEIs.
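The three types of adaptation listed above can be expressed as operations on a generic factor set. The sketch below is a hypothetical illustration, assuming that a model is represented as a mapping from factor keys to descriptions; the function `adapt_model`, its parameters and all factor keys are introduced here for illustration and do not appear in the Co-Digitalization Maturity Model.

```python
def adapt_model(
    generic: dict[str, str],
    *,
    added: dict[str, str],
    refined: dict[str, dict[str, str]],
    dropped: set[str],
) -> dict[str, str]:
    """Derive a branch-specific model from a generic one.

    added   -- factors that are only relevant for the branch
    refined -- generic factor key -> more specific factors that replace it
    dropped -- generic factor keys that are irrelevant for the branch
    """
    unknown = (set(refined) | dropped) - set(generic)
    if unknown:
        raise KeyError(f"not in generic model: {sorted(unknown)}")

    adapted: dict[str, str] = {}
    for key, description in generic.items():
        if key in dropped:
            continue
        if key in refined:
            adapted.update(refined[key])
        else:
            adapted[key] = description
    adapted.update(added)
    return adapted


# Hypothetical generic factors, loosely following the examples in the text.
generic_model = {
    "process_automation": "Processes are automated",
    "robotics": "Use of robotics for automation",
    "digital_competencies": "Employees are trained for new DT competencies",
}

hei_model = adapt_model(
    generic_model,
    added={
        "pedagogical_capabilities": "Pedagogical capabilities",
        "student_services": "Student services",
    },
    refined={
        "process_automation": {
            "learning_research_processes": "Learning/research processes are technology-supported",
            "administrative_processes": "Administrative processes are technology-supported",
        }
    },
    dropped={"robotics"},
)
print(list(hei_model))
```

Rejecting unknown keys in `refined` and `dropped` is a design choice of this sketch; it keeps an adaptation from silently drifting away from the generic model it is derived from.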

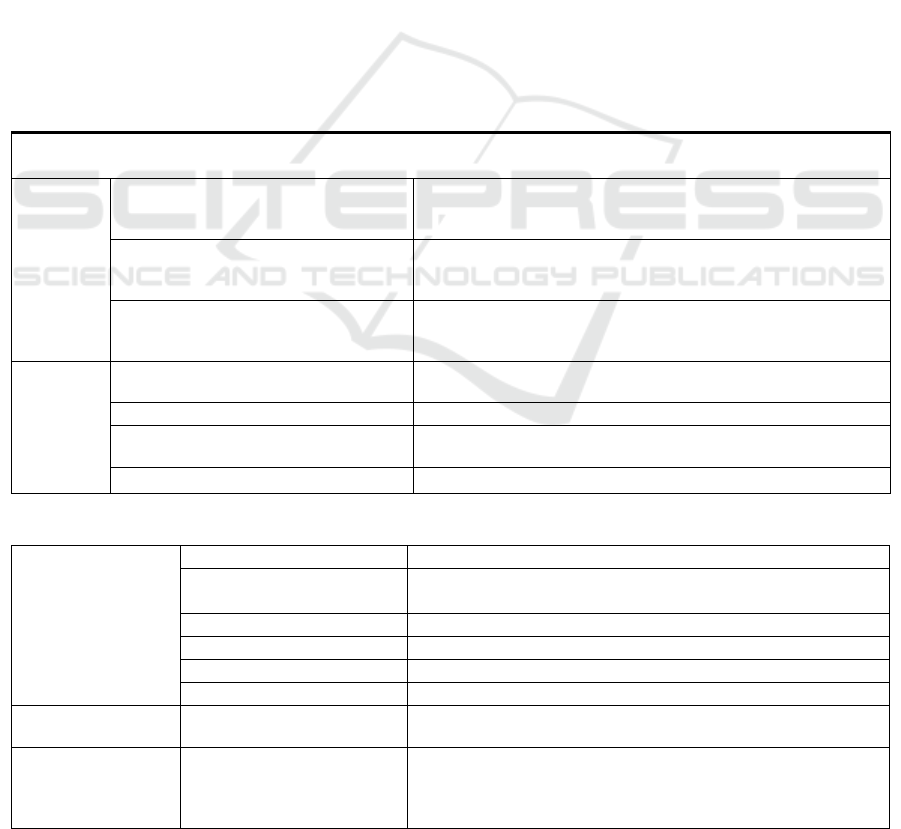

Table 2: Applying DA3M to develop a human-oriented maturity model: Phase 1.

| DA3M Phase | Explanation / Activities | Outcomes |
| --- | --- | --- |
| P1.1 Problem and Scope Definition | Defining the research problem and scope | Development of a comprehensive DT maturity model; Focus on human aspects; Adaptation for Higher Education Institutions |
| | Determining theoretical foundation | Process theory (Hernes, 2014); Self determination theory (Deci et al, 2017); Competence-Based View of the Firm (Freiling, 2004) |
| | Defining a model development project | Defining multiple case studies in different branches (e.g. Higher Education, social welfare, IT, craft sector, construction); Defining stakeholders and roles for model usage and evaluation |
| P1.2 Comparison of existing models | Defining comparison criteria | Focus on theoretical foundation and methodology; Practical relevance |
| | Comparing relevant existing models | Selection of eight scientific and four practice models |
| | Proposing classes and factors | Initial classification; Selection of most common factors |
| | Suggest improvements | Inclusion of more specific human factors |

Table 3: Applying DA3M to develop a human-oriented maturity model: Phase 2.

| DA3M Phase | Explanation / Activities | Outcomes |
| --- | --- | --- |
| P2.1 Model development | Select initial factors | Selection of most common factors |
| | Analyze trends | Analysis of human aspects of DT; Analysis of emerging technologies |
| | Analyze domain | Analysis of trends in Higher Education (first case study) |
| | Identify additional factors | Deriving potential factors for inclusion |
| | Provide evidence for factors | Analysis of evidence for each factor |
| | Collect interventions | Proposing interventions for each factor |
| P2.2 Model implementation | Use model | Model usage in different sectors |
| P2.3 Model evaluation | Evaluate model / factors | Expert interviews with 5-10 experts (academics and company representatives) per case study; Model improvement (refinement of descriptions, exclusion of redundant and unnecessary factors) |


Each factor was also validated analytically – so for

each factor, it was checked whether there is empirical

evidence that the factor has a positive influence on

organizations’ performance.

The model created was then used and evaluated in three cycles (Pawlowski et al, 2025). Table 3 shows the steps and outcomes of the second phase.

The third phase contains the usage of the

(finalized) model. Here, the (long-term) impact

should also be analyzed. Last but not least, the models

need to be updated regularly to include emerging

trends and technologies.

5 EVALUATION RESULTS

After the model development and adaptation for

Higher Education, the model was applied in Higher

Education institutions. A total of 41 respondents applied and validated the model (23 female, 18 male). Of the participants, 68% came from universities and 32% from universities of applied sciences. 76% were professors or lecturers, 24% administrative staff. This participation ensured a broad range of perspectives. The evaluation focused on four key criteria based on the evaluation method by Salah et al (2014):

• Factor Importance: Feedback on how

important a factor is for the university.

• Adaptability: Were the adapted factors (from

the generic towards the Higher Education model)

seen as important?

• Understandability: Are the model descriptions

understandable?

• Usefulness: Are the results of the assessment

useful to improve the digital transformation of a

university?

The main focus of the evaluation was on the factor

importance as this is the crucial aspect for maturity

models: to identify key aspects of Digital

Transformation. Each factor in the maturity model

was used to assess the status of an institution in terms

of maturity and importance (on a Likert scale between

“1 not important at all” and “5 very important”). By

this ranking, we can determine whether factors

identified using the DA3M method were relevant to

the participants. We combined this quantitative

assessment with open questions regarding

understandability and usefulness of the model.

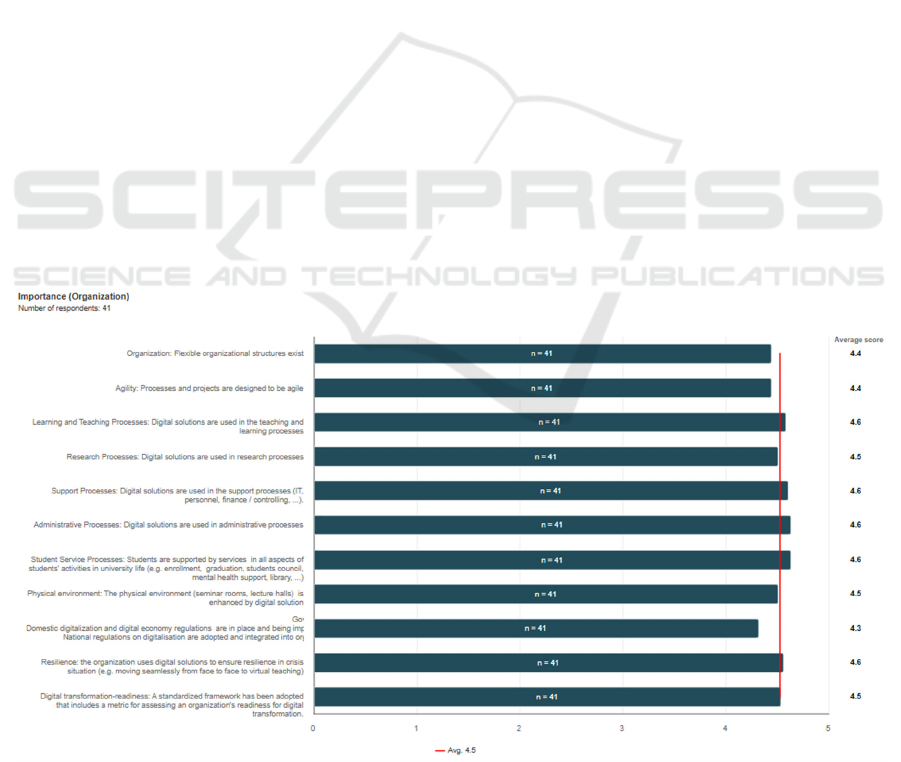

Overall, the constructed maturity model consisted

of 77 factors in nine categories. In comparison to the

base maturity model (Pawlowski et al, 2025), 18

factors were modified and adapted (e.g. specific

processes for Higher Education), 6 factors were

dropped (e.g. robotics). No factor received a lower

average importance than 3. More than 50% of the

factors had an average greater than 4. This means that

the factors have generally a strong relevance in the

opinion of the participants.

Figure 1: Sample results (factor importance) on the category “Processes”.


As a second step, the results of the modified

factors were analysed as this adaptation is one of the

main features of DA3M. All 18 factors which were modified and specifically adapted for the Higher Education context received an average importance

greater than 4. This means that the method has clearly

identified factors with a very high relevance for the

context of Higher Education.
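The three checks reported here (lowest average importance, share of factors with an average above 4, and whether all adapted factors exceed 4) are simple to compute from the raw Likert ratings. The following sketch shows one way to do so; the function `summarize_importance` is an assumption of this example, and the ratings are made-up toy values, not the data collected in the study.

```python
from statistics import mean


def summarize_importance(
    ratings: dict[str, list[int]], adapted: set[str]
) -> dict[str, object]:
    """Summarize per-factor importance ratings given on a 1..5 Likert scale."""
    averages = {factor: mean(values) for factor, values in ratings.items()}
    return {
        "averages": averages,
        "min_average": min(averages.values()),
        "share_above_4": sum(avg > 4 for avg in averages.values()) / len(averages),
        "adapted_all_above_4": all(averages[factor] > 4 for factor in adapted),
    }


# Toy data for three hypothetical factors and three respondents.
toy_ratings = {
    "research_processes": [5, 4, 5],
    "generic_reporting": [3, 4, 3],
    "student_services": [5, 5, 4],
}
summary = summarize_importance(
    toy_ratings, adapted={"research_processes", "student_services"}
)
print(summary["min_average"], summary["share_above_4"], summary["adapted_all_above_4"])
```

Means of ordinal Likert data are used here because the text reports averages; medians or response distributions would be a reasonable alternative summary.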

One example of factor importance is shown in Figure 1. In the category “Processes”

most of the factors were adapted for the Higher

Education context (e.g. specific processes for

research or student service).

Regarding understandability, most users found the statements of the model very clear. Regarding usefulness, we received only positive comments, although just half of the respondents replied to this question. For example, one user stated “the survey is excellent elaborated, it will be very helpful for organizations to drafting the digitalisation strategy and implementation”. As a negative aspect, it was mentioned that, even though the status assessment was accurate, there were no recommendations on how to improve certain factors. This can be seen as the next research step: not solely focusing on status assessment but also on improvements.

Overall, the validated aspects of DA3M lead to

the conclusion that especially the adaptation of maturity

models is clearly successful.

6 DISCUSSION

I have described a methodology for developing and

adapting maturity models for digital transformation.

The method is based on the methods of Becker et al

(2009) and Thordsen & Bick (2020) and addresses

weaknesses of many maturity models. Many maturity

models are based on the synthesis of existing models.

This weakness is addressed by clearly defined

principles and model phases. It is important to clearly

define the model scope including its theoretical

foundation. Here, process theory for generic models

seems appropriate but also other theories can be used

for specific focuses (e.g. Self Determination Theory).

Secondly, many maturity models are prescriptive

without evidence. Therefore, evidence should be

collected for each factor included in a maturity model.

Finally, generic models cannot fully support the DT

process in specific branches. Therefore, it is

necessary to include the adaptation in the model,

refining a generic model including branch-specific

characteristics and trends. It is also necessary to

update models regularly to keep up with

current trends such as generative AI.

As a limitation, it is necessary to state that the

method has not been validated in the long term, i.e., it is not yet known how frequently a model changes due to new trends and societal and market developments. The

next steps will include both long-term studies on how

maturity models evolve but also how organizations

change when using maturity models.

7 CONCLUSIONS

In this paper, a new methodology for the development

and adaptation of maturity models for digital

transformation was constructed. The approach

consists of four main phases, from scoping to long-term model evolution. The method is based

on clear principles such as providing a theoretical

foundation, evidence-base and guidance. The DA3M

method has been successfully applied for

constructing both generic and branch-specific

maturity models.

REFERENCES

Aras, A., & Büyüközkan, G. (2023). Digital transformation journey guidance: A holistic digital maturity model based on a systematic literature review. Systems, 11(4), 213.

Becker, J., Knackstedt, R., & Pöppelbuß, J. (2009). Developing maturity models for IT management: A procedure model and its application. Business & Information Systems Engineering, 1, 213-222.

Berghaus, S., & Back, A. (2016). Stages in digital business transformation: Results of an empirical maturity study. MCIS 2016 Proceedings, 22.

Chanias, S., & Hess, T. (2016). Understanding digital transformation strategy formation: Insights from Europe's automotive industry. PACIS 2016 Proceedings, 296.

Deci, E. L., Olafsen, A. H., & Ryan, R. M. (2017). Self-determination theory in work organizations: The state of a science. Annual Review of Organizational Psychology and Organizational Behavior, 4(1), 19-43.

Freiling, J. (2004). A competence-based theory of the firm. Management Revue, 15(1), 27-52.

Gollhardt, T., Halsbenning, S., Hermann, A., Karsakova, A., & Becker, J. (2020). Development of a digital transformation maturity model for IT companies. In 2020 IEEE 22nd Conference on Business Informatics (CBI).

Gökalp, E., & Martinez, V. (2022). Digital transformation maturity assessment: development of the digital transformation capability maturity model. International Journal of Production Research, 60(20), 6282-6302.

Hein-Pensel, F., Winkler, H., Brückner, A., Wölke, M., Jabs, I., Mayan, I. J., & Zinke-Wehlmann, C. (2023). Maturity assessment for Industry 5.0: A review of existing maturity models. Journal of Manufacturing Systems, 66, 200-210.

Helgesson, Y. Y. L., Höst, M., & Weyns, K. (2012). A review of methods for evaluation of maturity models for process improvement. Journal of Software: Evolution and Process, 24(4), 436-454.

Hernes, T. (2014). A process theory of organization. Oxford University Press.

Kane, G. C., Palmer, D., Phillips, A. N., Kiron, D., & Buckley, N. (2017). Achieving digital maturity. MIT Sloan Management Review, 59(1), 1-9.

Lasrado, L. A., Vatrapu, R., & Andersen, K. N. (2015). Maturity models development in IS research: A literature review. IRIS: Selected Papers of the Information Systems Research Seminar in Scandinavia.

Matt, C., Hess, T., & Benlian, A. (2015). Digital transformation strategies. Business & Information Systems Engineering, 57(5), 339-343.

Ochoa-Urrego, R. L., & Peña-Reyes, J. I. (2021). Digital maturity models: a systematic literature review. Digitalization: Approaches, Case Studies, and Tools for Strategy, Transformation and Implementation, 71-85.

Pawlowski, J. M., Kocak, S., Hellwig, L., & Nurhas, I. (2025). Co-Digitalization: A Participatory Maturity Model for Digital Transformation. International Journal of Information Systems and Social Change, 16(1).

Pereira, R., & Serrano, J. (2020). A review of methods used on IT maturity models development: A systematic literature review and a critical analysis. Journal of Information Technology, 35(2), 161-178.

Poeppelbuss, J., Niehaves, B., Simons, A., & Becker, J. (2011). Maturity models in information systems research: literature search and analysis. Communications of the Association for Information Systems, 29(1), 505-532.

Proença, D., & Borbinha, J. (2016). Maturity models for information systems: A state of the art. Procedia Computer Science, 100, 1042-1049.

Salah, D., Paige, R., & Cairns, P. (2014). An evaluation template for expert review of maturity models. In Product-Focused Software Process Improvement: 15th International Conference, PROFES 2014, Helsinki, Finland, December 10-12, 2014. Proceedings 15 (pp. 318-321). Springer International Publishing.

Santos-Neto, J. B., & Costa, A. P. C. S. (2019). Enterprise maturity models: a systematic literature review. Enterprise Information Systems, 13(5), 719-769.

Sein, M. K., Henfridsson, O., Purao, S., Rossi, M., & Lindgren, R. (2011). Action design research. MIS Quarterly, 37-56.

Thordsen, T., & Bick, M. (2020). Towards a holistic digital maturity model. ICIS 2020 Proceedings, 5.

Thordsen, T., & Bick, M. (2023). A decade of digital maturity models: much ado about nothing? Information Systems and e-Business Management, 21(4), 947-976.

Van de Ven, A. H., & Poole, M. S. (1995). Explaining development and change in organizations. Academy of Management Review, 20(3), 510-540.

Vial, G. (2019). Understanding digital transformation: A review and a research agenda. Journal of Strategic Information Systems, 28(2), 118-144.

Wagner, C., Verzar, V. K., Bernnat, R., & Veit, D. J. (2023). Digital maturity models. In Digitalization and sustainability (pp. 193-215). Edward Elgar Publishing.

Wendler, R. (2012). The maturity of maturity model research: A systematic mapping study. Information and Software Technology, 54(12), 1317-1339.

Westerman, G., Bonnet, D., & McAfee, A. (2014). Leading digital: Turning technology into business transformation. Harvard Business Review Press.

Williams, C., Schallmo, D., Lang, K., & Boardman, L. (2019). Digital maturity models for small and medium-sized enterprises: A systematic literature review. In ISPIM Conference Proceedings (pp. 1-15).

Yin, R. K. (2017). Case study research and applications: Design and methods. Sage Publications.
