Art-Style Classification Using MobileNetV2: A Deep Learning Approach

Zhengyang Wang

a

School of Information Science and Engineering, Lanzhou University, Lanzhou, Gansu, China

Keywords: Art Style Classification, MobileNetV2, Deep Learning, Transfer Learning, WikiArt.

Abstract: Painting, as a significant component of human culture, carries historical and cultural information while

shaping aesthetic perceptions. However, the complexity of artistic styles often makes it challenging for the

general public to comprehend them in depth. Leveraging artificial intelligence to popularize art knowledge

and facilitate the recognition and understanding of artistic styles intuitively has thus become increasingly

important. This study applies MobileNetV2 to develop an art style classification system that automatically

identifies eight painting genres, including Abstract Art and Romanticism, showcasing their historical and

cultural significance. The research is based on the WikiArt dataset, covering eight classic painting styles with

approximately 3,600 images. By employing data preprocessing, transfer learning, and the MobileNetV2

model, the system achieves art-style classification, with data augmentation and hyperparameter optimization

enhancing model performance. The target accuracy for the system is set at ≥65%. This study aims to provide

an innovative tool for art education and aesthetic appreciation by implementing artificial intelligence

techniques for the automatic classification of classical painting styles. The findings contribute to enhancing

public understanding and appreciation of painting art while advancing practical applications of artificial

intelligence in the art domain.

1 INTRODUCTION

The classification and management of art styles have

long posed significant challenges due to the inherent

complexity and diversity of artistic creations. Each art

style often embodies unique visual features, making

it difficult to accurately classify them using

traditional methods (Saleh & Elgammal, 2015; Tan et

al., 2018). Recent advancements in deep learning

have opened new avenues for addressing such

challenges, especially in visual classification tasks.

Convolutional Neural Networks (CNNs), as

representative models of deep learning, have

demonstrated exceptional capabilities in image

classification, achieving superior performance

compared to traditional machine learning algorithms

(Krizhevsky et al., 2017; Simonyan, 2014). For

instance, CNNs are particularly effective when

dealing with large-scale datasets, significantly

outperforming traditional models like Support Vector

Machines (SVMs) in terms of accuracy and efficiency

(Wang et al., 2020). These advancements underscore the transformative potential of deep learning in addressing complex classification problems in diverse domains, including art.

a https://orcid.org/0009-0006-2954-0758

This study aims to leverage the MobileNetV2

model, a state-of-the-art deep learning architecture

known for its efficiency and adaptability, to classify

paintings into eight distinct art styles. By focusing on

MobileNetV2, this research investigates how the

model manages intricate visual patterns and

characteristics linked to various art styles. The

process includes adjusting the MobileNetV2 model to

enhance its effectiveness in classifying artistic styles.

Moreover, the study assesses the model’s capability

by examining both its classification accuracy and

computational efficiency. Through this approach, the

research seeks to identify potential limitations and

propose avenues for future improvements in art style

classification tasks.

The use of deep learning techniques for art style classification marks a major advancement in streamlining art management processes. Beyond its practical implications, this study provides valuable insights into the nuanced characteristics of different artistic styles. By bridging the gap between artificial intelligence and cultural heritage, this research contributes to the growing body of knowledge on AI-driven solutions for artistic and cultural domains, underscoring the transformative potential of deep learning in revolutionizing traditional fields.

Wang, Z.

Art-Style Classification Using MobileNetV2: A Deep Learning Approach.

DOI: 10.5220/0013677300004670

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 2nd International Conference on Data Science and Engineering (ICDSE 2025), pages 16-20

ISBN: 978-989-758-765-8

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

The structure of this paper reflects the logical

progression of the research. It begins with an

explanation of the dataset and data preprocessing

techniques, along with the specific configuration of

the MobileNetV2 model employed in this study.

Following this, the results are presented and analyzed,

offering insights into the model’s strengths and areas

where improvement is needed. The paper concludes

by summarizing the research findings, discussing the

limitations, and proposing potential directions for

future work. By emphasizing the capabilities of

MobileNetV2, this study aims to advance the

boundaries of art style classification and lay the

groundwork for more sophisticated AI-based

solutions in the arts.

2 DATA AND METHOD

2.1 Data Collection and Description

The data used in this study comes from the publicly

available art database WikiArt. WikiArt provides a

rich collection of paintings, covering various art

styles and artists. The dataset includes eight style

categories: Abstract Art, Baroque, Cubism,

Impressionism, Post-Impressionism, Realism,

Romanticism, and Surrealism. Each style comprises

representative works from approximately five artists,

with each category containing 345 to 500 images, for

a total of 3,606 paintings.

Data augmentation was employed to improve the model's generalization and mitigate overfitting. Maharana et al. (2022) describe data augmentation as a regularization strategy that addresses two concerns: generating more data from a limited dataset and minimizing overfitting. The techniques applied in this study include image flipping, brightness adjustment, cropping, and rotation. These augmentations help reduce the model's overfitting to specific image features and improve performance on the test set.
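The four transformations named above can be illustrated with a minimal sketch. It is not the augmentation pipeline used in the experiment: the function names, the probability of 0.5, the brightness range of 0.8 to 1.2, the quarter-size crop margin, and the restriction to 90-degree rotations on a small grayscale grid are all assumptions chosen to keep the example dependency-free.

```python
import random

Image = list[list[int]]  # grayscale image: rows of pixel values in 0..255


def flip_horizontal(img: Image) -> Image:
    """Mirror each row left-to-right."""
    return [row[::-1] for row in img]


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale pixel values and clamp them to the valid 0..255 range."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in img]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Cut out a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def augment(img: Image, rng: random.Random) -> Image:
    """Apply a random combination of the four transformations (assumed settings)."""
    if rng.random() < 0.5:
        img = flip_horizontal(img)
    img = adjust_brightness(img, rng.uniform(0.8, 1.2))
    if rng.random() < 0.5:
        img = rotate_90(img)
    h, w = len(img), len(img[0])
    top = rng.randint(0, h // 4)
    left = rng.randint(0, w // 4)
    return crop(img, top, left, h - h // 4, w - w // 4)


sample = [
    [0, 50, 100, 150],
    [10, 60, 110, 160],
    [20, 70, 120, 170],
    [30, 80, 130, 180],
]
augmented = augment(sample, random.Random(0))
```

Each call to `augment` yields a slightly different variant of the same painting, which is how a limited dataset can be stretched during training.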

The dataset was divided into training and testing

subsets using an 80:20 split, resulting in 2,885 images

in the training set and 721 in the test set. Each image

was resized to a uniform input size of 160×160 pixels

to ensure data consistency.
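The split sizes reported above follow directly from the 80:20 ratio applied to 3,606 images. The sketch below reproduces that arithmetic with a shuffled index split; the function name and the seed are hypothetical, and the paper does not state how the images were actually shuffled or assigned.

```python
import random


def train_test_split_indices(n_items: int, train_fraction: float, seed: int):
    """Shuffle item indices and cut them into a training and a test part."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    n_train = round(n_items * train_fraction)
    return indices[:n_train], indices[n_train:]


train_idx, test_idx = train_test_split_indices(3606, 0.8, seed=42)
print(len(train_idx), len(test_idx))  # prints: 2885 721
```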

2.2 Model Introduction

This study adopts MobileNetV2 as the base model

due to its lightweight architecture and efficiency.

MobileNetV2 leverages depthwise separable

convolutions to significantly reduce computational

complexity and the number of model parameters,

making it ideal for resource-constrained

environments (Sandler et al., 2018). Moreover, by

incorporating transfer learning, pre-trained weights

from large-scale datasets such as ImageNet provide

robust feature extraction capabilities, even when

working with small-scale datasets. This approach has

been shown to enhance classification performance

and minimize overfitting in tasks similar to art-style

classification (Gulzar, 2023). Additionally,

MobileNetV2's simple yet effective design ensures

compatibility with deployment on edge devices,

making it highly practical for real-world applications.
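The parameter saving from depthwise separable convolutions can be made concrete with a short calculation. The sketch below compares weight counts for one layer, ignoring biases; the kernel size and channel counts are illustrative assumptions rather than values taken from the model used in this study, and the sketch covers only the depthwise separable factorization, not MobileNetV2's inverted residual blocks.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard convolution: one kernel x kernel x c_in filter per output channel."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise (per-channel) convolution followed by a 1x1 pointwise convolution."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 32 input channels, 64 output channels.
standard = standard_conv_params(3, 32, 64)
separable = depthwise_separable_params(3, 32, 64)
print(standard, separable, round(standard / separable, 2))  # prints: 18432 2336 7.89
```

For this hypothetical layer the factorized form needs roughly one eighth of the weights, which is the kind of reduction that makes the architecture attractive on constrained hardware.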

3 RESULTS AND DISCUSSION

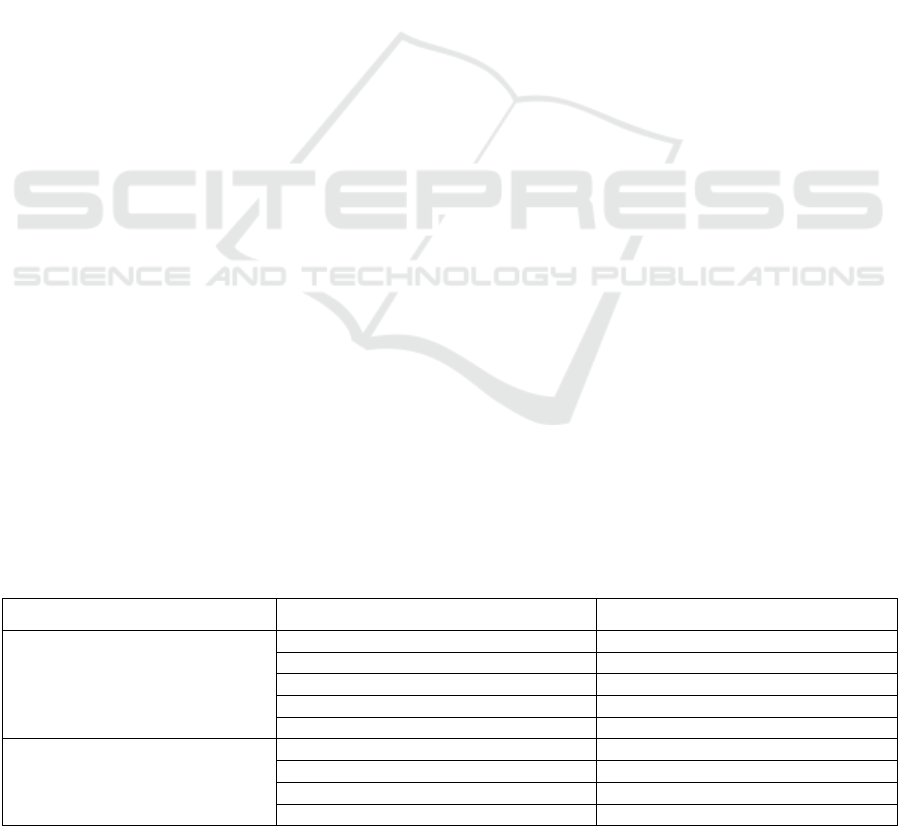

3.1 Experimental Setup

The experiment was conducted on the Edge Impulse

platform, which supports rapid model development

and deployment. The configuration details for the

neural network training are summarized in Table 1.

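Table 1. Neural Network Training Configuration.

| Category | Setting | Value |
|---|---|---|
| Training settings | Number of training cycles | 15 |
| Training settings | Use learned optimizer | Disabled |
| Training settings | Learning rate | 0.0003 |
| Training settings | Training processor | CPU |
| Training settings | Data augmentation | Enabled |
| Advanced training settings | Validation set size | 20% |
| Advanced training settings | Split train/validation by key | Not used |
| Advanced training settings | Batch size | 32 |
| Advanced training settings | Auto-weight classes | Enabled |
| Advanced training settings | Profile int8 model | Disabled |
| Neural Network architecture | Model structure | MobileNetV2 160x160 0.35 |
| Neural Network architecture | Input layer features | 76,800 features |
| Neural Network architecture | Final dense layer | None |
| Neural Network architecture | Dropout | 0.1 |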
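Several of the values in Table 1 can be cross-checked with simple arithmetic. The sketch below collects them in a small configuration object and derives the input feature count and the approximate number of weight updates. It rests on assumptions that the paper does not confirm: that images are RGB (three channels), that 0.35 is the MobileNetV2 width multiplier, that the 20% validation set is carved out of the 2,885 training images, and that one training cycle is one pass over the remaining images. The class and field names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    training_cycles: int = 15
    learning_rate: float = 0.0003
    batch_size: int = 32
    validation_fraction: float = 0.20
    input_width: int = 160
    input_height: int = 160
    channels: int = 3               # assumption: RGB input
    width_multiplier: float = 0.35  # assumption: the "0.35" in the model name
    dropout: float = 0.1


config = TrainingConfig()

# 160 x 160 x 3 matches the "76,800 features" entry in Table 1.
input_features = config.input_width * config.input_height * config.channels

# Assumed: validation images are taken from the 2,885 training images.
n_training_images = 2885
n_validation = round(n_training_images * config.validation_fraction)
n_fit = n_training_images - n_validation

steps_per_cycle = math.ceil(n_fit / config.batch_size)
total_updates = steps_per_cycle * config.training_cycles

print(input_features, n_validation, n_fit, steps_per_cycle, total_updates)
# prints: 76800 577 2308 73 1095
```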

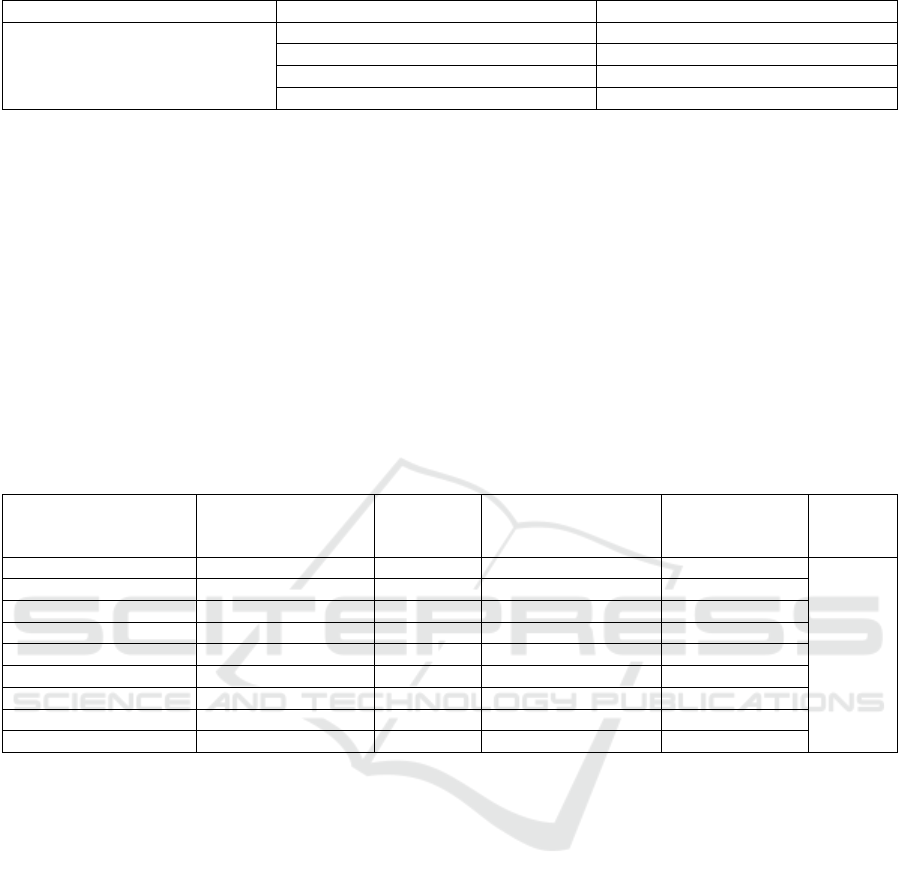

3.2 Experimental Results

Table 2 provides a summary of MobileNetV2’s

classification performance on various art styles,

detailing metrics like accuracy, F1-score, recall,

precision, and the overall ROC value.

In the classification results, styles such as Cubism

and Impressionism performed relatively well, with

accuracy exceeding 70%. In contrast, Romanticism

and Surrealism exhibited weaker classification

performance, which may be attributed to the

complexity of these styles and the similarity of

features between samples.

The results offer a detailed summary of the

model's performance, emphasizing notable strengths

and weaknesses, which are examined more

thoroughly in the next section.

Table 2. Experimental Results.

| Art Style | Accuracy (%) | F1 Score | Precision | Recall |
|---|---|---|---|---|
| Abstract Art | 66.1 | 0.70 | 0.76 | 0.66 |
| Baroque | 69.7 | 0.70 | 0.71 | 0.70 |
| Cubism | 89.1 | 0.85 | 0.81 | 0.89 |
| Impressionism | 73.1 | 0.70 | 0.66 | 0.73 |
| Post-Impressionism | 62.4 | 0.64 | 0.65 | 0.62 |
| Realism | 60.6 | 0.59 | 0.57 | 0.61 |
| Romanticism | 53.3 | 0.57 | 0.62 | 0.53 |
| Surrealism | 69.5 | 0.68 | 0.66 | 0.70 |
| Overall | 67.6 | 0.67 | 0.68 | 0.68 |

Overall ROC value: 0.94.
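The F1 scores in Table 2 can be checked against the reported precision and recall using the standard definition, F1 = 2PR / (P + R). The sketch below recomputes each per-style value; the numbers are copied from the table, while the function and variable names are illustrative. Small gaps are expected because the table reports precision and recall rounded to two decimals.

```python
# (precision, recall, reported F1) per style, copied from Table 2.
rows = {
    "Abstract Art": (0.76, 0.66, 0.70),
    "Baroque": (0.71, 0.70, 0.70),
    "Cubism": (0.81, 0.89, 0.85),
    "Impressionism": (0.66, 0.73, 0.70),
    "Post-Impressionism": (0.65, 0.62, 0.64),
    "Realism": (0.57, 0.61, 0.59),
    "Romanticism": (0.62, 0.53, 0.57),
    "Surrealism": (0.66, 0.70, 0.68),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


for style, (p, r, reported) in rows.items():
    print(f"{style:<20} reported={reported:.2f} recomputed={f1_score(p, r):.3f}")

# Largest disagreement between the reported and recomputed F1 values.
max_gap = max(abs(f1_score(p, r) - reported) for p, r, reported in rows.values())
```

The recomputed values agree with the table to within rounding, which suggests the reported columns are internally consistent.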

3.3 Results and Discussion

The experimental results demonstrate that the art style

classification system based on MobileNetV2

performs relatively consistently across most categories.

However, certain categories, particularly

Romanticism and Surrealism, exhibit relatively lower

classification accuracy. This may be attributed to the

transitional characteristics of art styles. Some artists'

works are created during historical transitions or

periods of transformation, such as the shift from

Classicism to Romanticism or from Impressionism to

Post-Impressionism. These transitional works often

blend characteristics of different styles, increasing the

difficulty for the model to classify them accurately.

Another significant challenge stems from the

overlapping features of different art styles. As

Vuttipittayamongkol et al. emphasized, class overlap

has a more pronounced negative impact on

classification accuracy compared to class imbalance,

as it leads to misclassifications even when datasets

are balanced (Vuttipittayamongkol et al., 2020). This

issue is further exacerbated by the multi-stylistic

nature of certain artists, who create works that

simultaneously reflect characteristics of multiple

styles, such as Romanticism and Realism.

Consequently, the training data for categories like

Romanticism may include traces of features from

other styles, increasing the risk of confusion for the

model.

Additionally, the ambiguity in sample features

plays a crucial role. Certain artworks exhibit high

visual similarity across different styles due to the use

of similar techniques or color treatments. For instance,

Romanticism and Surrealism share commonalities in

expressing emotions and fantasy, while

Impressionism and Post-Impressionism often overlap

in brushstroke techniques. Such ambiguities align

with findings from Vuttipittayamongkol et al., who

emphasized that addressing overlapping features is

critical to improving model performance

(Vuttipittayamongkol et al., 2020). These factors

collectively make it challenging for the model to

clearly distinguish between styles, necessitating more

sophisticated approaches to mitigate their impact.

To address these challenges, several

improvements were made in the data processing for

this experiment. By rigorously screening the training

data, transitional-style artworks were further filtered

out to ensure the representativeness and purity of each

style category, thereby reducing feature confusion

between categories. Furthermore, the sample size was

increased, particularly for categories with complex or

easily confusable style features. By expanding the

artwork samples, the model’s understanding of

stylistic diversity was further enhanced.

Future research could focus on introducing multi-

label classification methods to enable the model to

identify multiple stylistic features that may coexist

within a single artwork. This approach aligns with the

advancements discussed by Coulibaly et al., who

proposed a Multi-Branch Neural Network (MBNN)

framework for multi-label classification (Coulibaly et

al., 2022). Their work highlights the potential of

combining multitask learning and transfer learning to

enhance the performance of classification models,

particularly for datasets with overlapping features or

complex label structures (Coulibaly et al., 2022). By

applying similar methodologies, art style

classification systems can better reflect the

complexity and diversity of art styles.
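The difference between the single-label setup used here and a multi-label formulation can be sketched briefly. In a multi-label head, each style receives an independent sigmoid score and every style above a threshold is reported, so one painting may carry several labels. The example below is a generic illustration of that idea, not the MBNN framework of Coulibaly et al.; the logits, the threshold of 0.5, and the function names are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    """Map a raw score to an independent probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_styles(logits: dict[str, float], threshold: float = 0.5) -> list[str]:
    """Return every style whose sigmoid score reaches the threshold."""
    return [style for style, z in logits.items() if sigmoid(z) >= threshold]


# Hypothetical raw scores for a painting that blends two styles.
example_logits = {"Romanticism": 1.2, "Realism": 0.4, "Cubism": -2.0}
predicted = predict_styles(example_logits)
print(predicted)  # prints: ['Romanticism', 'Realism']
```

Unlike a softmax output, the scores do not compete with one another, which is what allows a transitional work to be tagged with both of the styles it draws on.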

Additionally, incorporating external information,

such as the creation dates of artworks or background

information about the artists, could provide richer

contextual support for classification and enhance the

model’s recognition capability. Coulibaly et al. also

emphasized the role of external information through

pre-trained feature extractors and attention

mechanisms to improve classification accuracy

(Coulibaly et al., 2022). Inspired by this, future

models could leverage contextual data to more

effectively identify complex and transitional styles.

4 CONCLUSIONS

This study successfully developed a deep learning-

based system for art style classification, utilizing the

MobileNetV2 model combined with techniques such

as data augmentation and transfer learning.

The system achieved an overall accuracy of 67.6% in classifying eight representative art styles, exceeding the 65% target. This

achievement not only provides technical support for

automated art style recognition but also offers

valuable insights into the intersection of artificial

intelligence and cultural heritage preservation.

However, despite these successes, the system's

performance is still limited by challenges such as the

overlapping complexity between art styles and the

lack of diverse annotated datasets. These limitations

indicate that there is room for improvement in data

preprocessing and feature extraction.

Building on the findings in Section 3.3, future

work could focus on introducing multi-label

classification methods to better capture the

coexistence of multiple stylistic features within a

single artwork. Additionally, integrating contextual

data, such as creation dates or artist backgrounds,

could enhance classification robustness and provide

richer insights. As highlighted by Yu et al. (2021),

combining transfer learning with external contextual

data is a promising approach to address the challenges

of multi-label classification, offering improved model

versatility and generalization. Furthermore, the

outcomes of this study contribute not only to art

education and cultural dissemination but also to

potential applications in cultural heritage

preservation and digital management, thereby driving

technological innovation in the art domain.

REFERENCES

Coulibaly, S., Kamsu-Foguem, B., Kamissoko, D., &

Traore, D. (2022). Deep convolution neural network

sharing for the multi-label images classification.

Machine Learning with Applications, 10, 100422.

Gulzar, Y. (2023). Fruit image classification model based

on MobileNetV2 with deep transfer learning technique.

Sustainability, 15(3), 1906.

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2017).

ImageNet classification with deep convolutional neural

networks. Communications of the ACM, 60(6), 84-90.

Maharana, K., Mondal, S., & Nemade, B. (2022). A review:

Data pre-processing and data augmentation techniques.

Global Transitions Proceedings, 3(1), 91-99.

Saleh, B., & Elgammal, A. (2015). Large-scale

classification of fine-art paintings: Learning the right

metric on the right feature. arXiv preprint

arXiv:1505.00855.

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., & Chen,

L. C. (2018). Mobilenetv2: Inverted residuals and linear

bottlenecks. In Proceedings of the IEEE conference on

computer vision and pattern recognition (pp. 4510-4520).

Simonyan, K. (2014). Very deep convolutional networks

for large-scale image recognition. arXiv preprint

arXiv:1409.1556.

Tan, C., Sun, F., Kong, T., Zhang, W., Yang, C., & Liu, C.

(2018). A survey on deep transfer learning. In Artificial

Neural Networks and Machine Learning–ICANN 2018:

27th International Conference on Artificial Neural

Networks, Rhodes, Greece, October 4-7, 2018,

Proceedings, Part III 27 (pp. 270-279). Springer

International Publishing.

Vuttipittayamongkol, P., Elyan, E., & Petrovski, A. (2020).

On the class overlap problem in imbalanced data

classification. Knowledge-Based Systems, 206, 106631.

Wang, P., Fan, E., & Wang, P. (2020). Comparative

analysis of image classification algorithms based on

traditional machine learning and deep learning. Pattern

Recognition Letters, 133, 102-107.

Yu, H., Yang, L. T., Zhang, Q., Armstrong, D., & Deen, M.

J. (2021). Convolutional neural networks for medical

image analysis: State-of-the-art, comparisons,

improvement and perspectives. Neurocomputing, 444,

92-110. https://doi.org/10.1016/j.neucom.2020.04.157
