Edge Computing for Low Latency 5G Applications Using Q-Learning Algorithm

Aastha A Neeralgi, Anuj Baddi, Ishwari R Naik, Madivalesh Demakkanavar, Sandeep Kulkarni and Vijayalakshmi M

School of Computer Science and Engineering, KLE Technological University, Vidyanagar, Hubli, India

Keywords:

Edge Computing, 5G Networks, Low-Latency, Reinforcement Learning, Q-Learning.

Abstract:

Next-generation technologies like industrial automation, augmented reality, and driverless cars depend on edge computing for low-latency 5G applications. In real-time applications, achieving ultra-low latency is essential to guarantee uninterrupted communication, reduce delays, and improve user experience. Edge computing brings computational tasks closer to end users, which greatly cuts down on delays and enhances system responsiveness. The focus here is on lowering latency, avoiding needless handovers, and guaranteeing reliable connections by integrating Q-learning with edge computing to enhance handover decisions in 5G networks. The state space for the Q-learning algorithm is simplified by preprocessing crucial characteristics like latency and Signal-to-Noise Ratio (SNR) with normalization and discretization. Effective and flexible decision-making is ensured via a well-balanced exploration-exploitation approach and a well-tuned reward system. Compared with a Random Forest model that we trained, experimental results demonstrate a 7.8% reduction in latency. This framework opens the door for developments in next-generation network technologies by offering a scalable, effective technique to handle major issues in latency-sensitive 5G applications.

1 INTRODUCTION

5G technology represents a defining moment in

the history of telecommunications, marked by high

speeds of data transfer, minimized latency, and the

ability to connect several devices simultaneously. It is

more than just an enhancement of current mobile net-

works; rather, it signifies a paradigm shift in how data

is processed, transmitted, and applied across a range

of applications. As global interconnectivity increases,

so does the demand for low-latency, high-bandwidth

applications, such as those required for autonomous

vehicles, Augmented Reality (AR), Virtual Reality

(VR), smart cities, and real-time industrial automa-

tion (Wang, 2020; Hassan et al., 2019). However,

traditional cloud computing architectures struggle to

meet these demands due to the intrinsic latency issues

associated with remote data centers.

To bridge this gap, Edge Computing (EC) has

emerged as a transformative approach, bringing com-

putational resources closer to the end-users. By

distributing data processing and relocating it near

the source of data, edge computing significantly re-

duces data transfer times, thereby mitigating latency.

This proximity enhances the performance of latency-

sensitive applications, optimizes bandwidth utiliza-

tion, and alleviates the load on central cloud infras-

tructures (Abouaomar et al., 2021a; Hassan et al.,

2019). When integrated with 5G networks, edge

computing creates a synergistic ecosystem, enabling

the development of next-generation applications that

demand rapid data processing and instantaneous re-

sponse times (Singh et al., 2016).

The framework of edge computing, however, is in-

herently heterogeneous, comprising diverse edge de-

vices such as edge servers, routers, gateways, and

numerous Internet of Things (IoT) devices (Toka,

2021). These devices vary in their computational,

storage, and communication capabilities, presenting

both opportunities and challenges for effective re-

source management. To address these challenges, dy-

namic resource provisioning strategies, such as Lya-

punov optimization, have shown promise in managing

resources efficiently across edge devices. Such advancements ensure optimal performance within systems constrained by both latency and limited resources (Hassan et al., 2019).

Neeralgi, A. A., Baddi, A., Naik, I. R., Demakkanavar, M., Kulkarni, S. and M, V.

Edge Computing for Low Latency 5G Applications Using Q-Learning Algorithm.

DOI: 10.5220/0013643400004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 733-740

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

The integration of edge computing with 5G tech-

nology not only improves system performance but

also drives innovation in service delivery and appli-

cation development. For instance, in smart health-

care, edge computing enables immediate monitoring

and data analysis, allowing for timely medical inter-

ventions. Similarly, in AR and VR applications, it

enhances immersive experiences by reducing latency

and facilitating seamless interactions (Hu et al., 2015).

These innovations illustrate the potential of edge

computing and 5G to transform industries and rede-

fine user experiences (Djigal et al., 2022).

Building on this foundation, this study introduces

a Q-learning framework designed to improve han-

dover decisions in 5G networks (Yajnanarayana et al.,

2020). By employing techniques such as normal-

ization and discretization, the framework streamlines

data processing to enhance learning efficiency and re-

duce latency (Shokrnezhad et al., 2024). These ad-

vancements directly address the latency challenges in-

herent in 5G and edge computing environments, fur-

ther strengthening their combined potential (Kodavati

and Ramarakula, 2023).

This introduction sets the foundation for under-

standing Q-learning and its application in reducing

latency in 5G networks. Section 2 reviews the Lit-

erature Survey, Section 3 discusses the Methodol-

ogy, and Section 4 explains the Implementation. Sec-

tion 5 presents Results and Analysis, highlighting the

framework’s effectiveness. Section 6 concludes with

a summary of contributions and suggests potential di-

rections for future research.

2 LITERATURE SURVEY

Edge computing has emerged as an important technological innovation in the domain of 5G communications, satisfying the growing need for real-time processing and data analysis. Because data processing is distributed and moved closer to end users, edge computing enhances the performance of applications that require fast responses (Liang et al., 2024). The concept marks a move away from traditional cloud computing systems, where data processing is centralized in distant data centers (Intharawijitr et al., 2017a). Preliminary studies pointed out the drawbacks of cloud computing, especially in terms of latency and bandwidth, which worsened with the rise of mobile and IoT applications. Shi et al. (2016) (see also Sumathi et al., 2022; Abouaomar et al., 2022) emphasized the need for a decentralized form of computing, which led to the development of edge computing as an effective solution to these problems (Shokrnezhad et al., 2024).

The convergence of edge computing with 5G network technologies enables a range of applications. One example is autonomous vehicles, which use edge computing to support immediate processing of vehicle sensor data (Intharawijitr et al., 2017a; Zhang et al., 2016). This makes rapid decision-making possible in autonomous driving environments (Abouaomar et al., 2021b; Hu et al., 2015). Researchers have shown that edge computing decreases latency, which is essential when fast decisions have to be made (Parvez et al., 2018). Edge computing also helps manage large sensor and device networks in smart city applications, where data can be collected and analyzed efficiently. Research demonstrates that edge computing can optimize traffic management systems by processing data locally, improving urban mobility and reducing congestion. Healthcare is another sector that benefits from edge computing, in applications such as telemedicine and remote patient monitoring. By processing data at the edge, healthcare providers can deliver real-time consultations and diagnostics, improving patient outcomes and operational efficiency (Hartmann et al., 2022).

Alongside these benefits, the adoption of edge computing in the 5G environment raises a number of challenges that need to be addressed. The first is security and privacy: the decentralized architecture of edge computing heightens concerns about data protection and individual privacy (Wu et al., 2024). Research on security frameworks for edge environments is needed to protect the sensitive information processed in such networks. Furthermore, the lack of agreed standards for edge computing technologies may obstruct interoperability and exacerbate the difficulty of integrating heterogeneous systems. A collaborative effort is required to develop commonly accepted communication protocols between edge devices and among the networks involved in an application. Resource pooling at the edge can also be crucial for maintaining performance while conserving resources. Research indicates that sophisticated algorithms and machine learning methodologies have the potential to improve resource management by adapting in real time to fluctuating workloads (Djigal et al., 2022; Liu et al., 2018).

Prospects for edge computing within the 5G communication framework appear positive, as ongoing research is focused on improving its functionality. Artificial intelligence and machine learning technologies will heavily influence the refinement of edge computing procedures, making applications more intelligent and responsive (Shokrnezhad et al., 2024; Singh et al., 2016). Moreover, sustained development of 5G infrastructure will likely broaden the use of edge computing, opening avenues to new solutions in a host of sectors. In summary, the existing literature on edge computing in 5G communications indicates that the field holds high transformative power across various sectors. Although challenges are present, technological and research advancements are likely to address them, making wide use of edge computing solutions possible (Hassan et al., 2019). With increased demand from low-latency applications, edge computing will hence contribute significantly to the evolving landscape of communication technologies (Coelho et al., 2021). To tackle these challenges and leverage the opportunities, we detail our proposed approach in the next section, Methodology.

3 METHODOLOGY

To optimize latency in 5G networks, a structured ap-

proach using Q-learning, a reinforcement learning al-

gorithm, is employed. The methodology begins with

dataset generation, where MATLAB simulations em-

ulate real-world 5G conditions like congestion and

interference, producing a dataset with key attributes

such as Signal-to-Noise Ratio (SNR), latency, and

network load. This dataset is processed and struc-

tured using Python, ensuring it is suitable for machine

learning applications. Preprocessing techniques like

normalization and discretization are applied to stan-

dardize the data, enabling efficient mapping of states

to actions and enhancing the learning process.
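As a rough illustration of the normalization step, the sketch below applies min-max scaling in plain Python. The paper does not state which normalization variant was used, so min-max scaling is an assumption here, and the function name `min_max_normalize` is hypothetical. The sample values are the target latencies listed in Table 1.

```python
def min_max_normalize(values):
    """Scale a list of numbers linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Target latencies (ms) for devices 0-4, taken from Table 1.
latency_ms = [57.82, 22.40, 45.75, 19.40, 30.12]
normalized = min_max_normalize(latency_ms)
```

After scaling, the smallest latency maps to 0 and the largest to 1, which puts attributes with different units (for example SNR in dB and latency in ms) on a comparable footing before discretization.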

The proposed architecture incorporates edge com-

puting to handle latency-sensitive decisions closer to

the source, allowing the system to dynamically adapt

to varying network conditions. The Q-learning frame-

work employs a reward-driven mechanism, iteratively

updating Q-values using the Bellman equation to op-

timize handover decisions and resource allocation.

This integrated methodology effectively reduces la-

tency across diverse scenarios, demonstrating scal-

ability and efficiency in real-time 5G network opti-

mization.

3.1 Dataset Generation and Description

MATLAB simulations and Python-based processing

workflows were used in an integrated method to cre-

ate the dataset for 5G network latency optimization.

The absence of publicly available datasets created es-

pecially to solve latency optimization issues in 5G

contexts served as the main driving force behind this

hybrid approach. Realistic network circumstances

were largely simulated using MATLAB, which in-

cluded a number of crucial elements such as sig-

nal interference, environmental disturbances, conges-

tion levels and device mobility. By producing raw

data that represented important performance mea-

sures including latency, Signal-to-Noise Ratio (SNR),

Signal-to-Interference-plus-Noise Ratio (SINR) and

throughput, these simulations sought to replicate the

dynamic and intricate nature of actual 5G networks.

Python libraries were used for data formatting and

structuring after the MATLAB simulations, allowing

for smooth interaction with machine learning frame-

works and guaranteeing that the data was appropriate

for reinforcement learning applications. Before start-

ing model training, the data was also put through an

initial verification process to make sure it was accu-

rate and consistent.
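The verification step is not described in detail, so the following is only a sketch of what such a check might look like in Python. The field names `snr_db` and `latency_ms`, the function `verify_records`, and the specific checks (missing values, negative latency, duplicate rows) are all assumptions made for illustration.

```python
import math


def verify_records(records, required_fields):
    """Return (row_index, problem) pairs for rows that fail basic checks."""
    problems = []
    seen = set()
    for i, row in enumerate(records):
        # Flag fields that are absent or NaN.
        for field in required_fields:
            value = row.get(field)
            if value is None or (isinstance(value, float) and math.isnan(value)):
                problems.append((i, f"missing {field}"))
        # Latency can never be negative.
        latency = row.get("latency_ms")
        if isinstance(latency, (int, float)) and latency < 0:
            problems.append((i, "negative latency"))
        # Flag rows that repeat an earlier row exactly.
        key = tuple(sorted(row.items()))
        if key in seen:
            problems.append((i, "duplicate row"))
        seen.add(key)
    return problems


# Hypothetical records, not taken from the paper's dataset.
records = [
    {"snr_db": 12.0, "latency_ms": 20.0},
    {"snr_db": 12.0, "latency_ms": 20.0},
    {"snr_db": float("nan"), "latency_ms": -1.0},
]
problems = verify_records(records, ["snr_db", "latency_ms"])
```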

The dataset, which offers a comprehensive de-

scription of the network circumstances and device be-

havior, consists of twenty key attributes. Among these

variables are SINR, SNR, transmission power, net-

work load, ambient interference levels, latency, de-

vice mobility patterns and device proximity to base

stations. Each data point, which is a snapshot of cer-

tain network conditions and device parameters at a

specific time instance, provides a high-resolution im-

age of the interactions inside a 5G network. The Q-

learning model is trained on this comprehensive in-

formation, which gives it the contextual knowledge it

needs to make wise decisions. The dataset guarantees

that the model can generalize well across a variety of

scenarios, from rural low-traffic areas to urban high-

density areas, by precisely capturing the interaction of

important variables.

To provide thorough coverage of a broad range

of 5G network situations, the data generation proce-

dure was carefully designed. The dataset replicates

the subtleties of real-world network performance by

simulating a variety of situations, including variable

interference levels, varied mobility patterns, varying

congestion levels and variations in base station cov-

erage. This variety guarantees the Q-learning frame-

work’s flexibility in a variety of situations, enabling

it to learn and make the best choices in both normal

and unusual circumstances. Additionally, the dataset

documents latency in various scenarios and the vari-

ables that affect it, including transmission power lev-

els, device distance from the base station and network

congestion. The dataset is especially suitable for latency optimization tasks because it includes both latency metrics and the factors that influence them, which also keeps it relevant to real-world 5G applications like industrial automation and driverless cars.

Efficiency and focus were key considerations in

the design of the dataset’s generation process. Dur-

ing the simulation phase, duplicated and unnecessary

data were removed, preventing the need for intensive

preprocessing. Every attribute in the dataset had a

distinct and significant influence on the analytical re-

sults thanks to this proactive design. This method’s

effectiveness preserved the dataset’s relevance to the

optimization goals while also saving computational

resources. To get rid of any possible irregularities

that can interfere with learning, the dataset was fur-

ther checked for consistency across all attributes. A

clean and dependable input for model training was

made possible by the meticulous curation that made

sure the data was free of noise and irregularities.

The dataset was organized into several states that

correspond to various network situations in order to

aid reinforcement learning. Device mobility, distance

to base stations, interference levels, network load, and

channel quality indicators are just a few of the char-

acteristics that are contained inside each state. The

Q-learning model was able to assess actions in a dynamic environment thanks to this structured representation, which allowed it to adjust to shifting circumstances and determine the best latency reduction

techniques. Because of the dataset’s dynamic nature,

the model was able to simulate and evaluate real-

time situations, guaranteeing that the policies it had

learned were useful and efficient for application in the

actual world. The dataset’s extensive coverage of net-

work states and conditions not only facilitates reliable

training but also improves the model’s scalability and

performance in diverse 5G environments. Thus, this

extensive and painstakingly crafted dataset serves as

the foundation for the Q-learning architecture, allow-

ing for strong performance in 5G network latency op-

timization.

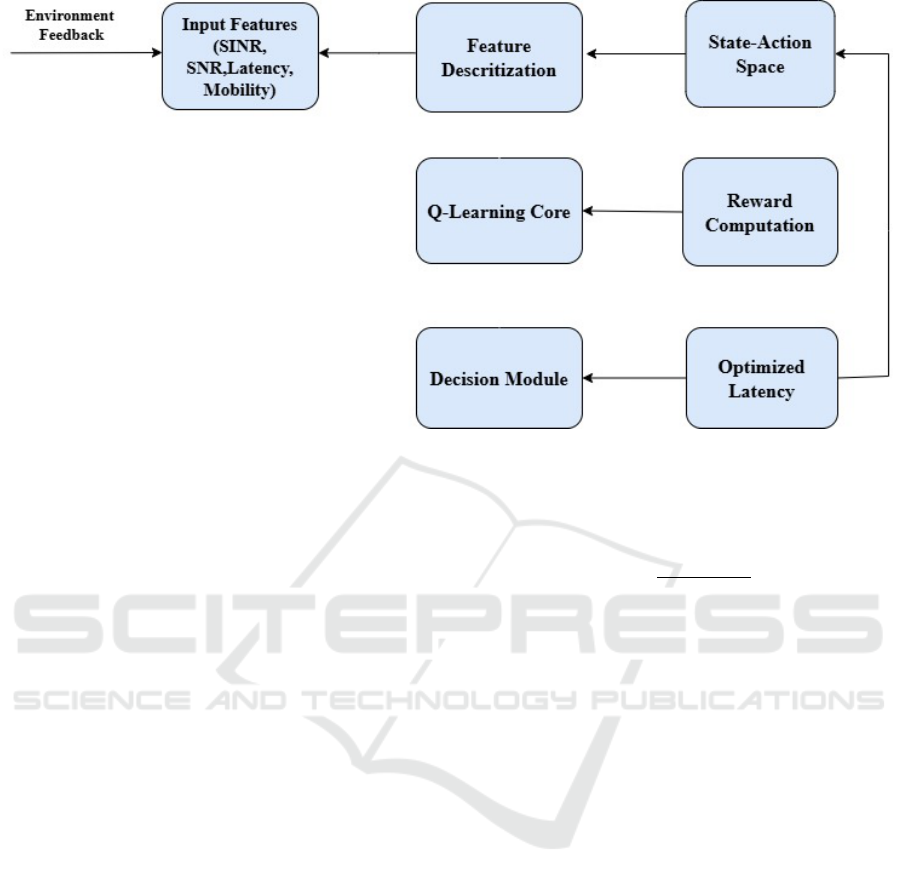

3.2 Proposed Architecture

The suggested architecture combines Q-learning with

an improved state-action space to achieve a notable

reduction in latency in 5G networks while guarantee-

ing scalability and efficiency. Using domain-specific

thresholds, continuous variables like SINR, latency,

and network load were discretized, lowering the di-

mensionality of the state space while preserving cru-

cial differences required for efficient learning. The

model can adjust to complex and dynamic network

conditions without incurring undue processing over-

head thanks to this discretization technique. In order

to determine the best methods for reducing latency,

the architecture uses a reinforcement learning frame-

work to assess state-action pairings in real-time. The

model dynamically modifies its strategies to guaran-

tee reliable performance in a variety of 5G contexts

by taking into account important contextual factors

like device mobility, network congestion, and signal

interference. Because of its versatility, the suggested solution, which is illustrated in Fig. 1, is a workable and dependable foundation for latency-sensitive applications in next-generation networks.
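Threshold-based discretization of this kind can be sketched with the standard-library `bisect` module. The threshold values below are hypothetical, since the paper does not list the domain-specific thresholds it used, and the helper names are illustrative only.

```python
from bisect import bisect_right

# Hypothetical bin edges; the paper does not publish its thresholds.
SINR_EDGES_DB = [0.0, 10.0, 20.0]
LATENCY_EDGES_MS = [10.0, 25.0, 50.0]
LOAD_EDGES = [0.3, 0.7]


def discretize(value, edges):
    """Return the index of the interval that `value` falls into."""
    return bisect_right(edges, value)


def discretize_sample(sinr_db, latency_ms, load):
    """Map one continuous measurement to a tuple of bin indices."""
    return (
        discretize(sinr_db, SINR_EDGES_DB),
        discretize(latency_ms, LATENCY_EDGES_MS),
        discretize(load, LOAD_EDGES),
    )


state_bins = discretize_sample(sinr_db=15.2, latency_ms=19.40, load=0.85)
```

With these edges, three continuous variables collapse to 4 x 4 x 3 = 48 combinations, which shows how discretization keeps the state space small enough for a tabular method.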

Along with the basic features of SINR, SNR, and

latency, the architecture also incorporates base station

data and device mobility to increase adaptability. The

reward function is designed to promote reliable con-

nections and low latency by penalizing unnecessary

handovers and encouraging efficient resource alloca-

tion. This architecture can be utilized to optimize la-

tency in real time in dynamic 5G applications due to

its scalability and efficiency. By focusing on impor-

tant network performance parameters and utilizing re-

inforcement learning techniques, the design provides

a solid foundation for latency optimization in real 5G

networks.

The Q-learning framework for latency optimiza-

tion was created to choose actions iteratively accord-

ing to the status of the network. Essential features

including Base Station ID, discretized SNR, and dis-

cretized latency were combined to generate states.

The Q-learning model was able to assess various net-

work circumstances and determine whether to remain

with the current base station or move to a different

one thanks to this state representation. In order to minimize latency, a set of actions was defined, each associated with a different degree of network load and delay reduction.
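One way to picture this state representation is as a small named tuple that can be flattened into a row index. This is a sketch only: the class `State`, the helper `state_index`, the bin counts, and the assumption that base station IDs are zero-based integers are not taken from the paper.

```python
from typing import NamedTuple


class State(NamedTuple):
    base_station_id: int  # assumed to be a zero-based integer
    snr_bin: int          # discretized SNR
    latency_bin: int      # discretized latency


def state_index(state, n_snr_bins, n_latency_bins):
    """Flatten a State into a single row index for a Q-table."""
    return (
        state.base_station_id * n_snr_bins + state.snr_bin
    ) * n_latency_bins + state.latency_bin


idx = state_index(State(base_station_id=2, snr_bin=1, latency_bin=3),
                  n_snr_bins=4, n_latency_bins=5)
```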

The Q-values, which indicate the anticipated fu-

ture reward for every state-action pair, were updated

using the Bellman equation. The Bellman equation

was used to repeatedly update the Q-value for a par-

ticular state-action pair by combining the immediate

reward with the anticipated future advantages. The

model was motivated to decrease latency in subse-

quent actions by the reward function, which has an

inverse relationship with latency. Higher values re-

warded lower latency, directing the agent toward the

best course of action. The following equation formal-

izes this process:

$$
Q(s_t, a_t) = Q(s_t, a_t) + \alpha \left[ R_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right] \tag{1}
$$
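The update in Equation (1) can be written as a few lines of Python. This is a minimal sketch: the dictionary-of-dictionaries Q-table layout, the function name `q_update`, and the numeric values for the learning rate α and discount factor γ are assumptions, since the paper does not report them.

```python
def q_update(q_table, state, action, reward, next_state, alpha, gamma):
    """Apply one tabular Q-learning update and return the new Q-value."""
    # Best value reachable from the next state: max over a' of Q(s_{t+1}, a').
    best_next = max(q_table[next_state].values())
    td_target = reward + gamma * best_next
    # Move the current estimate a fraction alpha toward the target.
    q_table[state][action] += alpha * (td_target - q_table[state][action])
    return q_table[state][action]


# Toy Q-table with two states and two actions (illustrative values only).
q_table = {
    "s0": {"Stay": 0.0, "Switch": 0.0},
    "s1": {"Stay": 1.0, "Switch": 2.0},
}
new_q = q_update(q_table, "s0", "Switch", reward=0.5,
                 next_state="s1", alpha=0.1, gamma=0.9)
```

In this toy case the target is 0.5 + 0.9 x 2.0 = 2.3, so the updated value is roughly 0.1 x 2.3 = 0.23.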

The model continuously improves its decision-making capacity to minimize latency by refining its Q-values over multiple epochs, as shown in Equation (1). The exploration rate is adjusted to strike a balance between exploiting known actions and exploring new ones. The exploration rate is initially set high and falls as the model improves its learned policy and concentrates more on choosing actions that minimize latency.

Figure 1: Proposed Architecture Diagram for Q-Learning.

4 IMPLEMENTATION

To construct the Q-learning framework, network operations were simulated over 500 epochs, each of which represented a different 5G network situation. The Q-table, which was initialized to zero to indicate the lack of prior knowledge regarding state-action pairs, is the central component of this system. During training, the Q-table was iteratively updated to reflect the best latency-reduction strategies. To guarantee algorithm compatibility, the dataset underwent preprocessing steps such as normalization and discretization. To facilitate effective state-action mapping, continuous data such as SINR, latency, and network load were transformed into discrete states. Three possible actions were associated with each state: "Adjust Power," which changes the transmission power level; "Switch," which moves the device to a different base station to improve signal quality; and "Stay," which keeps the present connection for stability. These actions were designed to deal with latency issues in a variety of dynamic network scenarios.
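A zero-initialized Q-table over discrete states and the three named actions might be set up as follows. The bin counts passed to `init_q_table` are placeholders, and the dictionary layout is one possible choice rather than the authors' implementation.

```python
from itertools import product

ACTIONS = ("Stay", "Switch", "Adjust Power")


def init_q_table(bins_per_feature):
    """Build a Q-table with every state-action value set to zero.

    `bins_per_feature` gives the number of discrete levels for each
    state feature; states are tuples of bin indices.
    """
    states = product(*(range(n) for n in bins_per_feature))
    return {state: {action: 0.0 for action in ACTIONS} for state in states}


# Placeholder sizes: three features with 3, 4, and 4 levels.
q_table = init_q_table((3, 4, 4))
```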

The framework’s reward function, mathematically

defined as:

$$
R = \frac{1}{\text{Latency} + \varepsilon} \tag{2}
$$

plays a critical role in guiding the learning pro-

cess. Here, R represents the reward for a specific

state-action pair, inversely correlating with the ob-

served latency to ensure higher rewards for lower la-

tency. The term Latency captures the network de-

lay in a given state, while ε, set at 0.0002, prevents

numerical instability by ensuring the denominator re-

mains non-zero, particularly in low-latency scenarios.

This formulation enabled the framework to prioritize

actions that significantly reduced delays while main-

taining numerical stability. Each update to the Q-table

was governed by the Bellman equation, progressively

refining the decision-making policy by incorporating

the rewards obtained during simulations.
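Equation (2) translates directly into code. In the sketch below the function name `reward` and the input check are additions for illustration; the value of ε is the one given in the text. The paper does not state the unit of latency used inside the reward, so the example values (in ms, from Table 1) are an assumption.

```python
EPSILON = 0.0002  # value of epsilon stated in the text


def reward(latency):
    """Inverse-latency reward from Equation (2)."""
    if latency < 0:
        raise ValueError("latency must be non-negative")
    return 1.0 / (latency + EPSILON)


# Device 3 in Table 1: target 19.40 ms, improved 16.49 ms.
r_before = reward(19.40)
r_after = reward(16.49)
```

Lower latency always yields a higher reward, and ε only matters when latency approaches zero, where it caps the reward at 1/ε = 5000.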

A dynamic exploration-exploitation technique im-

proved the framework’s decision-making and en-

sured balanced learning during training. In order to

gather thorough knowledge about the surroundings,

the agent first investigated a large variety of state-

action pairs. The exploration rate dropped as training

went on, enabling the agent to concentrate on using

learnt policies to make the best decisions. In order to

prevent local optima and achieve reliable latency re-

duction solutions, parameters including the learning

rate, discount factor, and exploration rate were care-

fully adjusted to guarantee smooth convergence to an

ideal policy.
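A common way to realize a decaying exploration rate is an epsilon-greedy policy with exponential decay. The paper does not say which schedule or parameter values were used, so the sketch below, including the names `choose_action` and `decayed_rate` and the start, end, and decay values, is an assumption.

```python
import random


def choose_action(q_row, exploration_rate, rng=random):
    """Pick a random action with probability `exploration_rate`, else the best one."""
    if rng.random() < exploration_rate:
        return rng.choice(list(q_row))      # explore
    return max(q_row, key=q_row.get)        # exploit


def decayed_rate(epoch, start=1.0, end=0.05, decay=0.99):
    """Exponentially decay the exploration rate, never dropping below `end`."""
    return max(end, start * decay ** epoch)


# Exploration rate at the start, middle, and end of a 500-epoch run.
schedule = [decayed_rate(e) for e in (0, 250, 499)]
greedy = choose_action({"Stay": 0.1, "Switch": 0.9, "Adjust Power": 0.4}, 0.0)
```

Keeping a small floor on the exploration rate lets the agent still occasionally try alternatives late in training, which is one way to reduce the risk of settling in a local optimum.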

Extensive experiments were carried out in a variety of network situations, such as different levels of

congestion, mobility patterns, and interference sce-

narios, in order to confirm the framework’s perfor-

mance. Metrics like latency reduction, decision cor-

rectness, and the agent’s capacity for environment

adaptation were the main emphasis of the evalua-

tion. The outcomes showed how effective the frame-

work was in reducing latency, outperforming tradi-

tional techniques by a considerable margin. The Q-

learning-based model demonstrated its flexibility un-

der real-world 5G network conditions by utilizing it-

erative Q-table updates and a well-structured reward

function. This demonstrated its dependability for

latency-sensitive applications like augmented reality

and driverless cars. This framework’s promise as a

foundation for upcoming 5G optimization initiatives

is highlighted by the smooth integration of preprocessing steps, strategic exploration, and adaptive

learning.

5 RESULTS AND ANALYSIS

The experimental results showed how well the Q-learning framework worked to lower latency in 5G networks. The method achieved a notable 58.16% reduction in latency, outperforming conventional WebRTC-based remote control (Mtowe and Kim, 2023). This was verified through extensive simulations comparing the target latency values with the improved latency obtained after model training. The latency improvement ranged from a minimum of 3 ms to a maximum of 15 ms, with an average of 9 ms

for all devices. Device ID 3 demonstrated a decrease

in latency from 19.40 ms to 16.49 ms, whilst De-

vice ID 0 demonstrated an improvement from 57.82

ms to 54.93 ms. These findings support the method’s

promise for real-time latency optimization in 5G set-

tings, especially for applications that are sensitive to

latency.

The Q-learning framework’s flexibility and re-

silience are demonstrated by the decrease in latency

across various devices. The enhanced latency fig-

ures attest to the model’s ability to efficiently opti-

mize resource allocation and handover choices across

a range of network scenarios. The agent was given

the best methods for reducing latency by the Q-table,

which was created through iterative training. The

dataset, which comprised a variety of network set-

tings and characteristics, provided a strong basis for

model training, guaranteeing that the outcomes were

indicative of actual situations. Table 1 further illus-

trates the efficacy of the framework by summarizing

the comparison between the target and improved la-

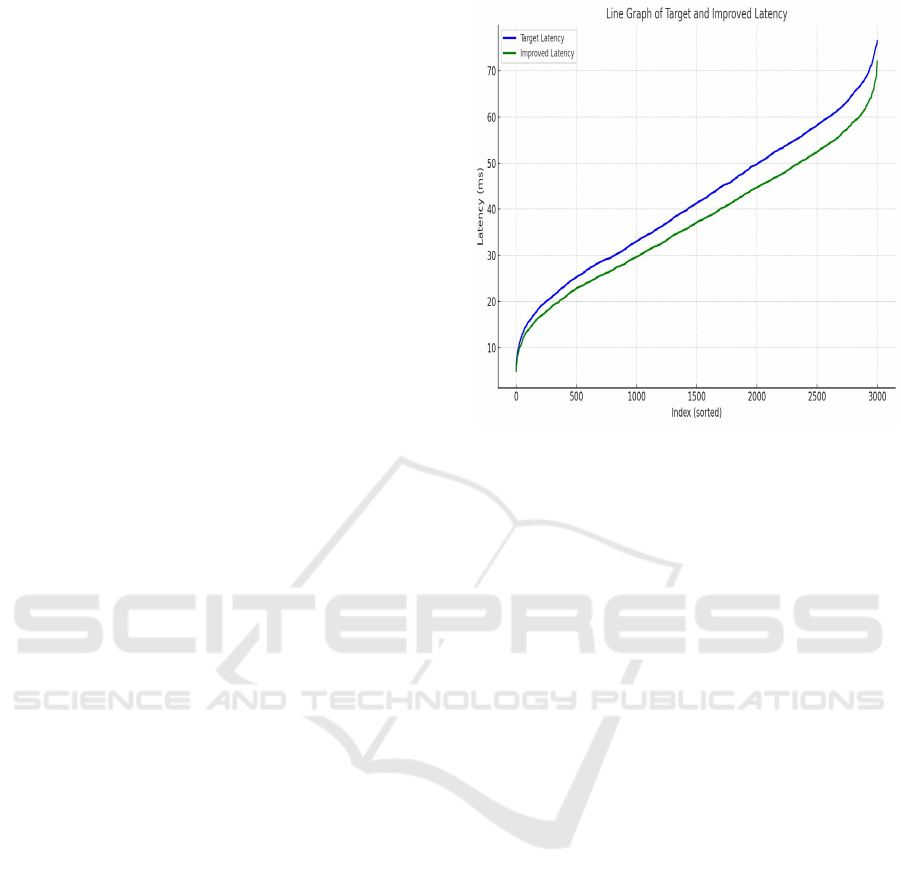

Figure 2: Line Graph of Target latency and Improved la-

tency

tency values for a subset of devices.

Figure 2 provides a graphical comparison of tar-

get latency (depicted by the blue line) and improved

latency (represented by the green line) achieved us-

ing the proposed framework. The x-axis corresponds

to the sorted sample index, while the y-axis repre-

sents latency in milliseconds. The consistent sep-

aration between the two lines highlights the frame-

work’s capability to significantly reduce latency, with

pronounced improvements observed at higher latency

ranges. These outcomes demonstrate the feasibility of

using the framework for real-time latency optimiza-

tion, making it particularly suitable for applications

like autonomous vehicles, augmented reality, and in-

dustrial automation.

The reduction in latency across a range of devices

showcases the Q-learning framework’s adaptability

and robustness. The improved latency values reflect

the framework’s ability to optimize resource alloca-

tion and handover decisions effectively under diverse

network conditions. Iterative training of the Q-table

allowed the agent to identify optimal actions for la-

tency minimization. The training dataset, composed

of various network configurations and parameters, en-

sured that the model’s performance was representa-

tive of real-world scenarios. A detailed comparison

of target and improved latency values (in ms) for se-

lected devices is presented in Table 1, further demon-

strating the framework’s efficiency.


Table 1: Latency Comparison: Target vs. Improved Latency

| Device ID | Target Latency (ms) | Improved Latency (ms) |
|---|---|---|
| 0 | 57.82 | 54.93 |
| 1 | 22.40 | 20.32 |
| 2 | 45.75 | 43.17 |
| 3 | 19.40 | 16.49 |
| 4 | 30.12 | 28.04 |
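The per-device gains implied by Table 1 can be computed with a short script. The numbers are copied from the table; the helper name `improvement` and the summary variable are illustrative.

```python
# (target_ms, improved_ms) per device ID, copied from Table 1.
TABLE_1 = {
    0: (57.82, 54.93),
    1: (22.40, 20.32),
    2: (45.75, 43.17),
    3: (19.40, 16.49),
    4: (30.12, 28.04),
}


def improvement(target, improved):
    """Return the absolute (ms) and relative (%) latency reduction."""
    delta = target - improved
    return delta, 100.0 * delta / target


rows = {device: improvement(*pair) for device, pair in TABLE_1.items()}
mean_delta_ms = sum(delta for delta, _ in rows.values()) / len(rows)

for device, (delta, pct) in rows.items():
    print(f"device {device}: -{delta:.2f} ms ({pct:.1f}%)")
```

For these five devices the absolute reduction lies between about 2.1 ms and 2.9 ms, and Device ID 3 shows the largest relative gain because its target latency is the lowest.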

6 CONCLUSION AND FUTURE SCOPE

The effectiveness of a Q-learning-based architecture

for 5G network latency optimization is demonstrated

in this study. In dynamic network scenarios, the

system employs preprocessing techniques and a cus-

tomized dataset to efficiently reduce latency and en-

hance decision-making. Streamlining the state space

enhances the learning process and computational effi-

ciency, making the proposed model suitable for real-

time applications in 5G environments. The integra-

tion of edge computing addresses the demands of

latency-sensitive tasks, such as driverless cars, smart

cities, and augmented reality systems. The architec-

ture leverages adaptive reinforcement learning strate-

gies to handle varying network conditions and opti-

mize resource allocation, establishing a dependable

framework for latency-critical applications in next-generation networks.

Future studies can enhance scalability and accu-

racy by incorporating parameters like multi-cell inter-

ference, user behavior, and advanced machine learn-

ing methods, while emphasizing standardized proto-

cols and security for broader 5G adoption.

REFERENCES

Abouaomar, A., Cherkaoui, S., Mlika, Z., and Kobbane,

A. (2021a). Resource provisioning in edge comput-

ing for latency-sensitive applications. IEEE Internet

of Things Journal, 8(14):11088–11099.

Abouaomar, A., Cherkaoui, S., Mlika, Z., and Kobbane,

A. (2021b). Resource provisioning in edge comput-

ing for latency-sensitive applications. IEEE Internet

of Things Journal, 8(14):11088–11099.

Abouaomar, A., Cherkaoui, S., Mlika, Z., and Kobbane, A.

(2022). Resource provisioning in edge computing for

latency sensitive applications.

Coelho, C. N., Kuusela, A., Li, S., Zhuang, H., Ngadiuba,

J., Aarrestad, T. K., Loncar, V., Pierini, M., Pol, A. A.,

and Summers, S. (2021). Automatic heterogeneous

quantization of deep neural networks for low-latency

inference on the edge for particle detectors. Nature

Machine Intelligence, 3(8):675–686.

Djigal, H., Xu, J., Liu, L., and Zhang, Y. (2022). Machine

and deep learning for resource allocation in multi-

access edge computing: A survey. IEEE Communi-

cations Surveys & Tutorials, 24(4):2449–2494.

Hartmann, M., Hashmi, U. S., and Imran, A. (2022).

Edge computing in smart health care systems: Re-

view, challenges, and research directions. Transac-

tions on Emerging Telecommunications Technologies,

33(3):e3710.

Hassan, N., Yau, K.-L. A., and Wu, C. (2019). Edge computing in 5G: A review. IEEE Access, 7:127276–127289.

Hu, Y. C., Patel, M., Sabella, D., Sprecher, N., and Young,

V. (2015). Mobile edge computing—a key technology

towards 5G. ETSI white paper, 11(11):1–16.

Intharawijitr, K., Iida, K., and Koga, H. (2017a). Simula-

tion study of low latency network architecture using

mobile edge computing. IEICE TRANSACTIONS on

Information and Systems, 100(5):963–972.

Intharawijitr, K., Iida, K., Koga, H., and Yamaoka, K.

(2017b). Practical enhancement and evaluation of a

low-latency network model using mobile edge com-

puting. In 2017 IEEE 41st annual computer software

and applications conference (COMPSAC), volume 1,

pages 567–574. IEEE.

Kodavati, B. and Ramarakula, M. (2023). Latency reduction in heterogeneous 5G networks integrated with reinforcement algorithm.

Liang, S., Jin, S., and Chen, Y. (2024). A review of edge

computing technology and its applications in power

systems. Energies, 17(13).

Liu, J., Luo, K., Zhou, Z., and Chen, X. (2018). ERP: Edge resource pooling for data stream mobile computing. IEEE Internet of Things Journal, 6(3):4355–4368.

Mtowe, D. P. and Kim, D. M. (2023). Edge-computing-

enabled low-latency communication for a wireless

networked control system. Electronics, 12(14).

Parvez, I., Rahmati, A., Guvenc, I., Sarwat, A. I., and Dai, H. (2018). A survey on low latency towards 5G: RAN, core network and caching solutions. IEEE Communications Surveys & Tutorials, 20(4):3098–3130.

Shokrnezhad, M., Taleb, T., and Dazzi, P. (2024). Double deep Q-learning-based path selection and service placement for latency-sensitive beyond 5G applications. IEEE Transactions on Mobile Computing, 23(5):5097–5110.

Singh, S., Chiu, Y.-C., Tsai, Y.-H., and Yang, J.-S. (2016). Mobile edge fog computing in 5G era: Architecture and implementation. In 2016 International Computer Symposium (ICS), pages 731–735.

Sumathi, D., Karthikeyan, S., Sivaprakash, P., and Selvaraj, P. (2022). Chapter eleven - 5G communication for edge computing. In Raj, P., Saini, K., and Surianarayanan, C., editors, Edge/Fog Computing Paradigm: The Concept Platforms and Applications, volume 127 of Advances in Computers, pages 307–331. Elsevier.

Toka, L. (2021). Ultra-reliable and low-latency computing in the edge with Kubernetes. Journal of Grid Computing, 19(3):31.

Wang, T. (2020). Application of edge computing in 5G communications. In IOP Conference Series: Materials Science and Engineering, volume 740, page 012130. IOP Publishing.

Wu, Y., Zhang, X., Ren, J., Xing, H., Shen, Y., and Cui, S.

(2024). Latency-aware resource allocation for mobile

edge generation and computing via deep reinforce-

ment learning.

Yajnanarayana, V., Rydén, H., and Hévizi, L. (2020). 5G handover using reinforcement learning.

Zhang, J., Xie, W., Yang, F., and Bi, Q. (2016). Mobile edge computing and field trial results for 5G low latency scenario. China Communications, 13(2):174–182.