5G and IoT Integration: Optimizing Connectivity for Massive Machine-Type Communication (mMTC)

Ananya M Patil, Akhila Jaya, Tanuja Tondikatti, Janvi Chinchansur, Sandeep Kulkarni and Vijayalakshmi M

School of Computer Science and Engineering, KLE Technological University, Hubballi, India

Keywords:

Bandwidth, IoT, Latency, LSTM, mMTC, Network Slicing.

Abstract:

Future wireless cellular networks are expected not only to improve broadband access for human-centric applications but also to enable massive connectivity for tens of billions of devices. Internet of Things (IoT) devices are central to realizing massive machine-type communication (mMTC) in 5G, which is expected to handle billions of IoT devices. This research focuses on optimizing connectivity and resource allocation for IoT devices in 5G networks to ensure scalability and efficiency, particularly in smart cities, healthcare, and industrial IoT applications. This study proposes a predictive resource demand model combined with dynamic network slicing. Using an ensemble of Long Short-Term Memory (LSTM) models, resource demands are forecasted, and tailored slices are deployed to meet the specific needs of IoT applications. Simulation results demonstrate that the proposed approach reduces latency and improves throughput compared to traditional resource allocation methods. Furthermore, the prediction model achieved a threshold-based accuracy (predictions within 10% of the actual value) of 96.15% for latency estimation and 81.14% for bandwidth forecasting, highlighting the effectiveness of the approach. This research provides a scalable and efficient framework for IoT connectivity in 5G networks, paving the way for enhanced performance in critical applications such as smart cities and healthcare.

1 INTRODUCTION

The fifth generation of wireless communication technology, or 5G, promises very high data rates, low latency, and extensive connectivity, with the potential to transform industries and enable cutting-edge applications. An innovative development in wireless communication, mMTC (Bockelmann et al., 2018) in 5G is the foundation of the Internet of Things, enabling seamless connectivity for millions of low-power devices in smart cities, industries, and beyond. The IoT paradigm is the seamless integration of potentially any object with the Internet (Li et al., 2017). Globally, there are currently about 21.7 billion active connected devices. More than 30 billion IoT connections are anticipated by 2025, an average of nearly four IoT devices per person (Lueth, 2020). This growth creates a strong need for optimized connectivity among IoT devices. Optimizing connectivity for mMTC is essential to handle the massive number of IoT devices efficiently, ensuring reliable communication, minimizing network congestion (Najm et al., 2019), and conserving energy.

5G wireless networks are user-centric; they must allocate resources efficiently to meet Quality of Service (QoS) requirements (Aljiznawi et al., 2017). However, effective resource distribution remains a significant obstacle to meeting the growing demand on 5G cellular networks (Tayyaba and Shah, 2019). Efficient resource allocation (Rehman et al., 2020) is key to optimizing connectivity in mMTC, enabling reliable and scalable communication for massive IoT device networks in 5G. Optimizing connectivity for mMTC (Pons et al., 2023) ensures that these devices remain reliably connected to the network, even under conditions of extreme device density and diverse operational requirements. As the integration of 5G networks and IoT continues to transform communication environments, optimizing connectivity becomes crucial (Imianvan and Robinson, 2024). With 5G, mobile virtual network operators can share the physical network infrastructure thanks to the Radio Access Network (RAN) slicing feature (Shi et al., 2020a; Foukas et al., 2017). Network slicing replaces the static distribution of resources (such as frequency, power, and processing resources) by reserving them dynamically in response to user demand.

Patil, A. M., Jaya, A., Tondikatti, T., Chinchansur, J., Kulkarni, S. and M, V.

5G and IoT Integration: Optimizing Connectivity for Massive Machine-Type Communication (mMTC).

DOI: 10.5220/0013632500004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 574-580

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

5G is expected to handle billions of IoT devices in mMTC. This research focuses on optimizing connectivity, resource allocation, and power management for IoT devices in 5G networks to ensure scalability and efficiency, particularly in smart cities, healthcare, and industrial IoT applications. Optimizing connectivity in mMTC is critical to meeting the demands of the billions of IoT devices expected to operate within 5G networks. These devices often have varying requirements for data rate, latency, and reliability, making efficient resource allocation essential. The high density of connected devices can lead to network congestion, interference, and power constraints, posing significant challenges. By leveraging advanced techniques such as machine learning, dynamic spectrum management, and predictive resource allocation, mMTC can ensure seamless device communication, enhance network reliability, and support applications in smart cities, healthcare, and industrial IoT.

The primary objective of this work is to explore and apply suitable machine learning algorithms to a resource allocation dataset in order to develop a predictive resource demand model, combined with dynamic network slicing, that forecasts resource demands in 5G networks. By accurately predicting the required bandwidth and latency for IoT applications, the proposed model optimizes connectivity and resource allocation, ensuring efficient performance. The approach not only reduces latency and improves throughput but also ensures scalability and adaptability to dynamic network conditions.

This paper is organized as follows. Section 2 provides a detailed review of related work, highlighting existing approaches for optimizing connectivity for mMTC. Section 3 then discusses in depth the proposed methodology for predicting resource demands and performing network slicing. Section 4 evaluates the effectiveness of these predictions by analyzing performance metrics such as accuracy, prediction error, and computational efficiency. Finally, Section 5 concludes the paper.

2 BACKGROUND

Emerging technologies such as 5G, IoT, AI, and ML are transforming resource allocation and network optimization to address the growing demands of modern services and large-scale IoT applications. Intelligent Decision Models (IDM), based on AI and ML, enable efficient management of 5G resources by dynamically handling network traffic, user behavior, and configuration changes. When deployed together with Software-Defined Networking (SDN) and Network Function Virtualization (NFV), such models enable decentralized resource distribution, achieving an efficiency of up to 91.85% while minimizing operations and maintenance issues (Logeshwaran et al., 2023a).

Traditional resource allocation algorithms such as Water-Filling and Round Robin are simple and, in the case of Round Robin, fair, but they face issues of scalability, latency, and bandwidth. Advanced models, such as the Energy-Efficient Resource Allocation Model (EERAM), show a marked improvement in energy efficiency (92.97%) and reduce end-to-end latency by 25.47% in device-to-device (D2D) communication within 5G Wireless Personal Area Networks (Logeshwaran et al., 2023b). These models use techniques such as power control, frequency selection, and priority-based allocation to mitigate interference.
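The two classical baselines can be sketched in a few lines. The code below is the textbook water-filling rule, not code from the cited works: given a total power budget P and channels with gain-to-noise ratios g_i (assumed positive), channel i receives p_i = max(0, mu - 1/g_i), where the water level mu is chosen so that the powers sum to P; here mu is found by bisection. The hypothetical `round_robin` helper shows the strict rotation that Round Robin uses, which is fair in time but ignores channel quality.

```python
from itertools import cycle, islice


def water_filling(gains, total_power, iters=100):
    """Textbook water-filling: p_i = max(0, mu - 1/g_i) with sum(p_i) == total_power.

    gains are per-channel gain-to-noise ratios (assumed > 0). The total power used
    grows monotonically with the water level mu, so mu is found by bisection.
    """
    floors = [1.0 / g for g in gains]  # "floor height" of each channel
    lo, hi = 0.0, max(floors) + total_power  # at hi, at least total_power is used
    for _ in range(iters):
        mu = (lo + hi) / 2
        used = sum(max(0.0, mu - f) for f in floors)
        if used > total_power:
            hi = mu
        else:
            lo = mu
    mu = (lo + hi) / 2
    return [max(0.0, mu - f) for f in floors]


def round_robin(users, num_slots):
    """Hypothetical helper: hand out time slots to users in strict rotation."""
    return list(islice(cycle(users), num_slots))


if __name__ == "__main__":
    powers = water_filling([2.0, 1.0, 0.25], total_power=2.0)
    print([round(p, 3) for p in powers])  # [1.25, 0.75, 0.0]
    print(round_robin(["dev1", "dev2", "dev3"], 5))  # ['dev1', 'dev2', 'dev3', 'dev1', 'dev2']
```

Water-filling maximizes sum capacity by giving more power to stronger channels and none to very weak ones (the third channel above gets nothing), so it is efficient but not fair; Round Robin makes the opposite trade-off.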

Machine learning can predict signal strength, bandwidth requirements, and traffic patterns to optimize 5G networks. Algorithms such as random forests and neural networks enhance Quality of Service and reduce latency, as seen in smart city and industrial IoT deployments. Advanced reinforcement learning frameworks such as Proximal Policy Optimization (PPO) can effectively manage real-time constraints (Shi et al., 2020b), and Asynchronous Advantage Actor-Critic (A3C) models enhance throughput in mMTC (Yin et al., 2022). Double Deep Q-Network (DDQN) frameworks provide hierarchical resource distribution for delay-sensitive IoT systems, ensuring ultra-low latency and reliable communication (Firouzi and Rahmani, 2024).

Federated Learning-based Resource Allocation Models address privacy and energy efficiency in IoT systems by processing data in a decentralized manner (Nguyen et al., 2021). Multi-Agent Deep Deterministic Policy Gradient (MADDPG) frameworks allow efficient spectrum sharing as well as power management, which can enhance cooperation among devices in dense 5G environments (Hu et al., 2024).

These innovations affect industries ranging from healthcare to education and industrial automation. In healthcare, 5G and IoT enable remote patient monitoring and predictive analytics. In education, they support intelligent classrooms through augmented and virtual reality experiences. These technologies also improve energy and spectral efficiency and address key problems such as latency, paving the way for the progression to sixth-generation (6G) networks (Zheng et al., 2023).

Despite this strong progress, challenges remain, such as scalability to billions of heterogeneous devices, real-time adaptivity, and ultra-low power consumption. Security, privacy, and equitable access are considered critical problems, especially for underserved communities, alongside ethical concerns such as data privacy and the digital divide. Solving these challenges is essential for the successful deployment and integration of these transformative technologies (Chen et al., 2019; Nguyen et al., 2021).

3 PROPOSED WORK

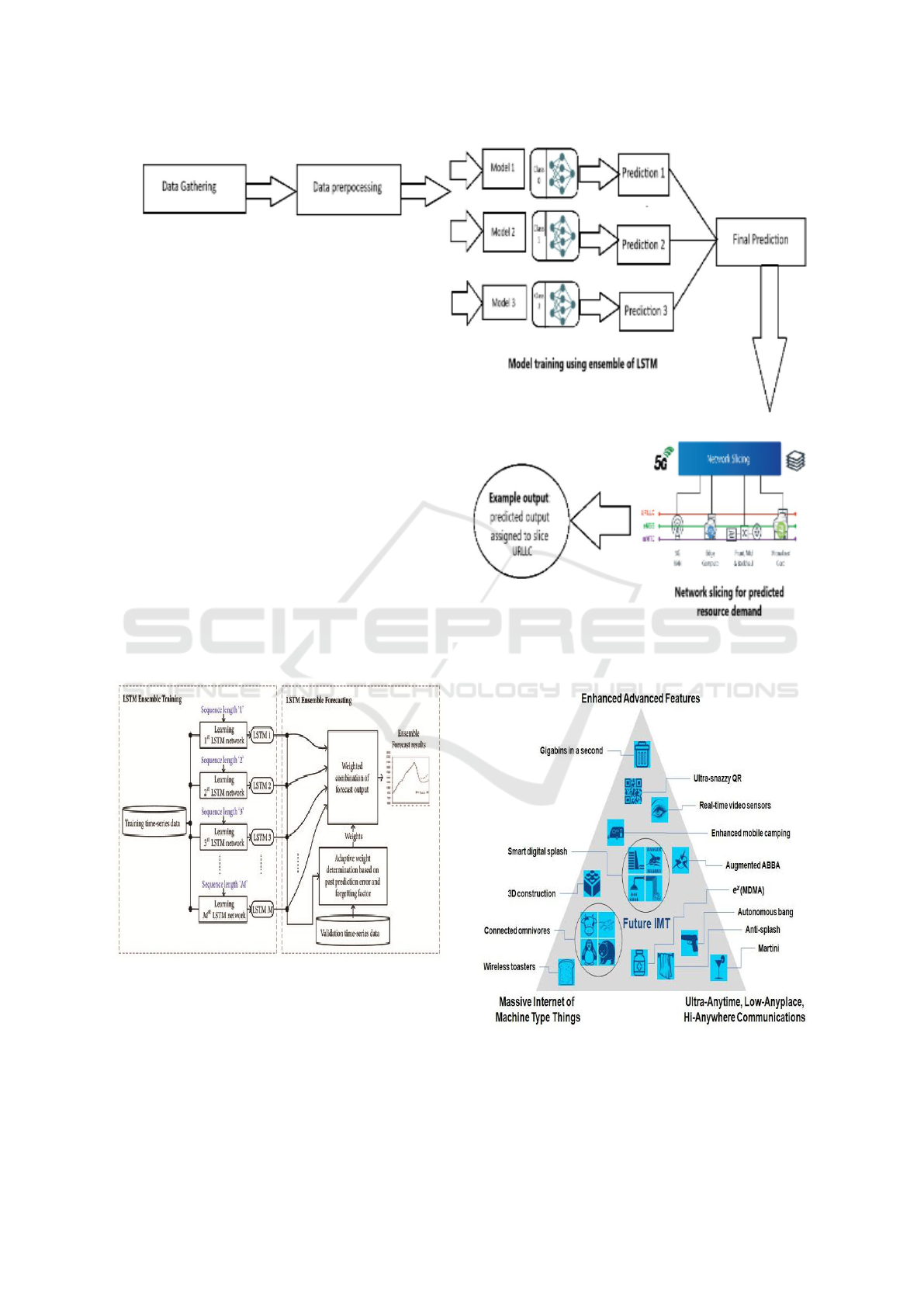

The proposed model consists of the following modules: data gathering, preprocessing, model training, model evaluation, and network slicing. Together they form an integrated system for processing data, training predictive models, evaluating performance, and implementing dynamic resource allocation, as illustrated in Fig. 1.

1. Preprocessing: The preprocessing phase involves cleaning historical IoT traffic data. Missing values in the dataset are identified using the isnull().sum() function, which counts the null entries in each feature. Interpolation or imputation then handles absent or corrupted data. Next, the features are normalized using Min-Max normalization, x' = (x - min(x)) / (max(x) - min(x)), which maps every feature to [0, 1] so that inputs lie on a comparable scale and training is more stable and faster. The normalized data is transformed into time-series sequences using a sliding-window technique: each sequence is a fixed-length window of past observations used to forecast future resource demand. Finally, the data is split into training and testing sets.
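The preprocessing steps above can be sketched with pandas and NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the function name `preprocess`, the column names in the example, the linear interpolation method, and the 80/20 chronological split are all hypothetical choices. Each sample holds `window` past steps of every feature, and its target is the step that follows.

```python
import numpy as np
import pandas as pd


def preprocess(df, feature_cols, window, test_frac=0.2):
    """Hypothetical sketch: missing values -> Min-Max scaling -> sliding windows -> split."""
    # 1. Count missing values per feature (the isnull().sum() check).
    missing = df[feature_cols].isnull().sum()

    # 2. Fill interior gaps by linear interpolation; gaps at the edges take the nearest value.
    data = df[feature_cols].interpolate(method="linear").ffill().bfill()

    # 3. Min-Max normalization to [0, 1]: x' = (x - min) / (max - min).
    mins, maxs = data.min(), data.max()
    span = maxs - mins
    span = span.mask(span == 0, 1.0)  # constant columns would otherwise divide by zero
    scaled = ((data - mins) / span).to_numpy(dtype=float)

    # 4. Sliding window: sample i holds steps i..i+window-1; its target is step i+window.
    X = np.stack([scaled[i:i + window] for i in range(len(scaled) - window)])
    y = scaled[window:]

    # 5. Chronological split: no shuffling, so the test set lies after the training set in time.
    split = int(len(X) * (1 - test_frac))
    return missing, (X[:split], y[:split]), (X[split:], y[split:]), (mins, maxs)


if __name__ == "__main__":
    df = pd.DataFrame({
        "latency_ms": [30, None, 34, 36, 40, 38, 35, 33, 31, 30],
        "bandwidth_mbps": [10, 12, 11, None, 15, 14, 13, 12, 11, 10],
    })
    missing, (X_tr, y_tr), (X_te, y_te), _ = preprocess(
        df, ["latency_ms", "bandwidth_mbps"], window=3)
    print(missing.tolist())  # [1, 1]
    print(X_tr.shape, X_te.shape)  # (5, 3, 2) (2, 3, 2)
```

Following the order described in the text, this sketch fits the scaler on the full series before splitting; fitting the minimum and maximum on the training portion only would keep test-set statistics from leaking into training.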

2. Model training: LSTM networks are designed to handle sequential data and capture temporal dependencies. They are therefore well suited to forecasting resource demands in IoT traffic, which often exhibits time-varying patterns. An ensemble of LSTM models is used to improve prediction accuracy and robustness by combining the outputs of multiple models with diverse configurations.

As shown in Fig. 2, the ensemble operates in two primary phases: LSTM ensemble training and forecasting. In the first phase, multiple LSTM networks are trained independently, each with its own sequence length. This setup ensures that each LSTM captures the temporal features specific to its assigned sequence length, so that together the networks learn patterns at various time resolutions; this is the main benefit of the LSTM ensemble. The forecasting phase integrates the outputs of the trained LSTM networks through a weighted combination. Each model generates its own prediction of the resource demand at time t, denoted $R_1(t)$, $R_2(t)$, $R_3(t)$, and so on. Once the trained models have produced their predictions, all outputs are passed to an aggregation layer, which combines them as the weighted average given in Equation (1):

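$$\hat{R}(t) = \frac{1}{N} \sum_{i=1}^{N} w_i \cdot R_i(t) \qquad (1)$$

where $N$ is the number of LSTM models in the ensemble, $w_i$ is the weight assigned to model $i$, and $R_i(t)$ is that model's predicted resource demand at time $t$.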
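Equation (1) can be sketched in NumPy as follows. The paper does not state how the weights w_i are obtained, so `inverse_error_weights` is a hypothetical choice that favors models with lower validation error and scales the weights to sum to N, which makes Eq. (1) a proper weighted mean. The sketch also assumes that the forecasts of models trained with different sequence lengths have already been aligned to the same time steps.

```python
import numpy as np


def inverse_error_weights(val_errors):
    """Hypothetical weighting: lower validation error -> larger w_i.

    Weights are scaled to sum to N so that (1/N) * sum_i w_i * R_i(t) is a weighted mean.
    """
    inv = 1.0 / np.asarray(val_errors, dtype=float)
    return inv / inv.sum() * len(inv)


def ensemble_forecast(predictions, weights):
    """Eq. (1): R_hat(t) = (1/N) * sum_i w_i * R_i(t).

    predictions: shape (N, T), row i holds model i's forecasts R_i(t) for T time steps.
    weights: shape (N,).
    """
    preds = np.asarray(predictions, dtype=float)
    w = np.asarray(weights, dtype=float)
    n_models = preds.shape[0]
    return (w[:, None] * preds).sum(axis=0) / n_models


if __name__ == "__main__":
    # Forecasts of three LSTMs (e.g. trained on different sequence lengths) for 4 time steps.
    R = np.array([[10.0, 12.0, 11.0, 13.0],
                  [11.0, 12.5, 10.5, 12.0],
                  [9.0, 11.0, 12.0, 14.0]])
    w = inverse_error_weights([0.5, 1.0, 1.0])  # weights 1.5, 0.75, 0.75
    print(ensemble_forecast(R, w))
```

With all weights equal to 1, Eq. (1) reduces to the plain ensemble average.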

3. Model Evaluation: Model evaluation assesses how accurately the LSTM ensemble predicts resource allocation metrics such as bandwidth, latency, and signal-to-noise ratio (SNR) for IoT applications in 5G networks. The key evaluation metrics are Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE), which quantify prediction accuracy. The model parameters are initialized, and Mean Squared Error (MSE) is defined as the loss function for measuring prediction error during training. The Adam optimizer is used with a learning rate of 0.001 for stable convergence. The LSTM is trained with a batch size of 32 for 40 epochs and learns to map input sequences to predicted resource demands.
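The error measures named above can be written directly in NumPy. This sketch uses the standard definitions; the `eps` guard in `mape` is an assumption added to avoid division by zero and is not described in the text.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean Squared Error: the training loss described above."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean((y_true - y_pred) ** 2))


def mae(y_true, y_pred):
    """Mean Absolute Error, in the units of the target."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))


def mape(y_true, y_pred, eps=1e-8):
    """Mean Absolute Percentage Error in percent; eps guards against zero targets."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    denom = np.maximum(np.abs(y_true), eps)
    return float(np.mean(np.abs(y_true - y_pred) / denom) * 100)


if __name__ == "__main__":
    actual = [10.0, 20.0, 40.0]      # e.g. required bandwidth in Mbps
    predicted = [11.0, 18.0, 40.0]
    print(mse(actual, predicted), mae(actual, predicted), mape(actual, predicted))
```

Because Min-Max scaling maps the minimum of each feature to 0, MAPE is best computed on de-normalized values; on the scaled targets, the percentage error of near-zero points can dominate the average.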

4. Network Slicing: Network slicing provides tailored solutions for a wide range of applications, including Enhanced Mobile Broadband (eMBB), Ultra-Reliable Low-Latency Communications (URLLC), and Massive Machine-Type Communications (mMTC), by separating and optimizing a network slice for each use case. For mMTC, network slicing goes a step further by dividing the mMTC slice into sub-slices that fulfil the unique needs of distinct IoT applications. This enables precise resource allocation, allowing low-power devices, periodic sensors, and event-driven devices to coexist without interference while performance is optimized for their specific requirements.

This approach combines several systematic steps so that the outputs of the predictive resource demand models drive the dynamic creation and management of network slices. Predicted parameters such as bandwidth and delay provide important information about the requirements of different IoT applications (such as industrial IoT, smart cities, or healthcare) and act as input for determining the slice configuration. The requested slices fall under eMBB, URLLC, and mMTC, and the predictions serve as the demand submitted for each slice. eMBB slices are intended to provide fast data access; they therefore require high bandwidth and suit applications that need quick access to data, such as video streaming. URLLC slices require low latency and high reliability; they are key for applications such as self-driving cars or remote surgery, where delays could prove catastrophic. mMTC slices provide massive device connectivity and energy efficiency, so they suit applications such as smart cities or environmental monitoring, where large numbers of IoT devices must operate reliably.

Figure 1: Architecture of model (Baccouche et al., 2020; Monem, 2021)

Figure 2: Ensemble of LSTM (Choi and Lee, 2018)

Figure 3: Network slicing (@rvwomersley, 2018)
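The mapping from predicted demand to slice can be sketched as a rule-based classifier. The paper does not publish its decision rules, so every threshold, the `Demand` data class, and the application-type labels below are hypothetical placeholders chosen only to mirror the slice descriptions in the network slicing step: URLLC for emergency traffic or very low latency, eMBB for high bandwidth, mMTC for very low bandwidth, and a default slice otherwise.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical values for illustration only; the paper does not publish its thresholds.
URLLC_MAX_LATENCY_MS = 10.0
EMBB_MIN_BANDWIDTH_MBPS = 10.0
MMTC_MAX_BANDWIDTH_MBPS = 1.0
EMERGENCY_APPS = {"Emergency Call"}  # hypothetical application-type label


@dataclass
class Demand:
    app_type: str
    bandwidth_mbps: float  # predicted required bandwidth
    latency_ms: float      # predicted latency


def assign_slice(d: Demand) -> str:
    """Map one predicted demand to a slice, testing the strictest requirement first."""
    if d.app_type in EMERGENCY_APPS or d.latency_ms <= URLLC_MAX_LATENCY_MS:
        return "URLLC"
    if d.bandwidth_mbps >= EMBB_MIN_BANDWIDTH_MBPS:
        return "eMBB"
    if d.bandwidth_mbps <= MMTC_MAX_BANDWIDTH_MBPS:
        return "mMTC"
    return "default"


if __name__ == "__main__":
    demands = [
        Demand("Emergency Call", 0.5, 40.0),
        Demand("Video Streaming", 25.0, 60.0),
        Demand("Environmental Sensor", 0.1, 200.0),
        Demand("Voice Call", 2.0, 50.0),
    ]
    labels = [assign_slice(d) for d in demands]
    print(labels)  # ['URLLC', 'eMBB', 'mMTC', 'default']
    print(Counter(labels))  # per-slice counts, i.e. a slice distribution
```

Checking URLLC first encodes the priority given to latency-critical traffic such as emergency services; counting the assigned labels yields a per-slice distribution.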

Implementation also raised practical problems. It was very difficult to procure high-quality, live IoT traffic data for testing in this project. Such data would benefit the training and validation of predictive models, but it is hard to obtain because of privacy concerns and data-sharing constraints. Another important challenge was tuning the LSTM model parameters. The performance of LSTM models is highly sensitive to hyperparameters such as the number of layers, the number of hidden units, the learning rate, and the sequence length. Finding the configuration that gives the best prediction accuracy for resource demand in 5G networks requires extensive experimentation and substantial computational resources.

4 RESULTS AND ANALYSIS

The Resource Allocation dataset of 400 tuples is expanded into an augmented set of 2,000 tuples, enriching its volume and diversity. Each tuple represents a single instance of one user's usage scenario on the network and involves nine key attributes, including timestamp, user ID, application type, signal strength, latency, required bandwidth, allocated bandwidth, and resource allocation. These attributes capture network performance and application-dependent resource distribution for applications such as video calls, voice calls, video streaming, emergency calls, and online gaming. The dataset reflects realistic 5G network conditions: signal strength is given in dBm, latency in milliseconds, and bandwidth in megabits per second (Mbps) or kilobits per second (Kbps). Resource allocation is expressed as a percentage indicating how efficiently resources are being used. The data augmentation strategies produced simulated conditions that provide a solid base for training the machine learning models to predict bandwidth and latency, which in turn informed the strategy for network slicing and resource allocation.
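The text does not specify the augmentation strategy used to grow the dataset from 400 to 2,000 tuples. One simple possibility, shown here purely as a hypothetical sketch, keeps each original row and adds four copies whose numeric fields are perturbed by small multiplicative Gaussian noise (a 5% standard deviation, an assumed value), while categorical fields such as application type stay unchanged. The field names are also assumptions.

```python
import numpy as np

# Hypothetical numeric field names; categorical fields (e.g. application type) are copied as-is.
NUMERIC_FIELDS = ["signal_dbm", "latency_ms", "required_mbps", "allocated_mbps", "allocation_pct"]


def augment(rows, copies=4, rel_noise=0.05, seed=0):
    """Keep every original row and append `copies` jittered variants of it.

    With copies=4, 400 rows become 400 * (1 + 4) = 2,000 rows.
    """
    rng = np.random.default_rng(seed)
    out = []
    for row in rows:
        out.append(dict(row))  # the original tuple is kept unchanged
        for _ in range(copies):
            new = dict(row)
            for key in NUMERIC_FIELDS:
                new[key] = float(row[key] * (1.0 + rng.normal(0.0, rel_noise)))
            # Clip to physically plausible ranges.
            for key in ("latency_ms", "required_mbps", "allocated_mbps"):
                new[key] = max(new[key], 0.0)
            new["allocation_pct"] = min(max(new["allocation_pct"], 0.0), 100.0)
            out.append(new)
    return out


if __name__ == "__main__":
    base = [{"app": "Video Call", "signal_dbm": -75.0, "latency_ms": 30.0,
             "required_mbps": 10.0, "allocated_mbps": 15.0, "allocation_pct": 70.0}]
    print(len(augment(base * 400)))  # 2000
```

Because each copy is perturbed independently, this kind of augmentation enlarges the pool of tuples but does not by itself create new temporal structure for a sequence model.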

Table 1: 5G Resource Allocation Dataset (Omar Sobhy, 2023)

| Feature | Example tuple |
|---|---|
| Timestamp | 09-03-2023 10:00:00 |
| User ID | User 1 |
| Application Type | Video Call |
| Signal Strength | -75 dBm |
| Latency | 30 ms |
| Required Bandwidth | 10 Mbps |
| Allocated Bandwidth | 15 Mbps |
| Resource Allocation | 70% |
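Because Table 1 stores values as strings with units, and bandwidth may be given in either Mbps or Kbps, the fields need to be converted to numbers on a common scale before normalization. A minimal parsing sketch using Python's `re` module follows; the helper names and output keys are hypothetical, and the input row is the example tuple from Table 1.

```python
import re

_NUM = r"(-?\d+(?:\.\d+)?)"  # optional sign, digits, optional decimal part


def parse_bandwidth_mbps(text):
    """Convert '10 Mbps' or '500 Kbps' to a float in Mbps (1 Mbps = 1000 Kbps)."""
    m = re.fullmatch(_NUM + r"\s*(Mbps|Kbps)", text.strip(), flags=re.IGNORECASE)
    if m is None:
        raise ValueError(f"unrecognized bandwidth: {text!r}")
    value, unit = float(m.group(1)), m.group(2).lower()
    return value / 1000.0 if unit == "kbps" else value


def parse_with_unit(text, unit):
    """Strip a fixed unit suffix such as 'dBm', 'ms' or '%' and return the number."""
    m = re.fullmatch(_NUM + r"\s*" + re.escape(unit), text.strip())
    if m is None:
        raise ValueError(f"expected a value in {unit}: {text!r}")
    return float(m.group(1))


# Example tuple from Table 1 (column names as printed in the table).
row = {
    "Signal Strength": "-75 dBm", "Latency": "30 ms",
    "Required Bandwidth": "10 Mbps", "Allocated Bandwidth": "15 Mbps",
    "Resource Allocation": "70%",
}
features = {
    "signal_dbm": parse_with_unit(row["Signal Strength"], "dBm"),
    "latency_ms": parse_with_unit(row["Latency"], "ms"),
    "required_mbps": parse_bandwidth_mbps(row["Required Bandwidth"]),
    "allocated_mbps": parse_bandwidth_mbps(row["Allocated Bandwidth"]),
    "allocation_frac": parse_with_unit(row["Resource Allocation"], "%") / 100.0,
}
print(features)
# {'signal_dbm': -75.0, 'latency_ms': 30.0, 'required_mbps': 10.0, 'allocated_mbps': 15.0, 'allocation_frac': 0.7}
```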

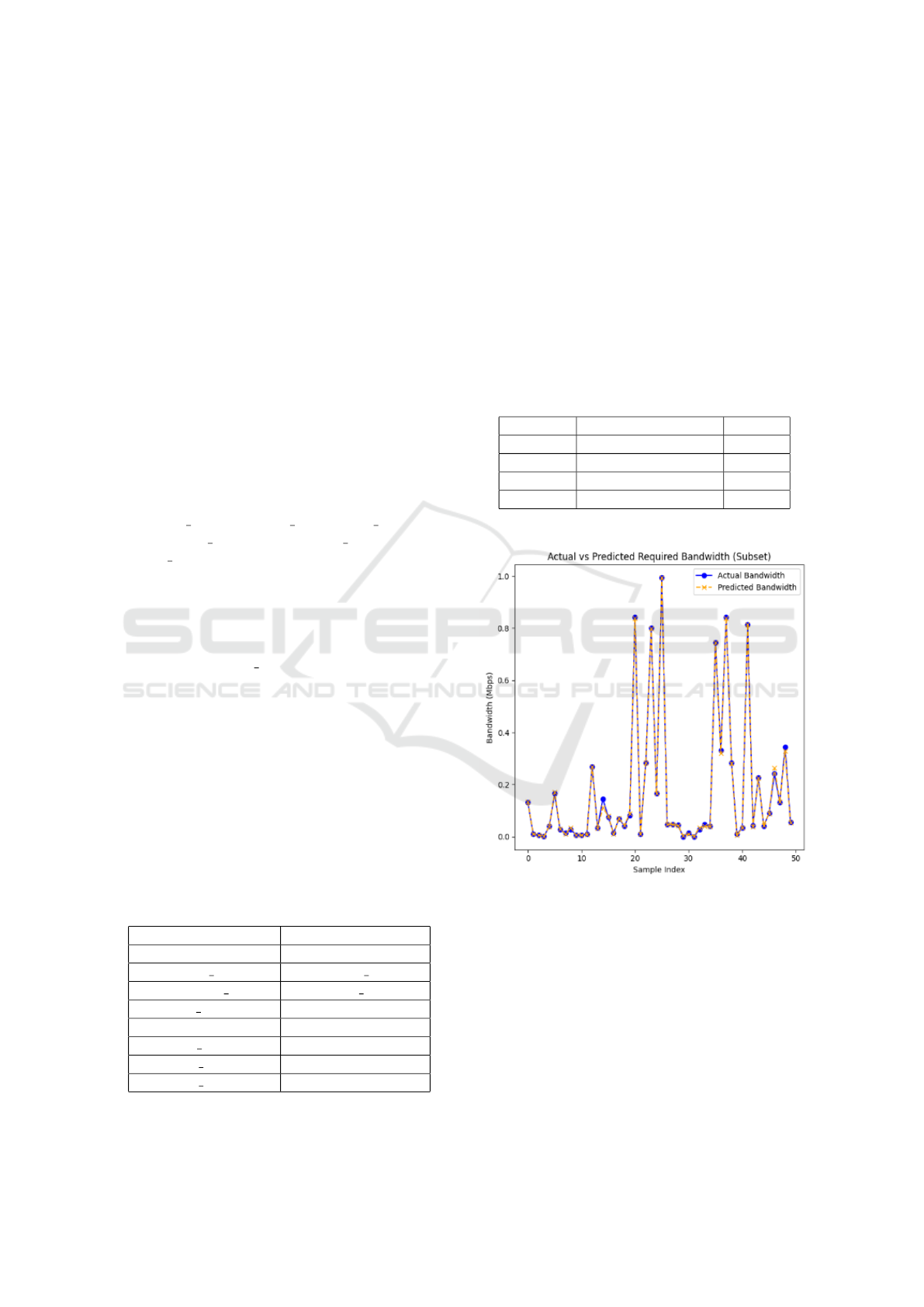

The combination of LSTM models followed by XGBoost regressors successfully predicted the required bandwidth and latency for 5G applications. The model obtained the following performance metrics. Mean Absolute Error (MAE): 0.0049 for required bandwidth and 0.0042 for latency. R-squared (R²): high values for both bandwidth and latency prediction, indicating a good fit. Threshold-based accuracy at 10% tolerance: 81.14% for required bandwidth and 96.15% for latency. The predicted values were then categorized into the 5G slices URLLC, eMBB, and mMTC according to their bandwidth and latency characteristics.
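The threshold-based accuracy reported above is not formally defined in the text. A natural reading, assumed in this sketch, is the percentage of predictions whose absolute error lies within 10% of the actual value. R-squared uses the standard definition 1 - SS_res / SS_tot.

```python
import numpy as np


def threshold_accuracy(y_true, y_pred, tol=0.10):
    """Assumed definition: percent of predictions within +/- tol (relative) of the actual value."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    within = np.abs(y_pred - y_true) <= tol * np.abs(y_true)
    return float(within.mean() * 100)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


if __name__ == "__main__":
    actual = [10.0, 20.0, 30.0, 40.0]
    predicted = [10.5, 23.0, 30.0, 41.0]  # the second prediction misses by 15%
    print(threshold_accuracy(actual, predicted))  # 75.0
    print(r2_score(actual, predicted))
```

As with MAPE, a relative tolerance is very strict for targets near zero, so this measure is most meaningful on de-normalized values; `r2_score` is undefined when all actual values are equal (SS_tot = 0).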

Table 2: Model performance metrics.

| Metric | Predicted attribute | Result |
|---|---|---|
| MAE | Required Bandwidth | 0.0049 |
| Accuracy | Required Bandwidth | 81.14% |
| MAE | Latency | 0.0042 |
| Accuracy | Latency | 96.15% |

Figure 4: Difference between actual and predicted required bandwidth values

The results show that the proposed model predicts required bandwidth and latency with adequate accuracy. The predicted values align well with the actual values, as both the bandwidth and latency plots show. The model captures the overall trends, including critical spikes that are highly important for high-demand scenarios such as IoT and real-time communication.

Small differences are noticeable, especially at peak values; they could be caused by data noise or by the model's limited ability to handle extreme cases.

Figure 5: Difference between actual and predicted latency values

Figure 6: Slice Distribution

Classifying applications into the right 5G slice based on the predicted values has practical significance for efficient network resource allocation. High classification accuracy for URLLC applications, particularly emergency services, has a significant impact on low-latency, highly reliable real-time communication. Such a model can help network operators make dynamic decisions about service prioritization and resource allocation.

The original objective was to predict key network performance metrics, such as bandwidth and latency, for different kinds of applications and to classify them into 5G slices. The model addresses these objectives by predicting bandwidth and latency and classifying application samples into URLLC, eMBB, mMTC, or default slices. It also correctly classified emergency service samples as URLLC, which makes it well suited to real-time network management and emergency response scenarios.

The results indicate that the model predicts critical 5G parameters robustly and accurately. Its ability to consistently predict required bandwidth and latency highlights its potential for optimizing resource allocation in dynamic 5G environments.

5 CONCLUSION

The proposed framework demonstrates significant progress over existing methodologies for 5G network slicing through an ensemble learning methodology that uses XGBoost to enhance slice classification and resource allocation. It also provides accurate bandwidth and latency predictions with low error, which represents an improvement over existing methods such as IDM, EERAM, and A3C for enhancing energy efficiency. Integrating machine learning techniques at every level of networking allows the framework not only to improve coordination but also to scale to billions of IoT devices in dynamic environments. Additionally, by incorporating considerations of privacy, fair access, and economical deployment, the framework remains open to and prepared for emerging 6G networks.

The impact of this work can be further increased by including more diverse and realistic 5G datasets and features that capture network complexity, or by adopting more advanced temporal models such as Gated Recurrent Units (GRUs) or attention-based LSTMs. Online and federated learning methodologies would open new avenues for continuous adaptation to constantly changing network conditions without compromising data privacy. By addressing real-time updates and extreme network scenarios, the framework would provide a robust, scalable, and equitable approach that delivers reliability and adaptability in the ever-changing landscape of IoT and 5G networks.

REFERENCES

Aljiznawi, R. A., Alkhazaali, N. H., Jabbar, S. Q., and Kadhim, D. J. (2017). Quality of service (QoS) for 5G networks. International Journal of Future Computer and Communication, 6(1):27.

Baccouche, A., Garcia-Zapirain, B., Castillo Olea, C., and Elmaghraby, A. (2020). Ensemble deep learning models for heart disease classification: A case study from Mexico. Information, 11(4):207.

Bockelmann, C., Pratas, N. K., Wunder, G., Saur, S., Navarro, M., Gregoratti, D., Vivier, G., De Carvalho, E., Ji, Y., Stefanović, Č., et al. (2018). Towards massive connectivity support for scalable mMTC communications in 5G networks. IEEE Access, 6:28969–28992.

Chen, M., Challita, U., Saad, W., Yin, C., and Debbah, M. (2019). Artificial neural networks-based machine learning for wireless networks: A tutorial. IEEE Communications Surveys & Tutorials, 21(4):3039–3071.

Choi, J. Y. and Lee, B. (2018). Combining LSTM network ensemble via adaptive weighting for improved time series forecasting. Mathematical Problems in Engineering, 2018(1):2470171.

Firouzi, R. and Rahmani, R. (2024). Delay-sensitive resource allocation for IoT systems in 5G O-RAN networks. Internet of Things, 26:101131.

Foukas, X., Patounas, G., Elmokashfi, A., and Marina, M. K. (2017). Network slicing in 5G: Survey and challenges. IEEE Communications Magazine, 55(5):94–100.

Hu, B., Zhang, W., Gao, Y., Du, J., and Chu, X. (2024). Multi-agent deep deterministic policy gradient-based computation offloading and resource allocation for ISAC-aided 6G V2X networks. IEEE Internet of Things Journal.

Imianvan, A. A. and Robinson, S. A. (2024). Enhancing 5G Internet of Things (IoT) connectivity through comprehensive path loss modelling: A systematic review. LAUTECH Journal of Computing and Informatics, 4(2):31–48.

Li, Y., Cheng, X., Cao, Y., Wang, D., and Yang, L. (2017). Smart choice for the smart grid: Narrowband Internet of Things (NB-IoT). IEEE Internet of Things Journal, 5(3):1505–1515.

Logeshwaran, J., Kiruthiga, T., and Lloret, J. (2023a). A novel architecture of intelligent decision model for efficient resource allocation in 5G broadband communication networks. ICTACT Journal on Soft Computing, 13(3).

Logeshwaran, J., Shanmugasundaram, N., and Lloret, J. (2023b). Energy-efficient resource allocation model for device-to-device communication in 5G wireless personal area networks. International Journal of Communication Systems, 36(13):e5524.

Lueth, K. L. (2020). State of the IoT 2020: 12 billion IoT connections, surpassing non-IoT for the first time. IoT Analytics, 19(11).

Monem, M. A. (October 9, 2021). What is the importance of network slicing? Network-Slicing-Diagram.

Najm, I. A., Hamoud, A. K., Lloret, J., and Bosch, I. (2019). Machine learning prediction approach to enhance congestion control in 5G IoT environment. Electronics, 8(6):607.

Nguyen, D. C., Ding, M., Pathirana, P. N., Seneviratne, A., Li, J., and Poor, H. V. (2021). Federated learning for Internet of Things: A comprehensive survey. IEEE Communications Surveys & Tutorials, 23(3):1622–1658.

Omar Sobhy, Ahmed Mousa, S. H. K. (2023). 5G resource allocation dataset: optimizing band. Kaggle.

Pons, M., Valenzuela, E., Rodríguez, B., Nolazco-Flores, J. A., and Del-Valle-Soto, C. (2023). Utilization of 5G technologies in IoT applications: Current limitations by interference and network optimization difficulties—a review. Sensors, 23(8):3876.

Rehman, W. U., Salam, T., Almogren, A., Haseeb, K., Din, I. U., and Bouk, S. H. (2020). Improved resource allocation in 5G MTC networks. IEEE Access, 8:49187–49197.

@rvwomersley, R. W. (2018). 5G triangle, enhanced advanced features. X.com post.

Shi, Y., Sagduyu, Y. E., and Erpek, T. (2020a). Reinforcement learning for dynamic resource optimization in 5G radio access network slicing. In 2020 IEEE 25th International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD), pages 1–6. IEEE.

Shi, Y., Sagduyu, Y. E., and Erpek, T. (2020b). Reinforcement learning for dynamic resource optimization in 5G radio access network slicing. In 2020 IEEE 25th International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD), pages 1–6. IEEE.

Tayyaba, S. K. and Shah, M. A. (2019). Resource allocation in SDN based 5G cellular networks. Peer-to-Peer Networking and Applications, 12(2):514–538.

Yin, Z., Lin, Y., Zhang, Y., Qian, Y., Shu, F., and Li, J. (2022). Collaborative multiagent reinforcement learning aided resource allocation for UAV anti-jamming communication. IEEE Internet of Things Journal, 9(23):23995–24008.

Zheng, G., Zhu, E., Zhang, H., Liu, Y., and Wang, C. (2023). Research on throughput maximization of mass device access based on 5G big connection. In Proceedings of the 2023 International Conference on Communication Network and Machine Learning, pages 93–96.