PRIVÉ: Towards Privacy-Preserving Swarm Attestation

Nada El Kassem^{1,a}, Wouter Hellemans^{2,b}, Ioannis Siachos^{3,c}, Edlira Dushku^{4,d}, Stefanos Vasileiadis^{3,e}, Dimitrios S. Karas^{3,f}, Liqun Chen^{1,g}, Constantinos Patsakis^{5,h} and Thanassis Giannetsos^{3,i}

1 University of Surrey, Guildford, U.K.

2 ES&S, COSIC, ESAT, KU Leuven, Leuven, Belgium

3 UBITECH Ltd., Athens, Greece

4 Aalborg University, Copenhagen, Denmark

5 University of Piraeus, Piraeus, Attiki, Greece

Keywords:

Swarm Attestation, Privacy, Direct Anonymous Attestation, Remote Attestation, In-Vehicle Networks.

Abstract:

In modern large-scale systems comprising multiple heterogeneous devices, the introduction of swarm attestation schemes aims to alleviate the scalability and efficiency issues of traditional single-Prover and single-Verifier attestation. In this paper, we propose PRIVÉ, a privacy-preserving, scalable, and accountable swarm attestation scheme that addresses the limitations of existing solutions. Specifically, we eliminate the assumption of a trusted Verifier, which is not always applicable in real-world scenarios, as the need for the devices to share identifiable information with the Verifier may lead to the expansion of the attack landscape. To this end, we have designed an enhanced variant of the Direct Anonymous Attestation (DAA) protocol, offering traceability and linkability whenever needed. This enables PRIVÉ to achieve anonymous, privacy-preserving attestation while also providing the capability to trace a failed attestation back to the compromised device. To the best of our knowledge, this paper presents the first Universally Composable (UC) security model for swarm attestation accompanied by mathematical UC security proofs, as well as experimental benchmarking results that highlight the efficiency and scalability of the proposed scheme.

1 INTRODUCTION

In recent years, the exponential proliferation of low-cost embedded devices and the Internet of Things (IoT) has significantly contributed to the development of innovative environments, particularly in advancing Intelligent Transportation Systems. Unfortunately, despite their many benefits, these devices are natural and attractive targets for malware attacks. To ensure the correctness of the operational state of a device, Remote Attestation (RA) has been proposed to detect unexpected modifications in the configuration of loaded binaries and check software integrity. However, typical RA schemes assume the existence of a single Prover and a single Verifier, introducing efficiency and scalability issues for large-scale systems comprising multiple Edge and IoT devices. In this context, swarm attestation (Ambrosin et al., 2020) has been proposed to enable the root Verifier (V) to check the sanity of a set of swarm devices simultaneously.

a https://orcid.org/0000-0002-2827-6493

b https://orcid.org/0000-0003-2344-044X

c https://orcid.org/0009-0003-2310-921X

d https://orcid.org/0000-0002-4974-9739

e https://orcid.org/0009-0005-2193-4464

f https://orcid.org/0000-0002-2680-6374

g https://orcid.org/0000-0003-2680-4907

h https://orcid.org/0000-0002-4460-9331

i https://orcid.org/0000-0003-0663-2263

Several swarm attestation schemes have been proposed in the literature (Asokan et al., 2015; Ambrosin et al., 2016; Dushku et al., 2023; Le-Papin et al., 2023) to verify the integrity and establish trust among devices in large-scale systems. In such environments, trust is pivotal in the decision-making process as it helps the devices to select collaborators, to assess the trustworthiness of incoming data, and to mitigate threats from untrustworthy or hostile entities. However, in state-of-the-art solutions, trust is typically confined to system integrity and dependability aspects, excluding other important dimensions such as identity privacy (anonymity) and evidence privacy.

El Kassem, N., Hellemans, W., Siachos, I., Dushku, E., Vasileiadis, S., Karas, D. S., Chen, L., Patsakis, C. and Giannetsos, T.

PRIVÉ: Towards Privacy-Preserving Swarm Attestation.

DOI: 10.5220/0013629000003979

In Proceedings of the 22nd International Conference on Security and Cryptography (SECRYPT 2025), pages 247-262

ISBN: 978-989-758-760-3; ISSN: 2184-7711

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

In such complex and dynamic systems, we of-

ten have to make trust decisions based on incom-

plete, conflicting, or uncertain information. Tradi-

tional probabilistic models assume that we have com-

plete knowledge of all possibilities and their respec-

tive probabilities, but in dynamic, distributed environ-

ments, this is rarely the case. For example, a sys-

tem might receive conflicting data from different data

sources or communication channels. Also, not all data

sources are equally reliable, and some may be com-

promised or faulty.

In such environments, the use of an evidence-

based theory for trust assessment becomes essential,

because it allows us to explicitly represent uncer-

tainty. Rather than forcing a decision based on in-

complete data, we can account for the fact that we

do not have enough information, thus reflecting un-

certainty in our trust assessments. A core enabler in

this direction is the use of robust (swarm) attestation

mechanisms for extracting evidence on the status of

the target device in a verifiable manner. Depending

on the property of the device to be assessed, differ-

ent types of evidence may be required. In the context

of security-related trust properties, trust sources in-

clude detection mechanisms, e.g., Intrusion Detection

Systems, Misbehaviour Detection Systems, or mecha-

nisms to evaluate the existence and/or enforcement of

security claims such as secure boot, configuration integrity, etc. In its simplest form, the received evidence can be divided into two main categories. The first is binary evidence, which allows the verifier to decide whether a security claim is valid or not. This is the case, for instance, with the secure boot certificate chain, which allows a verifier to make an informed decision on whether the prover has executed its secure boot process. The second is evidence that results in claims expressed in the form of a range. This is the case with some Misbehaviour Detection Systems that provide a score (e.g., a confidence level) about the abnormal behaviour of a device. This diversity in evidence outcomes introduces the need for robust attestation mechanisms that enable the quantification of trustworthiness in an efficient and reliable manner.

Typical swarm attestation schemes rely on a span-

ning tree (Asokan et al., 2015; Carpent et al., 2017)

to aggregate the attestation results, where swarm de-

vices are attested by neighboring devices based on a

parent-child relationship in a tree format with a centralized Verifier. To address this fixed parent-child relationship, other works leverage the broadcasting aggregate pattern, in which every device broadcasts its response to the attestation challenge to its neighbors (Kohnhäuser et al., 2018). However, there has been very limited focus on privacy issues of the swarm devices (Abera et al., ). While some schemes do not

provide information about the individual devices or

devices causing a failed attestation, they do not ex-

plicitly focus on privacy (e.g., (Asokan et al., 2015),

(Ambrosin et al., 2016)). Conversely, other attestation

schemes only provide information about the devices

causing a failed attestation, such as (Ammar et al.,

2020). Moreover, two schemes are proposed in (Car-

pent et al., 2017), one of which keeps a list of the

failed devices, and the other does not.

Swarm attestation faces significant challenges in

maintaining identity and evidence privacy, especially

in large-scale systems with numerous IoT and edge

devices. In this context, we have to be able to make

trust decisions based on fresh trustworthiness evi-

dence without impeding the privacy of the overall

system. Therefore, it is crucial to conceal each de-

vice’s identity and the nature of the evidence they

present. However, the dynamic nature of such (multi-

agent) systems requires that trust assessment is not

only based on reasoning under uncertainty (evidence

may be incomplete, conflicting, or contain uncertain

information on the integrity of the device), but it also

ensures that the evidence used to compute trust opin-

ions is verifiable. Trust cannot be randomly assigned

or assumed from previous interactions but must be

continuously verified and updated through collectable

evidence. This principle aligns with the Zero-Trust

paradigm, which dictates that no entity should be in-

herently trusted without validation. Verification of ev-

idence is related to the use of Trust Anchors since they

are the starting point for verifying the chain of trust.

Consider, for instance, the case of Connected

and Cooperative Automated Mobility (CCAM) ser-

vices in the automotive domain, where it is critical

to assess trustworthiness in terms of integrity without

compromising the safety-critical profile and privacy of the running services (e.g., Collision Avoidance), especially when such trust opinions need to be shared between different administrative domains. Swarm attestation must effectively verify the

status of these devices within the in-vehicle network

to ensure that safety decisions are made based on reli-

able sensor inputs. On the other hand, Verifiers should

not ascertain which specific device attests to which

property or the exact expected measurement values.

Disclosing such information could lead to implemen-

tation disclosure attacks, vehicle fingerprinting, or

driver behavior tracking. These concepts, while in-

terrelated, require clearer terminological distinctions

to facilitate practical trust assessment and manage-

ment within decentralized environments of mixed-

criticality.

One efficient technique that allows for checking

the integrity of the devices while preserving their

anonymity is called Direct Anonymous Attestation

(DAA). In this work, we extend the DAA to offer two

security properties besides anonymity: evidence pri-

vacy and traceability. The evidence privacy means

that the signer doesn’t disclose the signed message,

such as the current device’s configuration, to the Veri-

fier while still allowing public verifiability. We obtain

this by binding the use of the DAA signing key to a re-

stricted policy that only allows the key to sign a mes-

sage if the policy is satisfied. This subsequently al-

lows the Verifier to trust the attestation results without

accessing sensitive information. Traceability enables

the correct tracing of a device in case of any failed

attestation. The dual protection of device identity

and evidence ensures privacy and robust accountabil-

ity, making it essential for trustworthiness evidence

provision in today’s evidence-based trust assessment

frameworks.

To address the aforementioned challenges, this

paper introduces a secure and efficient attestation

scheme that can correctly make valid statements

about the integrity of both single devices and a swarm

of devices in a privacy-preserving but accountable

manner. This means that the designed scheme should

be able to provide verifiable evidence on the correct-

ness of a swarm by concealing the identities of the de-

vices, and only in the case of a possible compromise

detection should it allow for tracing back a failed at-

testation to the swarm device that caused the failure,

instead of isolating the entire swarm.

Contributions. In this paper, we propose PRIVÉ, a privacy-preserving swarm attestation scheme. We leverage trusted computing technologies and strong privacy-enhancing cryptographic protocols (e.g., DAA (Brickell et al., 2004; Chen et al., 2023)), which provide decentralized privacy-preserving attestation using blind group signatures. PRIVÉ considers an interconnected network of heterogeneous IoT and Edge devices to convince a Verifier (V) of the integrity of the attestation result based on signed attestation evidence of the enrolled swarm devices. Additionally, in its operation, PRIVÉ safeguards the devices' identity privacy but also provides traceability in case the attested device is deemed compromised. Thus, we do not assume the trustworthiness of the IoT/Edge devices or the Verifier. To the best of our knowledge, we provide the first detailed mathematical analysis for swarm attestation schemes in the Universally Composable (UC) model. This analysis provides proof regarding all envisioned security and privacy requirements in PRIVÉ. Furthermore, we provide a benchmarking analysis to demonstrate the protocol's performance and real-world applicability.

2 PRELIMINARIES

Notation. We present the notation followed throughout the paper in Table 1.

PRIVÉ Building Blocks. To enable a privacy-preserving and accountable swarm attestation approach, PRIVÉ adopts DAA to compute the device attestation with zero knowledge and relies on bilinear aggregation signatures to efficiently aggregate the devices' signatures across the swarm.

Direct Anonymous Attestation (DAA). DAA (Brickell et al., 2004; Brickell et al., 2008) is a platform authentication mechanism that allows privacy-preserving remote attestation of a device associated with a Trusted Component (TC) but does not support the property of traceability. In general, DAA requires an Issuer, a set of Signers, and a set of Verifiers. It includes five algorithms: Setup, Join, Sign, Verify, and Link. The Issuer produces a DAA membership credential for each Signer, corresponding to a signature on the Signer's identity. A DAA Signer consists of a Host and TC pair.

Bilinear Aggregation Signatures. The purpose of designing aggregate signatures is to reduce the length of digital signatures in applications that use multiple signatures (Boneh et al., 2003b). In a general aggregate signature scheme, the aggregation can be done by anyone without the signers' cooperation. In particular, each user $i$ signs her message $m_i$ to obtain a signature $\sigma_i$. Consider a set of $k$ signatures $\sigma_1, \ldots, \sigma_k$ on messages $m_1, \ldots, m_k$ under public keys $PK_1, \ldots, PK_k$, respectively. In this case, anyone can use a public aggregation algorithm to compress all $k$ signatures into a single signature $\sigma$, whose length is the same as a signature on a single message. To verify it, all the original messages and public keys are needed. In particular, given an aggregate signature $\sigma$, public keys $PK_1, \ldots, PK_k$ and messages $m_1, \ldots, m_k$, the aggregate verification algorithm verifies whether the aggregate signature $\sigma$ is valid.

The bilinear aggregate signature scheme (Boneh

et al., 2003b) enables efficient aggregation, allow-

ing an arbitrary aggregating party unrelated to, and

untrusted by, the original signers to combine k pre-

existing signatures into a single aggregated signature.

The scheme consists of the following algorithms:

Key Generation. For a particular user, pick random $x \leftarrow \mathbb{Z}_p$ and compute $w \leftarrow g_2^x$. $x \in \mathbb{Z}_p$ and $w \in \mathbb{G}_2$ are the user's private and public keys, respectively.

Table 1: Notation Summary.

| Symbol | Description | Symbol | Description |
|---|---|---|---|
| TC | Trusted Component | $(x, y)$ | The Privacy CA private key |
| $E_j$ | The $j$-th edge device | $(X, Y)$ | The Privacy CA public key |
| $M_j$ | TC that corresponds to the $j$-th edge device | $(x_L, y_L)$ | The IoT device long-term key-pair |
| $H_j$ | The host that corresponds to the $j$-th edge device | $(x_p, y_p)$ | The IoT device short-term key-pair (pseudonym) |
| V | Verifier | $\sigma^L_{DAA}$ | DAA signature on the long-term public key $y_L$ |
| $v$ | Number of edge devices in the swarm | $\sigma_i$ | $i$-th IoT device signature on its current state $m_i$ |
| $t$ | TC's private key | SPK | Signature based Proof of Knowledge |
| $Q_j$ | $E_j$'s public key known by the Privacy CA | $ch, f$ | Attestation challenges |
| $(a, b, c, d)$ | $E_j$'s credential created by the Privacy CA | $\rho, r, s$ | Random numbers in $\mathbb{Z}_q$ |
| $T_j$ | $E_j$'s public tracing key known by the Opener | $k$ | Number of IoT devices in the swarm |
| $T$ | The Opener's Tracing Key | $\sigma_{[1-n]}$ | An aggregated signature of $n$ IoT devices |
| $q$ | A prime number defining the order of cyclic groups | bsn | A random input in $\{0,1\}^*$ |
| $g_1, g_2, g_3$ | Generators of the groups $\mathbb{G}_1, \mathbb{G}_2, \mathbb{G}_3$ | $M_i^*$ | $i$-th IoT device's golden configuration (legitimate state) |
| **Function** | **Description** | **Proofs** | **Description** |
| $H$ | Hash function $H : \{0,1\}^* \to \mathbb{G}_1$ | $\pi_{ipk}$ | Proof of CA public key construction |
| $e$ | Pairing function $e : \mathbb{G}_1 \times \mathbb{G}_2 \to \mathbb{G}_3$, with $e(g_1, g_2) \to g_3$ | $\pi_1, \pi_2$ | Proofs of construction of $Q_j$ and $T_j$ |
| | | $\pi^{CA}_j$ | Proof of $E_j$'s credential construction |
| | | $\pi_L, \pi_p$ | Proofs of construction of $y_L$ and $y_p$, respectively |

Signing. For a particular user, given the public key $w$, the private key $x$, and a message $m \in \{0,1\}^*$, compute $h \leftarrow H(w, m)$, where $h \in \mathbb{G}_1$, and $\sigma \leftarrow h^x$.

Verification. Given a user's public key $w$, a message $m$, and a signature $\sigma$, compute $h \leftarrow H(w, m)$. The signature is accepted as valid if $e(\sigma, g_2) = e(h, w)$.

Aggregation. Arbitrarily assign an index $i$ to each user whose signature will be aggregated, ranging from 1 to $k$. Each user $i$ provides a signature $\sigma_i \in \mathbb{G}_1$ on a message $m_i \in \{0,1\}^*$ of her choice. Compute the aggregate signature $\sigma \leftarrow \prod_{i=1}^{k} \sigma_i$.

Aggregate Verification. Given an aggregate signature $\sigma \in \mathbb{G}_1$ for a set of users (indexed as before), the original messages $m_i \in \{0,1\}^*$, and public keys $w_i \in \mathbb{G}_2$, compute $h_i \leftarrow H(w_i, m_i)$ for $1 \le i \le k$, and accept if $e(\sigma, g_2) = \prod_{i=1}^{k} e(h_i, w_i)$ holds.
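The algebra of the algorithms above can be checked with a deliberately insecure toy model. In this sketch, which is an illustration and not the authors' implementation, every group element $g^a$ is represented by its exponent $a$ modulo a prime, so the pairing $e(g_1^a, g_2^b)$ becomes the product $ab$. The modulus `Q`, the helper names, and the sample messages are assumptions made for the example; a real deployment would rely on a pairing library over a pairing-friendly curve.

```python
import hashlib
import secrets

# Toy model (NOT secure): a group element g^a is stored as its exponent a mod Q.
# Group multiplication becomes addition, exponentiation becomes multiplication,
# and the pairing e(g1^a, g2^b) = g3^(a*b) becomes a*b mod Q.
Q = 2**127 - 1  # a Mersenne prime, standing in for the group order p


def keygen():
    """Pick x <- Z_p and return (x, w) with w = g2^x."""
    x = secrets.randbelow(Q - 1) + 1
    w = x  # exponent of g2^x; the toy representation exposes x, a real group does not
    return x, w


def hash_to_g1(w, m):
    """h <- H(w, m), returned as the exponent of an element of G1."""
    digest = hashlib.sha256(str(w).encode() + b"|" + m).digest()
    return int.from_bytes(digest, "big") % Q


def pairing(a, b):
    """e(g1^a, g2^b), returned as the exponent of g3."""
    return (a * b) % Q


def sign(x, w, m):
    """sigma <- h^x."""
    return (hash_to_g1(w, m) * x) % Q


def verify(w, m, sigma):
    """Accept if e(sigma, g2) = e(h, w); g2 has exponent 1."""
    return pairing(sigma, 1) == pairing(hash_to_g1(w, m), w)


def aggregate(sigmas):
    """sigma <- product of all sigma_i (a sum of exponents here)."""
    return sum(sigmas) % Q


def aggregate_verify(ws, ms, sigma):
    """Accept if e(sigma, g2) = prod_i e(h_i, w_i)."""
    rhs = sum(pairing(hash_to_g1(w, m), w) for w, m in zip(ws, ms)) % Q
    return pairing(sigma, 1) == rhs


keys = [keygen() for _ in range(3)]
msgs = [b"state-1", b"state-2", b"state-3"]
sigs = [sign(x, w, m) for (x, w), m in zip(keys, msgs)]
pubs = [w for _, w in keys]
agg = aggregate(sigs)
```

Calling `aggregate_verify(pubs, msgs, agg)` accepts the three signatures at once, while replacing any one message makes the check fail, which mirrors why the verifier needs all original messages and public keys.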

3 SYSTEM MODEL

We consider a static interconnected network of het-

erogeneous devices consisting of v edge devices and

k IoT devices, following a hierarchical fog computing

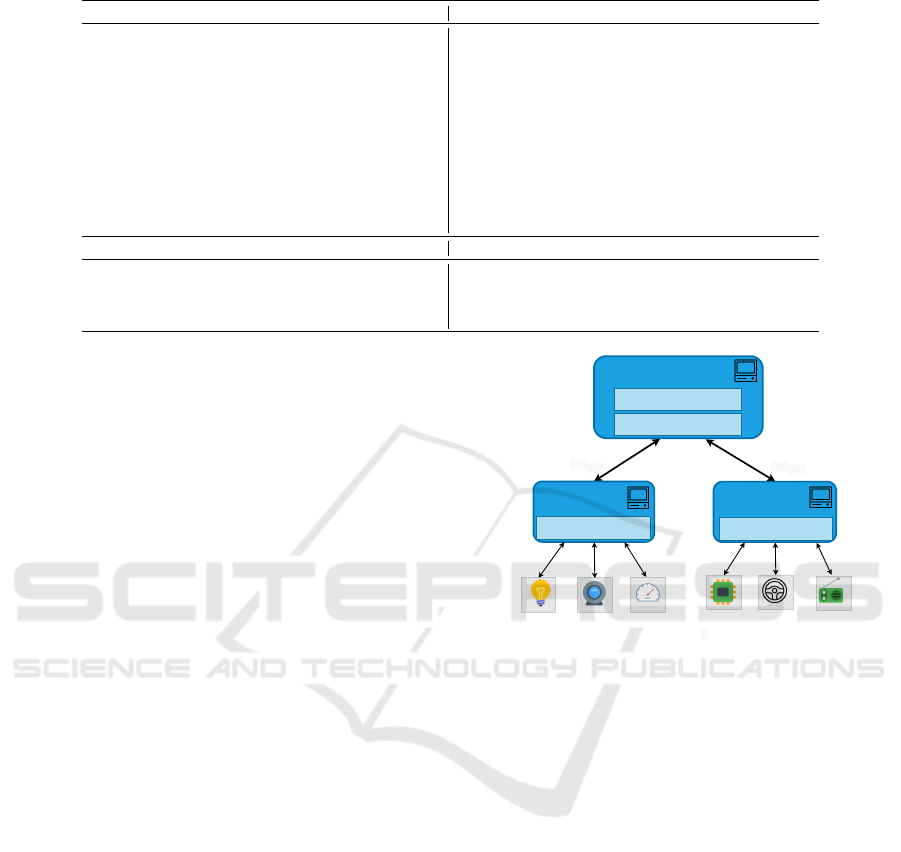

structure (ope, 2018), as depicted in Fig. 1.

IoT Devices (D): An untrusted and resource-

constrained device (e.g., a sensor), that is uniquely

identified as D

i

for i ∈ [1,k]. It is assumed that D has

a minimal Trusted Computing Base (TCB) and is con-

nected to only one edge device. The TCB includes (1)

a Read-Only Memory (ROM) containing the attesta-

tion protocol code, and (2) Secure Key Storage that is

read-accessed only by the attestation protocol.

Edge Devices (E): An untrusted, powerful device

with sizeable computational power and storage ca-

pacity. It is a combination of a Host H

j

(i.e., “nor-

Edge 2: σ

E2

DAA

Edge 3: σ

E3

DAA

Edge 1: σ

E1

DAA

σ

1

σ

3

σ

2

σ

4

σ

6

s

i:

the secret key of the i

th

IoT device and σ

i

= H(m)

si

σ

E2

DAA

σ[1−3]

σ

E3

DAA

σ[4−6]

σ[1−3] = σ

1

× σ

2

× σ

3

s

1

s

2

s

3

s

4

s

5

s

6

σ[4−6] = σ

4

× σ

5

× σ

6

σ

5

σ[1−3]× σ[4-6]

σ

E2

DAA

| σ

E3

DAA

| σ

E1

DAA

Figure 1: Overview of the Swarm Topology.

mal world”) and a Trusted Component (TC) (i.e., “se-

cure world”) such as Trusted Platform Module (TPM

2.0) (tpm, 2016). Each edge device is uniquely iden-

tified as E

j

for j ∈ [1,v]. Each E is a parent of a set of

heterogeneous IoT devices and knows its children’s

legitimate state (i.e., a hash of the binaries). Thus,

each edge device authenticates its children’s IoT de-

vices.

Verifier (V ): Any third party that initiates the attes-

tation and validates the trustworthiness of the swarm.

The Verifier knows the number of IoT devices in-

volved in the swarm. We assume an honest-but-

curious Verifier that legitimately performs the verifi-

cation but will attempt to learn all possible informa-

tion from the received attestation results.

Privacy Certification Authority (Privacy CA): A

trusted third party that acts both as an Issuer and Net-

work Operator. It is responsible for conducting the

secure setup of the fog architecture, verifying the cor-

rect creation of all cryptographic primitives, and au-

thorizing the devices to join the network.

Opener (Opener or Tracer): A trusted entity that traces the compromised device(s) when the swarm attestation fails, so that recovery actions can be performed.

Threat Model. In the context of the system described above, we consider remote software adversaries ($Adv_{SW}$) that aim to compromise IoT and edge devices in the swarm by exploiting software vulnerabilities and injecting malicious code. Moreover, we consider honest-but-curious adversaries ($Adv_{HBC}$) who are legitimate participants in the swarm but aim to breach the devices' privacy. Additionally, swarms are susceptible to network adversaries ($Adv_{NET}$) that can forge, drop, delay, and eavesdrop on the messages exchanged between two devices in the swarm. We consider a classic Dolev-Yao (DY) (Dolev and Yao, 1983) adversary with full control over the communication channel between an IoT device and an edge device in the swarm, two edge devices, or one edge device and the Verifier. However, in line with other works (Camenisch et al., 2017; Wesemeyer et al., 2020), we assume a perfectly secure channel between the Host and the TC in an edge device. Thus, $Adv_{NET}$ cannot intercept the interaction between the Host and the TC or use the TC as an oracle. Physical adversaries ($Adv_{PHY}$) are beyond our scope.

4 SECURITY REQUIREMENTS

To prove the security properties of PRIVÉ, we employ the UC model introduced in § 6.1, for which we define the following high-level security properties:

Anonymity (SP1): It should be possible to conceal

the identity of honest Edge and IoT devices from Ver-

ifier V by creating anonymous signatures that per-

fectly hide the identities of the devices in a swarm.

Traceability (SP2): It should be possible for the Opener to trace an attestation failure back to its source so that a potentially compromised edge device can be revoked. In addition, an honest parent edge device can trace its child IoT devices' signatures back to their source IoT devices (SP3).

Correctness (SP4): Honestly generated signatures

should always be considered valid, and honest users

should not be revoked.

Non-frameability (SP5): It should not be possible

for an adversary to create signatures that successfully

impersonate an honest device or link to honest signa-

tures. This extends to Unforgeability (SP6), where it

should be computationally infeasible to forge a signa-

ture σ on a message µ that is accepted by the verifica-

tion algorithm when no honest device signs µ.

Linkability (SP7): It should be possible for a Verifier

to check if any two DAA signatures signed under the

same basename originate from the same edge device

without breaching its privacy.

5 THE PRIVÉ PROTOCOL

We present an overview of the main phases of our proposed protocol PRIVÉ, deferring the details of the internal cryptographic primitives to § 5.1. The setup phase is a one-time offline procedure performed by the Privacy Certification Authority (Privacy CA) to guarantee the secure deployment and enrollment of the devices in the swarm and the creation of the IoT device short-term keys (pseudonyms). The attestation process is then initiated by the Verifier V, which sends a challenge $f$ to one or more edge devices, which then distribute it recursively to all their (children) swarm devices. Then, each IoT device $D_i$ produces and signs its attestation evidence (attestation claim) using a pre-certified pseudonym. All these claims are sent to the parent edge device, $E_j$, which aggregates them, appends its DAA signature, and sends the aggregated claims together with its DAA signature to the root edge (such as Edge 1 in Figure 1). When the root edge receives all other edges' contributions, it creates its DAA signature, aggregates the received IoT aggregated claims, and appends all the DAA signatures, including its own. This constitutes the swarm signature. The swarm signature is then forwarded to V. After obtaining the swarm attestation report, V verifies the DAA signatures and checks the total number of IoT signatures to ensure that all IoT devices have been included in the report. In the case of an invalid DAA signature or a missing IoT signature, V leverages the novel traceability feature of PRIVÉ to trace the result back to the compromised device.

5.1 Detailed Description of PRIVÉ

In this section, we provide a detailed description of PRIVÉ. The required notation is presented in Tab. 1. The protocol consists of the Setup and Join (§ 5.1.1), Attestation (§ 5.1.2), and Verification (§ 5.1.3) phases.

5.1.1 Setup and Join Phases

The setup phase starts with the Privacy CA being equipped with the necessary key pair: it samples $x, y \leftarrow \mathbb{Z}_q$ and sets $X = g_2^x$ and $Y = g_2^y$. The Privacy CA, if needed, can prove the well-formed construction of the secret key $(x, y)$ through the creation of a proof of knowledge $\pi_{ipk}$ (that can also be signed by the Root Certification Authority in such systems). $(X, Y)$ are published by the Privacy CA as public parameters, together with the other system parameters.

Edge-Join. The edge device enrollment process starts with an edge device $E_j$, where $j \in [1, v]$, sending an enrollment request to the Privacy CA for joining the target service graph chain. The Privacy CA chooses a fresh nonce $\rho$ and sends it to $E_j$, which forwards it to its embedded TC, denoted by $M_j$. Then, $M_j$ chooses a secret key $t \leftarrow \mathbb{Z}_q$ and sets its public key $Q_j = g_1^t$. We use a policy that is satisfied if a selection of the Platform Configuration Registers (PCRs) matches a predetermined value, referencing a trusted state. We use PolicyPCR commands (of the underlying Root-of-Trust) to ensure that the edge device signing key is inoperable if the integrity of the edge device is compromised (cf. Section 8 for further details on the implementation of such commands in the case of a Trusted Platform Module (TPM) as the host secure element). $M_j$ can also compute $\pi^1_j$ as a proof of construction of $Q_j$. $Q_j$, together with this proof, can be signed with the embedded TC's endorsement key, thus elevating it to a verifiable credential over the correct construction and binding of the secret key $t$. The pair $(Q_j, \pi^1_j)$ (alongside the public part of the TC's root certificate) is then sent to the Privacy CA, which verifies $\pi^1_j$ and checks whether the edge device is eligible to join, i.e., that the edge device DAA key has not been registered before. Upon validation, the Privacy CA picks a random $r \leftarrow \mathbb{Z}_q$ and generates $E_j$'s DAA credential $(a, b, c, d)$ by setting $a = g_1^r$, $b = a^y$, $c = a^x Q_j^{rxy}$, and $d = Q_j^{ry}$. The Privacy CA also generates a proof $\pi^{CA}_j$ on the credential construction.

Generating the Tracing Keys by the Opener. Each $M_j$ sets $T_j = g_2^t$ and computes $\pi^2_j$ as a proof of construction of $T_j$. $M_j$ sends $(T_j, Q_j, \pi^1_j, \pi^2_j)$ via $E_j$ to the Opener. The Opener verifies $\pi^1_j$ and $\pi^2_j$ and makes sure that $T_j$ and $Q_j$ link to the same TC by checking if the following holds:

$$e(Q_j, g_2) = e(g_1, T_j) \tag{1}$$
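Equation (1) holds for honestly generated keys by bilinearity of $e$, since $Q_j = g_1^t$ and $T_j = g_2^t$ share the same exponent $t$. A short derivation using only the definitions above:

```latex
\begin{align*}
e(Q_j, g_2) &= e(g_1^{t}, g_2) = e(g_1, g_2)^{t} = e(g_1, g_2^{t}) = e(g_1, T_j).
\end{align*}
```

Conversely, if $Q_j = g_1^{t}$ and $T_j = g_2^{t'}$ with $t \neq t' \pmod q$, the two sides equal $e(g_1, g_2)^{t}$ and $e(g_1, g_2)^{t'}$, which differ because $e(g_1, g_2)$ generates $\mathbb{G}_3$ (Table 1), so the check fails.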

To convince the Opener that $T_j$ is a correct key, $M_j$ may also send to the Opener the DAA credential of $Q_j$ issued by the Privacy CA. After receiving the tracing keys $T_j$ of all edge devices, for all $j \in [1, v]$, and their corresponding public keys $Q_j$, the Opener sets its tracing key $T$ as follows: $T = \{T_1, T_2, \ldots, T_v\}$ and keeps the $(Q_j, T_j)$ pairs for all $j \in [1, v]$.

IoT-Join. Each IoT device is equipped with a long-term key pair $(x_L \in \mathbb{Z}_q, y_L)$ whose public part $y_L = g_1^{x_L}$ represents the IoT device's identity and is certified by the Privacy CA. Let $\pi_L$ represent a proof of construction of $y_L = g_1^{x_L}$. The enrollment of IoT devices consists of the following steps:

1. Each edge device certifies its children's IoT long-term keys by creating a DAA signature $\sigma^L_{DAA}$ on $y_L$.

2. Once the long-term key is certified, each IoT device creates short-term random keys $(x_p \in \mathbb{Z}_q, y_p = g_2^{x_p}, \pi_p)$ for each integer $p \in [1, \ldots, P]$, where $\pi_p$ is a proof of construction of $y_p$ and $P$ is the total number of pseudonyms (short-term random keys) that will be certified by the edge device. This provides pseudonymity, which is the ability of an IoT device to use a resource or service without disclosing its identity while still being accountable for that action.

3. The IoT device self-certifies its public key $y_p$ by creating a signature $\sigma^p_L$ on $y_p$ using its own long-term secret key $x_L$. This signature prevents any other device (including edge devices and authorities) from signing on behalf of the IoT device without knowing the IoT device's long-term secret signing key $x_L$. Finally, the IoT device sends $(y_L, y_p, \sigma^p_L, \pi_p)$ for each $p \in [1, \ldots, P]$ to its parent edge device.

4. The edge device verifies $\pi_p$ and $\sigma^p_L$ on $y_p$ for each $p \in [1, \ldots, P]$. Upon successful verification, the edge device keeps $(y_p, y_L, \sigma^L_{DAA}, \sigma^p_L)$ in its records to be able to link each set of certified IoT pseudonyms to their long-term key for each child IoT device. This is particularly useful in cases where an edge device needs to trace its children's IoT signatures back to the identity of their signer.
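The record keeping in step 4 can be pictured as a lookup table from pseudonyms to long-term keys. The sketch below is only an illustration under stated assumptions: the class `EdgeRecords`, its method names, and the string placeholders for keys are hypothetical, and the verification of $\pi_p$ and $\sigma^p_L$ is assumed to have succeeded before `enroll` is called. The one-time-use check reflects the rule, stated in the attestation phase, that each short-term key is used only once.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeRecords:
    """Hypothetical bookkeeping kept by an edge device after IoT-Join."""
    # pseudonym public key y_p -> long-term public key y_L of the owning device
    owner: dict = field(default_factory=dict)
    # pseudonyms that have already appeared in an attestation
    used: set = field(default_factory=set)

    def enroll(self, y_L, pseudonyms):
        """Store every (already verified) pseudonym of the device y_L."""
        for y_p in pseudonyms:
            if y_p in self.owner:
                raise ValueError("pseudonym already registered")
            self.owner[y_p] = y_L

    def accept(self, y_p):
        """Return True if y_p is certified and has not been used before."""
        if y_p not in self.owner or y_p in self.used:
            return False
        self.used.add(y_p)
        return True

    def trace(self, y_p):
        """Map a pseudonym back to its long-term key, or None if unknown."""
        return self.owner.get(y_p)


records = EdgeRecords()
records.enroll("yL-sensor-A", ["yp-A1", "yp-A2"])
records.enroll("yL-sensor-B", ["yp-B1"])
```

With these records, `records.trace("yp-A2")` recovers the long-term key of sensor A, which is the edge-level tracing that SP3 refers to.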

We drop the index $p$ from the remaining protocol description and use $\sigma_i$ and $(x_i, y_i)$ to denote $D_i$'s signature and short-term key pair, respectively.

5.1.2 Attestation Phase

The swarm attestation is initiated by a Verifier V that

sends a challenge f to an edge device, which will then

distribute it recursively to all devices in the swarm.

The IoT devices sign their attestation results and re-

port them to their parent edge devices.

IoT Device Signature. When an IoT device receives an attestation challenge $f$, it concatenates its current configuration $m_i^*$ with the challenge $f$. It then signs the attestation response $m_i = (m_i^* \,|\, f)$ using one of its short-term keys $x_i$ previously certified by its parent edge device during the enrollment procedure. Each $x_i$ is used only once to achieve IoT identity privacy. The IoT device computes a BLS signature as follows:

$$\sigma_i = H(m_i)^{x_i} \tag{2}$$

The IoT device sends $\sigma_i$ and $y_i$ to its parent edge. The parent edge device checks that every received $y_i$ is certified, relying on its records. The edge then aggregates all the $c$ received children signatures $\sigma_1, \ldots, \sigma_c$ into one signature $\sigma_{[1-c]}$ as follows: $\sigma_{[1-c]} = \sigma_1 \times \ldots \times \sigma_c = H(m_1)^{x_1} \times \ldots \times H(m_c)^{x_c}$. When the attestation of a given IoT device is missing, the edge device will not include it in the aggregation.
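The IoT signing and edge aggregation steps can be traced end to end in an insecure toy model, where a group element $g^a$ is stored as its exponent $a$ and the pairing is a product of exponents. The following is a sketch under assumptions, not the paper's implementation: the modulus, the function names, the byte-string configurations, and the final check (written in the style of the Aggregate Verification algorithm of Section 2, with $H(m_i)$ as in Eq. (2)) are all illustrative choices.

```python
import hashlib
import secrets

# Toy model (NOT secure): g^a is stored as the exponent a mod Q, so
# exponentiation is multiplication and e(g1^a, g2^b) is a*b mod Q.
Q = 2**127 - 1


def H(message):
    """Hash a byte string to (the exponent of) an element of G1."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % Q


def iot_sign(config, challenge, x_i):
    """Build m_i = (m*_i | f) and return (sigma_i, y_i)."""
    m_i = config + b"|" + challenge
    sigma_i = (H(m_i) * x_i) % Q  # H(m_i)^{x_i}
    y_i = x_i                     # g2^{x_i}; the toy encoding exposes x_i
    return sigma_i, y_i


def edge_aggregate(responses, certified):
    """Multiply the signatures whose pseudonym y_i is in the edge's records."""
    kept = [(s, y) for s, y in responses if y in certified]
    sigma = sum(s for s, _ in kept) % Q  # product of group elements
    return sigma, [y for _, y in kept]


def check_aggregate(sigma, expected_msgs, pseudonyms):
    """e(sigma, g2) == prod_i e(H(m_i), y_i), with g2 encoded as 1."""
    rhs = sum(H(m) * y for m, y in zip(expected_msgs, pseudonyms)) % Q
    return sigma == rhs


f = b"challenge-42"
configs = [b"fw-v1.0", b"fw-v1.0", b"fw-v2.3"]
keys = [secrets.randbelow(Q - 1) + 1 for _ in configs]
certified = set(keys)  # in the toy encoding y_i equals x_i

responses = [iot_sign(c, f, x) for c, x in zip(configs, keys)]
sigma, ys = edge_aggregate(responses, certified)
expected = [c + b"|" + f for c in configs]
```

A response signed under an uncertified pseudonym is simply dropped by `edge_aggregate`, and a device reporting an unexpected configuration makes `check_aggregate` fail against the expected messages.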

Edge Device Signature & Traceability. The creation of a traceable DAA signature starts with an edge device $E_j$, with an embedded trusted component $M_j$, that gets a Verifier basename bsn, defined as a random string in $\{0,1\}^*$, to enable traceability and linkability of DAA signatures. The signature generation proceeds as follows:

1. To sign a message $\mu$ with respect to the basename bsn, the edge device randomizes its credential by choosing a random $s \leftarrow \mathbb{Z}_q$, sets $(a', b', c', d') \leftarrow (a^s, b^s, c^s, d^s)$, and then sends $(\mu, \mathsf{bsn}, b')$ to $\mathcal{M}_j$.

2. Next, $\mathcal{M}_j$ calculates the link token $\mathsf{nym} = H(\mathsf{bsn})^t$. $E_j$ and $\mathcal{M}_j$ calculate a Signature-based Proof of Knowledge (SPK) of the secret key $t$ and its credential as follows:

$$SPK\{t : \mathsf{nym} = H(\mathsf{bsn})^t,\ d' = b'^{\,t}\}(\mathsf{bsn}, \mu) \qquad (3)$$

$\mathcal{M}_j$ samples $\omega \leftarrow \mathbb{Z}_q$, sets $K = H(\mathsf{bsn})^{\omega}$ and $J = b'^{\,\omega}$, and sends $(\mathsf{nym}, K, J)$ to $E_j$, which calculates the challenge $ch = H(a', b', c', d', \mathsf{nym}, K, J, \mu)$ in $\mathbb{Z}_q$ and sends it to $\mathcal{M}_j$. $\mathcal{M}_j$ then creates a Schnorr signature $\delta = \omega + ch \cdot t$. Finally, the SPK has the form $(\delta, ch)$ and is included in the DAA signature.

3. The DAA signature is $(a', b', c', d', SPK, \mathsf{nym})$. The swarm signature is then $(\sigma_{[1-k]}, \sigma^{j}_{DAA}, y_i)$ $\forall i \in [1-k]$ and $j \in [1-v]$, where $k$ and $v$ are the total numbers of IoT and edge devices in the swarm, respectively, and $\sigma_{[1-k]}$ denotes the aggregation of all the IoT signatures in the swarm. The root edge performs this aggregation. If the attestation of some IoT devices is unsuccessful or missing, the parent edge device does not aggregate such attestations. The final swarm aggregated signature will be as follows: $(\sigma_{[1-l]}, \sigma^{j}_{DAA}, y_i)$, $\forall i \in [1-l]$ with $l < k$.
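The SPK of step 2 can be sketched as a Schnorr-style proof. The snippet below is an illustration under stated assumptions, not the scheme's reference code: it works in a toy multiplicative group modulo the Mersenne prime $2^{127}-1$ instead of a pairing group, reduces exponents modulo the group order rather than a prime $q$, merges the roles of $\mathcal{M}_j$ and $E_j$ into one function, and treats the credential $(a', b', c', d')$ as opaque integers satisfying only $d' = b'^{\,t}$. All function names are hypothetical.

```python
import hashlib
import secrets

# ASSUMPTION: toy group Z_P^* with P = 2^127 - 1 stands in for G1.
# Exponents are reduced modulo the group order P - 1. Not secure parameters.
P = 2**127 - 1
ORDER = P - 1


def hash_to_int(*parts: object) -> int:
    """Hash a tuple of printable values to an integer."""
    data = b"|".join(str(part).encode() for part in parts)
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def hash_to_group(bsn: str) -> int:
    """H(bsn): map the basename to a group element in [2, P - 1]."""
    return hash_to_int("H", bsn) % (P - 2) + 2


def spk_sign(t: int, cred: tuple, bsn: str, mu: str):
    """Return (nym, (delta, ch)) for secret key t and credential cred."""
    _, b_prime, _, _ = cred
    h = hash_to_group(bsn)
    nym = pow(h, t, P)                         # link token nym = H(bsn)^t
    omega = secrets.randbelow(ORDER)           # commitment randomness
    K, J = pow(h, omega, P), pow(b_prime, omega, P)
    ch = hash_to_int(*cred, nym, K, J, mu) % ORDER
    delta = (omega + ch * t) % ORDER           # Schnorr response
    return nym, (delta, ch)


def spk_verify(nym: int, spk: tuple, cred: tuple, bsn: str, mu: str) -> bool:
    """Recompute K and J from (delta, ch) and compare the challenge."""
    delta, ch = spk
    _, b_prime, _, d_prime = cred
    h = hash_to_group(bsn)
    K = pow(h, delta, P) * pow(nym, -ch, P) % P            # H(bsn)^delta nym^-ch
    J = pow(b_prime, delta, P) * pow(d_prime, -ch, P) % P  # b'^delta d'^-ch
    return ch == hash_to_int(*cred, nym, K, J, mu) % ORDER
```

Because `nym` depends only on `t` and `bsn`, two signatures by the same key under the same basename carry the same link token, which is what the Link check relies on.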

5.1.3 Verification Phase

Attestation Report Verification. After receiving an attestation report, the Verifier checks the DAA signature of each edge device $\sigma = (a', b', c', d', \mathsf{nym}, SPK = (\delta, ch))$ on a message $\mu$ w.r.t. a basename bsn that is published with the signature. In particular, the Verifier verifies SPK w.r.t. $(\mu, \mathsf{bsn})$ and $\mathsf{nym}$, and checks that $a' \neq 1$, $e(a', Y) = e(b', g_2)$, and $e(c', g_2) = e(a' d', X)$.

We assume that the Verifiers have access to the golden configuration of the $i$-th IoT device, denoted by $M_i^*$, $\forall i \in [1, k]$. The Verifier calculates $H(M_i)$, with $M_i = (M_i^* \,|\, f)$, and checks the following equation:

$$e(\sigma_{[1-k]}, g_2) \stackrel{?}{=} e(H(M_1), y_1) \times \ldots \times e(H(M_k), y_k) \qquad (4)$$

which is only valid if each IoT device's current configuration $m_i$, used in Equation 2, matches the golden one $M_i$. The Verifier does not need to know the value of $m_i$; this offers IoT evidence privacy. The Verifier claims that the swarm attestation has failed if the aggregate result has fewer than $k$ aggregated signatures or if the verification of Equation 4 fails. The Verifier also checks that $ch = H(a', b', c', d', \mathsf{nym}, H(\mathsf{bsn})^{\delta}\, \mathsf{nym}^{-ch}, b'^{\,\delta} d'^{-ch}, \mu)$.

Further, the Verifier can interact with the Opener

to initiate the tracing of the device with a failed

attestation.
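As a sanity check on the verification equations of this phase, the derivation below sketches why an honestly generated report passes them. It assumes that each short-term public key has the usual BLS form $y_i = g_2^{x_i}$; the enrollment phase that fixes this form lies outside this section, so it should be read as an assumption.

```latex
\begin{align*}
e(\sigma_{[1-k]}, g_2)
  &= e\Big(\prod_{i=1}^{k} H(m_i)^{x_i},\, g_2\Big)
   = \prod_{i=1}^{k} e\big(H(m_i), g_2\big)^{x_i}
   = \prod_{i=1}^{k} e\big(H(m_i), g_2^{x_i}\big)
   = \prod_{i=1}^{k} e\big(H(m_i), y_i\big), \\
H(\mathsf{bsn})^{\delta}\,\mathsf{nym}^{-ch}
  &= H(\mathsf{bsn})^{\omega + ch \cdot t}\, H(\mathsf{bsn})^{-ch \cdot t}
   = H(\mathsf{bsn})^{\omega} = K, \\
b'^{\,\delta}\, d'^{-ch}
  &= b'^{\,\omega + ch \cdot t}\, b'^{-ch \cdot t}
   = b'^{\,\omega} = J .
\end{align*}
```

The first line coincides with the right-hand side of Equation 4 when $H(m_i) = H(M_i)$ for every $i$, that is, when each reported configuration matches its golden value. The last two lines show that the Verifier recomputes exactly the values $K$ and $J$ that were hashed into $ch$.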

Link. When a Verifier can access different swarm attestation reports, she can check whether any two DAA signatures originate from the same edge device. Specifically, the Verifier checks if both signatures are signed under the same basename known by the Verifier. More formally, the Verifier is given $\sigma_1 = (a'_1, b'_1, c'_1, d'_1, \mathsf{nym}_1, SPK_1)$ and $\sigma_2 = (a'_2, b'_2, c'_2, d'_2, \mathsf{nym}_2, SPK_2)$ on a message $\mu$ w.r.t. a basename bsn. In this setting, the output is 1 when both signatures are valid and $\mathsf{nym}_1 = \mathsf{nym}_2$; otherwise, the output is 0.

The linkability of the IoT devices in a swarm attes-

tation relies on the static topology of the swarm; each

parent edge device has in its records the set of all cer-

tified pseudonyms associated with the corresponding

IoT device long-term key (used as the identity of the

IoT device) for each child IoT device. Thus, when-

ever an IoT device creates two signatures in two dif-

ferent SA instantiations, the edge device can identify

whether these two signatures originate from the same

IoT device (the edge device uses traceability to sup-

port linkability of IoT devices).

Tracing/Opening. In PRIVÉ, traceability consists of

two levels: The first level of swarm attestation trace-

ability relies on the traceability of the DAA signature,

which can be done by the Opener using its tracing

key. We achieve this by adding a traceability require-

ment for the existing DAA scheme (Camenisch et al.,

2016) to obtain a novel Traceable DAA protocol that

meets our security and privacy requirements. The sec-

ond level relies on the static swarm topology that links

each parent to their children. Tracing the IoT device

can be done by the edge device with the knowledge

of the IoT long-term key corresponding to the certified short-term pseudonyms. Specifically, starting with a DAA signature $\sigma_{DAA}$ with a corresponding link token $\mathsf{nym} = H(\mathsf{bsn})^t$, the Opener uses its tracing key $T = \{T_1, T_2, \ldots, T_v\}$ to check the following equation:

$$e(\mathsf{nym}, g_2) = e(H(\mathsf{bsn}), T_j) \quad \forall j \in [1-v] \qquad (5)$$

A successful verification allows the Opener to recover $\mathcal{M}_j$'s public key $Q_j$ corresponding to $T_j$ in its records, as explained previously during the setup of the Tracing Keys by the Opener. Once the edge device's identity is recovered, it is easy for the edge device to recover any of its children's long-term public keys that are certified by this edge device.
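The tracing check can be unfolded in one line. The sketch below assumes that the $j$-th tracing key has the form $T_j = g_2^{t_j}$, where $t_j$ is the DAA secret key of $\mathcal{M}_j$; the exact setup of the tracing keys is described earlier in the paper and is not restated here, so this form is an assumption.

```latex
\begin{align*}
e(\mathsf{nym}, g_2)
  = e\big(H(\mathsf{bsn})^{t_j}, g_2\big)
  = e\big(H(\mathsf{bsn}), g_2\big)^{t_j}
  = e\big(H(\mathsf{bsn}), g_2^{t_j}\big)
  = e\big(H(\mathsf{bsn}), T_j\big).
\end{align*}
```

Under this assumption, Equation 5 holds exactly for the index $j$ whose key produced $\mathsf{nym}$, so the Opener can test the entries of $T$ one by one until a match is found.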


Revocation. Any Revocation Authority (RA), which

may also be the Opener, can remove misbehaving

edge devices without revealing the edge device’s

identity. The intuition behind the revocation scheme

is to be able to deactivate any DAA credential of

an edge device created during its enrollment (Larsen

et al., 2021). We assume that a Revocation Authority

RA has access to a set of revoked DAA keys KRL.

The revocation authority checks that the DAA key used to create the DAA signature is not in the revocation list. This is done by checking that $\forall t^* \in \mathsf{KRL}$, $\mathsf{nym} \neq H(\mathsf{bsn})^{t^*}$. PRIVÉ also supports revocation of the IoT devices by their parent edge device. We assume the edge device has access to a set of revoked IoT keys called the IoT-Key Revocation List IoTKRL. It can then check that none of its children's IoT signatures was produced by a key in IoTKRL. Relying on the trust of the edge devices and the accuracy of the updated IoTKRL, edge devices would also be able to correctly revoke the IoT devices whose keys are in IoTKRL.
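The key-based revocation check on the link token can be sketched as a simple loop over KRL. This is a minimal illustration that assumes a toy multiplicative group modulo the Mersenne prime $2^{127}-1$ in place of the pairing group; `hash_to_group` and `is_revoked` are hypothetical helper names.

```python
import hashlib

# ASSUMPTION: toy group Z_P^* with P = 2^127 - 1 stands in for G1.
P = 2**127 - 1


def hash_to_group(bsn: str) -> int:
    """H(bsn): map the basename to a group element in [2, P - 1]."""
    digest = hashlib.sha256(bsn.encode()).digest()
    return int.from_bytes(digest, "big") % (P - 2) + 2


def is_revoked(nym: int, bsn: str, krl: list[int]) -> bool:
    """True if nym == H(bsn)^{t*} for some revoked key t* in KRL."""
    h = hash_to_group(bsn)
    return any(pow(h, t_star, P) == nym for t_star in krl)
```

The check costs one exponentiation per revoked key, so its running time grows linearly with the size of KRL.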

6 PRIVÉ SECURITY ANALYSIS

In this section, we first introduce the Universally Composable (UC) framework (§ 6.1), then we model PRIVÉ in the UC framework (§ 6.2), capturing all the security and privacy requirements defined in § 4.

6.1 Methodology

Due to the complexity of the PRIVÉ scheme, it is not easy to perform a complete security proof based on traditional formal verification and symbolic modeling techniques, as the specified models might prove challenging to analyze and verify even on computationally rich machines (Meier et al., 2013). Therefore, we employ the Universal Composability (UC) model, in which we aim to equate the real-world network topology to an ideal-world model, the two being indistinguishable from the perspective of an adversary, and in which it is possible to break down the proposed scheme into simpler building blocks with provable security. Specifically, the security definition of our swarm attestation protocol is given with respect to an ideal functionality $\mathcal{F}$. This is depicted in Figure 2 for a swarm of three edges and four IoT devices. However, the UC model can be generalized to any network topology and swarm size. In UC, an environment $\mathcal{E}$ should not be able to distinguish, with a non-negligible probability, between the two worlds:

The real world, where each of the PRIVÉ parties (an Issuer $\mathcal{I}$, a parent edge $E_j$, a child IoT device $D_i$, and a Verifier $\mathcal{V}$) executes its assigned part of the real-world protocol PRIVÉ, denoted by $\Pi$. The network is controlled by an adversary $\mathcal{A}$ that communicates with the environment $\mathcal{E}$. The ideal world, in which all parties forward their inputs to a trusted entity, called the ideal functionality $\mathcal{F}$, which internally performs (in a trustworthy manner) all the required tasks and creates the parties' outputs in the presence of a simulator $\mathcal{S}$.

Figure 2: UC security model: the Real and the Ideal world executions are indistinguishable to the environment $\mathcal{E}$.

The ultimate goal is to show that PRIVÉ securely realizes all internal cryptographic tasks: for any real-world adversary $\mathcal{A}$ that interacts with the swarm running $\Pi$, there exists an ideal-world simulator $\mathcal{S}$ that interacts with the ideal functionality $\mathcal{F}$ such that no probabilistic polynomial-time environment $\mathcal{E}$ can distinguish whether it is interacting with the real-world adversary $\mathcal{A}$ or the ideal-world adversary $\mathcal{S}$. Another key point of UC towards reducing the computational complexity of the specified protocol is the composition theorem, which preserves the security of PRIVÉ even if it is arbitrarily composed with other instances of the same or different protocols.

6.2 PRIVÉ UC Model

We now explain our security model in the UC

framework based on the ideal functionality F origi-

nally proposed in (Camenisch et al., 2016) and later

adopted in (Chen et al., 2019; El Kassem et al.,

2019). We assume that $\mathcal{F}$ internally runs the sub-functionalities defined in (Camenisch et al., 2016). In the protocol's join phase, we adopt the key binding protocol introduced in (Chen et al., 2024), which ensures an authenticated channel between the TC and the Issuer even in the presence of a corrupt Host; therefore, in contrast to (Camenisch et al., 2016), we do not need to model the semi-authenticated channel.

The UC framework lets us focus the analysis on a single protocol instance with a globally unique session identifier sid. $\mathcal{F}$ uses session identifiers of the form $\mathsf{sid} = (\mathcal{I}, \mathsf{sid}')$ for some Issuer $\mathcal{I}$ and a unique string $\mathsf{sid}'$. In the real world, these strings are


mapped to the Issuer's public key, and all parties use sid to link their stored key material to the particular Issuer. We define the Edge-JOIN, the IoT-JOIN, and the SIGN sub-session identifiers $\mathsf{jsid}_j$, $\mathsf{jsid}_{ij}$ and $\mathsf{ssid}$, respectively, to distinguish several join and sign sessions that might run in parallel. We define two "macros" to determine whether a secret key $\mathsf{key}$ is consistent with the internal functionality records. This is checked at several places in the ideal functionality interfaces and depends on whether $\mathsf{key}$ belongs to an honest or corrupt party. The first macro, CheckkeyHonest, is used when the functionality stores a new key that belongs to an honest party and checks that none of the existing valid signatures are identified as belonging to this party. The second macro, CheckkeyCorrupt, is used when storing a new key that belongs to a corrupt party and checks that the new key does not break the identifiability of signatures, i.e., it checks that there is no other known $\mathsf{key}^*$, unequal to $\mathsf{key}$, such that both keys are identified as the owner of a signature. Both functions output a bit $b$, where $b = 1$ indicates that the new key is consistent with the stored information, whereas $b = 0$ signals an invalid key. We assume that the Host $H_j$ represents the same entity as $E_j$. Thus, $\mathcal{M}_j$ and $E_j$ correspond to the TC and the Host in an edge device $E_j$. Finally, our model defines lists that will be kept in the ideal functionality record for the consistency of the joining devices, keys, signatures, verification results, and key revocation lists, namely: EdgeMembers, EdgeKeys, EdgeSigned, EdgeVer, IoTMembers, IoTKeys, IoTSigned, IoTVer, KRL and IoTKRL. Next, we present the interfaces of the PRIVÉ ideal functionality $\mathcal{F}$.
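The two macros can be sketched as small predicates over the functionality's records. This is an illustrative reading of the description above, not the formal definition: the `identify` callback, the flat list of stored signatures, and the function names are assumptions introduced for the example.

```python
from typing import Callable, Iterable

# ASSUMPTION: identify(key, signature_record) stands in for the Identify
# algorithm and returns True when the record is attributed to the key.
Identify = Callable[[object, object], bool]


def check_key_honest(key, valid_signatures: Iterable, identify: Identify) -> int:
    """Return 1 iff no existing valid signature is already identified with key."""
    return int(not any(identify(key, sig) for sig in valid_signatures))


def check_key_corrupt(key, known_keys: Iterable, valid_signatures: Iterable,
                      identify: Identify) -> int:
    """Return 1 iff no signature is identified with both key and another known key."""
    known = list(known_keys)
    for sig in valid_signatures:
        if identify(key, sig) and any(
            other != key and identify(other, sig) for other in known
        ):
            return 0
    return 1
```

Both predicates return the bit $b$ described in the text, so an interface can simply abort when the result is 0.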

SETUP: On input (SETUP, sid) from an Issuer I ,

output (SETUP, sid) to S . F then expects the adver-

sary S to provide the following algorithms, namely

(Kgen, Sign, Verify, Link, and Identify), that will be

used inside the functionality as follows:

Kgen: It consists of two probabilistic sub-algorithms. Kgen generates keys tsk and dsk for honest TCs and IoT devices, respectively. Note that $E_j$ and $D_i$ are uniquely identified by their keys $\mathsf{tsk}_j$ and $\mathsf{dsk}_i$, respectively. We remove the indices from the keys in our security model description. PSEUDO-Kgen takes dsk as an input and generates a random short-term key pair $(s, o)$ for honest IoT devices.

Sign: A probabilistic algorithm used for honest TCs

and IoT devices to create the type of signature re-

quired by the corresponding operation. It consists of

the following sub-algorithms:

1. $\mathsf{sig}_{DAA}(\mathsf{tsk}, \mu, \mathsf{bsn})$: On input of a secret key tsk, a message $\mu$ and a basename bsn, it outputs a DAA signature $\sigma^{j}_{DAA}$ on behalf of $E_j$.
2. $\mathsf{sig}_{IoT}(\mathsf{dsk}, m)$: It creates signatures on behalf of $D_i$. It runs PSEUDO-Kgen to create a short-term key pair $(s, o)$, then creates a signature $\sigma_i$ on a message $m$ using the secret short-term key $s$.

Aggregate: A deterministic algorithm that aggregates the IoT signatures using the agg algorithm, which takes as inputs $c$ IoT signatures $\sigma_1, \sigma_2, \ldots, \sigma_c$ and outputs an aggregated signature $\sigma_{[1-c]}$.

Verify: A deterministic algorithm that outputs a

binary result on the correctness of a signature. It con-

sists of two sub-algorithms:

1. $\mathsf{ver}_{DAA}(\sigma_{DAA}, \mu, \mathsf{bsn})$: On input of a signature $\sigma_{DAA}$, a message $\mu$ and a basename bsn, it outputs $b = 1$ if the signature is valid, 0 otherwise.
2. $\mathsf{ver}_{IoT}(\sigma, m, o)$: On input of a signature $\sigma$, a message $m$ and a public short-term key $o$, it outputs $b = 1$ if the signature is valid, 0 otherwise.

Link $(\sigma_1, \mu_1, \sigma_2, \mu_2)$: A deterministic algorithm that checks if two signatures originate from the same device. In the case of DAA signature linkability checks, an extra parameter bsn is required as an input. It outputs 1 if the same device generates $\sigma_1$ and $\sigma_2$, and 0 otherwise.

Identify: A deterministic algorithm that ensures

consistency with the ideal functionality F ’s internal

records by connecting a signature to the key used to

generate it. It consists of two sub-algorithms:

1. $\mathsf{identify}_{DAA}(\mathsf{tsk}, \sigma_{DAA}, \mu, \mathsf{bsn})$: It outputs 1 if tsk was used to produce $\sigma_{DAA}$, 0 otherwise.
2. $\mathsf{identify}_{IoT}(\sigma, m, \mathsf{dsk}, s)$: It outputs 1 if $\sigma$ was produced by an IoT device $D$, with an identity key dsk, on a message $m$ using the short-term key $s$, 0 otherwise.

On input (ALGORITHMS, sid) from $\mathcal{S}$, the ideal functionality checks that Aggregate, Verify, Link, and Identify are deterministic, stores the algorithms, and outputs (SETUPDONE, sid) to $\mathcal{I}$.

Edge-JOIN: On input (JOIN, sid, $\mathsf{jsid}_j$, $\mathcal{M}_j$) from $E_j$, create a join session record $\langle \mathsf{jsid}_j, \mathcal{M}_j, E_j \rangle$ and output (JOINPROCEED, sid, $\mathsf{jsid}_j$, $\mathcal{M}_j$) to $\mathcal{I}$. On input (JOINCOMPLETE, sid, $\mathsf{jsid}_j$, tsk) from $\mathcal{S}$:
• Abort if $\mathcal{I}$ or $\mathcal{M}_j$ is honest and a record $\langle \mathcal{M}_j, *, * \rangle \in \mathsf{EdgeMembers}$ already exists.
• If $\mathcal{M}_j$ and $E_j$ are honest, set $\mathsf{tsk} \leftarrow \bot$.
• Else, verify that the provided tsk is eligible by checking that CheckkeyHonest(tsk) = 1 if $\mathcal{M}_j$ is honest and $E_j$ is corrupt, or CheckkeyCorrupt(tsk) = 1 if $\mathcal{M}_j$ is corrupt and $E_j$ is honest.
Insert $\langle \mathcal{M}_j, E_j, \mathsf{tsk} \rangle$ into EdgeMembers and output (JOINED, sid, $\mathsf{jsid}_j$) to $E_j$.

IoT-JOIN: On input (JOIN, sid, $\mathsf{jsid}_{ij}$, $D_i$, $E_j$) from $D_i$, create a join session record $\langle \mathsf{jsid}_{ij}, D_i, E_j \rangle$ and output (JOINPROCEED, sid, $\mathsf{jsid}_{ij}$, $D_i$) to $E_j$.


On input (JOINCOMPLETE, sid, $\mathsf{jsid}_{ij}$, dsk) from $\mathcal{S}$:
• Abort if $\mathcal{I}$ or $D_i$ is honest and a record $\langle D_i, *, * \rangle \in \mathsf{IoTMembers}$ already exists.
• If $D_i$ and $E_j$ are honest, set $\mathsf{dsk} \leftarrow \bot$.
• Else, verify that the provided dsk is eligible by CheckkeyHonest(dsk) = 1 if $D_i$ is honest, or CheckkeyCorrupt(dsk) = 1 if $D_i$ is corrupt.
Insert $\langle D_i, E_j, \mathsf{dsk} \rangle$ into IoTMembers and output (JOINED, sid, $\mathsf{jsid}_{ij}$, $D_i$) to $E_j$.

IoT-SIGN: For all $i \in [1, c_j]$, where $c_j$ is the number of $E_j$'s children IoT devices participating in the swarm attestation: On input (SIGN, sid, ssid, $m_i$) from $D_i$, where $m_i$ is the message to be signed by a child $D_i$, if $\mathcal{I}$ is honest and no entry $\langle D_i, E_j, * \rangle$ exists in IoTMembers, abort. Else, create a sign session record $\langle \mathsf{ssid}, D_i, E_j, m_i \rangle$ and output (SIGNPROCEED, sid, ssid) to $D_i$.
• On input (SIGNCOMPLETE, sid, ssid, $\sigma_i$) from $\mathcal{S}$, if $D_i$ and $E_j$ are honest, ignore the adversary's signature $\sigma_i$ and internally generate the signature for a fresh dsk or established dsk:
– Retrieve dsk from IoTKeys; if no key exists, set $\mathsf{dsk} \leftarrow \mathsf{Kgen}()$ and check CheckkeyHonest(dsk) = 1.
– Compute signature $\sigma_i \leftarrow \mathsf{sig}_{IoT}(\mathsf{dsk}, m_i)$, which internally runs PSEUDO-Kgen to create a short-term key pair $(s_i, o_i)$ for $D_i$, then check that $\mathsf{ver}_{IoT}(\sigma_i, m_i, o_i)$ and $\mathsf{identify}_{IoT}(\sigma_i, m_i, \mathsf{dsk}, s_i)$ are both equal to 1.
– Check that there is no $D' \neq D_i$ with key $\mathsf{dsk}'$ registered in IoTMembers or IoTKeys with $\mathsf{identify}_{IoT}(\sigma_i, m_i, \mathsf{dsk}', s_i) = 1$.
• Store $\langle D_i, \mathsf{dsk}, (s_i, o_i) \rangle$ in IoTKeys.
• Compute $\sigma^{j}_{[1-c_j]} \leftarrow \mathsf{agg}(\sigma_1, \sigma_2, \ldots, \sigma_{c_j})$.
• Output (sid, ssid, $\sigma^{j}_{[1-c_j]}$, $\{\sigma_i, o_i\}_{i \in [1, c_j]}$) to $E_j$.

AGGREGATION: Calculate the aggregation of the $v$ (total number of edges in a swarm) aggregated signatures as follows: $\sigma_{[1-v]} \leftarrow \mathsf{agg}(\sigma^{1}_{[1-c_1]}, \ldots, \sigma^{v}_{[1-c_v]})$, and store $\langle \sigma_{[1-v]}, \sigma^{j}_{[1-c_j]}, (\sigma_i, m_i, D_i, o_i)_{i \in [1, l]} \rangle$ in IoTSigned, where $l = \sum_{j=1}^{v} c_j$.

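Edge-SIGN: On input (SIGN, sid, ssid, $\mathcal{M}_j$, $\mu$, bsn) from $E_j$, where $j \in [1, v]$: If $\mathcal{I}$ is honest and no entry $\langle \mathcal{M}_j, E_j, * \rangle$ exists in EdgeMembers, abort. Else, create a sign session record $\langle \mathsf{ssid}, \mathcal{M}_j, E_j, \mu, \mathsf{bsn} \rangle$ and output (SIGNPROCEED, sid, ssid, $\mu$, bsn) to $\mathcal{M}_j$.
On input (SIGNCOMPLETE, ssid, $\sigma^{j}_{DAA}$) from $\mathcal{S}$:
• If $\mathcal{M}_j$ and $E_j$ are honest, ignore the adversary's signature and internally generate the signature for a fresh or established tsk:
– If $\mathsf{bsn} \neq \bot$, retrieve tsk from $\langle \mathcal{M}_j, \mathsf{bsn}, \mathsf{tsk} \rangle \in \mathsf{EdgeKeys}$. If no such tsk exists or $\mathsf{bsn} = \bot$, set $\mathsf{tsk} \leftarrow \mathsf{Kgen}()$. Check CheckkeyHonest(tsk) = 1 and store $\langle \mathcal{M}_j, \mathsf{bsn}, \mathsf{tsk} \rangle$ in EdgeKeys.
– Compute $\sigma^{j}_{DAA} \leftarrow \mathsf{sig}_{DAA}(\mathsf{tsk}, \mu, \mathsf{bsn})$ and check $\mathsf{ver}_{DAA}(\sigma^{j}_{DAA}, \mu, \mathsf{bsn}) = 1$.
– Check $\mathsf{identify}_{DAA}(\sigma^{j}_{DAA}, \mu, \mathsf{bsn}, \mathsf{tsk}) = 1$ and check that there is no $\mathcal{M}' \neq \mathcal{M}_j$ with key $\mathsf{tsk}'$ registered in EdgeMembers or EdgeKeys with $\mathsf{identify}_{DAA}(\sigma^{j}_{DAA}, \mu, \mathsf{bsn}, \mathsf{tsk}') = 1$.
• If $E_j$ and $\mathcal{M}_j$ are honest, store $\langle \sigma^{j}_{DAA}, \mu, \mathsf{bsn}, \mathcal{M}_j, E_j \rangle$ in EdgeSigned and output (SIGNATURE, sid, ssid, $\sigma^{j}_{DAA}$) to $E_j$.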

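Edge-VERIFY: On input (VERIFY, sid, $\mu$, bsn, $\sigma^{j}_{DAA}$, $\sigma_{[1-l]}$, $o_i$, KRL), $i \in [1, l]$ and $j \in [1, v]$, from $\mathcal{V}$:
• For each $j$, retrieve all pairs $(\mathsf{tsk}, \mathcal{M}_j)$ from $\langle \mathcal{M}_j, *, \mathsf{tsk} \rangle \in \mathsf{EdgeKeys}$ where $\mathsf{identify}_{DAA}(\sigma^{j}_{DAA}, \mu, \mathsf{bsn}, \mathsf{tsk}) = 1$. Set $f_j = 0$ if at least one of the following conditions holds:
CHECK 1. More than one key tsk was found.
CHECK 2. $\mathcal{I}$ is honest and no pair $(\mathsf{tsk}, \mathcal{M}_j)$ was found.
CHECK 3. There is an honest $\mathcal{M}_j$ but no entry $\langle *, \mu, \mathsf{bsn}, \mathcal{M}_j \rangle \in \mathsf{EdgeSigned}$ exists.
CHECK 4. There is a $\mathsf{tsk}^* \in \mathsf{KRL}$ where $\mathsf{identify}_{DAA}(\sigma^{j}_{DAA}, \mu, \mathsf{bsn}, \mathsf{tsk}^*) = 1$ and no pair $(\mathsf{tsk}, \mathcal{M}_j)$ for an honest $\mathcal{M}_j$ was found.
• If $f_j \neq 0$, set $f_j = \mathsf{ver}_{DAA}(\sigma^{j}_{DAA}, \mu, \mathsf{bsn})$.
• Add $\langle \sigma^{j}_{DAA}, \mu, \mathsf{bsn}, \mathsf{KRL}, f_j \rangle$ to EdgeVer, then proceed to verify the children IoT signatures.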
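The four Edge-VERIFY checks can be sketched as a single function over the functionality's records. This is one possible reading of the interface, given as an illustration only: the record layouts, the `honest_tcs` set, and the `identify` and `ver_daa` callbacks are assumptions, and CHECK 3 is interpreted as applying to honest TCs among the matched pairs.

```python
def edge_verify(sigma, mu, bsn, krl, edge_keys, edge_signed,
                honest_tcs, issuer_honest, identify, ver_daa) -> int:
    """Return f_j for one DAA signature, following CHECK 1 to CHECK 4.

    edge_keys:   iterable of (tc, bsn, tsk) records (EdgeKeys)
    edge_signed: iterable of (sigma, mu, bsn, tc) records (EdgeSigned)
    identify, ver_daa: callbacks standing in for identify_DAA and ver_DAA
    """
    # Keys whose owner is identified as the signer of sigma.
    matches = [(tsk, tc) for tc, _bsn, tsk in edge_keys
               if identify(sigma, mu, bsn, tsk)]
    honest_matches = [tc for _tsk, tc in matches if tc in honest_tcs]
    signed = {(m, b, tc) for _sig, m, b, tc in edge_signed}

    check1 = len({tsk for tsk, _tc in matches}) > 1
    check2 = issuer_honest and not matches
    check3 = any((mu, bsn, tc) not in signed for tc in honest_matches)
    check4 = (any(identify(sigma, mu, bsn, t) for t in krl)
              and not honest_matches)
    if check1 or check2 or check3 or check4:
        return 0
    return int(ver_daa(sigma, mu, bsn))
```

Running the structural checks before `ver_daa` mirrors the order of the interface: the cryptographic verification is consulted only when none of the consistency checks has already rejected the signature.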

IoT-VERIFY: Output 0 if $l \neq k$; else, retrieve $\langle \sigma_{[1-v]}, \sigma^{j}_{[1-c_j]}, (\sigma_i, m_i, D_i, o_i)_{i \in [1, l]} \rangle$ from IoTSigned. For each $i$, retrieve all pairs $(\mathsf{dsk}, D_i)$ from IoTMembers and $\langle D_i, \mathsf{dsk}, (s_i, o_i) \rangle \in \mathsf{IoTKeys}$ where $\mathsf{identify}_{IoT}(\sigma_i, m_i, \mathsf{dsk}, (s_i, o_i)) = 1$. Set $f_i = 0$ if at least one of the following conditions holds:
CHECK 1. More than one key dsk was found.
CHECK 2. If the parent edge $E_j$ is honest, no $(\mathsf{dsk}, D_i)$ was found.
CHECK 3. There is an honest $D_i$ but no entry $\langle *, *, (\sigma_i, m_i, D_i, *) \rangle \in \mathsf{IoTSigned}$ exists.
CHECK 4. There is a $\mathsf{dsk}^* \in \mathsf{IoTKRL}$ where $\mathsf{identify}_{IoT}(\sigma_i, m_i, \mathsf{dsk}^*, (s_i, o_i)) = 1$ and no $(\mathsf{dsk}, D_i, (s_i, o_i))$ for an honest $D_i$ was found.
• If $f_i \neq 0$, set $f_i = \mathsf{ver}_{IoT}(\sigma_i, m_i, o_i)$ and add $\langle \sigma_i, m_i, \mathsf{IoTKRL}, f_i \rangle$ to IoTVer.
• Output (VERIFIED, 0, sid, $\sigma^{j}_{DAA}$, $\sigma_{[1-k]}$, $y_i$) to $\mathcal{V}$ if there exists at least one $f_i$ or $f_j$ equal to 0 for all $j \in [1, v]$ and $i \in [1, l]$. Otherwise, output (VERIFIED, 1, sid, $\sigma^{j}_{DAA}$, $\sigma_{[1-l]}$, $o_i$).

Edge-LINK: On input (LINK, sid, $\sigma$, $\mu$, $\sigma'$, $\mu'$, bsn) from some party $\mathcal{V}$ with $\mathsf{bsn} \neq \bot$:


• Output $\bot$ to $\mathcal{V}$ if at least one signature tuple $(\sigma, \mu, \mathsf{bsn})$ or $(\sigma', \mu', \mathsf{bsn})$ is not valid.
• For each tsk in EdgeMembers and EdgeKeys, compute $b = \mathsf{identify}_{DAA}(\sigma, \mu, \mathsf{bsn}, \mathsf{tsk})$ and $b' = \mathsf{identify}_{DAA}(\sigma', \mu', \mathsf{bsn}, \mathsf{tsk})$; set $f = 0$ if $b \neq b'$ and $f = 1$ if $b = b' = 1$.
• If $f$ is not defined yet, set $f = \mathsf{link}(\sigma, \mu, \sigma', \mu', \mathsf{bsn})$.
Output (LINK, sid, $f$) to $\mathcal{V}$.
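The Edge-LINK logic can be sketched as follows. The snippet is an illustration under assumptions: `identify`, `ver_daa`, and `link` are callbacks standing in for the algorithms supplied at setup, `None` stands for $\bot$, and the loop returns at the first key that determines $f$, which is a modeling choice made for the example.

```python
def edge_link(sig1, mu1, sig2, mu2, bsn, known_tsks,
              identify, ver_daa, link):
    """Return None (for ⊥) on invalid input, otherwise the link bit f."""
    if not (ver_daa(sig1, mu1, bsn) and ver_daa(sig2, mu2, bsn)):
        return None
    for tsk in known_tsks:  # keys from EdgeMembers and EdgeKeys
        b1 = bool(identify(sig1, mu1, bsn, tsk))
        b2 = bool(identify(sig2, mu2, bsn, tsk))
        if b1 != b2:
            return 0        # only one signature belongs to this key
        if b1 and b2:
            return 1        # both signatures belong to this key
    # No known key decided the outcome: fall back to the link algorithm.
    return link(sig1, mu1, sig2, mu2, bsn)
```

Consulting the stored keys before the `link` algorithm means that the functionality's own records take precedence over the algorithm supplied by the simulator.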

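Edge-TRACE: On input (TRACE, sid, $\sigma^{j}_{DAA}$, $\mu$, bsn) from $\mathcal{V}$, retrieve all pairs $(\mathsf{tsk}, \mathcal{M}_j)$ from $\langle \mathcal{M}_j, *, \mathsf{tsk} \rangle \in \mathsf{EdgeKeys}$ where $\mathsf{identify}_{DAA}(\sigma^{j}_{DAA}, \mu, \mathsf{bsn}, \mathsf{tsk}) = 1$. Output (TRACE, sid, $E_j$) to $\mathcal{V}$.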

IoT-LINK: On input (LINK, sid, $\sigma$, $m$, $\sigma'$, $m'$) from $E_j$, output $\bot$ to $E_j$ if at least one signature tuple $(\sigma, m)$ or $(\sigma', m')$ is not valid (verified via the verify interface with $\mathsf{IoTKRL} = \emptyset$), else:
• For each $(\mathsf{dsk}, s_i)$ in IoTMembers and IoTKeys, compute $b = \mathsf{identify}_{IoT}(\sigma, m, \mathsf{dsk}, s)$ and $b' = \mathsf{identify}_{IoT}(\sigma'_i, m', \mathsf{dsk}', s')$, and set $f = 0$ if $b \neq b'$ and $f = 1$ if $b = b' = 1$.
• If $f$ is not defined yet, set $f = \mathsf{link}(\sigma, m, \sigma', m')$.
• Output (LINK, sid, $f$) to $E_j$.

IoT-TRACE: On input (TRACE, sid, $\sigma_i$, $m_i$) from $E_j$, retrieve all pairs $(\mathsf{dsk}, D_i)$ from IoTMembers and $\langle D_i, \mathsf{dsk}, (s_i, o_i) \rangle \in \mathsf{IoTKeys}$ where $\mathsf{identify}_{IoT}(\sigma_i, m_i, \mathsf{dsk}, (s_i, o_i)) = 1$. Output (TRACE, sid, $D_i$) to $E_j$.

6.3 Realizing Security Requirements

The complete security model of the intelligence part of PRIVÉ expressed in UC terms, including the ideal

functionality interfaces for all the internal phases and

some essential checks, is presented in § 6.2. Before

proceeding with the UC security proof in § 7, we first

map the modeled interfaces to the security properties

outlined in § 4.

Anonymity (SP1): The anonymity of honest edge

devices, equipped with a valid TC, and their benign

(children) IoT devices is guaranteed by F due to

the random choice of tsk ($E_j$'s private key) and dsk ($D_i$'s private key) for the construction of every DAA

and IoT signature as part of the Edge/IoT-SIGN in-

terfaces. In case of corrupt devices (either Edge or

IoT), S provides the signature, which conveys the

signer’s identity, as the signing key is extracted from

the respective device key pair. This reflects that the

anonymity of the whole swarm is only guaranteed if

both the Edge and IoT devices are honest.

Traceability (SP2): CheckkeyHonest, described in § 6.2, prevents registering an honest tsk/dsk in the Edge/IoT-JOIN interfaces that matches an existing signature, so that conflicts can be avoided and signatures can always be traced back to the origin

devices. Moreover, in the context of Revocation

(SP3), CHECK4 guarantees that valid signatures in

the Edge/IoT-VERIFY interfaces are not revoked

due to the identify algorithm being deterministic.

Correctness (SP4): When an honest device success-

fully creates a signature, honest Verifiers will always

accept this signature. This is because honestly generated signatures in the Edge/IoT-SIGN interfaces pass through the $\mathsf{ver}_{DAA}$ and $\mathsf{ver}_{IoT}$ checks before being output to the external environment.

Non-frameability (SP5): The non-frameability property guarantees that a signature produced by an adversary cannot be linked to a legitimate one created by the target device.

In this context, CHECK3 in the Edge/IoT-VERIFY

interfaces ensures that if the edge (respectively IoT)

device is honest, then no adversary can create signa-

tures that are identified to be signed by the edge (re-

spectively IoT) device. This extends to the unforge-

ability (SP6) property, which dictates that it is com-

putationally infeasible to forge signatures.

Linkability (SP7): CheckkeyCorrupt defined in § 6.2

prevents a corrupt tsk/dsk, matching with an exist-

ing signature, from being added to the lists of existing

members and keys, thus enabling the correct linkabil-

ity of signatures.

7 PRIVÉ SECURITY PROOF (SKETCH)

Since we have defined the UC security model of PRIVÉ, we can now proceed with the security proof of the proposed scheme, leveraging the Discrete Logarithm (DL) and Decisional Diffie-Hellman (DDH) assumptions. These assumptions are stated as follows:

Definition 7.1. (The Discrete Logarithm (DL) assumption (McCurley, 1990)) Given $y \in \mathbb{G}_2$, it is computationally infeasible to find an integer $x$ such that $g_2^{x} = y$.

Definition 7.2. (Decisional Diffie-Hellman (DDH) assumption (Boneh, 1998)) Let $\mathbb{G}$ be a group generated by some $g$. Given $g^a$ and $g^b$, for random and independent integers $a, b \in \mathbb{Z}_q$, a computationally bounded adversary cannot distinguish $g^{ab}$ from any random element $g^r$ in the group.

Theorem 7.1. The PRIVÉ protocol securely realizes $\mathcal{F}$ using random oracles and static corruptions when the DL and DDH assumptions hold, if the CL signature (Camenisch and Lysyanskaya, 2004) and the BLS signature (Boneh et al., 2003a) are unforgeable.

Proof of Theorem 7.1: We first present a high-level

description of our proof that consists of a sequence

of games starting with the real-world protocol exe-

cution in Game 1. Moving on to the next game, we


construct one entity, $\mathcal{C}$, that runs the real-world protocol for all honest parties. Then, we split $\mathcal{C}$ into two pieces: an ideal functionality $\mathcal{F}$ and a simulator $\mathcal{S}$ that simulates the real-world parties. Initially, we start with an "empty" functionality $\mathcal{F}$. With each game, we gradually change $\mathcal{F}$ and update $\mathcal{S}$ accordingly, moving from the real world to the ideal world, and culminating in the full PRIVÉ $\mathcal{F}$ being realized as part of the ideal world, thus proving our proposed security model presented in § 6.2. The ultimate goal of our proof is to prove the indistinguishability between Game 1 and Game 14, i.e., between the complete real world and the fully functional ideal world. This is done by proving that each game is indistinguishable from the previous one, starting from Game 1 and reaching Game 14.
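The step from pairwise indistinguishability of consecutive games to indistinguishability of Game 1 and Game 14 is the standard hybrid argument. It can be written as the bound below, where $\varepsilon_i$ is a label introduced here for the environment's distinguishing advantage between Game $i$ and Game $i+1$; it is not notation from the paper.

```latex
\begin{align*}
\big|\Pr[\mathcal{E}(\text{Game } 1) = 1] - \Pr[\mathcal{E}(\text{Game } 14) = 1]\big|
  \;\le\; \sum_{i=1}^{13} \big|\Pr[\mathcal{E}(\text{Game } i) = 1] - \Pr[\mathcal{E}(\text{Game } i+1) = 1]\big|
  \;=\; \sum_{i=1}^{13} \varepsilon_i .
\end{align*}
```

The inequality is the triangle inequality applied along the chain of games. Since the number of hops is a constant, the sum is negligible whenever every $\varepsilon_i$ is negligible.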

Our proof of Theorem 7.1 starts with setting up

the real-world games (Game 1 and Game 2), then in-

troducing the ideal functionality in Game 3. At this

stage, the ideal functionality F only forwards its in-

puts to the simulator and simulates the real world.

From Game 4 onward, F starts executing the setup

interface on behalf of the Issuer. Moving on to Game

5, F handles simple verification and linking checks

without performing any detailed checks at this stage;

i.e., it only checks if the device belongs to a revoca-

tion list separately. In Games 6 − 7, F executes the

Join interface while performing checks to maintain

the registered keys’ consistency. It also adds checks

that allow only the devices that have successfully been

enrolled to create signatures. Game 8 proves the anonymity of PRIVÉ by letting $\mathcal{F}$ handle the sign queries on behalf of honest devices by creating swarm attestations using freshly generated random keys instead of running the sign algorithm using the device's signing key. At the end of this game, we prove that by relying on DDH and DL constructions, an external environment will notice no change from previous games where the real-world sign algorithm was executed as explained in our UC model (§ 6.2). The sequence of games is explained in Figure 3. We use the "≈" sign to express games' indistinguishability. $\mathcal{F}_i$ and $\mathcal{S}_i$ in Figure 3 represent the ideal functionality $\mathcal{F}$ and the simulator $\mathcal{S}$, respectively, as defined in the $i$-th game for $3 \le i \le 14$. Next, we present a sketch UC proof for Theorem 7.1.

Proof. Game 1 (Real World): This is PRIVÉ.

Game 2 (Transition to the Ideal World): An entity

C is introduced. C receives all inputs from the hon-

est parties and simulates the real-world protocol for

them. This is equivalent to Game 1, as this change is

invisible to E .

Game 3 (Transition to the Ideal World with Differ-

ent Structure): We now split C into two parts, F and

S, where F behaves as an ideal functionality. It re-

ceives all the inputs and forwards them to S, which

simulates the real-world protocol for honest parties

and sends the outputs to F . F then forwards these

outputs to E . This game is essentially equivalent to

Game 2 with a different structure.

Game 4 ($\mathcal{F}$ handles the Setup): $\mathcal{F}$ now behaves differently in the setup interface, as it stores the algorithms defined in Section 6.2. For an honest $\mathcal{I}$, $\mathcal{F}$ also checks the structure of sid, which represents the Issuer's unique session identifier, and aborts if the check fails. When $\mathcal{I}$ is honest, $\mathcal{S}$ will start simulating it. Since $\mathcal{S}$ is now running $\mathcal{I}$, it knows its secret key. In case $\mathcal{I}$ is corrupt, $\mathcal{S}$ extracts $\mathcal{I}$'s secret key from $\pi_{ipk}$ and proceeds to the setup interface on behalf of $\mathcal{I}$. By the simulation soundness of $\pi_{ipk}$, this game transition is indistinguishable for the adversary (Game 4 ≈ Game 3).

Game 5 (F handles the verification and Linking):

F now performs the verification and linking checks

instead of forwarding them to S. There are no pro-

tocol messages; the outputs are exactly as in the

real-world protocol. However, the only difference is that the verification algorithms that $\mathcal{F}$ uses (namely $\mathsf{ver}_{DAA}$ and $\mathsf{ver}_{IoT}$) do not contain revocation checks, so knowing KRL and IoTKRL for corrupt edge and IoT devices, respectively, $\mathcal{F}$ performs these checks separately, and the outcomes are equal (Game 5 ≈ Game 4).

Game 6 ($\mathcal{F}$ Handles the join): The join interface of $\mathcal{F}$ is now changed. Specifically, $\mathcal{F}$ stores the members that joined in its records. If $\mathcal{I}$ is honest, then $\mathcal{F}$ stores the secret keys tsk and $x_L$ extracted from $\pi_1$ and $\pi_L$ by $\mathcal{S}$ for corrupt edge and IoT devices, respectively. $\mathcal{F}$ sets the tracing key for each honest edge device (as it already knows its key tsk) or calculates it from the extracted tsk (for corrupt edge devices). Only if the edge or the IoT device is already registered in EdgeMembers or IoTMembers, $\mathcal{F}$ will abort the protocol. However, $\mathcal{I}$ has already tested this case before continuing with the query JOINPROCEED; thus, $\mathcal{F}$ will not abort. Knowing tsk and all children dsks, $\mathcal{F}$ proceeds with adding the children IoT devices into IoTMembers on behalf of edge devices. Therefore, $\mathcal{F}$ and $\mathcal{S}$ can interact to simulate the real protocol in all cases. Due to the simulation soundness of $\pi_1$ and $\pi_L$, Game 6 ≈ Game 5.

Game 7 (F Further Handles the join): If I is hon-

est, then F only allows the devices that joined to sign.

An honest edge device will always check whether it

joined with a TC in the real-world protocol, so there

is no difference for honest edge devices. In the case

that an honest $\mathcal{M}_j$ performs a join protocol with a corrupt edge device $E_j$ and an honest Issuer, $\mathcal{S}$ will make a join query with $\mathcal{F}$ to ensure that $\mathcal{M}_j$ and $E_j$ are in EdgeMembers. Also, only joined IoT devices with certified public keys can create signatures. The parent edge device will check this before verifying its children's IoT signatures. Therefore Game 7 ≈ Game 6.

Figure 3: Overview of the UC PRIVÉ Proof.