Automated Bone Fracture Detection System Using YOLOv7 with Secure Email Data Sharing

Swarada Gade¹, Vashita Nukala¹, Tanaya Sutar¹, Shravani Walunj¹, Avinash Golande², Amruta Hingmire² and Vinodkumar Bhutnal²

¹ Department of Computer Engineering, JSPM's Rajarshi Shahu College of Engineering, Pune, India

² School of Engineering and Technology, Pimpri Chinchwad University, Pune, India

Keywords: Automated Bone Fracture Detection, Deep Learning Model, X-Ray Imaging, Automated Diagnosis, Email Data Exchange, DICOM Images, Diagnostic Reporting, Secure Data Transmission, Computer Vision, Medical Imaging, Healthcare Technology, Image Analysis, Radiology Automation.

Abstract: The growing demand for more precise and timely diagnosis of bone fractures has driven the integration of advanced technologies into medical imaging. This paper describes the development and implementation of a deep learning system that automatically detects bone fractures in X-ray images, using the YOLOv7 model to improve diagnostic accuracy and efficiency. The system includes a secure mechanism for transmitting medical images and reports by email. It also supports direct downloading of DICOM images and automatic generation of simple diagnostic reports, removing sequential manual steps that delay feedback to patients. The approach uses deep learning techniques to address critical healthcare needs, streamline diagnostic workflows, and improve patient outcomes. YOLOv7's single-stage design makes real-time fracture detection possible, and data augmentation during training improves robustness and reliability across different scenarios. Through a systematic methodology of data preparation, model training, and evaluation, the framework demonstrates the system's potential as a reliable asset in clinical applications. The proposed model reduces dependence on manual procedures, speeds up clinical decisions, and standardizes decision processes through consistent results. This framework is therefore a novel contribution to modern medical diagnostics.

1 INTRODUCTION

Accurate diagnosis of bone fractures is an integral part of optimal patient care, and X-ray imaging plays a significant role in identifying and managing such injuries. Traditionally, X-ray diagnosis has required the engagement and expertise of a radiologist who examines each film for fractures. The reliability of human interpretation can vary with factors such as fatigue and cognitive load, which can affect how diagnostic images are read.

Given these challenges, this work aims to build a bone fracture detection system for X-ray images using YOLOv7, a real-time object detection model. Applying the model to fracture detection in diagnostic images is intended to improve accuracy and, in turn, the efficiency of clinical decision-making.

The system aims to reduce manual processing through expert-grounded design choices that support effective fracture detection. It also enables collaborative care by ensuring that patient information can be shared efficiently, which is necessary for timely intervention. The primary benefit of the system is improved diagnostic accuracy. Using deep learning, the system can process fine detail in X-rays to detect very small fractures that the human eye might otherwise miss. Early detection followed by prompt intervention could therefore lead to better patient outcomes. The system can also help standardize interpretation across healthcare facilities, reducing the variability that arises because one reader's interpretation may differ from another's.

Gade, S., Nukala, V., Sutar, T., Walunj, S., Golande, A., Hingmire, A. and Bhutnal, V.

Automated Bone Fracture Detection System Using YOLOv7 with Secure Email Data Sharing.

DOI: 10.5220/0013624300004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 538-549

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

This technology can also support medical research. It can enable large-scale studies of X-ray images that may advance the understanding of fracture epidemiology, risk factors, and treatment methods. Such data may also contribute to new technologies for treating bone fractures that reduce their occurrence and improve patient recovery.

The integration of emerging diagnostic technologies, such as explainable AI, would further enhance transparency and trust in the decision-making process, allowing healthcare providers to understand and validate the system's conclusions. The system also aligns with the green Internet of Things framework, which enables secure data exchange between devices while maintaining the integrity of existing healthcare infrastructure. Ongoing technological advances in the field further support the accuracy of medical imaging.

Studies combining traditional diagnostic methods

with modern technological integrations bring

advancements to evolving models of investigation

and data management. This system’s approach to

detecting bone fractures, combining deep learning

algorithms with secure data management, offers an

original progression in enhancing diagnostic

methods. By stimulating diagnostic accuracy,

improving workflow, and promoting positive patient

outcomes, this project aims to integrate cutting-edge

technology into clinical practice. As healthcare

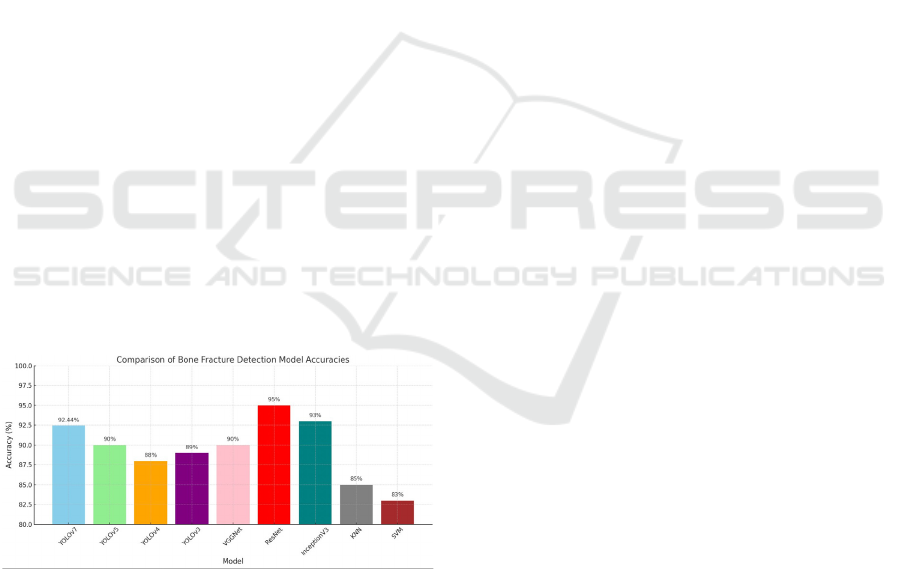

Figure 1: Accuracy comparison.

professionals adopt this modern approach, they can

support the generation of informed decisions,

leading to enhanced healthcare delivery and

improved patient care.

2 RELATED WORK

M. Nandyala, P. Kanumuri, and M. Garlapati (Nandyala, Kanumuri et al. 2023) review and analyze the automatic diagnosis of fractures using deep learning techniques. The authors attempt to identify which deep learning models for automatic fracture detection and classification from medical images are the most promising in terms of efficiency and reliability. To this end, they analyze the existing literature extensively and present the main methodologies, performance metrics, and validation processes used across a variety of studies. By synthesizing these findings, the review identifies trends, strengths, and limitations of current approaches and offers insights into best practices for developing and implementing deep learning algorithms for fracture diagnosis.

S. Shelmerdine, H. Liu, O. Arthurs, R. White, and N. Sebire (Shelmerdine, Liu et al. 2022) note that AI for fracture detection has been a dominant line of research and commercialization in medicine. Children are particularly affected by inadvertent over- or under-diagnosis of injuries, often as a result of missed fractures. The study examines the diagnostic performance of AI tools for pediatric fracture detection on imaging and, where possible, compares it with that of human readers. In a systematic review covering 2011 to 2021, databases including MEDLINE, Embase, and the Cochrane Library were searched. Of 362 articles identified, nine were eligible; fracture detection was the most frequent task, and the elbow was the most studied region. Most studies used data from a single institution, usually applied deep learning algorithms, and lacked external validation. Reported AI accuracy ranged from 88.8% to 97.9%. In studies that compared AI with human readers, AI was found to be more sensitive, although the difference was not significant. The analysis pointed to poorly described algorithms and limited generalizability, owing to a lack of effective validation and heterogeneous datasets. Future research would benefit from multicenter studies with diverse patient populations worldwide, which would also form the basis of real-world assessments. Such research could provide a firm foundation for tools that help doctors assess pediatric fractures.


S. Rathor and D. P. Yadav (Rathor, Yadav et al. 2020) address the importance of automated fracture detection, given how frequent fractures are and the drawbacks of identifying them manually in X-ray images. They propose a DNN model to differentiate between healthy and fractured bones. Data augmentation is used to counter overfitting on the small original dataset, yielding a dataset of 4,000 images. The DNN outperforms earlier techniques, with an accuracy of 92.4% in differentiating between fractured and healthy bone. Certain subgroups yield accuracy rates of 95% and 93%, demonstrating the model's resilience.

S. Yang, W. Cao, B. Yin, C. Feng, S. He, and G. Fan (Yang, Cao et al. 2020) present a thorough review of the diagnostic efficiency of deep learning for orthopedic fractures. The review surveys studies that have applied deep learning algorithms to medical imaging, including X-rays and CT scans. The authors examine how these algorithms performed on their diagnostic tasks and how their accuracy compared with that of standard methods. The article also addresses factors that affect diagnostic performance, including the models used, dataset quality, and imaging modality.

J. Zou and M. R. Arshad (Zou and Arshad, 2024) study an enhanced YOLOv7 algorithm customized for detecting fractures throughout the whole body in medical images. The authors start from the difficulty of reliable fracture detection: fractures vary in size and location, so dependable and efficient tools are needed. They evaluate the approach thoroughly on a large database of medical images containing a variety of bone fractures.

M. Puttagunta and S. Ravi (Puttagunta and Ravi, 2021) examine numerous DL architectures, from CNNs to RNNs to hybrid models, and explain their strengths and weaknesses across medical imaging modalities such as X-rays, MRI, CT, and ultrasound. The review discusses how models can be trained on large datasets to recognize patterns that may indicate diseases or abnormalities such as tumors or fractures. The authors also present cases in which deep learning systems have been used in clinical settings, not to replace radiologists or other medical personnel but to help them reach the most accurate and timely diagnosis possible.

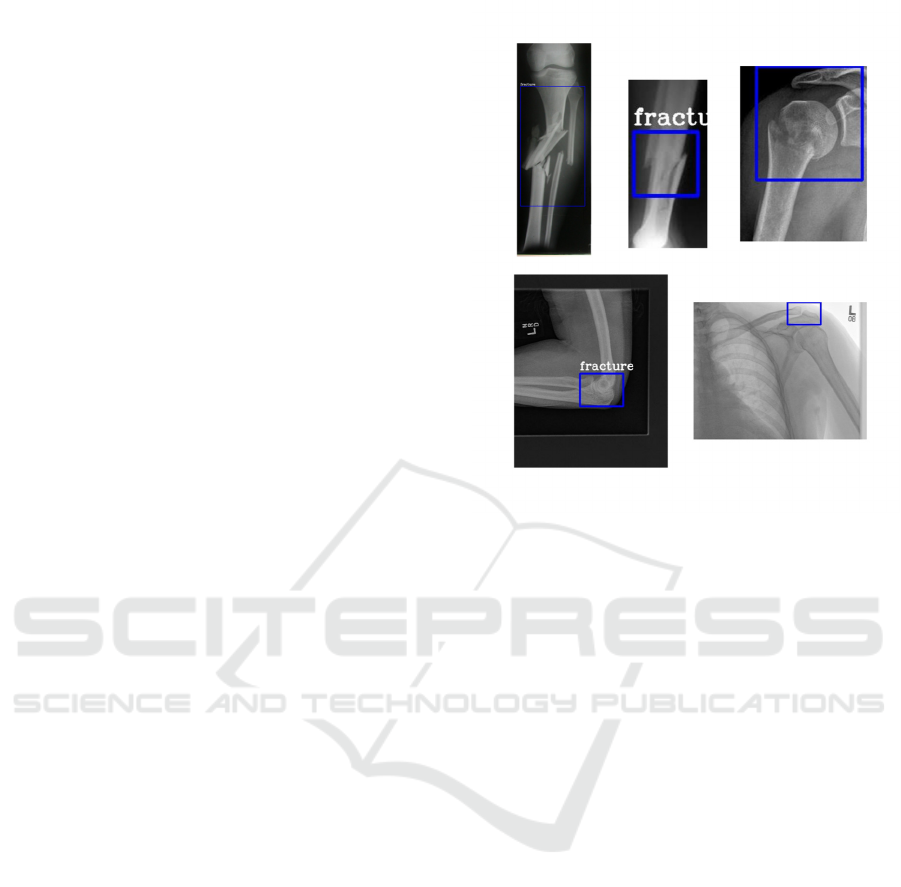

Figure 2: Bone fracture detection output.

W. Kim, S. Kim, Y. Kim, G. Moon, Y. Jeong, and H. S. Choi (Kim, Kim et al. 2022) propose a novel approach that leverages advanced object detection algorithms to automatically identify and classify facial bone fractures in medical imaging data. The paper reports the system's performance metrics and compares its diagnostic accuracy with that of conventional methods. The authors point out that their CA-FBFD system would reduce diagnosis time and identify fractures more consistently, which would substantially help radiologists and other medical professionals in clinical settings.

L. T. Martin et al. (Martin, Nelson et al. 2022) argue that the increasing diversity of data has the potential to steer health decision-making in new directions, but that the problems involved are profound. The difficulties include establishing data-sharing and interoperability standards, developing new methodological and workforce models, and, most importantly, ensuring strong data stewardship and governance to protect the integrity of public health data. The article draws on a literature review, an environmental scan, and insights from the National Commission to Transform Public Health Data Systems to discuss the major obstacles to data sharing and reuse. It highlights opportunities for the sector to close these gaps through improved interoperability and better data ownership practices.

INCOFT 2025 - International Conference on Futuristic Technology


S. Dash, S. K. Shakyawar, M. Sharma, and others (Dash, Shakyawar et al. 2019) discuss the challenges associated with using big data in healthcare, including data privacy, interoperability, and the need for skilled professionals to assess and interpret the data. They review a variety of big data management techniques and technologies, such as frameworks for processing large datasets, data integration tools, and data storage options. These techniques and technologies can be applied to improve patient outcomes, manage healthcare more effectively, and ultimately transform the way healthcare is delivered.

M. Aiello, G. Esposito, and G. Pagliari (Aiello, Esposito et al. 2021) begin by providing

context on the importance of DICOM as a

foundational standard that governs the storage,

transmission, and sharing of medical imaging data.

The paper highlights DICOM’s structured data

format, which includes not only image data but also

rich metadata. This metadata encompasses essential

information such as patient demographics, imaging

protocols, and clinical notes, facilitating better

organization and retrieval of information. Such

structured data is crucial for effective analysis in big

data applications.

Y. Sun et al. (Sun, Li et al. 2023) aim to improve detection accuracy and efficiency in various applications, starting from the limitations of existing YOLO models, particularly their performance in complex environments and under varying conditions. To address these challenges, the authors make several major modifications to the YOLOv7 framework, chief among them a Parallel Backbone Architecture (PBA) that improves feature extraction at multiple scales. To validate the resulting PBA-YOLOv7 model, the authors carry out extensive experiments against the baseline YOLOv7 and other state-of-the-art object detectors on benchmark datasets, assessing precision, recall, and mean Average Precision (mAP). PBA-YOLOv7 performs considerably better than its predecessors, especially in complex scenarios involving occlusion or changes in object size.

A related study was conducted by Shahnaj

Rahman, Parvin, and Abdur (Shahnaj, Rahman et al.

2024), wherein the proposed model was

strengthened by integrating different imaging

modalities like CT scans, X-rays, and MRI scans,

resulting in a more extensive collection of images.

They applied advanced architectures in deep

learning, such as convolutional neural networks

(CNNs), to appropriately analyze these different

types of images. Their paper highlights the

importance of multimodal data in capturing the

intensity of fractures, as the appearance can vary

greatly from one imaging method to another.

A. Gupta (Gupta, 2024) discusses various optimization algorithms such as

Stochastic Gradient Descent and its variants. The

author also studies optimizers like Adam and

AdaGrad, highlighting their individual advantages

when applied in specific cases. The paper compares

the performance of these optimizers using empirical

data, providing valuable insights into their

effectiveness across different deep learning tasks.
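The update rules compared in this line of work can each be written in a few lines. The sketch below is our own illustration, not code from Gupta (2024): it applies plain SGD, AdaGrad, and Adam to the toy objective f(w) = 0.5·||w||², and the learning rates, β1 = 0.9, β2 = 0.999, and ε = 1e-8 are commonly used defaults assumed here.

```python
import numpy as np

def grad(w):
    # Gradient of the toy objective f(w) = 0.5 * ||w||^2, minimized at w = 0.
    return w

def sgd(w, lr=0.1, steps=200):
    for _ in range(steps):
        w = w - lr * grad(w)  # w <- w - lr * g
    return w

def adagrad(w, lr=0.5, eps=1e-8, steps=200):
    g_sq = np.zeros_like(w)  # running sum of squared gradients, per parameter
    for _ in range(steps):
        g = grad(w)
        g_sq += g * g
        w = w - lr * g / (np.sqrt(g_sq) + eps)  # effective step shrinks as g_sq grows
    return w

def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=200):
    m = np.zeros_like(w)  # exponential moving average of gradients
    v = np.zeros_like(w)  # exponential moving average of squared gradients
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w

w0 = np.array([3.0, -2.0])
for name, optimizer in [("SGD", sgd), ("AdaGrad", adagrad), ("Adam", adam)]:
    print(name, optimizer(w0))
```

AdaGrad's per-parameter step decays as squared gradients accumulate, while Adam's moving averages let the step size recover, which is one reason Adam is often preferred for long training runs.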

A new loss function, Distance-IoU (DIoU) Loss, was proposed by Z. Zheng et al. (Zheng, Wang et al. 2019) to improve the accuracy and efficiency of bounding-box regression. The authors highlight

shortcomings of existing loss functions like

Intersection over Union (IoU) and its variants, which

often encounter convergence issues, speed

limitations, and optimization stability problems. To

support their argument, the authors conducted

extensive experiments using well-known benchmark

datasets such as Pascal VOC and COCO. The results

showed that models utilizing Distance-IoU Loss

performed better than those using conventional IoU-

based loss functions. The paper provides a robust

comparison of detection accuracy and training time,

offering strong evidence to validate the effectiveness

of the proposed method.
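For reference, the DIoU loss of Zheng et al. adds a centre-distance penalty to the IoU loss: L_DIoU = 1 − IoU + ρ²(b, b_gt)/c², where ρ is the Euclidean distance between the centres of the predicted box b and the ground-truth box b_gt, and c is the diagonal length of the smallest box enclosing both. The following is a minimal single-box sketch assuming (x1, y1, x2, y2) corner coordinates; it is not the authors' implementation and omits batching and degenerate-box handling.

```python
def diou_loss(pred, target):
    """Distance-IoU loss for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Intersection area (zero when the boxes do not overlap).
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = (px2 - px1) * (py2 - py1) + (tx2 - tx1) * (ty2 - ty1) - inter
    iou = inter / union

    # Squared distance between the two box centres (rho^2).
    rho2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 + ((py1 + py2) / 2 - (ty1 + ty2) / 2) ** 2

    # Squared diagonal of the smallest box enclosing both boxes (c^2).
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2

    return 1.0 - iou + rho2 / c2

far = diou_loss((0, 0, 1, 1), (2, 0, 3, 1))   # IoU = 0, centres 2 units apart
near = diou_loss((1, 0, 2, 1), (2, 0, 3, 1))  # IoU = 0, boxes touching
print(far, near)
```

Both example predictions have IoU = 0, so a plain IoU loss would score them identically; the distance term gives about 1.4 for the distant box and 1.2 for the adjacent one, which supplies a gradient toward the target even without overlap.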

X. Li et al. (Li, Wang et al. 2020) introduced a novel loss function designed to improve dense object detection, particularly in situations with class imbalance and overlapping bounding boxes. The authors propose a "generalized focal loss" that addresses these challenges through two key ideas: a quality estimate for each bounding box and a distribution-based representation for box regression. The quality estimate allows the model to prioritize boxes according to their confidence and localization relevance, helping to mitigate the impact of noise from less relevant detections.
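The quality-estimation idea can be illustrated with the Quality Focal Loss term of the same paper, QFL(σ) = −|y − σ|^β ((1 − y) log(1 − σ) + y log σ), where σ is the predicted score and y ∈ [0, 1] is a soft target such as the IoU between the predicted and ground-truth boxes. The sketch below is a scalar version assuming β = 2, the setting we understand the paper to use; the distribution-based box-regression term is not shown.

```python
import math

def quality_focal_loss(logit, quality, beta=2.0):
    """Scalar Quality Focal Loss, after Li et al. (2020).

    logit:   raw classification output for the object's class
    quality: soft target in [0, 1], e.g. the IoU of the predicted box with
             the ground truth (0 for background locations)
    """
    sigma = 1.0 / (1.0 + math.exp(-logit))  # predicted joint class-quality score
    eps = 1e-12  # guards against log(0)
    bce = -((1.0 - quality) * math.log(1.0 - sigma + eps) + quality * math.log(sigma + eps))
    # Modulating factor: predictions already close to the soft target contribute little.
    return abs(quality - sigma) ** beta * bce

# A confident false positive on background is penalized far more than a confident
# correct rejection, and a score that matches the target exactly costs nothing.
print(quality_focal_loss(3.0, 0.0), quality_focal_loss(-3.0, 0.0), quality_focal_loss(0.0, 0.5))
```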

Beyaz et al. (Beyaz, Acıcı et al. 2020) reported a convolutional neural network (CNN) architecture that achieved an accuracy of 97.4%. The study used a total of 1,341 femoral neck images, including 765 non-fracture images, and the classification probability threshold was set at 0.5.


In the research conducted by Mutasa et al.

(Mutasa, Varada et al. 2020), the main focus was on

convolutional neural networks to automate the

detection and classification of femoral neck fractures

in medical images. This research aims to overcome

the challenges of accurately diagnosing femoral

neck fractures, which is crucial for determining

appropriate treatment and care for patients. The

model was trained using a dataset of annotated X-

ray images containing both normal and fractured

femoral necks. CNNs enabled the system to learn

spatial hierarchies of features directly from raw

image data, automating feature extraction that

previously required significant expertise and manual

intervention. These deep learning models are

designed not just to detect fractures but also to

determine their type and severity, enabling doctors

to make better decisions for patient care. The results

from the study indicate that these models outperform

conventional diagnostic methods, sometimes even

surpassing human radiologists, providing greater

precision and faster results. Such capabilities enable

consistent and reliable fracture detection, reduce

diagnostic errors, and facilitate quicker decision-

making processes. The integration of these

automated systems into clinical practice could

greatly assist radiologists, particularly in low-

resource settings or high-volume environments, by

facilitating timely and effective patient care. Overall,

the study highlights the transformative potential of

deep learning in medical imaging, particularly in the

diagnosis and classification of femoral neck

fractures, leading to improved health outcomes.


3 METHODOLOGY

We propose a thorough approach to detect objects

using the latest deep learning architectures,

YOLOv7 in particular. To improve detection

accuracy and operating efficiency, our strategy

incorporates multiple components: training

techniques, model architecture, assessment metrics,

and data preparation.

Trained deep learning models extract diagnostic observations from images, and this research relies heavily on CNN-based techniques to recognize and categorize images according to their characteristics. This section covers every step of the methodology, namely data preprocessing, model architecture, training processes, and evaluation metrics, with the aim of maximizing the predictive performance of the learned model.

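$$\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(x_i-\mu)^2} \tag{1}$$

$$r = \frac{\sum_{i}(x_i-\bar{x})(y_i-\bar{y})}{\sqrt{\sum_{i}(x_i-\bar{x})^2\,\sum_{i}(y_i-\bar{y})^2}} \tag{2}$$

$$t = \frac{\bar{x}-\mu}{s/\sqrt{n}} \tag{3}$$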

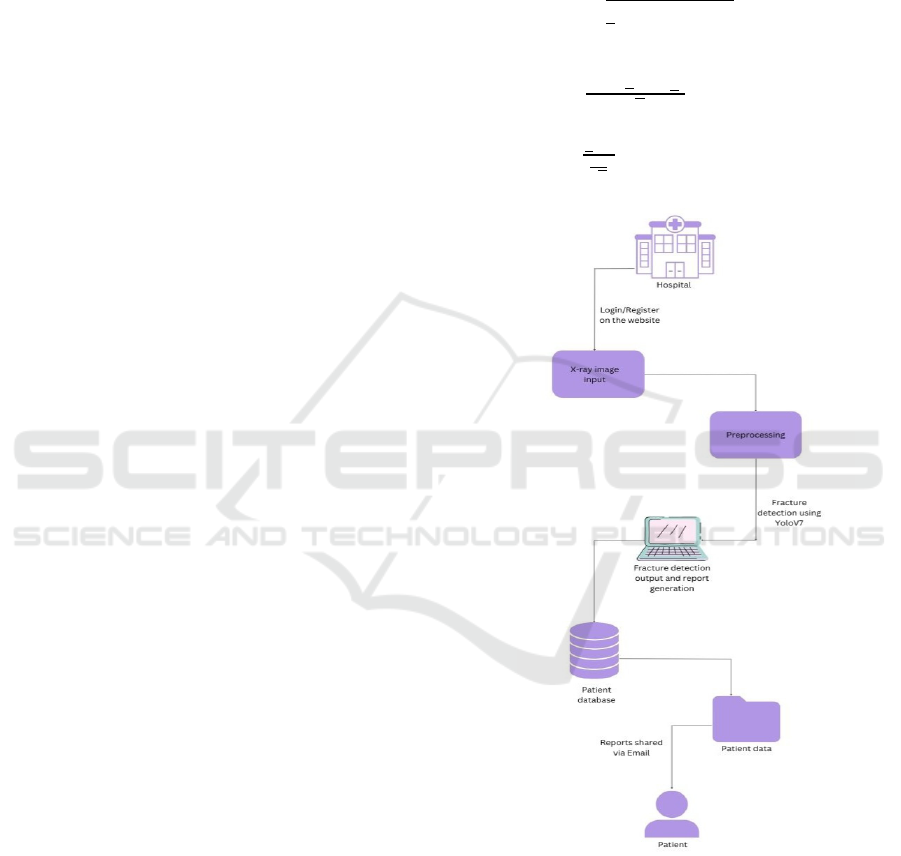

Figure 3: System architecture.

YOLOv7: This architecture is optimized for performance and efficiency, using a new backbone network and tuned anchor box settings. It achieves high mAP while keeping inference time low, which enables real-time detection. The first task was to build an appropriately diverse dataset of representative objects of interest. The collected data was then preprocessed to a common size: each image was scaled to 640 x 640 pixels for input to the YOLOv7 model. Data augmentation techniques, including random rotations, flips, and color variations, were applied to diversify the training dataset. This was done to counter overfitting and make the model robust to such variations.

Our proposed system employs the highly

accurate and fast YOLOv7 architecture. The

architecture

detection cases. While maintaining the positive traits

of earlier YOLO versions, it adds numerous features

to enhance its performance.

3.1 Training Deep Learning Model

Data preparation constitutes one of the critical

components in any deep learning project. The

quality and quantity of data directly influence the

model's ability to generalize from the data in training

to unseen data.

Dataset Collection: For this study, we collected a dataset of images labeled as either fractured or normal. The images were drawn from public medical imaging databases and supplemented with synthetic images generated through image transformations. The diversity of this dataset is essential for strong model performance in real-time applications across various domains.

Data Augmentation: Data augmentation artificially enlarges the training dataset by applying transformations to the images, including rotation, scaling, flipping, and color alteration. These transformations help address overfitting and make the model more robust. Augmentations were applied dynamically during training, so that each epoch exposed the model to different image variations.
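Because the model is a detector, geometric augmentations must transform the box labels as well as the pixels. The sketch below is a minimal NumPy illustration of two of the listed transformations, a random horizontal flip and a brightness/contrast jitter, assuming YOLO-format labels (class, x_center, y_center, width, height, normalized to [0, 1]). The function name, probabilities, and jitter ranges are hypothetical; rotation and scaling are omitted because they require recomputing box extents.

```python
import numpy as np

def augment(image, boxes, rng):
    """Randomly flip and brightness-jitter one X-ray image and its boxes.

    image: float array of shape (H, W), pixel values in [0, 1]
    boxes: array of shape (N, 5), rows (class_id, x_center, y_center, width, height)
           with coordinates normalized to [0, 1]
    """
    image = image.copy()
    boxes = boxes.copy()

    # Horizontal flip with probability 0.5; box centres must be mirrored as well.
    if rng.random() < 0.5:
        image = image[:, ::-1]
        boxes[:, 1] = 1.0 - boxes[:, 1]

    # Brightness/contrast jitter; these ranges are illustrative assumptions.
    gain = rng.uniform(0.8, 1.2)
    bias = rng.uniform(-0.1, 0.1)
    image = np.clip(image * gain + bias, 0.0, 1.0)

    return image, boxes

rng = np.random.default_rng(0)
img = np.random.default_rng(1).random((640, 640))  # stand-in for a preprocessed X-ray
lbl = np.array([[1, 0.25, 0.5, 0.1, 0.2]])         # one hypothetical "fractured" box
aug_img, aug_lbl = augment(img, lbl, rng)
```

Calling `augment` with the same generator on every epoch yields a different random variant each time, which matches the dynamic, per-epoch augmentation described above.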

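$$\Sigma(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{C} e^{z_j}} \tag{4}$$

$$\ell = -\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\,\log(\hat{y}_{i,c}) \tag{5}$$

$$w^{(t+1)} = w^{(t)} - \eta\,\frac{\partial \ell}{\partial w^{(t)}} \tag{6}$$

$$J(\theta) = \frac{1}{n}\sum_{i=1}^{n}(y_i-\hat{y}_i)^2 + \lambda\|\theta\|^2 \tag{7}$$

$$RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(True_i - Pred_i)^2} \tag{8}$$

$$\alpha = \frac{TP}{TP+FP} \tag{9}$$

$$\beta = \frac{TP}{TP+FN} \tag{10}$$

$$\gamma = 2\cdot\frac{\alpha\cdot\beta}{\alpha+\beta} \tag{11}$$

where:

𝛼 represents precision

𝛽 represents recall

𝛾 represents F1-score

TP, FP, and FN denote true positives, false positives, and false negatives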
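Equations (9)-(11) translate directly into code once the confusion-matrix counts for the fractured class are known. The counts below are hypothetical and only illustrate the calculation.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision (alpha), recall (beta) and F1-score (gamma) as in Eqs. (9)-(11)."""
    alpha = tp / (tp + fp) if tp + fp else 0.0  # precision
    beta = tp / (tp + fn) if tp + fn else 0.0   # recall
    gamma = 2 * alpha * beta / (alpha + beta) if alpha + beta else 0.0  # harmonic mean
    return alpha, beta, gamma

# Hypothetical counts for the "fractured" class, not results from this study.
alpha, beta, gamma = precision_recall_f1(tp=38, fp=2, fn=4)
print(f"precision={alpha:.3f} recall={beta:.3f} F1={gamma:.3f}")
```

As a worked check of Eq. (11), a precision of 0.93 and a recall of 0.95 give an F1-score of 2(0.93)(0.95)/(0.93 + 0.95) ≈ 0.940; an F1-score averaged per class (macro averaging) can differ from this value.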

Data Splitting: The dataset was divided into training, validation, and test sets in proportions of 80%, 10%, and 10%, respectively. This division supports model training, hyperparameter tuning, and performance evaluation.
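An 80/10/10 split of this kind can be produced with a seeded shuffle so that it is reproducible. The function and file names below are hypothetical. When several images come from the same patient, splitting by patient rather than by image keeps near-duplicate images from leaking between the training and test sets.

```python
import random

def split_dataset(items, train=0.8, val=0.1, seed=42):
    """Shuffle items reproducibly and split them into train/validation/test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)  # a fixed seed gives the same split on every run
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    train_set = items[:n_train]
    val_set = items[n_train:n_train + n_val]
    test_set = items[n_train + n_val:]  # the remainder (about 10%) is held out for testing
    return train_set, val_set, test_set

# Hypothetical file names standing in for the labelled X-ray images.
files = [f"xray_{i:03d}.png" for i in range(500)]
train_set, val_set, test_set = split_dataset(files)
print(len(train_set), len(val_set), len(test_set))
```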

In this application, we use a combination of

CNNs and YOLOv7 architecture to effectively

classify and detect bone fractures in X-ray images.

Together, these technologies enable image

classification and object detection, with high

sensitivity to subtle differences in bone fractures.

CNNs are effective for image-related tasks due

to their hierarchical feature extraction capabilities.

The architecture consists of multiple layers that

process the input image:

Convolutional Layers: These layers apply

convolutional operations using filters (kernels) to

learn image features such as edges, textures, and

shapes.

Activation Functions: Applied after

convolutional layers to introduce non-linearity,

enabling the network to learn complex patterns.

Pooling Layers: Pooling layers (e.g., max

pooling) reduce the spatial dimensions of feature

maps, decreasing computational load and mitigating

overfitting while preserving key features.

Fully Connected Layers: These final layers take

the flattened output from previous layers to perform

classification tasks, outputting probabilities for each

class (healthy or fractured).
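To make the layer types above concrete, the following NumPy sketch runs one forward pass through a convolution, a ReLU activation, 2×2 max pooling, and a fully connected softmax layer. The weights are random and untrained, and the 28×28 input and four 3×3 filters are arbitrary assumptions chosen to keep the example small; it is not the network used in this work.

```python
import numpy as np

def conv2d(image, kernels):
    """Valid 2-D convolution (cross-correlation, as in most DL libraries).
    image: (H, W); kernels: (K, kh, kw) -> output (K, H-kh+1, W-kw+1)."""
    k, kh, kw = kernels.shape
    h, w = image.shape
    out = np.zeros((k, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = image[i:i + kh, j:j + kw]
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2], [0, 1]))
    return out

def relu(x):
    return np.maximum(x, 0.0)  # non-linearity applied after the convolution

def max_pool(x, size=2):
    """Non-overlapping max pooling over the last two axes (leftover rows/cols dropped)."""
    k, h, w = x.shape
    h2, w2 = h // size, w // size
    x = x[:, :h2 * size, :w2 * size]
    return x.reshape(k, h2, size, w2, size).max(axis=(2, 4))

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def forward(image, kernels, w_fc, b_fc):
    features = max_pool(relu(conv2d(image, kernels)))
    flat = features.reshape(-1)          # flatten for the fully connected layer
    return softmax(w_fc @ flat + b_fc)   # probabilities for (healthy, fractured)

rng = np.random.default_rng(0)
img = rng.random((28, 28))                # toy input instead of a real X-ray
kernels = rng.standard_normal((4, 3, 3))
# conv: 28 -> 26, pool: 26 -> 13, so 4 * 13 * 13 features feed the dense layer
w_fc = rng.standard_normal((2, 4 * 13 * 13)) * 0.01
b_fc = np.zeros(2)
probs = forward(img, kernels, w_fc, b_fc)
print(probs)
```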

YOLOv7 is an advanced object detection

framework providing real-time detection

capabilities, ideal for medical image analysis:

Single-Stage Detector: Processes the entire

image in a single forward pass, enhancing speed and

efficiency.

Grid System: Divides the input image into a grid

to predict bounding boxes and class probabilities for

each cell, enabling multi-object detection.


Feature Pyramid Networks (FPN): Extracts

features at multiple scales, crucial for detecting both

small and large fractures.

Anchor Boxes: Handles different aspect ratios

and sizes of fractures effectively, improving

detection accuracy.
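The grid and anchor ideas can be made concrete through the decoding step that turns raw head outputs into boxes. The sketch follows the parameterization used in the public YOLOv5/YOLOv7 implementations as we understand it (sigmoid outputs, centre offset 2σ − 0.5 within the cell, size (2σ)² times the anchor); treat that, the array layout, and the anchor sizes in the usage lines as assumptions. Confidence filtering and non-maximum suppression, which would follow, are omitted.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def decode_head(raw, anchors, stride):
    """Decode one detection-head output into boxes (YOLOv5/YOLOv7-style sketch).

    raw:     array (A, S, S, 5 + C): for each anchor and grid cell, the raw
             outputs (tx, ty, tw, th, objectness, class scores...)
    anchors: array (A, 2) of anchor widths and heights in pixels
    stride:  pixels per grid cell (e.g. 32 gives a 20 x 20 grid for a 640 x 640 input)
    Returns (A*S*S, 5 + C) rows of (cx, cy, w, h, objectness, class probabilities...).
    """
    a, s = raw.shape[0], raw.shape[1]
    y = sigmoid(raw)
    gy, gx = np.meshgrid(np.arange(s), np.arange(s), indexing="ij")
    grid = np.stack([gx, gy], axis=-1)                        # (S, S, 2) cell offsets (x, y)
    xy = (y[..., 0:2] * 2.0 - 0.5 + grid) * stride            # centre may shift slightly outside its cell
    wh = (y[..., 2:4] * 2.0) ** 2 * anchors[:, None, None, :] # between 0 and 4 times the anchor size
    out = np.concatenate([xy, wh, y[..., 4:]], axis=-1)
    return out.reshape(a * s * s, -1)

# Zero raw outputs decode to boxes centred in each cell with exactly the anchor size.
anchors = np.array([[10.0, 13.0], [16.0, 30.0], [33.0, 23.0]])  # illustrative anchor sizes
boxes = decode_head(np.zeros((3, 20, 20, 7)), anchors, stride=32)
print(boxes[:2, :4])
```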

Preprocessing steps before model input include

image resizing (to 640x640 pixels), normalization

(scaling pixel values between 0 and 1), and data

augmentation (e.g., rotation, flipping, brightness

adjustment).
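The resizing and normalization steps can be sketched as follows, assuming an 8-bit grayscale image held in a NumPy array; the function name is hypothetical. Nearest-neighbour sampling keeps the example free of extra dependencies. An image library's interpolating resize would be the more usual choice, and, as we understand it, the public YOLOv7 code letterboxes (pads) images to preserve their aspect ratio rather than stretching them, which is not shown here.

```python
import numpy as np

def preprocess(image, size=640):
    """Resize an 8-bit grayscale X-ray to size x size and scale pixels to [0, 1]."""
    h, w = image.shape
    rows = (np.arange(size) * h / size).astype(int)  # source row for each output row
    cols = (np.arange(size) * w / size).astype(int)  # source column for each output column
    resized = image[rows[:, None], cols[None, :]]    # nearest-neighbour sampling
    return resized.astype(np.float32) / 255.0        # normalization to [0, 1]

# A random stand-in for a 1024 x 800 8-bit radiograph.
xray = np.random.default_rng(0).integers(0, 256, size=(1024, 800), dtype=np.uint8)
x = preprocess(xray)
print(x.shape, x.dtype, x.min(), x.max())
```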

The training process involves:

Loss Function: Categorical cross-entropy loss for

classification, with additional localization and

confidence loss for object detection.

Optimizer: Optimizers such as Adam (an adaptive method) or SGD are used to minimize the loss during training and improve model fit.

Training Stages: The model undergoes multiple

training stages with regular validation checks to

monitor performance and prevent overfitting.

Model Compilation: The model is compiled with

the chosen optimizer, loss functions, and evaluation

metrics. Adam optimizer and binary cross-entropy

loss are commonly used for binary classification

tasks.
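The classification part of the loss and the update of Eq. (6) can be illustrated with a linear softmax classifier trained by gradient descent. For softmax followed by categorical cross-entropy, the gradient with respect to the logits is simply the predicted probabilities minus the one-hot labels. The sketch below uses toy random features and labels (assumptions, not this study's data) and covers only the classification term; YOLOv7's full objective also includes box-regression and objectness terms.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(probs, onehot):
    # Mean categorical cross-entropy over the batch; 1e-12 guards against log(0).
    return -np.mean(np.sum(onehot * np.log(probs + 1e-12), axis=1))

def gd_step(w, x, onehot, lr=0.1):
    """One gradient-descent update (Eq. 6) for a linear softmax classifier."""
    probs = softmax(x @ w)
    grad = x.T @ (probs - onehot) / len(x)  # d(loss)/dw for softmax + cross-entropy
    return w - lr * grad

rng = np.random.default_rng(0)
x = rng.standard_normal((32, 8))       # one batch of 32 toy feature vectors
labels = (x[:, 0] > 0).astype(int)     # toy labels: 0 = healthy, 1 = fractured
onehot = np.eye(2)[labels]
w = np.zeros((8, 2))

loss_before = cross_entropy(softmax(x @ w), onehot)
for _ in range(100):
    w = gd_step(w, x, onehot)
loss_after = cross_entropy(softmax(x @ w), onehot)
print(f"loss: {loss_before:.3f} -> {loss_after:.3f}")
```

With two classes, softmax cross-entropy over (healthy, fractured) equals binary cross-entropy on the fractured-class probability, because a two-class softmax reduces to a sigmoid of the logit difference; the categorical and binary formulations mentioned above therefore coincide for this task.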

The integration of CNNs and YOLOv7 provides

a robust framework for detecting bone fractures

from X-ray images. Leveraging the strengths of both

architectures improves diagnostic accuracy and

supports timely medical interventions. Advanced

image processing techniques and effective training

strategies further enhance model performance for

real-world clinical applications.

3.2 Performance Analysis

Evaluating the model’s performance is crucial to

determine its effectiveness in classifying unseen

data. Evaluation is conducted using the test dataset,

independent of the training and validation datasets.

Evaluation Metrics: Key metrics include

accuracy, precision, recall, F1-score, and Area

Under the Curve (AUC). Accuracy measures the

proportion of correctly classified instances, while

precision and recall provide insights into the

model’s performance on positive cases. The F1-

score balances precision and recall, offering a

comprehensive performance metric.
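AUC has a convenient probabilistic reading: it is the chance that a randomly chosen fractured image receives a higher score than a randomly chosen normal one. The quadratic-time sketch below computes it directly from that definition, counting ties as one half; the function name is hypothetical, and for larger test sets a library routine such as scikit-learn's `roc_auc_score` would be the practical choice.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison (Mann-Whitney formulation).

    labels: 1 for fractured, 0 for normal
    scores: predicted fracture probabilities
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))

print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```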

SYSTEM CONFIGURATION

The software applications used included:

a) Ubuntu 22.04 LTS or Windows 10/11

b) Python 3.9 or higher

c) Django 5.0.6

d) TensorFlow 2.16.2

e) Ultralytics YOLOv7

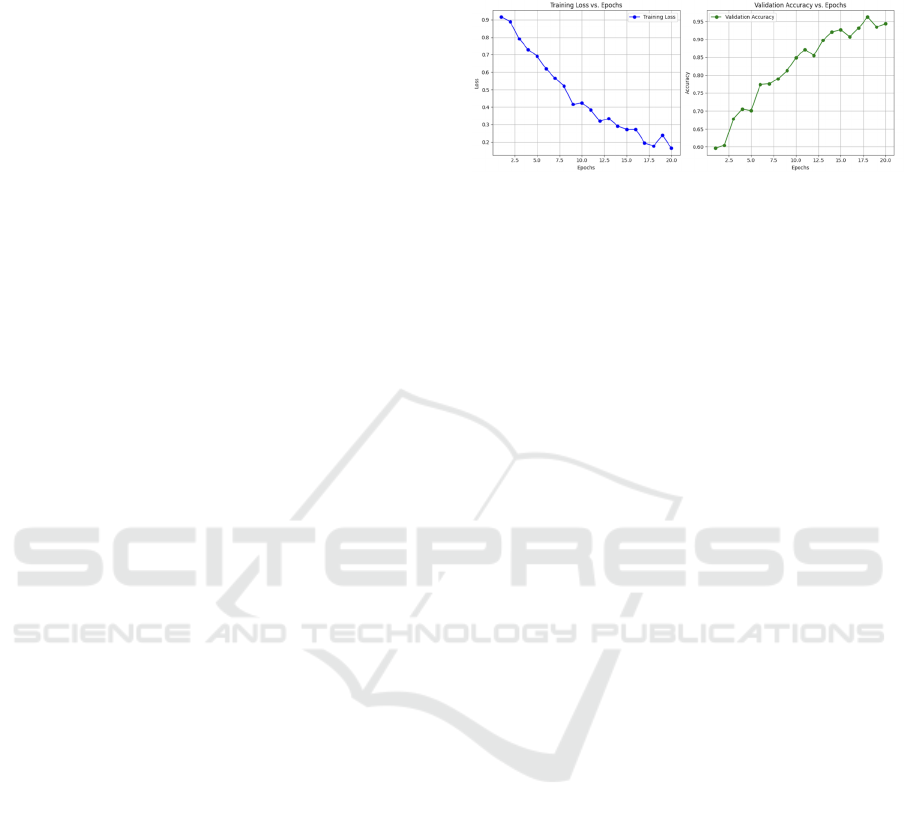

Figure 4: Training vs Epoch and Accuracy vs Epoch.

3.3 Training Parameter Setting

Training was conducted using carefully selected

parameters to optimize performance:

Batch Size: A batch size of 32 balanced memory

utilization and convergence speed, ensuring efficient

GPU resource use while maintaining data diversity

in each iteration.

Learning Rate: An initial learning rate of 0.001

was set, with a scheduler reducing it by a factor of

0.1 after a set number of epochs without validation

loss improvement. This approach refines learning as

the model approaches optimal weights.

Number of Epochs: Models were trained for 50

epochs, providing sufficient learning time without

overfitting. Early stopping was implemented to halt

training when validation loss did not improve for

three consecutive epochs.

Optimizer: Adaptive optimizers like Adam were

used to optimize learning, with binary cross-entropy

loss suitable for binary classification tasks. This

comprehensive methodology supports robust model

training, performance evaluation, and application in

medical image analysis.
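The learning-rate schedule and early-stopping rule above can be sketched as a small controller that is updated once per epoch. The initial rate of 0.001, the factor of 0.1, the three-epoch early-stopping patience, and the 50-epoch limit come from the text; the number of stalled epochs before a rate reduction is not stated, so `lr_patience=2` is a hypothetical choice, as are the class name and the validation losses in the usage lines.

```python
class PlateauController:
    """Reduce-on-plateau learning-rate schedule combined with early stopping."""

    def __init__(self, lr=1e-3, factor=0.1, lr_patience=2, stop_patience=3):
        self.lr = lr
        self.factor = factor
        self.lr_patience = lr_patience      # hypothetical: not specified in the text
        self.stop_patience = stop_patience
        self.best = float("inf")
        self.bad_epochs = 0                 # consecutive epochs without improvement

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
            return False
        self.bad_epochs += 1
        if self.bad_epochs % self.lr_patience == 0:
            self.lr *= self.factor          # plateau detected: shrink the learning rate
        return self.bad_epochs >= self.stop_patience


MAX_EPOCHS = 50
# Hypothetical validation losses, for illustration only.
val_losses = [0.70, 0.52, 0.45, 0.46, 0.47, 0.48, 0.40]

ctrl = PlateauController()
stopped_at = None
for epoch, loss in enumerate(val_losses[:MAX_EPOCHS], start=1):
    if ctrl.step(loss):
        stopped_at = epoch
        break
print(f"stopped at epoch {stopped_at}, lr = {ctrl.lr:g}")
```

In TensorFlow, which the system configuration lists, the `tf.keras.callbacks.ReduceLROnPlateau` and `tf.keras.callbacks.EarlyStopping` callbacks (both with `monitor`, `patience`, and, for the former, `factor` arguments) provide equivalent behaviour without hand-written bookkeeping.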

4 RESULT ANALYSIS

The system applies advanced deep learning methods, combined with current medical diagnostic techniques, to the detection of bone fractures. It achieves up to 92.44% classification accuracy using the YOLOv7 architecture, indicating high precision and reliability and suggesting that it is suitable for healthcare applications.

A pipeline integrating this network with resizing, normalization, and advanced data augmentation is in place, allowing the system to adapt to fractures of various sizes and orientations and to different imaging conditions. By employing anchor boxes together with Feature Pyramid Networks, the system can identify fractures precisely, providing dependable and consistent detection across complex datasets. These capabilities are crucial for minimizing diagnostic errors and enabling timely intervention for patients.

The YOLOv7 architecture strengthens the

system’s feature extraction, allowing it to detect

even minor cracks that might be missed through

manual analysis. This ability to analyze patterns in

X-ray images is a valuable asset for radiologists, as

it helps reduce mental and decision fatigue. The

system’s real-time detection capabilities, supported

by extensive training data and refined through

adaptive learning rate scheduling and regularization

techniques, make it an effective tool for time-

sensitive and critical situations, such as emergency

cases where rapid diagnosis can be life-saving.

In addition to its diagnostic function, the system

includes a secure email feature for transmitting

clinical images and reports, facilitating easier

communication among healthcare professionals.

This feature ensures strong data protection and

enhances collaborative efforts within medical teams.

The system speeds up patient management

workflows by minimizing delays in sharing

important information, resulting in quicker and more

efficient treatment. These efficiencies are especially

beneficial in resource-limited settings, where prompt

access to diagnostic information is crucial.
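The paper does not specify how the secure email feature is implemented. As one possible sketch, Python's standard library can assemble a report email with an attachment and send it over an SMTP connection upgraded with STARTTLS; the function names, addresses, host, and the choice of a PDF attachment are hypothetical. STARTTLS protects the message only in transit to the mail server, so end-to-end protection of patient data would additionally require message-level encryption such as S/MIME.

```python
import smtplib
import ssl
from email.message import EmailMessage

def build_report_email(sender, recipient, subject, body, attachment_bytes, filename):
    """Assemble an email carrying a diagnostic report as a PDF attachment."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    msg.add_attachment(attachment_bytes, maintype="application",
                       subtype="pdf", filename=filename)
    return msg

def send_over_tls(msg, host, port, username, password):
    """Send via SMTP, upgrading the connection with STARTTLS before logging in."""
    context = ssl.create_default_context()  # verifies the server's certificate
    with smtplib.SMTP(host, port) as server:
        server.starttls(context=context)
        server.login(username, password)
        server.send_message(msg)

# Hypothetical addresses and content; send_over_tls is not called here.
msg = build_report_email(
    sender="radiology@example.org",
    recipient="orthopaedics@example.org",
    subject="Fracture detection report",
    body="Automated report attached. Please review before clinical use.",
    attachment_bytes=b"%PDF-1.4 placeholder",
    filename="report.pdf",
)
# e.g. send_over_tls(msg, "smtp.example.org", 587, "user", "app-password")
```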

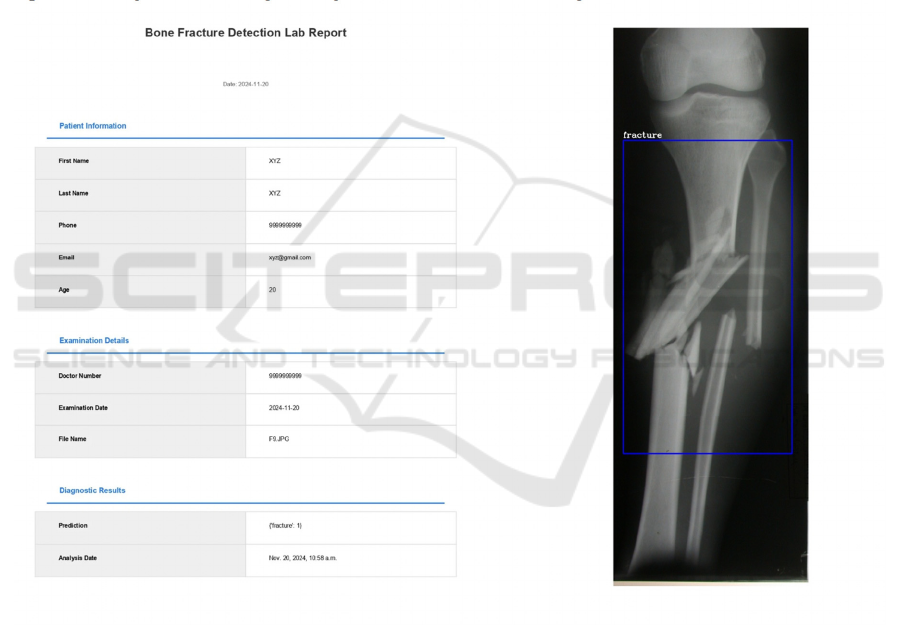

Figure 5: Generated report.


Table 1: Comparison with other papers.

| Aspect | Details |
|---|---|
| Task | Bone Fracture Classification |
| Model Used | YOLOv7 (You Only Look Once v7) |
| Fracture Types Classified | Normal vs. Fractured bones |
| Image Modality | X-ray Images |
| Detection Approach | Object detection (real-time, single-stage) |
| Preprocessing | Resizing images to 640x640; normalization of pixel values to [0, 1]; data augmentation (rotation, flipping, scaling, color variations) |
| Training Data | 500 X-ray images (fractured and normal) |
| Model Evaluation Metrics | Accuracy, Precision, Recall, F1-Score |
| Accuracy | 92.44% |
| Precision | 93% |
| Recall | 95% |
| F1 Score | 93.44% |
| Performance | High accuracy for detecting bone fractures |
| Real-Time Application | Yes |
| Training Duration | 50 epochs with Adam optimizer |
| Loss Function | Categorical Cross-Entropy |

5 CONCLUSIONS

This work indicates that a YOLOv7-based framework meets its stated requirements for real-time detection of bone fractures, reaching an accuracy of 92.44%. By combining object detection with CNN-based feature extraction, the system handles fractures of various sizes and complexities. Robust preprocessing and well-tuned training convergence improve the model's reliability, supporting its potential for clinical deployment.
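Table 1 lists the preprocessing steps: resizing to 640x640, scaling pixel values to [0, 1], and augmentation. The following minimal, dependency-free sketch covers the first two steps plus one augmentation, a horizontal flip that also mirrors the bounding boxes. It assumes a grayscale image stored as nested lists of 8-bit values and boxes in normalized YOLO format (class, x_center, y_center, width, height). Nearest-neighbour resizing is used for simplicity; the public YOLOv7 code instead preserves the aspect ratio and pads the image (letterboxing).

```python
from typing import List, Tuple

Image = List[List[int]]                        # grayscale, 8-bit values in [0, 255]
Box = Tuple[int, float, float, float, float]   # (class_id, x_c, y_c, w, h), normalized


def resize_nearest(img: Image, size: int = 640) -> Image:
    """Nearest-neighbour resize to size x size (ignores the aspect ratio)."""
    h, w = len(img), len(img[0])
    return [[img[r * h // size][c * w // size] for c in range(size)] for r in range(size)]


def normalize(img: Image) -> List[List[float]]:
    """Scale 8-bit pixel values to [0, 1]."""
    return [[px / 255.0 for px in row] for row in img]


def hflip(img: List[List[float]], boxes: List[Box]) -> Tuple[List[List[float]], List[Box]]:
    """Mirror the image left-right; for normalized boxes only x_center changes."""
    flipped = [row[::-1] for row in img]
    new_boxes = [(cls, 1.0 - xc, yc, bw, bh) for cls, xc, yc, bw, bh in boxes]
    return flipped, new_boxes


if __name__ == "__main__":
    raw = [[0, 128], [255, 64]]                 # tiny toy image, not real data
    out = normalize(resize_nearest(raw, size=4))
    img, boxes = hflip(out, [(1, 0.25, 0.5, 0.2, 0.3)])
    print(len(img), len(img[0]), img[0], boxes)
```

The flip updates the labels together with the pixels; any geometric augmentation (rotation, scaling) needs the same treatment so that boxes stay aligned with the fracture they mark.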

Real-time detection, automated report generation, and secure data sharing streamline diagnostic workflows, help protect patient data privacy, and facilitate communication among medical personnel. The architecture is scalable and flexible, so it can be adapted to broader healthcare settings and has the potential to change diagnostic practice. More broadly, deep learning has contributed significantly to advances in medical imaging, supporting better patient outcomes and fostering innovation in healthcare technology.
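Automated report generation is mentioned but not specified. The sketch below shows one hypothetical way to turn detector output into a plain-text report: keep detections at or above a confidence threshold and list them by score. The `Detection` type, the 0.5 threshold, and the report wording are illustrative assumptions and do not reproduce the format of the generated report in Figure 5.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class Detection:
    """One detector output (hypothetical structure, not the authors' format)."""
    label: str                          # class name, e.g. "fracture"
    confidence: float                   # score in [0, 1]
    box: Tuple[int, int, int, int]      # (x1, y1, x2, y2) in pixels


def make_report(patient_id: str, detections: List[Detection],
                threshold: float = 0.5, study_date: Optional[date] = None) -> str:
    """Summarize detections scoring at or above `threshold` as a plain-text report."""
    study_date = study_date or date.today()
    kept = sorted((d for d in detections if d.confidence >= threshold),
                  key=lambda det: det.confidence, reverse=True)
    lines = [f"Patient ID: {patient_id}", f"Date: {study_date.isoformat()}"]
    if kept:
        lines.append(f"Impression: {len(kept)} suspected fracture region(s) detected.")
        for i, det in enumerate(kept, start=1):
            lines.append(f"  {i}. {det.label} (confidence {det.confidence:.2f}) at {det.box}")
    else:
        lines.append("Impression: no fracture detected above the confidence threshold.")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder detections, not results from the paper.
    dets = [Detection("fracture", 0.87, (120, 200, 180, 260)),
            Detection("fracture", 0.31, (400, 90, 430, 120))]
    print(make_report("P-0001", dets, study_date=date(2025, 1, 15)))
```

The returned string could serve as the body of the email message or be rendered into a PDF; choosing the threshold trades missed fractures against false alarms.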

REFERENCES

Swapna, N., & Malge, R. (2022). Classification and detection of bone fracture using machine learning. International Journal for Research in Applied Science & Engineering Technology (IJRASET), 10(2), 45523. https://doi.org/10.22214/ijraset.2022.45523

Thian, Y. L., Li, Y., Jagmohan, P., Sia, D., Chan, V. E. Y., & Tan, R. T. (2019). Convolutional neural networks for automated fracture detection and localization on wrist radiographs. Radiology: Artificial Intelligence, 1(1), e180001. https://doi.org/10.1148/ryai.2019180001

INCOFT 2025 - International Conference on Futuristic Technology

Yadav, D. P., & Rathor, S. (2020). Bone fracture detection and classification using deep learning approach. In 2020 International Conference on Power Electronics & IoT Applications in Renewable Energy and its Control (PARC) (pp. 282-285). IEEE. https://doi.org/10.1109/PARC49193.2020.236611

Yamamoto, N., et al. (2020). An automated fracture detection from pelvic CT images with 3-D convolutional neural networks. In 2020 International Symposium on Community-centric Systems (CcS) (pp. 1-6). IEEE. https://doi.org/10.1109/CcS49175.2020.9231453

Karimunnisa, S., Savarapu, P. R., Madupu, R. K., Basha, C. Z., & Neelakanteswara, P. (2020). Detection of bone fractures automatically with enhanced performance with better combination of filtering and neural networks. In 2020 Second International Conference on Inventive Research in Computing Applications (ICIRCA) (pp. 189-193). IEEE. https://doi.org/10.1109/ICIRCA48905.2020.9183085

Savaris, A., Gimenes Marquez Filho, A. A., Rodrigues Pires de Mello, R., Colonetti, G. B., Von Wangenheim, A., & Krechel, D. (2017). Integrating a PACS network to a statewide telemedicine system: A case study of the Santa Catarina state integrated telemedicine and telehealth system. In 2017 IEEE 30th International Symposium on Computer-Based Medical Systems (CBMS) (pp. 356-357). IEEE. https://doi.org/10.1109/CBMS.2017.128

Basha, C. Z., Reddy, M. R. K., Nikhil, K. H. S., Venkatesh, P. S. M., & Asish, A. V. (2020). Enhanced computer aided bone fracture detection employing X-ray images by Harris corner technique. In 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC) (pp. 991-995). IEEE. https://doi.org/10.1109/ICCMC48092.2020.ICCMC-000184

Rao, K., Srinivas, Y., & Chakravarty, S. (2022). A systematic approach to diagnosis and categorization of bone fractures in X-ray imagery. International Journal of Healthcare Management, 1(1), 1-12. https://doi.org/10.1080/20479700.2022.2097765

Widyaningrum, R., Sela, E. I., Pulungan, R., & Septiarini, A. (2023). Automatic segmentation of periapical radiograph using color histogram and machine learning for osteoporosis detection. International Journal of Dentistry, Article ID 6662911, 9. https://doi.org/10.1155/2023/6662911

Antani, S., Long, L. R., Thoma, G. R., et al. (2003). Anatomical shape representation in spine X-ray images. In VIIP 2003: Proceedings of the 3rd IASTED International Conference on Visualization, Imaging and Image Processing (pp. 1-6). IASTED.

Abbas, W., et al. (2020). Lower leg bone fracture detection and classification using Faster R-CNN for X-ray images. In 2020 IEEE 23rd International Multitopic Conference (INMIC) (pp. 1-6). IEEE. https://doi.org/10.1109/INMIC50486.2020.9318052

Yang, L., Gao, S., Li, P., Shi, J., & Zhou, F. (2022). Recognition and segmentation of individual bone fragments with a deep learning approach in CT scans of complex intertrochanteric fractures: A retrospective study. Journal of Digital Imaging, 35(6), 1681-1689. https://doi.org/10.1007/s10278-022-00669-w

Ma, Y., & Luo, Y. (2021). Bone fracture detection through the two-stage system of Crack-Sensitive Convolutional Neural Network. Informatics in Medicine Unlocked, 22, 100452. https://doi.org/10.1016/j.imu.2020.100452

Wang, M., Yao, J., Zhang, G., et al. (2021). ParallelNet: Multiple backbone network for detection tasks on thigh bone fracture. Multimedia Systems, 27, 1091–1100. https://doi.org/10.1007/s00530-021-00783-9

Abbas, W., Adnan, S., Dr., J., & Ahmad, W. (2021). Analysis of tibia-fibula bone fracture using deep learning technique of X-ray images. International Journal for Multiscale Computational Engineering, 19, 1-11. https://doi.org/10.1615/IntJMultCompEng.2021036137

Beyaz, S., Acıcı, K., & Sümer, E. (2020). Femoral neck fracture detection in X-ray images using deep learning and genetic algorithm approaches. Joint Diseases and Related Surgery, 31(2), 175-183. https://doi.org/10.5606/ehc.2020.72163

Jones, R. M., Sharma, A., Hotchkiss, R., et al. (2020). Assessment of a deep-learning system for fracture detection in musculoskeletal radiographs. npj Digital Medicine, 3, 144. https://doi.org/10.1038/s41746-020-00352-w

Dupuis, M., Delbos, L., Veil, R., & Adamsbaum, C. (2022). External validation of a commercially available deep learning algorithm for fracture detection in children. Diagnostic and Interventional Imaging, 103(3), 151–159. https://doi.org/10.1016/j.diii.2021.10.007

Hardalaç, F., Uysal, F., Peker, O., et al. (2022). Fracture detection in wrist X-ray images using deep learning-based object detection models. Sensors, 22(3), 1285. https://doi.org/10.3390/s22031285

Pranata, Y., Wang, K.-C., Wang, J.-C., et al. (2019). Deep learning and SURF for automated classification and detection of calcaneus fractures in CT images. Computer Methods and Programs in Biomedicine, 171, 1-8. https://doi.org/10.1016/j.cmpb.2019.02.006

Mutasa, S., Varada, S., Goel, A., Wong, T. T., & Rasiej, M. J. (2020). Advanced deep learning techniques applied to automated femoral neck fracture detection and classification. Journal of Digital Imaging, 33(5), 1209–1217. https://doi.org/10.1007/s10278-020-00364-8

Nandyala, M., Kanumuri, P., & Garlapati, M. (2023). Automatic diagnosis of fracture using deep learning and external validation: A systematic review and meta-analysis. International Journal of Intelligent Systems and Applications Engineering, 11(2), 192–200. https://doi.org/10.46328/ijisae.4781

Tigade, A. V., & Shaikh, S. M. (2021). A hybrid approach for classification of bone fracture using DWT, GLCM, and KNN. Journal of Computational and Theoretical Nanoscience, 18(4), 1419–1425. https://www.naturalspublishing.com/files/published/1r886bfj97787b.pdf

Shelmerdine, S., White, R., Liu, H., Arthurs, O., & Sebire, N. (2022). Artificial intelligence for radiological paediatric fracture assessment: A systematic review. Insights into Imaging, 13, 10. https://doi.org/10.1186/s13244-022-01234-3

Yang, S., Yin, B., Cao, W., Feng, C., Fan, G., & He, S. (2020). Diagnostic accuracy of deep learning in orthopaedic fractures: A systematic review and meta-analysis. Clinical Radiology, 75, 1–10. https://doi.org/10.1016/j.crad.2020.05.021

Zou, J., & Arshad, M. R. (2024). Detection of whole body bone fractures based on improved YOLOv7. Biomedical Signal Processing and Control, 91, 105995. https://doi.org/10.1016/j.bspc.2024.105995

Puttagunta, M., & Ravi, S. (2021). Medical image analysis based on deep learning approach. Multimedia Tools and Applications, 80, 24365–24398. https://doi.org/10.1007/s11042-021-10707-4

Zhang, H., & Qie, Y. (2023). Applying deep learning to medical imaging: A review. Applied Sciences, 13, 10521. https://doi.org/10.3390/app131810521

Tanzi, L., Vezzetti, E., Aprato, A., Audisio, A., & Massè, A. (2020). Computer-aided diagnosis system for bone fracture detection and classification: A review on deep learning techniques.

Moon, G., Kim, S., Kim, W., Jeong, Y., & Choi, H.-S. (2022). Computer-aided facial bone fracture diagnosis (CA-FBFD) system based on object detection model. IEEE Access, 10, 79061–79070. https://doi.org/10.1109/ACCESS.2022.3192389

Tan, H., Xu, H., Yu, N., Yu, Y., Duan, H., Fan, Q., & Z. T. (2023). The value of deep learning-based computer aided diagnostic system in improving diagnostic performance of rib fractures in acute blunt trauma. BMC Medical Imaging, 23(1), 55. https://doi.org/10.1186/s12880-023-01012-7

Martin, L. T., Nelson, C., Yeung, D., Acosta, J. D., Qureshi, N., Blagg, T., & Chandra, A. (2022). The issues of interoperability and data connectedness for public health. Big Data, 10(S1), S19-S24. https://doi.org/10.1089/big.2022.0207

Dash, S., Shakyawar, S. K., Sharma, M., et al. (2019). Big data in healthcare: Management, analysis, and future prospects. Journal of Big Data, 6, 54. https://doi.org/10.1186/s40537-019-0217-0

Aiello, M., Esposito, G., Pagliari, G., et al. (2021). How does DICOM support big data management? Investigating its use in the medical imaging community. Insights into Imaging, 12, 164. https://doi.org/10.1186/s13244-021-01081-8

Sun, Y., Li, Y., Li, S., Duan, Z., Ning, H., & Zhang, Y. (2023). PBA-YOLOv7: An object detection method based on an improved YOLOv7 network. Applied Sciences, 13, 10436. https://doi.org/10.3390/app131810436

Zha, K. Y., Yan, L., & Zeng, G. (2022). A comprehensive review of AI techniques for bone fracture detection. arXiv preprint. https://arxiv.org/abs/2207.02696

Wang, C.-Y., Bochkovskiy, A., & Liao, H.-Y. M. (2023). YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 7464-7475). https://doi.org/10.1109/CVPR52729.2023.00721

Medaramatla, S., Samhitha, C., Pande, S., & Vinta, S. (2024). Detection of hand bone fractures in X-ray images using hybrid YOLO NAS. IEEE Access, 1. https://doi.org/10.1109/ACCESS.2024.3379760

Kheaksong, A., Sanguansat, P., Samothai, P., Dindam, T., Srisomboon, K., & Lee, W. (2022). Analysis of modern image classification platforms for bone fracture detection. In Proceedings of the 6th International Conference on Information Technology (InCIT) (pp. 471-474). https://doi.org/10.1109/InCIT56086.2022.10067836

Yigzaw, K. Y., Olabarriaga, S., Michalas, A., Marco-Ruiz, L., Hillen, C., Verginadis, Y., Oliveira, M. T., Krefting, D., Penzel, T., Bowden, J., Bellika, J., & Chomutare, T. (2022). Health data security and privacy: Challenges and solutions for the future. Computer Systems Science and Engineering. https://doi.org/10.1016/B978-0-12-823413-6.00014-8

Kumar, K. P., Prathap, B. R., Thiruthuvanathan, M. M., Murthy, H., & Pillai, V. J. (2024). Secure approach to sharing digitized medical data in a cloud environment. Data Science and Management, 7(2), 108-118. https://doi.org/10.1016/j.dsm.2023.12.001

Zukarnain, Z., Muneer, A., Atirah, N., & Almohammedi, A. (2022). Medi-Block record secure data sharing in healthcare system: Issues, solutions, and challenges. Computer Systems Science and Engineering. https://doi.org/10.32604/csse.2023.034448

Guo, H., Wang, Y., Ding, H., & Chen, J. (2023). Bone fracture detection based on an improved YOLOv7 model. IEEE Access, 11, 7635-7645. https://doi.org/10.1109/ACCESS.2023.10476607

Parvin, S., & Rahman, A. (2024). A real-time human bone fracture detection and classification from multi-modal images using deep learning technique. Applied Intelligence, 54, 1-17. https://doi.org/10.1007/s10489-024-05588-7

Gupta, A. (2024). A comprehensive guide on optimizers in deep learning. Analytics Vidhya. https://www.analyticsvidhya.com/blog/2021/10/a-comprehensive-guide-on-deep-learning-optimizers/

Kingma, D., & Ba, J. (2015). Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (ICLR). https://doi.org/10.48550/arXiv.1412.6980

Ruder, S. (2016). An overview of gradient descent optimization algorithms. https://doi.org/10.48550/arXiv.1609.04747


Wu, T., & Dong, Y. (2023). YOLO-SE: Improved YOLOv8 for remote sensing object detection and recognition. Applied Sciences, 13(24), Article 12977. https://doi.org/10.3390/app132412977

Das, P., Chakraborty, A., Sankar, R., Singh, O. K., Ray, H., & Ghosh, A. (2023). Deep learning-based object detection algorithms on image and video. In Proceedings of the 2023 3rd International Conference on Intelligent Technologies (CONIT), 1–6. https://doi.org/10.1109/CONIT59222.2023.10205601

Lou, H., Duan, X., Guo, J., Liu, H., Gu, J., Bi, L., & Chen, H. (2023). DC-YOLOv8: Small size object detection algorithm based on camera sensor. https://doi.org/10.20944/preprints202304.0124.v1

Hussain, M. (2023). YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines, 11(7), Article 677. https://doi.org/10.3390/machines11070677

Deo, G., Totlani, J., & Mahamuni, C. (2023). A survey on bone fracture detection methods using image processing and artificial intelligence (AI) approaches. https://doi.org/10.1063/5.0188460

Meena, T., & Roy, S. (2022). Bone fracture detection using deep supervised learning from radiological images: A paradigm shift. Diagnostics, 12, Article 2420. https://doi.org/10.3390/diagnostics12102420

Redmon, J., & Farhadi, A. (2018). YOLOv3: An incremental improvement. CoRR. https://doi.org/10.48550/arXiv.1804.02767

Mehra, A. (2023). Understanding YOLOv8 architecture, applications, and features. Labellerr. https://www.labellerr.com/blog/understanding-yolov8-architecture-applications-features/

He, K., Zhang, X., Ren, S., & Sun, J. (2014). Spatial pyramid pooling in deep convolutional networks for visual recognition. In Proceedings of the European Conference on Computer Vision (ECCV), 346-361. https://doi.org/10.1007/978-3-319-10578-9_23

Zheng, Z., Wang, P., Liu, W., Li, J., Ye, R., & Ren, D. (2019). Distance-IoU loss: Faster and better learning for bounding box regression. CoRR. http://arxiv.org/abs/1911.08287

Li, X., Wang, W., Wu, L., Chen, S., Hu, X., Li, J., Tang, J., & Yang, J. (2020). Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. https://doi.org/10.48550/arXiv.2006.04388

Yang, G., Wang, J., Nie, Z., Yang, H., & Yu, S. (2023). A lightweight YOLOv8 tomato detection algorithm combining feature enhancement and attention. Agronomy, 13(7), Article 1824. https://doi.org/10.3390/agronomy13071824

Li, Y., Fan, Q., Huang, H., Han, Z., & Gu, Q. (2023). A modified YOLOv8 detection network for UAV aerial image recognition. Drones, 7(5), Article 304. https://doi.org/10.3390/drones7050304

Saxena, S. (2021). Binary cross entropy/log loss for binary classification. Analytics Vidhya. https://www.analyticsvidhya.com/blog/2021/03/binary-cross-entropy-log-loss-for-binary-classification/
