Real-Time Integrity Monitoring in Online Exams Using Deep Learning Model

Guruprasad Konnurmath, Pratham Shirol, Nitin Nagaral, Devaraj Hireraddi, Kushal Patil and Girish Dongrekar

Department of Computer Science and Engineering, KLE Technological University, Hubballi, India

Keywords:

Cheat Detection, YOLOv8 Model, Online Examinations, Real-Time Monitoring, Academic Integrity, Unauthorized Gadgets.

Abstract:

The rapid shift towards online education and remote assessment has intensified the challenge of ensuring academic integrity. This research presents a comprehensive integrity monitoring (cheat detection) system built on the YOLOv8 model, a state-of-the-art deep learning model, and designed to enhance the credibility of online examinations. The system uses a live video feed from a webcam to monitor examinees in real time, detecting multiple persons, unauthorized gadgets (such as mobile phones, laptops, and headphones), and eye movements indicative of potential cheating. The YOLOv8 model is trained to recognize these objects and behaviors, and the system triggers an immediate alert upon a suspicious detection. The paper details the design, implementation, and evaluation of this system, demonstrating its efficacy in maintaining the integrity of online exams. Our results indicate that the system can significantly reduce cheating incidents, offering a robust solution applicable to educational institutions, certification bodies, and other scenarios requiring stringent monitoring.

1 INTRODUCTION

Cheating in online examinations has emerged as a significant challenge in the digital age, exacerbated by the widespread adoption of remote learning and assessment tools (Nguyen, Rienties, et al. 2020). The integrity of online assessments is frequently compromised by candidates using unauthorized devices, collaborating with others, or employing other deceitful strategies. Various methodologies have been proposed and implemented to mitigate these issues, including traditional proctoring, automated invigilation systems, and advanced AI-driven solutions. Existing works leverage facial recognition, behavior analysis, and object detection to monitor and flag suspicious activities (Krafka, Khosla, et al. 2016), (Chu, Ouyang, et al. 2022). However, these methods often suffer from limitations in accuracy, adaptability, and real-time performance, underscoring the need for more robust and dynamic solutions.

In this project, we propose a comprehensive cheat detection system built on the YOLOv8 model, which is known for its efficiency in object detection tasks. The system uses a live video feed from a webcam to monitor examinees in real time. Our approach focuses on detecting multiple persons in the frame, identifying gadgets such as mobile phones, laptops, and headphones, and applying gaze detection to monitor eye movements. The model is trained to recognize these objects and activities with high precision, using a diverse, annotated dataset that covers various cheating scenarios and environments. We chose the YOLOv8 deep learning architecture because it performs real-time object detection with minimal latency, which makes it well suited to live surveillance applications. Using transfer learning, we fine-tuned the YOLOv8 model on our custom dataset to improve its detection accuracy for specific cheating-related objects and behaviors. Additionally, we implemented gaze detection algorithms that analyze eye movements to identify patterns indicative of cheating, such as frequently looking off-screen or towards a concealed note. This is achieved by combining convolutional neural networks (CNNs) and eye-tracking methods with the YOLOv8 detection framework.

Konnurmath, G., Shirol, P., Nagaral, N., Hireraddi, D., Patil, K. and Dongrekar, G.

Real-Time Integrity Monitoring in Online Exams Using Deep Learning Model.

DOI: 10.5220/0013620700004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 427-432

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

The cheat detection system features a real-time alert mechanism that overlays alert messages directly on the video frame whenever suspicious behavior is detected, so that invigilators can act without delay. The alerts are accompanied by bounding boxes and labels that specify the type of detected object or activity, providing clear and actionable information. For comprehensive monitoring, the system supports multi-camera setups, allowing coverage of the examination room from different angles to eliminate blind spots. Each camera feed is processed independently, and the results are aggregated to provide a holistic view of the examinee’s activities. To maintain the system’s performance, we employ continuous monitoring and periodic retraining. The model’s performance metrics, including precision, recall, and mean Average Precision (mAP), are regularly evaluated, and the model is updated with new data to adapt to emerging cheating techniques.

The remainder of the paper covers the system architecture, data collection and annotation, model training and evaluation, the implementation of gaze detection, real-time alert generation, and performance analysis. Our results demonstrate the efficacy of the proposed system in enhancing the integrity and fairness of the examination process. For related cheat detection systems, see (Soltane and Laouar, 2021), (Jadi, 2021).
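The multi-camera aggregation described in the introduction can be illustrated with a minimal sketch. The paper does not specify the aggregation rule, so the union of per-camera labels used here is an assumption, and the function name, camera identifiers, and label strings are hypothetical.

```python
from typing import Dict, List, Set


def aggregate_camera_alerts(per_camera_labels: Dict[str, List[str]]) -> Set[str]:
    """Combine suspicious labels from independently processed camera feeds.

    Assumption: a label reported by any single camera is kept (set union).
    """
    combined: Set[str] = set()
    for labels in per_camera_labels.values():
        combined.update(labels)
    return combined


# Hypothetical per-camera results for one time step.
feeds = {
    "front": ["mobile phone"],
    "side": ["mobile phone", "multiple persons"],
    "rear": [],
}
print(sorted(aggregate_camera_alerts(feeds)))
```

A union favors recall: one camera seeing a phone is enough to raise the alert. A stricter rule, such as requiring agreement between two cameras, would trade missed detections for fewer false alarms.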

2 PROPOSED METHODOLOGY

2.1 Methodology

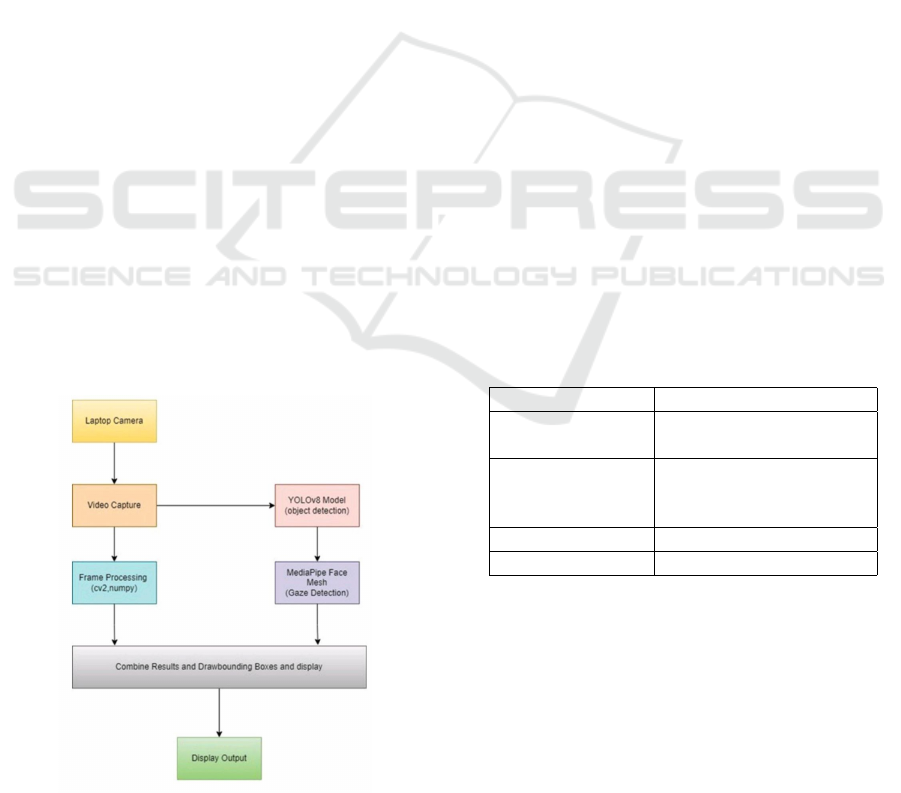

Figure 1: Proposed Methodology

The proposed framework for building a cheat detection model for online examinations involves the series of steps shown in Figure 1. It begins with data collection, in which a comprehensive dataset of images capturing various cheating behaviors during simulated online exams is gathered. The collected data is then preprocessed and split into training, validation, and testing subsets. Next, the YOLOv8 (You Only Look Once, version 8) object detection model is trained on the prepared dataset to detect and classify the cheating behaviors present in the images. The trained model is then used to classify input images as "Cheating" or "No Cheating" based on the detected behaviors. The model’s performance is evaluated using appropriate metrics, and the evaluation results feed back into further refinement of the model. By combining computer vision techniques with the YOLOv8 model, the framework detects and classifies cheating behaviors automatically, thereby supporting academic integrity and a fair assessment environment for online examinations.
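The final classification step can be sketched as a simple rule over the detector's output. This is an illustration under stated assumptions, not the authors' implementation: the label strings, the 0.5 confidence threshold, and the function name are hypothetical, and detections are assumed to arrive as (label, confidence) pairs.

```python
from typing import Iterable, List, Tuple

# Hypothetical class names; the paper does not list the exact label strings.
GADGET_LABELS = {"mobile phone", "laptop", "headphones"}


def classify_frame(
    detections: Iterable[Tuple[str, float]],
    conf_threshold: float = 0.5,  # assumed value, not taken from the paper
) -> Tuple[str, List[str]]:
    """Map one frame's detections to "Cheating" or "No Cheating" plus reasons."""
    kept = [label for label, conf in detections if conf >= conf_threshold]
    reasons: List[str] = []
    if kept.count("person") > 1:
        reasons.append("Multiple persons detected")
    for label in sorted(GADGET_LABELS.intersection(kept)):
        reasons.append(f"{label} detected")
    verdict = "Cheating" if reasons else "No Cheating"
    return verdict, reasons


frame = [("person", 0.93), ("person", 0.81), ("mobile phone", 0.77)]
print(classify_frame(frame))
```

The returned reasons can double as the alert text overlaid on the video frame.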

2.2 Dataset Description

To develop and evaluate a robust cheat detection system for online examinations, a comprehensive dataset was manually collected. The dataset consists of images and annotations capturing various cheating behaviors exhibited by individuals during simulated examination scenarios. The dataset is summarized in Table 1.

Table 1: Dataset Summary

| Description | Details |
|---|---|
| Participants (M/F) | 30 (15/15) |
| Scenarios | Down, Left, Right, Mobile, Away, Earphone, Multiple |
| Environment | Controlled Exam |
| Purpose | Cheating Analysis |

2.2.1 Data Collection

A total of 30 participants, 15 male and 15 female, were recruited for data collection. They were instructed to simulate cheating behaviors in a controlled environment, mimicking real-world scenarios encountered during online examinations.

INCOFT 2025 - International Conference on Futuristic Technology


2.2.2 Cheating Scenarios

The participants were asked to engage in various cheating activities, including but not limited to:

• Using mobile phones: Participants were instructed to hold and interact with their mobile phones, simulating the act of accessing unauthorized information or communicating with others during the examination.

• Wearing headphones: Participants wore headphones, mimicking the behavior of receiving audio prompts or instructions from external sources.

• Utilizing smartwatches: Participants wore smartwatches, representing the potential use of such devices to access or receive information covertly.

• Looking away from the screen: Participants were instructed to frequently shift their gaze away from the simulated examination screen, indicating potential cheating by referring to external resources or seeking assistance from others.

• Exhibiting suspicious body language: Participants were encouraged to exhibit suspicious body language, such as fidgeting, nervous movements, or unusual postures, which could indicate cheating behavior.

2.2.3 Data Annotation

For each captured image, detailed annotations were provided, indicating the presence or absence of various cheating behaviors. These annotations included:

• Phone detected: Images where the participant was holding or interacting with a mobile phone were labeled as "Phone detected."

• Headphone detected: Images where the participant was wearing headphones were labeled as "Headphone detected."

• Smartwatch detected: Images where the participant was wearing a smartwatch were labeled as "Smartwatch detected."

• Looking away from the screen: Images where the participant’s gaze was directed away from the simulated examination screen for an extended period were labeled as "Looking away from the screen."

The final dataset comprises approximately 1,200 annotated images capturing a diverse range of cheating behaviors and scenarios. It serves as the basis for training and evaluating machine learning models that detect cheating attempts during online examinations.
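The split into training, validation, and testing subsets mentioned in Section 2.1 can be sketched as follows. The paper does not state the split ratios, so the 70/15/15 proportions, the fixed seed, and the file names below are assumptions for illustration.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(
    items: Sequence[str],
    train_frac: float = 0.70,  # assumed ratio, not given in the paper
    val_frac: float = 0.15,    # assumed ratio, not given in the paper
    seed: int = 0,
) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle reproducibly and cut into train, validation, and test lists."""
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty test share")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for the roughly 1,200 annotated images.
images = [f"img_{i:04d}.jpg" for i in range(1200)]
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))
```

Because the images come from 30 participants, splitting by participant rather than by image would keep the same person out of both the training and test subsets; the sketch above does not do this.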

2.3 Equations

Gaze Estimation: The gaze scores lx_score, ly_score, rx_score, and ry_score for the left and right eyes are calculated using the landmark coordinates obtained from the MediaPipe FaceMesh model. These scores represent the relative position of the iris within the eye region.

Left Eye X Gaze Score:

$$
\mathrm{lx\_score} = \frac{\mathrm{face\_2d}[468, 0] - \mathrm{face\_2d}[130, 0]}{\mathrm{face\_2d}[243, 0] - \mathrm{face\_2d}[130, 0]}, \tag{1}
$$

Left Eye Y Gaze Score:

$$
\mathrm{ly\_score} = \frac{\mathrm{face\_2d}[468, 1] - \mathrm{face\_2d}[27, 1]}{\mathrm{face\_2d}[23, 1] - \mathrm{face\_2d}[27, 1]}, \tag{2}
$$

Right Eye X Gaze Score:

$$
\mathrm{rx\_score} = \frac{\mathrm{face\_2d}[473, 0] - \mathrm{face\_2d}[463, 0]}{\mathrm{face\_2d}[359, 0] - \mathrm{face\_2d}[463, 0]}, \tag{3}
$$

Right Eye Y Gaze Score:

$$
\mathrm{ry\_score} = \frac{\mathrm{face\_2d}[473, 1] - \mathrm{face\_2d}[257, 1]}{\mathrm{face\_2d}[253, 1] - \mathrm{face\_2d}[257, 1]}, \tag{4}
$$

where face_2d is an array containing the 2D facial landmark coordinates.

Gaze Direction: The gaze direction is determined based on the gaze scores using the following conditions:

$$
\mathrm{direction} =
\begin{cases}
\text{Looking Right}, & \text{if } \mathrm{lx\_score} > 0.6 \text{ or } \mathrm{rx\_score} > 0.6 \\
\text{Looking Left}, & \text{if } \mathrm{lx\_score} < 0.4 \text{ or } \mathrm{rx\_score} < 0.4 \\
\text{Looking Up}, & \text{if } \mathrm{ly\_score} > 0.6 \text{ or } \mathrm{ry\_score} > 0.6 \\
\text{Looking Down}, & \text{if } \mathrm{ly\_score} < 0.4 \text{ or } \mathrm{ry\_score} < 0.4 \\
\text{Looking Straight}, & \text{otherwise}
\end{cases}
\tag{5}
$$
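The gaze scores and the direction rule can be sketched in a few lines. This is a minimal illustration under stated assumptions: face_2d is represented as a mapping from landmark index to an (x, y) pair instead of a 2D array, the cases of Equation (5) are evaluated from top to bottom, and a zero-width eye region is treated as centered. The helper names are hypothetical, and the direction labels follow Equation (5) as printed.

```python
from typing import Mapping, Sequence, Tuple

Landmarks = Mapping[int, Sequence[float]]  # landmark index -> (x, y)


def _ratio(value: float, start: float, end: float) -> float:
    """Relative position of value between start (0.0) and end (1.0)."""
    span = end - start
    if span == 0:
        return 0.5  # assumption: a degenerate eye region counts as centered
    return (value - start) / span


def gaze_scores(face_2d: Landmarks) -> Tuple[float, float, float, float]:
    """Equations (1) to (4): lx_score, ly_score, rx_score, ry_score."""
    lx = _ratio(face_2d[468][0], face_2d[130][0], face_2d[243][0])
    ly = _ratio(face_2d[468][1], face_2d[27][1], face_2d[23][1])
    rx = _ratio(face_2d[473][0], face_2d[463][0], face_2d[359][0])
    ry = _ratio(face_2d[473][1], face_2d[257][1], face_2d[253][1])
    return lx, ly, rx, ry


def gaze_direction(lx: float, ly: float, rx: float, ry: float,
                   low: float = 0.4, high: float = 0.6) -> str:
    """Equation (5), with the cases checked in the order they are listed."""
    if lx > high or rx > high:
        return "Looking Right"
    if lx < low or rx < low:
        return "Looking Left"
    if ly > high or ry > high:
        return "Looking Up"
    if ly < low or ry < low:
        return "Looking Down"
    return "Looking Straight"


# Synthetic landmarks with both irises centered in their eye regions.
face = {
    130: (0.0, 5.0), 243: (10.0, 5.0), 468: (5.0, 5.0),
    27: (5.0, 0.0), 23: (5.0, 10.0),
    463: (20.0, 5.0), 359: (30.0, 5.0), 473: (25.0, 5.0),
    257: (25.0, 0.0), 253: (25.0, 10.0),
}
print(gaze_direction(*gaze_scores(face)))
```

Because the cases are checked in order, a frame whose horizontal and vertical scores both leave the 0.4 to 0.6 band is reported with the horizontal label.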

Equations (1) to (5) estimate gaze direction from the facial landmark coordinates obtained from the MediaPipe FaceMesh model. Equations (1) and (2) calculate the horizontal and vertical gaze scores for the left eye, respectively, as the position of the iris landmark relative to the eye’s corner landmarks and its top and bottom landmarks. Each score is a ratio, so a value near 0.5 places the iris midway between the two reference landmarks. Equations (3) and (4) perform the same calculations for the right eye. Equation (5) then determines the overall gaze direction (Looking Right, Left, Up, Down, or Straight) by comparing these scores against the predefined thresholds of 0.4 and 0.6. This gaze estimation technique allows real-time tracking of eye movements and gaze direction, which is useful in applications such as human-computer interaction, attention monitoring, and behavioral analysis.

Head Pose Direction: The head pose direction is determined based on the adjusted rotation vectors using the following conditions:


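$$
\mathrm{head\_direction} =
\begin{cases}
\text{Facing Right}, & \text{if } \mathrm{l\_gaze\_rvec}[2][0] > 0 \\
\text{Facing Left}, & \text{if } \mathrm{l\_gaze\_rvec}[2][0] < 0 \\
\text{Facing Forward}, & \text{otherwise}
\end{cases}
\tag{6}
$$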

The head pose direction is determined from the adjusted rotation vectors of the left and right eye landmarks. If the x-component of either eye’s rotation vector is positive, the head is classified as "Facing Right"; if it is negative for either eye, the head is classified as "Facing Left". In all other cases, where the x-components are zero for both eyes, the head pose is classified as "Facing Forward". Equation (6) states these conditions for the left-eye vector l_gaze_rvec. This approach uses the rotation information extracted from facial landmarks to estimate the overall head orientation.
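The head pose rule of Equation (6) can be sketched as below. The rotation vector is assumed to be a 3x1 nested sequence, so that l_gaze_rvec[2][0] is a single number. The tolerance parameter is an addition that is not in the paper: with the default of 0.0 the function reproduces Equation (6) exactly, while a small positive value widens the "Facing Forward" band, since a floating-point component is rarely exactly zero.

```python
from typing import Sequence


def head_direction(l_gaze_rvec: Sequence[Sequence[float]],
                   tolerance: float = 0.0) -> str:
    """Classify head pose from the [2][0] component of the rotation vector.

    tolerance is a hypothetical dead band; 0.0 matches Equation (6) as printed.
    """
    component = l_gaze_rvec[2][0]
    if component > tolerance:
        return "Facing Right"
    if component < -tolerance:
        return "Facing Left"
    return "Facing Forward"


print(head_direction([[0.01], [0.02], [0.20]]))
print(head_direction([[0.01], [0.02], [0.01]], tolerance=0.05))
```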

3 RESULTS

Figure 2: YOLOv8 training results

Figure 2 presents an overview of the YOLOv8 object detection model’s training progress. The training and validation losses (box, class, and DFL) decrease consistently over the epochs, indicating effective learning. At the same time, precision, recall, and mean Average Precision (mAP) improve steadily and eventually stabilize at high values. Taken together, these trends suggest that the model learns successfully, generalizes well to the validation data, and achieves strong object detection performance as training progresses.

Figure 3 shows the cheat detection system during an online examination, identifying the examinee’s face and gaze direction and detecting the presence of a mobile phone. The system indicates that the examinee is looking down at the device, suggesting potential cheating behavior.

Figure 3: Mobile Detected

Figure 4: Multiple Persons Detected

Figure 4 shows the system identifying multiple faces within the frame. It highlights the primary examinee looking down and raises an alert for multiple detected faces, indicating potential collusion or assistance.

Figure 5: Gaze Direction

Figure 5 shows the system tracking and labeling an examinee’s gaze direction in several orientations: looking down, left, right, and forward. The detected gaze, indicated by green dots on the eyes and the corresponding labels, allows the examinee’s attention to be monitored throughout an online examination. This capability is essential for identifying suspicious behavior and helps protect the integrity of the examination process.
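Per-frame gaze labels become more useful for alerting once they are combined over time, in line with the "extended period" criterion used for annotation. The sketch below flags a sustained look-away; the 30-frame threshold and the function name are assumptions, and any label other than "Looking Straight" is counted as off-screen.

```python
from typing import Iterable, List


def flag_sustained_look_away(directions: Iterable[str],
                             min_frames: int = 30) -> List[int]:
    """Return the frame indices at which an off-screen streak reaches min_frames.

    min_frames is a hypothetical threshold (about one second at 30 fps).
    """
    alerts: List[int] = []
    streak = 0
    for index, direction in enumerate(directions):
        if direction == "Looking Straight":
            streak = 0
        else:
            streak += 1
            if streak == min_frames:
                alerts.append(index)
    return alerts


labels = ["Looking Left"] * 3 + ["Looking Straight"] + ["Looking Down"] * 5
print(flag_sustained_look_away(labels, min_frames=3))  # [2, 6]
```

Each streak raises one alert, at the frame where the threshold is first reached, so a long glance away does not produce a new alert on every frame.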

Table 2: YOLOv8 Object Detection Model Results

| Metric | Value |
|---|---|
| Precision | 0.9920 |
| Recall | 0.9987 |
| mAP50 | 0.9950 |
| mAP50-95 | 0.9348 |

Model Performance Evaluation and Updating

• Evaluation Metrics: The model’s performance was assessed using the metrics shown in Table 2.

• Evaluation Process: The evaluation was conducted on a validation dataset of annotated images covering a range of exam cheating scenarios. Precision and recall were calculated from the confusion matrix, while mAP was calculated following the standard COCO evaluation method.

• Model Updating:

  – Data Collection: New data was collected from recent exams, focusing on emerging cheating techniques.

  – Data Annotation: The new dataset was annotated with labels for cheating-related objects and activities.

  – Model Retraining: The YOLOv8 model was retrained using the combined original and new datasets.

• Documentation and Reporting: Each evaluation and retraining cycle was documented. Reports include performance metrics, changes in model architecture, and qualitative assessments of the model’s detection capabilities.
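The quantities behind Table 2 can be illustrated with a short sketch. Precision and recall follow from confusion-matrix counts, and the mAP variants depend on the intersection over union (IoU) between predicted and ground-truth boxes: mAP50 counts a detection as correct at an IoU of at least 0.5, and mAP50-95 averages over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The counts and boxes below are made-up inputs, not the paper's data, and the function names are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Illustrative inputs only.
print(precision_recall(tp=8, fp=2, fn=2))
print(iou((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0)))
```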

4 CONCLUSIONS

Our cheat detection system, built on the YOLOv8 model, demonstrated high accuracy in identifying multiple persons, unauthorized gadgets, and suspicious gaze directions; the YOLOv8 detector reached a precision of 0.9920, a recall of 0.9987, and an mAP50 of 0.9950 on the validation data (Table 2). By providing real-time alerts, the system enhances the integrity of online examinations and reduces the chances of cheating. The solution’s versatility allows its application across educational institutions, certification bodies, and other scenarios requiring stringent monitoring and compliance. Furthermore, its potential for adaptation to secure remote work environments and online interviews shows the broad applicability of AI-driven solutions. This project underscores the role of advanced object detection models such as YOLOv8 in maintaining fairness and credibility in online activities and in ensuring a fair and secure assessment environment. Continuous innovation in cheat detection methodologies is essential to keep up with evolving challenges and to maintain the trust and reliability of online platforms.

REFERENCES

Nguyen, Q., Rienties, B., and Tempelaar, D. (2020). Exploring the benefits of online education and the challenges of online proctoring. Computers & Education.

Khawaji, A., Mitra, K., and Alhaddad, A. (2021). Cheating in online examinations: A review of existing techniques and countermeasures. IEEE Access, 9, 160377–160396.

Krafka, K., Khosla, A., Kellnhofer, P., Kannan, H., Bhandarkar, S., Matusik, W., and Torralba, A. (2016). Eye tracking for everyone. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2176–2184.

Bochkovskiy, A., Wang, C. Y., and Liao, H. Y. M. (2022). YOLOv8: Accurate, scalable, and fast object detection. arXiv preprint, arXiv:2208.08972.

Chu, X., Ouyang, W., Yang, W., and Wang, X. (2022). Multicue gaze estimation for virtual reality. IEEE Transactions on Visualization and Computer Graphics, 28(10), 3593–3603.

Yin, L., Chen, X., Sun, Y., Worm, T., and Reale, M. (2008). A high-resolution 3D dynamic facial expression database. In Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition (FG), 1–6.

Soltane, M., and Laouar, M. R. (2021). A smart system to detect cheating in the online exam. In Proceedings of the 2021 International Conference on Information Systems and Advanced Technologies (ICISAT). doi:10.1109/ICISAT54145.2021.9678418.

Jadi, A. (2021). A new method for detecting cheating in online exams during the COVID-19 pandemic. International Journal of Computer Science and Network Security, 21(4), 110–118. doi:10.22937/IJCSNS.2021.21.4.17.

Tiong, L. C. O., and Lee, H. J. (2021). E-cheating prevention measures: Detection of cheating in online examinations using a deep learning approach – A case study. arXiv preprint, arXiv:2101.09841.

Barrientos, A., Cuadros, M., Alba, J., and Cruz, A. S. (2021). Implementation of a remote system for the supervision of online exams using artificial intelligence cameras. In Proceedings of the 2021 IEEE Engineering International Research Conference (EIRCON), 1–6. doi:10.1109/EIRCON52903.2021.9613352.
