Intelligent Satellite Image Classification Using Deep Convolutional Neural Networks

Manoj Bhaskar¹, Chetan Barde¹, Prakash Ranjan¹, Nawneet Kumar¹ and Sujit Kumar²

¹ Dept. of Electronics and Communication Engineering, Indian Institute of Information Technology Bhagalpur, Bhagalpur, Bihar, India

² Dept. of Electrical Engineering, Government Engineering College Banka, Bihar Engineering University, Science, Technology and Technical Education Department, Bihar, India

Keywords:

Satellite Image, Classification, Deep Learning, Batch Normalization.

Abstract:

Image classification plays a key role in remote sensing, image analysis, and pattern recognition. Detecting

and classifying objects in satellite images is vital for applications like marine monitoring, land use planning,

ecological studies, and military operations. Satellite images, with their rich spatial and temporal information,

help address many real-world challenges. However, classifying these images is challenging due to limited data

availability, varying quality, and uneven distribution. Deep learning (DL) algorithms have become popular

for satellite image classification because of their effectiveness in tasks such as land-use planning, disaster

response, and resource management. This study introduces a deep convolutional neural network (DCNN)

model combined with batch normalization (BN) for classifying satellite images. The dataset includes images

from remote sensing satellites categorized into four classes: cloudy regions, deserts, water bodies, and green

areas. CNNs are well-suited for image processing, as they automatically extract key features like edges and

textures. Batch normalization improves training by stabilizing inputs within the network layers, making the

process faster and more efficient. Our proposed model demonstrates high accuracy in classifying satellite

images, achieving an overall accuracy of 94.50% and outperforming the existing methods it is compared against. This shows its potential

for real-world applications.

1 INTRODUCTION

Satellite image processing involves analyzing data

collected by Earth-orbiting satellites using sensors

like cameras and radar. These images provide valu-

able insights into weather, land use, and more (Voigt,

2007) (Nguyen, 2019). A major challenge is handling

the vast amount of data generated by these sensors,

which is too complex for manual processing. Ad-

vanced algorithms are used to interpret the images,

applying techniques like enhancement, classification,

and feature extraction (Fu, 2018). These methods

are widely used in fields like environmental moni-

toring, agriculture, and urban planning, helping track

changes in crops, urban areas, and forests (Singh,

2022) (Padmanaban, 2019).

Satellite image processing involves analyzing data

captured by Earth-orbiting satellites using sensors

like cameras and radar. These images provide valu-

able insights into weather patterns, land use, and

other critical aspects of Earth’s surface. However,

the sheer volume of data generated by satellite sen-

sors presents a major challenge. Advanced computer

algorithms are necessary to process and interpret this

complex data effectively. Key techniques include im-

age enhancement, feature extraction, and classifica-

tion. Feature extraction identifies specific elements in

an image, such as roads or buildings, making it useful

for applications in environmental monitoring, agri-

culture, and urban planning. For example, satellite

images are used to track crop health, urban growth,

and deforestation rates. With technological advances,

satellite image processing continues to play a vital

role in managing Earth’s resources.

Deep learning has emerged as a powerful tool for

satellite image classification. Inspired by the human

brain, deep learning uses neural networks with multi-

ple layers—input, hidden, and output layers (Diker,

2022). These networks process data through inter-

connected neurons using weights, biases, and acti-

vation functions like ReLU, sigmoid, or tanh. This

Bhaskar, M., Barde, C., Ranjan, P., Kumar, N. and Kumar, S.

Intelligent Satellite Image Classification Using Deep Convolutional Neural Networks.

DOI: 10.5220/0013616500004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 354-359

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

structured approach enables deep learning models to

extract meaningful features and classify satellite im-

ages efficiently. Several studies demonstrate the ad-

vancements in satellite image processing using deep

learning. (Tripodi, 2022) developed an automated

pipeline for 3D reconstruction of landscapes from

high-resolution satellite images. This method used

convolutional neural networks (CNNs) to extract se-

mantic features and classify data into 16 categories,

producing detailed 3D maps. Similarly, (Anggi-

ratih, 2019) combined the ZFNet CNN architecture

with the Random Forest algorithm to enhance fea-

ture extraction and classification. While the model

achieved 87.5% accuracy for large vessel detection,

it struggled with smaller vessels, achieving less than

50% accuracy. (Munirah Alkhelaiwi, 2021) intro-

duced privacy-preserving deep learning (PPDL) to

safeguard sensitive satellite image data. Their ap-

proach allowed CNN models to train directly on en-

crypted data without significant computational over-

head. Testing on a dataset from Saudi Arabia demon-

strated that the method effectively balanced data util-

ity and privacy. (Ghazaleh Serati, 2022) applied a

conditional generative adversarial network (CGAN)

to extract building footprints in Yangon City, Myan-

mar, using optical satellite images. Their model

achieved 71% completeness, 81% correctness, and

an F1 score of 69% for building footprint extraction,

demonstrating the potential of CGANs for urban map-

ping tasks. (Ashwini, 2023) developed an ensem-

ble method for classifying satellite images into cat-

egories such as Cloudy, Desert, Water, and Green

areas. The approach combined confidence scores from four classifiers (Decision Tree, Random Forest, Gaussian Naïve Bayes, and Support Vector Classifier), achieving an overall accuracy of 92%. (T. Yogesh, 2024) used a dataset of 5,631 satellite images

to classify cloud-covered areas, deserts, green land-

scapes, and water bodies. They employed the VGG-

16 CNN architecture, achieving high validation accu-

racy and showcasing the potential of deep learning for

satellite image classification.

These advancements highlight the importance of

deep learning in improving the accuracy and effi-

ciency of satellite image processing. Techniques like

CNNs, ensemble classifiers, and generative networks

are continuously pushing the boundaries of what can

be achieved, making satellite image analysis a crucial

tool for addressing real-world challenges.

This research focuses on improving satellite image classification using a DCNN combined with batch normalization (BN). DCNNs have emerged as a pivotal tool in modern machine learning, particularly for tasks involving image and signal processing. Their ability to automatically extract and learn hierarchical features from raw input data makes them highly effective for complex pattern recognition problems. DCNNs leverage multiple convolutional layers to capture intricate spatial and temporal relationships in the data. BN enhances this process by keeping the feature distributions stable, which helps the model train faster and more effectively. Together, DCNNs and BN enable the model to handle large and complex datasets while ensuring accurate results. The model is designed to classify satellite images into four categories: water bodies, green areas, cloudy regions, and deserts. Experimental results show that the proposed model achieves high accuracy in classification. The study also compares its performance with other deep learning methods, demonstrating that the proposed approach is more effective.

The study presents a DCNN model combined with BN to enhance the classification of satellite images. CNNs are effective for image classification

as they automatically extract important features from

images and classify them accurately. Batch normal-

ization improves the learning process by ensuring the

features maintain consistent distributions, which sta-

bilizes and speeds up the training. This combination

helps the model learn strong and reliable features, en-

abling accurate classification of large and complex

image datasets. The model successfully categorizes

satellite images into four types: water bodies, green

areas, cloudy areas, and deserts. The proposed model achieves high accuracy, which is validated by experimental results. The model is also compared with other deep learning methods to demonstrate its effectiveness. The paper is organized as follows: Section 1 introduces satellite image classification and related approaches. Section 2 describes the dataset. Section 3 outlines the proposed methodology. Section 4 evaluates the proposed model, and Section 5 covers its training, validation, and testing. Section 6 presents the results and discussion, and Section 7 concludes the work.

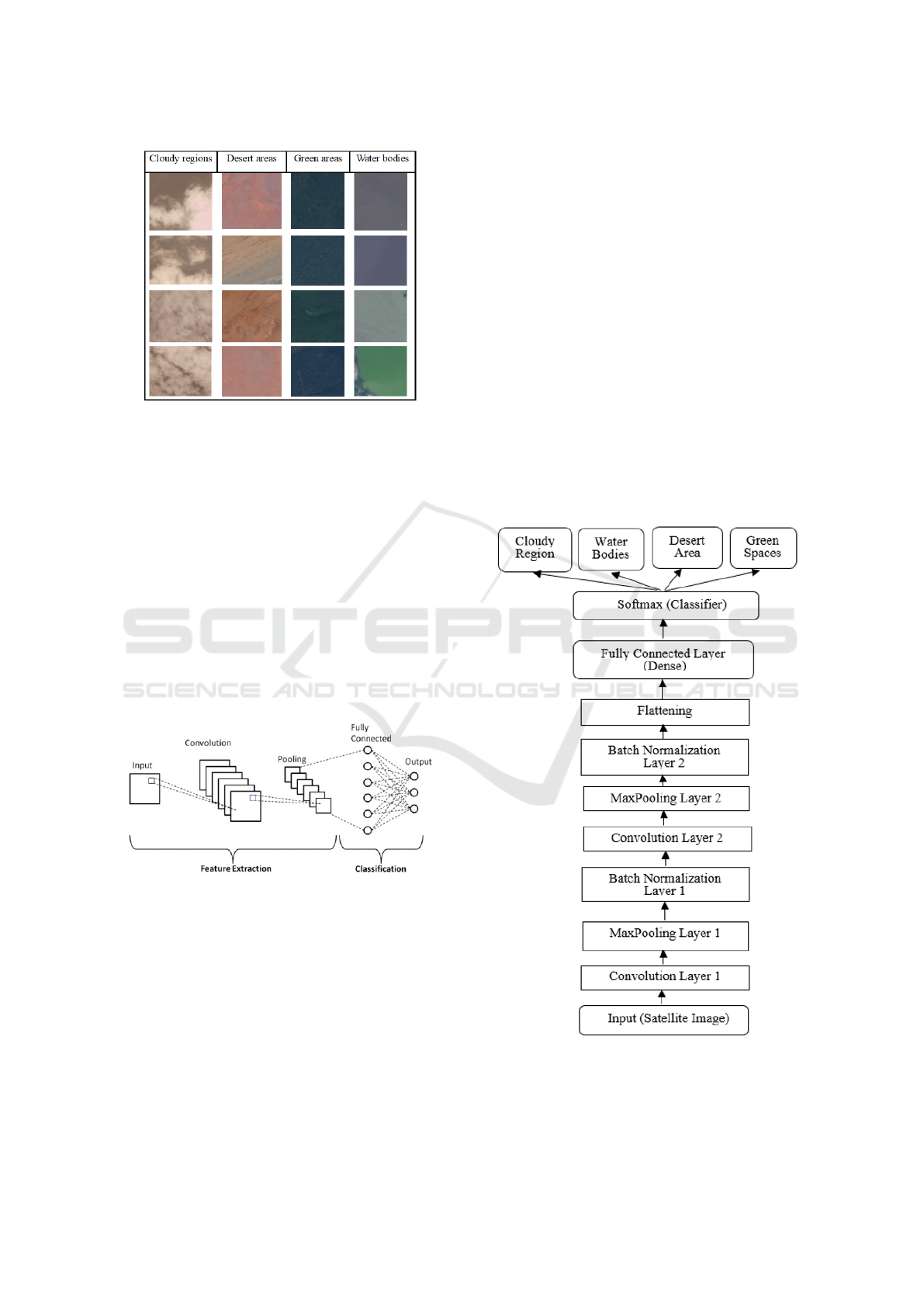

2 DATA DESCRIPTION

This study used the RSI-CB256 dataset for satellite image classification. The dataset is publicly available online (Available online, 2022). Fig. 1 shows sample images from the dataset,

which contains 2,000 satellite images evenly divided

into four categories: 500 images each for cloudy re-

gions, desert areas, green areas, and water bodies.

These categories are labeled as follows: cloudy re-

gions are Class 1, desert areas are Class 2, green areas

are Class 3, and water bodies are Class 4.

Figure 1: Samples of satellite images from the RSI-CB256 dataset.

3 METHODOLOGY

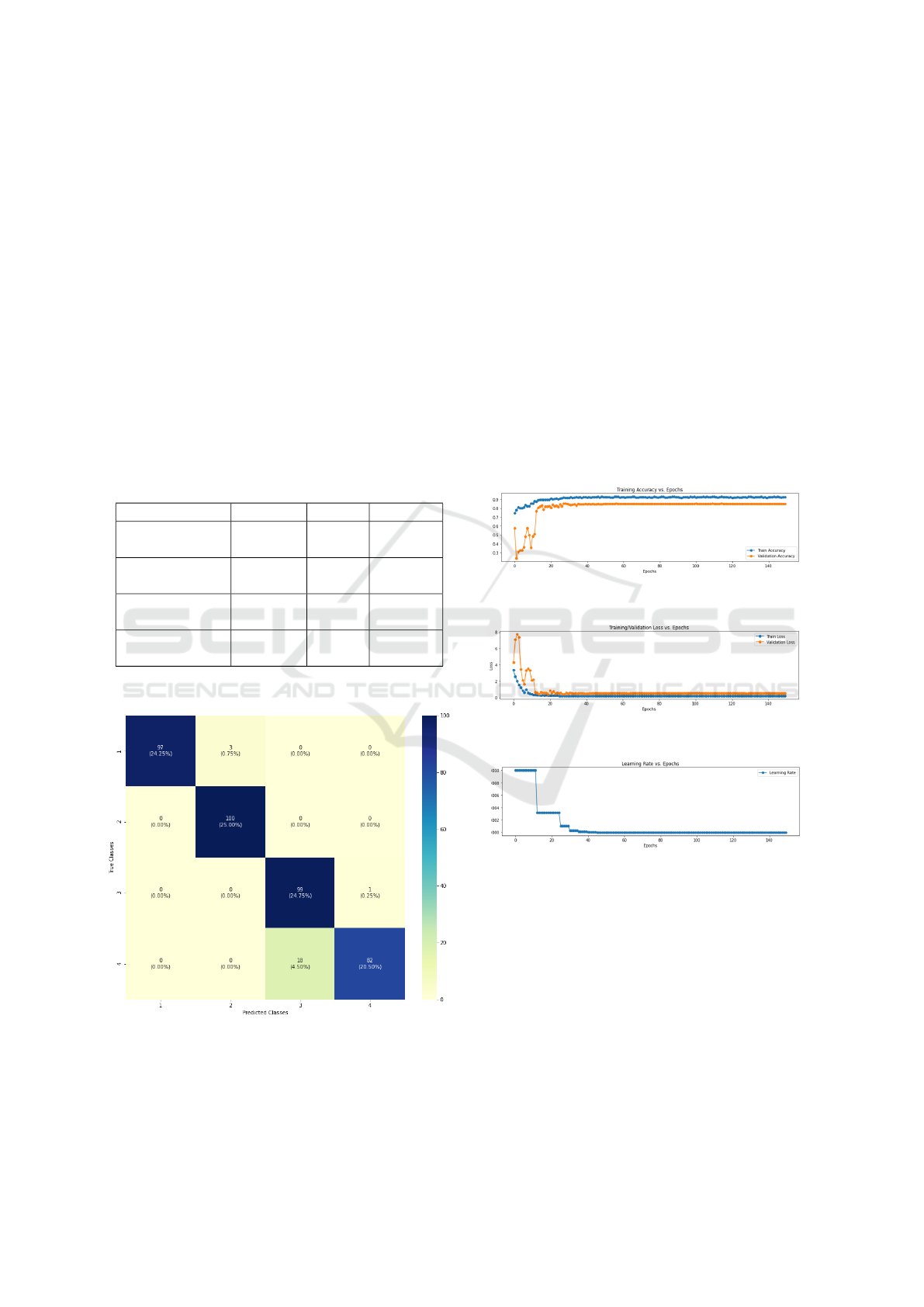

This research focuses on classifying satellite images

into four types of terrain using a DCNN model com-

bined with BN. The process starts with a satellite im-

age as input, which is then classified into one of the

four terrain categories. The methodology involves

three steps: pre-processing the images, extracting fea-

ture maps, and classifying the images based on these

features. Fig. 2 illustrates the basic structure of the CNN model, which includes three main types of layers: convolutional layers for feature extraction, pooling layers for reducing data size, and fully connected layers for final classification (Ozbay, 2023) (Onal, 2020).

Figure 2: Basic architecture of CNN
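The feature-extraction and size-reduction roles of the convolutional and pooling layers can be illustrated with a minimal pure-Python sketch. It is not the paper's implementation: the function names `conv2d_valid`, `max_pool2d`, and `flatten`, the 5 x 5 toy image, and the 2 x 2 edge filter are all assumptions chosen for illustration, and a real model would learn its filters during training.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map.

    Each output value is the sum of element-wise products between the kernel
    and the image patch underneath it.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


def flatten(feature_map):
    """Turn a 2D feature map into a single vector for a fully connected layer."""
    return [value for row in feature_map for value in row]


# Hypothetical toy input: a dark-to-bright vertical edge between columns 1 and 2.
toy_image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical hand-written filter that responds to vertical edges.
edge_filter = [[-1, 1],
               [-1, 1]]

features = conv2d_valid(toy_image, edge_filter)   # 4 x 4 feature map
pooled = max_pool2d(features, size=2)             # 2 x 2 after pooling
vector = flatten(pooled)                          # length-4 vector
```

In this toy case the filter responds only where the brightness changes, and pooling keeps that response while halving each spatial dimension.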

The framework of the proposed model is depicted

in Fig. 3. It begins by processing the input image

as a matrix. The convolutional layer extracts features from the image by sliding filters across it, computing the sum of element-wise products between the filter and the underlying image patch at each position, and creating a feature map that highlights specific characteristics of the image. Next, the pooling layer reduces the size of the feature map while retaining essential information, often using max pooling to capture the most significant values. The batch normalization layer stabilizes the inputs across layers by normalizing them based on batch mean and variance (Yamashita, 2018) (Narin, 2021). This helps mitigate internal covariate shift, speeds up convergence, allows for higher learning rates, reduces overfitting (often eliminating the

need for dropout), and improves overall classification

performance, particularly for satellite image classifi-

cation tasks. The flattening layer then converts the

matrix data from the previous layers into a single vec-

tor, making it suitable for processing in fully con-

nected layers. The fully connected layer processes

this vector by associating it with learned weights and

applying an activation function, enabling the model

to identify high-level features necessary for classifi-

cation. Finally, the output layer classifies the input

image into specific categories. It uses fully connected

neurons to predict class probabilities and applies a

SoftMax classifier at the end to select the category

with the highest probability as the model output. The

DCNN architecture, with BN, is designed for accu-

rate classification, effectively mapping complex im-

age data into categories.

Figure 3: The framework of the proposed model.
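The normalization and output steps of the framework can be sketched in a few lines of pure Python. This is an illustrative sketch under stated assumptions, not the authors' code: `batch_norm` shows only the training-time computation for a single feature (at inference, frameworks typically use running averages of the mean and variance instead), `gamma`, `beta`, and `eps` take assumed default values, and the logits passed to `predict_class` are made up.

```python
import math

# Class order follows the labelling used in the data description.
CLASS_NAMES = ["cloudy regions", "desert areas", "green areas", "water bodies"]


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift it.

    `gamma` and `beta` stand in for the learnable scale and shift parameters.
    """
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


def softmax(logits):
    """Convert raw output scores into probabilities that sum to 1."""
    peak = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(logits, class_names=CLASS_NAMES):
    """Return the most probable class name and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical activations of one feature over a mini-batch of four images.
normalized = batch_norm([1.0, 2.0, 3.0, 4.0])

# Hypothetical output-layer scores for a single image.
label, confidence = predict_class([0.1, 2.0, -1.0, 0.5])
```

After normalization the batch has approximately zero mean and unit variance, which is the stabilizing effect described above; the SoftMax step then selects the class with the highest probability.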

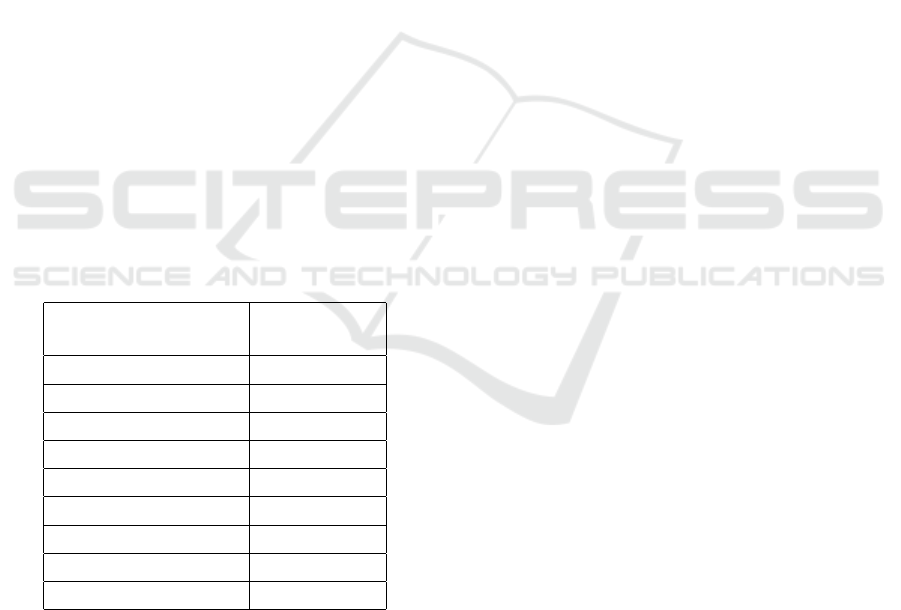

4 EVALUATION OF THE PROPOSED MODEL

The performance of the proposed model is mea-

sured using metrics like Accuracy, Precision, Recall,

and F1 score, all derived from a multi-class confusion

matrix (Musali, 2024). The confusion matrix pro-

vides a detailed evaluation of the model performance

by comparing predicted labels (shown in rows) with

true labels (shown in columns). It highlights correct predictions on its diagonal and is essential for calculating the key performance metrics. Fig. 4 presents the confusion matrix for the proposed CNN model, showing an accuracy of 94.50%. Table 1 summarizes the performance metrics, including the precision, recall, and F1 score, demonstrating the effectiveness of the model in classifying satellite images accurately.
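How these metrics follow from a confusion matrix can be made concrete with a short sketch. It uses the convention stated above (rows are predicted labels, columns are true labels); note that some libraries use the transposed layout. The function name `per_class_metrics` and the counts in `toy_cm` are hypothetical and are not the values behind Fig. 4.

```python
def per_class_metrics(cm):
    """Compute overall accuracy and per-class precision, recall, and F1.

    cm[i][j] is the number of samples predicted as class i whose true class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(n))
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[k])                      # row sum: everything predicted as k
        actual_k = sum(cm[i][k] for i in range(n))    # column sum: everything truly k
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append({"precision": precision, "recall": recall, "f1": f1})
    return correct / total, metrics


# Hypothetical counts for classes 1-4 (cloudy, desert, green, water).
# Four true water images are predicted as green, so green loses precision
# and water loses recall.
toy_cm = [
    [10, 0, 0, 0],
    [0, 10, 0, 0],
    [0, 0, 10, 4],
    [0, 0, 0, 6],
]

accuracy, scores = per_class_metrics(toy_cm)
```

The toy matrix shows the same qualitative pattern as Table 1: a class that absorbs misclassified samples from another class has lower precision, while the class that loses those samples has lower recall.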

Table 1: Performance score of the proposed model

| Class | Precision | Recall | F1-score |
|---|---|---|---|
| Cloudy regions (Class 1) | 1.00 | 0.97 | 0.98 |
| Desert areas (Class 2) | 0.97 | 1.00 | 0.99 |
| Green areas (Class 3) | 0.85 | 0.99 | 0.91 |
| Water bodies (Class 4) | 0.99 | 0.82 | 0.90 |

Figure 4: Confusion matrix of the proposed model.

Fig. 5 shows the accuracy of the developed CNN model, with the best training and validation accuracy achieved at 150 epochs. This plot illustrates how the model’s accuracy improves as it trains, reaching 92.80%. Fig. 6 presents the model’s loss, which shows the decrease in the error between the model’s predictions and the actual target values on the training data. The loss stabilizes at around 150 epochs, reaching 84.75%, indicating that the model is learning effectively. Together, these figures reflect the model’s learning process and how it improves over time. Fig. 7 shows the Learning Rate vs. Epoch plot, which tracks how the learning rate changes during training. The learning rate controls the size of the adjustments the model makes to its weights based on errors. If the learning rate is too high, the model can make large, unstable changes, while a low rate can slow down progress. Adjusting the learning rate throughout training, using methods like learning rate decay, helps the model find a balance between fast learning and stability, ultimately improving performance and accuracy.
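The effect of the learning rate can be demonstrated on a toy problem. The sketch below assumes an exponential decay schedule and plain gradient descent on f(w) = w²; the paper does not state which schedule or constants were used, so `exponential_decay`, `minimize_quadratic`, and every numeric value here are illustrative assumptions only.

```python
def exponential_decay(initial_lr, epoch, decay_rate=0.96, decay_epochs=10):
    """Learning rate after `epoch` epochs under exponential decay.

    The rate is multiplied by `decay_rate` once every `decay_epochs` epochs
    (applied smoothly for epochs in between).
    """
    return initial_lr * decay_rate ** (epoch / decay_epochs)


def minimize_quadratic(lr_schedule, start=1.0, epochs=20):
    """Run gradient descent on f(w) = w**2 and return the final weight.

    `lr_schedule` maps an epoch index to the learning rate for that epoch.
    """
    w = start
    for epoch in range(epochs):
        gradient = 2.0 * w                  # derivative of w**2
        w = w - lr_schedule(epoch) * gradient
    return w


# A rate that is too high overshoots the minimum at w = 0 and diverges.
w_diverged = minimize_quadratic(lambda epoch: 1.1)

# A very low rate is stable but makes slow progress.
w_slow = minimize_quadratic(lambda epoch: 0.001)

# A moderate rate that decays over time converges quickly and stays stable.
w_decayed = minimize_quadratic(lambda epoch: exponential_decay(0.4, epoch))
```

With these assumed values, the high fixed rate moves the weight further from the minimum on every step, the very low rate barely moves it, and the decayed schedule drives it close to zero within 20 epochs.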

Figure 5: Model accuracy of the proposed model.

Figure 6: Model loss of the proposed model.

Figure 7: Model learning rate of the proposed model.

5 TRAINING, VALIDATION AND TESTING OF THE MODEL

The dataset used in this study consists of remote

sensing satellite images, which are divided into four

categories: cloudy regions, desert areas, water bodies,

and green spaces. To prevent overfitting, a dropout

rate of 0.2 is applied in the hidden layer, which helps

the model generalize better and speeds up the learn-

ing process. During training, the Adam optimizer is

used to adjust the weights and biases of the model. A

fully connected dense layer with a Softmax activation

function is then used to classify the images into one of

the four categories. The model is implemented using

TensorFlow version 1.0, with 80% of the data used for

training. A small portion of the training data is also

set aside for validation, while the remaining 20% is

reserved for testing the model’s performance.
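The data partitioning described above can be sketched as follows. Two details are assumptions rather than facts from this study: the split is stratified per class, and the validation portion is taken as 10% of the training data (the text says only that a small portion is set aside). The function name `stratified_split` and the seed are likewise hypothetical.

```python
import random


def stratified_split(labels, test_frac=0.2, val_frac=0.1, seed=0):
    """Split sample indices into train, validation, and test sets per class.

    `test_frac` is taken from the whole dataset; `val_frac` is taken from
    what remains after the test set is removed.
    """
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_frac)
        n_val = round((len(indices) - n_test) * val_frac)
        test.extend(indices[:n_test])
        val.extend(indices[n_test:n_test + n_val])
        train.extend(indices[n_test + n_val:])
    return train, val, test


# 2,000 images, 500 per class, labelled Class 1 to Class 4 as in the data description.
labels = [cls for cls in (1, 2, 3, 4) for _ in range(500)]
train_idx, val_idx, test_idx = stratified_split(labels)
```

Under these assumptions each class contributes 100 test images, 40 validation images, and 360 training images, so the class balance of the dataset is preserved in every partition.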

6 RESULTS AND DISCUSSION

This study introduces a DCNN architecture com-

bined with BN for classifying satellite images. The

dataset used in this study consists of images from re-

mote sensing satellites, categorized into four classes:

cloudy regions, desert areas, water bodies, and green

spaces. The performance of the proposed model

is compared with traditional statistical methods and

other deep learning models. Table 2 shows that our CNN model achieves the highest accuracy in satellite image classification, at 94.50%.

In comparison, the RNN, which is often used for

time series forecasting, had lower performance in this

task. The other methods tested, including SVM, Ran-

dom Forest, KNN, Decision Tree, PPDL, VGG16,

ResNet50, and DenseNet121, achieved accuracies of

72.84%, 84.20%, 80.56%, 75.33%, 90.92%, 89.00%,

89.80%, and 90.50%, respectively.

Table 2: Comparison of results with existing methods

| Methods | Accuracy (%) |
|---|---|
| SVM | 72.84 |
| Random Forest | 84.20 |
| KNN | 80.56 |
| Decision Tree | 75.33 |
| PPDL Techniques | 90.92 |
| VGG16 | 89.00 |
| ResNet50 | 89.80 |
| DenseNet121 [9] | 90.50 |
| The Proposed Model | 94.50 |

7 CONCLUSIONS

In this study, a DCNN model with BN is pro-

posed to classify satellite images into four categories:

cloudy regions, desert areas, water bodies, and green

spaces. Satellite images are commonly used to solve

various problems in remote sensing, but classifying

them can be difficult due to issues like data avail-

ability, quality, quantity, and distribution. Traditional

methods often struggle to handle these challenges

accurately. To improve the classification accuracy,

a new approach is introduced that combines feature

generation, feature selection, and model design. The

proposed BN-based CNN model is effective in cap-

turing complex patterns in satellite images, achieving

better results during training and testing compared to

other methods using the same dataset. The results

show that this model is highly effective for satellite

image classification. The study also suggests that fu-

ture research should focus on reducing the model’s

complexity and further improving its performance,

with batch normalization playing a key role in en-

hancing stability and speeding up training.

ACKNOWLEDGMENT

The authors would like to thank Dilara Ozdemir for

providing the Satellite Remote Sensing Image dataset

RSI-CB256, which includes categories such as cloudy

regions, desert areas, water bodies, and green areas,

and is available in resources like the GitHub reposi-

tory by Dilara Ozdemir.

REFERENCES

Voigt, Stefan, Thomas Kemper, Torsten Riedlinger, Ralph

Kiefl, Klaas Scholte, and Harald Mehl. (2007). Satel-

lite image analysis for disaster and crisis-management

support. IEEE transactions on geoscience and remote

sensing. vol. 45, no. 6 pp. 1520-1528. IEEE

Nguyen, Thi Mai, Tang-Huang Lin, and Hai-Po Chan. (2019). The environmental effects of urban development in Hanoi, Vietnam from satellite and meteorological observations from 1999–2016. Sustainability. vol. 11, no. 6, pp. 1768.

Fu, Hualian, Yuan Shen, Jun Liu, Guangjun He, Jinsong

Chen, Ping Liu, Jing Qian, and Jun Li. (2018). Cloud

detection for FY meteorology satellite based on en-

semble thresholds and random forests approach. Re-

mote Sensing, vol. 11, no. 1, pp. 44.

Singh, Kamal Kant, Dhiraj Kumar Singh, Narinder Kumar

Thakur, Sanjay Kumar Dewali, Harendra Singh Negi,

Snehmani, and Varunendra Dutta Mishra. (2022). De-

tection and mapping of snow avalanche debris from

Western Himalaya, India using remote sensing satel-

lite images. Geocarto International. vol. 37, no. 9, pp.

2561-2579.

Padmanaban, Rajchandar, Avit K. Bhowmik, and Pedro

Cabral. (2019). Satellite image fusion to detect chang-

ing surface permeability and emerging urban heat is-

lands in a fast-growing city. PloS one, vol. 14, no. 1,

pp. e0208949.

Diker, Fadime, and İlker Erkan. (2022). Classification of satellite images with deep convolutional neural networks and its effect on architecture. Eskişehir Technical University Journal of Science and Technology A-Applied Sciences and Engineering. vol. 23, pp. 31-41.

Tripodi, S., N. Girard, G. Fonteix, L. Duan, W. Mapurisa, M. Leras, F. Trastour, Y. Tarabalka, and L. Laurore. (2022). Brightearth: Pipeline for on-the-fly 3D reconstruction of urban and rural scenes from one satellite image. ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences. vol. 3, pp. 263-270.

Anggiratih, Endang, and Agfianto Eko Putra. (2019). Ship identification on satellite image using convolutional neural network and random forest. IJCCS (Indonesian Journal of Computing and Cybernetics Systems), vol. 13, no. 2, pp. 117-126.

Alkhelaiwi, Munirah, Wadii Boulila, Jawad Ahmad, Anis

Koubaa, and Maha Driss. (2021). An efficient ap-

proach based on privacy-preserving deep learning for

satellite image classification. Remote Sensing. vol. 13,

no. 11 pp. 2021.

Ghazaleh Serati, Amin Sedaghat, Nazila Mohammadi and

Jonathan Li. (2022). Digital surface model generation

from high-resolution satellite stereo imagery based on

structural similarity. Geocarto International. vol. 37,

no. 26, pp. 11390-11419.

Ashwini, K., R. Bhuvaneswari, and Perla Sree Neha.

(2023). Remote Sensing Image Classification Based

on Confidence Score of Ensemble Machine Learning

Classifiers. In 2023 International Conference on Evo-

lutionary Algorithms and Soft Computing Techniques

(EASCT). pp. 1-6. IEEE.

T. Yogesh and S. V. S. Devi. (2024). Enhancing Remote

Sensing Image Classification: A Strategic Integration

of Deep Learning Technique and Transfer Learning

Approach. Second International Conference on Data

Science and Information System (ICDSIS), Hassan,

India, pp. 1-5.

Available online: https://www.kaggle.com/datasets/mahmoudreda55/satellite-image-classification (accessed on 20 October 2022).

Özbay, Erdal, and Muhammed Yıldırım. (2023). Classification of satellite images for ecology management using deep features obtained from convolutional neural network models. Iran Journal of Computer Science. vol. 6, no. 3, pp. 185-193.

Önal, Merve Kesım, Engin Avci, Fatih Özyurt, and Ayhan Orhan. (2020). Classification of minerals using machine learning methods. In 2020 28th Signal Processing and Communications Applications Conference (SIU), pp. 1-4. IEEE.

Yamashita, Rikiya, Mizuho Nishio, Richard Kinh Gian Do,

and Kaori Togashi. (2018). Convolutional neural net-

works: an overview and application in radiology. In-

sights into imaging. vol.9, pp. 611-629.

Narın, Derya, and Tuğba Özge Onur. (2021). Investigation of the effect of edge detection algorithms in the detection of covid-19 patients with convolutional neural network-based features on chest x-ray images. In 2021 29th Signal Processing and Communications Applications Conference (SIU), pp. 1-4. IEEE.

Ioffe, Sergey, and Christian Szegedy. (2015). Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167.

Musali, Suresh Kumar, Rajeshwari Janthakal, and Nuvvusetty Rajasekhar. (2024). Holdout based blending approaches for improved satellite image classification. International Journal of Electrical and Computer Engineering (IJECE). vol. 14, no. 4, pp. 3127-3136.
