Realization of Advanced Driver Assistance System Using FPGA for Transportation Applications

Ganesh Racha, Yedukondalu Kamatham, Srinivasa Rao Perumalla, Battuwar Akhila, Adarsh Gangula and Amrith Patalay

Department of Electronics & Communication Engineering, CVR College of Engineering, Hyderabad, India

Keywords: ADAS, Vehicle Transportation, FPGA, Road Safety, Human Security, Accidents, HDL.

Abstract: Transportation is the basic means of commuting and of moving goods from one place to another. Distinct modes of transportation are available by air, water, road, etc., and road-based transportation is the most widely used. The increased use of road transportation is causing more accidents, which is a significant global concern. Hence, there is a requirement for Advanced Driver Assistance Systems (ADAS) to reduce the number of road accidents. ADAS has been introduced to reduce human errors such as distracted driving, fatigue, or poor judgment, which are among the leading causes of accidents. This work deals with the design of an ADAS controller using Verilog HDL and AMD Xilinx FPGA EDA tools. The proposed design uses an algorithm that covers lane departure warning, adaptive cruise control, blind spot detection, parking assistance, driver monitoring, and collision detection. The algorithm is simulated and tested for different test cases to assess the safety and security of passengers.

1 INTRODUCTION

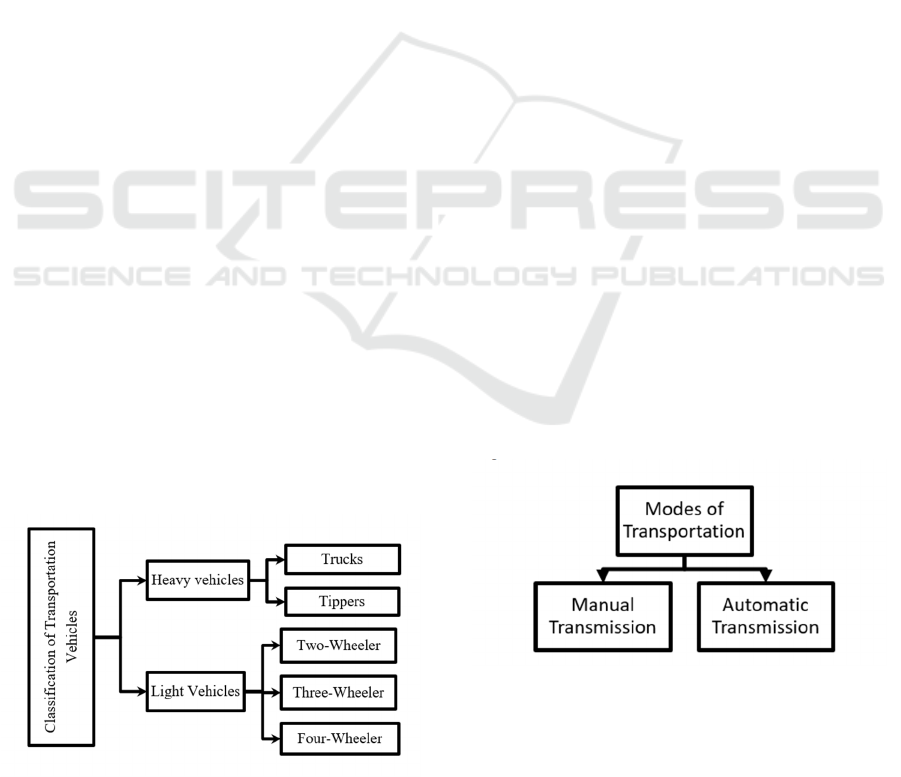

Land transportation is the most used mode of transportation for commuting from one place to another. It covers a wide range of vehicles, which are divided into two types, heavy vehicles and light vehicles, as shown in Figure 1. Heavy vehicles are used to commute or transport over longer distances; they are heavier and have an all-terrain build quality.

Figure 1: Classification of Transportation Vehicles

Heavy vehicles comprise trucks, tippers, etc., that can transport many people or commodities and are usually used for long-distance routes. Light vehicles are generally used for urban commuting and for carrying light commodities. They comprise two-, three-, and four-wheelers. Vehicles have two transmission modes, manual and automatic, as shown in Figure 2.

Figure 2: Modes of Transportation

In the manual mode of transmission, the gearbox

and clutch must be engaged manually for vehicle

movement, whereas in the automatic transmission

mode, the clutch is engaged automatically, and the

Racha, G., Kamatham, Y., Perumalla, S. R., Akhila, B., Gangula, A. and Patalay, A.

Realization of Advanced Driver Assistance System Using FPGA for Transportation Applications.

DOI: 10.5220/0013614400004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 301-309

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

vehicle adjusts the gear according to the requirement

of the user. This type of automatic advanced driving

mechanism is used to make driving comfortable and

smoother. Advanced Driver Assistance Systems

(ADAS) are a group of technologies that are installed

in cars to make driving safer and more convenient.

Using a variety of sensors, cameras, radar, and other

technologies, these systems keep an eye on the

surroundings of the car, warn the driver of any

dangers, and in some cases, step in to stop collisions.

The main objectives of ADAS are as follows. ADAS improves safety by lowering the likelihood of crashes, particularly those initiated by human mistakes. It helps drivers with difficult activities like parking, changing lanes, and keeping a safe following distance. By automating processes like lane centering and speed adjustments, it makes driving more comfortable overall. ADAS is also the foundation on which semi-autonomous and fully autonomous vehicles are built. Each ADAS element is typically categorized by its degree of intervention, ranging from Level 0 (no automation) to Level 5 (complete automation), as shown in Table 1.

Table 1: Levels of Transportation Vehicles

| S. No | Level | Automation |
|-------|-------|------------|
| 1 | 0 | Manual Mode (No Automation) |
| 2 | 1 | Human Assistance |
| 3 | 2 | Partial Automation |
| 4 | 3 | Tentative Automation |
| 5 | 4 | Constrained Automation |
| 6 | 5 | Complete Automation |

Level 0 works completely in manual mode. Level 1 provides a feature that helps the driver monitor the vehicle. Level 2 automates vehicle monitoring, steering, and acceleration with the help of ADAS. Level 3 uses ADAS with environment-sensing capabilities, with human intervention when required. Level 4 uses Level 3 features under certain specific conditions. Level 5 works with full automation. This work deals with the design of a Level 2 ADAS using an AMD Xilinx FPGA and Verilog HDL. The rest of the paper is organized as follows. The literature review of vehicles is given in Section 2. Section 3 gives the design specifications and analysis of the ADAS Level 2 system. Section 4 gives the design synthesis and simulation test case analysis for the ADAS system. The conclusions drawn are presented in Section 5.

2 LITERATURE REVIEW OF VEHICLES

Adaptive Cruise Control (ACC) is an advanced feature that adjusts speed and the distance between vehicles. Using sensors, it detects the vehicles ahead and maintains a safe following distance without driver intervention. This reduces stress during long drives and helps traffic flow more smoothly, particularly on highways. Some systems include stop-and-go functionality for city traffic. Even with its benefits, ACC faces challenges such as sensor accuracy, keeping drivers attentive while driving, and legal concerns. These issues must be resolved for ACC to achieve widespread adoption and improve road safety (Vahidi and Eskandarian, 2003). Lane Centering Assistance (LCA) is a driver assistance system designed to keep vehicles aligned in the center of their lane. It uses sensors like cameras and radar to monitor the road markings and the vehicle's position. It adjusts the steering to the correct position even on curved roads and at different speeds (Bifulco, Simonelli, et al., 2013). LCA enhances safety and reduces driver workload by maintaining a steady path, mainly during highway driving. Its open issues mainly include handling complex road conditions and ensuring smooth integration with other driving systems (Tolosana, Ayerbe, et al., 2017).

The Advanced Parking Assistance System helps drivers park safely and easily by using sensors and automatic controls. It integrates vehicle position data, parking path planning, and a Human Machine Interface (HMI) to guide drivers from the parking lot entrance to a parking area. The system suggests steering and movement commands, which are displayed on a screen or given by audio. It adjusts for driver errors and uses a path-planning method that generates simple, easy-to-follow routes. The main aim of the system is to reduce stress and improve parking efficiency, especially for elderly drivers (Wada, Yoon, et al., 2003). Blind spot detection helps drivers identify areas around their vehicles that are hard to see and can therefore cause accidents. Modern systems use cameras or sensors to alert drivers visually. Some advanced systems include physical warnings, like force-feedback pedals and steering wheel resistance, to actively warn drivers of potential collisions. These

INCOFT 2025 - International Conference on Futuristic Technology

systems can detect vehicles in blind spots and give warnings to guide drivers away from danger. For example, when a driver tries to change lanes into an occupied spot, the steering wheel resists turning (Racine, Cramer, et al., 2010). Collision avoidance systems help drivers prevent accidents by warning about potential dangers or even taking control of the vehicle if necessary. These systems rely on sensors to detect potential accidents and provide alerts, or even apply the brakes or steer by themselves to avoid a crash. Automated collision avoidance systems can act when drivers fail to respond to emergencies. However, these systems are not yet mature: they sometimes give false alarms about hazards and raise liability issues. More advanced systems are being developed to enhance accuracy and reliability (Vahidi and Eskandarian, 2003).

The driver fatigue detection system monitors the driver's alertness level using sensors. These sensors track facial movements and eye and head position to determine if the driver shows signs of fatigue. When the system detects drowsiness or a lack of attention, it generates an alert to warn the driver and applies the brakes. This system is useful in preventing accidents caused by fatigue, which supports safe driving on long-distance travel (Hossan, Alamgir, et al., 2016). Adaptive Cruise Control helps drivers maintain a safe distance and desired speed on roads by automatically controlling acceleration and braking. However, its performance can vary in heavy traffic or when vehicles suddenly cut in, often requiring the driver to take over manually. Even though ACC reduces driver effort by maintaining speed and a good distance between vehicles, it needs further improvement to give the driver a better experience (Marsden, McDonald, et al., 2001). An Adaptive Driver Voice Alert System adjusts voice alerts based on the driver's emotions to enhance safety. It uses facial emotion recognition to detect moods like happiness, anger, or fear. Depending on the detected mood, the system modifies alerts to ensure they are clear. For example, during anger, alerts become more detailed to reduce aggressive driving. This emotionally adaptive approach helps drivers respond better to alerts, improving road safety (Sarala, Yadav, et al., 2018). Another study explores decision-making processes for Level 0 ADAS in Russian public transport, focusing on enhancing road safety. Two new systems, Turn Assist System (TAS) and Lateral Clearance Warning (LCW), are proposed along with Forward Collision Warning (FCW), Pedestrian Collision Warning (PCW), and Blind Spot Monitoring (BSM). Testing in MATLAB/Simulink confirmed that the systems meet the requirements and improve safety (Wassouf, Korekov, et al., 2023). Buses lag in adopting autonomous safety systems, but cities like London are introducing ADAS for buses. On-road trials show that Forward Collision Warning (FCW) reduces pedestrian collision risks. Some tests show that vehicle retarders maintain safe deceleration rates during emergency stops for both seated and standing passengers (Blades, Douglas, et al., 2020).

A driver behavioral analysis system studies

driving patterns to improve advanced driver

assistance systems. It uses data like vehicle speed,

distance, and acceleration to understand driver habits

and reaction delays. By analyzing these behaviors, it

helps create systems that adapt to individual drivers,

improving comfort and safety. The system also

identifies factors like vehicle spacing and reaction

time, which influence driver actions (Chen, Zhao, et al., 2018). Future advancements in Advanced Driver

Assistance Systems (ADAS) focus on greater

automation and enhanced safety features. ADAS is

expected to integrate more software-driven

functionalities, contributing to the rise of more

autonomous vehicles. These systems will

increasingly rely on advanced sensors like Light

Detection and Ranging (LiDAR) and cameras along

with AI algorithms, to provide intelligent driving

support. Key trends include improvement in lane

departure warnings, adaptive cruise control, and

driver monitoring. However, challenges like cost

reduction, testing reliability, and user safety concerns

need to be addressed. ADAS will continue to evolve,

becoming integral to safer and smarter transportation

(Kaur and Sobti, 2017). Drowsiness detection using

facial landmarks helps identify if a driver is sleepy by

analyzing their face. This system monitors eye

movements, blinks, and yawns using a feature known

as Eye Aspect Ratio (EAR), which tracks eye closure

duration to determine drowsiness. If the EAR stays

below a threshold for a few seconds, an alarm is

triggered to alert the driver. Preliminary tests showed

87% accuracy, making it a promising tool for

reducing accidents caused by driver fatigue (Cueva

and Cordero, 2020).
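The EAR-based check described above can be sketched as follows. This is a minimal Python sketch and not the implementation of the cited work: it assumes the commonly used six-landmark definition, EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|), and the function names `eye_aspect_ratio` and `drowsy_alarm`, the threshold of 0.25, and the window of 48 consecutive frames are hypothetical values chosen only for illustration.

```python
import math


def eye_aspect_ratio(eye):
    """Compute EAR from six (x, y) eye landmarks p1..p6.

    Assumed ordering: p1 and p4 are the horizontal eye corners,
    p2/p3 lie on the upper lid and p6/p5 on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def drowsy_alarm(ear_per_frame, threshold=0.25, min_frames=48):
    """Return True once EAR stays below `threshold` for `min_frames`
    consecutive frames (both values are illustrative assumptions)."""
    run = 0
    for ear in ear_per_frame:
        run = run + 1 if ear < threshold else 0
        if run >= min_frames:
            return True
    return False


# Hypothetical landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

print(eye_aspect_ratio(open_eye) > eye_aspect_ratio(closed_eye))  # open eye has the larger EAR
print(drowsy_alarm([eye_aspect_ratio(closed_eye)] * 48))          # alarm after a sustained closure
```

Counting consecutive frames rather than reacting to a single low value keeps ordinary blinks from triggering the alarm, which is the reason the text refers to the EAR staying below the threshold for a few seconds.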

AI-based ADAS has been proposed to predict the dangers that can arise from road conditions and is designed to operate on an embedded platform (Huang, Chen, et al., 2022). Autonomous vehicles rely on Advanced Driver Assistance Systems (ADAS) for driving and parking tasks. Frequency Modulated Continuous Wave (FMCW) radar has been used in collision avoidance, particularly for detecting close-proximity collisions, which are a problem for short-range radars (Ekolle, et al., 2023). The adoption of Autonomous Vehicles (AVs) remains limited due to public mistrust, even though the systems have advanced considerably. Customizing ADAS to the personal driving style of each driver with a Support Vector Machine (SVM) enhances safety and driver support (Hwang, Jung, et al., 2022). Facial gesture and emotion recognition are critical components of driver assistance for enhancing road safety. By analyzing eye and lip movements, the sensors classify emotions into happy, angry, sad, or surprised (Dragaš, Grbić, et al., 2021). The system aims to detect driver inattentiveness or fatigue and switch the car to automatic mode if necessary (Agrawal, Giripunje, et al., 2013). Another system focuses on eye monitoring to detect driver fatigue and prevent accidents. A front-facing camera records a video, from which frames are extracted to detect faces using Histogram of Oriented Gradients (HOG) and Support Vector Machine (SVM). Facial landmarks identify eye and mouth positions, allowing the calculation of Eye Aspect Ratio (EAR) and Mouth Aspect Ratio (MAR) to estimate fatigue. When drowsiness is detected, the system alerts the driver (Kaur and Ponnala, 2023).

3 DESIGN SPECIFICATIONS AND ANALYSIS OF ADAS LEVEL 2 SYSTEM

Advanced Driver Assistance Systems (ADAS) are a

group of technologies that are installed in cars to

make driving safer and more convenient. Using a

variety of sensors, cameras, radar, and other

technologies, these systems keep an eye on the

surroundings of the car, warn the driver of any

dangers, and in some cases, step in to stop collisions.

The main objective of ADAS is to improve safety by

lowering the likelihood of crashes, particularly those

initiated by human mistakes. With difficult activities

like parking, changing lanes, and keeping a safe

following distance, drivers can get help from us. By

automating processes like lane centering and speed

adjustments, driving becomes more comfortable.

The Level 2 ADAS refers to systems that provide

"partial automation," which means that under some

circumstances, the car can control both steering and

acceleration/deceleration. However, the driver must

always be actively involved and prepared to take

over. Although level 2 systems are commonly

referred to as "hands-off," this term only applies in a

limited sense because the driver is still required to

keep an eye on the vehicle and surroundings. The

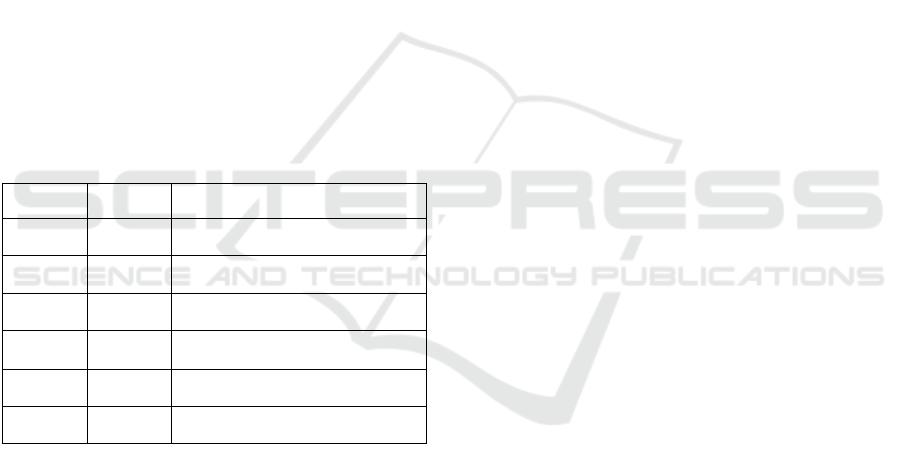

architecture of the FPGA-based ADAS system is

shown in Figure 3.

Figure 3: FPGA-based ADAS system architecture

The FPGA-based ADAS system architecture is

designed by taking the inputs from the camera, radar,

side camera, ultrasonic sensor, and the data of eye

position. This architecture generates the steering

signal, brake signal, and warning signal as outputs.

The ADAS system is designed by using the following

functionalities of Level 2:

1. Lane Departure Warning (LDW)

2. Blind Spot Detection (BSD)

3. Driver Monitoring (DM)

4. Adaptive Cruise Control (ACC)

5. Collision Detection (CD)

6. Parking Assistance (PA)

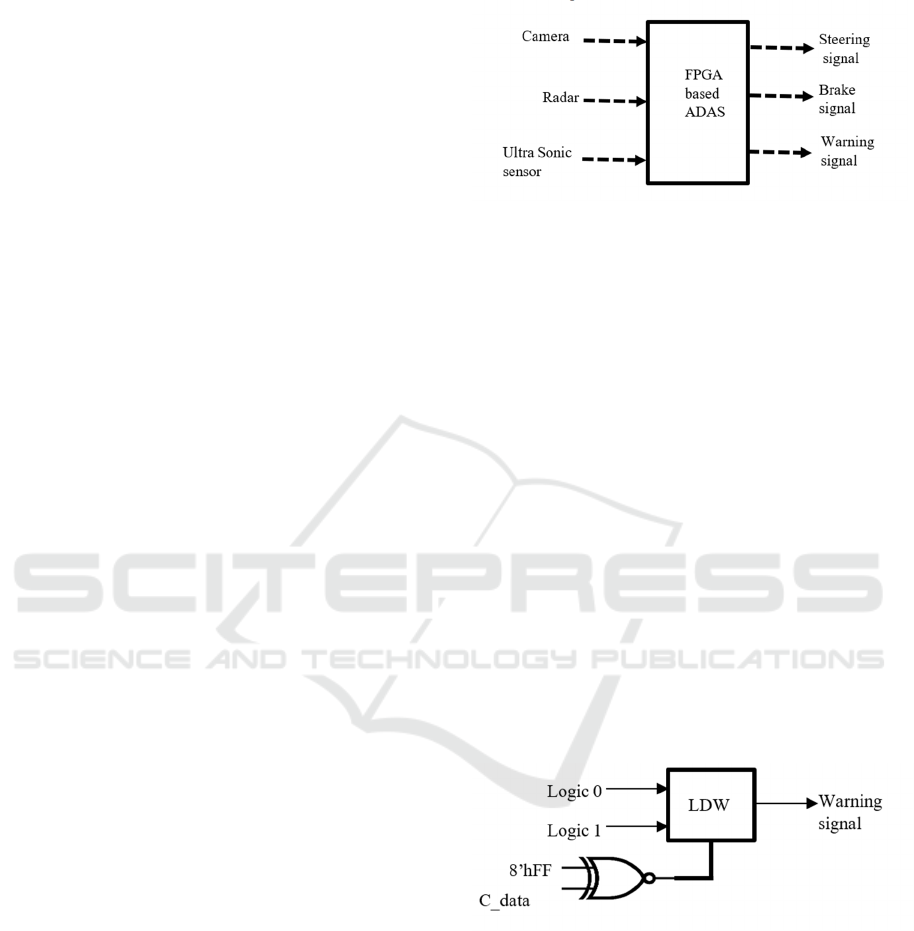

3.1 Lane Departure Warning (LDW)

The LDW system detects when a vehicle starts to move out of its lane. The design of LDW is shown in Figure 4. It processes data from a camera (C_data), represented as an 8-bit signal (lane_position), to determine whether the vehicle is still within safe lane boundaries.

Figure 4: Design of Lane Departure Warning (LDW)

3.2 Blind Spot Detection (BSD)

The BSD system is designed to monitor areas around

the vehicle that are difficult for the driver to see,

particularly the blind spots. The design of BSD is

shown in Figure 5. This system uses data from Side

Cameras (SC). When a vehicle is detected in the blind

spot, it activates a warning signal to alert the driver.

Figure 5: Design of Blind Spot Detection

3.3 Driver Monitoring (DM)

The DM system is designed to track the driver's level of attentiveness and alertness. The design of DM is shown in Figure 6. This system uses a camera to monitor the driver's eye position (C_data), and if signs of drowsiness or distraction are detected, it activates a warning to alert the driver.

Figure 6: Design of Driver Monitoring

3.4 Adaptive Cruise Control (ACC)

The ACC system adjusts the vehicle's speed based on the distance to the vehicle ahead. The design of ACC is shown in Figure 7. The system takes as input the distance measured by a radar sensor, Radar_data (R_data).

Figure 7: Design of Adaptive Cruise Control

3.5 Collision Detection (CD)

The CD system is designed to prevent accidents by monitoring the distance to nearby obstacles and activating the vehicle's brakes if a collision is imminent. It uses obstacle data (O_data) from the radar as input. The design of CD is shown in Figure 8.

Figure 8: Design of CD

3.6 Parking Assistance (PA)

The Parking Assistance system is designed to help drivers park safely by detecting nearby obstacles during parking. The design of PA is shown in Figure 9. It uses ultrasonic sensors (U_data) to measure the distance between the vehicle and surrounding objects.

Figure 9: Design of Parking Assistance

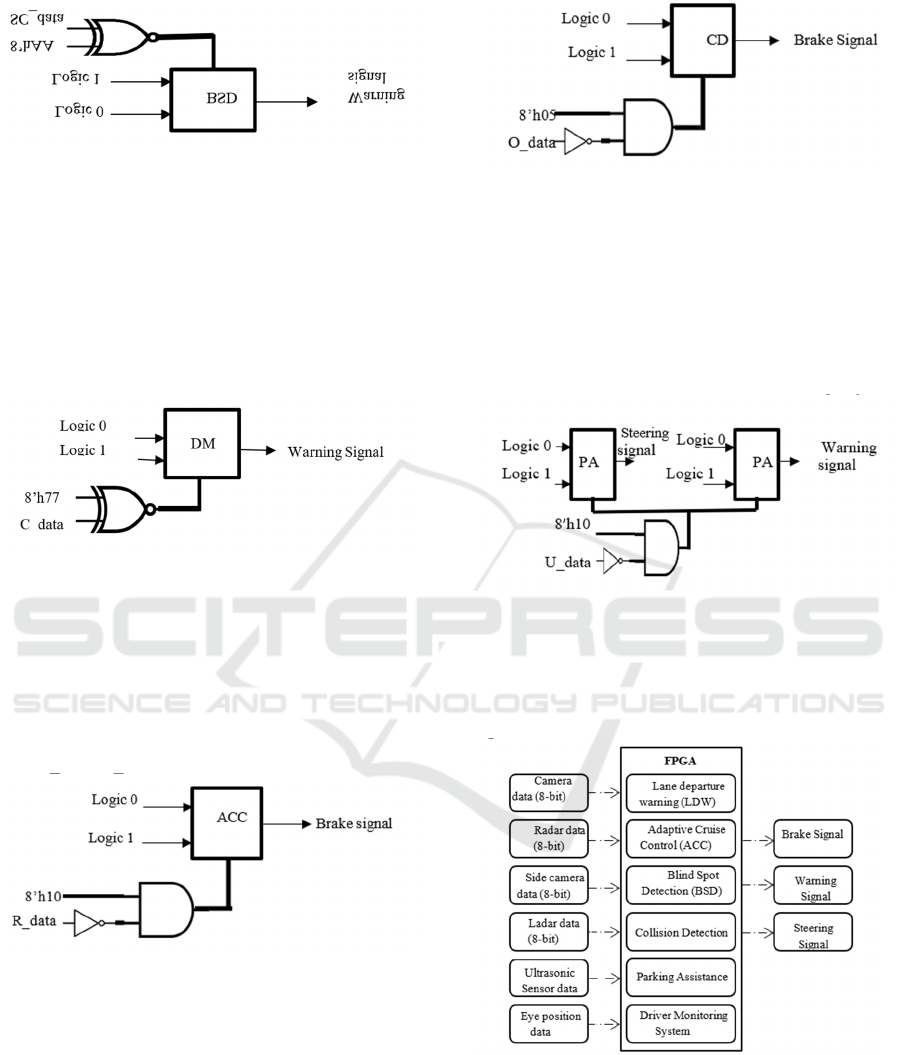

The design of FPGA-based ADAS is shown in

Figure 10. This system generates brake, warning, and

steering signals as per the algorithm and the design

inputs.

Figure 10: Design of FPGA-based ADAS

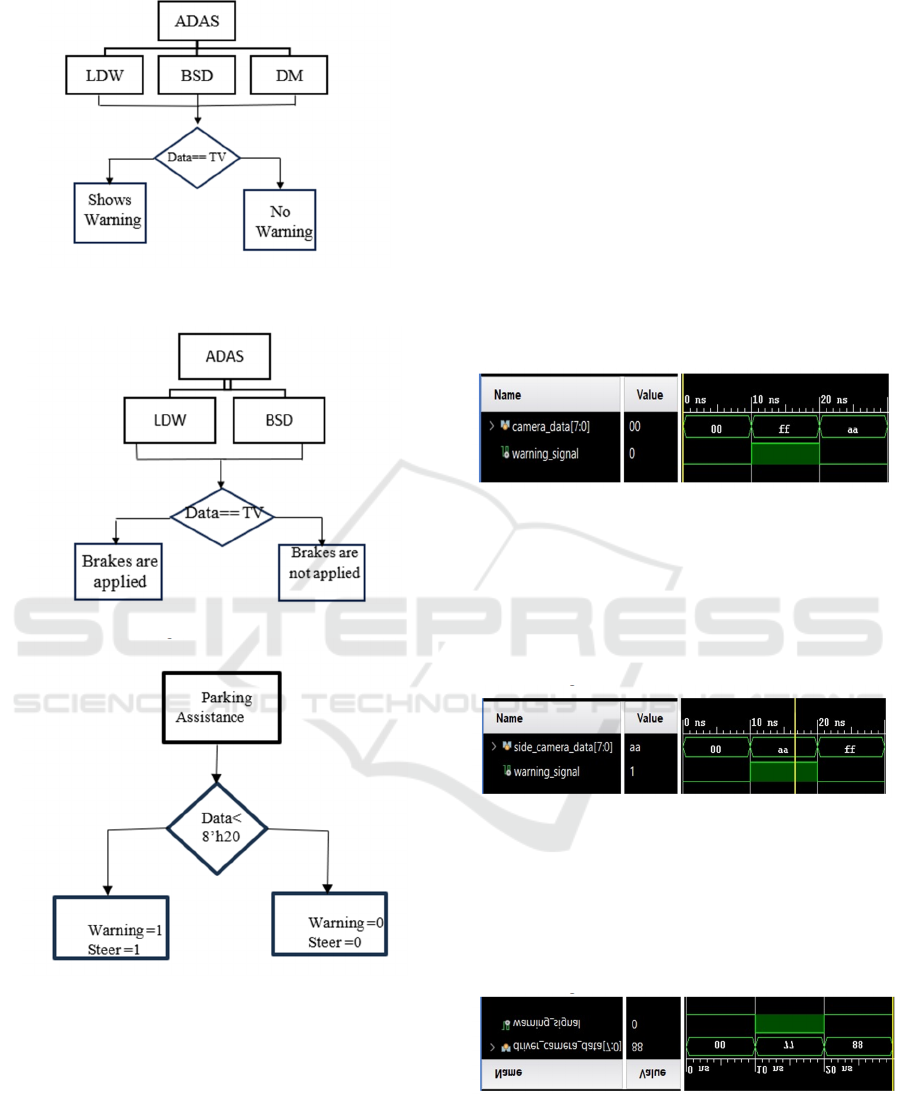

The algorithms used to generate the warning, brake, and steering signals are shown in Figures 11 to 13: the warning signal in Figure 11, the brake signal in Figure 12, and the steering signal in Figure 13.

Figure 11: Algorithm for warning signal

Figure 12: Algorithm for brake signal

Figure 13: Algorithm for steer signal

In Figure 11, when the data is equal to the threshold, a warning signal is raised. In Figure 12, when the data is less than the threshold value (TV), the brakes are applied. In Figure 13, when the data is less than the threshold, a warning is raised and the steering is controlled. Depending on the ADAS functionality, this data can be camera data, radar data, side camera data, ultrasonic sensor data, or eye position data.
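As a behavioral illustration, the three comparisons described above can be modeled in a few lines of Python. This is only a sketch under stated assumptions, not the Verilog HDL design itself: the function names are hypothetical, the inputs are assumed to be 8-bit unsigned values, and the comparisons are taken exactly as worded in the preceding paragraph.

```python
def to_byte(value):
    """Keep only the low 8 bits (assumption: all sensor data is 8-bit unsigned)."""
    return value & 0xFF


def warning_signal(data, threshold):
    """Figure 11 as worded: warn when the data equals the threshold."""
    return to_byte(data) == to_byte(threshold)


def brake_signal(data, threshold):
    """Figure 12 as worded: brake when the data is less than the threshold."""
    return to_byte(data) < to_byte(threshold)


def steer_signal(data, threshold):
    """Figure 13 as worded: when the data is less than the threshold,
    raise a warning and control the steering.

    Returns a (warning, steering) pair.
    """
    active = to_byte(data) < to_byte(threshold)
    return active, active


# Example with hypothetical sample values.
print(brake_signal(0x04, 0x05))   # data below the threshold, so the brake is requested
print(steer_signal(0x20, 0x20))   # data not below the threshold, so neither output is active
```

Each function is a single comparison, which is consistent with a design that maps onto a small amount of combinational logic.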

4 DESIGN SIMULATION AND SYNTHESIS RESULTS OF ADAS LEVEL 2 SYSTEM

The simulation and synthesis of the ADAS are done using Verilog HDL and FPGA design tools. The simulations for different test cases and conditions are shown below.

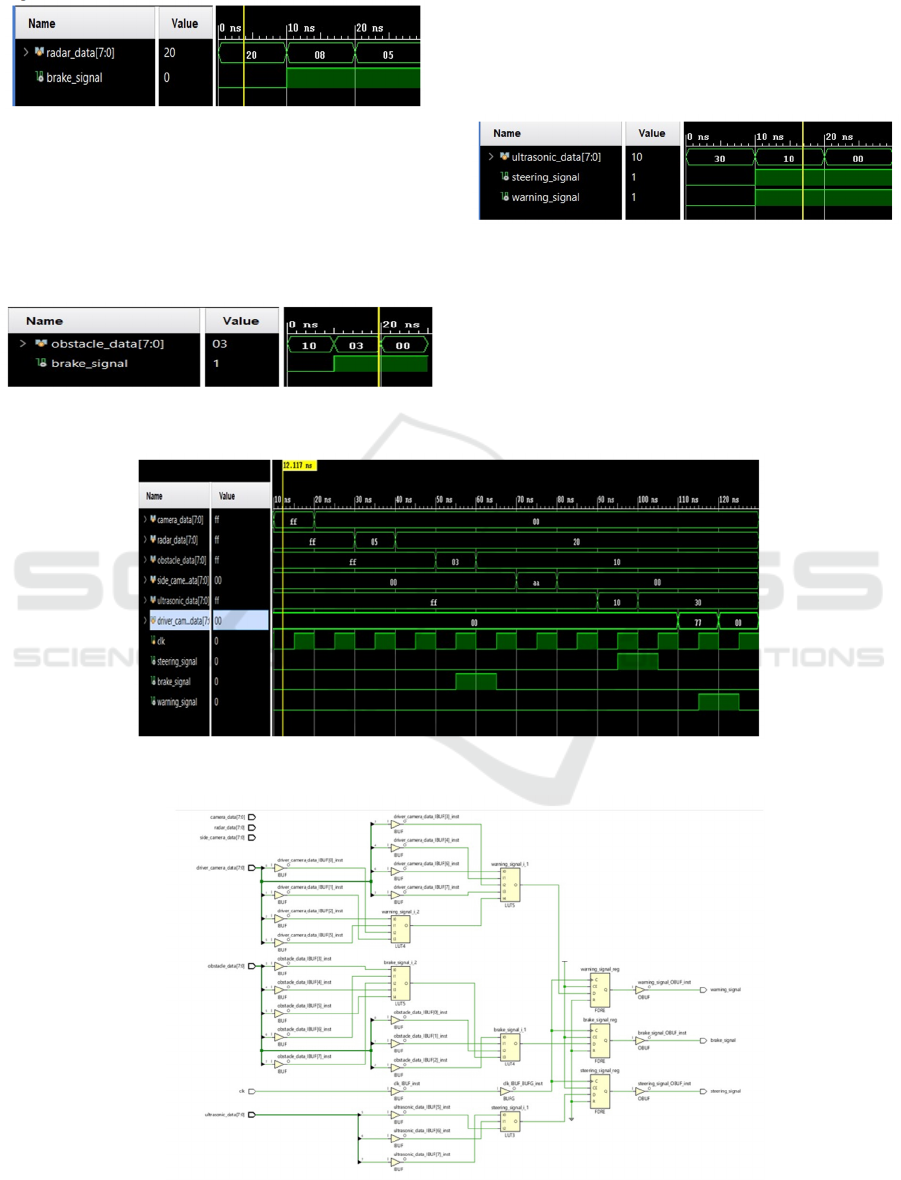

4.1 Lane Departure Warning (LDW)

Let the threshold value (TV) be 8'hFF. If the camera_data value is less than or greater than the threshold, the warning signal is asserted. The simulation is shown in Figure 14.

Figure 14: Simulation of LDW test case

4.2 Blind Spot Detection (BSD)

Let the threshold be 8'hAA. If the side_camera_data value is less than or greater than the threshold, the warning signal is asserted. The simulation is shown in Figure 15.

Figure 15: Simulation of BSD test case

4.3 Driver Monitoring (DM)

Let the threshold be 8'h77. If the driver_camera_data value is less than or greater than the threshold, the warning signal is asserted. The simulation is shown in Figure 16.

Figure 16: Simulation of DM test case

4.4 Adaptive Cruise Control (ACC)

Let the threshold be 8'h10. If the Radar_data value is less than or greater than the threshold, the brake signal is asserted. The simulation is shown in Figure 17.

Figure 17: Simulation of ACC test case

4.5 Collision Detection (CD)

Let the threshold be 8'h05. If the Obstacle_data value is less than the threshold, the brake signal is enabled. The simulation is shown in Figure 18.

Figure 18: Simulation of CD test case

4.6 Parking Assistance (PA)

Let the threshold be 8'h20. If the Ultrasonic_data value is less than the threshold, the steering and warning signals are enabled. The simulation is shown in Figure 19.

Figure 19: Simulation of PA test case

The detailed simulation result for all test cases and

the synthesis design are shown in Figure 20 and

Figure 21.

Figure 20: Simulation of ADAS for all test cases

Figure 21: Synthesis of ADAS for all test cases
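The six test cases of Sections 4.1 to 4.6 can be collected into one behavioral model for quick experimentation. The following Python sketch is offered under stated assumptions and is not the Verilog HDL design: the threshold values and comparisons are taken from the text of this section, the ACC input is assumed to be the radar data described in Section 3.4, the way the per-module results are merged into the three outputs is not stated in the paper and is assumed here to be a logical OR, and all class, field, and function names are hypothetical.

```python
from dataclasses import dataclass

# Threshold values quoted in Sections 4.1 to 4.6 (8-bit hexadecimal).
TV_LDW, TV_BSD, TV_DM = 0xFF, 0xAA, 0x77
TV_ACC, TV_CD, TV_PA = 0x10, 0x05, 0x20


@dataclass(frozen=True)
class SensorInputs:
    """8-bit sensor words; the defaults are values that trigger no signal."""
    camera_data: int = TV_LDW
    side_camera_data: int = TV_BSD
    driver_camera_data: int = TV_DM
    radar_data: int = TV_ACC
    obstacle_data: int = TV_CD
    ultrasonic_data: int = TV_PA


@dataclass(frozen=True)
class AdasOutputs:
    warning: bool
    brake: bool
    steering: bool


def adas_step(s: SensorInputs) -> AdasOutputs:
    """Evaluate all six functions once and merge their results (OR is an assumption)."""
    ldw_warn = s.camera_data != TV_LDW          # 4.1: less than or greater than TV
    bsd_warn = s.side_camera_data != TV_BSD     # 4.2
    dm_warn = s.driver_camera_data != TV_DM     # 4.3
    acc_brake = s.radar_data != TV_ACC          # 4.4
    cd_brake = s.obstacle_data < TV_CD          # 4.5: less than TV
    pa_assist = s.ultrasonic_data < TV_PA       # 4.6: steering and warning
    return AdasOutputs(
        warning=ldw_warn or bsd_warn or dm_warn or pa_assist,
        brake=acc_brake or cd_brake,
        steering=pa_assist,
    )


print(adas_step(SensorInputs()))                      # no signal asserted
print(adas_step(SensorInputs(obstacle_data=0x04)))    # brake only
print(adas_step(SensorInputs(ultrasonic_data=0x1F)))  # warning and steering
```

Changing one input at a time, as in the three calls above, mirrors the per-function test cases of this section and makes it easy to see which output each sensor affects.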

5 CONCLUSIONS

Using Artix FPGA technology and a 4-bit encoding technique, this study presents an energy-efficient FPGA-based Advanced Driver Assistance System (ADAS). The architecture covers the essential ADAS features with real-time performance. The design consumes 0.466 W of on-chip power and uses only 5 Look-Up Tables (LUTs), which balances computing capability with resource efficiency. For safety-critical automotive applications, the parallel processing of the FPGA supports quick decision-making. This small and expandable solution shows how FPGA technology can improve vehicle safety, facilitate integration into energy-efficient automotive platforms, and open the door to more sustainable and advanced ADAS solutions.

REFERENCES

A. Vahidi and A. Eskandarian, "Research advances in

intelligent collision avoidance and adaptive cruise

control," in IEEE Transactions on Intelligent

Transportation Systems, vol. 4, no. 3, pp. 143-153,

Sept. 2003, doi: 10.1109/TITS.2003.821292

Gennaro Nicola Bifulco, Luigi Pariota, Fulvio Simonelli, Roberta Di Pace, Development and testing of a fully Adaptive Cruise Control system, Transportation Research Part C: Emerging Technologies, Volume 29, 2013, ISSN 0968-090X, https://doi.org/10.1016/j.trc.2011.07.001.

I. Ballesteros-Tolosana, P. Rodriguez-Ayerbe, S. Olaru, R.

Deborne and G. Pita-Gil, "Lane centering assistance

system design for large speed variation and curved

roads," 2017 IEEE Conference on Control Technology

and Applications (CCTA), Maui, HI, USA, 2017, pp.

267-273, doi: 10.1109/CCTA.2017.8062474.

M. Wada, Kang Sup Yoon, and H. Hashimoto,

"Development of advanced parking assistance system,"

in IEEE Transactions on Industrial Electronics, vol. 50,

no. 1, pp. 4-17, Feb. 2003, doi:

10.1109/TIE.2002.807690.

D. P. Racine, N. B. Cramer, and M. H. Zadeh, "Active blind

spot crash avoidance system: A haptic solution to blind

spot collisions," 2010 IEEE International Symposium

on Haptic Audio Visual Environments and Games,

Phoenix, AZ, USA, 2010, pp. 1-5, doi:

10.1109/HAVE.2010.5623977.

Hossan, Alamgir & Bin Kashem, Faisal & Hasan, Md &

Naher, Sabkiun & Rahman, Md. (2016). A Smart

System for Driver Fatigue Detection, Remote

Notification, and Semi-Automatic Parking of Vehicles

to Prevent Road Accidents.

10.1109/MEDITEC.2016.7835371.

Reg Marsden, Mike McDonald, Mark Brackstone, Towards an understanding of adaptive cruise control, Transportation Research Part C: Emerging Technologies, https://doi.org/10.1016/S0968-090X(00)00022-X.

S. M. Sarala, D. H. Sharath Yadav and A. Ansari, "Emotionally Adaptive Driver Voice Alert System for Advanced Driver Assistance System (ADAS) Applications," 2018 International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 2018, pp. 509-512, doi: 10.1109/ICSSIT.2018.8748541.

Y. Wassouf, E. M. Korekov, and V. V. Serebrenny, "Decision Making for Advanced Driver Assistance Systems for Public Transport," 2023 5th International Youth Conference on Radio Electronics, Electrical and Power Engineering (REEPE), Moscow, Russian Federation, 2023, pp. 1-6, doi: 10.1109/REEPE57272.2023.10086753.

Blades, L., Douglas, R., Early, J., Lo, C.Y. and Best, R.,

2020. Advanced driver-assistance systems for city bus

applications (No. 2020-01-1208). SAE Technical

Paper.

H. Chen, F. Zhao, K. Huang, and Y. Tian, "Driver Behavior Analysis for Advanced Driver Assistance System," 2018 IEEE 7th Data Driven Control and Learning Systems Conference (DDCLS), Enshi, China, 2018, pp. 492-497, doi: 10.1109/DDCLS.2018.8516059.

P. Kaur and R. Sobti, "Current challenges in modeling advanced driver assistance systems: Future trends and advancements," 2017 2nd IEEE International Conference on Intelligent Transportation Engineering (ICITE), Singapore, 2017, pp. 236-240, doi: 10.1109/ICITE.2017.8056916.

L. D. S. Cueva and J. Cordero, "Advanced Driver Assistance System for the drowsiness detection using facial landmarks," 2020 15th Iberian Conference on Information Systems and Technologies (CISTI), Seville, Spain, 2020, pp. 1-4, doi: 10.23919/CISTI49556.2020.9140893.

X.-H. Huang, Z.-H. Chen, A. Ahamad and C.-C. Sun, "ADAS E-Bike: Auxiliary ADAS Module For Electric Power-assisted Bicycle," 2022 IET International Conference on Engineering Technologies and Applications (IET-ICETA), Changhua, Taiwan, 2022, pp. 1-2, doi: 10.1109/IET-ICETA56553.2022.9971513.

Z. E. Ekolle et al., "A Reliable 79GHz Band Ultra-Short

Range Radar for ADAS/AD Vehicles Using FMCW

Technology," 2023 IEEE International Automated

Vehicle Validation Conference (IAVVC), Austin, TX,

USA, 2023, pp. 1-6, doi:

10.1109/IAVVC57316.2023.10328083.

G. Hwang, D. Jung, Y. Goh and J. -M. Chung, "Personal

Driving Style-based ADAS Customization in Diverse

Traffic Environments using SVM for Public Driving

Safety," 2022 13th International Conference on

Information and Communication Technology

Convergence (ICTC), Jeju Island, Korea, Republic of,

2022, pp. 1938-1940, doi:

10.1109/ICTC55196.2022.9952997.

S. Dragaš, R. Grbić, M. Brisinello and K. Lazić,

"Development and Implementation of Lane Departure

Warning System on ADAS Alpha Board," 2021

International Symposium ELMAR, Zadar, Croatia,

2021, pp. 53-58, doi:

10.1109/ELMAR52657.2021.9550915.

U. Agrawal, S. Giripunje and P. Bajaj, "Emotion and

Gesture Recognition with Soft Computing Tool for

Drivers Assistance System in Human Centered

Transportation," 2013 IEEE International Conference

on Systems, Man, and Cybernetics, Manchester, UK,

2013, pp. 4612-4616, doi: 10.1109/SMC.2013.785.

J. Kaur and R. Ponnala, "Driver's Drowsiness Detection System Using Machine Learning," 2023 International Conference on Advances in Computation, Communication and Information Technology (ICAICCIT), Faridabad, India, 2023, pp. 339-343, doi: 10.1109/ICAICCIT60255.2023.10465952.
