ProctorEdge: Advanced AI Examination Monitoring and Security System

Neeraj S, Kollipara Rohith and Ani R

Department of Computer Science and Applications, Amrita School of Computing, Amrita Vishwa Vidyapeetham,

Amritapuri, India

Keywords:

AI, Online Proctoring, Deep Learning, Facial Recognition, Mobile Detection, Academic Integrity, YOLO.

Abstract:

The rapid development of artificial intelligence and deep learning has transformed online examinations, particularly with respect to ensuring academic integrity. This paper presents an automated AI proctoring system that uses computer vision with facial recognition, smartphone detection, and audio detection to monitor a student’s actions during online assessments. These technologies are integrated into a single system that detects suspicious and cheating behaviors during remote examinations. The paper covers the methodologies involved, the challenges, and the enhancements needed to improve the accuracy and efficiency of proctoring systems. It is intended as a guide for educators and developers implementing practical online proctoring solutions in educational settings.

1 INTRODUCTION

Online exams are increasingly becoming the norm because they are convenient and flexible for both students and instructors. However, online exams also come with challenges, chiefly integrity. Cheating and academic dishonesty in online exams can invalidate the assessment of learners and thereby undermine the entire education process. Our project centers on developing a robust proctoring system that applies facial recognition, audio identification, and device detection to monitor online exams and deter cheating.

Key Features:

1. Facial Recognition: The system uses facial recognition to authenticate the student sitting the examination, making sure that the person attempting the test is the correct person.

2. Audio Identification: By recognizing sounds in the surrounding environment, the system checks whether the student uses another device or communicates with someone during the examination.

3. Device Detection: The system checks whether any additional devices are connected or being used by the student during the examination and alerts proctors to potential cheating behavior.

4. Tab Monitoring: The system tracks the browser tabs open on the student’s device and alerts the proctor if any unauthorized tabs are found.

5. Real-Time Alerts: The system informs the proctor in real time of any suspicious activity within their assigned exam, so that the proctor is prepared to intervene at any time.

2 RELATED WORKS

Online student authentication and proctoring system based on multimodal biometrics technology (Labayen et al., 2021) proposes an online proctoring system that monitors students taking an examination using continuous biometric recognition by way of face, voice, and typing, as found in commercial solutions. Authentication is secured by gathering data from webcams, microphones, and keyboards on cloud servers, which then match the data against several biometric models. Human supervision may be dispensed with at some levels because the AI algorithms can correct errors across varying conditions. Future improvements could include recognizing students’ use of other devices when taking tests.

S, N., Rohith, K. and R, A.

ProctorEdge: Advanced AI Examination Monitoring and Security System.

DOI: 10.5220/0013612700004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 229-237

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.


Automated proctoring system using computer vision techniques (Maniar et al., 2021) describes a proctoring system with eye gaze tracking, mouth detection, object detection, and head pose estimation via facial landmark detection. The system also transcribes the audio recording of the student’s voice into text using the Google speech recognition API in order to detect conversations. The main techniques are biometric recognition (face recognition, eye gaze tracking, mouth detection, head pose estimation), object detection, and cloud storage. The system uses dlib’s pre-trained network for real-time eye tracking and head pose detection. Challenges include accurate eye tracking and head pose estimation. Although the system raises an alert when multiple persons are present, it does not provide continuous identity verification. Future upgrades could include detection of other devices and continuous student authentication.

ProctoXpert–An AI Based Online Proctoring System (Chougule et al., 2024) outlines a proctoring system that uses AI for face recognition, audio detection, and biometric authentication, with live monitoring for anomaly detection. It supports post-exam review, with data encrypted for security. The key methodologies include AI-based biometric recognition, misbehavior detection, and facial/audio analysis using Convolutional Neural Networks (CNNs), which track head position and eye movement to monitor the student’s screen view. While the system benefits from AI-driven accuracy, challenges remain in coping with lighting conditions and background noise. Future enhancements may focus on identifying device use and improving the accuracy of additional monitoring data.

An incremental training on deep learning face recognition for M-Learning online exam proctoring (Ganidisastra and Bandung, 2021) elaborates on the accuracy issues in today’s proctoring-based face recognition systems, specifically system performance under illumination changes and minor changes to facial structure. The paper points out the pros and cons of these techniques by testing four face detectors (Haar-cascade, LBP, MTCNN, and YOLO-face) together with one FaceNet model. The deep learning-based face detectors perform better than the earlier methods. The batch-trained FaceNet model reached 98% accuracy, whereas the incremental training version uses a considerably smaller dataset. The four detectors are:

Viola-Jones/Haar-cascade: A traditional approach based on integral images and AdaBoost classifiers, significantly affected by pose and lighting variations.

Local Binary Pattern (LBP): Builds local texture descriptions but struggles with pose and lighting variations.

Multi-Task Cascaded CNN (MTCNN): Uses three CNN stages to efficiently generate candidate windows and enhance face detection while preserving real-time performance.

YOLO-face: A deep learning algorithm based on YOLOv3, optimized for high-precision face detection at very high speed.

Multiple instance learning for cheating detection

and localization in online examinations (Liu et al.,

2024) presents CHEESE, an advanced cheating de-

tection framework utilizing multiple instance learn-

ing to improve upon existing proctoring systems. It

integrates body posture, background, eye gaze, head

posture, and facial features through a spatio-temporal

graph module, achieving an AUC score of 87.58 on

three datasets. Key challenges addressed include

video anomaly detection, with the Multiscale Tempo-

ral Network (MTN) capturing temporal dependencies

in video clips. CHEESE consists of a label genera-

tor that produces anomaly scores using multiple in-

stance learning and a feature encoder enhanced by

C3D or I3D models, incorporating a self-guided at-

tention module. This framework combines features

from OpenFace 2.0 and C3D/I3D to analyze can-

didates’ behavior holistically. Future enhancements

may focus on detecting unauthorized devices used

during exams and generating alerts.

A Systematic Review of Deep Learning Based Online Exam Proctoring Systems for Abnormal Student Behaviour Detection (Abbas and Hameed, 2022) discusses the challenges of ensuring academic integrity in online exams, highlighting the need for advanced technologies like deep learning to monitor abnormal student behavior. It investigates the effectiveness of deep learning algorithms in detecting cheating during assessments through a systematic literature review of 137 publications, narrowed down to 41 relevant studies on AI-based proctoring systems. The research identifies a key limitation: existing proctoring systems cannot fully prevent all cheating methods because those methods keep evolving, which necessitates ongoing improvements. Benefits of deep learning include enhanced detection capabilities and better exam security, while drawbacks involve technical challenges and privacy concerns.

AI-based online proctoring system (Aurelia et al., 2024) addresses the need for proper online assessment methods, which has led to a rise in AI-powered proctoring services. The work develops a visual proctoring system based on webcam input that detects emotions, head movement, and unauthorized objects during exams, using facial expression recognition with convolutional neural networks. The system improves monitoring and automates suspicious behavior detection but is likely to require human intervention and could generate false positives. Further improvements are aimed at refining the AI algorithms for greater accuracy. The goal is a dependable proctoring system that ensures integrity in online examination settings.

AI based Proctoring System – A Review (Paul et al., 2024) discusses the growing need to improve security and integrity now that online examinations have been introduced. It describes an AI-based proctoring system that uses computer vision and machine learning to monitor students remotely via webcam while they attempt an online exam. The key technologies are facial recognition, eye tracking, and keystroke analysis, which can identify behaviors linked to cheating. The study applies both qualitative and quantitative research methods to evaluate the performance of the system. Alongside its benefits of live monitoring and enhanced exam security, the system raises several concerns over privacy and data protection. Future updates might yield higher detection rates and better user-friendliness. The key goal is a reliable AI proctoring system that prevents cheating so that academic institutions can hold exams without incident.

Analysis on AI Proctoring System Using Different ML Models (Sharma et al., 2024) focuses on the need for effective proctoring techniques as online exams became the new norm in education with the COVID-19 pandemic. It highlights AI-based proctoring techniques built on screen capture, keyboard analysis, facial recognition, and algorithms for detecting suspicious activity. YOLO object detection is suggested for real-time object identification and FaceNet for facial recognition. The system makes exams more secure through automatic monitoring, though issues remain around false positives, as well as privacy. Further advancements would add a detection mechanism for mobile devices and improve the algorithms for better performance. The aim is an effective proctoring system that does not let exam integrity be compromised and that improves on what currently exists.

Automated smart artificial intelligence-based proctoring system using deep learning (Verma et al., 2024) addresses the emerging demand for effective online education and the difficulty of keeping examinations fair and secure when they are conducted online. It discusses building a complete proctoring system that uses AI and deep learning to watch test-takers during their exams. Key features include user authentication, text and voice detection, active window detection, gaze estimation, and phone detection to detect possible cheating. The system employs Dlib’s facial keypoint detector and OpenCV for movement tracking, and uses YOLOv3 trained on the COCO dataset to identify individuals and mobile devices in webcam streams. It applies techniques such as PCA and SVM classification to tasks such as mouth state identification and face spoofing detection. Although the suggested system enhances proctoring capacities and automates workloads, challenges remain, such as privacy concerns and the need for continuous improvement. With further refinement of the algorithms for more precise and efficient results, immediate feedback to instructors becomes possible regarding the online test-taker’s behavioral and emotional responses during actual tests.

Face Verification Component for Offline Proctoring System using one-shot learning (Karthik et al., 2022) presents an offline proctoring system that verifies identity through one-shot learning in order to reduce issues such as seat-swapping and lighting variations. MTCNN is used for face detection, and a pre-trained Siamese network is used for face verification, with 83.65% accuracy across different orientations. Problems with extreme angles and false negatives persist; real-time optimization and further integration are possible future improvements.

Heuristic-Based Automatic Online Proctoring System (Raj et al., 2015) presents a heuristic-based proctoring system with anomaly detection and student monitoring during online exams. Its main features include face detection, behavioral analysis, and continuous tracking of the candidate’s screen view. Heuristic rules flag suspicious activities such as prolonged absence or unusual gaze patterns. While the system is effective in anomaly detection, it has problems handling diverse lighting and environmental conditions, which make accurate detection challenging. Going forward, scalability and false positives in behavioral detection remain concerns.

An Intelligent System for Online Exam Monitoring (Prathish et al., 2016) introduces a multi-modal proctoring system that uses video, audio, and active window capture to detect malpractice. Its features include face detection, yaw angle estimation for head pose analysis, and audio variation tracking, integrated into a rule-based inference system. These features identify misconduct such as multiple faces in the frame, prolonged face disappearance, and irregular audio or window activity. The system achieved 80% accuracy during tests; the remaining challenges are handling diverse scenarios and real-time implementation. Future improvements include further scalability and enhanced feature detection to enable wider use.

Real time Face Detection and Optimal Face Mapping for Online Classes (Archana et al., 2022) uses a combination of Local Binary Pattern Histogram (LBPH) and Convolutional Neural Networks (CNN) for face recognition, with an accuracy of up to 95%. The system includes a web-based interface developed in the Django framework, and a Haar-cascade classifier is used for real-time face detection. Although the system shows high accuracy and efficiency, challenges such as variations in lighting conditions and facial occlusions remain. Future improvements involve using better-quality webcams along with diverse datasets to further enhance reliability and accuracy.

Strategies for Improving Object Detection in Real-Time Projects that use Deep Learning Technology (Abed and Murugan, 2023) reports that such projects have improved considerably with newer versions of YOLO, particularly YOLOv7 and YOLOv8. The best-known improvements are in speed and mAP, which make the models well suited to real-time applications. The models are optimized through PyTorch, which allows efficient training, fine-tuning, and deployment of deep learning models. Techniques such as data augmentation, class balancing, and ensemble learning are used to address challenges including unbalanced datasets, small object detection, and varying lighting. Introducing variations into the training dataset makes the models robust to the kinds of variation found in reality, and class balancing ensures that models perform well across all categories. The paper further proposes IoT integration to improve live deployment and overall system efficiency: through IoT, data transmission and processing will be faster and performance more fluid. However, critical issues remain, such as accuracy on small objects and rapid changes in illumination.

3 METHODOLOGY

The proposed system combines computer vision techniques with machine learning models to make online proctoring safe and efficient. A central component is facial identification of the examinee. The system captures the face and tracks gaze direction through eye tracking to detect instances in which the candidate looks away from the screen. Audio detection captures unauthorized speech and background noise, and device monitoring notices external gadgets such as mobile phones. All of these techniques are combined in a hybrid approach that makes it easy to find and mark suspicious behavior during the examination. Facial recognition establishes that the right candidate is present for the test, while eye tracking and audio identification trace cheating behavior by detecting gaze deviation, prolonged distraction, or illicit communication. Device monitoring recognizes any use of equipment that would compromise the integrity of the examination.

The integration of the above technologies pro-

vides a safe, real-time monitoring solution to com-

mon problems that may arise in the remote proctoring

setup, such as identity fraud, cheating attempts, and

distracting external factors undermining the credibil-

ity and fairness of an online examination.

Figure 1: System Architecture

3.1 Facial Recognition

This module identifies the student using facial recog-

nition and tracks eye movement to detect off-screen

gazing.

3.1.1 Facial Landmarks Identification

The system captures facial structure using dlib’s pre-trained models. This step identifies 68 key facial landmarks on the subject’s face, which are used to calculate head direction and gaze. The nose point and eye corners are captured along with the face boundary.
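As a rough illustration of how the 68 landmarks can be organized, the sketch below lists the index ranges of the 68-point layout produced by dlib's shape predictor and picks out six points that are commonly used for head pose estimation. The choice of these six points and the names `POSE_POINT_INDICES` and `select_pose_points` are assumptions made for illustration; the paper does not state which landmarks its implementation uses.

```python
# Index ranges (0-based, end-exclusive) of the 68-point facial landmark
# layout. "Right" and "left" refer to the subject's own right and left.
LANDMARK_GROUPS = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),
}

# Assumed selection of six landmarks often used for head pose estimation.
POSE_POINT_INDICES = {
    "nose_tip": 30,
    "chin": 8,
    "right_eye_outer": 36,
    "left_eye_outer": 45,
    "mouth_right": 48,
    "mouth_left": 54,
}


def select_pose_points(landmarks):
    """Pick the pose-estimation points out of a list of 68 (x, y) tuples."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks, got %d" % len(landmarks))
    return {name: landmarks[i] for name, i in POSE_POINT_INDICES.items()}
```

In a real pipeline the `landmarks` list would come from the shape predictor output for a detected face; here it is simply any list of 68 coordinate pairs.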


3.1.2 Yaw Angle Estimation

The system estimates the yaw angle using a head pose estimation technique based on cv2.solvePnP(). It computes the 3D rotation of the head from the relationship between predefined 3D facial points, such as the nose tip, eyes, and mouth corners, and their corresponding 2D points in the image. The yaw angle, that is, the horizontal (left-right) rotation of the head about the vertical axis, is retrieved from the rotation vector as

yawangle = np.degrees(rotationVector[1])[0] (1)

That is, the second component of the rotation vector is taken as the yaw rotation in radians and converted to degrees.
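The relationship between the rotation vector and the yaw angle can be checked with a small worked example. The sketch below, written in plain Python rather than with OpenCV, compares the shortcut of Equation (1) with a yaw angle extracted from the full rotation matrix. It assumes a flat three-element rotation vector (OpenCV returns a 3x1 array) and a yaw-pitch-roll decomposition about the Y, X, and Z axes; both choices are assumptions for illustration, not details given in the paper.

```python
import math


def rodrigues_to_matrix(rvec):
    """Convert a rotation vector (axis * angle, in radians) to a 3x3 matrix."""
    rx, ry, rz = rvec
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def yaw_from_component(rvec):
    """Shortcut in the spirit of Equation (1): second component, in degrees."""
    return math.degrees(rvec[1])


def yaw_from_matrix(rvec):
    """Yaw about the Y axis, assuming R = Ry(yaw) * Rx(pitch) * Rz(roll)."""
    r = rodrigues_to_matrix(rvec)
    return math.degrees(math.atan2(r[0][2], r[2][2]))


# Pure rotation about the vertical axis: both methods agree.
pure_yaw = (0.0, math.radians(20.0), 0.0)
# Combined rotation (hypothetical values): the two methods differ slightly.
mixed = (0.3, 0.35, 0.0)
```

For a head turned only left or right the two values coincide, which is the case the shortcut relies on. When the head is also tilted, the shortcut is only an approximation, which may matter when thresholds are as tight as a few degrees.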

3.1.3 Extended Gaze Deviation Detection

When the yaw angle stays outside the defined thresholds (greater than the RIGHT YAW THRESHOLD or less than the LEFT YAW THRESHOLD) for longer than the alert duration, which is set to 3 seconds, the system flags a warning. This allows prompt detection of prolonged distractions or looking away from the screen.
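A minimal sketch of this timing logic is given below. The class name `GazeDeviationMonitor` and its interface are hypothetical; the default thresholds of -1° (left) and 2° (right) and the 3-second duration follow the values reported in Section 4.4.

```python
class GazeDeviationMonitor:
    """Flags a warning when yaw stays outside the thresholds for too long."""

    def __init__(self, left_threshold=-1.0, right_threshold=2.0,
                 alert_seconds=3.0):
        self.left_threshold = left_threshold
        self.right_threshold = right_threshold
        self.alert_seconds = alert_seconds
        self._deviation_start = None  # time at which the current deviation began

    def update(self, yaw_deg, timestamp):
        """Process one frame; return True if a warning should be raised."""
        deviating = (yaw_deg < self.left_threshold
                     or yaw_deg > self.right_threshold)
        if not deviating:
            # Looking at the screen again: reset the timer.
            self._deviation_start = None
            return False
        if self._deviation_start is None:
            self._deviation_start = timestamp
        return (timestamp - self._deviation_start) > self.alert_seconds
```

The monitor would be called once per video frame with the current yaw angle and a timestamp in seconds. Resetting the timer as soon as the gaze returns means brief glances do not accumulate into a warning.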

3.2 Smartphone and Tablet detection

This module detects the use of a smartphone or tablet through a mobile detection model. YOLO version 8 was fine-tuned on a custom dataset of 3250 images. Training used 55 epochs, a batch size of 16, and an image size of 640 pixels for accurate identification of a mobile device.
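A fine-tuning run with these hyperparameters might look like the following sketch, which uses the Ultralytics Python package. The starting weights `yolov8n.pt`, the function name `fine_tune`, and the dataset file passed as `data_yaml` are assumptions; the paper does not say which YOLOv8 variant or tooling was used.

```python
# Hyperparameters reported in the paper.
TRAIN_ARGS = {"epochs": 55, "batch": 16, "imgsz": 640}


def fine_tune(data_yaml, weights="yolov8n.pt"):
    """Fine-tune a YOLOv8 model on a custom dataset described by data_yaml.

    data_yaml is the path to a dataset description file (hypothetical here)
    listing the training/validation image folders and the class names.
    """
    # Imported lazily so the configuration above can be inspected
    # without the ultralytics package being installed.
    from ultralytics import YOLO

    model = YOLO(weights)  # assumed starting checkpoint
    model.train(data=data_yaml, **TRAIN_ARGS)
    return model
```

Starting from pre-trained weights rather than from scratch is what makes a dataset of a few thousand images workable, since the network only has to adapt existing features to the phone and tablet classes.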

3.3 Audio Identification

The audio identification module continuously moni-

tors the environment for unauthorized sounds, such as

communication or external device usage. Using mi-

crophones connected to the student’s device, the sys-

tem analyzes audio data in real time to enhance the

proctoring process.

3.3.1 Audio Monitoring

The system detects sounds above a predefined fre-

quency threshold of 500 Hz, flagging them as suspi-

cious and generating alerts for the proctor. This al-

lows for immediate investigation of potential cheating

incidents.

3.3.2 Frequency-Based Flagging

By applying a frequency-based method, the system

ensures that only sounds indicative of potential cheat-

ing are flagged, effectively reducing false positives

from normal environmental noise.
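One possible reading of the frequency-based check is sketched below: the dominant frequency of a short audio frame is estimated with a discrete Fourier transform and compared against the 500 Hz threshold. The function names, the frame length, and the `min_magnitude` floor used to ignore near-silent frames are assumptions for illustration; the paper does not describe how the frequency is measured.

```python
import cmath
import math


def dominant_frequency(samples, sample_rate):
    """Return (frequency_hz, normalized_magnitude) of the strongest DFT bin."""
    n = len(samples)
    if n < 4:
        raise ValueError("need at least 4 samples")
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(centered))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate / n, best_mag / n


def is_suspicious(samples, sample_rate, freq_threshold_hz=500.0,
                  min_magnitude=0.01):
    """Flag a frame whose dominant frequency lies above the threshold."""
    freq, magnitude = dominant_frequency(samples, sample_rate)
    return magnitude >= min_magnitude and freq > freq_threshold_hz


def tone(freq_hz, n=256, sample_rate=8000, amplitude=0.5):
    """Generate a test sine wave (helper for the usage example)."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]
```

With these helpers, `is_suspicious(tone(1000), 8000)` flags the frame while `is_suspicious(tone(200), 8000)` does not. The direct DFT is used only to keep the sketch dependency-free; a production system would more likely use an FFT routine.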

3.4 Tab Monitoring

3.4.1 Browser Activity Monitoring

The system uses the browser’s APIs to track any at-

tempt that the student makes to change tabs or open

a new tab. In case the student opens an unauthorized

tab, the system flags that particular action and notifies

the proctor.

Table 1: Tab Monitoring APIs

API | Description
chrome.tabs.onActivated | To detect switching to a different tab
chrome.tabs.onCreated | To detect when a new tab is opened
chrome.tabs.onUpdated | To detect changes in a tab, such as a URL change

3.4.2 Real-time Tab Alerts

Any attempt by the student to open an unauthorized tab is immediately brought to the proctor’s attention, which deters the student from looking up answers during the test.

3.5 Inference System

The outputs from audio capture, video input, and sys-

tem usage are integrated into a rule-based inference

system. These outputs are processed by the system,

which classifies them to identify potential miscon-

duct. The analysis combines all time frames where

the yaw angle and audio output exceed the threshold

value, along with any variations in window changes,

to generate an output log. If any one of these factors is detected in a given time frame, it can indicate a potential for misconduct. The results highlight the likelihood of malpractice, along with the associated time frame.
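The way flagged time frames can be combined into an output log is sketched below. The input format, a list of `(time, yaw_flag, audio_flag, window_flag)` tuples, and the function name `build_output_log` are assumptions made for illustration; the paper does not specify the log format.

```python
def build_output_log(frames):
    """Merge consecutive flagged frames into intervals with their reasons.

    frames: iterable of (time_s, yaw_flag, audio_flag, window_flag) tuples,
    ordered by time, where each flag is True if that signal exceeded its
    threshold (or a window change occurred) in that frame.
    """
    log = []
    current = None
    for time_s, yaw_flag, audio_flag, window_flag in frames:
        reasons = {name for name, flag in (("yaw", yaw_flag),
                                           ("audio", audio_flag),
                                           ("window", window_flag)) if flag}
        if reasons:
            if current is None:
                current = {"start": time_s, "end": time_s, "reasons": set()}
            current["end"] = time_s
            current["reasons"] |= reasons
        elif current is not None:
            # An unflagged frame closes the current interval.
            log.append(current)
            current = None
    if current is not None:
        log.append(current)
    return log
```

Each log entry records when a suspicious interval started and ended and which signals contributed, which matches the idea that any single factor in a time frame can indicate potential misconduct.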

4 EXPERIMENTAL RESULTS

In this study, we deployed pre-trained face and mobile detection models. We used the dlib library for face detection and for localization of facial landmarks: dlib’s frontal face detector for face detection, and the "shape_predictor_68_face_landmarks.dat" model for facial landmark detection. The alerting system used a threshold of 3 seconds to detect prolonged gaze deviations, sending an alert whenever the user looks away from the screen for longer than that. In addition to tracking the user’s gaze direction, the system detects the presence of multiple faces. This is crucial in proctoring scenarios, where the presence of additional individuals can indicate misconduct, so the system raises an alert when more than one face is detected in the frame. For mobile detection, we employed a pre-trained YOLO version 8 object detection model that was fine-tuned on a custom dataset of smartphone and tablet images. The fine-tuned model detects mobile devices in real time, enhancing the system’s capability to monitor and alert when unauthorized devices are in use.

4.1 Face Detection Scenarios

A pre-trained model was run on various test scenarios to validate the accuracy of face detection and head orientation estimation. Test results showed reliable face detection and tracking during changes in the position of the head.

4.2 Yaw Angle Detection

The system calculates the yaw angle to detect whether the user is looking straight, to the left, or to the right. The yaw angle threshold for detecting a leftward gaze was set at -1° and for a rightward gaze at 2°. This setup helped identify when the user was looking sideways, allowing for timely alerts during instances of gaze deviation.

4.2.1 Looking Straight

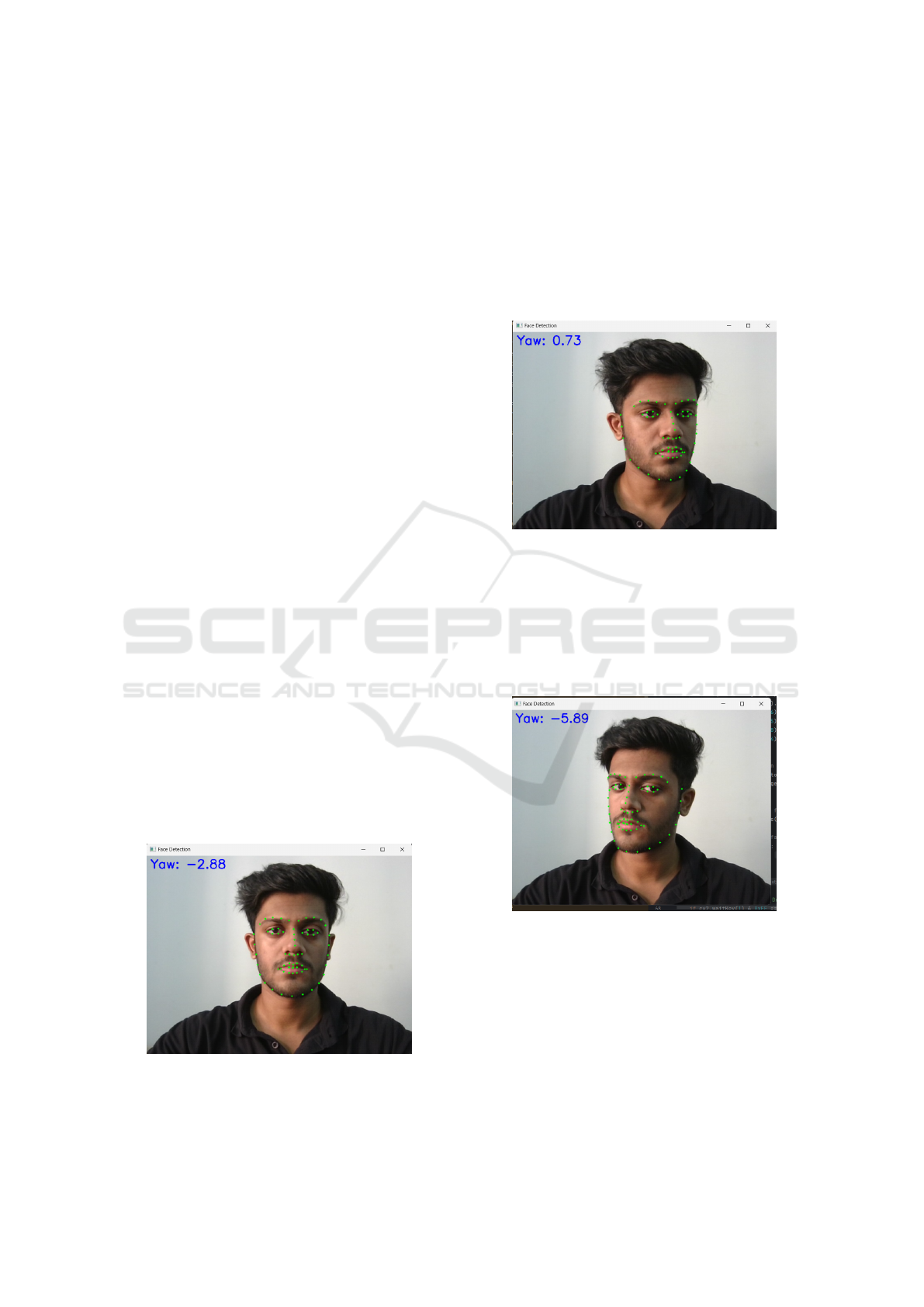

Figure 2: Yaw angle of face looking straight.

When the user’s face is directly in front of the camera, the system reports that the user is looking straight (see Fig. 2).

4.2.2 Looking Left

When the examinee looked to the left, the system

tracked the movement and calculated the yaw angle

(see Fig.3).

Figure 3: Yaw angle of face looking left.

4.2.3 Looking Right

When the examinee looked to the right, the system tracked the movement and calculated the yaw angle (see Fig. 4).

Figure 4: Yaw angle of face looking right.

4.3 Mobile Detection

The training process used a dataset of 3250 images, 55 epochs, a batch size of 16, and an image size of 640 pixels for accurate identification of a mobile device.

After training, the final precision was 99%, recall 92.7%, mAP50 97.6%, and mAP50-95 77.4%.


Table 2: Performance Metrics for YOLOv8 Training

Epoch   Precision   Recall   mAP50     mAP50-95
1       0.678       0.874    0.881     0.599
6       0.0303      0.707    0.0409    0.00887
11      0.00292     0.341    0.00268   0.00065
16      0.00486     0.415    0.00394   0.0015
21      0.837       0.876    0.913     0.592
26      0.734       0.944    0.855     0.582
31      0.809       0.931    0.903     0.648
36      0.899       0.902    0.953     0.662
41      0.951       0.949    0.974     0.743
46      0.973       0.870    0.960     0.743
51      0.981       0.927    0.973     0.740
55      0.990       0.927    0.976     0.774

4.4 Alert System

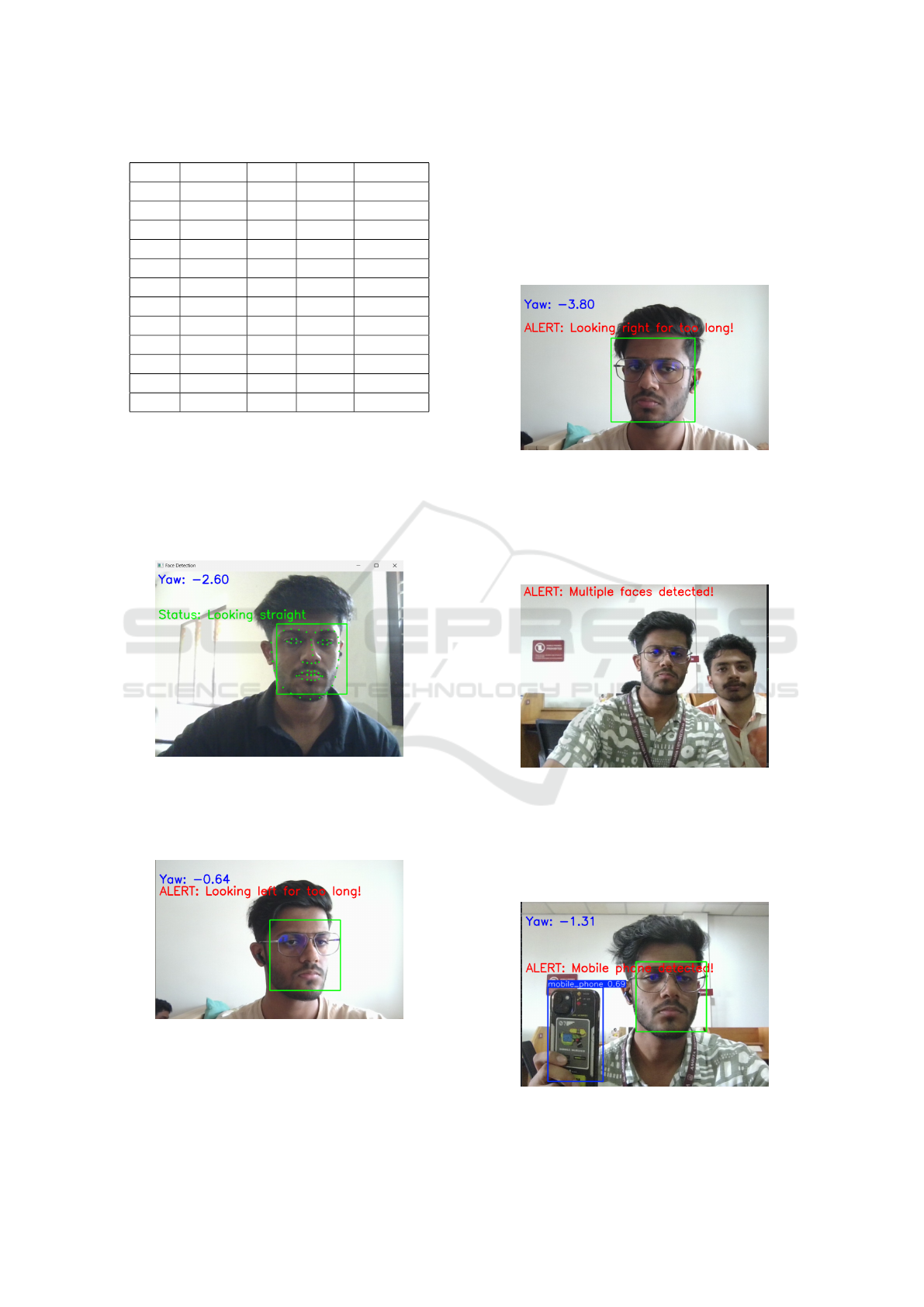

4.4.1 Face Looking Straight

The system effectively detected the face when looking straight at the camera (see Fig. 5).

Figure 5: Output of face looking straight.

4.4.2 Face Looking Left

Figure 6: Output of face looking left.

When the user looks to the left, the yaw angle becomes negative. When the yaw angle falls below the threshold of -1°, the system detects that the user is looking left. An alert is triggered if this condition persists for more than 3 seconds (see Fig. 6).

4.4.3 Face Looking Right

Similarly, when the yaw angle exceeds 2°, indicating that the user is looking right, the system issues an alert after the defined threshold duration (see Fig. 7).

Figure 7: Output of face looking right.

4.4.4 Multiple Faces

In scenarios where multiple faces were present, the system triggered alerts (see Fig. 8).

Figure 8: Output when multiple faces are detected.

4.4.5 Mobile Detection

In scenarios where the use of a smartphone was detected, the system triggered alerts (see Fig. 9).

Figure 9: Smartphone detected


4.4.6 No Face Detected

In scenarios where no face was present, the system triggered alerts (see Fig. 10).

Figure 10: Output indicating that no face was detected.

4.5 Inference System Rules

Table 3: Rules and Decisions for Online Examination Proctoring

Number | Rule | Decision
Rule 1 | Face of the examinee is missing from the frame at any point of time during the examination | Malpractice
Rule 2 | Face shifting significantly from the initial position more than three times | Warning
Rule 3 | Face shifting significantly from the initial position more than six times | Malpractice
Rule 4 | Multiple faces are detected in the frame during examination | Malpractice
Rule 5 | Sound above the threshold of mean-centered amplitude more than 2 times | Warning
Rule 6 | Sound above the threshold amplitude more than 5 times | Malpractice
Rule 7 | Opening any window other than the online examination window | Malpractice
Rule 8 | Detection of smartphones | Malpractice
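The rules in Table 3 can be expressed as a small decision function. The sketch below is one way to encode them; the `SessionCounts` structure, the function name `decide`, and the "No action" outcome for sessions that trigger no rule are assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass
class SessionCounts:
    """Observations accumulated over one examination session."""
    face_missing: bool = False          # Rule 1
    face_shifts: int = 0                # Rules 2 and 3
    multiple_faces: bool = False        # Rule 4
    loud_sound_events: int = 0          # Rules 5 and 6
    other_window_opened: bool = False   # Rule 7
    smartphone_detected: bool = False   # Rule 8


def decide(counts):
    """Return 'Malpractice', 'Warning', or 'No action' following Table 3."""
    malpractice = (
        counts.face_missing
        or counts.face_shifts > 6
        or counts.multiple_faces
        or counts.loud_sound_events > 5
        or counts.other_window_opened
        or counts.smartphone_detected
    )
    if malpractice:
        return "Malpractice"
    if counts.face_shifts > 3 or counts.loud_sound_events > 2:
        return "Warning"
    return "No action"
```

Malpractice conditions are checked first so that a session which meets both a warning rule and a malpractice rule receives the more severe decision.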

5 CONCLUSION

The proposed AI-based proctoring system is designed to ensure academic integrity in online examinations through facial recognition, mobile detection, audio monitoring, and tab tracking. The experimental results show that the system detects gaze deviations, unauthorized devices, and multiple faces, with the fine-tuned mobile detection model reaching 99% precision and 92.7% recall. It thus provides a secure and reliable solution for remote assessments. Future improvements may include iris scanning to improve identity verification, algorithm refinement to work better under various conditions while reducing false positives, and the use of software-defined radio (SDR) to detect mobile phones within a specified range of the system on which the exam is taken. This system provides a solid foundation for secure, fair, and transparent examinations on the Web.

REFERENCES

Abbas, M. A. E. and Hameed, S. (2022). A systematic review of deep learning based online exam proctoring systems for abnormal student behaviour detection. International Journal of Scientific Research in Science, Engineering and Technology, page 192.

Abed, N. and Murugan, R. (2023). Strategies for improving object detection in real-time projects that use deep learning technology. In 2023 IEEE 8th International Conference for Convergence in Technology (I2CT), pages 1–6. IEEE.

Archana, M., Nitish, C., and Harikumar, S. (2022). Real time face detection and optimal face mapping for online classes. In Journal of Physics: Conference Series, volume 2161, page 012063. IOP Publishing.

Aurelia, S., Thanuja, R., Chowdhury, S., and Hu, Y.-C. (2024). AI-based online proctoring: A review of the state-of-the-art techniques and open challenges. Multimedia Tools & Applications, 83(11).

Chougule, M., Bagul, S., Gharat, M., Malve, S., and Kayande, D. (2024). ProctoXpert – an AI based online proctoring system. In 2024 3rd International Conference for Innovation in Technology (INOCON), pages 1–8.

Ganidisastra, A. H. S. and Bandung, Y. (2021). An incremental training on deep learning face recognition for m-learning online exam proctoring. In 2021 IEEE Asia Pacific Conference on Wireless and Mobile (APWiMob), pages 213–219.

Karthik, P., Chowdary, P. N. V., Bhargav, M., Dhanush, G., and Gopakumar, G. (2022). Face verification component for offline proctoring system using one-shot learning. In 2022 7th International Conference on Communication and Electronics Systems (ICCES), pages 1521–1526. IEEE.

Labayen, M., Vea, R., Flórez, J., Aginako, N., and Sierra, B. (2021). Online student authentication and proctoring system based on multimodal biometrics technology. IEEE Access, 9:72398–72411.

Liu, Y., Ren, J., Xu, J., Bai, X., Kaur, R., and Xia, F. (2024). Multiple instance learning for cheating detection and localization in online examinations. IEEE Transactions on Cognitive and Developmental Systems, 16(4):1315–1326.

Maniar, S., Sukhani, K., Shah, K., and Dhage, S. (2021). Automated proctoring system using computer vision techniques. In 2021 International Conference on System, Computation, Automation and Networking (ICSCAN), pages 1–6.

Paul, J. S., Farhath, O., and Selvan, M. P. (2024). AI based proctoring system – a review. In 2024 International Conference on Inventive Computation Technologies (ICICT), pages 1–5.

Prathish, S., Bijlani, K., et al. (2016). An intelligent system for online exam monitoring. In 2016 International Conference on Information Science (ICIS), pages 138–143. IEEE.

Raj, R. V., Narayanan, S. A., and Bijlani, K. (2015). Heuristic-based automatic online proctoring system. In 2015 IEEE 15th International Conference on Advanced Learning Technologies, pages 458–459. IEEE.

Sharma, S., Manna, A., and Arunachalam, N. (2024). Analysis on AI proctoring system using various ML models. In 2024 10th International Conference on Communication and Signal Processing (ICCSP), pages 1179–1184.

Verma, P., Malhotra, N., Suri, R., and Kumar, R. (2024). Automated smart artificial intelligence-based proctoring system using deep learning. Soft Computing, 28(4):3479–3489.
