DeepSecure: An AI-Powered System for Real-Time Detection and Prevention of DeepFake Image Uploads

Jitha K, Neethu Dominic, Nadiya Hafsath K P, Nafeesathul Kamariyya, Nahva C and Ranjinee R

Department of Computer Science & Engineering, MEA Engineering College, Perinthalmanna, Kerala, India

Keywords:

Deepfake Detection, Image Manipulation, AI and Machine Learning, Real-Time Monitoring, Content Moderation, Convolutional Neural Networks (CNN), Xception Model, Flutter Application Development, Firebase Integration, User Experience, Mobile Application Security, Dataset Collection and Preprocessing, Model Evaluation Metrics, Precision and Recall, Prototype Development.

Abstract:

Deepfake images are a major online threat because they can be used maliciously to manipulate and deceive individuals, to disseminate fake news, and to invade privacy. As the technology advances rapidly, high-fidelity fake images are becoming easier to produce and more harmful in use. Systematic approaches to detecting such content and preventing its spread are therefore urgently needed. This paper introduces an AI-powered solution that detects deepfake image uploads and prevents them in real time. The system is designed to improve digital security by protecting online platforms and their users from the harms of deepfakes.

1 INTRODUCTION

Deepfake photos pose a serious and growing threat to digital security and have eroded confidence in online content. The further deepfake technology advances, the harder it becomes to tell authentic images from manipulated ones, a situation full of risks not only for individuals and organizations but also for society as a whole. Advances in the ability to create deepfakes have left security experts deeply concerned and underline the urgent need for better detection systems.

1.1 Background and Motivation

Traditional detection methods can no longer keep up with rapidly changing technologies, and there is a pressing demand for real-time solutions. This project is motivated by the growing occurrence of deepfake images and the threats they pose, which range from privacy violations to social and political instability. The proposed system uses AI and deep learning to improve real-time detection by logging image uploads, proactively detecting malicious content, and providing an interface for admin users.

1.2 Problem Statement

Deepfakes are specifically designed to evade detection, in part because the adversary's approach does not involve creating "new" data from scratch, and the resulting images do not display signs of manipulation in their raw form. A real-time detection and prevention system is urgently required to keep digital platforms and apps from becoming further outlets for manipulated content that is exposed to billions of users. Without such a system, deepfakes will further erode trust in digital media; it is therefore important to have an end-to-end solution that can address these challenges before the content spreads.

2 LITERATURE SURVEY

The growing prevalence of deepfake technology has become a significant threat to cyber security and the integrity of visual content in recent years. A large number of studies have addressed these challenges in various ways, ranging from detecting deepfake images to averting their misuse. In the rest of this literature survey, we highlight some key research in deepfake detection along with the methods used and their strengths. This review not only sheds light on existing state-of-the-art tools and methodologies but also reveals limitations that remain to be addressed, which form the basis for our proposed system.

K, J., Dominic, N., Hafsath K P, N., Kamariyya, N., C, N. and R, R.

DeepSecure: An AI-Powered System for Real-Time Detection and Prevention of DeepFake Image Uploads.

DOI: 10.5220/0013611600004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 181-188

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

2.1 Deepfake Detection Systems: A Comparative Analysis

Methods for detecting manipulated facial images take a variety of approaches. For instance, FaceForensics++, developed by Rössler et al. (2019), trains XceptionNet and MesoNet on a very extensive dataset to detect facial manipulation effectively. However, this approach analyzes posts after they are uploaded and therefore introduces delays, which calls for the immediate, real-time solution implemented by DeepSecure (Rössler et al., 2019).

Ivanov et al. (2020) proposed a deep learning system combining a ResNet50 CNN with FSRCNN, raising detection accuracy to 95.5% by improving image clarity. However, its computational requirements make it difficult to deploy on mobile devices or in real time. DeepSecure addresses this limitation through optimized cloud-based processing, which allows efficient, real-time detection while managing resource demands (Ivanov et al., 2020).

The morphed face detection system by Raghavendra et al. (2017) uses VGG19 and AlexNet models with P-CRC, which makes it effective for detecting both digital and print-scanned morphs. Despite this effectiveness, it is restricted to morphing detection and cannot readily be adapted to other kinds of manipulation. DeepSecure extends this line of work to a wider range of manipulations, making it adaptable to multiple scenarios (Raghavendra et al., 2017).

Qurat-ul-ain et al. (2021) applied Error Level Analysis (ELA) with VGG-16 and ResNet50 to detect forged faces. ELA improved accuracy, but small datasets led to overfitting, which generally degrades real-world performance. DeepSecure is trained on a large, diverse dataset, which makes it robust across different real-life applications (ul ain et al., 2021).

The model by Kim and Cho (2021) uses ResNet18 with a multi-channel convolutional approach to improve detection on compressed images and even low-quality inputs. However, it lacks real-time performance and therefore cannot be applied practically in the real world. DeepSecure is designed to operate in real time, offering the instant detection needed to prevent the swift spread of manipulated content (Kim and Cho, 2021).

Another important system, by Zhang et al. (2018), is based on sensor pattern noise (SPN) and SVM classification for morph detection and proves effective even with compressed images. However, it addresses only morphing and does not extend to other types of manipulation. DeepSecure uses detection algorithms that cover a wider range of manipulations, including deepfakes, making it more broadly usable across different media platforms (Zhang et al., 2018).

Scherhag et al. (2019) surveyed morphing attacks and their detection techniques, offering broad coverage and many theoretical insights. However, the survey lacks a practical implementation. DeepSecure, in contrast, is designed to be both practical and deployable, providing real-time detection for immediate response (Scherhag et al., 2019b).

The PRNU system developed by Scherhag et al. (2019) combines spatial and spectral features to achieve very high detection rates, but its high computational cost does not permit real-time application. DeepSecure emphasizes scalability and resource efficiency, which benefits mobile and real-time environments (Scherhag et al., 2019a).

Abdullah et al. (2024) performed an in-depth review of various deepfake detection techniques and established their strengths and weaknesses, but did not provide immediate solutions for real-time applications. DeepSecure addresses real-time capability and offers a feedback loop for improvement as deepfake techniques change over time (Abdullah et al., 2024).

Kuznetsov (2020) focused on remote sensing image forgery detection using CNNs, achieving high accuracy for splicing and copy-move forgeries. Because the approach is specific to its domain, it cannot be applied generally in deepfake detection scenarios. DeepSecure, designed specifically for detecting facial image manipulation, offers an efficient approach for applications in social media, news, and media verification (Kuznetsov, 2020).

Current techniques have various drawbacks, such as a focus on particular types of manipulation, offline analysis, high computational cost, and limited adaptability. DeepSecure addresses these gaps by providing real-time, scalable detection that adapts to various types of manipulation, uses resources efficiently, and improves performance through integrated user feedback. These improvements position DeepSecure as a much-needed solution in the larger landscape of deepfake detection, suitable for a wide range of practical applications.

3 METHODOLOGY

Developing an efficient deepfake detection system that delivers high precision and real-time results, together with an efficient content management system, involves several important steps. The process starts with collecting good authentic and manipulated datasets, which are then normalized and augmented to enable consistent training. This dataset is used to train the CNN Xception model to recognize complex deepfake patterns, with validation and testing steps that ensure robust behavior across wide-ranging inputs.

For real-time detection, the system flags manipulated images immediately when they are uploaded. It includes a rule-based flagging mechanism, which increases accuracy for suspicious content. Through an admin dashboard, flagged images are checked by humans and decisions are made; this feedback is incorporated into further refinement over time, creating a closed-loop system that improves accuracy and prevention.

Figure 1: Block Diagram for DeepSecure

3.1 Data Collection and Pre-processing

Datasets are derived from both real and deepfake images to provide adequately diverse training data. In the preprocessing stage, noise and inconsistencies are removed from the images. Data augmentation, including rotation, scaling, and flipping, increases the number of images in the dataset and enhances generalization across different scenarios. The processed images are stored in an organized form for convenient access during training and testing.
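
As an illustration of the augmentation step, the sketch below applies horizontal flipping and a 90-degree clockwise rotation to a tiny grayscale image stored as a nested list. The helper names and the restriction to 90-degree rotation are assumptions made for brevity; a real pipeline would typically use an image library and arbitrary angles.

```python
# Minimal augmentation sketch on a grayscale image stored as rows of pixels.
# flip_horizontal, rotate_90 and augment are hypothetical helper names.

def flip_horizontal(image):
    """Mirror each row left to right."""
    return [list(reversed(row)) for row in image]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image plus its flipped and rotated variants."""
    return [image, flip_horizontal(image), rotate_90(image)]


sample = [[1, 2],
          [3, 4]]
variants = augment(sample)
```

Each source image yields three training samples here, which is how augmentation enlarges the dataset without collecting new images.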

3.2 Model Training and Deepfake Detection

The CNN Xception model is the core of the system's deepfake detection capability. This deep learning model is trained on the labeled, preprocessed image datasets so that deepfake patterns can be identified with high precision. During the training phase, the model learns to distinguish authentic images from manipulated ones. After training, the model is validated on an independent validation dataset, which is used to fine-tune its parameters and performance. In the final testing stage, the model is checked for how well it generalizes to completely unseen data beyond the training set.

3.3 Real-Time Monitoring and Flagging

For real-time detection, the system processes images right after they are uploaded and applies the trained CNN Xception model to determine whether the content is a deepfake. A real-time monitoring mechanism continuously checks uploaded images as they arrive, allowing timely intervention when a deepfake is found. A rule-based flagging mechanism flags images exhibiting signs of manipulation. All flagged images are set aside for closer inspection rather than distributed immediately, so that only verified material is shared.
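
The specific flagging rules are not listed here, so the following is only a minimal sketch of what a rule on top of the model output could look like. It assumes the model returns a probability that the image is fake and that a single threshold decides the status; the threshold value, the function name `decide`, and the status strings are all hypothetical.

```python
# Hypothetical rule-based flagging on top of a model score.
# FLAG_THRESHOLD and the status strings are illustrative assumptions.

FLAG_THRESHOLD = 0.5


def decide(fake_probability, threshold=FLAG_THRESHOLD):
    """Map the model's fake probability to an upload status."""
    if not 0.0 <= fake_probability <= 1.0:
        raise ValueError("fake_probability must lie in [0, 1]")
    # Flagged uploads are held back for admin review instead of being shared.
    return "flagged" if fake_probability >= threshold else "approved"


statuses = [decide(p) for p in (0.05, 0.50, 0.97)]
```

Lowering the threshold sends more images to review and reduces missed deepfakes at the cost of more false positives for administrators to clear.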

3.4 Admin Interface for Review

The admin dashboard is a central interface where administrators view and review flagged content and pass judgment on it. This happens through an approval/rejection workflow, in which legitimate images are approved while those considered to be deepfakes are rejected. The feedback from the admins during this process is recorded and used to refine the detection system over time. This improves both the accuracy of the model and the efficiency of the whole system in detecting manipulations.

3.5 Prevention and System Feedback

A prevention module blocks flagged images from public sharing until an in-depth review has taken place. In addition, a feedback mechanism feeds the admins' decisions back into the system so that the detection model and the flagging processes can be improved continuously. This improvement process exposes the system to new manipulation tactics and enhances overall system performance.

4 SYSTEM DESIGN

The system combines state-of-the-art machine learning models with cloud computing and human oversight to detect, flag, and govern manipulated image uploads in real time. An AI deepfake detection algorithm (CNN Xception) is implemented together with a cloud storage infrastructure and an administrative review interface. Each of these parts is critical to the timely detection and prevention of harmful content, while feedback loops allow the system to learn and improve. The architecture leverages real-time image analysis, cloud-based functions, and human oversight to provide a robust and scalable solution to the deepfake problem.

Figure 2: System Diagram

Figure 2 shows the system design: the architecture and workflow of an AI-based deepfake detection system that identifies altered or fake images using a CNN Xception model. The workflow is divided into core components. Each works separately and supports real-time detection, flagging of deepfake images, further review, and response during the upload process from the mobile application. Each component plays an essential role, making the system efficient with both automated and manual intervention.

4.1 Mobile App (User)

The mobile application is the front-end interface that the user interacts with. Users upload images from their mobile devices. These images may be genuine or manipulated, so the system must analyze them to determine their authenticity. The app connects to the back end, where the uploaded image is passed to cloud-based storage and then analyzed by the AI-based deepfake detection algorithm. After a successful upload, the app waits for feedback on the image's status: approved or flagged.

4.2 Firebase Storage

Firebase Storage is the image repository that holds all images uploaded from the mobile application. When a user uploads an image, it goes straight to Firebase Storage for further processing. Besides storing images, Firebase Storage provides efficient access and communication between other components of the system, such as the cloud function and the admin interface.

4.3 Cloud Function: AI Deepfake Detection Algorithm (CNN Xception)

The detection capability of the system depends on a cloud function that runs the AI deepfake detection algorithm using the CNN Xception model. Once an image is uploaded, the cloud function evaluates it with the trained deep learning model, which has been trained on a large dataset of authentic and deepfake images to identify characteristic manipulation details. If the model deems the image suspicious or a possible deepfake, the image is sent back to Firebase Storage and flagged for administrator review. If the image passes the test, no disruption occurs.

4.4 Flagging Mechanism and Feedback Loop

The flagged image is stored back in Firebase Storage with a label specifying that it needs further review via the admin interface. Such images are subjected to human scrutiny before any action is taken, so that false positives do not affect the user's experience.

4.5 Admin Interface

The admin component is essential for including human oversight in the detection workflow. Suspicious images that the system flags pass through the admin interface, where administrators review them and decide whether each image is valid. Approved images are allowed to continue through the platform, while images judged to be deepfakes are blocked from upload or sharing. This enhances the detection ability of the system because feedback is available on every decision taken. Real-world cases help in learning and refining the detection parameters, thereby enhancing performance.
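
The review workflow and its feedback loop can be sketched as a small in-memory queue. This is an illustration only: `ReviewQueue`, its methods, and the image identifiers are hypothetical, and the described system keeps this state in Firebase rather than in a Python object.

```python
# Sketch of the approve/reject workflow with a feedback log.
# ReviewQueue and its methods are hypothetical names, not the system's API.

class ReviewQueue:
    def __init__(self):
        self.pending = {}    # image_id -> model score awaiting review
        self.feedback = []   # (image_id, model score, admin decision)

    def flag(self, image_id, score):
        """Hold a suspicious image back from sharing until it is reviewed."""
        self.pending[image_id] = score

    def review(self, image_id, is_deepfake):
        """Record the admin decision and return the final status."""
        score = self.pending.pop(image_id)
        decision = "rejected" if is_deepfake else "approved"
        self.feedback.append((image_id, score, decision))
        return decision


queue = ReviewQueue()
queue.flag("img-001", 0.91)
queue.flag("img-002", 0.58)
first = queue.review("img-001", is_deepfake=True)
second = queue.review("img-002", is_deepfake=False)
```

In this example `img-002` stands for a false positive: the admin approves it, and the logged decision is the kind of record that could later be used to refine the detection parameters.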

5 IMPLEMENTATION

Building the AI-based deepfake detection system involves multiple connected components that together provide image detection while remaining attractive and trustworthy to users. The implementation spans cross-platform app development, cloud storage, secure user management, and model-based detection capable of real-time analysis.

5.1 Application Implementation

The application is built with Flutter, which allows it to be developed and run on mobile as well as web from a single codebase. A consistent look and feel can thus be achieved without maintaining different versions. Flutter offers features such as hot reload, which visualizes changes in real time and speeds up both problem diagnosis and user interface design. The app offers easy navigation for uploading images and viewing flagged content, together with an admin section in which the administrator tracks and manages flagged content and maintains user accounts, restricting access to sensitive areas of the application while keeping performance scalable. The admin interface also enables moderators to view flagged uploads along with the upload time and the flags applied, and to approve or reject submissions with ease. Future plans include embedding an analytics dashboard to track trends in flagged content and user interactions, which will help improve the system further.

5.2 Dataset Collection and Preprocessing

The success of a deepfake detection system relies heavily on curating a good dataset. The training data were therefore balanced between real images and artificially manipulated images obtained from open datasets or private repositories. Preprocessing improves data quality and model performance: all images are resized to a standard dimension for uniformity, and pixel values are normalized to a common range. Data augmentation includes rotation, flipping, and color adjustment to help the model generalize well and avoid overfitting. Splitting the data into training, validation, and testing subsets enables effective learning and validates performance on new data.
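
The pixel normalization mentioned above can be illustrated with a short sketch. The target range is not stated in the text, so both [0, 1] and [-1, 1] are shown as assumptions; `normalize_pixels` is a hypothetical helper operating on a nested list of 8-bit values.

```python
# Scale 8-bit pixel values into a fixed range before training.
# normalize_pixels is a hypothetical helper; the target range is an assumption.

def normalize_pixels(image, low=0.0, high=1.0):
    """Linearly map pixel values from [0, 255] to [low, high]."""
    span = high - low
    return [[low + (pixel / 255.0) * span for pixel in row] for row in image]


raw = [[0, 255],
       [51, 204]]
unit_range = normalize_pixels(raw)               # values in [0, 1]
signed_range = normalize_pixels(raw, -1.0, 1.0)  # values in [-1, 1]
```

Whichever range is chosen, the same mapping has to be applied at inference time so that uploaded images match what the model saw during training.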

5.3 CNN Xception Model Implementation

This deepfake detection model is based on the Xception architecture, a CNN optimized for image classification. The algorithm is described below:

Algorithm 1 Steps for CNN Xception Model

Step 1: Input Layer: Accept an input image of a predefined size (e.g., 299x299 pixels for Xception).

Step 2: Initial Convolutional Layers: Apply a standard convolution operation followed by batch normalization and an activation function (ReLU). Use a 2D convolution layer with a kernel size (e.g., 3x3) and a stride (e.g., 2) to down-sample the input.

Step 3: Depthwise Separable Convolution Blocks: For each block:

a. Depthwise Convolution: Apply a depthwise convolution to each channel separately.

b. Pointwise Convolution: Follow it with a pointwise convolution (1x1) to mix the output channels.

c. Activation: Apply batch normalization and a nonlinear activation function (usually ReLU).

Step 4: Residual Connections: After each block, add a residual connection that skips the block and adds the input to the output, helping gradient flow.

Step 5: Pooling Layers: Use global average pooling at the end of the convolutional blocks to reduce dimensionality and prevent overfitting.

Step 6: Fully Connected Layer: Flatten the output from the pooling layer and connect it to a dense layer. Use a softmax activation function for multi-class classification or a sigmoid activation for binary classification.

Step 7: Output Layer: Output probabilities for each class (real vs. deepfake).
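
To make Steps 2 and 3 more concrete, the sketch below compares the number of weights in a standard convolution with a depthwise separable one, and computes the spatial size after an unpadded strided convolution. Biases are ignored, the function names are hypothetical, and the 128-to-256 channel example is an assumption chosen for illustration rather than a layer taken from the trained model.

```python
# Parameter counts for a standard vs. a depthwise separable convolution
# (biases ignored), plus the spatial size after an unpadded strided conv.

def standard_conv_params(kernel, c_in, c_out):
    return kernel * kernel * c_in * c_out


def separable_conv_params(kernel, c_in, c_out):
    depthwise = kernel * kernel * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out             # 1x1 convolution mixing channels
    return depthwise + pointwise


def conv_output_size(size, kernel, stride):
    """Output width/height of a convolution without padding."""
    return (size - kernel) // stride + 1


standard = standard_conv_params(3, 128, 256)
separable = separable_conv_params(3, 128, 256)
first_layer_size = conv_output_size(299, 3, 2)
```

With these illustrative channel counts the separable layer needs 33,920 weights against 294,912 for the standard one, roughly 8.7 times fewer, and a 299x299 input shrinks to 149x149 after a 3x3, stride-2 convolution without padding.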


5.4 Model Training

The dataset was split to optimize the model's learning and performance assessment: 70% of the data is used to train the model, 20% for validation, and 10% for testing. This allows the model to learn from a substantial dataset while validating its generalization capability on data it has not seen before. Hyperparameter tuning during training uses the validation set to fine-tune parameters such as learning rate and batch size, while early stopping and dropout are applied to avoid overfitting. This training structure helps the model pick up patterns characteristic of manipulated images.
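
A 70/20/10 split of this kind can be sketched as follows. The function name, the fixed seed, and the placeholder file names are assumptions for illustration; integer percentages are used so that the subset sizes do not depend on floating-point rounding.

```python
import random


def split_dataset(items, train_pct=70, val_pct=20, seed=42):
    """Shuffle items and split them into train/validation/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * train_pct // 100
    n_val = len(shuffled) * val_pct // 100
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


# Hypothetical file names standing in for the image dataset.
files = [f"img_{i:04d}.png" for i in range(1000)]
train_set, val_set, test_set = split_dataset(files)
```

Shuffling before slicing keeps real and manipulated images mixed across the three subsets, and the fixed seed makes the split reproducible between training runs.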

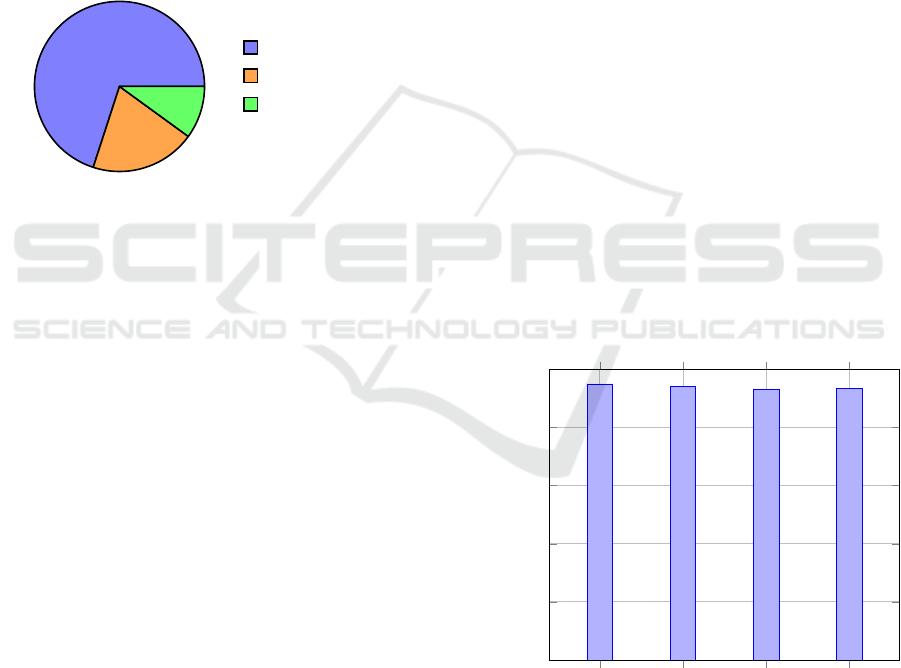

70%

20%

10%

Training Data

Validation Data

Testing Data

Figure 3: Dataset Split for Model Training

5.5 Integration of the Trained Model

After training, the Xception model is integrated into the Flutter application to enable deepfake detection in real time. TensorFlow Lite optimizes the model so that it can run efficiently on devices with limited processing power. As soon as a user uploads an image, the model processes it and responds immediately by labelling the content as real or fake; this builds user trust and lets users verify the authenticity of content. Platform channels in Flutter let the model communicate with the app interface, so that integration is seamless.

5.6 Performance Testing and Evaluation

After integration, the model is tested for accuracy, precision, recall, and F1 score in distinguishing real images from manipulated ones. The application is tested under demanding conditions, such as different image resolutions and network speeds, to assess its reliability. User feedback collected during this development phase is used to enhance interface usability and pinpoint areas needing improvement. This phase aimed to balance detection accuracy with speed to ensure a robust, user-friendly experience responsive to real requirements.

6 RESULTS AND DISCUSSION

The performance of the proposed deepfake detection system is analyzed using several key metrics, including accuracy, efficiency, and user experience.

6.1 Model Performance

The CNN Xception model was tested on a dedicated

dataset with the following results:

• Accuracy: 95%, indicating high reliability in discriminating real from manipulated images.

• Precision: 94%, showing that the model identifies fake images with few false positives.

• Recall: 93%, indicating that the model detects most fake content.

• F1 Score: 93.5%, reflecting a good balance in correctly classifying both real and fake images.

These results indicate that the model is suitable for real-time deepfake detection, since it has learned to discern well between original and fake images.
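
For reference, the metrics above follow from confusion-matrix counts as sketched below, treating "fake" as the positive class. The function names are illustrative, and the only numbers used are the reported precision and recall, which serve as a consistency check on the reported F1 score.

```python
# Metrics from confusion-matrix counts, treating "fake" as the positive class.
# tp/fp/tn/fn are true/false positives and negatives.

def precision(tp, fp):
    return tp / (tp + fp)


def recall(tp, fn):
    return tp / (tp + fn)


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


def accuracy(tp, tn, fp, fn):
    return (tp + tn) / (tp + tn + fp + fn)


# Consistency check on the reported precision and recall.
reported_f1 = f1_score(0.94, 0.93)
```

Plugging in the reported precision (0.94) and recall (0.93) gives an F1 of about 0.935, which agrees with the 93.5% stated above.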

Figure 4: Performance Metrics of the CNN Xception Model (bar chart of Percentage (%) by metric: Accuracy 95, Precision 94, Recall 93, F1 Score 93.5)

6.2 Real-Time Detection and User Experience

On mobile devices with TensorFlow Lite, the system averaged a latency of 1.5 seconds per image, which translates to nearly instant feedback for users regarding the authenticity of their images. User responses indicated satisfaction with the application's responsiveness and navigability, with special appreciation for the intuitive design of the upload and admin review interfaces.

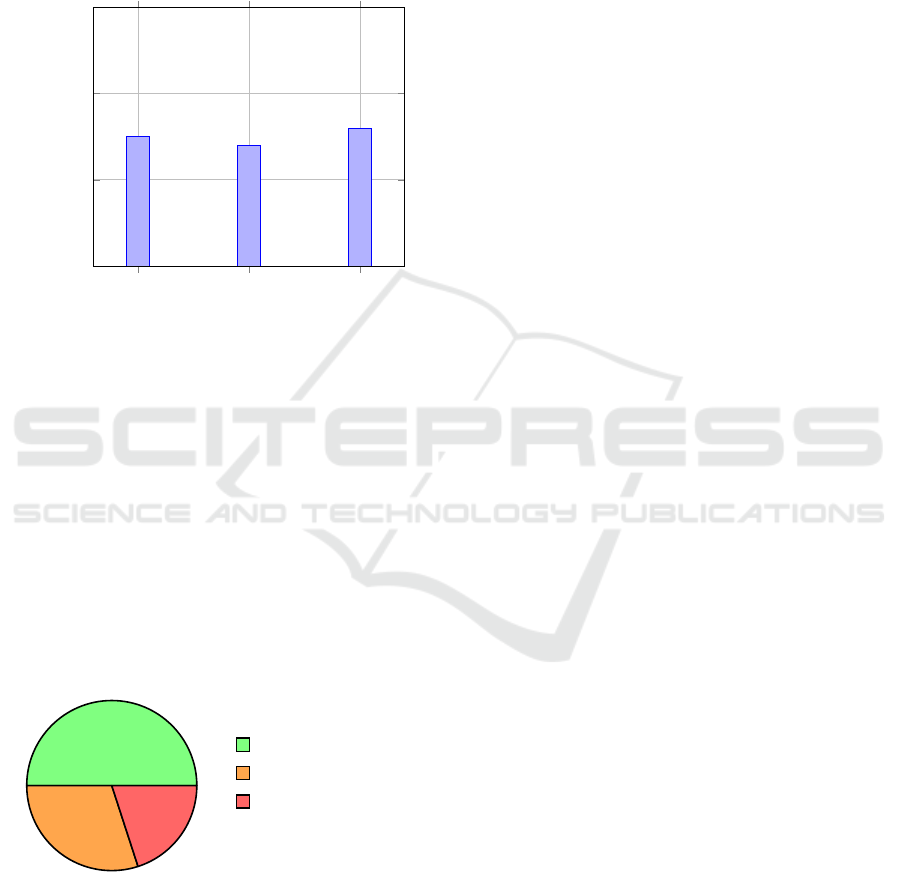

Test 1 Test 2 Test 3

0

1

2

3

1.5

1.4

1.6

Test Number

Latency (seconds)

Average Latency for Image Processing

Figure 5: Average Latency for Image Processing on Mobile

Devices

6.3 Admin Interface and Content Moderation

The admin interface was designed for efficient review of flagged content, with all decisions logged in Firebase for traceability and transparency. In testing, administrators reported a typical review time of about 2 seconds per flagged image, benefiting from the streamlined moderation process. This setup enabled quick decision-making, supported by the information relevant to each flagged image.

Figure 6: Admin Review Time Distribution for Flagged Images (less than 2 sec: 50%, 2-4 sec: 30%, greater than 4 sec: 20%)

6.4 Practical Implications

Overall, the system demonstrated high accuracy and responded quickly, meeting the essential requirements for real-time mobile applications. Periodic updates to the datasets and model recalibration will be necessary to keep pace with emerging deepfake technologies; nevertheless, this implementation provides a solid foundation for effectively detecting image manipulation in real time.

7 FUTURE DIRECTION

The system has considerable potential for development. Future improvement may be achieved by training on larger and more diverse datasets or by applying transfer learning to achieve higher accuracy. Optimizing the real-time processes may reduce latency and further improve the user experience. Blockchain for image provenance verification and AI for content moderation can expand its applicability. Crowdsourced validation mechanisms would allow user feedback to drive continued performance improvement, while applications in journalism, social media, and digital forensics can make the system an effective tool for fighting disinformation. Interdisciplinary cooperation will also contribute to the development of this system and facilitate explainable AI to earn the trust of its users.

8 CONCLUSION

Altogether, the developed AI deepfake detection system is a successful and practical approach to detecting manipulated images in real time. Its balanced performance of 95% accuracy, 94% precision, and 93% recall provides a reliable solution for most content moderation tasks. In addition to these results, user-friendly interfaces with real-time feedback further support user experience and engagement. Because this system lays a basis for responding to new advances in technology and deepfake techniques, future work should continuously improve the model, enhance the datasets, and extend its application to other fields. This work indicates the need to develop more effective counter-measures against misinformation that undermines the integrity of digital content today.

REFERENCES

Abdullah, S. M., Cheruvu, A., Kanchi, S., Chung, T., Gao, P., Jadliwala, M., and Viswanath, B. (2024). An analysis of recent advances in deepfake image detection in an evolving threat landscape. In 2024 IEEE Symposium on Security and Privacy (SP), pages 91–109.

Ivanov, N. S., Arzhskov, A. V., and Ivanenko, V. G. (2020). Combining deep learning and super-resolution algorithms for deep fake detection. In 2020 IEEE Conference of Russian Young Researchers in Electrical and Electronic Engineering (EIConRus), pages 326–328.

Kim, E. and Cho, S. (2021). Exposing fake faces through deep neural networks combining content and trace feature extractors. IEEE Access, 9:123493–123503.

Kuznetsov, A. (2020). On deep learning approach in remote sensing data forgery detection. In 2020 International Conference on Information Technology and Nanotechnology (ITNT), pages 1–4.

Raghavendra, R., Raja, K. B., Venkatesh, S., and Busch, C. (2017). Transferable deep-CNN features for detecting digital and print-scanned morphed face images. In 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pages 1822–1830.

Rössler, A., Cozzolino, D., Verdoliva, L., Riess, C., Thies, J., and Niessner, M. (2019). FaceForensics++: Learning to detect manipulated facial images. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pages 1–11.

Scherhag, U., Debiasi, L., Rathgeb, C., Busch, C., and Uhl, A. (2019a). Detection of face morphing attacks based on PRNU analysis. IEEE Transactions on Biometrics, Behavior, and Identity Science, 1(4):302–317.

Scherhag, U., Rathgeb, C., Merkle, J., Breithaupt, R., and Busch, C. (2019b). Face recognition systems under morphing attacks: A survey. IEEE Access, 7:23012–23026.

ul ain, Q., Nida, N., Irtaza, A., and Ilyas, N. (2021). Forged face detection using ELA and deep learning techniques. In 2021 International Bhurban Conference on Applied Sciences and Technologies (IBCAST), pages 271–275.

Zhang, L.-B., Peng, F., and Long, M. (2018). Face morphing detection using Fourier spectrum of sensor pattern noise. In 2018 IEEE International Conference on Multimedia and Expo (ICME), pages 1–6.
