Interpretability in AI for Early Lung Cancer Diagnosis: Fostering Confidence in Healthcare

Sukanya Walishetti¹ᵃ, Subhasareddy Khot¹ᵇ, Shreya Marihal¹ᶜ, Prathmesh Kittur¹ᵈ, Shantala Giraddi²ᵉ and Prema T. Akkasaligar¹ᶠ

¹ Department of Computer Science and Engineering, KLE Technological University’s Dr. M. S. Sheshgiri College of Engineering and Technology, Belagavi, Karnataka, India

² KLE Technological University’s B.V.B. College of Engineering and Technology, Hubballi, Karnataka, India

Keywords:

Explainable AI (XAI), Convolutional Neural Networks (CNN), Medical Imaging, Grad-CAM, ResNet-18, MobileNetV4, Chest X-Ray Analysis.

Abstract:

Lung cancer represents a significant cause of death, making early detection essential for better survival rates.

Lung nodules, which are small tissue masses in the lungs, can be initial indicators of lung cancer but are often

difficult to detect in chest X-rays due to their subtle appearance and potential overlap with normal anatomical

features. Analyzing these images manually is both error-prone and inefficient, often leading to discrepancies.

This research integrates convolutional neural networks (CNNs), specifically ResNet-18 and MobileNetV4, with explainable AI techniques such as Grad-CAM to overcome these challenges. ResNet-18 achieves a validation accuracy of 90.36% in nodule classification, while the lighter-weight MobileNetV4 reaches 74%, highlighting the potential of deep learning in this area. Grad-CAM provides interpretability by visually highlighting the regions of chest X-rays that influence the model’s predictions. This transparency is essential for gaining the trust of medical professionals, as it addresses the clinical need for accountability and supports more informed diagnostic decisions.

1 INTRODUCTION

Lung cancer is one of the primary causes of cancer death, and the number of deaths related to lung cancer continues to increase. In the majority of cases, lung cancer is detected at a critical stage, when treatment options are very limited. Lung cancer is the leading cause of cancer-related deaths globally, accounting for 1.8 million deaths in 2020 (18% of all cancer-related deaths). Early detection significantly improves outcomes, with localized cases achieving a five-year survival rate of 61.2%. In Malaysia, lung cancer constitutes 10% of all cancer cases but has a notably low five-year survival rate of 9.0% (95% CI: 8.4–9.7) (Sachithanandan et al., 2024). Low-dose computed tomography (LDCT) screening reduces lung cancer mortality by 20–61%, yet its high cost and limited accessibility hinder widespread adoption.

a https://orcid.org/0009-0009-5394-8181

b https://orcid.org/0009-0003-4366-6718

c https://orcid.org/0009-0007-0295-4931

d https://orcid.org/0009-0002-8257-0172

e https://orcid.org/0000-0003-3929-3819

f https://orcid.org/0000-0003-2214-9389

Timely detection of lung cancer is critically important because it increases survival rates. However, confident diagnostic decisions cannot always be made, because the results obtained from X-rays may sometimes be inaccurate and further diagnostic procedures can be invasive. Machine learning has created major opportunities to detect lung cancer at an early stage from medical images and patient data. AI deployed in the healthcare sector must not only produce accurate results but also explain how it reached its conclusions. In recent years, AI applied to medical imaging, with the assistance of deep learning techniques, has given significant results in detecting lung cancer at an early stage. It is useful for detecting features that may be difficult for human radiologists to analyze. However, AI systems must offer transparency in their decision-making processes as well as high accuracy. This clarity is essential for building trust among healthcare professionals, as it ensures that AI-driven conclusions can be understood and confidently integrated into clinical practice.

Walishetti, S., Khot, S., Marihal, S., Kittur, P., Giraddi, S. and Akkasaligar, P. T.

Interpretability in AI for Early Lung Cancer Diagnosis: Fostering Confidence in Healthcare.

DOI: 10.5220/0013610300004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 3, pages 143-151

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

143

Deep learning methods, especially convolutional

neural networks (CNNs), are among the most promi-

nent solutions for early lung cancer detection. These

techniques are commonly applied to medical imaging

modalities like chest X-rays and CT scans, achieving

notable accuracy in identifying lung nodules. Ad-

vanced CNN variants, such as ResNet-18, have been

particularly effective in analyzing medical images

for tumor detection. Some models also incorporate

patient-specific data, including medical history

and genetic markers, to improve the precision of predictions. Early detection frameworks are designed to pinpoint minute changes in lung tissue that signify the early stages of cancer, potentially leading to better patient prognosis.

A 2024 study (Divya et al., 2024) underscored the

high detection performance of CNN models in cate-

gorizing lung cancer cases. However, these systems

face significant obstacles, such as their reliance on

extensive computational resources and the availabil-

ity of large, annotated datasets for effective scaling.

The challenges in early lung cancer detection with AI

include the need for significant computational power,

large labeled datasets, and handling data imbalance.

Moreover, issues like limited model generalization,

interpretability, and ethical concerns related to patient

data privacy make it difficult to implement these

systems effectively in clinical practice.

The present paper focuses on developing an

accurate AI model for early lung cancer detection,

integrating explainable AI techniques to ensure

transparency. The model aims to improve clinical

decision-making by providing interpretable insights

for healthcare professionals, boosting patient con-

fidence. The model’s effectiveness is assessed in

practical scenarios to ensure its dependability and ef-

ficiency in clinical settings, enhancing early detection

and treatment outcomes.

The structure of this paper is as follows. Section

2 presents a concise overview of recent research in

the field. Section 3 outlines the problem statement,

background information, and the proposed method-

ology. Section 4 details the implementation process,

followed by the outcomes and an analysis of the re-

sults. Lastly, Section 5 wraps up the paper.

2 LITERATURE SURVEY

Advancements in imaging technologies and machine

learning have revolutionized lung cancer detection.

The survey delves into a variety of innovative

methodologies proposed by researchers for early

detection, classification, and diagnosis of lung cancer.

It highlights the strengths of these computational

models while addressing the challenges faced in

achieving widespread clinical application.

(Praveena et al., 2022) discussed efficient MobileNetV2-based lung cancer detection models for mobile devices. The approach works efficiently on a small dataset, but it must be evaluated on diverse datasets to ensure clinical reliability. (Elnakib et al., 2020) reported that CNN models using architectures such as AlexNet demonstrate high accuracy in early detection but require significant computational resources, limiting their real-world application. (Wulan et al., 2021) reported that probabilistic neural networks achieve notable accuracy on X-rays but may misclassify poor-quality images.

(Ingle et al., 2021) reported that AdaBoost-based models demonstrate strong accuracy in predicting lung cancer types, but their performance can be affected by dataset imbalance. (Maalem et al., 2022) showed that hybrid models (CNN + Faster R-CNN) outperform traditional methods but demand high computational resources, hindering their use in under-resourced settings. (Mukherjee and Bohra, 2020) found that CNN models minimize human intervention in CT scan-based lung cancer detection, but hardware requirements hinder real-time applications. (Thallam et al., 2020) showed that combining SVM, random forest, and ANN outperforms single models, although handling noisy data remains a limitation.

(Aharonu and Kumar, 2023) stated that CAD systems using neural networks demonstrate high accuracy, but challenges remain with noisy data. (Thaseen et al., 2022) reported that artificial neural networks (ANNs) combined with segmentation and noise reduction show high early-detection accuracy. (Prasad et al., 2023) reported that EfficientNet B3 models effectively classify lung cancer on CT scans, but broader generalization to varied datasets remains necessary. (Ravi et al., 2023) found that AlexNet combined with SVM achieves high sensitivity but requires large annotated datasets for general applicability. (Kalaivani et al., 2020) reported that CNN-based image processing achieves strong accuracy in lung cancer detection, demonstrating significant potential for clinical use.

INCOFT 2025 - International Conference on Futuristic Technology


In conclusion, most studies focus on predictive accuracy, and only a few have explored explainable artificial intelligence approaches. Few methods localize the specific nodule regions behind a prediction, which is imperative for gaining the confidence of medical practitioners. This research aims to close these gaps using Grad-CAM and other techniques that not only provide predictions but also expose the inner workings of the model, increasing trust in lung nodule detection.

3 PROPOSED METHODOLOGY

Lung cancer is one of the deadliest diseases, and early detection is vital for improving survival rates. Chest X-rays are widely used for screening, but manual analysis is complex and often leads to diagnostic inaccuracies. Deep learning models such as ResNet-18 and MobileNetV4 show promise for detecting nodules but face trust issues because of their lack of transparency. Explainable AI techniques such as Grad-CAM address this issue by providing visual explanations of the model’s predictions. Grad-CAM highlights the regions of the X-ray that drive a prediction, improving interpretability and fostering trust among medical professionals.

Since lung cancer is a major cause of death, early detection is crucial. The primary method for detecting lung cancer is X-ray imaging; however, it is often insufficient for identifying tumors, because cancerous tissues are very small in the early stages and difficult to detect. The goal of this study is to create a model that enhances early lung cancer detection using deep learning techniques and Grad-CAM, which highlights the image regions corresponding to the nodule, thereby increasing the confidence of the medical community. The objectives of this study are as follows:

– To develop a deep learning model for early lung cancer detection

– To implement explainable AI techniques to identify minute cancerous tissues at an early stage

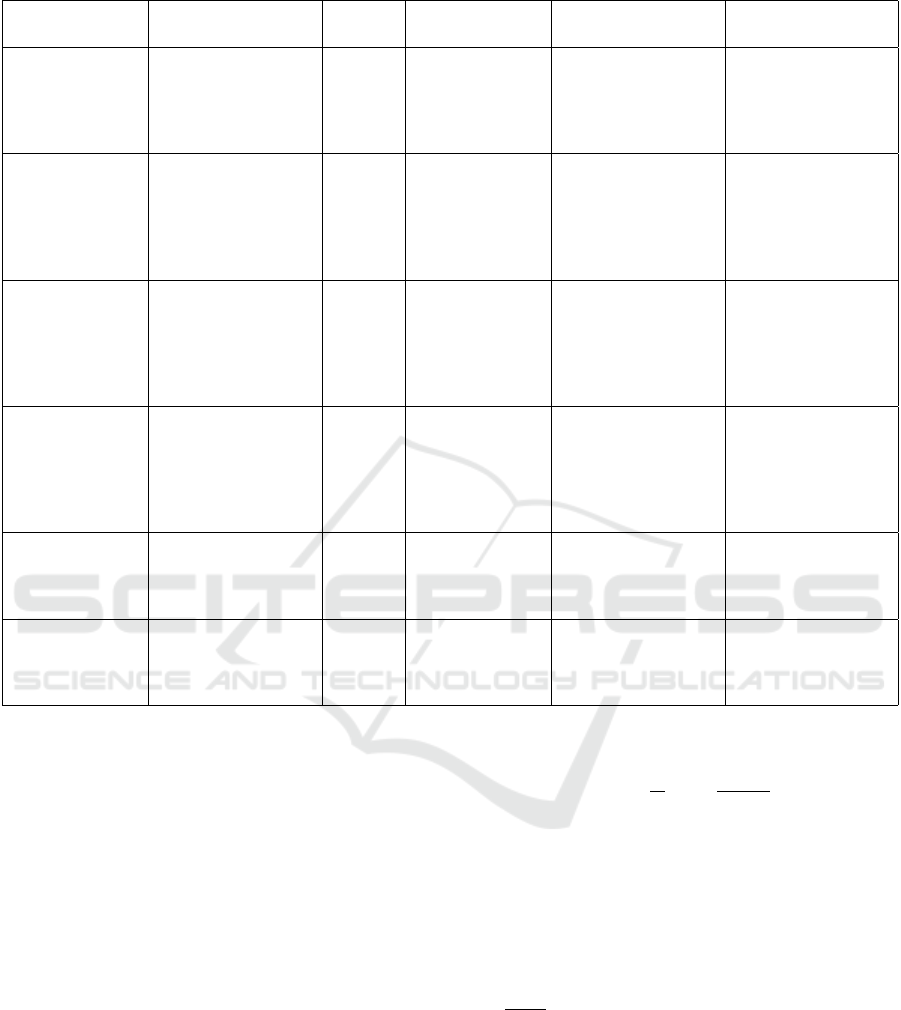

The proposed system performs automated lung cancer classification. Figure 1 shows the block diagram of the proposed methodology. The X-ray images are sourced from the JSRT dataset, which includes chest X-ray images, their associated diagnoses, and the locations of lung nodules. The images are uploaded by the user through the interface and then undergo the transformations required to prepare them for further processing. The Japanese Society of Radiological Technology (JSRT) created this dataset to provide a standardized benchmark for developing and evaluating CAD systems for lung cancer detection. The dataset includes 154 images with nodules, together with the nodule locations, and 93 images without nodules. The original images were in .IMG format with a resolution of 2048×2048 pixels, encoded as 16-bit unsigned integers. These images were converted to the more usable PNG format using ImageJ (Schneider, 2012).
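The conversion above was done interactively in ImageJ. As a scripted alternative, the following minimal sketch assumes that each .IMG file is a headerless block of width × height big-endian 16-bit unsigned integers (the headerless layout and the byte order are our assumptions) and that a linear min-max rescale to 8 bits is acceptable; ImageJ's own conversion settings may differ. `raw16_to_uint8` is a hypothetical helper name.

```python
import struct


def raw16_to_uint8(data: bytes, width: int, height: int) -> list[list[int]]:
    """Decode headerless big-endian uint16 pixels and rescale them linearly to 0-255.

    Assumption: `data` holds exactly width * height big-endian 16-bit values
    with no file header (our reading of the JSRT .IMG layout).
    """
    n = width * height
    if len(data) != 2 * n:
        raise ValueError(f"expected {2 * n} bytes, got {len(data)}")
    values = struct.unpack(f">{n}H", data)  # '>' = big-endian, 'H' = unsigned 16-bit
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1  # guard against a constant image
    scaled = [round(255 * (v - lo) / span) for v in values]
    # Reshape the flat pixel list into `height` rows of `width` pixels.
    return [scaled[r * width:(r + 1) * width] for r in range(height)]


# Synthetic 2x2 example; a real JSRT file would use width = height = 2048.
demo = struct.pack(">4H", 0, 1000, 2000, 4095)
print(raw16_to_uint8(demo, 2, 2))  # [[0, 62], [125, 255]]
```

For a real file one would pass `open(path, "rb").read()` with width and height of 2048; at roughly four million pixels, a NumPy-based version would be much faster. Min-max scaling adapts to whatever value range the file actually uses.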

Preprocessing resizes the input X-ray images to 224×224 pixels and normalizes the pixel values. Standardizing the input keeps the images consistent across the system. The data augmentation module addresses the limited dataset size by expanding the original 247 X-ray images to a total of 10,000 samples. It applies geometric transformations such as flipping, rotation, and cropping. Augmentation introduces diversity into the dataset, improving the model’s ability to generalize and reducing the risk of overfitting. The module also ensures that the dataset is balanced between the two classes (nodule present and nodule absent), enabling the models to learn effectively from both categories.
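Expanding 247 images to 10,000 balanced samples implies a specific number of samples per original image. Assuming, as a hypothetical reading of "balanced", an equal 5,000 samples per class, the helper below (`augmentation_plan`, an illustrative name) spreads each class's quota over its original images as evenly as possible.

```python
def augmentation_plan(class_counts: dict[str, int], total: int) -> dict[str, tuple[int, int]]:
    """For each class, return (samples per original image, number of images
    that contribute one extra sample) so the class reaches total // n_classes."""
    per_class = total // len(class_counts)  # equal share per class (assumption)
    plan = {}
    for name, n_images in class_counts.items():
        base, extra = divmod(per_class, n_images)
        plan[name] = (base, extra)
    return plan


plan = augmentation_plan({"nodule": 154, "no_nodule": 93}, total=10_000)
print(plan)  # {'nodule': (32, 72), 'no_nodule': (53, 71)}
```

Under this assumption each nodule image yields 32 samples (72 of them yield 33), while each non-nodule image yields 53 (71 of them yield 54), so the smaller class is reused considerably more often.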

The training module is the core of the system: two deep learning models, ResNet-18 and MobileNetV4, are trained to classify chest X-rays into nodule-present and nodule-absent categories.

ResNet-18 is a deep convolutional neural network

renowned for its strong effectiveness in image

classification. It utilizes residual learning with skip

connections, which help train deeper networks by

alleviating the vanishing gradient issue. In this study,

a modified ResNet-18 model is used, whose architecture comprises multiple convolutional layers, batch normalization, and ReLU activation functions that support feature extraction and learning. To tackle class imbalance in the dataset, a weighted cross-entropy loss function is used, ensuring balanced learning from both classes. This method harnesses the strengths of the ResNet-18 architecture and adapts it for effective lung nodule detection.
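A minimal plain-Python sketch of class-weighted cross-entropy follows. The weighting scheme (inverse class frequency, n_samples / (n_classes × n_c)) and the use of the original JSRT counts (93 without nodules, 154 with) are assumptions, since the paper does not list its weights. Normalizing by the summed weights of the targets matches the documented behavior of PyTorch's `nn.CrossEntropyLoss` when it is given a `weight` tensor and mean reduction.

```python
import math


def balanced_class_weights(counts: list[int]) -> list[float]:
    """Inverse-frequency weights n_samples / (n_classes * n_c), one per class."""
    total, k = sum(counts), len(counts)
    return [total / (k * c) for c in counts]


def weighted_cross_entropy(logits: list[list[float]], targets: list[int],
                           weights: list[float]) -> float:
    """Class-weighted cross-entropy, normalised by the summed weights of the targets
    (the convention of PyTorch's nn.CrossEntropyLoss(weight=..., reduction="mean"))."""
    num = den = 0.0
    for z, y in zip(logits, targets):
        m = max(z)  # subtract the largest logit for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in z))
        nll = log_sum_exp - z[y]  # -log softmax(z)[y]
        num += weights[y] * nll
        den += weights[y]
    return num / den


# Class 0 = nodule absent (93 JSRT images), class 1 = nodule present (154).
w = balanced_class_weights([93, 154])
print([round(x, 3) for x in w])  # [1.328, 0.802]
print(weighted_cross_entropy([[2.0, 0.0], [2.0, 0.0]], [0, 1], w))
```

Errors on the rarer nodule-absent class therefore count about 1.66 times as much as errors on the nodule-present class.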

MobileNetV4 is a lightweight and efficient neural network architecture specifically designed for resource-constrained environments, providing a faster alternative to larger, more complex models. A custom MobileNetV4-inspired model was developed with a simpler architecture composed of convolutional layers, max-pooling, and dropout to reduce overfitting. To address class imbalance in the dataset, a weighted cross-entropy loss function is used, ensuring that the model learns effectively from both classes. This approach retains the efficiency of the MobileNetV4 design while tailoring it to the specific requirements of lung nodule detection.

Table 1: Comparative Analysis of Diagnostic Models for Chest X-ray Imaging.

| Methodology | Dataset Description | Year | Performance Parameter | Limitation | Gap Identified |
|---|---|---|---|---|---|
| DenseNet-121 (Wedisinghe and Fernando, 2024) | Dataset has 247 images of frontal chest X-ray. | 2024 | Training accuracy: 85%, Validation accuracy: 73%. | Early detection issues, lack of diversified datasets, and technical challenges in AI integration. | Challenges in public education, AI adoption, and follow-up processes. |
| Combined AI-assisted chest radiography and LDCT (Sachithanandan et al., 2024) | Dataset of 16,551 X-rays; 389 indeterminate pulmonary nodules (IPNs) detected (2.35% yield). | 2024 | Sensitivity: 96%, Specificity: 100%, High diagnostic accuracy: 96%. | Mixed attitudes towards AI, technical integration issues, poor follow-up on detected nodules. | Research on public education, follow-up strategies, and AI adoption needed. |
| CNN Model (Jose et al., 2024) | Dataset of 6034 balanced images (cancerous and non-cancerous cases). | 2024 | Accuracy: 96.4%, Sensitivity: 83.3%, Specificity: 91.7%, AUC: High ROC performance. | Overfitting risks; reliance on augmentation techniques like rotations and flips. | Limited clinical validation and interpretability issues. |
| CNN for Lung Cancer Classification (Divya et al., 2024) | Dataset of 16,000 images covering adenocarcinoma, large cell carcinoma, squamous cell carcinoma, and normal cases. | 2024 | Accuracy: 90%. | High data requirements; overreliance on X-rays limits generalizability. | Need for transfer learning techniques and healthcare integration. |
| Ante-hoc Concept Bottleneck Model (Rafferty et al., 2024) | Dataset of 2374 chest X-rays from the public MIMIC-CXR database. | 2024 | Accuracy: 97.1%, AUC: 0.9495. | Small dataset limits generalizability. | Validation on larger datasets needed. |
| CNN and ResNet-50 (Sekhar et al., 2024) | Kaggle dataset: 120 benign, 561 malignant, and 416 normal CT images. | 2024 | CNN: 95% accuracy, ResNet-50: 96%. | Limited to two architectures; requires broader experimentation. | Results lack generalization across datasets. |

The explainability module enhances system transparency using Grad-CAM. For each input X-ray image, Grad-CAM generates a heatmap that highlights the regions that most influenced the model’s prediction. This visual feedback allows medical professionals to check whether the model is focusing on clinically relevant areas, such as a nodule present in the lung, rather than on irrelevant features.

The first step computes the gradients of the target class score $x^c$ with respect to the feature maps $B_m$ of the final convolutional layer. The importance weight of each feature map is then obtained by averaging these gradients over all spatial positions, as given in Equation (1):

$$\alpha_m^c = \frac{1}{N} \sum_a \sum_b \frac{\partial x^c}{\partial B_{m,ab}} \qquad (1)$$

Here:

• $\alpha_m^c$ represents the importance weight of the m-th feature map for class c.

• $N$ represents the total number of pixels in the feature map.

• $\frac{\partial x^c}{\partial B_{m,ab}}$ is the gradient of the target score with respect to the activation $B_{m,ab}$ at spatial position (a, b).

Next, the class activation map (CAM) is generated as a weighted combination of the feature maps, as described in Equation (2):

$$L_{\mathrm{CAM}}^{ab} = \mathrm{ReLU}\left(\sum_m \alpha_m^c \, B_{m,ab}\right) \qquad (2)$$

The ReLU activation ensures that only positive contributions to the target class are retained, highlighting the regions of the input X-ray image that play a significant role in the model’s prediction. These regions form the heatmaps that provide interpretable visual feedback.

Figure 1: Proposed methodology for lung nodule detection
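To make Equations (1) and (2) concrete, the sketch below evaluates them on toy 2×2 feature maps in plain Python. It is an illustration, not the authors' implementation. In a real network the gradients ∂x^c/∂B_{m,ab} would be obtained by backpropagating the class score to the last convolutional layer, for example with PyTorch hooks. The function name `grad_cam` and the toy values are our own.

```python
def grad_cam(feature_maps, gradients):
    """Evaluate Equations (1) and (2) for one image.

    feature_maps[m][a][b] is the activation B_{m,ab};
    gradients[m][a][b] is dx^c / dB_{m,ab}.
    Returns (alphas, cam) with cam[a][b] = ReLU(sum_m alpha_m^c * B_{m,ab}).
    """
    # Equation (1): average each gradient map over its N pixels.
    alphas = []
    for grad in gradients:
        n_pixels = sum(len(row) for row in grad)
        alphas.append(sum(sum(row) for row in grad) / n_pixels)

    # Equation (2): weighted sum of the feature maps, then ReLU.
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for alpha, fmap in zip(alphas, feature_maps):
        for a in range(height):
            for b in range(width):
                cam[a][b] += alpha * fmap[a][b]
    cam = [[max(0.0, v) for v in row] for row in cam]
    return alphas, cam


# Toy example: map 0 supports the class (positive gradients), map 1 opposes it.
B = [[[1, 0], [0, 1]], [[0, 2], [2, 0]]]
dx_dB = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
print(grad_cam(B, dx_dB))  # ([1.0, -1.0], [[1.0, 0.0], [0.0, 1.0]])
```

The negative weight of map 1 drives its positions below zero, and the ReLU removes them, so only the regions that support the target class remain in the map.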

4 RESULTS AND DISCUSSIONS

The system is implemented in Python using the PyTorch framework. The models are trained in Google Colab’s GPU environment, using a high-performance NVIDIA GPU to accelerate training on the augmented dataset. Training uses mixed precision, enabled through torch.cuda.amp, to improve computational efficiency and reduce memory consumption.

Figure 2: Example Chest X-ray Image Without a Nodule

Figure 3: Example Chest X-ray Image Illustrating a Nodule

The study introduces a lung nodule detection system for chest X-ray images, developed in Python with the PyTorch framework. It is built on the JSRT dataset, which comprises 247 chest X-ray images (154 with nodules and 93 without nodules), each with a resolution of 2048×2048 pixels. The preprocessing phase resizes the images to 224×224 pixels and normalizes pixel values to meet the input specifications of the pre-trained models. To improve the models’ robustness and generalization, augmentation methods such as horizontal and vertical flips and random rotations of up to 15 degrees are employed. Figure 2 and Figure 3 show example images without and with nodules, respectively.
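The flips and rotations of up to 15 degrees listed above would normally come from an image library such as torchvision. The plain-Python sketch below shows what they do to the pixel grid without any dependencies, operating on a 2-D list. Nearest-neighbour sampling, zero fill for pixels rotated in from outside the image, and a flip probability of 0.5 are our assumptions, not settings reported in the paper.

```python
import math
import random


def hflip(img):
    """Mirror an image (list of pixel rows) left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror an image top to bottom."""
    return [row[:] for row in img[::-1]]


def rotate_nearest(img, angle_deg, fill=0):
    """Rotate counter-clockwise (as displayed) about the centre using
    nearest-neighbour sampling; pixels with no source get `fill`."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    t = math.radians(angle_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - cx, y - cy
            # Inverse mapping: locate the source pixel for output pixel (y, x).
            sx = round(cos_t * dx - sin_t * dy + cx)
            sy = round(sin_t * dx + cos_t * dy + cy)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def random_augment(img, rng, max_angle=15.0):
    """Random horizontal/vertical flips (p = 0.5 each) plus a rotation drawn
    uniformly from [-max_angle, max_angle] degrees."""
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    return rotate_nearest(img, rng.uniform(-max_angle, max_angle))


img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(rotate_nearest(img, 90))  # [[3, 6, 9], [2, 5, 8], [1, 4, 7]]
print(random_augment(img, random.Random(0)))
```

Each call to `random_augment` with a different random state yields a new variant, which is how a few hundred originals can be expanded into thousands of training samples.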

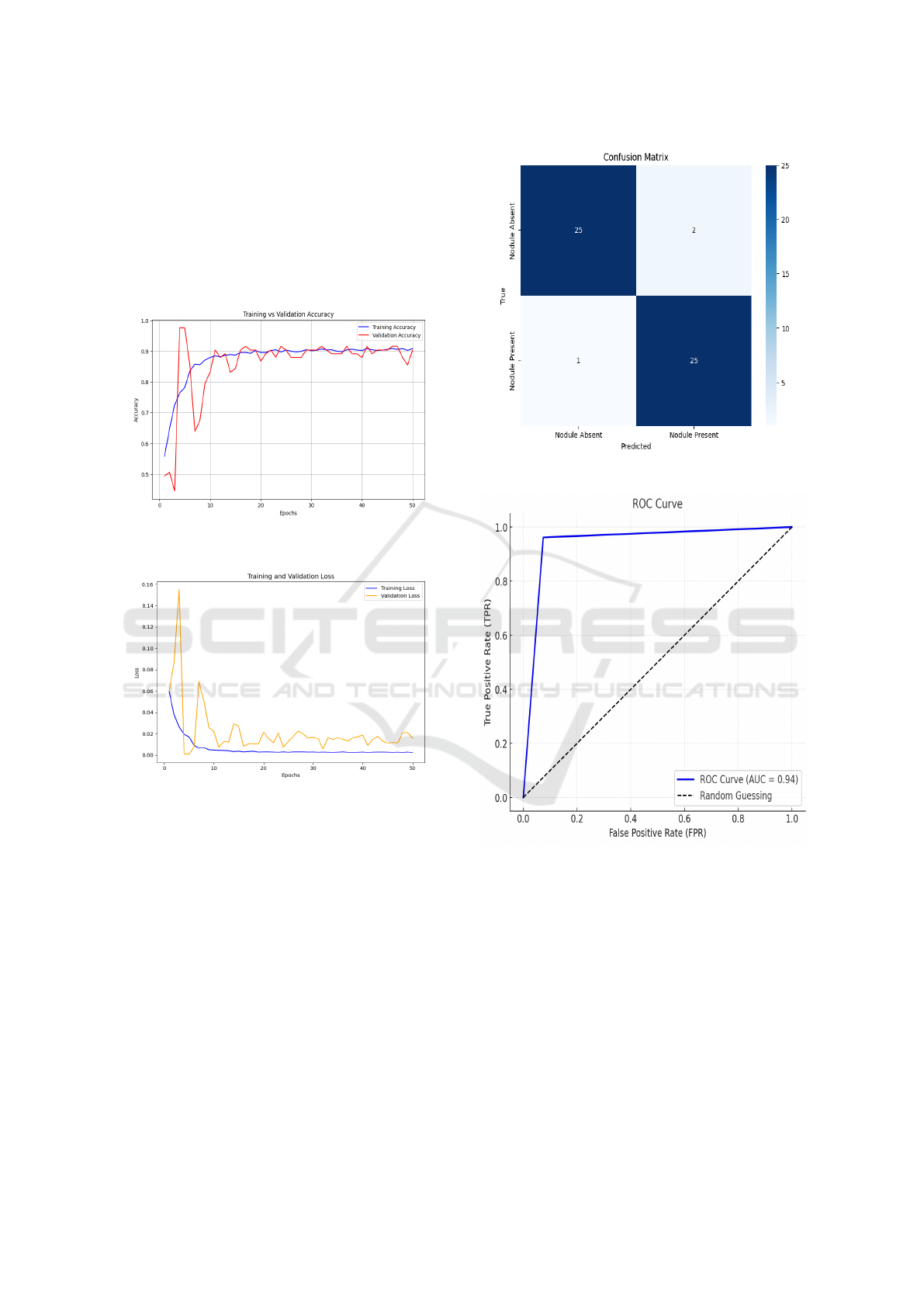

ResNet-18:

The ResNet-18 model, pretrained on ImageNet, is adapted by replacing its final fully connected layer to perform binary classification. Training uses SGD with a learning rate of 0.01, momentum of 0.9, and weight decay of 1e-4. A focal loss with parameters α = 0.25 and γ = 2.0 is used to handle class imbalance. The model is trained for 50 epochs on the augmented dataset of 10,000 X-ray images. As shown in Figure 4, the training accuracy reaches 90.84% and the validation accuracy 90.36%. These results suggest that data augmentation has a positive effect on model performance. Figure 5 shows the training and validation loss for ResNet-18: both decrease sharply in the initial epochs and stabilize thereafter. The close alignment of the curves indicates effective learning with minimal overfitting. Figure 6 presents the confusion matrix: the model correctly classifies 25 nodule-absent images as nodule absent and 25 nodule-present images as nodule present, with only 2 false positives and 1 false negative. This indicates high classification accuracy with few errors.
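The focal loss down-weights easy, confidently classified images so that training concentrates on hard ones. The sketch below is the standard binary formulation in plain Python. Treating 2.0 as the focusing exponent γ and α = 0.25 as the weight of the positive (nodule-present) class is our assumption about how the parameters were applied. `binary_focal_loss` is an illustrative name.

```python
import math


def binary_focal_loss(probs, targets, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean binary focal loss, FL = -alpha_t * (1 - p_t) ** gamma * log(p_t).

    probs[i]   : predicted probability that image i contains a nodule
    targets[i] : 1 = nodule present, 0 = nodule absent
    """
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)     # clip to keep log() finite
        p_t = p if y == 1 else 1.0 - p      # probability given to the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)


# An easy, confidently correct example contributes far less than a hard one.
print(binary_focal_loss([0.95], [1]), binary_focal_loss([0.30], [1]))
```

With γ = 0 and α = 0.5 the expression reduces to half the ordinary binary cross-entropy. The (1 − p_t)^γ factor is what suppresses the contribution of easy examples.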

Figure 4: Training vs. Validation Accuracy for ResNet-18

Figure 5: Training vs. Validation Loss for ResNet-18

Figure 7 shows the ROC curve for ResNet-18. The curve rises steeply, indicating strong separation between the positive and negative classes. The area under the curve (AUC) is 0.94, which indicates good classification ability.
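An AUC of 0.94 has a direct interpretation: it is the probability that the model scores a randomly chosen nodule-present image higher than a randomly chosen nodule-absent one. The sketch below computes AUC in exactly that pairwise way. `roc_auc` and the four toy scores are illustrative and are not the paper's data.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a randomly chosen positive (nodule present)
    is scored higher than a randomly chosen negative; ties count one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


print(roc_auc([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0]))  # 0.75
```

The pairwise loop is quadratic in the number of images. That is fine for a test set of a few dozen images, but sorting-based methods scale better.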

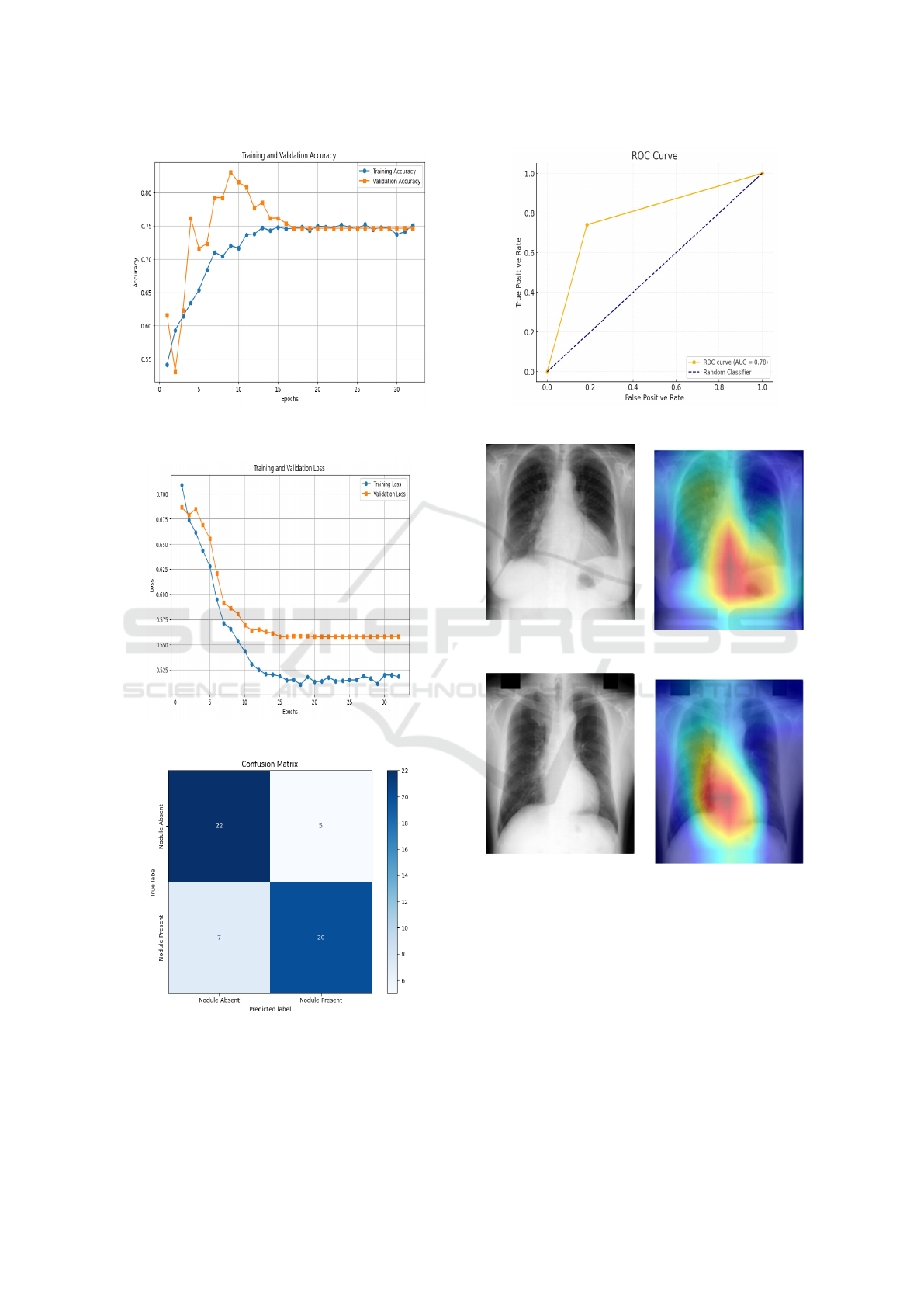

Figure 6: Confusion Matrix for ResNet-18

Figure 7: ROC Curve for ResNet-18

MobileNetV4: MobileNetV4 offers the advantage of faster inference and lower computational cost. It is trained using SGD with a learning rate of 0.001, momentum of 0.9, and a batch size of 8, together with a StepLR learning-rate scheduler that reduces the learning rate by a factor of 0.1 every 5 epochs. The loss function is cross-entropy with balanced class weights, and training runs for 50 epochs. As shown in Figure 8, the training accuracy is 75% and the validation accuracy is 74%. Figure 9 shows a steady decline in training and validation loss, which stabilizes after 20 epochs, indicating convergence with minimal overfitting. Figure 10 presents the confusion matrix: the model correctly classifies 22 nodule-absent images as nodule absent and 20 nodule-present images as nodule present, but it makes more misclassifications than ResNet-18, consistent with its lower accuracy.
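The learning-rate schedule described above can be written in closed form. The sketch below reproduces the rule that PyTorch's StepLR applies when `scheduler.step()` is called once per epoch. How often the authors stepped the scheduler is not stated, so this is an assumption, and `step_lr` is a hypothetical helper.

```python
def step_lr(base_lr, epoch, step_size=5, gamma=0.1):
    """Learning rate in (0-based) `epoch` under step decay:
    base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)


for epoch in (0, 4, 5, 10, 20, 49):
    print(f"epoch {epoch:2d}: lr = {step_lr(0.001, epoch):.0e}")
```

With a base rate of 0.001, the rate is 1e-7 by epoch 20 and about 1e-12 in the final epoch. From that point on the weights barely change, which is worth keeping in mind when reading the flat part of the loss curves.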

Figure 8: Training vs. Validation Accuracy for MobileNetV4

Figure 9: Training vs. Validation Loss for MobileNetV4

Figure 10: Confusion Matrix for MobileNetV4

Figure 11 shows the ROC curve, which plots the true positive rate (TPR) against the false positive rate (FPR) across the range of decision thresholds. The AUC is 0.78, meaning that the model performs better than a random classifier (AUC = 0.5). The curve indicates generally good, though imperfect, separation between true positives and false positives across thresholds.
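The ROC curve described above can be traced by sweeping the decision threshold over the model's scores and recording (FPR, TPR) at each step. The area under the resulting piecewise-linear curve is the AUC. The helpers and toy scores below are illustrative only.

```python
def roc_points(scores, labels):
    """(FPR, TPR) pairs from sweeping the decision threshold down through every
    distinct score; a sample is predicted positive when score >= threshold."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both classes")
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


def trapezoid_area(points):
    """Area under a piecewise-linear curve given as (x, y) pairs sorted by x."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


pts = roc_points([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0])
print(pts)  # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(trapezoid_area(pts))  # 0.75
```

On these toy scores the trapezoidal area equals the pairwise-ranking definition of AUC, as expected for an ROC curve built from every distinct threshold.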

Figure 11: ROC Curve for MobileNetV4

Figure 12: Lung Nodule Detection (a)

Figure 13: Grad-CAM (b)

Figure 14: Lung Nodule Detection (c)

Figure 15: Grad-CAM (d)

Table 2 compares the performance of the models. ResNet-18 achieves the highest accuracy, precision, recall, and F1 score, making it the more reliable model for detecting lung nodules. Both models exceed the 73% validation accuracy attained by DenseNet-121 in prior work (Wedisinghe and Fernando, 2024), although MobileNetV4’s training accuracy (75%) is below DenseNet-121’s 85%. The narrow gap between training and validation accuracy reported here (0.48 percentage points for ResNet-18 and 1 point for MobileNetV4) suggests that the models generalize effectively from the augmented dataset.

Grad-CAM is then applied to both ResNet-18 and MobileNetV4 to assess interpretability. Grad-CAM visualizes the areas of an input image that have the greatest influence on the model’s decision. In the generated heatmaps, high-attention areas (shown in red) indicate regions likely to be associated with lung nodules, while blue areas represent less relevant regions. This makes the model’s behavior easier to understand. Figure 13 and Figure 15 show Grad-CAM applied to chest X-rays, highlighting the regions that contribute most to the lung cancer predictions of the ResNet-18 and MobileNetV4 models. In these examples, both models focus on clinically significant regions, as shown by the red (high-attention) areas of the heatmaps. This interpretability helps medical professionals understand the models’ predictions and check that their focus aligns with relevant clinical features, supporting reliable and transparent diagnosis.
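To overlay a Grad-CAM map on an X-ray, the map is usually rescaled to [0, 1], resized from the last convolutional layer's grid (7×7 for ResNet-18 with a 224×224 input) to the image size, and passed through a colormap. The paper does not describe these steps, so the nearest-neighbour resize and the simple blue-to-red ramp below are simplifying assumptions. Common practice uses bilinear interpolation and a jet-style colormap. The helper names are illustrative.

```python
def normalize_cam(cam):
    """Min-max scale a CAM to [0, 1]; a constant map becomes all zeros."""
    flat = [v for row in cam for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0] * len(row) for row in cam]
    return [[(v - lo) / (hi - lo) for v in row] for row in cam]


def upsample_nearest(cam, out_h, out_w):
    """Nearest-neighbour resize, e.g. from a 7x7 ResNet-18 map to 224x224."""
    in_h, in_w = len(cam), len(cam[0])
    return [[cam[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def blue_to_red(v):
    """Map v in [0, 1] to (R, G, B): 0 -> pure blue (low), 1 -> pure red (high)."""
    return (round(255 * v), 0, round(255 * (1 - v)))


heat = upsample_nearest(normalize_cam([[0.0, 2.0], [1.0, 4.0]]), 4, 4)
print(heat[0], heat[3])  # [0.0, 0.0, 0.5, 0.5] [0.25, 0.25, 1.0, 1.0]
print(blue_to_red(heat[3][3]), blue_to_red(heat[0][0]))  # (255, 0, 0) (0, 0, 255)
```

Because the map is min-max normalized per image, "red" means relatively high attention within that image rather than an absolute level of evidence.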

Table 2: Performance Comparison of ResNet-18, MobileNet-V4 and DenseNet-121 Classifiers.

| Name of Classifier | ResNet-18 | MobileNet-V4 | DenseNet-121 (Wedisinghe and Fernando, 2024) |
|---|---|---|---|
| Training Accuracy | 90.84% | 75% | 85% |
| Validation Accuracy | 90.36% | 74% | 73% |
| Precision | 94.41% | 77.93% | - |
| Recall | 94.34% | 77.78% | - |
| F1 Score | 94.34% | 77.75% | - |
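Table 2 does not state how precision, recall, and F1 were averaged over the two classes. For ResNet-18, support-weighted averages over the Figure 6 confusion matrix (25 true negatives, 2 false positives, 1 false negative, 25 true positives) reproduce the reported 94.41%, 94.34%, and 94.34%, which suggests that this averaging was used. The sketch below shows the computation; `weighted_metrics` is a hypothetical helper. Under support weighting, the averaged recall always equals accuracy.

```python
def weighted_metrics(tn, fp, fn, tp):
    """Accuracy plus support-weighted precision, recall and F1 for a binary
    confusion matrix (class 0 = nodule absent, class 1 = nodule present)."""
    total = tn + fp + fn + tp
    # Per class: (correct, wrongly predicted as this class, missed, support).
    per_class = [
        (tn, fn, fp, tn + fp),  # class 0: the FNs of class 1 were predicted as 0
        (tp, fp, fn, tp + fn),  # class 1
    ]
    prec = rec = f1 = 0.0
    for correct, false_pos, false_neg, support in per_class:
        p = correct / (correct + false_pos) if correct + false_pos else 0.0
        r = correct / (correct + false_neg) if correct + false_neg else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        prec += p * support / total
        rec += r * support / total
        f1 += f * support / total
    return {"accuracy": (tn + tp) / total, "precision": prec, "recall": rec, "f1": f1}


m = weighted_metrics(tn=25, fp=2, fn=1, tp=25)
print({k: round(100 * v, 2) for k, v in m.items()})
# {'accuracy': 94.34, 'precision': 94.41, 'recall': 94.34, 'f1': 94.34}
```

These figures are computed over the 53 images in the Figure 6 confusion matrix, so they describe that evaluation set rather than the validation split behind the 90.36% accuracy.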

5 CONCLUSIONS

This paper addresses lung nodule detection in chest X-ray images. The problem is approached using deep learning models, ResNet-18 and MobileNetV4, trained on an augmented dataset of 10,000 images. ResNet-18 achieved a validation accuracy of 90.36%, while MobileNetV4 achieved 74%. ResNet-18 therefore outperformed MobileNetV4 in accuracy, making it more appropriate for accurate lung nodule detection. The use of Grad-CAM for model explainability makes the system’s predictions more transparent. Future work will focus on expanding the dataset, refining the models for real-time use, and integrating the system into clinical environments.

REFERENCES

Aharonu, M. and Kumar, R. L. (2023). Systematic Review of Deep Learning Techniques for Lung Cancer Detection. International Journal of Advanced Computer Science and Applications, 14(3).

Divya, N., Dhilip, P., Manish, S., and Abilash, I. (2024). Deep Learning Based Lung Cancer Prediction Using CNN. In 2024 International Conference on Signal Processing, Computation, Electronics, Power and Telecommunication (IConSCEPT), pages 1–4. IEEE.

Elnakib, A., Amer, H. M., and Abou-Chadi, F. E. (2020). Early Lung Cancer Detection Using Deep Learning Optimization.

Hatuwal, B. K. and Thapa, H. C. (2020). Lung cancer detection using convolutional neural network on histopathological images. Int. J. Comput. Trends Technol, 68(10):21–24.

Ingle, K., Chaskar, U., and Rathod, S. (2021). Lung Cancer Types Prediction Using Machine Learning Approach. In 2021 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), pages 01–06. IEEE.

Jose, P. S. H., Sagar, J. E., Abisheik, S., Nelson, R., Venkatesh, M., and Keerthana, B. (2024). Leveraging Convolutional Neural Networks and Multimodal Imaging Data for Accurate and Early Lung Cancer Screening. In 2024 International Conference on Inventive Computation Technologies (ICICT), pages 1258–1264. IEEE.

Kalaivani, N., Manimaran, N., Sophia, S., and Devi, D. (2020). Deep Learning Based Lung Cancer Detection and Classification. In IOP Conference Series: Materials Science and Engineering, volume 994, page 012026. IOP Publishing.

Maalem, S., Bouhamed, M. M., and Gasmi, M. (2022). A Deep-Based Compound Model for Lung Cancer Detection. In 2022 4th International Conference on Pattern Analysis and Intelligent Systems (PAIS), pages 1–4. IEEE.

Mukherjee, S. and Bohra, S. (2020). Lung Cancer Disease Diagnosis Using Machine Learning Approach. In 2020 3rd International Conference on Intelligent Sustainable Systems (ICISS), pages 207–211. IEEE.

Prasad, P. H. S., Daswanth, N. M. V. S., Kumar, C. V. S. P., Yeeramally, N., Mohan, V. M., and Satish, T. (2023). Detection Of Lung Cancer using VGG-16. In 2023 7th International Conference on Computing Methodologies and Communication (ICCMC), pages 860–865. IEEE.

Praveena, M., Ravi, A., Srikanth, T., Praveen, B. H., Krishna, B. S., and Mallik, A. S. (2022). Lung Cancer Detection Using Deep Learning Approach CNN. In 2022 7th International Conference on Communication and Electronics Systems (ICCES), pages 1418–1423. IEEE.

Rafferty, A., Ramaesh, R., and Rajan, A. (2024). Transparent and Clinically Interpretable AI for Lung Cancer Detection in Chest X-Rays. arXiv preprint arXiv:2403.19444.

Ravi, V., Acharya, V., and Alazab, M. (2023). A Multichannel EfficientNet Deep Learning-Based Stacking Ensemble Approach For Lung Disease Detection Using Chest X-Ray Images. Cluster Computing, 26(2):1181–1203.

Sachithanandan, A., Lockman, H., Azman, R. R., Tho, L., Ban, E., and Ramon, V. (2024). The Potential Role of Artificial Intelligence-Assisted Chest X-ray Imaging in Detecting Early-Stage Lung Cancer In The Community—A Proposed Algorithm For Lung Cancer Screening In Malaysia. The Medical Journal of Malaysia, 79(1):9–14.

Schneider, C. A., Rasband, W. S., and Eliceiri, K. W. (2012). NIH Image to ImageJ: 25 years of image analysis. Nature Methods, 9(7):671–675.

Sekhar, K. R., Tayaru, G., Chakravarthy, A. K., Gopiraju, B., Lakshmanarao, A., and Krishna, T. S. (2024). An Efficient Lung Cancer Detection Model using Convnets and Residual Neural Networks. In 2024 Fourth International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT), pages 1–5. IEEE.

Thallam, C., Peruboyina, A., Raju, S. S. T., and Sampath, N. (2020). Early Stage Lung Cancer Prediction Using Various Machine Learning Techniques. In 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), pages 1285–1292. IEEE.

Thaseen, M., UmaMaheswaran, S., Naik, D. A., Aware, M. S., Pundhir, P., and Pant, B. (2022). A Review of Using CNN Approach for Lung Cancer Detection through Machine Learning. In 2022 2nd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), pages 1236–1239. IEEE.

Wedisinghe, H. and Fernando, T. (2024). Explainable AI for Lung Cancer Detection: A Path to Confidence. In 2024 4th International Conference on Advanced Research in Computing (ICARC), pages 13–18. IEEE.

Wulan, T. D., Kurniastuti, I., and Nerisafitra, P. (2021). Lung Cancer Classification in X-Ray Images Using Probabilistic Neural Network. In 2021 International Conference on Computer Science, Information Technology, and Electrical Engineering (ICOMITEE), pages 35–39. IEEE.
