Enhancing COVID-19 Diagnosis with Deep Learning Models

DenseNet and ResNet on Medical Imaging Data

S. Chowdri¹, S. Shanthi¹, R. Dhanaeswaran¹, P. Giridar Prasad¹, K. Nirmala Devi¹ and A. Kavitha²

¹Dept. of Computer Science and Engineering, Kongu Engineering College, Erode, India

²Dept. of Computer Science and Engineering, Government College of Engineering (IRTT), Erode, India

Keywords: COVID-19 Diagnosis, Deep Learning, DenseNet, ResNet, CT Scan, Chest X-Ray, Image Classification, Accuracy.

Abstract: The COVID-19 pandemic has put immense pressure on health systems worldwide, which makes early and accurate diagnosis central to managing the disease. RT-PCR tests are the gold standard for diagnosis but suffer from limitations such as long processing times and occasional false negatives. Diagnostic imaging via chest X-rays and CT scans offers a rapid, non-invasive alternative for detecting COVID-19-induced lung abnormalities. This study evaluates several DenseNet configurations (121, 169, 201) and ResNet-152 for automated COVID-19 detection from chest X-rays and CT scans. DenseNet-201 performed best, reaching 96.9% accuracy on CT scans and 99.3% on X-rays when trained with the Adam optimizer and a batch size of 32. The results also show that the choice of optimizer and batch size has a substantial effect on accuracy. DenseNet-201's efficient gradient flow, feature reuse, and parameter utilization make it especially suitable for medical imaging applications with limited annotated datasets. Its robust feature-extraction capabilities make it a promising diagnostic aid that could enhance clinical workflows and accelerate COVID-19 diagnosis. This study underscores DenseNet-201's potential to improve patient outcomes and pandemic management through accurate, automated medical image analysis.

1 INTRODUCTION

COVID-19, caused by the SARS-CoV-2 virus, emerged suddenly at the end of 2019 and has since caused enormous damage to health, economies, and daily life worldwide. It is transmitted through respiratory droplets, aerosols, and contaminated surfaces. Symptoms range from very mild to severe and can include fever, cough, difficulty breathing, serious lung complications, organ failure, and, in extreme cases, death. Variants such as Delta and Omicron spread rapidly because of their high transmissibility and, in some cases, their ability to partially evade immunity. Even after substantial vaccine coverage had been reached, many people were still dealing with the lingering effects of COVID-19 in mid-2024. Convolutional neural networks (CNNs) have transformed medical imaging, offering strong capabilities for the detection, segmentation, and classification of medical images.

CNNs are of great help in diagnosing lung diseases such as lung cancer, tuberculosis, and pneumonia. During the COVID-19 pandemic, they became important tools for analyzing chest X-ray and computed tomography (CT) images. Well-known CNN architectures include ResNet, VGG, and Inception, among others, each with its own strengths. ResNet uses residual (skip) connections, which make it possible to train much deeper networks; VGG shows that a simple, uniform design can learn strong features; and Inception processes its input through several parallel convolutional branches whose feature maps are concatenated. DenseNet goes a step further: each layer receives the feature maps of all preceding layers as input and passes its own feature maps to all subsequent layers, which encourages feature reuse and improves gradient flow. This allows the architecture to combine low-level and high-level features, which helps in finding subtle abnormalities in medical images.

Chowdri, S., Shanthi, S., Dhanaeswaran, R., Giridar Prasad, P., Devi, K. N. and Kavidha, A.

Enhancing COVID-19 Diagnosis with Deep Learning Models DenseNet and ResNet on Medical Imaging Data.

DOI: 10.5220/0013595700004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 2, pages 506-513

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

The efficiency of DenseNet and its resistance to overfitting make it highly relevant to the high-dimensional data found in medical applications. Many recent works on COVID-19 diagnosis, tumor segmentation, skin lesion detection, and diabetic retinopathy classification have used this approach. Compared with ResNet and VGG models, DenseNet achieves high accuracy with fewer parameters, which reduces the risk of overfitting. This is especially valuable for accurate diagnosis in resource-limited settings. Given well-preprocessed and well-annotated datasets, such models could help radiologists obtain accurate and reproducible results with a substantially reduced workload. DenseNet's performance can be improved further through hyperparameter tuning and assessed with evaluation metrics such as accuracy and AUC-ROC. By bridging advanced AI techniques and practical healthcare applications, DenseNet has considerable potential to improve diagnostics and to support clinicians worldwide in combating COVID-19 and other diseases.

2 LITERATURE REVIEW

A study designed a computer-aided diagnosis (CAD) system that classifies chest X-rays into COVID-19 pneumonia, other pneumonia, and normal cases using a transfer-learning-based CNN, after preprocessing steps such as removal of the diaphragm region and histogram equalization (A. T et al., n.d.; Nirmala Devi et al., 2022). The model achieved an accuracy of 94.5% on a dataset of 8,474 images. Performance improved significantly with preprocessing, which shows how important image enhancement is for better COVID-19 detection (Heidari et al., 2020).

Another analysis of deep learning models for identifying COVID-19 from chest X-rays used a dataset of 5,000 images and four CNNs, ResNet18, ResNet50, SqueezeNet, and DenseNet-121, which achieved a sensitivity of 98% and a specificity of 90% after transfer learning. The heatmaps generated by the models precisely highlighted the infected lung regions and matched the annotations made by radiologists. The results are promising, but the study points out that larger datasets are needed for more reliable accuracy assessments (Minaee et al., 2020).

Another study presented ACoS, an Automatic COVID Screening system that classifies patients as normal, suspected, or infected with COVID-19 using radiomic texture descriptors extracted from chest X-rays. It follows a two-phase classification approach in which an ensemble of five supervised classifiers combines its predictions by majority voting. Validated on 258 images, it achieved an accuracy of 98.06% in the first phase (normal vs. abnormal) and 91.33% in the second phase (pneumonia vs. COVID-19). The results differed significantly from, and in some cases surpassed, other techniques currently used for COVID-19 detection (Chandra et al., 2021).

A recent study presented a deep convolutional neural network (CNN)-based approach for detecting COVID-19 in chest X-ray images. It trains DenseNet201, ResNet50V2, and InceptionV3 individually and then combines them through weighted-average ensembling. On a dataset of 538 COVID-19-positive and 468 COVID-19-negative images, the ensemble achieved a classification accuracy of 91.62%. The study also created an intuitive graphical user interface application that helps medical practitioners quickly detect COVID-19 in chest X-ray images (Das et al., 2021).

A review examined how AI, and in particular deep learning (DL) techniques such as convolutional neural networks (CNNs), can automate COVID-19 detection from chest X-ray (CXR) and CT images and improve its accuracy. It discusses research works, challenges, and recent breakthroughs in DL-based COVID-19 classification, and it suggests directions for further research to improve the performance and reliability of automated COVID-19 image classification systems (Aggarwal et al., 2022).

Another article classifies COVID-19 patients from chest X-ray scans and compares several deep-learning-based CNN models. Xception, ResNeXt, and Inception V3 were tested, with data augmentation, on a dataset of 6,432 samples from the Kaggle repository, and Xception achieved the highest accuracy, 97.97%. The authors note that their analysis is not a medical finding, but it suggests that automated deep learning techniques could be useful for screening COVID-19 patients (Jain et al., 2021).

To overcome the deficiencies of previous networks, another paper proposed a dual-pathway, 11-layer-deep 3D CNN for the segmentation of brain lesions. It combines an efficient dense training scheme with a dual-pathway architecture that processes the input at multiple scales. The approach demonstrated superior performance on multi-channel MRI data of ischemic stroke, brain tumors, and traumatic brain injuries, including the BRATS 2015 and ISLES 2015 benchmarks. The method proved effective and computationally efficient, making it suitable for both research and clinical applications (Kamnitsas et al., 2017).

Another study used transfer learning to evaluate state-of-the-art convolutional neural networks on X-ray images from patients with COVID-19, patients with bacterial pneumonia, and normal cases. The deep learning models detected COVID-19 with up to 96.78% accuracy, 98.66% sensitivity, and 96.46% specificity. The results indicate that X-ray imaging could be an important aid in diagnosing COVID-19, although more research is needed in the medical field (Apostolopoulos & Mpesiana, 2020).

A further study introduced COVID-CAPS, a capsule-network model, as an alternative to CNNs for diagnosing COVID-19 from X-ray images. COVID-CAPS reported an accuracy of 95.7%, a sensitivity of 90%, and a specificity of 95.8%, and it addresses two drawbacks of earlier CNN models: their need for large amounts of training data and their loss of spatial information. With pre-training on an X-ray image dataset, accuracy improved further to 98.3% and specificity to 98.6%, making COVID-CAPS a promising diagnostic tool for COVID-19 (Afshar et al., 2020).

Finally, a study designed clinical predictive models for identifying COVID-19 cases from laboratory data using deep learning. Tested on data from 600 patients, the models achieved an accuracy of 86.66%, an F1-score of 91.89%, and a recall of 99.42%. These clinical predictive models may help medical professionals validate laboratory results and use resources efficiently during the pandemic (Alakus & Turkoglu, 2020).

3 EXPERIMENTAL SETUP

3.1 Dataset

3.1.1 CT Scan

This dataset, "COVID-19 CT Scan Dataset" by Dr. Surabhi Thorat, was downloaded from Kaggle. It consists of labeled COVID and Non-COVID images, which makes it well suited to training diagnostic machine learning models. The images were labeled into two categories, COVID-19 and Non-COVID, and combined into a single DataFrame for easy manipulation. This DataFrame was then split into training and validation sets in a stratified 80:20 ratio, giving 6,095 training and 1,525 validation images. Stratification keeps the proportion of each class the same in both sets, so that neither set is biased toward one class during training and validation.

3.1.2 X Ray

The X-ray dataset used in this research, titled "Chest X-ray (COVID-19 & Pneumonia)", was curated by Prashant and obtained from Kaggle. It contains X-ray images of COVID-19, pneumonia, and healthy cases. Pneumonia cases are not considered in this work, so the classes analyzed are COVID-19 and Non-COVID, which together comprise 2,159 images. These images were labeled as COVID-19 or Non-COVID and merged into one DataFrame to simplify processing. The DataFrame was then split in a stratified 80:20 ratio into 1,726 training and 433 validation samples, which preserves the class proportions in both sets and maintains the integrity of the data for training and validation.
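The stratified 80:20 split described above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' code: the paper only states that the labeled images were placed in a DataFrame and split with stratification, so the function name `stratified_split`, the fixed seed, and the per-class rounding are assumptions (in practice a library routine such as scikit-learn's `train_test_split` with its `stratify` argument is a common choice).

```python
import random
from collections import defaultdict

def stratified_split(labels, val_fraction=0.2, seed=42):
    """Split sample indices into (train, validation) so that every class keeps
    (approximately) the same proportion in both sets."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train_idx, val_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)                        # random order within the class
        n_val = round(len(indices) * val_fraction)  # per-class validation quota
        val_idx.extend(indices[:n_val])
        train_idx.extend(indices[n_val:])
    return sorted(train_idx), sorted(val_idx)

if __name__ == "__main__":
    # Hypothetical label list; the paper does not report per-class counts.
    labels = ["COVID-19"] * 500 + ["Non-COVID"] * 1500
    train_idx, val_idx = stratified_split(labels)
    print(len(train_idx), len(val_idx))  # 1600 400
```

Because each class's validation quota is rounded separately, a stratified 80:20 split can differ by an image or so from an exact 80:20 division of the total.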

3.2 Data Augmentation

To enhance the generalization ability of our models

and compensate for the relatively limited size of the

dataset, various data augmentation techniques were

employed. These included:

Table 1: Data Augmentation Techniques.

| Augmentation Technique | Description |
|---|---|
| Rotation Range | Rotates images randomly within the specified degree range. |
| Width Shift Range | Randomly shifts images horizontally by a specified fraction. |
| Height Shift Range | Randomly shifts images vertically by a specified fraction. |
| Shear Range | Applies random shearing transformations to the images. |
| Zoom Range | Randomly zooms in or out on images. |
| Horizontal Flip | Randomly flips images horizontally. |
| Rescale | Normalizes pixel values by scaling them by a specified factor. |
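Three of the transformations in Table 1 (horizontal flip, width shift, and rescale) are simple enough to sketch directly on a small grayscale image stored as a list of pixel rows. The sketch is illustrative only: the paper does not report its augmentation parameters, so the shift range (up to 10% of the width), the flip probability (0.5), the constant fill value for vacated pixels, and the rescale factor 1/255 are assumptions. Rotation, shear, and zoom are omitted because they require interpolation.

```python
import random

def horizontal_flip(image):
    """Mirror a 2-D image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]

def width_shift(image, fraction, fill=0):
    """Shift an image horizontally by fraction * width pixels (positive = right).
    Vacated pixels get a constant fill value (an assumed fill mode)."""
    width = len(image[0])
    shift = max(-width, min(width, round(fraction * width)))
    if shift >= 0:
        return [[fill] * shift + row[:width - shift] for row in image]
    return [row[-shift:] + [fill] * (-shift) for row in image]

def rescale(image, factor=1.0 / 255):
    """Normalize pixel values, e.g. from [0, 255] to [0, 1]."""
    return [[pixel * factor for pixel in row] for row in image]

def augment(image, rng, max_shift=0.1, flip_prob=0.5):
    """Apply a random width shift, a random horizontal flip, then rescaling."""
    shifted = width_shift(image, rng.uniform(-max_shift, max_shift))
    if rng.random() < flip_prob:
        shifted = horizontal_flip(shifted)
    return rescale(shifted)

if __name__ == "__main__":
    rng = random.Random(0)
    toy_image = [[0, 128, 255, 255], [0, 0, 128, 255]]
    print(augment(toy_image, rng))
```

A fresh random shift and flip are drawn every time an image is loaded, so each epoch sees slightly different versions of the same training images.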

INCOFT 2025 - International Conference on Futuristic Technology


3.3 Model Architecture

Traditional CNNs pass information sequentially from one layer to the next. In DenseNet, by contrast, every layer receives input from all preceding layers and passes its own feature maps to all subsequent layers. The $l$-th layer therefore receives the feature maps of all previous layers, $x_0, x_1, \ldots, x_{l-1}$, as input:

$$x_l = H_l([x_0, x_1, \ldots, x_{l-1}]) \qquad (1)$$

where $[x_0, x_1, \ldots, x_{l-1}]$ denotes the concatenation of the feature maps produced by layers $0, \ldots, l-1$, and $H_l$ is a composite function of batch normalization, ReLU, and convolution. Dense connectivity brings the following benefits. Direct connections between layers allow gradients to flow more easily during backpropagation, which mitigates the vanishing-gradient problem. Because each layer can read the feature maps of all preceding layers, feature reuse is encouraged and redundancy is reduced. As a result, the architecture uses fewer parameters than traditional counterparts of the same depth, since it does not need to relearn redundant feature maps.
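Equation (1) implies that the number of input channels grows linearly inside a dense block: if the block input has $k_0$ channels and every $H_l$ produces $k$ new feature maps (the growth rate), layer $l$ sees $k_0 + (l-1)k$ channels. The toy sketch below tracks only channel labels to make the concatenation explicit. The stand-in for $H_l$ is a placeholder, and the values used (64 input channels, 6 layers, growth rate 32) are those of the first block of the standard DenseNet-121, not settings reported in this paper.

```python
def dense_block_widths(in_channels, num_layers, growth_rate):
    """Toy model of Eq. (1): each feature map is represented only by a list of
    channel labels, and a placeholder H_l emits `growth_rate` new channels.
    Returns the input width seen by each layer and the block's output width."""
    feature_maps = [[f"x0[{c}]" for c in range(in_channels)]]  # starts as [x_0]
    input_widths = []
    for l in range(1, num_layers + 1):
        # [x_0, x_1, ..., x_{l-1}]: concatenation along the channel axis
        concatenated = [ch for fmap in feature_maps for ch in fmap]
        input_widths.append(len(concatenated))
        x_l = [f"x{l}[{c}]" for c in range(growth_rate)]  # stand-in for H_l(concatenated)
        feature_maps.append(x_l)
    output_width = sum(len(fmap) for fmap in feature_maps)
    return input_widths, output_width

widths, out = dense_block_widths(in_channels=64, num_layers=6, growth_rate=32)
print(widths, out)  # [64, 96, 128, 160, 192, 224] 256
```

Each layer only adds $k$ new channels while reading everything before it, which is why dense blocks can stay narrow (and parameter-efficient) even though their inputs grow.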

A DenseNet consists of several dense blocks, each comprising multiple densely connected convolutional layers. Transition layers between the dense blocks perform downsampling, reducing the spatial dimensions of the feature maps. Dense Block: a sequence of layers in which each layer's output is fed forward to every subsequent layer; the feature maps of each layer are concatenated with those of all preceding layers to form the input of the next layer. Transition Layer: placed between two dense blocks, it applies batch normalization, a 1×1 convolution that compresses the number of feature maps, and a 2×2 average pooling operation that reduces their spatial size. DenseNet exists in several configurations that differ primarily in depth, i.e., in the number of layers. Three variants are widely used: DenseNet-121, DenseNet-169, and DenseNet-201.

Here, each number corresponds to the number of layers with trainable weights in the network, i.e., its convolutional and fully connected layers; pooling and batch-normalization layers are not counted.
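The depth in each variant's name can be checked with a little arithmetic. The sketch below assumes the standard DenseNet-BC configurations used by common implementations such as Keras and torchvision (6, 12, 24, 16 layers per dense block for DenseNet-121; 6, 12, 32, 32 for DenseNet-169; 6, 12, 48, 32 for DenseNet-201), a growth rate of 32, 64 initial channels, and a transition compression factor of 0.5; none of these values are stated in the paper. Each dense layer contributes two convolutions (1×1 then 3×3), and the stem convolution, the three transition convolutions, and the final fully connected layer add five more.

```python
# Assumed standard block configurations (layers per dense block).
BLOCK_CONFIGS = {
    "DenseNet-121": (6, 12, 24, 16),
    "DenseNet-169": (6, 12, 32, 32),
    "DenseNet-201": (6, 12, 48, 32),
}

def describe_densenet(block_config, growth_rate=32, init_channels=64, compression=0.5):
    """Return (weighted layer count, channels after the last dense block)."""
    weighted_layers = 1                    # initial 7x7 stem convolution
    channels = init_channels
    for i, num_layers in enumerate(block_config):
        weighted_layers += 2 * num_layers  # each dense layer: 1x1 conv, then 3x3 conv
        channels += num_layers * growth_rate  # Eq. (1): each layer adds growth_rate maps
        if i < len(block_config) - 1:      # transition layer between blocks
            weighted_layers += 1           # its 1x1 convolution
            channels = int(channels * compression)
    weighted_layers += 1                   # final fully connected classifier
    return weighted_layers, channels

for name, config in BLOCK_CONFIGS.items():
    print(name, describe_densenet(config))
# DenseNet-121 (121, 1024)
# DenseNet-169 (169, 1664)
# DenseNet-201 (201, 1920)
```

The second number is the width of the final feature maps, i.e., the length of the vector that global average pooling hands to the classification head.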

Table 2: Comparison between Traditional CNN and DenseNet.

| Feature | Traditional CNN | DenseNet |
|---|---|---|
| Connectivity | Sequential | Dense (each layer connected to all previous layers) |
| Gradient Flow | Can be hindered by depth (vanishing gradient) | Improved due to direct connections |
| Feature Reuse | Limited | Extensive |
| Parameter Count | Higher, due to redundant feature maps | Lower, more efficient |

3.3.1 DenseNet 121

DenseNet-121 has 121 layers. It offers a good balance between depth and computational efficiency, which makes it well suited to settings where computational resources are limited.

3.3.2 DenseNet 169

DenseNet-169 adds more layers to the architecture, providing greater depth for feature extraction on more complex tasks. This variant suits tasks that require the recognition of fine details, at a higher computational cost.

3.3.3 DenseNet 201

DenseNet-201, the deepest of the three variants considered here with 201 layers, offers the strongest feature-extraction capability. It is well suited to complex applications but has large computational and memory requirements.

3.3.4 ResNet 152

ResNet-152 is a deep learning model designed to address the vanishing-gradient problem in deep neural networks. Its shortcut connections bypass layers, giving gradients a direct path through the network. ResNets are built from residual blocks with two or three convolutional layers, using batch normalization and ReLU activation; ResNet-152 uses the three-layer (bottleneck) form. The shortcut connections add the input of each block directly to its output, so that the block learns a residual function. ResNet-152 is a 152-layer network with about 60.2 million parameters. Because such very deep networks can be trained efficiently, the architecture improves accuracy on many tasks, such as image classification and medical image analysis.
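The effect of the identity shortcut can be seen in a toy setting. The sketch below replaces each residual branch $F$ with a single hypothetical fully connected layer acting on a short vector (real ResNet-152 blocks use three convolutions with batch normalization) and compares a residual stack, $y = \mathrm{ReLU}(F(x) + x)$, with a plain stack, $y = \mathrm{ReLU}(F(x))$, when all weights are zero.

```python
def linear(x, weights, bias):
    """Hypothetical residual branch F(x) = W x + b (a stand-in for conv layers)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def residual_block(x, weights, bias):
    """y = ReLU(F(x) + x): the identity shortcut is added before the activation."""
    return [max(0.0, f + xi) for f, xi in zip(linear(x, weights, bias), x)]

def plain_block(x, weights, bias):
    """y = ReLU(F(x)): the same layer without a shortcut."""
    return [max(0.0, f) for f in linear(x, weights, bias)]

def stack(block, x, depth, weights, bias):
    """Apply `depth` copies of a block (shared toy weights) one after another."""
    for _ in range(depth):
        x = block(x, weights, bias)
    return x

if __name__ == "__main__":
    n = 3
    zero_w = [[0.0] * n for _ in range(n)]
    zero_b = [0.0] * n
    x = [1.0, 2.0, 3.0]
    print(stack(residual_block, x, 50, zero_w, zero_b))  # [1.0, 2.0, 3.0]
    print(stack(plain_block, x, 50, zero_w, zero_b))     # [0.0, 0.0, 0.0]
```

With zero weights the residual stack passes its input through all 50 blocks unchanged, while the plain stack outputs zeros after the first layer. This is the intuition behind the claim that shortcuts let very deep networks start close to the identity mapping and keep a direct path for gradients.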

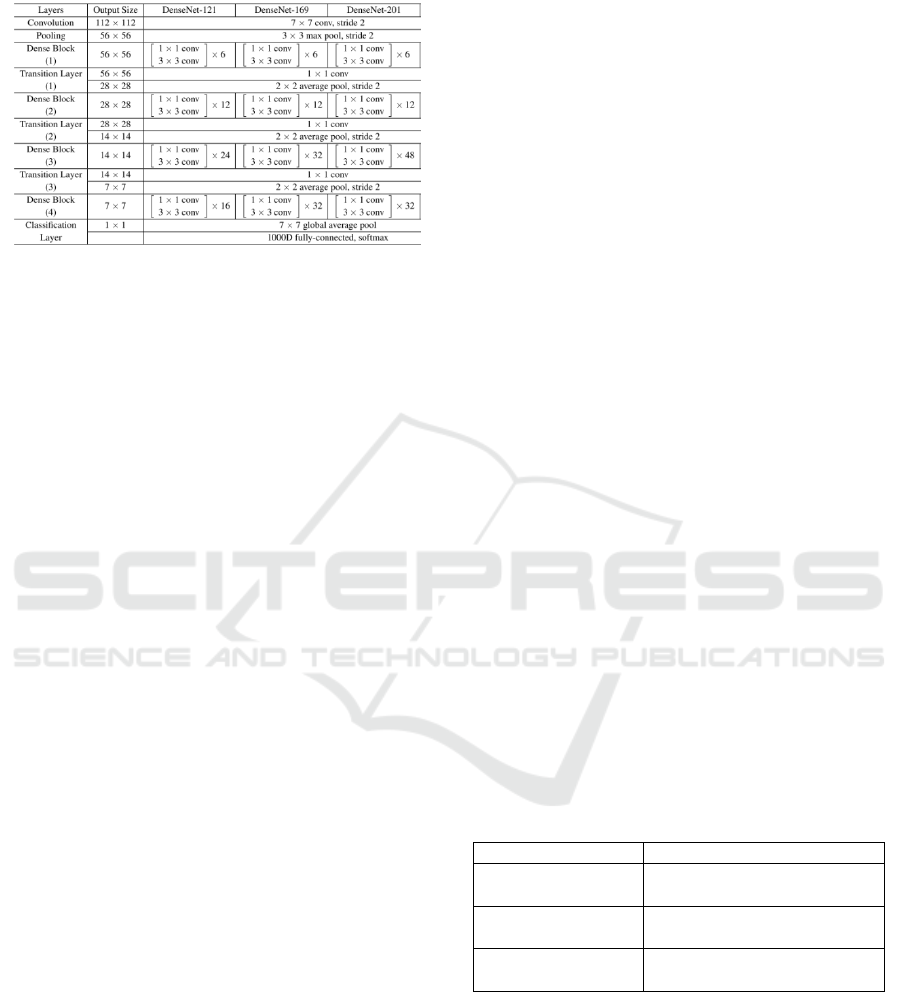

Figure 1: Differences in layer configuration between DenseNet architectures.

3.4 Methodology

This study uses the COVID-19 X-ray and CT-scan datasets described in Section 3.1, both downloaded from Kaggle, to train and evaluate diagnostic models. Several DenseNet variants, namely DenseNet-121, DenseNet-169, and DenseNet-201, are analyzed along with ResNet-152 using transfer learning. Transfer learning was applied by initializing the DenseNet weights with values pre-trained on ImageNet. This gives the models a robust starting point that leverages rich feature representations learned from a diverse set of images. Each dataset was split roughly 80:20 into training and validation sets with stratification, which keeps the distribution of COVID-19 and non-COVID-19 images constant across both sets and thus avoids bias.

Early stopping was used during training: it monitored the model's performance on the validation set and halted training once that performance stopped improving. This guards against overfitting and helps the model generalize to new data. Each trained model was then evaluated with several metrics: AUC-ROC, accuracy, specificity, recall, and precision. AUC-ROC measures how well the model distinguishes between the classes, and accuracy is the overall proportion of correct predictions. Specificity is the proportion of actual negatives that the model correctly identifies as negative (true negatives), and recall (sensitivity) is the proportion of actual positives that it correctly identifies as positive (true positives). Precision is the proportion of true positives among all samples predicted as positive.
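The metrics defined above can be computed from binary labels and predicted probabilities as in the following plain-Python sketch. It assumes that label 1 denotes COVID-19 (the positive class) and that a decision threshold of 0.5 is used, neither of which the paper specifies. AUC-ROC is computed as the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, with ties counting one half, which equals the area under the ROC curve.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn

def auc_roc(y_true, y_score):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC-ROC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def safe_div(a, b):
    return a / b if b else 0.0

def evaluate(y_true, y_score, threshold=0.5):
    y_pred = [1 if s >= threshold else 0 for s in y_score]
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": safe_div(tp + tn, len(y_true)),
        "precision": safe_div(tp, tp + fp),
        "recall": safe_div(tp, tp + fn),       # sensitivity
        "specificity": safe_div(tn, tn + fp),
        "auc_roc": auc_roc(y_true, y_score),   # threshold-independent
    }

if __name__ == "__main__":
    labels = [1, 1, 1, 0, 0, 0]
    scores = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]  # toy probabilities, not paper results
    print(evaluate(labels, scores))
```

Unlike the other four metrics, AUC-ROC does not depend on the chosen threshold, because it only looks at how the scores rank positives against negatives.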

3.5 Training And Optimisation

In this work, transfer learning is adopted by initializing the DenseNet models with weights pre-trained on ImageNet. The networks can thus leverage the rich feature representations learned on this large and diverse dataset, which speeds up convergence and improves performance on the COVID-19 diagnosis task. During training, the DenseNet base layers were frozen to preserve the valuable pre-trained features, and only the classification head was trained to adapt the model to our binary classification task. The custom head consists of a global average pooling layer followed by fully connected layers, ending in a sigmoid activation that outputs the probability of the positive class; binary cross-entropy is used as the loss function.
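A forward pass through a classification head of this shape (Table 3), together with the binary cross-entropy loss, can be sketched in plain Python. The feature maps, the hidden-layer width, and all weights below are hypothetical toy values, since the paper does not report the head's layer sizes. In the real model the pooled vector would have as many entries as the frozen backbone has output channels, and only the head's weights would be updated during training.

```python
import math

def global_average_pool(feature_maps):
    """Average each H x W channel to one number: C maps -> length-C vector."""
    return [sum(map(sum, fmap)) / (len(fmap) * len(fmap[0])) for fmap in feature_maps]

def dense(x, weights, bias, activation):
    """Fully connected layer: activation(W x + b)."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(weights, bias)]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def binary_cross_entropy(y_true, p, eps=1e-7):
    """-(y log p + (1 - y) log(1 - p)), with p clipped away from 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))

def head_forward(feature_maps, w_hidden, b_hidden, w_out, b_out):
    pooled = global_average_pool(feature_maps)
    hidden = dense(pooled, w_hidden, b_hidden, relu)
    return dense(hidden, w_out, b_out, sigmoid)[0]  # probability of the positive class

if __name__ == "__main__":
    maps = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]  # two toy 2x2 channels
    p = head_forward(maps, [[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0], [[2.0, 5.0]], [-2.0])
    print(p, binary_cross_entropy(1, p))
```

A single sigmoid unit suffices for two classes: its output is the positive-class probability, and the negative-class probability is one minus that value.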

DenseNet's dense connectivity aids gradient flow and feature propagation, which is very helpful for complex tasks such as medical image classification. Model performance was optimized primarily with the Adam optimizer, because its adaptive learning rates and its efficiency in handling sparse gradients help speed up convergence.

However, to assess model performance thoroughly, the models were also trained with different optimizers, namely Adam, RMSprop, and SGD, and with different batch sizes. Each optimizer has its merits: Adam adapts its learning rates but sometimes converges to suboptimal solutions; SGD often generalizes better but needs careful tuning and may converge slowly; and RMSprop works well on non-stationary problems, though it can converge more slowly than Adam on deeper networks.
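The update rules behind the three optimizers compared in this study can be illustrated on a one-dimensional toy loss $f(w) = (w - 3)^2$. The sketch below is a minimal, framework-free version of plain SGD, RMSprop, and Adam (with bias correction). The learning rates, decay rates, and epsilon are assumed toy values, since the paper does not report its optimizer hyperparameters.

```python
import math

def grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)

def sgd(w, steps, lr=0.1):
    for _ in range(steps):
        w -= lr * grad(w)  # step along the raw negative gradient
    return w

def rmsprop(w, steps, lr=0.01, rho=0.9, eps=1e-7):
    s = 0.0  # running average of squared gradients
    for _ in range(steps):
        g = grad(w)
        s = rho * s + (1 - rho) * g * g
        w -= lr * g / (math.sqrt(s) + eps)  # gradient scaled by its recent RMS
    return w

def adam(w, steps, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-7):
    m = v = 0.0  # first- and second-moment estimates
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

if __name__ == "__main__":
    for name, optimizer in (("SGD", sgd), ("RMSprop", rmsprop), ("Adam", adam)):
        print(name, round(optimizer(0.0, 2000), 4))
```

All three approach the minimum at $w = 3$ on this easy problem. SGD applies the raw gradient, RMSprop divides it by a running root-mean-square of recent gradients, and Adam additionally smooths the gradient with momentum, so its step size is roughly bounded by the learning rate regardless of the gradient's scale.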

Table 3: Model Architecture.

| Component | Description |
|---|---|
| Global Average Pooling | Reduces spatial dimensions of feature maps |
| Dense Layer (ReLU) | Adds non-linearity and enhances learning |
| Output Layer (Sigmoid) | Binary output for classification |

Batch size also plays an important role in training. Larger batches give smoother gradient estimates and faster convergence but require more memory and carry a risk of becoming trapped in a local minimum; smaller batches introduce extra noise into the gradient estimates, which can help the model escape local minima at the cost of somewhat slower convergence. We therefore systematically evaluated the three optimizers with batch sizes of 16, 32, and 64 to find the combination that yields the best performance on our classification problem.
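One concrete consequence of the batch size is the number of parameter updates per epoch. Using the training-set sizes from Section 3.1 (6,095 CT and 1,726 X-ray images) and the batch sizes tested here, and assuming that the final partial batch is kept rather than dropped, the count is the ceiling of the training-set size divided by the batch size:

```python
import math

TRAIN_SIZES = {"CT": 6095, "X-ray": 1726}  # training images per dataset (Section 3.1)
BATCH_SIZES = (16, 32, 64)                  # batch sizes compared in Section 4

def steps_per_epoch(n_samples, batch_size):
    """Mini-batches (parameter updates) per epoch; the last batch may be partial."""
    return math.ceil(n_samples / batch_size)

if __name__ == "__main__":
    for dataset, n in TRAIN_SIZES.items():
        print(dataset, {b: steps_per_epoch(n, b) for b in BATCH_SIZES})
    # CT {16: 381, 32: 191, 64: 96}
    # X-ray {16: 108, 32: 54, 64: 27}
```

A batch size of 64 therefore performs roughly a quarter as many, but less noisy, updates per epoch as a batch size of 16.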

4 RESULTS AND DISCUSSION

Table 4: Accuracy for DenseNet121 for CT SCAN

Dataset.

Table 5: Accuracy for DenseNet169 for CT SCAN Dataset

Optimizer Batch

Size

(

16

)

Batch

Size

(

32

)

Batch

Size

(

64

)

Adam 95. 20 94. 50 92. 80

RMS

p

ro

p

94. 00 93. 50 91. 50

SGD 89. 80 88. 50 86. 70

Table 6: Accuracy for DenseNet201 for CT SCAN

Dataset.

Optimizer Batch

Size(16)

Batch

Size(32)

Batch

Size(64)

Adam 94. 00 96. 90 95. 70

RMS

p

ro

p

94. 50 96. 10 95. 10

SGD 93. 30 95. 20 94. 30

Table 7: Accuracy for ResNet152 for CT SCAN Dataset.

Optimizer Batch

Size(16)

Batch

Size(32)

Batch

Size(64)

Adam 94. 50 95. 70 94. 00

RMSprop 92. 80 93. 50 92. 10

SGD 91. 80 92. 20 90. 50

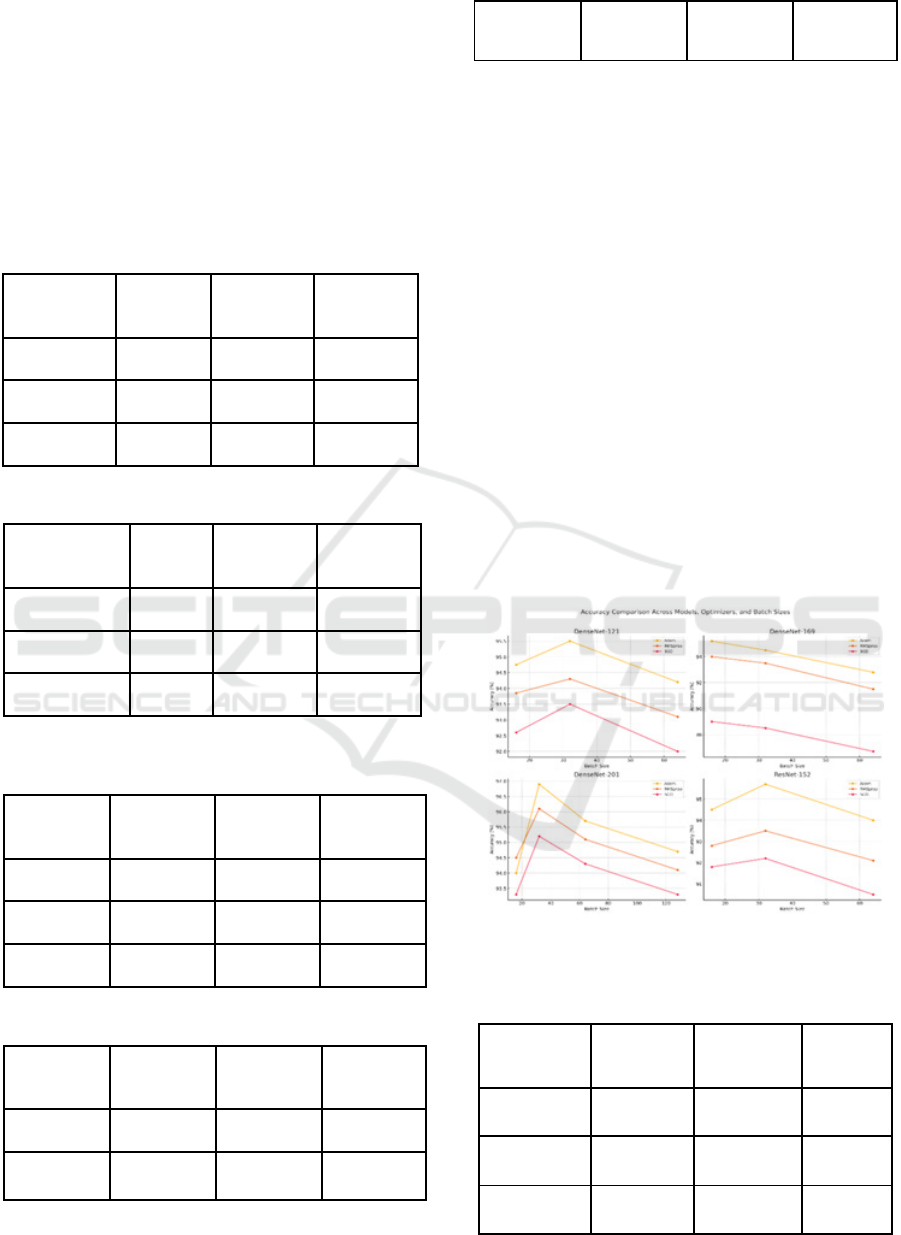

Comparing all different models, namely

DenseNet-121, DenseNet-169, DenseNet-201, and

ResNet-152, with a set of different optimizers and

batch sizes, it can be found that the best results come

out to be from DenseNet-201. With the Adam

optimizer and batch size of 32, this has achieved an

accuracy of 96.9%. Relatively, one more working

combination for the DenseNet-201 model was with

RMSprop as the optimizer and a batch size of 32,

which yielded the same result of 96.1% accuracy. The

best after that is DenseNet-121, which reaches a peak

of 95.5% with the Adam optimizer and batch size 32.

Next comes DenseNet-169, which, compared to its

other variants, reached a peak of 95.2% with the

Adam optimizer and batch size of 16. ResNet-152

was competitive but reached a peak of 95.7% only at

the Adam optimizer with a batch size of 32.

Therefore, in all models, the best performance was

obtained when, for the Adam optimizer, the batch size

was set to 32. Thus, it is the best configuration. On

the other hand, DenseNet-201 yielded the best

performance on the Covid-19 CT-scan dataset when

using Adam with a batch size of 32.

Figure 2: Comparison of various DenseNet and ResNet

Architectures for CT scan Dataset.

Table 8: Accuracy for DenseNet121 for X Ray Dataset.

Optimizer Batch

Size

(

16

)

Batch

Size

(

32

)

Batch

Size

(

64

)

Adam 97. 85 98. 50 98. 20

RMSprop 97. 70 98. 00 97. 85

SGD 96. 50 97. 00 96. 70

Optimizer Batch

Size(16)

Batch

Size(32)

Batch

Size(64)

Adam 94. 75 95. 50 94. 20

RMS

p

ro

p

93. 85 94. 30 93. 10

SGD 92. 60 93. 50 92. 00


Table 9: Accuracy (%) for DenseNet169 for X Ray Dataset.

| Optimizer | Batch Size (16) | Batch Size (32) | Batch Size (64) |
|---|---|---|---|
| Adam | 96.70 | 98.20 | 97.30 |
| RMSprop | 95.90 | 97.50 | 96.50 |
| SGD | 94.50 | 96.20 | 95.00 |

Table 10: Accuracy (%) for DenseNet201 for X Ray Dataset.

| Optimizer | Batch Size (16) | Batch Size (32) | Batch Size (64) |
|---|---|---|---|
| Adam | 98.90 | 99.30 | 98.95 |
| RMSprop | 98.30 | 98.85 | 98.50 |
| SGD | 97.50 | 98.20 | 97.80 |

Table 11: Accuracy (%) for ResNet152 for X Ray Dataset.

| Optimizer | Batch Size (16) | Batch Size (32) | Batch Size (64) |
|---|---|---|---|
| Adam | 94.50 | 95.20 | 94.00 |
| RMSprop | 93.70 | 94.50 | 93.20 |
| SGD | 92.50 | 93.00 | 91.80 |

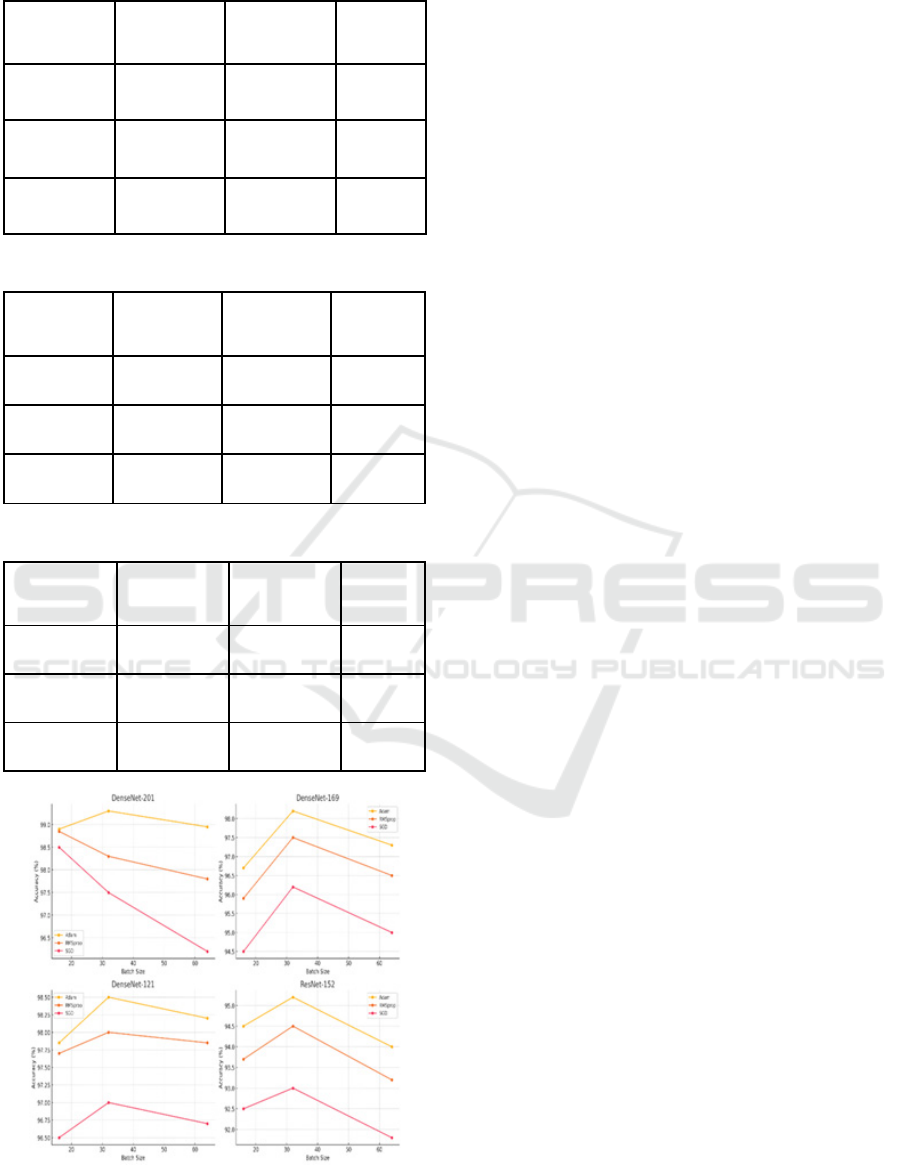

Figure 3: Comparison of various DenseNet and ResNet Architectures for X-ray Dataset.

A comparison of the accuracies attained by the various models on the X-ray dataset shows that DenseNet-201 with the Adam optimizer and a batch size of 32 achieves the highest accuracy, 99.30%. It is closely followed by DenseNet-121, which attains 98.50% under the same conditions and performs consistently well with the other batch sizes and optimizers. DenseNet-169 reaches its highest accuracy, 98.20%, with Adam and a batch size of 32, ranking slightly behind the top two. ResNet-152 lags somewhat behind, with a maximum accuracy of 95.20% under the same settings. DenseNet-201 and DenseNet-121 are therefore the best models for this dataset, especially with Adam and a batch size of 32, which makes them strong candidates for X-ray image classification tasks.
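The per-model peaks quoted in the CT and X-ray discussions can be re-derived mechanically from the reported tables. The sketch below transcribes Tables 4 to 11 (accuracy in %) and, for each model, selects the optimizer and batch size with the highest accuracy before ranking the models. It only re-reads the published numbers; it does not reproduce any training.

```python
BATCH_SIZES = (16, 32, 64)
# Accuracy (%) transcribed from Tables 4-7 (CT) and 8-11 (X-ray):
# model -> optimizer -> accuracies for batch sizes 16, 32, 64.
CT_RESULTS = {
    "DenseNet-121": {"Adam": (94.75, 95.50, 94.20), "RMSprop": (93.85, 94.30, 93.10), "SGD": (92.60, 93.50, 92.00)},
    "DenseNet-169": {"Adam": (95.20, 94.50, 92.80), "RMSprop": (94.00, 93.50, 91.50), "SGD": (89.80, 88.50, 86.70)},
    "DenseNet-201": {"Adam": (94.00, 96.90, 95.70), "RMSprop": (94.50, 96.10, 95.10), "SGD": (93.30, 95.20, 94.30)},
    "ResNet-152": {"Adam": (94.50, 95.70, 94.00), "RMSprop": (92.80, 93.50, 92.10), "SGD": (91.80, 92.20, 90.50)},
}
XRAY_RESULTS = {
    "DenseNet-121": {"Adam": (97.85, 98.50, 98.20), "RMSprop": (97.70, 98.00, 97.85), "SGD": (96.50, 97.00, 96.70)},
    "DenseNet-169": {"Adam": (96.70, 98.20, 97.30), "RMSprop": (95.90, 97.50, 96.50), "SGD": (94.50, 96.20, 95.00)},
    "DenseNet-201": {"Adam": (98.90, 99.30, 98.95), "RMSprop": (98.30, 98.85, 98.50), "SGD": (97.50, 98.20, 97.80)},
    "ResNet-152": {"Adam": (94.50, 95.20, 94.00), "RMSprop": (93.70, 94.50, 93.20), "SGD": (92.50, 93.00, 91.80)},
}

def peak(model_results):
    """Return (optimizer, batch_size, accuracy) with the highest accuracy."""
    return max(
        ((opt, bs, acc) for opt, row in model_results.items() for bs, acc in zip(BATCH_SIZES, row)),
        key=lambda cfg: cfg[2],
    )

def ranking(results):
    """(model, optimizer, batch_size, accuracy) tuples, best peak first."""
    return sorted(((model, *peak(r)) for model, r in results.items()), key=lambda x: x[3], reverse=True)

if __name__ == "__main__":
    for name, results in (("CT", CT_RESULTS), ("X-ray", XRAY_RESULTS)):
        print(name)
        for model, opt, bs, acc in ranking(results):
            print(f"  {model:13s} {acc:.2f}% ({opt}, batch size {bs})")
```

The ranking confirms that Adam gives each model's peak on both datasets and that DenseNet-169 on CT is the only case where the peak occurs at a batch size other than 32.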

ACKNOWLEDGEMENTS

The authors acknowledge AICTE for funding this research (AICTE reference no. F.No.8-80/FDC/RPS/POLICY-1/2021-22/ Dated: 18.02.2022).

REFERENCES

A. T, S. S, N. K, A. K, D. R. B and N. Rajkumar, Sentiment Analysis on Covid-19 data Using BERT Model, 2024 International Conference on Advances in Modern Age Technologies for Healt. (n.d.).

Afshar, P., Heidarian, S., Naderkhani, F., Oikonomou, A., Plataniotis, K. N., & Mohammadi, A. (2020). COVID-CAPS: A capsule network-based framework for identification of COVID-19 cases from X-ray images. Pattern Recognition Letters, 138, 638–643. https://doi.org/10.1016/J.PATREC.2020.09.010

Aggarwal, P., Mishra, N. K., Fatimah, B., Singh, P., Gupta, A., & Joshi, S. D. (2022). COVID-19 image classification using deep learning: Advances, challenges and opportunities. Computers in Biology and Medicine, 144, 105350. https://doi.org/10.1016/J.COMPBIOMED.2022.105350

Alakus, T. B., & Turkoglu, I. (2020). Comparison of deep learning approaches to predict COVID-19 infection. Chaos, Solitons & Fractals, 140, 110120. https://doi.org/10.1016/J.CHAOS.2020.110120

Apostolopoulos, I. D., & Mpesiana, T. A. (2020). Covid-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Physical and Engineering Sciences in Medicine, 43(2), 635–640. https://doi.org/10.1007/s13246-020-00865-4

Chandra, T. B., Verma, K., Singh, B. K., Jain, D., & Netam, S. S. (2021). Coronavirus disease (COVID-19) detection in Chest X-Ray images using majority voting based classifier ensemble. Expert Systems with Applications, 165, 113909. https://doi.org/10.1016/J.ESWA.2020.113909

Das, A. K., Ghosh, S., Thunder, S., Dutta, R., Agarwal, S., & Chakrabarti, A. (2021). Automatic COVID-19 detection from X-ray images using ensemble learning with convolutional neural network. Pattern Analysis and Applications, 24(3), 1111–1124. https://doi.org/10.1007/s10044-021-00970-4

Heidari, M., Mirniaharikandehei, S., Khuzani, A. Z., Danala, G., Qiu, Y., & Zheng, B. (2020). Improving the performance of CNN to predict the likelihood of COVID-19 using chest X-ray images with preprocessing algorithms. International Journal of Medical Informatics, 144, 104284. https://doi.org/10.1016/J.IJMEDINF.2020.104284

Jain, R., Gupta, M., Taneja, S., & Hemanth, D. J. (2021). Deep learning based detection and analysis of COVID-19 on chest X-ray images. Applied Intelligence, 51(3), 1690–1700. https://doi.org/10.1007/s10489-020-01902-1

Kamnitsas, K., Ledig, C., Newcombe, V. F. J., Simpson, J. P., Kane, A. D., Menon, D. K., Rueckert, D., & Glocker, B. (2017). Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Medical Image Analysis, 36, 61–78. https://doi.org/10.1016/J.MEDIA.2016.10.004

Minaee, S., Kafieh, R., Sonka, M., Yazdani, S., & Jamalipour Soufi, G. (2020). Deep-COVID: Predicting COVID-19 from chest X-ray images using deep transfer learning. Medical Image Analysis, 65, 101794. https://doi.org/10.1016/J.MEDIA.2020.101794

Nirmala Devi, K., Shanthi, S., Hemanandhini, K., Haritha, S., Aarthy, S. (2022). Analysis of COVID-19 Epidemic Disease Dynamics Using Deep Learning. In Kim, J.H., Deep, K., Geem, Z.W., Sadollah, A., Yadav, A. (eds) Proceed. (n.d.).
