Hybrid CNN-ResNet50 Model for Brain Tumor Classification Using Transfer Learning

Sheenam Middha¹ᵃ, Sonam Khattar¹ᵇ and Tushar Verma²ᶜ

Department of Computer Science and Engineering, Chandigarh University, Mohali, Punjab, India

Keywords: Hybrid Deep Learning CNN Model, MRI Images, Brain Tumor, Classification.

Abstract: Brain tumors include fatal cancers that can affect both adults and minors. Treatment of a brain tumor depends on an early and precise diagnosis. Locating the tumor with computer-aided technologies is a crucial first step for physicians: such systems help experts spot tumors more quickly and easily, and they help avoid the mistakes that can occur with conventional procedures. This article uses magnetic resonance imaging (MRI) to diagnose brain tumors. A hybrid approach is proposed that uses convolutional neural network (CNN) models, one class of deep learning networks, for diagnosis, with the ResNet50 architecture serving as the foundation. The model achieves an accuracy of 97.67%. The best-performing model among those evaluated is used to classify the brain tumor images. A comparison with other analyses in the literature indicates that the proposed method is practical and useful for brain tumor detection in computer-aided systems.

1 INTRODUCTION

A brain tumor is an abnormal growth of cells inside the brain. Some brain tumors are benign, while others are cancerous. Tumors that originate in the brain tissue itself are known as primary brain tumors. Metastasis is the term for a malignant tumor that has spread to the brain from another part of the body. The type, location, and extent of the tumor all affect the available treatment options, and the main goal of treatment is to cure the tumor or reduce its symptoms. Symptoms include migraines and recurrent headaches, and a tumor may also lead to visual impairment. What triggers the abnormal growth of a tumor in the first place is still not well understood. Tumors can be classified by two factors: where they originate and whether or not they are cancerous. A benign tumor is a non-cancerous tumor that does not affect any other part of the body (Chen, Liu, et al. 2018), (Sultan, Upadhyay, et al. 2019), (Hossain, Shishir, et al. 2019). Benign tumors grow slowly and are easily recognizable.

a https://orcid.org/0000-0002-0639-5539
b https://orcid.org/0000-0002-5444-4358
c https://orcid.org/0000-0002-6696-4537

Malignant brain tumors, which are cancerous and can spread to other regions of the brain, can be extremely aggressive and dangerous, in part because they can be difficult to diagnose. To detect a tumor, physicians choose between an X-ray and magnetic resonance imaging (MRI). If no other examination provides sufficient information, an MRI scan may be appropriate. An MRI scan uses radio waves and magnetic fields to create detailed images.

An MRI scan of the brain is a safe and painless examination that uses magnetic fields and radio waves to produce detailed images of the brain. Unlike a computed tomography (CT) scan, an MRI scan does not use ionizing radiation. MRI scanners typically have a large doughnut-shaped magnet with a channel in the middle. The patient lies on a table that slides into the channel for the examination. Many facilities offer MRI machines with a wider opening, which can help patients who are claustrophobic (Anaraki, Ayati, et al. 2019), (Özyurt, Sert, et al. 2019). Brain MRI examinations are offered in radiology centers and hospitals. During the examination, radio waves are used to pinpoint the magnetic position of atoms in the body. The resulting signals are picked up by a powerful antenna and transmitted to a computer, which carries out millions of calculations to produce clear black-and-white images of the body.

Middha, S., Khattar, S. and Verma, T.
Hybrid CNN-ResNet50 Model for Brain Tumor Classification Using Transfer Learning.
DOI: 10.5220/0013594400004664
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 2, pages 444-448
ISBN: 978-989-758-763-4
Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

Figure 1: MRI images

Stages Of Brain Cancer

• Grade I tumors appear nearly normal under a microscope and are characterized by slow growth. These tumors are classified as benign. Because they usually have distinct borders, surgical removal is less difficult.

• Grade II tumors, or low-grade malignant tumors, grow more slowly than higher-grade tumors but can invade adjacent brain tissue. Under a microscope, their cells appear slightly abnormal, suggesting a degree of malignancy.

• Grade III tumors, commonly referred to as anaplastic or malignant tumors, grow quickly and are more aggressive. Under a microscope, their cells appear highly abnormal, and they are likely to spread into adjacent brain tissue. Grade III cancers include anaplastic oligodendroglioma and anaplastic astrocytoma. These tumors need aggressive treatment, which includes radiation, chemotherapy, and surgery. A thorough treatment strategy and close monitoring are crucial because the overall prognosis is less favorable, and the chance of recurrence higher, than for lower-grade cancers.

• Grade IV tumors are highly aggressive and include the widely recognized glioblastoma multiforme. They grow very rapidly, and their cells appear highly abnormal under a microscope. Grade IV tumors often induce angiogenesis to support their rapid proliferation. Treatment often involves a combination of radiation, chemotherapy, and surgery, but even with intensive treatment the outlook is quite unfavorable. The average survival for individuals diagnosed with glioblastoma is typically between 15 and 18 months.

The rest of the paper is organized as follows. Section 2 reviews the related work. Section 3 describes the proposed system along with implementation details. Section 4 presents the experimental results, and Section 5 concludes the paper.

2 LITERATURE SURVEY

(Zotin et al. 2018) presented a medical image processing system for edge detection of brain tumors in MRI that is based on fuzzy c-means (FCM) clustering. The input image is denoised with a median filter and then enhanced by BCET. After the image is segmented with FCM clustering, the Canny edge detector is used to create an edge map of the brain tumor. The approach works well because the Canny method is applied to images that have already been divided into homogeneous regions and whose quality has been improved by FCM and BCET. As a result, it yields good estimates and image quality suitable for analysis by medical specialists. An analysis of the edge maps by a medical expert showed that, in specific tumor pathology cases, segmentation accuracy is 10-15% better than the comparable expert estimations. The experimental study also showed that the edge map produced by the technique is stable against the effects of noise.
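As a rough illustration of the FCM clustering step described above, the following sketch clusters scalar pixel intensities in plain Python. It is a minimal sketch under stated assumptions, not the implementation of (Zotin et al. 2018): the function name `fuzzy_c_means_1d`, the fuzzifier `m = 2`, the fixed iteration count, and the evenly spaced initial centers are all illustrative choices, and the median filter, BCET, and Canny stages are omitted.

```python
def fuzzy_c_means_1d(values, n_clusters=2, m=2.0, n_iter=50, eps=1e-9):
    """Cluster scalar intensities with fuzzy c-means.

    Returns (centers, memberships); memberships[i][k] is the degree to
    which values[i] belongs to cluster k, and each row sums to 1.
    All defaults are illustrative assumptions.
    """
    if n_clusters < 2:
        raise ValueError("n_clusters must be at least 2")
    lo, hi = min(values), max(values)
    # Deterministic start: spread the initial centers evenly over the range.
    centers = [lo + (hi - lo) * k / (n_clusters - 1) for k in range(n_clusters)]
    exponent = 2.0 / (m - 1.0)
    memberships = []
    for _ in range(n_iter):
        # Membership update: u_ik = 1 / sum_j (d_ik / d_ij)^(2 / (m - 1)).
        memberships = []
        for x in values:
            dists = [abs(x - c) + eps for c in centers]  # eps avoids division by zero
            memberships.append(
                [1.0 / sum((d_k / d_j) ** exponent for d_j in dists) for d_k in dists]
            )
        # Center update: mean of the values weighted by u_ik^m.
        centers = [
            sum((u[k] ** m) * x for u, x in zip(memberships, values))
            / sum(u[k] ** m for u in memberships)
            for k in range(n_clusters)
        ]
    return centers, memberships


# Toy intensities with two obvious groups (not data from the paper).
intensities = [0, 1, 2, 10, 11, 12]
centers, memberships = fuzzy_c_means_1d(intensities)
# Hard segmentation: assign each value to its highest-membership cluster.
labels = [row.index(max(row)) for row in memberships]
print(centers, labels)
```

On a real image the same update would run over all pixel intensities, and the resulting label map would be the segmented input to the edge detector.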

(Havaei et al. 2017) introduce a CNN architecture that differs from those typically used in computer vision. Their CNN exploits local features and more global contextual features at the same time. Their networks also use a final layer that is a convolutional implementation of a fully connected layer, which allows a 40-fold speedup over most typical CNN implementations. To address the imbalance of tumor labels, they describe a two-phase training procedure. Finally, they study a cascade design in which a second CNN uses the output of a first CNN as an additional source of information.


(Hollon et al. 2020) presented a parallel workflow that combines deep convolutional neural networks (CNNs) with stimulated Raman histology (SRH), a label-free optical imaging technique, to predict diagnosis in near real time. Their CNNs, which were trained on more than 2.5 million SRH images, can diagnose brain tumors in the operating room in less than 150 seconds, about an order of magnitude faster than traditional methods (which take, for example, 20–30 minutes).

(Arif F et al. 2022) build their system on the Berkeley wavelet transformation (BWT) and a deep learning classifier in order to improve performance and simplify medical image segmentation. Significant features are extracted from each segmented tissue with the gray-level co-occurrence matrix (GLCM) approach and then optimized using a genetic algorithm. The outcome of the approach was evaluated on accuracy, sensitivity, specificity, spatial overlap, AVME, FoM, Jaccard's coefficient, and the Dice coefficient.
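To make the GLCM idea concrete, the sketch below counts how often one gray level appears immediately to the left of another in a small image and derives one texture feature (contrast) from the counts. It is only an illustration: the helper names `glcm` and `contrast`, the horizontal offset, and the 4x4 toy image are assumptions, and the cited work's exact feature set and genetic-algorithm optimization are not reproduced.

```python
def glcm(image, levels, dx=1, dy=0):
    """Gray-level co-occurrence counts for the pixel offset (dy, dx).

    matrix[i][j] is the number of pixel pairs where a pixel of level i
    has a pixel of level j at the given offset.
    """
    matrix = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                matrix[image[r][c]][image[r2][c2]] += 1
    return matrix


def contrast(matrix):
    """Contrast feature: mean squared gray-level difference over all pairs."""
    total = sum(sum(row) for row in matrix)
    weighted = sum(
        ((i - j) ** 2) * count
        for i, row in enumerate(matrix)
        for j, count in enumerate(row)
    )
    return weighted / total


# Toy 4-level image (not data from the paper).
toy_image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
counts = glcm(toy_image, levels=4)
print(counts, contrast(counts))
```

Features such as contrast, computed per segmented region, are the kind of values a feature-selection step could then rank or prune.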

In (Alsaif et al. 2022), the suggested approach performs exceptionally well with respect to the initial cluster centers and cluster size. Segmentation is carried out using BWT methods, which have lower computational speed and accuracy. The work proposes a method for segmenting brain tissue that involves very little human intervention. Its primary aim is to speed up the process of patient identification for neurosurgeons and other human experts. In testing against state-of-the-art techniques, the reported accuracy is 98.5%. There is still room for improvement in computational time, system complexity, and memory usage when executing the algorithms. The same methodology can also be applied to identify and examine illnesses in other organs, such as the kidney, liver, or lungs, and several classifiers can be combined with optimization techniques.

Using the Faster R-CNN deep learning architecture, (R. Sa et al. 2017) propose a method to identify intervertebral discs in X-ray images. The authors use this network to improve the accuracy and efficiency of intervertebral disc recognition, a vital step in diagnosing spinal problems. Their method shows significant improvements in detection accuracy over traditional approaches, highlighting the potential of Faster R-CNN for medical image processing. The study demonstrates how sophisticated deep learning methods can improve diagnostic capability in radiology. This problem was addressed by (R. Sa et al. 2017). Traditional machine learning methods require manually engineered features for classification. Deep learning systems, however, can be developed to yield accurate classification results without manual feature extraction. Because the first dataset contains a large number of MRI images, a 23-layer CNN is first used to build the models.

(Alanazi, Muhannad Faleh et al. 2022) build convolutional neural networks (CNNs) with various numbers of layers from scratch to evaluate how well they perform on brain magnetic resonance imaging (MRI). Using the transfer-learning concept, the 22-layer, binary-classification (tumor or no tumor) isolated-CNN model is then reused, with the weights of its neurons re-adjusted, to classify brain MRI images into tumor subclasses. The transfer-learned model achieves a high accuracy of 95.75% on MRI images from the same MRI machine. It has also been validated on brain MRI images from another machine to verify its generality, flexibility, and reliability for future real-time application. The results show that the proposed model achieves a high accuracy of 96.89% for a previously unobserved

brain MRI dataset. Thus, the recommended deep

learning.

3 METHODOLOGY

The proposed hybrid approach for brain tumor detection, which combines a CNN with ResNet50, is described in detail below.

The methodology follows a three-step process. First, the model is trained on the data. Second, various pooling techniques are applied. Finally, classifiers are applied to the resulting features. The final features are obtained by concatenating the pooling-layer outputs of the ResNet50 model, which yields a concatenated feature vector of size 4096 × 1. The final pooling layers are used because, in each pre-trained CNN model, they tend to gather the features that are most useful for classifying the target class rather than irrelevant features.
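The 4096 × 1 size is consistent with concatenating two 2048-dimensional pooled vectors. The text does not say which two vectors are joined, so the sketch below makes a loudly labeled assumption: it applies global average pooling and global max pooling to one 7x7x2048 feature map and concatenates the results. The helper names and the toy feature values are hypothetical.

```python
def global_pool(feature_map, reduce):
    """Collapse an H x W x C feature map (nested lists) to a length-C vector."""
    channels = len(feature_map[0][0])
    return [
        reduce([pixel[ch] for row in feature_map for pixel in row])
        for ch in range(channels)
    ]


def mean(values):
    return sum(values) / len(values)


def concat_pooled_features(feature_map):
    """ASSUMPTION: average-pooled and max-pooled vectors are concatenated."""
    return global_pool(feature_map, mean) + global_pool(feature_map, max)


# Synthetic 7 x 7 x 2048 feature map standing in for a backbone output.
toy_map = [
    [[float((r + c + ch) % 5) for ch in range(2048)] for c in range(7)]
    for r in range(7)
]
features = concat_pooled_features(toy_map)
print(len(features))
```

If the two vectors instead come from two separate backbones, the same concatenation applies; only the source of each 2048-dimensional half changes.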

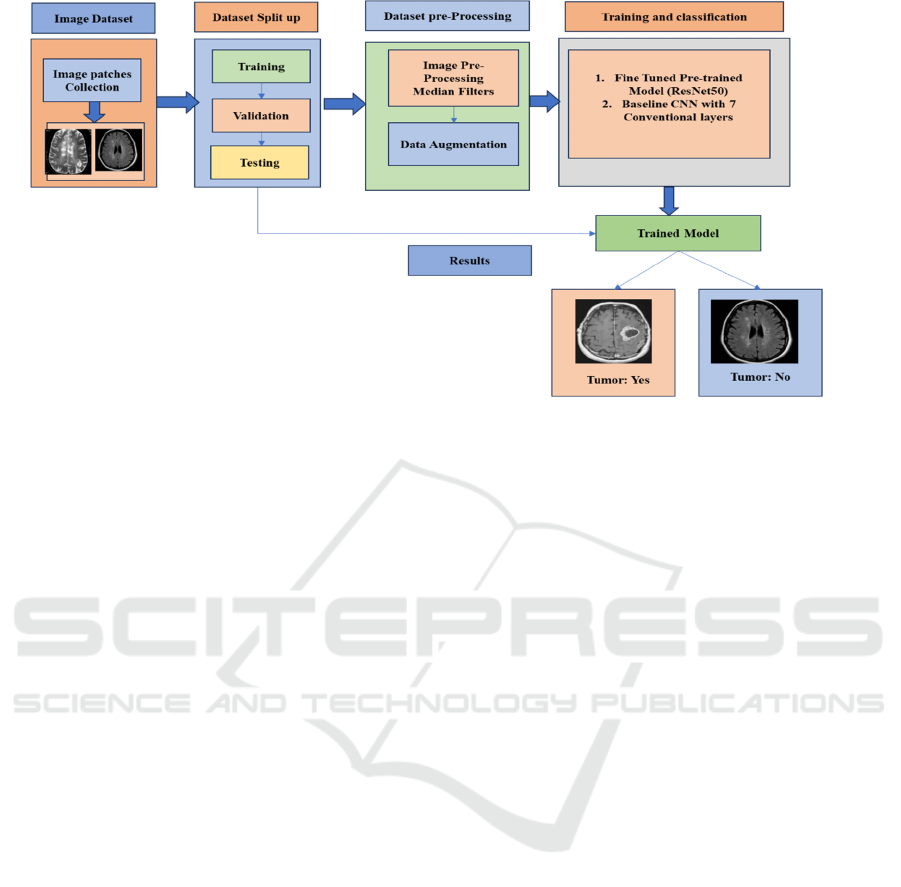

Figure 2 presents the proposed hybrid deep learning model. It classifies radiographic images using two base models and a head model. Concatenating the CNN model outputs with those of ResNet50 results in a single feature vector. The output metrics are then evaluated using the deep neural network classifier. Two pre-trained models are recommended because of their short training times and straightforward structure.


Figure 2: Proposed model for detection of Brain Tumor images

The first layer of the network is an input layer that accepts 224x224 images with three colour channels. A pre-trained ResNet50 model produces 7x7 feature maps with 2048 channels. These features are refined by a Conv2D layer with 16 filters, which adds non-linearity while lowering complexity. A MaxPooling2D layer then reduces the spatial dimensions to 3x3 while maintaining the depth. A second MaxPooling2D layer further decreases the dimensions to 1x1, and another Conv2D layer with 32 filters is applied. A global average pooling layer condenses the spatial data into a 32-dimensional vector. This vector passes through a dense layer with 512 units and then through a dropout layer to avoid overfitting.
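The layer sizes quoted above can be checked with simple shape arithmetic. The sketch below traces tensor shapes through the head in plain Python; it does not build or train a network. Several details are assumptions not stated in the text: convolutions use "same" padding so they keep the spatial size, pooling uses a 2x2 window with stride 2 and no padding, and the second pooling layer is placed before the 32-filter convolution. ResNet50 reduces a 224x224 input by a factor of 32, which gives the 7x7 maps.

```python
def conv2d_same(shape, filters):
    """Conv2D with 'same' padding (assumed): spatial size kept, depth = filters."""
    height, width, _ = shape
    return (height, width, filters)


def max_pool_2x2(shape):
    """2x2 max pooling, stride 2, no padding (assumed): floor-halves H and W."""
    height, width, channels = shape
    return (height // 2, width // 2, channels)


def global_average_pool(shape):
    """Averages over H and W, leaving one value per channel."""
    return (shape[2],)


def dense(shape, units):
    return (units,)


def trace_head(backbone_output=(7, 7, 2048)):
    """Return (layer name, output shape) pairs for the described head."""
    trace = [("ResNet50 feature maps", backbone_output)]
    shape = conv2d_same(backbone_output, 16)
    trace.append(("Conv2D, 16 filters", shape))
    shape = max_pool_2x2(shape)
    trace.append(("MaxPooling2D", shape))
    shape = max_pool_2x2(shape)
    trace.append(("MaxPooling2D", shape))
    shape = conv2d_same(shape, 32)
    trace.append(("Conv2D, 32 filters", shape))
    shape = global_average_pool(shape)
    trace.append(("GlobalAveragePooling2D", shape))
    shape = dense(shape, 512)
    trace.append(("Dense, 512 units", shape))
    trace.append(("Dropout", shape))  # dropout leaves the shape unchanged
    return trace


for name, shape in trace_head():
    print(f"{name:24s} {shape}")
```

Swapping the order of the second pooling layer and the 32-filter convolution gives the same 32-dimensional vector after global average pooling, so the quoted sizes do not distinguish the two orderings.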

4 RESULTS AND DISCUSSIONS

The experiments were conducted in the Google Colab environment, using both CPU and GPU computation. The hardware comprised a Tesla K80 accelerator, a Xeon CPU running at 3.35 GHz, and 20 GB of RAM. Figure 3 shows the accuracy of our model during training and validation, as computed by the Keras callback function. Training and validation accuracy were observed for varying numbers of epochs. We found that the hybrid model reached its maximum training and validation accuracy after 6 epochs.
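Picking the best epoch from per-epoch metrics can be sketched as follows. The dictionary mimics the shape of a training history (one list of values per metric), but the key name `val_accuracy`, the helper `best_epoch`, and the numbers are placeholders for illustration only; they are not measurements from this paper.

```python
def best_epoch(history, metric="val_accuracy"):
    """Return (1-based epoch number, value) where the metric is highest."""
    values = history[metric]
    index = max(range(len(values)), key=values.__getitem__)
    return index + 1, values[index]


# Placeholder values, NOT results from the paper.
toy_history = {
    "accuracy": [0.50, 0.70, 0.80, 0.85],
    "val_accuracy": [0.50, 0.75, 0.70, 0.60],
}
epoch, value = best_epoch(toy_history)
print(epoch, value)
```

Selecting on validation accuracy rather than training accuracy guards against choosing an epoch where the model has started to overfit.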

The training curves show that validation accuracy increases in tandem with training accuracy, and that validation loss decreases as training loss decreases. To improve the results, we could tune hyperparameters such as the learning rate, train the model on more images, or simply train it for more epochs. Using the evaluate() method, we obtain a test accuracy of 97.5 percent.
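Test accuracy is the fraction of test images whose predicted label matches the true label. The sketch below computes it in plain Python; the function name `accuracy` and the toy labels are illustrative assumptions, and the 40-image example is chosen only to show the arithmetic (39 correct out of 40 is 97.5 percent), not to suggest the size of the paper's test set.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("label lists must have the same length")
    if not y_true:
        raise ValueError("label lists must not be empty")
    correct = sum(1 for truth, pred in zip(y_true, y_pred) if truth == pred)
    return correct / len(y_true)


# Toy labels: 0 = no tumor, 1 = tumor (hypothetical, not the paper's data).
toy_true = [0, 1] * 20
toy_pred = list(toy_true)
toy_pred[0] = 1  # flip one prediction so that 39 of 40 are correct
print(accuracy(toy_true, toy_pred))
```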

5 CONCLUSIONS

Because medical images are so diverse, image segmentation is important in medical image processing. We employed MRI scans for brain tumor segmentation. Brain tumor segmentation and classification are the two main uses of MRI. This paper uses CNN models with seven layers to classify images of brain tumors. A hybrid model is introduced that uses the ResNet50 architecture as its foundation. The developed hybrid model has an accuracy of 97.67% and a loss of 0.02%. Several other models were also used to classify the brain tumor images, and the hybrid model achieved the highest accuracy among them. The accuracy of previous architectures, including the classical ResNet architecture, has significantly improved with the release of the upgraded ResNet50 architecture.

REFERENCES

W. Chen, B. Liu, S. Peng, J. Sun, and X. Qiao, “Computer-aided grading of gliomas combining automatic segmentation and radiomics,” Int. J. Biomed. Imaging, 2018.

H. H. Sultan, N. M. Salem, and W. Al-Atabany, “Multi-classification of brain tumor images using deep neural network,” IEEE Access, vol. 7, pp. 69215–69225, 2019.

T. Hossain, F. Shishir, M. Ashraf, M. Al Nasim, and F. Shah, “Brain tumor detection using convolutional neural network,” in Proc. IEEE Int. Conf., 2019, pp. 1–6.

A. K. Anaraki, M. Ayati, and F. Kazemi, “Magnetic resonance imaging-based brain tumor grades classification and grading via convolutional neural networks and genetic algorithms,” Biocybern. Biomed. Eng., vol. 39, no. 1, pp. 63–74, 2019.

F. Özyurt, E. Sert, E. Avci, and E. Dogantekin, “Brain tumor detection based on convolutional neural network with neutrosophic expert maximum fuzzy sure entropy,” Measurement, vol. 147, 2019.

S. Khattar and R. Bajaj, “A Deep Learning Approach of Skin Cancer Classification using Hybrid CNN-Inception Model,” in 2023 Global Conference on Information Technologies and Communications (GCITC), IEEE, Dec. 2023, pp. 1–7.

A. Zotin, K. Simonov, M. Kurako, Y. Hamad, and S. Kirillova, “Edge detection in MRI brain tumor images based on fuzzy c-means clustering,” Procedia Computer Science, vol. 126, pp. 1261–1270, 2018.

H. Greenspan, A. Ruf, and J. Goldberger, “Constrained Gaussian Mixture Model Framework for Automatic Segmentation of MR brain Images,” IEEE Transactions on Medical Imaging, vol. 25, no. 9, pp. 1233–1245, 2018.

M. Havaei, A. Davy, D. Warde-Farley, A. Biard, A. Courville, Y. Bengio, C. Pal, P. M. Jodoin, and H. Larochelle, “Brain tumor segmentation with deep neural networks,” Medical Image Analysis, vol. 35, no. 1, pp. 18–31, 2017.

T. C. Hollon, B. Pandian, A. R. Adapa, E. Urias, A. V. Save, S. S. S. Khalsa, D. G. Eichberg, R. S. D’Amico, Z. U. Farooq, S. Lewis, et al., “Near real-time intraoperative brain tumor diagnosis using stimulated raman histology and deep neural networks,” Nat Med., vol. 26, no. 1, pp. 52–58, 2020.

Goudarzi, H., Pournajaf, K., & Zarrin, M. (2022). "A

F. Muhammad Arif, A. S. Shamsudheen, O. Geman, D. Izdrui, and D. Vicoveanu, “Brain tumor detection and classification by MRI using biologically inspired orthogonal wavelet transform and deep learning techniques,” J. Healthcare Eng., 2022.

