Research on Autonomous Navigation Algorithm of UAV Based on Visual SLAM

Kai Huang¹, Jinming Zhang¹, Wei Zhang¹, Daoyang Xiong¹,*, Weiwei Wang² and Senyu Song¹

¹ Tianjin Richsoft Electric Power Information Technology Co., Ltd, Tianjin, 300000, China

² State Grid Information & Telecommunication Co., Ltd, Beijing, 102200, China

Keywords: Visual SLAM, UAV Autonomous Navigation Algorithm, Drone, Autonomous Navigation.

Abstract: This paper studies a UAV autonomous navigation algorithm based on visual SLAM, with the aim of improving the accuracy of autonomous UAV positioning in complex environments and the UAV's ability to construct maps. An onboard camera and IMU record images and inertial data in real time. ORB features are then extracted and matched, the navigation algorithm estimates the UAV pose from the matches, and Bundle Adjustment refines the estimate. In the indoor environment, closed-loop optimization reduces the pose error from 0.10 m to 0.02 m, and the map reconstruction accuracy reaches 0.03 m. In the outdoor environment, the positioning error falls from 0.15 m to 0.04 m, and the map reconstruction accuracy is 0.05 m. The experiments indicate that the proposed visual SLAM-based autonomous navigation algorithm adapts well to different environments and is robust. This work provides theoretical and practical support for the further development of UAV autonomous navigation technology.

1 INTRODUCTION

Drone technology has developed rapidly, and its applications keep expanding into fields such as military reconnaissance and environmental monitoring (Li, Zhang, et al. 2023). However, the autonomous navigation of current UAVs in complex and dynamic environments still faces many problems, the most serious being limited positioning accuracy and environmental perception. Visual SLAM is an effective autonomous navigation technology (Li, Li, et al. 2024): it uses cameras to capture high-definition image sequences, localizes the vehicle in real time, and builds a map of the environment, and it has become an important aid to UAV autonomous navigation. On this basis, this paper studies a UAV autonomous navigation algorithm based on visual SLAM (Ma, Wang, et al. 2021) and verifies its performance experimentally. ORB feature extraction and matching are used to detect feature points, the algorithm then estimates the pose, and Bundle Adjustment optimizes it (Ukaegbu, Tartibu, et al. 2022), (Wang, Kooistra, et al. 2024). Loop closure detection and closed-loop optimization are combined to improve the positioning accuracy and map consistency of the UAV. The results show that in the indoor environment the pose error is reduced from 0.10 m to 0.02 m and the map reconstruction accuracy reaches 0.03 m. In the outdoor environment the positioning error falls from 0.15 m to 0.04 m and the map reconstruction accuracy reaches 0.05 m, which indicates good practical application value.

The main contributions of this study are as follows. First, it shows that a UAV autonomous navigation algorithm based on visual SLAM can be highly adaptable and robust in different complex environments. Second, the improved method, which mainly combines IMU data with closed-loop optimization, provides theoretical and practical support for the further improvement, optimization, and development of UAV autonomous navigation algorithms. Third, the research offers a reference for subsequent applications of visual SLAM technology in UAV autonomous navigation.

Huang, K., Zhang, J., Zhang, W., Xiong, D., Wang, W. and Song, S.

Research on Autonomous Navigation Algorithm of UAV Based on Visual SLAM.

DOI: 10.5220/0013537400004664

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 3rd International Conference on Futuristic Technology (INCOFT 2025) - Volume 1, pages 157-163

ISBN: 978-989-758-763-4

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

2 RELATED WORKS

The research in this paper builds on the following theoretical basis and related work.

2.1 Theory and Framework of Visual SLAM

Visual SLAM uses cameras to acquire detailed image sequences and combines them with other sensor data, allowing drones to localize themselves within the environment and build detailed maps for subsequent tasks. A visual SLAM system mainly consists of feature extraction and matching, pose estimation, and map updating modules. Common algorithms include LSD-SLAM and ORB-SLAM (Xu, Chen, et al. 2024). Combining visual data with IMU data can improve the positioning accuracy and robustness of UAV autonomous navigation: the visual data provide global information, while the IMU data provide accurate short-term motion estimates. Visual SLAM can also draw on deep learning to enhance key stages such as feature extraction and matching. Because deep networks learn environmental features automatically, they can improve the generalization ability of SLAM-based UAV autonomous navigation algorithms (Youn, Ko, et al. 2021).
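To make the division of labour between the two sensors concrete, the following is a minimal sketch, not the method of any cited system: a one-axis alpha-beta filter in which IMU acceleration propagates the state between camera frames and each visual position fix corrects it. The names `State`, `propagate` and `correct`, the gains `alpha` and `beta`, and the simulated sensor rates and bias are all illustrative assumptions; practical visual-inertial systems use an extended Kalman filter or sliding-window optimization in full 3-D.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class State:
    pos: float = 0.0  # position along one axis (m)
    vel: float = 0.0  # velocity along the same axis (m/s)


def propagate(s: State, accel: float, dt: float) -> State:
    """IMU step: integrate measured acceleration (accurate short-term, drifts if biased)."""
    return State(s.pos + s.vel * dt + 0.5 * accel * dt * dt, s.vel + accel * dt)


def correct(s: State, visual_pos: Optional[float], dt_vis: float,
            alpha: float = 0.3, beta: float = 0.05) -> State:
    """Vision step (alpha-beta update): pull position and velocity toward the visual fix."""
    if visual_pos is None:  # no camera frame at this IMU sample
        return s
    r = visual_pos - s.pos  # innovation: visual position minus predicted position
    return State(s.pos + alpha * r, s.vel + beta * r / dt_vis)


# Stationary UAV, accelerometer with a 0.05 m/s^2 bias; IMU at 100 Hz, camera at 10 Hz.
bias, dt = 0.05, 0.01
imu_only, fused = State(), State()
for k in range(1000):  # 10 s of data
    imu_only = propagate(imu_only, bias, dt)
    fused = propagate(fused, bias, dt)
    fused = correct(fused, 0.0 if k % 10 == 0 else None, dt_vis=10 * dt)
print(f"IMU only: {imu_only.pos:.2f} m, fused: {fused.pos:.2f} m")
```

With the biased accelerometer alone, the position drifts quadratically (about 2.5 m after 10 s), while the 10 Hz visual fixes keep the fused estimate within about a centimetre of the true position.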

2.2 Multi-View and Multi-Modal SLAM

Integrating multiple cameras and other sensors can improve the robustness and accuracy of visual SLAM, and multiple viewpoints provide a wider variety of environmental information. Multimodal fusion also helps SLAM overcome the limitations of any single sensor and improves UAV navigation in complex environments (Zeng, Yu, et al. 2023). Real-time performance and resource optimization are a second concern: visual SLAM algorithms must run on embedded systems with limited resources, so current research focuses on lightweight and efficient implementations, such as SLAM algorithms based on sparse features. These results support the research in this paper and provide a basis for the further development of UAV autonomous navigation algorithms based on visual SLAM.

3 RESEARCH METHODS

3.1 Data Acquisition and Preprocessing

Data acquisition and preprocessing are the first steps of this study. They comprise sensor configuration, environment preparation, data logging, and preprocessing. First, cameras and IMUs are selected and configured for the SLAM-based UAV system. Common camera types are RGB and RGB-D cameras, while the IMU provides acceleration and angular-velocity measurements. The sensors must be mounted rigidly on the UAV and, most importantly, must operate in sync. To this end, they are calibrated to obtain the camera intrinsics (focal lengths and principal-point offsets) and the camera-IMU extrinsic parameters, which ensures high data accuracy (Zhang, Xie, et al. 2023).
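The calibrated parameters are used every time a point is mapped into the image. The sketch below, with made-up calibration values and hypothetical function names, applies the camera-IMU extrinsics (a rotation `R_CB` and translation `T_CB`) and then the intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`) of an ideal pinhole camera; lens distortion, which calibration also estimates in practice, is ignored here.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def body_to_camera(x_b: Vec3, r_cb: Mat3, t_cb: Vec3) -> Vec3:
    """Extrinsics: express an IMU/body-frame point in the camera frame, X_c = R_cb X_b + t_cb."""
    return tuple(sum(r_cb[i][j] * x_b[j] for j in range(3)) + t_cb[i] for i in range(3))


def project(x_c: Vec3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Intrinsics: pinhole projection with focal lengths (fx, fy) and principal point (cx, cy)."""
    x, y, z = x_c
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical calibration: axes aligned, camera 5 cm from the IMU along x.
R_CB = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T_CB = (0.05, 0.0, 0.0)
u, v = project(body_to_camera((0.1, 0.0, 2.0), R_CB, T_CB),
               fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(u, v)
```

The point 2 m in front of the IMU lands at about (357.5, 240) pixels; an error in the extrinsic offset would shift it, which is why calibration matters before visual and inertial data are fused.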

Data collection should take place in an environment with sufficient feature points, either indoors or outdoors, as long as it offers rich texture and geometric features such as walls or building facades (Zhuang, Zhong, et al. 2024). The UAV flight path is then designed to cover the entire environment and to capture a variety of viewpoints. During flight, the complete image sequence from the camera and the IMU data must be recorded in real time so that the data are stable and continuous, and the image and IMU data must be time-synchronized.
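One simple way to synchronize the two streams, assuming both share a common clock up to a known offset, is to resample the higher-rate IMU signal at each image timestamp by linear interpolation. In this sketch the function names and the `time_offset` parameter (the camera-to-IMU clock offset, which would come from calibration) are hypothetical, and the data are synthetic.

```python
from bisect import bisect_left
from typing import List, Sequence


def interpolate_imu(imu_t: Sequence[float], imu_val: Sequence[float], t: float) -> float:
    """Linearly interpolate one IMU channel at time t (IMU timestamps must be sorted)."""
    if not imu_t[0] <= t <= imu_t[-1]:
        raise ValueError("timestamp outside the recorded IMU interval")
    i = bisect_left(imu_t, t)  # first index with imu_t[i] >= t
    if imu_t[i] == t:
        return imu_val[i]
    t0, t1 = imu_t[i - 1], imu_t[i]
    w = (t - t0) / (t1 - t0)
    return (1.0 - w) * imu_val[i - 1] + w * imu_val[i]


def align_to_images(image_t: Sequence[float], imu_t: Sequence[float],
                    imu_val: Sequence[float], time_offset: float = 0.0) -> List[float]:
    """Sample the IMU channel at each image timestamp, shifted by a camera-to-IMU clock offset."""
    return [interpolate_imu(imu_t, imu_val, t + time_offset) for t in image_t]


imu_t = [k * 0.01 for k in range(101)]  # 100 Hz IMU over 1 s
gyro_z = [0.5 * t for t in imu_t]       # synthetic angular rate (rad/s)
frames = [0.0, 0.033, 0.066, 0.1]       # roughly 30 Hz camera timestamps
print(align_to_images(frames, imu_t, gyro_z))
```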

The acquired image and IMU data are then preprocessed. Image preprocessing includes denoising, grayscale conversion, and distortion correction to improve image quality. IMU preprocessing removes noise and drift, and the data are smoothed with a filter before subsequent processing. Finally, the processed data are converted into the input format required by the SLAM algorithm and stored in a database or file system for later algorithm development and testing.
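The paper does not specify which filter is applied to the IMU data. As one plausible reading, the sketch below estimates a constant bias from an initial stationary period and then applies a causal moving-average low-pass filter; the window length, the 100-sample static period and all function names are assumptions made for illustration.

```python
from typing import List, Sequence


def estimate_bias(static: Sequence[float]) -> float:
    """Gyro/accel bias taken as the mean reading while the UAV is stationary."""
    return sum(static) / len(static)


def moving_average(x: Sequence[float], window: int) -> List[float]:
    """Causal moving-average low-pass filter over the last `window` samples."""
    out: List[float] = []
    acc = 0.0
    for i, value in enumerate(x):
        acc += value
        if i >= window:
            acc -= x[i - window]  # drop the sample that left the window
        out.append(acc / min(i + 1, window))
    return out


def preprocess_channel(raw: Sequence[float], static_count: int = 100, window: int = 5) -> List[float]:
    """Remove the static bias, then smooth the high-frequency noise."""
    bias = estimate_bias(raw[:static_count])
    return moving_average([r - bias for r in raw], window)


# Synthetic gyro channel: true rate 0, constant bias 0.02 rad/s, alternating +/-0.01 noise.
raw = [0.02 + (0.01 if k % 2 else -0.01) for k in range(200)]
clean = preprocess_channel(raw)
print(max(abs(c) for c in clean[4:]))
```

After bias removal and a 5-sample window, the residual noise drops from 0.01 rad/s to about 0.002 rad/s in this toy example; a real system would tune the filter to the sensor's noise spectrum.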

3.2 Feature Extraction and Matching

Feature point detection is the key step of feature extraction and matching: it extracts points with distinctive local structure from the image. Commonly used detectors include ORB, SIFT, and SURF; this paper mainly uses ORB. The feature detection process is as follows. (1) FAST corner detection. The FAST detector is used to detect corners, as in Eq. (1).

$$|I_p - I_q| > t \tag{1}$$

In Eq. (1), $I_p$ is the gray value of pixel $p$, $I_q$ is the gray value of a pixel $q$ on the circle around $p$, and $t$ is the threshold.
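Eq. (1) is only the per-pixel brightness test. In FAST, a candidate pixel $p$ is declared a corner when $n$ contiguous pixels on a 16-pixel circle of radius 3 around it pass that test on the same side (all brighter than $I_p + t$, or all darker than $I_p - t$). The pure-Python sketch below implements this segment test on a small image stored as nested lists; $n = 9$ is assumed as a common choice, and the function names are hypothetical. A production system would use an optimized implementation, such as the FAST detector inside OpenCV's ORB.

```python
from typing import List, Sequence, Tuple

# 16-pixel Bresenham circle of radius 3 around the candidate pixel, in order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img: Sequence[Sequence[int]], x: int, y: int, t: int, n: int = 9) -> bool:
    """FAST segment test: n contiguous circle pixels with |I_p - I_q| > t, all on the same side."""
    ip = img[y][x]
    labels = []
    for dx, dy in CIRCLE:
        iq = img[y + dy][x + dx]
        labels.append(1 if iq > ip + t else (-1 if iq < ip - t else 0))  # brighter/darker/similar
    for side in (1, -1):
        run = 0
        for lab in labels + labels:  # doubled list handles arcs that wrap around
            run = run + 1 if lab == side else 0
            if run >= n:
                return True
    return False


def detect_fast(img: Sequence[Sequence[int]], t: int, n: int = 9) -> List[Tuple[int, int]]:
    """Run the test on every pixel far enough from the border for the full circle."""
    h, w = len(img), len(img[0])
    return [(x, y) for y in range(3, h - 3) for x in range(3, w - 3)
            if is_fast_corner(img, x, y, t, n)]


# 9x9 dark image with one bright pixel at (4, 4).
img = [[0] * 9 for _ in range(9)]
img[4][4] = 100
print(detect_fast(img, t=20))  # [(4, 4)]
```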

(2) Feature point filtering. Non-maximum suppression (NMS) is applied to the detected corners to retain only strong features, as in Eq. (2).

$$\mathrm{NMS}(I, p) = I(p) \quad \text{if } I(p) > I(q),\ \forall q \in N(p) \tag{2}$$

In Eq. (2), $\mathrm{NMS}(I, p)$ is the retained strong feature response at point $p$, and $N(p)$ is the neighborhood of point $p$.
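A minimal sketch of Eq. (2) follows. It assumes that each FAST candidate already has a corner score (for example, a strength measure computed from its circle pixels) stored in a 2-D `score` array, and it takes $N(p)$ to be the 3x3 neighbourhood; both choices, and the function name, are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def non_max_suppression(score: Sequence[Sequence[float]],
                        candidates: Sequence[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Keep (x, y) only if its score exceeds every score in its 3x3 neighbourhood N(p), as in Eq. (2)."""
    h, w = len(score), len(score[0])
    kept = []
    for x, y in candidates:
        s = score[y][x]
        neighbours = [score[y + dy][x + dx]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                      if (dx, dy) != (0, 0) and 0 <= y + dy < h and 0 <= x + dx < w]
        if all(s > q for q in neighbours):
            kept.append((x, y))
    return kept


# Two adjacent detections; only the stronger one survives.
score = [[0, 1, 0, 0],
         [1, 5, 4, 0],
         [0, 1, 0, 0]]
print(non_max_suppression(score, [(1, 1), (2, 1)]))  # [(1, 1)]
```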

(3) Feature description. Each detected feature point is converted into a distinctive descriptor for the subsequent matching. ORB uses the binary BRIEF descriptor, given in Eq. (3), and makes it rotation invariant in step (4).

$$\mathrm{BRIEF}(p) = \begin{cases} 1, & \text{if } I(p + d_x) < I(p + d_y) \\ 0, & \text{otherwise} \end{cases} \tag{3}$$

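In Eq. (3), $\mathrm{BRIEF}(p)$ is one bit of the descriptor of point $p$, and $d_x$ and $d_y$ are the offsets of the pair of sampling points compared by that bit; the full descriptor concatenates many such bits.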
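The binary test of Eq. (3) can be sketched as follows. Each bit compares the intensities at $p + d_x$ and $p + d_y$ for one offset pair, and the bits are packed into a Python integer. The 256-pair random sampling pattern and the helper names are assumptions for illustration; ORB itself smooths the patch first and uses a fixed, learned pattern, and the caller must keep the patch inside the image.

```python
import random
from typing import List, Sequence, Tuple

Offset = Tuple[int, int]


def make_pattern(n_bits: int = 256, radius: int = 7, seed: int = 0) -> List[Tuple[Offset, Offset]]:
    """Random test pattern: n_bits pairs (d_x, d_y) of offsets inside a (2*radius+1)^2 patch."""
    rng = random.Random(seed)

    def offset() -> Offset:
        return (rng.randint(-radius, radius), rng.randint(-radius, radius))

    return [(offset(), offset()) for _ in range(n_bits)]


def brief(img: Sequence[Sequence[int]], p: Offset,
          pattern: Sequence[Tuple[Offset, Offset]]) -> int:
    """Bit i = 1 if I(p + d_x) < I(p + d_y) for the i-th pair, else 0 (Eq. (3)); packed into an int."""
    x, y = p
    desc = 0
    for i, ((ax, ay), (bx, by)) in enumerate(pattern):
        if img[y + ay][x + ax] < img[y + by][x + bx]:
            desc |= 1 << i
    return desc


# Horizontal intensity ramp: pixels get brighter to the right.
img = [[x for x in range(31)] for _ in range(31)]
pairs = [((-1, 0), (1, 0)), ((1, 0), (-1, 0)), ((0, -1), (0, 1))]
print(bin(brief(img, (15, 15), pairs)))  # 0b1
print(len(make_pattern()))  # 256
```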

(4) Rotation invariance. The descriptor is further steered by the keypoint orientation so that it is robust to rotation. The orientation is computed as in Eq. (4).

$$\theta = \arctan\frac{I_y}{I_x} \tag{4}$$

In Eq. (4), $\theta$ is the orientation assigned to the feature point, and $I_x$ and $I_y$ are the image gradients in the $x$ and $y$ directions.
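Eq. (4) writes the keypoint angle in terms of the gradients $I_x$ and $I_y$. In the standard ORB formulation the angle is obtained in the same spirit from the intensity centroid of a circular patch, $\theta = \operatorname{atan2}(m_{01}, m_{10})$, where $m_{10}$ and $m_{01}$ are first-order intensity moments. The sketch below uses that moment-based form (a substitution, not the paper's exact equation) together with `atan2` so that the full angular range is resolved. `steer` shows how a sampling offset from Eq. (3) would be rotated by $\theta$; the function names and patch radius are hypothetical.

```python
import math
from typing import Sequence, Tuple


def orientation(img: Sequence[Sequence[float]], p: Tuple[int, int], radius: int = 7) -> float:
    """Keypoint angle from intensity-centroid moments: theta = atan2(m01, m10)."""
    x0, y0 = p
    m10 = m01 = 0.0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dx * dx + dy * dy <= radius * radius:  # circular patch
                value = img[y0 + dy][x0 + dx]
                m10 += dx * value
                m01 += dy * value
    return math.atan2(m01, m10)  # atan2 keeps the full [-pi, pi] range


def steer(offset: Tuple[int, int], theta: float) -> Tuple[int, int]:
    """Rotate one BRIEF sampling offset by theta and snap it back to the pixel grid."""
    dx, dy = offset
    c, s = math.cos(theta), math.sin(theta)
    return (round(c * dx - s * dy), round(s * dx + c * dy))


ramp_x = [[float(x) for x in range(31)] for _ in range(31)]  # brighter to the right
ramp_y = [[float(y)] * 31 for y in range(31)]                # brighter downward
print(orientation(ramp_x, (15, 15)))  # 0.0
print(orientation(ramp_y, (15, 15)))  # approximately 1.5708 (pi/2)
print(steer((1, 0), math.pi / 2))     # (0, 1)
```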

(5) Feature matching. Feature matching compares feature descriptors to establish correspondences between different images [6]. A common method is brute-force matching, expressed by Eq. (5).

$$d(p_i, q_i) = \lVert \mathrm{desc}(p_i) - \mathrm{desc}(q_i) \rVert \tag{5}$$

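In Eq. (5), $d(p_i, q_i)$ is the matching distance between feature points $p_i$ and $q_i$, and $\mathrm{desc}(p_i)$ and $\mathrm{desc}(q_i)$ are their descriptors. For binary descriptors such as ORB, this distance is usually the Hamming distance, i.e. the number of differing bits.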
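For binary descriptors stored as integers, Eq. (5) reduces to an XOR followed by a bit count. The sketch below performs brute-force matching by comparing every descriptor of one image with every descriptor of the other; the function names are hypothetical, and ties are broken in favour of the lowest index. In OpenCV the equivalent matcher is `cv2.BFMatcher` with `cv2.NORM_HAMMING`.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Eq. (5) for packed binary descriptors: the number of differing bits."""
    return bin(a ^ b).count("1")


def brute_force_match(desc_a: Sequence[int], desc_b: Sequence[int]) -> List[Tuple[int, int, int]]:
    """Compare every descriptor in A with every descriptor in B; return (i, j, distance) of each best match."""
    matches = []
    for i, da in enumerate(desc_a):
        j, d = min(((k, hamming(da, db)) for k, db in enumerate(desc_b)), key=lambda m: m[1])
        matches.append((i, j, d))
    return matches


print(brute_force_match([0b1010, 0b1111], [0b0000, 0b1011, 0b1111]))  # [(0, 1, 1), (1, 2, 0)]
```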

(6) Two-way matching and verification. To improve the reliability of feature matching, two-way (cross-check) matching is also applied: a pair is accepted only if each point is the other's best match.
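The two-way check can be sketched as a mutual-nearest-neighbour test: a pair $(i, j)$ is kept only if $j$ is the best match of $i$ and $i$ is also the best match of $j$. The optional `max_dist` threshold and all function names are illustrative assumptions; OpenCV offers the same mutual rule through `cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)`.

```python
from typing import List, Optional, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two packed binary descriptors."""
    return bin(a ^ b).count("1")


def nearest(query: int, candidates: Sequence[int]) -> int:
    """Index of the candidate with the smallest Hamming distance to query."""
    return min(range(len(candidates)), key=lambda k: hamming(query, candidates[k]))


def cross_check_match(desc_a: Sequence[int], desc_b: Sequence[int],
                      max_dist: Optional[int] = None) -> List[Tuple[int, int]]:
    """Two-way matching: keep (i, j) only if j is i's best match in B and i is j's best match in A."""
    pairs = []
    for i, da in enumerate(desc_a):
        j = nearest(da, desc_b)
        if nearest(desc_b[j], desc_a) != i:
            continue  # the match is not mutual, so it is rejected
        if max_dist is not None and hamming(da, desc_b[j]) > max_dist:
            continue  # optional extra check on the distance itself
        pairs.append((i, j))
    return pairs


# Both A-descriptors prefer B[0], but B[0] prefers A[1]: only (1, 0) survives.
print(cross_check_match([0b1010, 0b1011], [0b1011]))  # [(1, 0)]
```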

Pose estimation is a key part of the proposed visual SLAM-based UAV autonomous navigation algorithm. It computes the position and attitude of the drone in space from the matched image feature points. First, the algorithm estimates an initial UAV pose from the matched feature point pairs. Second, the pose is solved from 3D points in space and their corresponding 2D image points, as in Eq. (6).

$$\min_{R,\,t} \sum_i \left\lVert p_i - \pi(R P_i + t) \right\rVert^2 \tag{6}$$

In Eq. (6), $p_i$ are the 2D image points, $P_i$ are the corresponding 3D points, $R$ is the rotation matrix, $t$ is the translation vector, and $\pi$ is the projection function.
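The following sketch evaluates the objective of Eq. (6) for a given rotation and translation, using an ideal pinhole model for $\pi$ with hypothetical intrinsics `K`. It does not solve for $R$ and $t$; a PnP solver (for example OpenCV's `cv2.solvePnP`) or an iterative least-squares method would search for the pose that minimizes this cost. The function names and data are illustrative assumptions.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def project(x_c: Vec3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """pi(.): pinhole projection of a camera-frame point to pixel coordinates."""
    x, y, z = x_c
    return (fx * x / z + cx, fy * y / z + cy)


def transform(r: Sequence[Sequence[float]], t: Vec3, p: Vec3) -> Vec3:
    """R P + t with R given as a 3x3 nested list."""
    return tuple(sum(r[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def reprojection_cost(r: Sequence[Sequence[float]], t: Vec3, points_3d: Sequence[Vec3],
                      points_2d: Sequence[Tuple[float, float]],
                      intrinsics: Tuple[float, float, float, float]) -> float:
    """Objective of Eq. (6): sum over i of ||p_i - pi(R P_i + t)||^2."""
    cost = 0.0
    for P_i, (u, v) in zip(points_3d, points_2d):
        pu, pv = project(transform(r, t, P_i), *intrinsics)
        cost += (u - pu) ** 2 + (v - pv) ** 2
    return cost


K = (500.0, 500.0, 320.0, 240.0)  # hypothetical fx, fy, cx, cy
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
P = [(0.0, 0.0, 2.0), (1.0, 0.0, 4.0)]
p = [(320.0, 240.0), (445.0, 240.0)]  # observations consistent with R = I, t = 0
print(reprojection_cost(I3, (0.0, 0.0, 0.0), P, p, K))   # 0.0
print(reprojection_cost(I3, (0.02, 0.0, 0.0), P, p, K))  # about 31.25
```

A 2 cm error in the translation already produces several pixels of reprojection error here, which is why minimizing this cost constrains the pose tightly.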

Third, the pose is optimized with Bundle Adjustment (BA). BA refines the initial estimate by jointly adjusting the camera poses of all views and the positions of the 3D points they observe so that the reprojection error of the image points is minimized, which improves the accuracy of pose estimation [7].
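Written in the notation of Eq. (6), the bundle adjustment step described above can be sketched as the joint problem below, where $j$ indexes camera views, $i$ indexes 3D points, $p_{ij}$ is the observation of point $i$ in view $j$, and $\mathcal{V}_j$ (a symbol introduced here) is the set of points visible in view $j$. This is the standard textbook form; the paper does not state which solver, robust loss, or parameterization it uses.

$$\min_{\{R_j,\,t_j\},\,\{P_i\}} \sum_{j} \sum_{i \in \mathcal{V}_j} \left\lVert p_{ij} - \pi\left(R_j P_i + t_j\right) \right\rVert^2$$

Unlike Eq. (6), which holds the 3D points fixed and solves for a single pose, both the poses and the point positions are unknowns here; such problems are typically solved with sparse Levenberg-Marquardt methods, as provided by libraries such as g2o or Ceres Solver.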


3.3 Build a Map

Map construction is a critical step of the UAV autonomous navigation algorithm based on visual SLAM. It integrates the feature information of the environment into a single, complete map model, which makes the autonomous navigation of the drone more accurate. First, the detected feature points and the initial pose estimate are used to build an initial sparse point cloud map [8]. As the drone moves, the SLAM system continuously detects and matches new feature points. Whenever a new viewpoint and new feature points are obtained, the map is updated to reflect the new observations: new feature points are added and the positions of existing ones are adjusted. When the drone returns to a location it has already passed, the SLAM system's loop closure detection recognizes the area as previously visited, generally by matching feature points. Once a loop is detected, the system automatically performs closed-loop optimization, adjusting every feature point and pose in the map through global optimization. This eliminates the accumulated error and keeps the map consistent and accurate. The algorithm can also help the drone build a denser, more three-dimensional representation of its surroundings, which makes navigation more precise. Finally, for efficient management, the system automatically stores the map data in a dedicated file or database and compresses and indexes it as needed to ensure fast access.
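In a full SLAM system the correction after loop detection is a global optimization, usually over a pose graph. As a deliberately simplified stand-in, and not the paper's method, the sketch below spreads the 2-D position drift measured at the loop closure linearly over the frames of the loop, so that the revisited position coincides with the original one. The function name and the toy trajectory are hypothetical; map points attached to each corrected frame would be moved in the same way.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def close_loop(traj: List[Point], loop_start: int, loop_end: int) -> List[Point]:
    """Spread the drift detected at loop_end linearly back over frames loop_start..loop_end.

    Assumes loop detection has established that frame loop_end revisits the place
    of frame loop_start, so the two positions should coincide after correction.
    """
    if not 0 <= loop_start < loop_end < len(traj):
        raise ValueError("invalid loop indices")
    ex = traj[loop_end][0] - traj[loop_start][0]
    ey = traj[loop_end][1] - traj[loop_start][1]
    n = loop_end - loop_start
    corrected = list(traj)
    for k in range(loop_start, len(traj)):
        w = min(k - loop_start, n) / n  # 0 at the loop start, 1 from the loop end onward
        corrected[k] = (traj[k][0] - w * ex, traj[k][1] - w * ey)
    return corrected


# A square flight that should end where it started but has drifted by (0.4, 0.2) m.
odometry = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0), (0.4, 0.2)]
print(close_loop(odometry, loop_start=0, loop_end=4))
```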

4 RESULTS AND DISCUSSION

4.1 Experimental Content

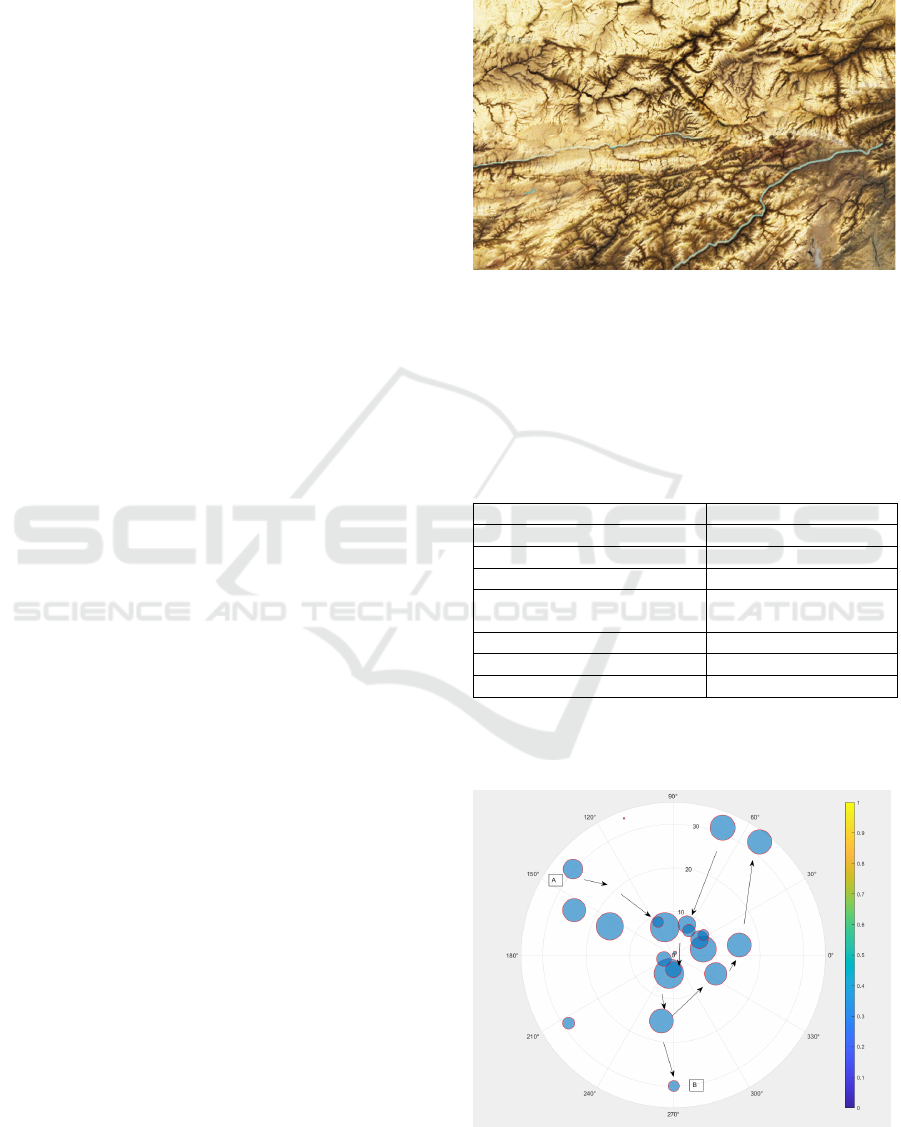

Experimental environment. To test the performance of the algorithm in different scenarios, experiments were carried out in two environments rich in feature points, one indoor and one outdoor. A high-definition RGB camera and an IMU sensor were mounted on the drone with synchronized data acquisition, and the test range was 12 × 36 km, as shown in Figure 1.

Analysis of the test range shows that it contains many obstacles and complex terrain, which makes the task relatively difficult: the drone must detect and account for the obstacles in order to plan and test its route in all directions.

Figure 1: UAV test range

4.2 Path Planning Results for Autonomous Navigation

The results of this experiment are shown in Table 1,

Table 2 and Table 3.

Table 1: Indoor environment experimental results

| Item | Value |
|---|---|
| Path Length (m) | 50 |
| Initial Pose Error (m) | 0.05 |
| Final Pose Error (m) | 0.02 |
| Map Reconstruction Accuracy (m) | 0.03 |
| Loop Closure Detections | 3 |
| Pre-Loop Closure Error (m) | 0.10 |
| Post-Loop Closure Error (m) | 0.02 |

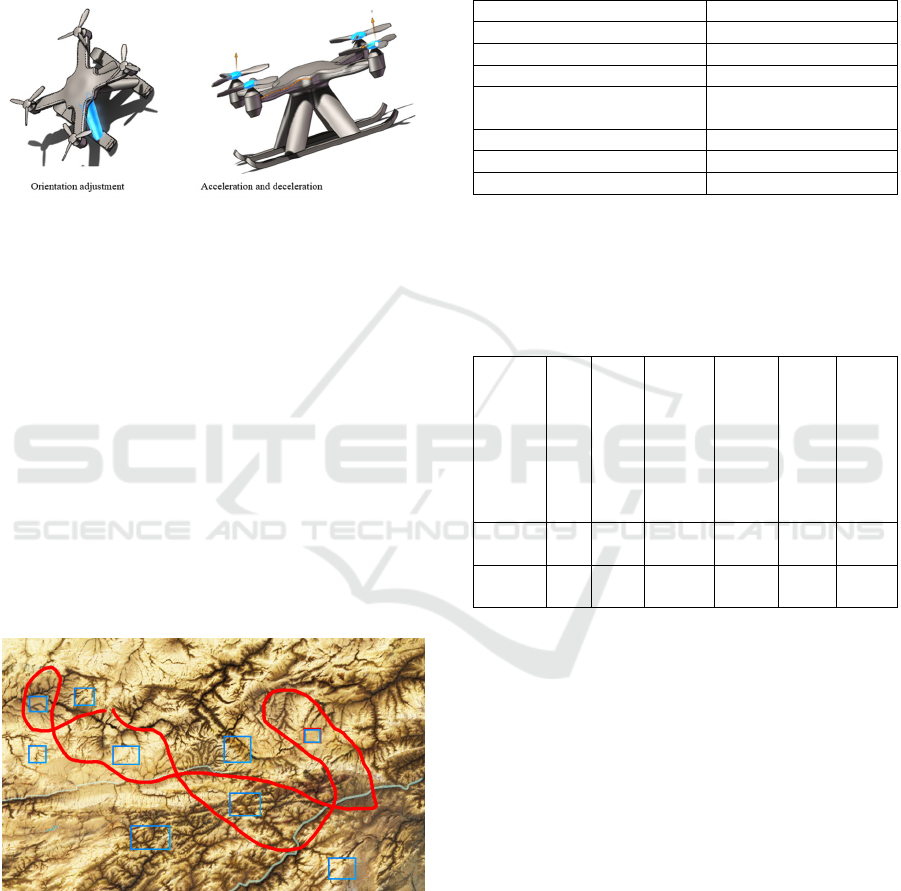

The overall change in path planning is shown in

Figure 2.

Figure 2: Path change of the UAV


Throughout the flight, the UAV's heading and steering are generally good, so it behaves as expected and reaches the final goal. During the flight, the drone adjusts its heading according to the planned direction in order to reach its targets while avoiding external disturbances and obstacles.

Figure 3: Navigation adjustment of the UAV

Using its heading and its own acceleration, the UAV reaches the expected waypoints and adjusts its overall direction, so that the flight as a whole follows the plan. Considering the overall motion of the UAV and the orientation estimates provided by the SLAM design, the results are relatively good and meet the expected requirements overall.

4.3 SLAM Obstacle Avoidance Results of UAVs

In the test map, the UAV performs path planning, successfully bypasses the obstacles, and completes the video inspection of the test range, as shown in Figure 4.

Figure 4: Autonomous navigation and inspection of UAVs

Figure 4 shows that the UAV can avoid obstacles and adjust its heading according to its own attitude, completing the autonomous planning of the entire navigation task. During testing, the navigation plan remained largely intact, and the UAV finally reached points close to the expected targets, completing the overall test. Obstacle points must be avoided during path planning; the quantitative results of the outdoor experiment are given in Table 2.

Table 2: Outdoor environment experimental results

| Item | Value |
|---|---|
| Path Length (m) | 200 |
| Initial Pose Error (m) | 0.08 |
| Final Pose Error (m) | 0.05 |
| Map Reconstruction Accuracy (m) | 0.05 |
| Loop Closure Detections | 5 |
| Pre-Loop Closure Error (m) | 0.15 |
| Post-Loop Closure Error (m) | 0.04 |

Some error nevertheless remains during the avoidance process. The errors in both environments are summarized in Table 3.

Table 3: Summary of experimental results

| Environment | Initial Pose Error (m) | Final Pose Error (m) | Map Reconstruction Accuracy (m) | Loop Closure Detections | Pre-Loop Closure Error (m) | Post-Loop Closure Error (m) |
|---|---|---|---|---|---|---|
| Indoor | 0.05 | 0.02 | 0.03 | 3 | 0.10 | 0.02 |
| Outdoor | 0.08 | 0.05 | 0.05 | 5 | 0.15 | 0.04 |

4.4 Overall Changes in Autonomous Navigation

Several conclusions can be drawn from the analysis. (1) High positioning accuracy. Tables 1, 2 and 3 show that in both experimental environments, closed-loop optimization substantially reduces the pose error of the visual SLAM UAV autonomous navigation algorithm: the loop-closure error falls by 80% indoors (from 0.10 m to 0.02 m) and by about 73% outdoors (from 0.15 m to 0.04 m), which confirms that closed-loop optimization improves positioning accuracy. (2) Map reconstruction accuracy. The algorithm builds high-precision maps in both indoor and outdoor environments; the indoor reconstruction error (0.03 m) is 0.02 m lower than the outdoor one (0.05 m), possibly because indoor feature points are more stable. (3) Loop closure detection and closed-loop optimization. The experiments show that loop closure detection is triggered successfully at multiple positions (3 times indoors and 5 times outdoors), and that the subsequent closed-loop optimization significantly reduces the accumulated error and improves the consistency and accuracy of the map. Overall, the algorithm provides good autonomous navigation performance for UAVs in different environments, with accurate positioning and high-quality map construction, and can be applied in a variety of scenarios.
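The relative reductions quoted for loop closure follow directly from the pre- and post-loop-closure errors in Table 3. A short calculation (the helper name is illustrative) reproduces them:

```python
# (pre-loop-closure error, post-loop-closure error) in metres, copied from Table 3
results = {"Indoor": (0.10, 0.02), "Outdoor": (0.15, 0.04)}


def relative_reduction(before: float, after: float) -> float:
    """Percentage by which an error shrinks from `before` to `after`."""
    return 100.0 * (before - after) / before


for env, (pre, post) in results.items():
    print(f"{env}: {pre - post:.2f} m lower, {relative_reduction(pre, post):.1f}% reduction")
# Indoor: 0.08 m lower, 80.0% reduction
# Outdoor: 0.11 m lower, 73.3% reduction
```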

5 CONCLUSIONS

This paper presents a visual SLAM-based UAV autonomous navigation algorithm that performs well in all the tested respects. The main conclusions are as follows:

First, the algorithm is highly adaptable and robust. In the indoor environment, closed-loop optimization greatly reduces the pose error, from 0.10 m to 0.02 m, and the map reconstruction accuracy is 0.03 m. In the outdoor environment, the positioning error is also significantly reduced, from 0.15 m to 0.04 m, and the map accuracy reaches 0.05 m. The algorithm therefore adapts well to different complex conditions while achieving high positioning accuracy and strong map construction ability.

Second, the algorithm effectively eliminates the accumulated error of UAV autonomous navigation through loop closure detection and closed-loop optimization. In the experiments, loop closure detection was triggered successfully 3 times in the indoor (laboratory) test and 5 times in the outdoor test, and each successful detection made the constructed map more consistent and accurate. This shows that the visual SLAM-based algorithm handles repeated paths well and maintains stable navigation.

Third, the algorithm ensures flight stability and accurate navigation of the UAV in different scenarios and can reduce labor costs. Combining IMU data, visual SLAM, and UAV technology greatly improves the robustness and real-time performance of the system in dynamic environments, which ensures flight quality and better navigation in complex scenes.

ACKNOWLEDGEMENT

State Grid Information and Communication Industry Group Independent Investment Project, Research and Application of Key Technologies for the Support System of Large-Scale Promotion of UAV Based on Integration Technology (546810230006).

REFERENCES

Li, D. D., Zhang, F. B., Feng, J. X., Wang, Z. J., Fan, J. H., Li, Y., et al. (2023). LD-SLAM: A Robust and Accurate GNSS-Aided Multi-Map Method for Long-Distance Visual SLAM. Remote Sensing, 15(18), 22.

Li, Z. Y., Li, H. G., Liu, Y., Jin, L. Y., & Wang, C. Q. (2024). Indoor fixed-point hovering control for UAVs based on visual inertial SLAM. Robotic Intelligence and Automation, 2(3), 22.

Ma, Y. F., Wang, S. X., Yu, D. L., & Zhu, K. H. (2021). Robust visual-inertial odometry with point and line features for blade inspection UAV. Industrial Robot: The International Journal of Robotics Research and Application, 48(2), 179-188.

Ukaegbu, U. F., Tartibu, L. K., & Lim, C. W. (2022, Oct 30-Nov 03). Supervised and Unsupervised Deep Learning Applications for Visual SLAM: A Review. Paper presented at the ASME International Mechanical Engineering Congress and Exposition (IMECE), Columbus, OH, 2(3), 32.

Wang, K. W., Kooistra, L., Pan, R. X., Wang, W. S., & Valente, J. (2024). UAV-based simultaneous localization and mapping in outdoor environments: A systematic scoping review. Journal of Field Robotics, 41(5), 1617-1642.

Xu, J. Q., Chen, Z., Chen, J., Zhou, J. Y., & Du, X. F. (2024). A precise localization algorithm for unmanned aerial vehicles integrating visual-internal odometry and cartographer. Journal of Measurements in Engineering, 12(2), 284-297.

Youn, W., Ko, H., Choi, H., Choi, I., Baek, J. H., & Myung, H. (2021). Collision-free Autonomous Navigation of A Small UAV Using Low-cost Sensors in GPS-denied Environments. International Journal of Control Automation and Systems, 19(2), 953-968.

Zeng, Q. X., Yu, H. N., Ji, X. F., Tao, X. D., & Hu, Y. X. (2023). Fast and Robust Semidirect Monocular Visual-Inertial Odometry for UAV. IEEE Sensors Journal, 23(20), 25254-25262.

Zhang, H. H., Xie, C., Toriya, H., Shishido, H., & Kitahara, I. (2023). Vehicle Localization in a Completed City-Scale 3D Scene Using Aerial Images and an On-Board Stereo Camera. Remote Sensing, 15(15), 23.

Zhuang, L. C., Zhong, X. R., Xu, L. J., Tian, C. B., & Yu, W. S. (2024). Visual SLAM for Unmanned Aerial Vehicles: Localization and Perception. Sensors, 24(10), 92.
