MMSIA: Towards AI Systems Maturity Assessment

Rubén Márquez Villalta 1,2 a, Javier Verdugo Lara 1,2 b, Moisés Rodríguez Monje 1,2 c and Mario Piattini Velthuis 1,2 d

1 AQCLab Software Quality, C/ de Moledores s/n, 13071 Ciudad Real, Spain

2 Instituto de Tecnologías y Sistemas de Información (UCLM), Paseo Universidad, 4, 13071 Ciudad Real, Spain

Keywords:

Maturity Model, Artificial Intelligence, Software Processes, ISO/IEC 33000, ISO/IEC 5338.

Abstract:

The emergence of artificial intelligence (AI) has caused a technological revolution in society in recent years, and a growing number of companies across a range of industries are adopting or creating systems that use this technology. This rise has created an immediate demand for quality standards that guarantee sound processes for these systems. Based on several international standards, including ISO/IEC 5338 as a reference model for AI processes and the ISO/IEC 33000 family of standards to establish a software process assessment and maturity model, this paper presents an Artificial Intelligence Software Maturity Model (called MMSIA). The main objective of the MMSIA model is to give businesses that create AI systems a framework for evaluating and continuously improving the software processes used to create these kinds of systems, which in turn should raise the quality of AI applications.

1 INTRODUCTION

Artificial Intelligence (AI) has become a fundamental technology in today’s society: it is increasingly common to see people using tools based on AI techniques in their daily lives. In recent years, this discipline has made its way into different areas of society, such as medicine (Wang and Preininger, 2019); business decision-making and data analysis (Enholm et al., 2022); or the automation of repetitive and tedious jobs in general (Ribeiro et al., 2021).

The new digital transformation that AI is driving

and the impact it has had on the world is remarkable,

even being compared to the industrial or digital rev-

olutions, as, in these years, AI is changing aspects

of our society in a radical way (Makridakis, 2017).

Moving to the business world, more and more orga-

nizations are looking to use AI to gain an advantage

over their competitors and gain value. Looking ahead,

it is expected that by 2030, AI could contribute $15.7

trillion to the global economy, equivalent to a 14%

increase in global GDP (Anand and Verweij, 2017).

a https://orcid.org/0000-0002-1907-1701

b https://orcid.org/0000-0002-2526-2918

c https://orcid.org/0000-0003-2155-7409

d https://orcid.org/0000-0002-7212-8279

Moreover, 55% of organizations have incorporated AI into one of their processes (Bughin et al., 2018). Similarly, 59% of the companies that have incorporated AI systems into their processes in recent years have increased their revenues, and 42% have seen a decrease in their costs. Furthermore, AI-related publications in scientific journals have increased by 4.5% in recent years, and conference publications by 30.2% (Maslej et al., 2024).

However, as in other technological areas, the de-

velopment of AI software tools involves certain par-

ticularities that present additional risks and challenges

to those already found in traditional software devel-

opment, and that need to be addressed and taken into

account throughout these developments. Studies such

as the European Commission’s White Paper On Artifi-

cial Intelligence - A European approach to excellence

and trust (European Commission, 2020) already ad-

dress the concern for establishing regulatory frame-

works and standards that promote responsible and

quality development in the field of AI. It is worth not-

ing that different institutions are already focusing on

mitigating this lack of regulation. Such is the case of

the European Union (EU) with the proposed compre-

hensive regulation on AI in the EU AI Act (European

Commission, 2021).

Although advances in AI regulation are yielding

Villalta, R. M., Lara, J. V., Monje, M. R., Velthuis and M. P.

MMSIA: Towards AI Systems Maturity Assessment.

DOI: 10.5220/0013523100003964

In Proceedings of the 20th International Conference on Software Technologies (ICSOFT 2025), pages 273-280

ISBN: 978-989-758-757-3; ISSN: 2184-2833

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)


results, there is a critical gap in creating frameworks

directly focused on companies engaged in the devel-

opment of AI systems to assist them in their develop-

ment processes. While research to support organiza-

tions in these processes is scarce, certain advances are

beginning to emerge, such as the recent publication of

the ISO/IEC 5338 (ISO, 2023c) standard. This stan-

dard aims to identify and define a reference model for

process improvement in the field of AI.

Therefore, this paper presents the Artificial Intel-

ligence Software Maturity Model (MMSIA with its

acronym in Spanish), based on the ISO/IEC 33000

(ISO, 2015a) family of standards and using the recent

ISO/IEC 5338 standard as a process reference model.

This model has been developed with the objective that

it can be incorporated in organizations (either in spe-

cific projects or in the global organization) to improve

the quality of the processes necessary in the develop-

ment of AI systems and, in this way, to obtain systems

with a sufficiently high level of quality to satisfy the

requirements of the client and with appropriate effi-

ciency.

The rest of the document is organized as follows. The next section provides more detail on the context of this work. Section

3 provides more details about the new ISO/IEC 5338

standard, on which the model presented is based. Sec-

tion 4 describes the proposed MMSIA model, indicat-

ing the processes involved, the maturity levels and the

phases of which it is composed. Finally, the last sec-

tion presents lessons learned, conclusions and future

work.

2 CONTEXT

In order to provide a set of best practices, guide-

lines, and norms that assist companies in managing

and improving the quality of their software products

and guaranteeing customer satisfaction, a number of

standards, frameworks, and models have emerged in

the literature in recent years.

There are two distinct aspects of software qual-

ity: processes and products. In the case of advances

in quality improvement focused on AI products, the

ISO/IEC 25059 (ISO, 2023b) standard modifies four

of the eight essential characteristics that a quality soft-

ware product must have as established in ISO/IEC

25010 (ISO, 2023a). Also, this standard introduces

new important sub-characteristics to be taken into ac-

count in AI products, such as transparency, functional

adaptability or interoperability. Additionally, other

works, like (Oviedo et al., 2024) have made progress

to improve the quality of AI products through the de-

velopment of an environment for assessing the suit-

ability of AI systems.

Nevertheless, there is a direct correlation between

processes and product, so that if the quality of the

software processes used for the development of AI

systems were improved, they would produce quality

products (Pino et al., 2006). The activities, methods,

procedures, and tools applied to create and maintain

the final product constitute these processes. In light of

this, companies that employ a software process-based

strategy concentrate on the ongoing enhancement of

their processes, which requires the accurate definition

and identification of activities, roles, inputs, and out-

puts (Oktaba and Piattini, 2008).

Published standards and norms aimed at improving the quality of AI system life cycle processes are scarce, although organizations such as the International Organization for Standardization (ISO) have started to define a unified standard for AI processes. However, it is still common to apply to the AI field standards, frameworks and models that focus on improving the processes used in traditional software development. Some of these standards or models are the following:

• Capability Maturity Model Integration (CMMI) v3.0 (CMMI Institute, 2023): Defines a process assessment framework that helps organizations, including those beyond software engineering, to understand their current level of capability and performance, providing a roadmap for optimizing business outcomes.

• ISO/IEC/IEEE 12207 (ISO, 2017): Establishes

a process reference model focusing on life cycle

processes for the development and maintenance

of traditional software systems.

• ISO/IEC 33000 family of standards (ISO, 2015a):

Provides a framework for software process assess-

ment and continuous process improvement, re-

placing ISO/IEC 15504, better known as Software

Process Improvement and Capability dEtermina-

tion (SPICE).

• Maturity Model for Software Engineering

(MMIS) v2.0 (Rodriguez et al., 2021): Proposes

a framework for assessing and improving the

quality of development processes, in accordance

with ISO/IEC 33000 and ISO/IEC 12207.

• SMMT maturity model (Sonntag et al., 2024):

Presents a maturity model designed to assess the

ability of manufacturing companies to incorporate

AI tools into their processes to optimize their op-

erations.

• AI and Big Data Maturity Model (Fornasiero

et al., 2025): Proposes a maturity model to eval-


uate the capacity of industry companies in the

adoption and management of AI and Big Data so-

lutions within their operations, with the objective

of optimizing their processes and decision mak-

ing.

The models found in the literature, such as the last

two, are focused on companies that want to incor-

porate AI-related tools or solutions in some of their

processes, and not on companies that develop those

AI solutions and want to improve their development

life cycle processes. Other standards and models, such as CMMI or MMIS, are widely used in software development; nevertheless, the field of AI software requires some specialization. This domain involves a much more specific set of tasks, roles and processes that need to be addressed directly in order to improve development. Examples include new roles such as data engineer or data scientist, owing to the great importance of data in the creation of such systems, and new development activities such as data extraction, preparation, documentation, monitoring and maintenance. These activities must be taken into account because, if they are not well implemented, they can lead to risks and challenges (Sugali, 2021), such as incorrect partitioning of training and test sets, incomprehensible AI models, overfitting, the absence of good measures of effectiveness, or documentation problems. These issues have to be addressed at the process level in order to anticipate them.

In relation to the aforementioned situation, it is

important that organizations dedicated to the devel-

opment of AI systems focus on the improvement and

correct definition of the processes used for the devel-

opment of these systems. Thus, ISO has recently pub-

lished a standard known as ISO/IEC 5338 “AI sys-

tem life cycle processes” (ISO, 2023c), which estab-

lishes the bases and good practices of the necessary

processes that organizations must follow for the development of AI systems. ISO/IEC 5338 has been designed to serve as an AI process reference model. It provides a suite of processes designed to assist in defining, controlling, managing, implementing and improving AI systems. The AI systems to which the standard refers are those that use Machine Learning and/or Heuristic Systems, that is, systems that make explicit use of data or expert knowledge to learn models and thereby infer the required knowledge.

ISO/IEC 5338 is the result of the consolidation of

other more general standards, such as ISO/IEC/IEEE

15288 “System life cycle processes” (ISO, 2023d)

and ISO/IEC/IEEE 12207 “Software life cycle pro-

cesses” (ISO, 2017), whose processes have been in-

tegrated for this new standard. In addition, other

standards more specific to the field of AI have also

been integrated, such as ISO/IEC 22989 “Artificial

intelligence concepts and terminology” (ISO, 2022a)

and ISO/IEC 23053 “Framework for Artificial Intelli-

gence (AI) Systems Using Machine Learning (ML)”

(ISO, 2022b), which define concepts specific to AI

development and a framework describing the compo-

nents and functions involved in the development of AI

systems, respectively. The latter two standards have

been used to incorporate AI-specific processes and to

modify existing processes in ISO/IEC/IEEE 12207 by

incorporating key aspects and concepts from this area.

In detail, ISO/IEC 5338 defines a total of 33 processes. As in ISO/IEC/IEEE 12207, these processes are grouped into four large groups according to a set of aspects that organizations developing AI systems must take into account. These aspects range from the management of the different elements involved in development to support activities, including the evaluation of the performance of the processes themselves. The result is a classification of processes based on the organizational objectives they serve, giving rise to:

• Agreement Processes: to secure an agreement be-

tween two organizations with the objective of pro-

viding an AI product or service.

• Organizational Project-Enabling Processes: to

follow a process-oriented approach which guides

projects and provides the resources and infrastruc-

ture needed to achieve the objectives.

• Technical Management Processes: to provide the

resources, establish the plans, monitor, evaluate

and manage the progress of the implementation

of a project.

• Technical Processes: to define the requirements

and needs of the client, and to transform them into

the design, development and testing of the final

product.

Although this standard establishes a process ref-

erence model for AI software, it is necessary to ana-

lyze which are the particularities offered in relation to

those already implemented for traditional software.

3 DIFFERENCES BETWEEN

ISO/IEC 5338 STANDARD AND

ISO/IEC 12207

One of the first steps in the construction of the matu-

rity model for AI systems has been the analysis and


subsequent comparison of the ISO/IEC 5338 stan-

dard, focused on the best practices and processes nec-

essary for the development of systems under an AI life

cycle, and the ISO/IEC/IEEE 12207 standard, more

focused on a traditional software development envi-

ronment and whose processes extend to the AI stan-

dard mentioned above.

Through this analysis, the changes, extensions and

reductions introduced by this new standard have been

verified in order to define the MMSIA model. Thus,

by focusing in detail on the types of modifications

that have been carried out for the creation of ISO/IEC

5338, 3 types of processes can be distinguished:

• Generic Processes [G], for those processes that

have not undergone specific modifications as a re-

sult of their incorporation in the AI field and are

used entirely as defined in ISO/IEC/IEEE 15288

and ISO/IEC/IEEE 12207.

• Modified Processes [M], for those processes that

have undergone changes, additions or deletions in

their elements (purpose, outcomes or activities) in

order to adjust them to the AI environment, since

certain specific particularities of that field must be

taken into account.

• AI-Specific Processes [AI], for those processes

with specific AI characteristics, which have been

expressly defined for this standard.

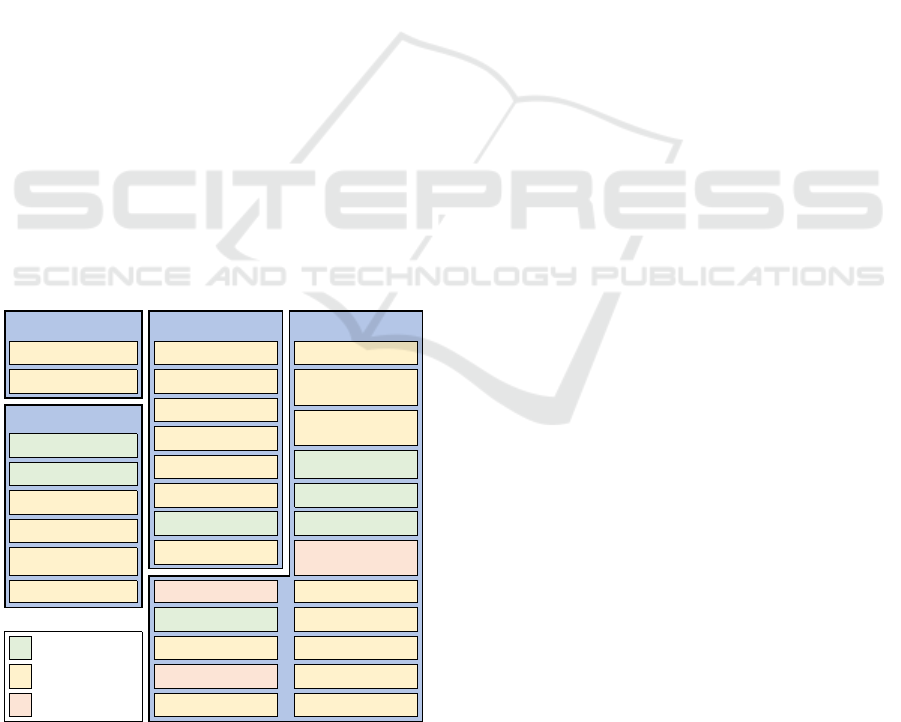

Figure 1 shows a summary of the processes included in ISO/IEC 5338, grouped by scope and identifying those that have been modified.

• Agreement processes: Acquisition process; Supply process.

• Organizational project-enabling processes: Life cycle model management process; Infrastructure management process; Portfolio management process; Human resource management process; Quality management process; Knowledge management process.

• Technical management processes: Project planning process; Project assessment and control process; Decision management process; Risk management process; Configuration management process; Information management process; Measurement process; Quality assurance process.

• Technical processes: Business or mission analysis process; Stakeholder needs and requirements definition process; System requirements definition process; Architecture definition process; Design definition process; System analysis process; Knowledge acquisition process; AI data engineering process; Implementation process; Integration process; Verification process; Transition process; Validation process; Continuous validation process; Operation process; Maintenance process; Disposal process.

Legend: Generic Processes [G], Modified Processes [M], AI-Specific Processes [AI].

Figure 1: Software lifecycle process groups according to ISO/IEC 5338.

Three processes have been created specifically for this AI standard:

• Knowledge acquisition process: Knowledge of

the domain and the problem are essential in AI

systems, so this process seeks to provide the nec-

essary knowledge for the development of models

used by these systems. The results of this process

are the correct identification, storage and trace-

ability of the knowledge with the model. In addi-

tion, activities such as the definition of the scope,

the search for knowledge sources or the perfor-

mance of knowledge acquisition tasks about the

domain and the problem are included.

• AI data engineering process: The objective of this

process is to ensure that the data can be used in the

model. Some outputs of this process are the ac-

quisition of data sets, the correct formatting and

preparation of training and test data, the provi-

sion of metadata for data documentation, trace-

ability and maintenance, or the identification of

automated processes for data extraction or pro-

cessing. Some of the activities included in the

process are acquisition or selection, labeling, dig-

itization, quality analysis, documentation, clean-

ing and preparation, and data protection.

• Continuous validation process: Its objective is to verify that the AI models continue to work correctly once the system has gone into production, since the behavior of a model or its performance may be affected over time. The results of the process are the addition of a validation record and the decision to perform model retraining. This includes a set of activities such as monitoring data drift, model performance or any other aspect that affects the model and may change over time.

As for the other 30 processes, 7 have not been modified with respect to the original standards, so they can be applied directly in this field. The remaining 23 have undergone modifications in some of their aspects (purpose, results, activities or tasks) to adjust them to the particularities of the field of AI, so it has been necessary to analyze and study the impact of these changes. Some of these modifications are due to the importance of data and knowledge in an AI project, such as the additional problems that data acquisition brings to the acquisition process or the importance of dataset quality in the quality management process. It is also important to highlight that new roles are introduced in this field, such as data scientists, which must be considered in the human resource management process, and that new phases are included in the development of AI systems, such as algorithm selection or model training, which are particularly important in the implementation process.

Consequently, a reference model of processes for

creating high-quality AI systems is established. Nev-

ertheless, a model designed to assist enterprises in

creating AI systems in assessing and continuously en-

hancing these specified processes involved in their de-

velopment is required.

4 MMSIA, A MATURITY MODEL

FOR AI PROCESS

ASSESSMENT

Once the analysis of the ISO/IEC 5338 standard has been carried out, the Artificial Intelligence Software Maturity Model (MMSIA, from its acronym in Spanish) has been defined. Through this model, companies dedicated to developing AI systems can evaluate and improve their software processes at both the project and organizational levels. The model is based on the following standards:

• ISO/IEC 5338: The new ISO standard that

presents a reference model for AI system lifecy-

cle processes.

• ISO/IEC 33000 family of standards: The latest

version of the existing ISO standards for deter-

mining process capability and organizational ma-

turity.

Based on ISO/IEC 33004 (ISO, 2015b), it is deter-

mined that for the defined MMSIA model, a domain

of the reference model is specified that represents the

processes that are considered fundamental for the de-

velopment of AI systems by a small AI development

organization (although it can be applied to any type of

organization), from the conception of the need to the

construction and production of the system that satis-

fies that need.

The process reference model provides a structured

collection of processes useful for the development of

AI systems, together with best practices that describe

the characteristics of these processes. For the cre-

ation of the AI system lifecycle reference model for

the MMSIA model, the processes described in the

ISO/IEC 5338 standard were used as a basis.

Several of the processes proposed by ISO/IEC 5338, such as the operation process, the maintenance process or the disposal process, are outside the scope of the domain defined for the MMSIA model. Thus, the MMSIA model makes use of a total of 24 processes taken from ISO/IEC 5338. Each process identifies: a purpose; a set of outcomes, which represent the characteristics that must be implemented to satisfy the process; a set of activities and tasks, used to interpret and guarantee these outcomes; and work products.

Once the process reference model is provided, a

mapping of the relation between the defined processes

and their intended context of use is necessary. This re-

lationship is described in the way the processes form

part of the levels described in the maturity model and

regarding a number of aspects that AI system devel-

opment organizations need to consider in terms of

project management, organizational management, re-

source management, data and expert knowledge man-

agement, engineering, model development and train-

ing, and product and process performance evaluation.

The agreement to include the processes in the pro-

cess reference model and their allocation to the differ-

ent maturity levels has a theoretical basis supported

by a set of experts with experience in the develop-

ment of maturity models, as is the case of the MMIS

model, used as a maturity model for the assessment of

the processes required in traditional software develop-

ment, through the ISO/IEC/IEEE 12207 standard.

In this way, five maturity levels are defined, where

each level will introduce new processes to be imple-

mented in the organization and will represent a fur-

ther step in the incremental approach to organiza-

tional maturity. The following list shows the classi-

fication of the processes of the reference model and

their correspondence with the maturity levels.

• Level 1: Basic. Project Planning Process, Imple-

mentation Process and AI Data Engineering Pro-

cess

• Level 2: Managed. Supply Process, Life Cy-

cle Model Management Process, Quality As-

surance Process, Project Assessment and Con-

trol Process, Measurement Process, Configura-

tion Management Process, Stakeholder Needs and

Requirements Definition Process and Knowledge

Acquisition Process

• Level 3: Established. Infrastructure Man-

agement Process, Human Resources Manage-

ment Process, Decision Management Process,

Risk Management Process, Architecture Defi-

nition Process, Integration Process, Verification

Process, Validation Process, System Require-

ments Definition Process and Continuous Valida-

tion Process

• Level 4: Predictable. Portfolio Management

Process

• Level 5: Innovative. Knowledge Management

Process and Business Analysis Process
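As an illustration only, the allocation listed above can be written down as a small lookup structure. The sketch below is not part of the MMSIA definition: the dictionary layout and the helper names `processes_up_to` and `introduced_at` are assumptions made for this example, and the cumulative reading (each level adds processes to those of the previous levels) follows the incremental approach described in the text.

```python
# Allocation of the 24 MMSIA processes to maturity levels, transcribed from
# the list above. The dictionary layout and function names are illustrative.
MMSIA_LEVELS = {
    1: ("Basic", [
        "Project Planning",
        "Implementation",
        "AI Data Engineering",
    ]),
    2: ("Managed", [
        "Supply",
        "Life Cycle Model Management",
        "Quality Assurance",
        "Project Assessment and Control",
        "Measurement",
        "Configuration Management",
        "Stakeholder Needs and Requirements Definition",
        "Knowledge Acquisition",
    ]),
    3: ("Established", [
        "Infrastructure Management",
        "Human Resources Management",
        "Decision Management",
        "Risk Management",
        "Architecture Definition",
        "Integration",
        "Verification",
        "Validation",
        "System Requirements Definition",
        "Continuous Validation",
    ]),
    4: ("Predictable", ["Portfolio Management"]),
    5: ("Innovative", ["Knowledge Management", "Business Analysis"]),
}


def processes_up_to(level):
    """Return all processes introduced at maturity levels 1..level."""
    return [
        process
        for lvl in sorted(MMSIA_LEVELS)
        if lvl <= level
        for process in MMSIA_LEVELS[lvl][1]
    ]


def introduced_at(process):
    """Return the maturity level at which a process is introduced."""
    for lvl, (_name, processes) in MMSIA_LEVELS.items():
        if process in processes:
            return lvl
    raise KeyError(process)


if __name__ == "__main__":
    for lvl, (name, processes) in MMSIA_LEVELS.items():
        print(f"Level {lvl} ({name}): {len(processes)} new processes")
    print("Total:", len(processes_up_to(5)))
```

Summing the processes over the five levels gives the 24 processes that the model takes from ISO/IEC 5338.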

Once the processes necessary for the development

of AI systems have been established through a ref-


erence model with the different maturity levels, the

MMSIA model establishes a measurement and as-

sessment process using the ISO/IEC 33000 standards.

One of the most essential aspects of assessing the pro-

cesses of an organization based on the ISO/IEC 33000

family is the measurement of the capability level of

each process. Assessing the capability of a software

process means determining the level at which a pro-

cess is implemented in an organization. To measure

the capability of a process, a series of Process At-

tributes (PAs) are defined, which represent elements

that allow evaluating, individually, a specific aspect

of the capabilities and aptitudes of a process. These

attributes are transversal and apply to all processes.

These attributes are composed of management prac-

tices and generic work products. These elements

represent the process capability indicators, through

which their individual measurement is performed to

determine the degree of achievement of the attribute

and, therefore, the level of process capability to be

evaluated. The following list shows these capabil-

ity levels and the corresponding process attributes de-

fined by ISO/IEC 33020 (ISO, 2019).

• Level 0. Incomplete Process.

• Level 1. Performed Process. Process perfor-

mance (PA 1.1)

• Level 2. Managed Process. Performance management (PA 2.1) and Documented information management (PA 2.2)

• Level 3. Established Process. Process definition

(PA 3.1), Process deployment (PA 3.2) and Pro-

cess assurance (PA 3.3)

• Level 4. Predictable Process. Quantitative anal-

ysis (PA 4.1) and Quantitative control (PA 4.2)

• Level 5. Innovating Process. Process innovation

(PA 5.1)

The capability level of a particular process is assessed from the outcomes and the evidence observed when rating each of the attributes of the evaluated process.

After establishing the different processes and their attributes and characteristics in the MMSIA model, the assessment process with its different stages has been defined. The assessment is a systematic evaluation in which two profiles participate: an evaluator and a more experienced lead evaluator, who reviews the evaluations carried out (Unterkalmsteiner et al., 2011). The process begins with a documentary review of the different elements involved in the development of the AI systems to be evaluated, carried out through a series of interviews with the people involved in the development of these systems. These interviews consist of a series of questions about elements, outcomes or activities that are necessary in the AI systems development life cycle, which have been extracted from the characteristics of each of the processes in the process reference model. The questions are divided into sections covering different parts of the AI system development life cycle, such as the design and development phase, evaluation and continuous validation, or the data and knowledge acquisition and refinement phase. Throughout the interviews, the people involved present evidence that allows the evaluator to record observations about the degree of implementation, in the project or organization, of the processes used in the development life cycle. Based on this evidence, the capability level of each of the processes involved is assessed. For this, the process attributes defined in ISO/IEC 33020 and the individual outcomes used for PA 1.1 are used. Each process attribute is a measurable property of process capability within this process measurement framework.

Consequently, the process attribute assessment is the evaluator’s judgment on the fulfillment of the elements of the process attribute and of the defined outcomes, based on the evidence and findings gathered in the evaluation. A process attribute or outcome is measured using an ordinal scale with the following grades: N (Not implemented) for those process attributes that have no evidence of their definition in the process under assessment; P (Partially implemented) for those that have some evidence of an approach and some achievement; L (Largely implemented) for those that have evidence of a systematic approach and significant achievement; and F (Fully implemented) for those that have evidence of a comprehensive and systematic approach and full achievement. To obtain the capability level of each process, an aggregation method is used based on the following criterion: a process is at capability level X if all the process attributes of the previous levels have a rating of “Fully implemented” (F) and the process attributes of capability level X have a rating of at least “Largely implemented” (L).
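The aggregation criterion can be sketched in a few lines of Python. This is a minimal illustration rather than the tooling used by the authors: the function name `capability_level`, the dictionary-based input format and the choice to treat an unrated attribute as N are assumptions of this example. The attribute identifiers per level are those listed earlier for ISO/IEC 33020.

```python
# Process attributes per capability level, as listed in the text.
PROCESS_ATTRIBUTES = {
    1: ["PA 1.1"],
    2: ["PA 2.1", "PA 2.2"],
    3: ["PA 3.1", "PA 3.2", "PA 3.3"],
    4: ["PA 4.1", "PA 4.2"],
    5: ["PA 5.1"],
}

# Ordinal scale: N < P < L < F.
RATING_ORDER = {"N": 0, "P": 1, "L": 2, "F": 3}


def capability_level(ratings):
    """Return the capability level (0-5) of one process.

    `ratings` maps attribute ids (e.g. "PA 2.1") to "N", "P", "L" or "F".
    Attributes without a rating are treated as "N" (an assumption).
    """
    achieved = 0
    for level in sorted(PROCESS_ATTRIBUTES):
        current = [ratings.get(pa, "N") for pa in PROCESS_ATTRIBUTES[level]]
        # Level X needs every attribute of level X rated at least L.
        if not all(RATING_ORDER[r] >= RATING_ORDER["L"] for r in current):
            break
        achieved = level
        # Any higher level needs this level rated F throughout.
        if not all(r == "F" for r in current):
            break
    return achieved


if __name__ == "__main__":
    example = {"PA 1.1": "F", "PA 2.1": "F", "PA 2.2": "L", "PA 3.1": "L"}
    print(capability_level(example))
```

In the example, PA 1.1 is fully implemented and both level 2 attributes are at least largely implemented, so the process reaches capability level 2; it cannot reach level 3 because PA 2.2 is not rated F.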

Once the capability levels of an organization’s

processes have been obtained, the organizational ma-

turity assessment is carried out. This assessment con-

sists of determining the degree to which an organi-

zation performs the processes necessary to contribute

to the fulfillment of its business objectives in an AI

development environment. Using the process profiles


defined for each of the maturity levels listed above, it

is possible to represent organizational behaviors and

assist in continuous and incremental process improve-

ment. However, in order to define a maturity level for

an organization, it is necessary to establish a corre-

spondence between the capability levels for the pro-

cesses and the maturity levels for the organization.

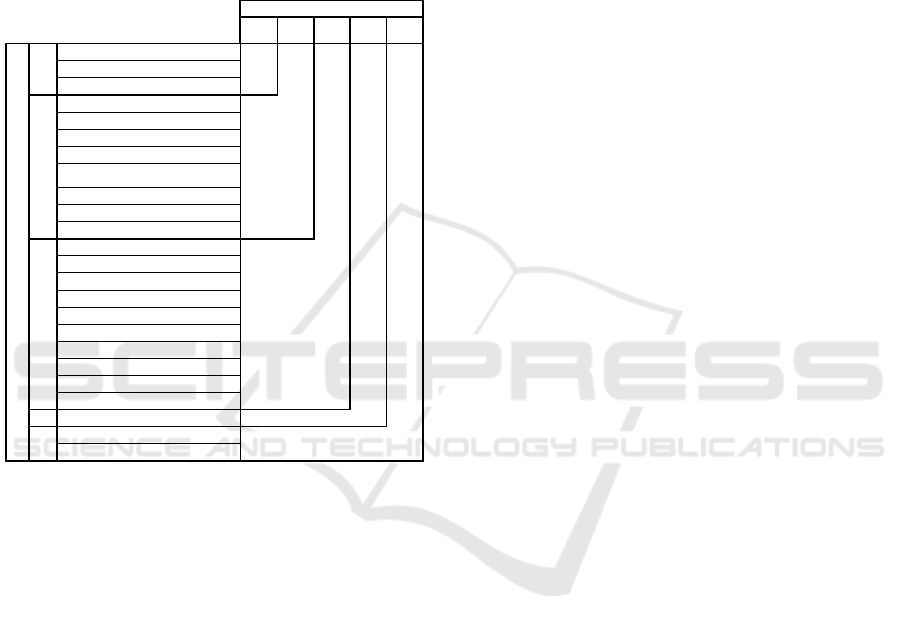

For this reason, Figure 2 represents the relationships between capability levels and the corresponding maturity levels for the MMSIA model.

[Figure 2 text layer. Axes: capability levels (Level 1 to Level 5) and organizational maturity levels (ML1 to ML5), with a target region for compliance with each maturity level. Processes shown: Project planning; Implementation; AI data engineering; Supply; Life cycle model management; Project assessment and control; Measurement; Stakeholder needs and requirements definition; Configuration management; Quality assurance; Knowledge acquisition; Decision management; Infrastructure management; Human resource management; Risk management; Verification; Validation; System requirements definition; Architecture definition; Integration; Continuous validation; Portfolio management; Knowledge management; Business or mission analysis. Notes: for compliance with ML4, some of these processes must achieve capability level 4; for compliance with ML5, the processes selected from the previous level must achieve capability level 5.]

Figure 2: Correlation between maturity levels and capability levels.
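The correspondence between process capability levels and organizational maturity levels can be sketched as a simple lookup. This is only an illustration under stated assumptions: the function name, the data layout, and in particular the example profile (which processes belong to which maturity level, and their target capability levels) are hypothetical and are not taken from the MMSIA model.

```python
from typing import Mapping


def maturity_level(
    profiles: Mapping[int, Mapping[str, int]],
    achieved: Mapping[str, int],
) -> int:
    """Return the highest maturity level whose targets are all met.

    `profiles[m]` maps each process required at maturity level m to its
    target capability level; `achieved` maps a process to the capability
    level obtained in the assessment. Levels are checked in ascending
    order and the search stops at the first level that is not met.
    """
    reached = 0
    for level in sorted(profiles):
        targets = profiles[level]
        if all(achieved.get(p, 0) >= t for p, t in targets.items()):
            reached = level
        else:
            break
    return reached


# Hypothetical profile: the process names appear in Figure 2, but their
# assignment to maturity levels and the targets here are made up.
EXAMPLE_PROFILES = {
    1: {"Project planning": 1, "Implementation": 1},
    2: {"Project planning": 2, "Implementation": 2, "Supply": 2},
}
```

With this example profile, an organization whose assessed capability levels are 2 for "Project planning", 2 for "Implementation" and 1 for "Supply" meets the targets of level 1 but not those of level 2.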

5 CONCLUSIONS

This paper has argued that AI has become an essential tool for making people's lives easier. Building complete and flawless AI solutions therefore requires attention to quality assurance throughout the life cycle of such systems, not only directly on the final product, but also through improving the quality of the processes involved in its development.

Nevertheless, advances in assessing and improving the quality of the processes involved in developing AI systems are scarce. Among them, the recent ISO/IEC 5338 standard stands out, as it identifies and defines a reference model for the improvement of AI processes. While this is a great step forward, there is still a need for tools aimed directly at enterprises. The contribution of this work is a first step towards addressing this need: an assessment model, together with an organizational maturity model, for the development of AI systems, based on the ISO/IEC 5338 standard. The model is intended to give AI development organizations a framework they can adopt to assess and continuously improve the quality of the processes used for such development.

While the definition of this model establishes a foundation and a starting point to help AI organizations improve the quality of their processes, further work is needed to make the model more robust. The next steps are:

1. A real case study needs to be carried out in which the proposed model is tested and put into practice. For this purpose, a validation process is already underway in collaboration with a Spanish company specialized in developing technological solutions using AI techniques. In this process, we are conducting a series of interviews with people involved in a project developed by this company, in order to gather evidence and findings on the degree of implementation of the processes used in that project. The objective of this case study is to verify the practical viability of applying the model and to detect possible defects and opportunities for improving it. In addition, we are currently in discussions with other companies about carrying out more case studies.

2. A software tool will be implemented to record, in a repository, the findings detected in the process assessments, as well as to define, plan and monitor the improvement actions traced to those findings. The aim is to support companies in measuring the capability of their processes and in carrying out automatic assessments, with the objective of offering continuous improvement to the organization.

ACKNOWLEDGEMENTS

This research has been supported by the ADAGIO project (JCCM/SBPLY/21/180501/000061), funded by the European Union and UCLM Own Research Plan, co-financed at 85% by the European Regional Development Fund (FEDER) UNION (2022-GRIN-34110); AETHER project (MICIU/AEI/10.13039/501100011033, PID2020-112540RB-C42); SAFER project: Analysis and Validation of Software and Web Resources (FEDER and the State Research Agency (AEI) of the Spanish Government: PID2019-104735RB-C42); CASIA project: Calidad de Sistemas de Inteligencia Artificial (EXP. 13/23/IN/002), funded by the Junta de Comunidades de Castilla-La Mancha and FEDER; and AIMM project: Artificial Intelligent Maturity Model (EXP. 13/24/IN/057), funded by the Junta de Comunidades de Castilla-La Mancha and FEDER.

REFERENCES

Anand, S. and Verweij, G. (2017). Sizing the prize: What’s

the real value of AI for your business and how can you

capitalise? APO: Analysis & Policy Observatory.

Bughin, J., Seong, J., Manyika, J., Chui, M., and Joshi, R.

(2018). Notes from the AI frontier: Modeling the im-

pact of AI on the world economy. McKinsey Global

Institute, 4(1).

CMMI Institute (2023). CMMI V3.0.

Enholm, I. M., Papagiannidis, E., Mikalef, P., and Krogstie,

J. (2022). Artificial Intelligence and Business Value:

a Literature Review. Information Systems Frontiers,

24(5):1709–1734.

European Commission (2020). White Paper On Artificial

Intelligence - A European approach to excellence and

trust.

European Commission (2021). Laying Down Harmonised

Rules On Artificial Intelligence (Artificial Intelligence

Act) and Amending Certain Union Legislative Acts.

Proposal for a regulation of the European parliament

and of the council.

Fornasiero, R., Kiebler, L., Falsafi, M., and Sardesai, S.

(2025). Proposing a maturity model for assessing arti-

ficial intelligence and big data in the process indus-

try. International Journal of Production Research,

63(4):1235–1255.

ISO (2015a). ISO/IEC 33000 Family.

ISO (2015b). ISO/IEC 33004:2015 Information technology

— Process assessment — Requirements for process

reference, process assessment and maturity models.

ISO (2017). ISO/IEC/IEEE 12207:2017 Systems and soft-

ware engineering — Software life cycle processes.

ISO (2019). ISO/IEC 33020:2019 Information technol-

ogy — Process assessment — Process measurement

framework for assessment of process capability.

ISO (2022a). ISO/IEC 22989:2022 Information technol-

ogy — Artificial intelligence — Artificial intelligence

concepts and terminology.

ISO (2022b). ISO/IEC 23053:2022 Framework for Artifi-

cial Intelligence (AI) Systems Using Machine Learn-

ing (ML).

ISO (2023a). ISO/IEC 25010:2023 Systems and soft-

ware engineering — Systems and software Quality

Requirements and Evaluation (SQuaRE) — Product

quality model.

ISO (2023b). ISO/IEC 25059:2023 Software engineering

— Systems and software Quality Requirements and

Evaluation (SQuaRE) — Quality model for AI sys-

tems.

ISO (2023c). ISO/IEC 5338:2023 Information technology

— Artificial intelligence — AI system life cycle pro-

cesses.

ISO (2023d). ISO/IEC/IEEE 15288:2023 Systems and soft-

ware engineering — System life cycle processes.

Makridakis, S. (2017). The forthcoming Artificial Intelli-

gence (AI) revolution: Its impact on society and firms.

Futures, 90:46–60.

Maslej, N., Fattorini, L., Perrault, R., Parli, V., Reuel, A., Brynjolfsson, E., Etchemendy, J., Ligett, K., Lyons, T., Manyika, J., Niebles, J. C., Shoham, Y., Wald, R., and Clark, J. (2024). The AI Index 2024 Annual Report.

Oktaba, H. and Piattini, M. (2008). Software Process Improvement for Small and Medium Enterprises: Techniques and Case Studies. IGI Global.

Oviedo, J., Rodriguez, M., and Piattini, M. (2024). An En-

vironment for the Assessment of the Functional Suit-

ability of AI Systems. In 17th International Confer-

ence on the Quality of Information and Communica-

tions Technology. Accepted.

Pino, F. J., García, F., Ruiz, F., and Piattini, M. (2006). Adaptación de las normas ISO/IEC 12207:2002 e ISO/IEC 15504:2003 para la evaluación de la madurez de procesos software en países en desarrollo. IEEE Latin America Transactions, 4:17–24.

Ribeiro, J., Lima, R., Eckhardt, T., and Paiva, S. (2021).

Robotic Process Automation and Artificial Intelli-

gence in Industry 4.0 - A Literature review. Procedia

Computer Science, 181:51–58.

Rodriguez, M., Verdugo, J., Pino, F., Delgado, B., and Pi-

attini, M. (2021). Software Development Process As-

sessment With MMIS v. 2, an ISO/IEC 33000-Based

Model. IT Professional, 23:17–23.

Sonntag, M., Mehmann, S., Mehmann, J., and Teuteberg,

F. (2024). Development and evaluation of a matu-

rity model for ai deployment capability of manufac-

turing companies. Information Systems Management,

42(1):37–67.

Sugali, K. (2021). Software testing: Issues and challenges

of artificial intelligence & machine learning. IJAIA.

Unterkalmsteiner, M., Gorschek, T., Islam, A. M., Cheng,

C. K., Permadi, R. B., and Feldt, R. (2011). Evalu-

ation and measurement of software process improve-

ment—a systematic literature review. IEEE Transac-

tions on Software Engineering, 38(2):398–424.

Wang, F. and Preininger, A. (2019). AI in Health: State of

the Art, Challenges, and Future Directions. Yearbook

of medical informatics, 28:16–26.
