Artificial Intelligence Harm and Accountability by Businesses: A Systematic Literature Review

Michael Dzigbordi Dzandu¹ᵃ, Sylvester Tetey Asiedu¹ᵇ, Buddhi Pathak²ᶜ and Sergio De Cesare¹ᵈ

¹ School of Applied Management and Centre for Digital Business Research, Westminster Business School, University of Westminster, 35 Marylebone Road, London, U.K.

² Bristol Business School, University of West of England, Frenchay Campus, Bristol, BS16 1QY, U.K.

Keywords: Artificial Intelligence, Accountability, Harm, Risk, Businesses, Consumers, Framework.

Abstract: This study reviews the literature on artificial intelligence (AI) harms caused by businesses, their impact on

stakeholders, and the available remedial mechanisms. Using the PRISMA method, relevant articles were

sourced from the Scopus database and critically analysed. The data revealed that only 38 articles were

published on the topic between 2012 and 2024, with 21 of these in 2024 alone. Key AI harms identified

include economic and employment displacement, user harm, bias and discrimination, the digital divide, and

environmental harm. While an explicit AI harm accountability framework was not found, related frameworks

were derived from six cognate areas: data governance, decision-making, ethical AI, legal frameworks,

responsible AI, and AI implementation. Five themes—AI transparency, accountability, decision-making,

ethics, and risk—emerged as central to the literature. The study concludes that accountability for AI harms

by businesses has been an afterthought relative to the rapid adoption of AI during the review period.

Developing a robust AI accountability framework to guide businesses in mitigating AI harm is therefore

imperative.

1 INTRODUCTION

Artificial intelligence (AI) continues to dominate

headlines due to its transformative capabilities.

Consequently, the adoption and use of AI in business

operations have grown considerably in recent years.

The drivers of this increased adoption include AI’s

decision-making capabilities, high-speed processing

of large datasets, responsiveness to business

processes (Arora et al., 2024; Kennedy & Campos,

2024; de Pedraza & Vollbracht, 2023; Santos et al.,

2024), and service innovation (Alshahrani et al.,

2024). However, the development, adoption, and use

of AI by businesses are not without challenges

(Abercrombie et al., 2024; Corrêa et al., 2023). While

AI can improve business operations, enhance

performance, and promote transparency and

accountability (Gouiaa & Huang, 2024; Robles & Mallinson, 2023), it can also cause harm. This underscores the urgent need for robust accountability systems to mitigate the negative effects of AI development and use by businesses (Mazzacuva, 2021).

ᵃ https://orcid.org/0000-0002-3486-7150

ᵇ https://orcid.org/0000-0003-4549-1061

ᶜ https://orcid.org/0000-0001-9801-642X

ᵈ https://orcid.org/0000-0002-2559-0567

The need for businesses to balance leveraging AI

as an enabling tool for innovation with ensuring

accountability cannot be overstated (Schneider et al.,

2023). While AI offers numerous benefits,

opportunities, and capabilities for businesses and

society, it can also result in significant negative

consequences and harm to various stakeholders within

the ecosystem (de Siles, 2021). For instance, AI

algorithmic biases have been shown to cause

exclusion, marginalisation, and even loss of life. A

review of the taxonomy of AI harm (Abercrombie et

al., 2024) indicates that these harms can affect

consumers, employees, businesses, and society at large. However, there is a lack of documented accounts of AI harms and the accountability frameworks employed by businesses to govern AI products, services, and systems. To design robust AI accountability strategies, it is crucial for businesses to understand the specific harms caused by their AI systems and the accountability frameworks currently in place. This understanding will also aid policymakers in assessing existing frameworks and developing policies to ensure transparency, responsibility, and fairness in the development and use of AI systems, products, and services by businesses.

Dzandu, M. D., Asiedu, S. T., Pathak, B. and De Cesare, S.

Artificial Intelligence Harm and Accountability by Businesses: A Systematic Literature Review.

DOI: 10.5220/0013486100003929

In Proceedings of the 27th International Conference on Enterprise Information Systems (ICEIS 2025) - Volume 1, pages 1012-1019

ISBN: 978-989-758-749-8; ISSN: 2184-4992

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Artificial intelligence (AI) regulation is one of the

most pressing technological and societal concerns

today, with AI accountability forming a critical

component of this regulatory framework. An accountability framework is needed to ensure that AI-enabled systems, products, and services developed and used by businesses align with societal and business values. Reported AI harms in

business, including reputational damage such as loss

of confidence, trust, and privacy (Abercrombie et al.,

2024), have heightened the focus on AI

accountability as a means to reduce risks. Current

efforts to regulate AI include initiatives such as the

EU AI Act (2024) and the OECD AI Principles

(OECD, 2025), which provide frameworks for AI

accountability. However, there is limited synthesis of

the literature on trends in publications related to AI

harm accountability in business and evidence of AI-

related harms in this context. This study aims to

address this gap by contributing to the emerging body

of knowledge on AI harm and accountability in

business.

The study will contribute to the theoretical

understanding of the breadth and depth of literature

on AI harm and accountability. It will also assist

developers, organisational employees, businesses,

and consumers of AI products, systems, and services

in recognising their collective responsibility to reduce

AI harm in business. Furthermore, the study will

highlight the harms of AI in business and examine

existing AI accountability frameworks, thereby

informing future research on the subject.

Additionally, the study will offer a tool for assessing

the level of AI accountability based on disclosure,

providing essential information to mitigate the

societal impact of AI harm. The key research

questions this study seeks to address are:

i. What is the trend in publications on AI harm

accountability over the past 10 years?

ii. What are the AI harms caused by

businesses’ use of AI, and what AI

accountability frameworks currently exist?

iii. What are the thematic issues addressed in the

current literature on AI harm accountability,

and what are the directions for future

research?

2 METHODOLOGY

Data were sourced from the Scopus database due to its wide bibliometric coverage of top information systems (IS) databases. The search query was based on the keywords Artificial AND Intelligence AND (Harm OR Risk) AND Accountability AND (Business OR Enterprise OR Entity OR Entities OR Corporation). The researchers adopted the PRISMA

method (Aslam & Jawaid, 2023) to source and

analyse relevant literature for the study. The use of

the PRISMA method was informed by its application

in earlier, related studies on the subject (Dzandu &

Asiedu, 2024; Enholm et al., 2022). Following the

systematic literature review approach (Kitchenham,

2004), the researchers adhered to the processes of

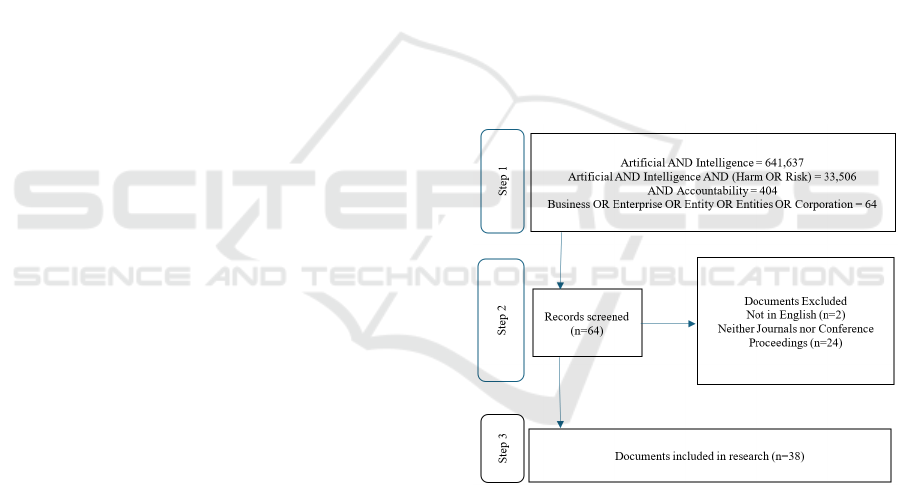

identification, screening, and inclusion (Figure 1).

Figure 1: Summary of the literature search for analysis
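The Boolean search string described above can be assembled programmatically, which makes the grouping of synonyms explicit. The following is a minimal sketch, not the authors' actual query: it assumes the Scopus advanced-search field code `TITLE-ABS-KEY` was used (the paper only states that titles, abstracts, and keywords were searched), and the helper `build_scopus_query` is a hypothetical name introduced for illustration.

```python
def build_scopus_query(concept_groups):
    """Join synonym groups with OR, join groups with AND, and wrap the
    result in the TITLE-ABS-KEY field code (assumed, not confirmed by
    the paper)."""
    clauses = []
    for terms in concept_groups:
        clause = " OR ".join(terms)
        # Parenthesise multi-term groups so OR binds before AND.
        if len(terms) > 1:
            clause = f"({clause})"
        clauses.append(clause)
    return "TITLE-ABS-KEY(" + " AND ".join(clauses) + ")"


# Concept groups taken from the keywords listed in the methodology.
CONCEPT_GROUPS = [
    ["artificial"],
    ["intelligence"],
    ["harm", "risk"],
    ["accountability"],
    ["business", "enterprise", "entity", "entities", "corporation"],
]

query = build_scopus_query(CONCEPT_GROUPS)
print(query)
```

Parenthesising each synonym group matters: without the brackets around the business terms, the OR operators could match documents that mention only "enterprise" or "corporation" and none of the AI or accountability terms.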

In step 1, the identification stage, the researchers

searched the Scopus database using the terms

“Artificial and intelligence,” “risk or harm,”

“accountability,” and “business or enterprise or entity

or entities or corporation.” The search was not limited

to any specific year or duration. The search focused

on the explicit mention of these terms in the titles,

abstracts, and keywords, yielding a total of 64

relevant documents. This was followed by step 2, the

screening stage, where the 64 documents were

critically reviewed by examining their titles,

abstracts, and keywords for relevance and validity.

An exclusion criterion was applied, limiting the

source types to journal articles or conference

proceedings published in English. Finally, in step 3,

38 documents were deemed valid, relevant, and

appropriate for the study and were downloaded for

literature review analysis. Of these, 25 were journal

articles, 10 were conference papers, and 3 were

review documents.
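The identification, screening, and inclusion counts reported above can be cross-checked with a few lines of arithmetic. The sketch below uses only the figures stated in the text (64 documents identified; 25 journal articles, 10 conference papers, and 3 review documents included); the class name `PrismaFlow` is hypothetical, and the number of excluded documents is derived here rather than reported by the authors.

```python
from dataclasses import dataclass


@dataclass
class PrismaFlow:
    """Minimal record of a PRISMA-style selection flow."""
    identified: int
    included_by_type: dict

    @property
    def included(self) -> int:
        # Total included documents across all source types.
        return sum(self.included_by_type.values())

    @property
    def excluded(self) -> int:
        # Derived value: documents dropped during screening.
        return self.identified - self.included


flow = PrismaFlow(
    identified=64,
    included_by_type={
        "journal article": 25,
        "conference paper": 10,
        "review": 3,
    },
)

print(f"included: {flow.included}, excluded: {flow.excluded}")
```

The per-type counts sum to the 38 included documents, which implies that 26 of the 64 identified documents were removed at the screening stage.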

The analysis utilised Excel for trend analysis and VOSviewer software for co-occurrence visualisation and cluster (thematic) analysis (Goksu,

2021). This was complemented by NVivo software

for qualitative analysis of the articles, enabling the

identification of AI harms and accountability

frameworks. Finally, Biblioshiny was employed to

create a thematic map of publications on AI harm and

accountability by businesses, facilitating a discussion

of current issues and future research directions on the

subject.

3 RESULTS AND DISCUSSION

The literature analysis focused on addressing the key

research questions regarding the trends in research

publications on AI harm by businesses, the types of

harm caused by the development and use of AI in

business, and relevant AI accountability frameworks.

The analysis also examined the key issues addressed

in the current literature and identified opportunities

for advancing research on AI harm and accountability

in business contexts.

3.1 Trend of Publications on AI Harm

Accountability

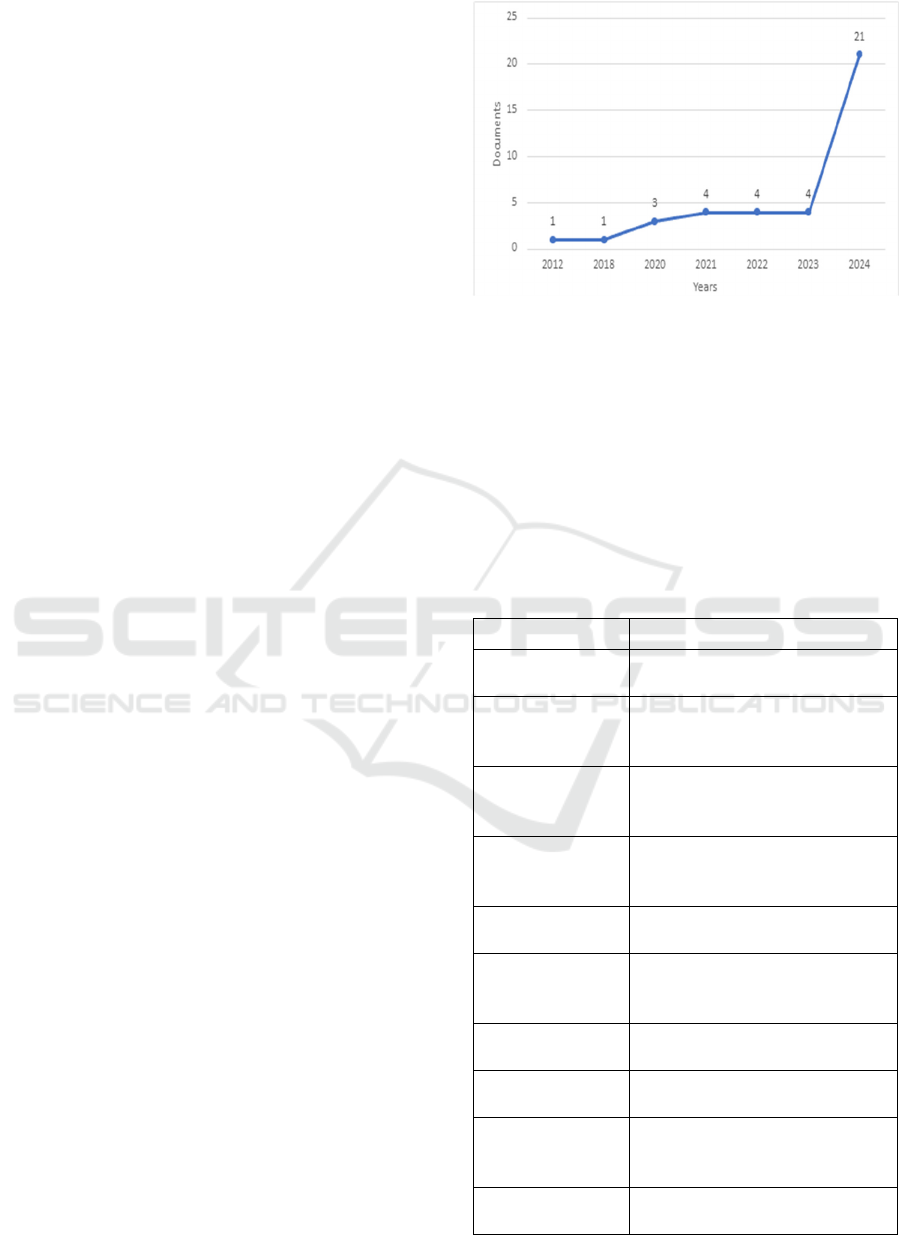

A trend analysis revealed that the term AI harm and accountability possibly emerged in 2012, when one paper was published on the topic (Figure 2). This remained the case until 2020, when 3 papers were published on the subject, followed by 4 papers annually between 2021 and 2023.

There has been a sharp increase in the number of papers published on AI harm and accountability by businesses, rising from 4 in 2023 to 21 in 2024. This trend highlights

the growing societal and scholarly attention to the

problems of AI harm caused by businesses and the

need for accountability among all stakeholders,

including developers, organisations, employees, and

consumers. This is unsurprising, as AI accountability

appears to be an afterthought, gaining prominence

only after recent concerns were raised about the direct

and indirect negative impacts of AI on society.

Figure 2: Trend of publications on AI harm and

accountability (2012 – 2024).
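The trend described in this section can be reproduced as simple arithmetic. The sketch below is illustrative only: it uses just the yearly counts quoted in the prose (not the underlying data behind Figure 2), and the variable names are hypothetical.

```python
# Yearly publication counts as quoted in the text of this section.
counts_in_text = {2012: 1, 2020: 3, 2021: 4, 2022: 4, 2023: 4, 2024: 21}

# Total number of included documents reported in the methodology.
TOTAL_INCLUDED = 38

# Documents accounted for by the counts quoted in prose.
accounted = sum(counts_in_text.values())

# Year-on-year growth factor from 2023 to 2024.
growth_2023_to_2024 = counts_in_text[2024] / counts_in_text[2023]

# Share of all included documents published in 2024.
share_2024 = counts_in_text[2024] / TOTAL_INCLUDED

print(f"accounted for in text: {accounted} of {TOTAL_INCLUDED}")
print(f"growth 2023 to 2024: x{growth_2023_to_2024:.2f}")
print(f"share published in 2024: {share_2024:.1%}")
```

On these figures, output in 2024 was roughly five times that of 2023, and 2024 alone accounts for just over half of the reviewed documents. The counts quoted in the prose sum to 37, so the publication year of one of the 38 documents is not stated in the text.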

3.2 Types of AI Harm and

Accountability Frameworks

This study also aimed to identify some of the AI

harms caused by AI developed and used by

businesses (Table 1) and the AI accountability

frameworks (Table 2) currently documented in the

literature on the subject.

Table 1: AI harms by businesses.

| Type of AI harm | References |
|---|---|
| Bias and Discrimination | Wörsdörfer, 2023; Hickok, 2024; Kouroutakis, 2024 |
| Transparency and Accountability | Wörsdörfer, 2023; Boyer et al., 2022; Hickok, 2024 |
| Privacy and Data Protection Concerns | Boyer et al., 2022 |
| Economic and Employment Displacement | Yakoot et al., 2021; Davinder et al., 2022 |
| Exacerbation of Digital Divide | Kouroutakis, 2024 |
| Unfair Decision-Making and Exclusion | Rezaei et al., 2024; Davinder et al., 2022 |
| Environmental Harm | Wörsdörfer, 2023 |
| User harm and inconvenience | Besinger et al., 2024 |
| Misinformation and Manipulation | Camilleri, 2024; Senadheera et al., 2024 |
| Government and Ethical Failures | Senadheera et al., 2024; Yakoot et al., 2021; Wörsdörfer, 2023 |

The results indicate that several types of AI harm

are caused by the development and use of AI by

businesses, affecting stakeholders including

developers, employees, businesses, customers, and

wider society. The AI harms identified in the current

literature align with those reported by Abercrombie et

al. (2024) in their project on the taxonomy of

algorithmic, AI, and digital harm. For instance, the

exploitation of customer data by businesses for

marketing raises significant concerns about privacy

and data protection (Boyer et al., 2022). Furthermore,

algorithmic bias is known to result in unfair decision-

making (Rezaei et al., 2024; Davinder et al., 2022),

causing discrimination (Wörsdörfer, 2023; Hickok,

2024; Kouroutakis, 2024) and the marginalisation of

minority groups within society. Businesses have also

suffered reputational damage due to issues such as

economic and employment displacement (Yakoot et

al., 2021; Davinder et al., 2022).

The analysis of the literature did not reveal an

explicit AI harm accountability framework.

However, it was observed that current AI harm

accountability is derived from cognate frameworks,

including the data governance framework, AI

decision-making framework, AI legal framework, AI

ethical framework, Responsible AI framework, and

AI implementation framework (Table 2).

Table 2: Summary of related AI Accountability frameworks.

| Framework | References |
|---|---|
| Data governance framework | Tremblay and Kohli (2023) |
| AI decision making framework | Kouroutakis (2024) |
| AI ethical framework | Kouroutakis (2024) |
| Legal framework for AI | Kouroutakis (2024) |
| Responsible AI framework | Besinger et al. (2024) |
| AI implementation framework | Akramov & Valiev (2024) |

An all-purpose data governance framework

(Tremblay and Kohli, 2023) is regarded as a

foundational tool for countries, businesses, and

society to achieve digital resilience. The

establishment of a permanent data governance

framework supports data governance, ownership and

stewardship, standardisation and interoperability, as

well as the competencies required to enhance data

analytics functions, including AI solutions.

According to Kouroutakis (2024), there remains a

lack of an accountable AI framework. To ensure

transparency in AI solutions, it is therefore imperative

to establish accountable decision-making frameworks

to mitigate systemic biases in AI models. Kouroutakis

(2024) also advocates for people-centred AI legal and

ethical frameworks to bridge the emerging AI divide

in society through AI training and promotion. These

frameworks would create user awareness and

knowledge about AI technologies while ensuring fair

and equitable access to them across society.

A Responsible AI framework (Besinger et al.,

2024) ensures that developers, businesses,

employees, and customers understand their roles

within the AI ecosystem and their liabilities for any

potential harm caused. Akramov and Valiev (2024)

identified an AI implementation framework as a

proxy for an AI accountability framework. According

to them, an AI implementation framework ensures

moral accountability by all stakeholders in business

during every phase of AI development, deployment,

use, and retirement.

There is evidence to suggest that the governments

of some countries have made considerable efforts to

ensure AI harm accountability through policy

frameworks such as the EU AI Act (2024) and the

OECD AI Principles (OECD, 2025). While these instruments provide some regulatory guidance for businesses, they do not explicitly address AI harm accountability.

It is therefore imperative that future research focuses

on developing a dynamic AI accountability

framework for businesses.

3.3 Current Thematic Underpinning of

AI Harm and Accountability

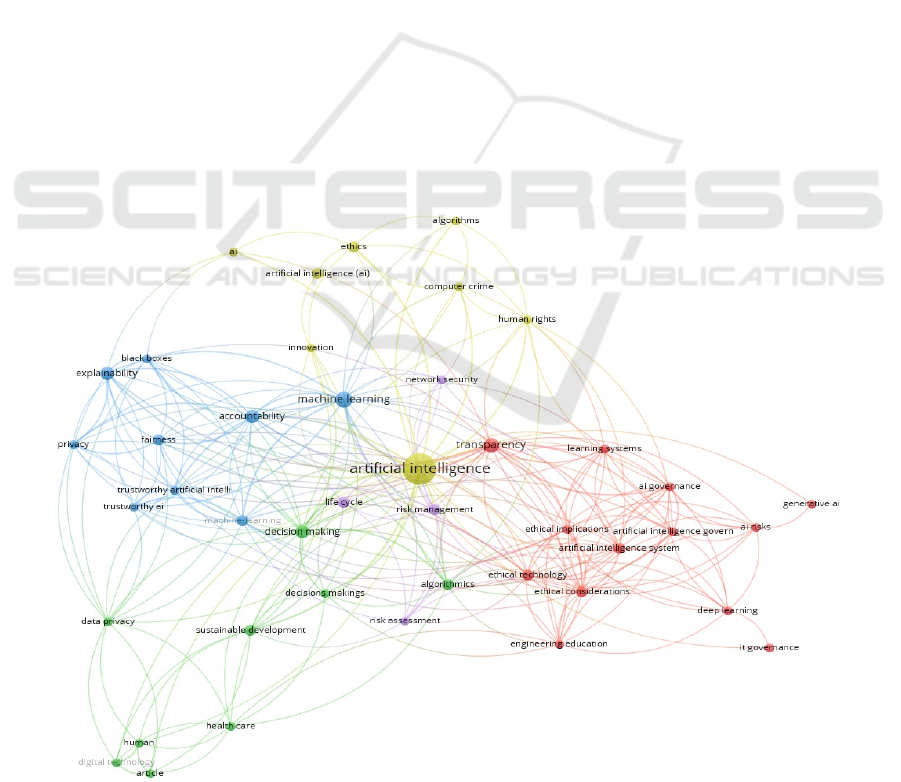

To understand the thematic issues addressed in

the current literature on AI harm accountability, a co-

occurrence clustering analysis was conducted. The

analysis revealed that, between 2012 and 2024, AI

accountability has been most closely associated with

transparency and machine learning. A cluster analysis

of the 38 articles, based on search terms in the titles,

abstracts, and keywords, identified five clusters

(Figure 3). These clusters represent the dominant and

sub-themes explored in the current literature on AI

harm and accountability in businesses. The key

clusters are AI transparency, AI accountability, AI

decision-making, AI ethics, and AI risk.
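To illustrate what a co-occurrence analysis counts, the sketch below tallies how often pairs of keywords appear together in the same record. It is a simplified stand-in for the counting step that underlies a co-occurrence map, not a reproduction of the software used in the study, and the three keyword lists are hypothetical toy data rather than records from the 38 reviewed articles.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(records):
    """Count how many records contain each unordered keyword pair."""
    pairs = Counter()
    for keywords in records:
        # Normalise case and drop duplicates within a record.
        unique = sorted({k.lower() for k in keywords})
        # Each alphabetically ordered pair is counted once per record.
        pairs.update(combinations(unique, 2))
    return pairs


# Hypothetical toy records (NOT data from the reviewed articles).
toy_records = [
    ["Accountability", "Transparency", "Machine learning"],
    ["Accountability", "Transparency", "Ethics"],
    ["Risk", "Accountability"],
]

counts = cooccurrence(toy_records)
for (first, second), n in counts.most_common(3):
    print(f"{first} + {second}: {n}")
```

Pairs with high counts correspond to thick links in a co-occurrence map, and clustering groups keywords that are linked more strongly to each other than to the rest of the network.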

Cluster 1 (red - bottom right) is dominated by AI

transparency. This cluster highlights the importance

of a comprehensive understanding of AI transparency

through a top-down approach, starting with general

IT governance, AI governance, and AI systems

governance, down to the governance of generative

AI. Furthermore, the debate on AI transparency

should encompass ethical technology considerations

and the broader implications of AI for businesses and

society. Emphasis is placed on the need for

transparency in disclosing AI risks, as well as in

engineering education, learning systems, and the

development of deep learning models.

Another finding of the study is the focus on

machine learning and AI accountability (blue -

middle left cluster). This cluster emphasises the need

for AI accountability in addressing harm caused by

the development and use of AI and machine learning

models and systems by businesses. The findings

highlight key AI accountability issues, including

privacy, trustworthy AI, fairness in access and use of

AI for business operations, explainability of AI

models and processes leading to AI outcomes, and the

imperative to demystify the black box conundrum.

The data for the study also revealed that AI

decision-making (green - bottom left cluster) is a

source of AI harm caused by businesses. The findings

raise concerns about the environmental harm

associated with an overreliance on AI decision-

making for sustainable development. Such reliance

has the potential to contribute to environmental

issues, including biodiversity loss, carbon emissions,

electronic waste, excessive energy and water

consumption for powering data warehouses, storage

racks, and servers, and uncontrolled pollution

(Abercrombie et al., 2024). Additionally, incorrect AI decision-making in critical sectors like healthcare can result in fatalities. Robust AI accountability frameworks are therefore critical to prevent direct AI-induced physical harm, such as bodily injury, loss of life, and deterioration of personal health (Abercrombie et al., 2024). AI decision-making also

leads to harm in the form of data privacy breaches,

resulting in impersonation, identity theft, loss of

personality rights, intellectual property or copyright

infringement, and a general loss of autonomy or

agency (Abercrombie et al., 2024). Algorithmic harm

caused by businesses developing and utilising AI for

decision-making is also well-documented in the

current literature. Additionally, there has been

ongoing debate about the superiority of human

decision-making over AI decision-making,

particularly regarding the quality, precision,

accuracy, and reliability of AI decisions compared to

human intelligence.

Studies have demonstrated the relevance of AI

ethics (top cluster) in the use of AI by businesses,

particularly in service delivery, where it raises

privacy, security, and socio-emotional concerns

(Kennedy & Campos, 2024; Singh, 2024). Critics have highlighted ethical concerns regarding the negative impact of AI on human cognitive and thinking skills, knowledge creation, and competencies. The study also underscores the ethical challenges of AI innovations, including AI-driven computer crimes and algorithmic biases, which have significant consequences, such as racial discrimination through biased facial recognition in crime detection. Human rights activists have raised fundamental concerns about the development, deployment, and use of AI.

Figure 3: Co-occurrence visualization of AI harm and accountability in business.

Figure 4: Thematic Map of studies on AI harm and accountability in business.

In business, AI harm is evident in monopolistic

practices, where financially endowed companies

exploit AI to the detriment of less-resourced

competitors, thereby creating an AI divide within

business ecosystems (Abercrombie et al., 2024). This

situation is deemed unethical as it exacerbates

inequalities in competitive advantage. AI ethics is

recognised as a cornerstone of AI governance in

business and a critical consideration in fostering

accountability, ensuring responsible and transparent

AI use, and promoting fair access to AI systems for

business operations (Singh, 2024).

The mid-bottom cluster is identified as AI risk

management. The harm caused by the development

and use of AI by businesses poses significant risks to

stakeholders within the business ecosystem,

including investors, developers, employees,

consumers, and regulators. The current debate on

managing and assigning accountability for AI harm in

businesses acknowledges the need to extend AI risk

management across the entire lifecycle of an AI

system, service, or product. This necessitates the

development of a comprehensive AI risk assessment

protocol that ensures accountability for AI harm,

spanning ideation, development, deployment, use,

and disposal of AI systems. Such an assessment must

prioritise network security, ensuring that systems

supporting AI are secure to guarantee safe, ethical,

and responsible AI-enabled operations (Kennedy &

Campos, 2024).

3.4 Implications and Future Research

Directions

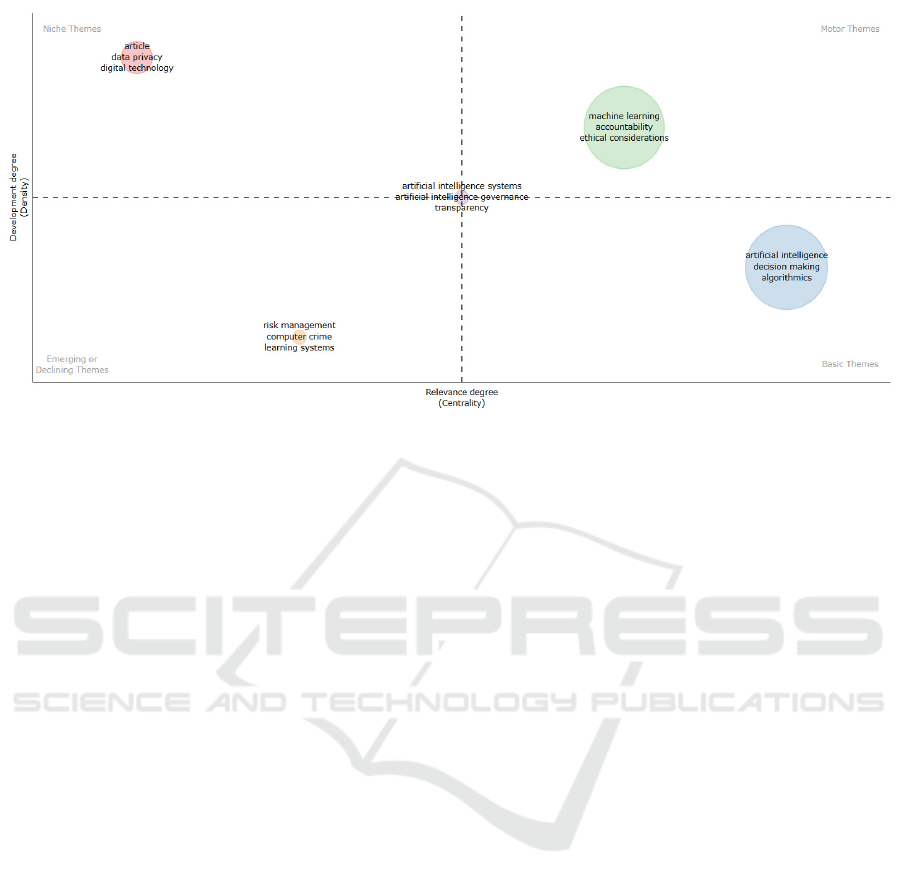

The thematic map (Figure 4) presents the current

status and future directions of research development

within the field of AI harm and accountability in

business. The map illustrates the strength (density) of

the clusters or their growth, alongside the relevance

of publications in this subject area (Cobo et al., 2011;

Cahlik, 2000). It was observed that the overall

centrality and density of publications on AI harm and

accountability in business have predominantly

focused on AI systems, AI governance, and

transparency (Figure 4). This highlights the

interdependencies between broader AI governance

and its strong connection to achieving AI system

transparency through accountability.

The results indicate that the motor themes of

machine learning, accountability, and ethical

considerations are well-developed and foundational

for driving future research on AI harm accountability

in business. The niche theme quadrant highlights

current publications on AI harm accountability in

business, particularly in the areas of article/document

mining, data privacy, and advancements in AI

technologies. While these areas may currently seem

superficial to understanding AI harm accountability

in business, there is a pressing need for focused future

research to establish stronger connections between

these themes and machine learning, AI ethics, and

accountability.

The basic themes that emerged from the analysis

of current publications on AI harm accountability in

business include the general debate on artificial

intelligence, decision-making, and algorithms. The

connection between AI harm accountability in

business and these broader issues is crucial for

advancing scholarship in the field. Focused research

is therefore needed to explore how algorithmic and AI

decision-making can be made accountable and how

this can enhance accountability for AI harm in

business. The emerging/declining theme quadrant

underscores the need for dedicated research on AI

risk management, with particular attention to AI harm

caused by learning systems and computer-mediated

crimes.
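The quadrant labels used in this section follow the strategic-diagram convention in which each theme is placed by its centrality (relevance) and density (development). The sketch below shows that classification rule under stated assumptions: the median is used as the cut-off on each axis (other cut-offs, such as the mean, are possible), the function name `classify_themes` is hypothetical, and the centrality and density values are invented toy numbers chosen only so that each example theme lands in the quadrant reported in the text.

```python
from statistics import median


def classify_themes(themes):
    """Assign each theme to a strategic-diagram quadrant.

    `themes` maps a theme name to a (centrality, density) pair.
    The median of each axis is used as the cut-off (an assumption).
    """
    c_cut = median(c for c, _ in themes.values())
    d_cut = median(d for _, d in themes.values())
    labels = {}
    for name, (centrality, density) in themes.items():
        if centrality >= c_cut and density >= d_cut:
            labels[name] = "motor"                  # relevant and developed
        elif centrality < c_cut and density >= d_cut:
            labels[name] = "niche"                  # developed but isolated
        elif centrality < c_cut and density < d_cut:
            labels[name] = "emerging or declining"  # weak on both axes
        else:
            labels[name] = "basic"                  # relevant, underdeveloped
    return labels


# Toy values for illustration only (NOT taken from Figure 4).
toy_themes = {
    "machine learning": (0.9, 0.8),
    "data privacy": (0.2, 0.7),
    "risk management": (0.1, 0.2),
    "decision-making": (0.8, 0.3),
}

labels = classify_themes(toy_themes)
for theme, quadrant in labels.items():
    print(f"{theme}: {quadrant}")
```

Read this way, a basic theme such as decision-making is central to the field but still thinly developed, which is why the section calls for focused research on it.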

4 CONCLUSIONS

This review paper examined AI harm and

accountability in business to understand publication

trends on the subject, identify instances of AI harm

by businesses, and explore existing AI accountability

frameworks. The study also investigated current

thematic research areas and future directions for

research development within the field of AI harm and

accountability in business. The findings revealed a

paucity of literature on the subject, suggesting that AI

harm accountability may have been an afterthought in

response to the rapid development and use of AI-

enabled systems by businesses.

The study identified several types of AI harm

caused by businesses, including economic and

employment displacement, user harm, bias and

discrimination, the digital divide, and environmental

harm. While no explicit AI harm accountability

framework was uncovered, two related policy frameworks, the EU AI Act (2024) and the OECD AI Principles (OECD, 2025), offer some regulatory guidance

for businesses. Additionally, the current AI harm

accountability framework is informed by six cognate

frameworks: data governance, decision-making,

ethical, legal, responsible AI, and AI implementation

frameworks.

The study highlights the need for future research

to address the lack of a robust and explicit AI

accountability framework for businesses. Five thematic areas were identified (AI transparency, AI accountability, AI decision-making, AI ethics, and AI risk), which form the foundation of research on AI

harm and accountability in business.

The main limitation of this study is the use of a

single data source (Scopus) for the literature search.

Although Scopus is considered the largest academic

electronic database globally, relying on a single data

source may have excluded relevant articles indexed in

other databases, thereby limiting the number of

documents identified. Future studies could address

this limitation by extending the data sources to

include platforms such as Web of Science,

EBSCOhost, and Business Source Complete to

ensure more comprehensive coverage.

The relatively small number of articles identified

on AI harm accountability highlights a gap in the

literature. Future research could expand and diversify

the search terms, screening criteria, and

inclusion/exclusion criteria to broaden the scope of

search outputs. Additionally, incorporating

categorisation and deeper analysis could enhance the

novelty and depth of future studies on the subject.

REFERENCES

Abercrombie, G., Benbouzid, D., Giudici, P., Golpayegani,

D., Hernandez, J., Noro, P., ... & Waltersdorfer, L.

(2024). A collaborative, Human-Centred taxonomy of

AI, algorithmic, and automation harms. arXiv preprint

arXiv:2407.01294.

Akramov, J., & Valiev, B. (2024). The Level of

Implementing AI and its Framework for Creating

Structured Strategy for Corporations. In 2024 4th

International Conference on Advance Computing and

Innovative Technologies in Engineering (ICACITE)

(pp. 1583-1587). IEEE.

Alshahrani, A., Griva, A., Dennehy, D., & Mäntymäki, M.

(2024). Artificial intelligence and decision-making in

government functions: opportunities, challenges and

future research. Transforming Government: People,

Process and Policy, 18(4), 678–698.

https://doi.org/10.1108/TG-06-2024-0131.

Arora, A., Gupta, M., Mehmi, S., Khanna, T., Chopra, G.,

Kaur, R., & Vats, P. (2024). Towards Intelligent

Governance: The Role of AI in Policymaking and

Decision Support for E-Governance (pp. 229–240).

https://doi.org/10.1007/978-981-99-8612-5_19.

Aslam, W., & Jawaid, S. T. (2023). Systematic Review of

Green Banking Adoption: Following PRISMA

Protocols. IIM Kozhikode Society & Management

Review, 12 (2), 213-233.

Besinger, P., Vejnoska, D., & Ansari, F. (2024).

Responsible AI (RAI) in Manufacturing: A Qualitative

Framework. Procedia Computer Science, 232, 813-

822.

Boyer, P., Donia, J., Whyne, C., Burns, D., & Shaw, J.

(2022). Regulatory regimes and procedural values for

health-related motion data in the United States and

Canada. Health Policy and Technology, 11(3), 100648.

Cahlik T. (2000). Comparison of the maps of science.

Scientometrics, 49, 373–387.

10.1023/A:1010581421990.

Camilleri, M. A. (2024). Artificial intelligence governance:

Ethical considerations and implications for social

responsibility. Data Science Approaches for

Sustainable Development, 41 (7).

Cobo M. J., Martínez M. Á., Gutiérrez-Salcedo M., Fujita

H., Herrera-Viedma E. (2015). 25 years at knowledge-

based systems: a bibliometric analysis. Knowl. Based

Syst. 80, 3–13. 10.1016/j.knosys.2014.12.035.

Cobo, M. J., López-Herrera, A. G., Herrera-Viedma, E., and Herrera, F. (2011). Science mapping software tools: Review, analysis, and cooperative study among tools. J. Amer. Soc. Inf. Sci. Technol., vol. 62, no. 7, pp. 1382–1402, doi: 10.1002/asi.21525.

Corrêa, N. K., Galvão, C., Santos, J. W., Del Pino, C., Pinto,

E. P., Barbosa, C., Massmann, D., Mambrini, R.,

Galvão, L., Terem, E., & de Oliveira, N. (2023).

Worldwide AI ethics: A review of 200 guidelines and

recommendations for AI governance. Patterns, 4(10).

https://doi.org/10.1016/j.patter.2023.100857.

Davinder Kaur, Suleyman Uslu, Kaley J. Rittichier, and

Arjan Durresi. (2022). Trustworthy Artificial

Intelligence: A Review. ACM Computing Surveys. 55,

2, Article 39, 38 pages.

https://doi.org/10.1145/3491209.

de Pedraza, P., & Vollbracht, I. (2023). General theory of

data, artificial intelligence and governance. Humanities

and Social Sciences Communications, 10(1).

https://doi.org/10.1057/s41599-023-02096-w.

de Siles, Emile Loza. (2021). AI, on the law of the elephant:

toward understanding artificial intelligence. Buffalo

Law Review, 69(5), 1389-1470.

Dzandu, M.D. and Asiedu, S.T. (2024). The Cart before the

Horse or the Horse before the Cart – towards AI Policy

for Business Practices in Africa. In iSCSi’24 -

International Conference on Industry Sciences and

Computer Science Innovation. Porto, Portugal 29 - 31

Oct 2024 Elsevier.

Enholm, I.M., Papagiannidis, E., Mikalef, P. et al. (2022).

Artificial Intelligence and Business Value: a Literature

Review. Inf Syst Front 24, 1709–1734.

https://doi.org/10.1007/s10796-021-10186-w.

EU AI Act (2024), EU AI Act: first regulation on artificial intelligence. Available at: https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence#more-on-the-eus-digital-measures-1, accessed 20/01/2025.

Goksu, I. (2021). Bibliometric mapping of mobile

learning. Telematics and Informatics, 56, 101491.

Gouiaa, R., & Huang, R. (2024). How Do Corporate

Governance, Artificial Intelligence, And Innovation

Interact? Findings From Different Industries. Risk

Governance and Control: Financial Markets and

Institutions, 14(1), 35–52.

https://doi.org/10.22495/rgcv14i1p3.

Hickok, M. (2024). Public procurement of artificial intelligence systems: new risks and future proofing. AI & Soc 39, 1213–1227. https://doi.org/10.1007/s00146-022-01572-2.

Kennedy, W. M., & Campos, D. V. (2024). Vernacularizing

Taxonomies of Harm is Essential for Operationalizing

Holistic AI Safety. In Proceedings of the AAAI/ACM

Conference on AI, Ethics, and Society (Vol. 7, pp. 698-

710).

Kitchenham, B. (2004). Procedures for performing

systematic reviews. Keele University, Issue.

Kouroutakis, A. (2024). Rule of law in the AI era:

addressing accountability, and the digital divide.

Discover Artificial Intelligence, 4(1), 1-11.

Mazzacuva, F. (2021). The Impact of AI on Corporate

Criminal Liability: Algorithmic Misconduct in the

Prism of Derivative and Holistic Theories. Revue

Internationale de Droit Penal, 92(1), 143-158.

OECD (2025). OECD AI Principles overview. Available at:

https://oecd.ai/en/ai-principles, accessed 20/01/2025.

Rezaei, M., Pironti, M., & Quaglia, R. (2024). AI in

knowledge sharing, which ethical challenges are raised

in decision-making processes for organisations?.

Management Decision.

Santos, R., Brandão, A., Veloso, B., & Popoli, P. (2024). The use of AI in government and its risks: lessons from the private sector. Transforming Government: People, Process and Policy. https://doi.org/10.1108/TG-02-2024-0038.

Schneider, J., Abraham, R., Meske, C., & Vom Brocke, J.

(2023). Artificial Intelligence Governance for

Businesses. Information Systems Management, 40(3),

229–249.

https://doi.org/10.1080/10580530.2022.2085825.

Senadheera, S., Yigitcanlar, T., Desouza, K. C.,

Mossberger, K., Corchado, J., Mehmood, R., ... &

Cheong, P. H. (2024). Understanding Chatbot Adoption

in Local Governments: A Review and Framework.

Journal of Urban Technology, 1-35.

Singh, C. L. (2024). Implications of AI and Metaverse in

Media and Communication Spaces. SN Computer

Science, 5(8), 1098.

Tremblay, M. C., Kohli, R., & Rivero, C. (2023). Data is

the New Protein: How the Commonwealth of Virginia

Built Digital Resilience Muscle and Rebounded from

Opioid and COVID Shocks. MIS Quarterly, 47(1).

Wörsdörfer, M. (2024). Mitigating the adverse effects of AI

with the European Union's artificial intelligence act:

Hype or hope?. Global Business and Organizational

Excellence, 43(3), 106-126.

Yakoot, M.S., Elgibaly, A.A., Ragab, A.M.S. et al. (2021).

Well integrity management in mature fields: a state-of-

the-art review on the system structure and maturity. J

Petrol Explor Prod Technol 11, 1833–1853.

https://doi.org/10.1007/s13202-021-01154-w.
