Evaluating a Bimodal User Verification Robustness Against Synthetic Data Attacks

Sandeep Gupta (1, 4), Rajesh Kumar (2), Kiran Raja (3), Bruno Crispo (4) and Carsten Maple (5)

1 Centre for Secure Information Technologies (CSIT), Queen’s University Belfast, U.K.

2 Bucknell University, U.S.A.

3 Norwegian University of Science and Technology (NTNU), Norway

4 Department of Information Engineering & Computer Science (DISI), University of Trento, Italy

5 Secure Cyber Systems Research Group (SCSRG), Warwick Manufacturing Group (WMG), University of Warwick, Coventry, U.K.

Keywords: Smartphones, Biometrics, User Verification, Synthetic Data, Robustness.

Abstract:

Smartphones balance security and convenience by offering both knowledge-based (PINs, patterns) and biometric (facial, fingerprint) verification methods. However, studies have reported that PINs and patterns can be readily circumvented, while synthetically manipulated face data can easily deceive smartphone facial verification mechanisms. In this paper, we design a bimodal user verification mechanism that combines behavioral (pickup gesture) and biological (face) biometrics for user verification on smartphones. This work establishes a baseline for single-user verification scenarios on smartphones using a one-class verification model. The evaluation is performed in two stages: first, performance is assessed in both unimodal and bimodal settings using publicly available datasets; second, the robustness of the employed biological and behavioral traits is examined against four diverse attacks. Our findings emphasize the necessity of investigating diverse attack vectors, particularly fully synthetic data, to design robust user verification mechanisms.

1 INTRODUCTION

Smartphones now offer a variety of security options, ranging from traditional PINs and patterns to more sophisticated biometric methods such as facial and fingerprint verification, allowing users to choose the mechanism that best protects their devices. According to the Android specifications (Android, 2025a), biometrics can be a more convenient, but potentially less secure, way of verifying a user’s identity with a device. The documentation further specifies three classes of biometric sensors: Class 1 (formerly Convenience), Class 2 (formerly Weak), and Class 3 (formerly Strong). These classes are determined by their spoof and impostor acceptance rates and by the security of the biometric pipeline, and each class has its own set of constraints, privileges, and prerequisites. Biometric-based mechanisms can offer a balance between security and convenience. However, Android uses biometrics as a secondary tier of verification, whereas knowledge-based mechanisms serve as the primary tier on smartphones. This implies that if biometric verification fails, the smartphone reverts to knowledge-based mechanisms (Markert et al., 2021; Smith-Creasey, 2024).

Studies have reported that knowledge-based verification mechanisms can be easily circumvented using (1) smudge, (2) shoulder-surfing, and (3) password inference attacks (Liang et al., 2020). Weak passwords can be readily cracked using brute-force attacks, whereas complex passwords can impose an overwhelming cognitive load on end-users. Similarly, pattern-based passwords are prone to shoulder-surfing and smudge attacks (Yang et al., 2022). Based on available studies, these drawbacks render knowledge-based verification mechanisms highly unreliable as the first tier of user verification for safeguarding the numerous sensitive apps and services on a user’s smartphone from adversaries (Gupta et al., 2022b).

Nevertheless, recent advancements in digital face

manipulation techniques, such as swapping, morph-

ing, retouching, and synthesis, pose a significant

threat to identity and access management (Ibsen et al.,

2022; Gupta and Crispo, 2023). Studies (Tolosana

et al., 2022; Ramachandran et al., 2021) describe

digital face manipulations such as face synthesis (i.e.,

Gupta, S., Kumar, R., Raja, K., Crispo, B., Maple and C.

Evaluating a Bimodal User Verification Robustness Against Synthetic Data Attacks.

DOI: 10.5220/0013450100003979

In Proceedings of the 22nd International Conference on Security and Cryptography (SECRYPT 2025), pages 25-36

ISBN: 978-989-758-760-3; ISSN: 2184-7711

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

25

Biometric Traits

Baseline Verification Models

Behavioral (Pickup)

Biological (Face)

Pickup

Face

UNIMODAL

FLF (Pickup + Face)

SLF (Pickup + Face)

BIMODAL

Robustness Evaluation Methodology

Pickup

Face

FLF SLF

BASELINE

MODEL

COMPROMISED

MODALITY

Pickup

Face

Pickup

Face

Combine

Pickup

Face

Combine

ATTACK

GENERATION

AS1 AS2 AS3 AS4

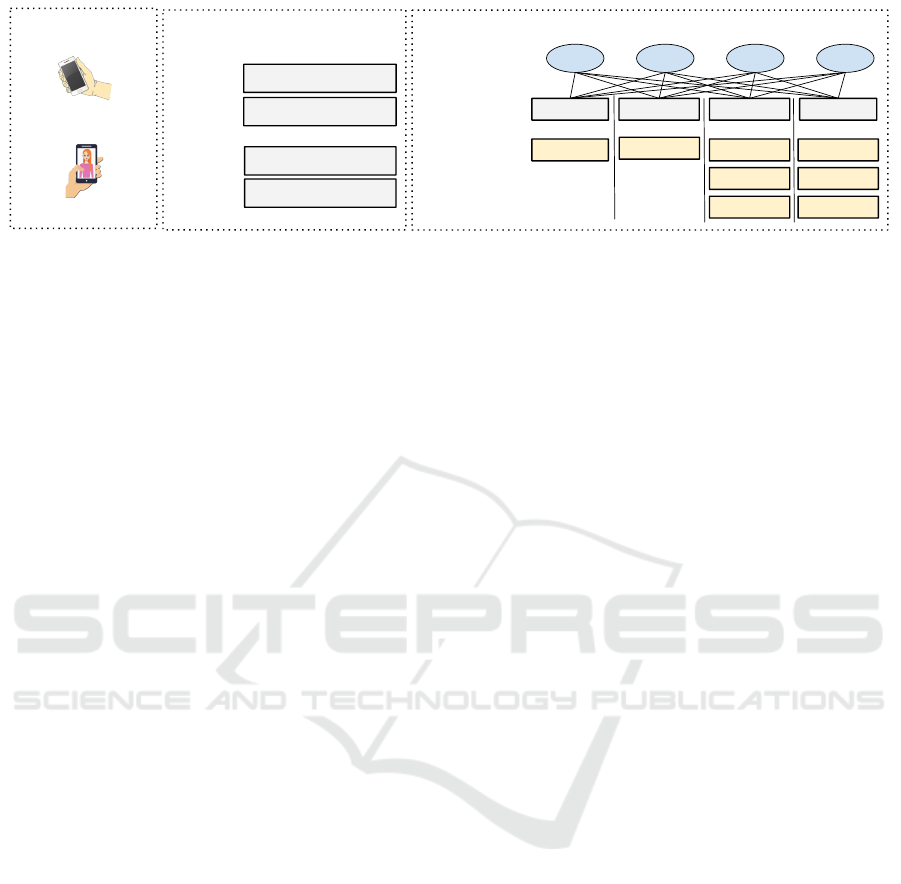

Figure 1: Pickup gestures and facial biometrics are chosen for user verification on smartphones. Two unimodal models (pickup

and face) and two bimodal models (feature-level fusion [FLF] and score-level fusion [SLF]) are designed as baselines. The

robustness of each baseline model is evaluated under modality compromise using four diverse attacks.

synthetic face images creation), identity swap (i.e., a

subject’s face in a video is replaced), face morphing

(i.e., face samples containing two or more individuals

face biometric information), attribute manipulation

(i.e., face editing or face retouching), and expres-

sion swap (i.e., face reenactment to alter the face

expression). Such manipulations in facial verification

systems are required to be addressed to strengthen

biometric systems robustness.

In this paper, we design a bimodal user verification mechanism combining pickup gestures and facial recognition. As illustrated in Figure 1, this mechanism serves as a baseline for investigating the efficacy of the constituent behavioral and biological traits for user verification. We first assess the performance of the proposed mechanism in both unimodal and bimodal settings using publicly available datasets. Subsequently, we investigate attack methodologies that use synthetic data to evaluate the robustness of biometric mechanisms for user verification on smartphones. Figure 8 compares the Equal Error Rate (EER) of the models designed using pickup, face, feature-level fusion, and score-level fusion against the four attacks.

The main contributions are outlined as follows:

• We investigate the robustness of a user verification mechanism designed using behavioral and biological biometric traits. The objective is to enhance the security and reliability of biometrics so that they can be promoted to the first tier of user verification on smartphones.

• We present the architecture of the baseline bimodal user verification mechanism and describe the blueprint of its underlying modules: data acquisition, feature extraction and fusion, verification, and storage.

• We design a one-class verification model using the Local Outlier Factor (LOF) classifier that incorporates strong features extracted from user pickup gestures and face images, leveraging TSFresh and a convolutional neural network (CNN), respectively, for efficient feature engineering.

• We evaluate the proposed mechanism against four diverse attacks using synthetically generated data to substantiate its robustness. We observe that Generative Adversarial Network (GAN) data attacks pose a serious threat to the face modality, in contrast to the pickup gesture modality.

2 RELATED WORK

In this section, we review papers on user verification mechanisms that exploit facial or hand movement biometric modalities to enhance smartphone security. Table 1 compares the face- and hand-movement-based recognition mechanisms that have emerged in recent years. Prior studies (Gupta et al., 2023; Kumar et al., 2018) have demonstrated the utility of motion sensors, touch gestures, and their fusion in securing smart devices, addressing evolving security needs. The integration of biological and behavioral biometrics has the potential to enhance user recognition systems by effectively addressing orthogonal requirements such as security and convenience (Gupta et al., 2023).

Fathy et al. (Fathy et al., 2015) study the face modality for active authentication. They benchmark four approaches for face matching on videos of faces captured by the smartphone’s front-facing camera. The first method decomposes face images into Eigenfaces (EF). The second approach improves the basic Eigenfaces technique using Linear Discriminant Analysis (LDA), i.e., Fisherfaces (FF). The third approach utilizes Large-Margin Nearest Neighbour (LMNN), a metric learning method designed to improve classification performance, particularly when the features have different scales. The fourth approach utilizes Sparse Representation-based Classification (SRC), expressing faces as a linear combination of a dictionary of face images. Amos et al. (Amos et al., 2016) present OpenFace, a facial recognition library that emphasizes smartphone applications. The library utilizes a modified version of FaceNet’s nn4 network trained with a triplet loss function. When tested on the Labeled Faces in the Wild (LFW) dataset, it achieved an accuracy of 92.92%.

Table 1: A comparison of recent face- and hand-movement-based recognition mechanisms based on the algorithm/classifier used, dataset, and performance.

| Modality/Reference | Algorithm/Classifier | Dataset | Performance |
|---|---|---|---|
| Face (Fathy et al., 2015) | Eigenfaces (EF), Fisherfaces (FF), Large-Margin Nearest Neighbour (LMNN), Sparse Representation-based Classification (SRC) | 750 videos from 50 different users | Recognition rates: EF 93.25%, FF 93.53%, LMNN 94.65%, SRC 93.25% |
| Face (Amos et al., 2016) | FaceNet’s nn4 network with a triplet loss function | Labeled Faces in the Wild (LFW) | Accuracy 92.92% |
| Face (Chen et al., 2018) | DNN | LFW | Accuracy 99.55% |
| Face (Duong et al., 2019) | DNN | LFW, Megaface | Accuracy: LFW 99.73%, Megaface 91.3% |
| Hand movement (Centeno et al., 2017) | Motion patterns, Autoencoder | 120 subjects | EER 2.2% |
| Hand movement (Amini et al., 2018) | Long Short-Term Memory (LSTM), frequency and time domain features from motion sensors | 47 subjects | Accuracy 96.70% |
| Hand movement (Choi et al., 2021) | IMU, ResNets, LSTMs | 44 subjects | Accuracy 96.31% |
| Hand movement (Li et al., 2021) | CNNs | 100 subjects | EER 1.0% |
| Hand movement (Sun et al., 2016) | Absolute distances measurement | 19 subjects | False Acceptance Rate (FAR) 0.27%, False Rejection Rate (FRR) 4.65% |
| Hand movement (Boshoff et al., 2023) | Single class classifier | 10 subjects | Accuracy 81.51%, TAR 82.45% |
| Face, voice, swipe (Gupta, 2020) | Ensemble Bagged Tree | 86 subjects | TAR 96.48%, FAR 0.02% |

Chen et al. (Chen et al., 2018) design MobileFaceNets, which use fewer than one million parameters, making the model suitable for real-time face verification on mobile and embedded devices. MobileFaceNet verified faces in the LFW dataset with 99.55% accuracy. The input resolution is also changed from 112 × 112 to either 112 × 96 or 96 × 96 to lower the overall computational cost. Duong et al. (Duong et al., 2019) propose MobiFace, which verified the faces in the LFW database with 99.73% accuracy and those in the Megaface database with 91.3% accuracy. MobiFace combines 3 × 3 convolutional layers and depth-wise separable convolutional layers with a series of Bottleneck blocks and Residual Bottleneck blocks. Additionally, it incorporates a 1 × 1 convolutional layer and a fully connected layer. A fast down-sampling strategy is also applied, quickly reducing the spatial dimensions of layers or blocks whose input size exceeds 14 × 14.

A recent study by Zhao et al. (Zhao et al., 2023) proposes AttAuth, which continuously authenticates users using touch patterns and the associated Inertial Measurement Unit (IMU) readings. The authors utilize TCA-RNN, a temporal and channel-specific attention-based recurrent neural network that is well suited to continuous motion data and non-continuous touch patterns. AttAuth is evaluated on a dataset of 100 users, achieving an EER of 2.5%. Centeno et al. (Centeno et al., 2017) propose a continuous smartphone authentication system that relies on motion data recorded by an accelerometer while participants engage in three activities (browsing a map, reading, and writing content) and two states of bodily movement (seated and ambulatory). The system authenticates users for online services and relies on a deep learning autoencoder to classify genuine and impostor users, achieving an EER as low as 2.2% in a continuous authentication setup. Along the same lines, DeepAuth (Amini et al., 2018) presents a framework for continuously re-authenticating users of mobile shopping apps. DeepAuth re-authenticates a user with only 20 seconds of hand movement data with 96.70% accuracy. The authors evaluate the mechanism on a dataset of 47 users consisting of the acceleration (captured by the accelerometer) and rotation (captured by the gyroscope) of users’ hand movements while they shopped on the target app. The data is transformed into a series of time- and frequency-domain features, which are subsequently input into an LSTM model with negative sampling to build a re-authentication framework. The LSTM-based model outperforms four classical classifiers, namely Gradient Boosting, Logistic Regression, Random Forest, and Support Vector Machine, achieving significant improvements in accuracy.

Choi et al. (Choi et al., 2021) propose a continuous user authentication model that implicitly utilizes hand-object manipulation behavior. This behavior is acquired through a system based on inertial measurement units (IMUs) mounted on the fingers and hands. The method applies deep residual networks (ResNets) and bidirectional LSTMs to learn discriminative features from the hand-object interactions and to classify whether the genuine user or an impostor performs the interaction. The authors use two public datasets to evaluate the method, obtaining an average accuracy of 96.31%. Li et al. (Li et al., 2021) propose DeFFusion for continuous authentication, which uses deep feature fusion with Convolutional Neural Networks (CNNs). The system employs the accelerometer and gyroscope to record users’ mixed motion patterns, encompassing movements such as touch gestures on screens and motion patterns such as arm movements and gait. The system utilizes CNNs to extract features from various modalities, including touch gestures, keystrokes, and accelerometer data. Subsequently, a deep feature fusion network generates a unified feature vector representing user behavior by fusing features at different levels of abstraction. The classification model is designed using a one-class support vector machine (OC-SVM) classifier, which reported a mean EER of 1.00%.

DIVERAUTH (Gupta, 2020) is a driver verification mechanism designed to ensure the security and safety of riders using on-demand ride and ride-sharing services. The mechanism employs the Ensemble Bagged Tree classifier, which exploits swipe, voice, and facial modalities to verify drivers as they interact with the client application while accepting a new ride. When evaluated on an 86-user dataset, the mechanism reported a TAR and FAR of 96.48% and 0.02%, respectively.

Sun et al. (Sun et al., 2016) propose a user authentication mechanism that relies on 3D hand gesture signatures. This approach utilizes 3D acceleration information obtained through an accelerometer when a user performs gestures to unlock their phone. The mechanism, which matches gestures using absolute distance measurement, is evaluated on a 19-user dataset, reporting a FAR of 0.27% and an FRR of 4.65%. Boshoff et al. (Boshoff et al., 2023) propose a gesture-based authentication mechanism that recognizes a user by exploiting the motion of removing the smartphone from the pocket. The authors design a classification model using a one-class classifier and evaluate it on 500 samples collected from 10 users, achieving an accuracy of 81.51% with a TAR of 82.45%.

3 PILOT STUDY

3.1 Threat Model

Our increasing reliance on smartphones for numerous tasks makes them a lucrative target for adversaries (Gupta et al., 2019). Users can be susceptible to financial fraud, data theft, or other adverse incidents if their smartphones are misplaced or stolen. Users can select knowledge-based or biometric-based user verification. Studies have identified numerous shortcomings in knowledge-based verification (Liang et al., 2020; Yang et al., 2022). In contrast, biometric mechanisms can provide more security, but they are used as a secondary tier of verification. Should biometric authentication fail (including due to a forced failure), the device falls back to knowledge-based authentication, which can be easily breached. We consider a scenario in which the adversary possesses a user’s smartphone, is aware of both types of verification mechanisms available on the device, and is capable of breaching its security using generated data. We then evaluate the robustness of the proposed baseline mechanism using synthetically generated data, as explained further in Section 5.

3.2 System Architecture

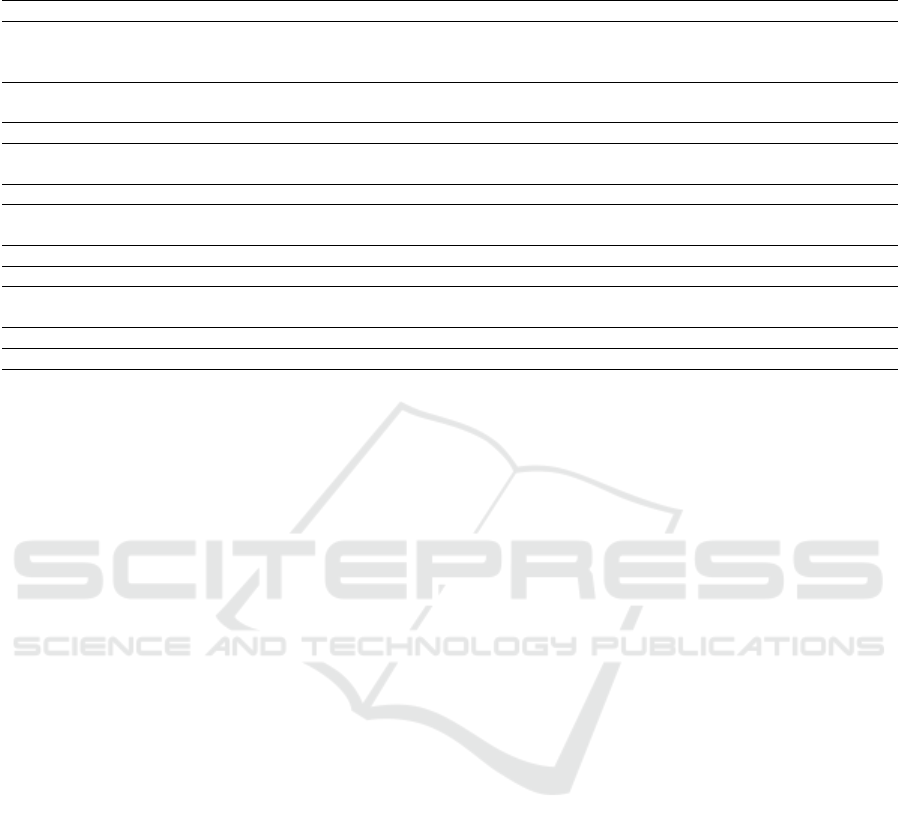

Figure 2 presents the system architecture of the proposed mechanism. It comprises four modules: Data Acquisition, Feature Extractor and Fusion, Classifier, and Storage. The Data Acquisition module relies on four integrated motion sensors that capture the micro hand movements of users when they pick up the smartphone, along with a front camera for capturing their facial images. Section 4.2 explains how the Feature Extraction and Fusion module extracts features from a user’s pickup gesture using the Tsfresh library and from cropped face images using a CNN; these features are fused to generate a unique signature. Section 4.3 describes the one-class classifier used to design a user verification model. During enrollment, the classifier is trained with a user’s pickup gesture and face data to obtain a verification model, and the Storage module securely stores the trained models. During normal operation, the input query, i.e., the fused pickup gesture and face features, is fed directly to the user’s trained model, which accepts or rejects the query.
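The enrollment and verification flow described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors’ implementation: the in-memory `ModelStore` class and the `fuse`, `enroll`, and `verify` functions are hypothetical, and the one-class model is replaced by a simple nearest-neighbour distance threshold purely as a stand-in for the LOF classifier of Section 4.3.

```python
import numpy as np


class ModelStore:
    """Hypothetical stand-in for the Storage module: maps user IDs to trained models."""

    def __init__(self):
        self._models = {}

    def save(self, user_id, model):
        self._models[user_id] = model

    def load(self, user_id):
        return self._models[user_id]


def fuse(pickup_features, face_embedding):
    # Feature-level fusion: concatenate the pickup and face feature vectors.
    return np.concatenate([pickup_features, face_embedding])


def enroll(store, user_id, fused_samples, quantile=0.95):
    # Stand-in one-class model (assumption, not LOF): keep the enrollment samples
    # and a threshold taken from their leave-one-out nearest-neighbour distances.
    X = np.asarray(fused_samples, dtype=float)
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    threshold = np.quantile(d.min(axis=1), quantile)
    store.save(user_id, {"samples": X, "threshold": threshold})


def verify(store, user_id, fused_query):
    # Accept the query if it lies close enough to the user's enrollment data.
    model = store.load(user_id)
    dist = np.linalg.norm(model["samples"] - fused_query, axis=1).min()
    return bool(dist <= model["threshold"])
```

Enrollment runs once per user and the stored model is reused for every later query, mirroring the "Enrollment? Yes/No" branch of the architecture.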

3.3 Sensors Details

The mechanism uses the built-in front camera and four motion sensors embedded in smartphones, viz. the Accelerometer, Gyroscope, Magnetometer, and Gravity sensor. The front-facing camera is required for capturing the user’s face image, while the motion sensors are necessary to detect the pickup gesture. The Accelerometer measures acceleration in three-dimensional space. Additionally, the Gyroscope is employed to identify rotational angle changes over time, sensing spin and twist movements. The Magnetometer helps determine magnetic north by detecting the direction of the strongest magnetic force at a specific location. Lastly, the Gravity sensor measures the direction and magnitude of gravity, completing the set of sensors required for designing the system.

[Figure 2 panels: Data Acquisition (Motion Sensors: PICKUP; Front Camera: FACE); Features Extractor and Fusion (Tsfresh & ResNet); Enrollment? (Yes: Classifier, Train OC models; No: Yes/No decision); Storage (Trained Models).]

Figure 2: The mechanism acquires a user’s pickup gesture from a resting position (i.e., when the user first picks up her smartphone) to a raised position (i.e., when the user brings it in front of her and presses a button to take a selfie) and captures a face image. Data Acquisition, Feature Extractor and Fusion, Classifier, and Storage are the major modules in the proposed system.

4 USER VERIFICATION MECHANISM DESIGN METHODOLOGY

This section explains the methodology for dataset generation, feature extraction, and classifier selection used to design the proposed user verification mechanism, which serves as a baseline for robustness evaluation.

4.1 Datasets

We generate a chimerical dataset comprising 86 users with 140 samples per user, containing pickup gesture data and face images obtained from two publicly available datasets, i.e., the pickup gesture dataset (Gupta et al., 2020) and the MOBIO dataset (McCool et al., 2012). It can be safely assumed that acquiring the two modalities from two different subjects does not affect the proof-of-concept design, because the two modalities are independent biometrics (Gupta et al., 2022a). However, we ensure that data belonging to male and female users in both datasets are combined appropriately. Moreover, combining independent datasets self-anonymizes the participants for experimental purposes, which indirectly benefits their privacy (Joshi et al., 2024).

4.2 Feature Extraction and Fusion

Feature extraction derives more interpretable features from sensor data, reducing data dimensionality, improving the separability of data points, and minimizing the risk of overfitting. Feature fusion combines data from multiple sources or modalities to improve the robustness of a biometric system. Feature extraction and fusion also help speed up model training and inference.

4.2.1 Pickup Gesture

The motion sensors (Android, 2025b) described in Section 3.3 capture the user’s pickup gesture in three-dimensional space, i.e., along the X, Y, and Z axes. We extract discriminative features from the three raw streams per sensor using the Tsfresh library (Tsfresh, 2025), which provides many features useful for systematic feature engineering on sequential data. Identifying a suitable feature distribution can help reduce redundancy in the classifier space (Perera and Patel, 2021). The Tsfresh library offers sixty-three methods for characterizing time series, from which a comprehensive set of 794 time-series features can be computed (Christ et al., 2018). These methods derive simple features such as absolute energy, entropy, linear least-squares regression, mean, variance, skewness, kurtosis, peaks, and valleys, as well as complex features such as time reversal symmetry statistics. The library also provides feature selection capabilities for identifying highly significant attributes and discarding less influential ones. We use this feature extraction and selection process to obtain 100 features per sensor, i.e., 400 features in total across the four sensors.
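To make the simple features named above concrete, the sketch below computes a handful of them (absolute energy, mean, variance, skewness, kurtosis, and a peak count) for each axis of one sensor stream using plain NumPy. It is an illustrative assumption rather than the authors’ pipeline: the paper relies on Tsfresh’s 794-feature extraction followed by feature selection, Tsfresh’s own definitions (e.g., its peak detector and kurtosis estimator) differ in detail from the simplified ones here, and the function names `summarize_axis` and `sensor_features` are hypothetical.

```python
import numpy as np


def summarize_axis(x):
    """Compute a few simple time-series features for one sensor axis (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std()
    centered = x - mean
    # Population skewness and excess kurtosis (defined as 0 for a constant signal).
    skew = (centered**3).mean() / std**3 if std > 0 else 0.0
    kurt = (centered**4).mean() / std**4 - 3.0 if std > 0 else 0.0
    # Simplified peak count: a sample strictly larger than both neighbours.
    peaks = int(np.sum((x[1:-1] > x[:-2]) & (x[1:-1] > x[2:])))
    return {
        "abs_energy": float(np.sum(x**2)),
        "mean": float(mean),
        "variance": float(std**2),
        "skewness": float(skew),
        "kurtosis": float(kurt),
        "n_peaks": peaks,
    }


def sensor_features(stream):
    """Flatten per-axis features of an (n_samples, 3) X/Y/Z stream into one vector."""
    stream = np.asarray(stream, dtype=float)
    features = []
    for axis in range(stream.shape[1]):
        features.extend(summarize_axis(stream[:, axis]).values())
    return np.array(features)
```

For example, `summarize_axis([0, 1, 0, 2, 0])` yields an absolute energy of 5.0 and two peaks; applying `sensor_features` to each of the four sensors and concatenating the results would give the per-observation pickup vector, which the paper instead builds from 100 selected Tsfresh features per sensor.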

We apply min-max normalization to the extracted features of each user. We implement it by fitting the normalization model on the user’s training data and saving the model object. Subsequently, we use the saved model to normalize the user’s testing data and the impostors’ dataset.
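The per-user normalization above, fitted only on the genuine user’s training data and then reused for the test and impostor data, can be sketched as follows. The class name `MinMaxModel` is hypothetical (scikit-learn’s `MinMaxScaler` offers equivalent `fit`/`transform` behaviour). Because the bounds come from training data only, test or impostor values outside the training range map outside [0, 1], which is expected rather than a bug.

```python
import numpy as np


class MinMaxModel:
    """Hypothetical per-user min-max normalizer fitted on the user's training data only."""

    def fit(self, X_train):
        X_train = np.asarray(X_train, dtype=float)
        self.min_ = X_train.min(axis=0)
        span = X_train.max(axis=0) - self.min_
        # Avoid division by zero for features that are constant during training.
        self.scale_ = np.where(span > 0, span, 1.0)
        return self

    def transform(self, X):
        # Apply the stored training bounds to any data (genuine test or impostor).
        return (np.asarray(X, dtype=float) - self.min_) / self.scale_
```

Fitting on the training split alone keeps test and impostor statistics from leaking into the model, which is why the fitted object is saved and reused.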

4.2.2 Face

We use ResNet-100 network using Elasticface

loss (Boutros et al., 2022) to obtain low-dimensional

facial representation (embedding) for a given user’s

image, extracted from the second last layer of the

network. The ResNet-100 network input layer takes

Evaluating a Bimodal User Verification Robustness Against Synthetic Data Attacks

29

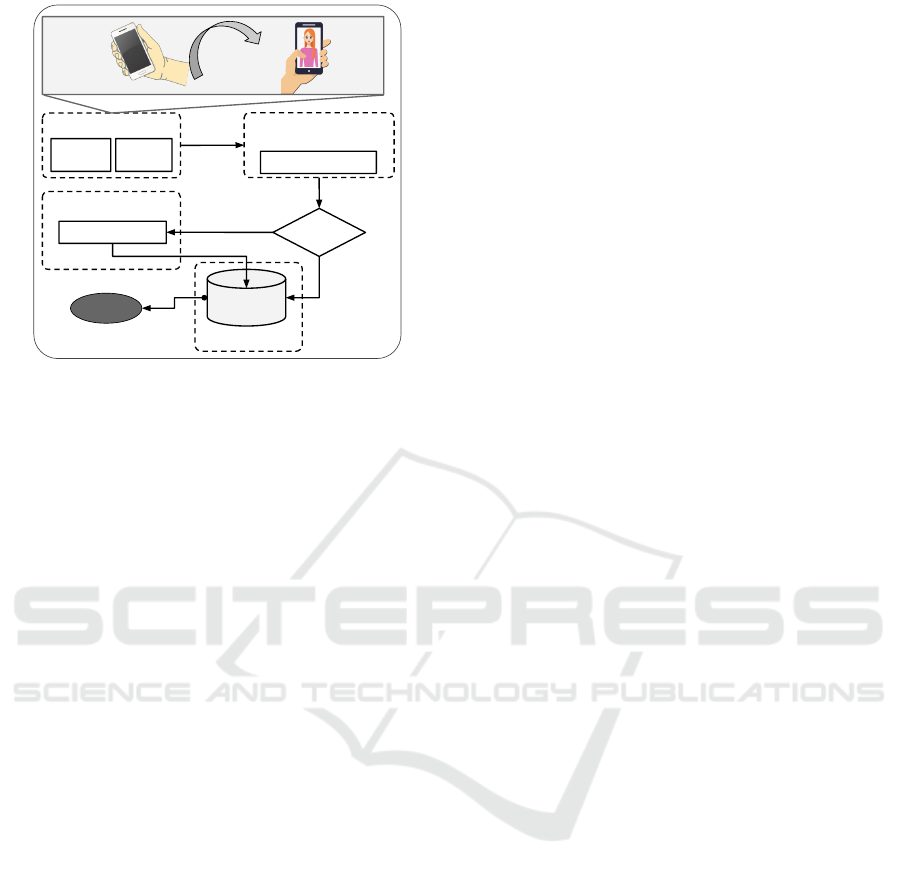

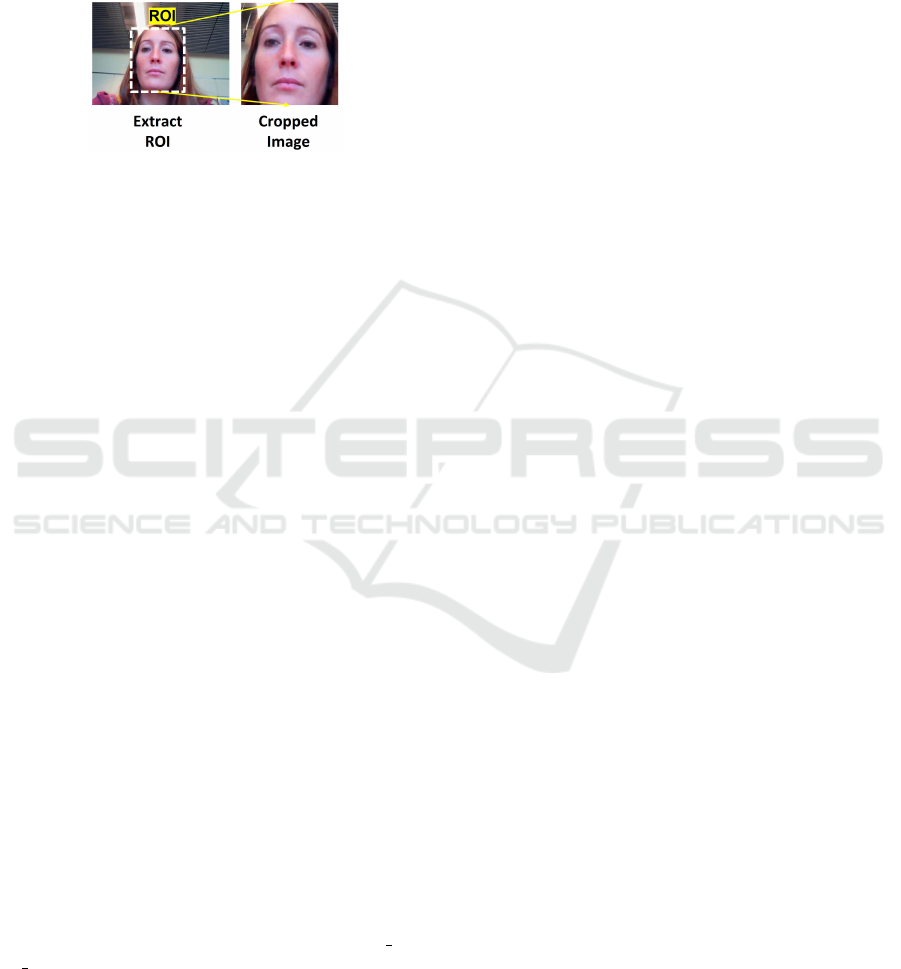

fixed-size face images. We crop the user’s face image,

extracting the ROI using a face detection algorithm as

shown in Figure 3 to feed to the network. The network

evaluation involves processing images with dimen-

sions 112 × 112 × 3. These images are normalized to

ensure pixel values fall within −1 to 1, generating 512

feature embeddings.

Figure 3: Prepossessing image to extract the region of

interest using face detection algorithm
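The cropping and normalization steps can be sketched as below. This is a minimal sketch under assumptions: the bounding box is taken as given (in practice it would come from a face detector, which the paper does not name), resizing uses simple nearest-neighbour sampling instead of the interpolation a production pipeline would likely use, and `x / 127.5 - 1` is one common way of mapping 8-bit pixels to [−1, 1]. The function name `preprocess_face` is hypothetical, and the ResNet-100 embedding step itself is not shown.

```python
import numpy as np


def preprocess_face(image, box, size=112):
    """Crop a face ROI, resize it to size x size, and scale pixels to [-1, 1].

    image: (H, W, 3) uint8 array.
    box: (top, left, height, width), assumed to come from a face detector.
    """
    top, left, h, w = box
    roi = image[top:top + h, left:left + w]
    # Nearest-neighbour resize (assumption): pick evenly spaced source rows/columns.
    rows = (np.arange(size) * h // size).astype(int)
    cols = (np.arange(size) * w // size).astype(int)
    resized = roi[rows][:, cols]
    # Map 8-bit values 0..255 linearly onto [-1, 1].
    return resized.astype(np.float32) / 127.5 - 1.0
```

The output has shape (112, 112, 3), matching the network input described above.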

4.2.3 Fusion Strategy

We apply both feature- and score-level fusion, as fusion plays a crucial role in improving accuracy and robustness.

• Feature Fusion: We concatenate the 400 features of the pickup gesture and the 512 features of the face modality to generate a combined dataset with 912 features per observation.

• Score Fusion: We combine the scores produced by the classifier for the pickup gesture and face modality by taking their mean. Raw genuine scores ($\sigma_s$) are transformed into normalized scores ($\sigma_n$) using a logistic function, $\sigma_n = 1/(1 + \exp(-\beta \times \sigma_s))$ (Kumar et al., 2018). The optimal value of $\beta$ is determined by validating model performance on the genuine scores of the training set. The logistic function is preferable to other normalization methods for one-class verification, where only genuine scores are available during the training of a verification model.
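Both fusion strategies can be sketched in a few lines. This is a minimal sketch under stated assumptions: the function names are hypothetical; `beta` is passed in rather than tuned, because the paper selects it on the training genuine scores without specifying the criterion; and raw scores are assumed to be oriented so that larger means more genuine (scikit-learn’s `LocalOutlierFactor.score_samples`, for example, returns the negated LOF, which has this orientation).

```python
import numpy as np


def feature_fusion(pickup_features, face_features):
    # Concatenate per-observation vectors, e.g. 400 + 512 = 912 columns.
    return np.hstack([pickup_features, face_features])


def logistic_normalize(raw_scores, beta):
    # sigma_n = 1 / (1 + exp(-beta * sigma_s)); maps any raw score into (0, 1).
    return 1.0 / (1.0 + np.exp(-beta * np.asarray(raw_scores, dtype=float)))


def score_fusion(pickup_scores, face_scores, beta):
    # Normalize each modality's scores with the same beta (assumption), then average.
    return (logistic_normalize(pickup_scores, beta)
            + logistic_normalize(face_scores, beta)) / 2.0
```

The logistic mapping needs only the raw score and $\beta$, so it can be applied at enrollment time when no impostor scores exist, which matches the one-class motivation given above.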

4.3 Classifier Selection

We employ the Local Outlier Factor (LOF) approach, which assesses the local density deviation of a given data point with respect to its neighboring samples (Gupta et al., 2022b). The anomaly score reflects how isolated the point is compared with its surrounding neighborhood. Fine-tuning the classifier involves (1) choosing the number of neighbors and the nearest-neighbor search algorithm, i.e., ball tree, kd tree, brute force, or auto (which selects among the other three); (2) specifying the contamination, i.e., the expected proportion of outliers; and (3) selecting the distance metric used to compute pairwise distances between samples, e.g., Euclidean or Manhattan distance.
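To show what the LOF score measures, the sketch below implements the classic LOF definition (k-distance, reachability distance, local reachability density) in NumPy for the novelty-detection setting used here: the model is fitted on one user’s training samples only, and each query is scored against them. It is an illustration, not the authors’ code; the hyperparameters listed in this section (algorithm, leaf size, contamination) match those of scikit-learn’s `LocalOutlierFactor`, which is likely what a real deployment would use. Euclidean distance and the function names `fit_lof` and `lof_scores` are assumptions. Scores near 1 indicate a query about as dense as its neighbours (genuine-like); values well above 1 indicate an outlier.

```python
import numpy as np


def _pairwise(A, B):
    # Euclidean distance matrix between rows of A and rows of B (assumed metric).
    return np.linalg.norm(A[:, None, :] - B[None, :, :], axis=-1)


def fit_lof(X_train, k=10):
    """Precompute k-distances and local reachability densities of training data."""
    X_train = np.asarray(X_train, dtype=float)
    d = _pairwise(X_train, X_train)
    np.fill_diagonal(d, np.inf)              # a point is not its own neighbour
    nn = np.argsort(d, axis=1)[:, :k]        # indices of the k nearest neighbours
    nn_dist = np.take_along_axis(d, nn, axis=1)
    k_dist = nn_dist[:, -1]                  # distance to the k-th neighbour
    # reach-dist(p, o) = max(k-distance(o), d(p, o))
    reach = np.maximum(nn_dist, k_dist[nn])
    lrd = 1.0 / reach.mean(axis=1)           # local reachability density
    return {"X": X_train, "k": k, "k_dist": k_dist, "lrd": lrd}


def lof_scores(model, X_query):
    """LOF of each query relative to the training data (about 1 means inlier)."""
    d = _pairwise(np.asarray(X_query, dtype=float), model["X"])
    nn = np.argsort(d, axis=1)[:, :model["k"]]
    dist = np.take_along_axis(d, nn, axis=1)
    reach = np.maximum(dist, model["k_dist"][nn])
    lrd_query = 1.0 / reach.mean(axis=1)
    # Ratio of the neighbours' average density to the query's own density.
    return model["lrd"][nn].mean(axis=1) / lrd_query
```

Accepting or rejecting a query then reduces to comparing its LOF with a threshold, which in library implementations is derived from the contamination setting.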

Hyperparameter search can be an efficient method for optimizing a model’s performance, and the best combination of hyperparameters reduces the risk of overfitting, which is essential for a resilient and accurate model. We apply BayesSearchCV (from the scikit-optimize library) to search for the optimal combination of hyperparameters within a predefined parameter grid. The selected parameters are (i) the number of neighbors: 10, 20, and 25; (ii) the leaf size passed to BallTree or KDTree: 20 and 30; and (iii) the metric: cosine, which computes the cosine distance between two samples. BayesSearchCV samples a fixed number of parameter settings from the specified distributions and uses Bayesian optimization to identify the most promising hyperparameters in the search space.

5 ROBUSTNESS EVALUATION METHODOLOGY

This section outlines the data generation methodology used to establish the robustness of our proposed user verification mechanism. Generated data samples can be classified as either fully synthetic or semi-synthetic (Joshi et al., 2024). Semi-synthetic samples typically represent real subjects with modified semantics, whereas fully synthetic samples are generated directly from a learned distribution, often using generative models or random sampling.

5.1 Attack Scenario 1 (AS1)

The first attack scenario uses data samples that do not belong to the genuine user, i.e., $U_S \notin U_G$. This scenario represents the case of zero-effort attacks (Agrawal et al., 2022). We simulate the attack on individual and combined modalities by querying the genuine user’s model with samples from the other users in the dataset.
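Building the AS1 query set for one genuine user can be sketched as follows, assuming the per-user samples are held in a dictionary keyed by user ID (a hypothetical layout, with a hypothetical function name): every sample of every other user becomes an impostor query against the genuine user’s model. The same construction applies to the pickup, face, and fused feature matrices.

```python
import numpy as np


def zero_effort_queries(samples_by_user, genuine_id):
    """Stack all other users' samples as impostor queries for genuine_id (hypothetical helper)."""
    others = [np.asarray(X) for uid, X in samples_by_user.items() if uid != genuine_id]
    return np.vstack(others)
```

With 86 users, each genuine model is therefore queried by the samples of the remaining 85 users.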

5.2 Attack Scenario 2 (AS2)

We use a statistical method to generate random samples for each user. This scenario represents the case of semi-synthetically generated data. Assume $U_{n \times m}$ is the training dataset of a user with $n$ samples and $m$ features. We first determine the minimum and maximum values in $U_{n \times m}$ and then generate $N$ random samples with $m$ features for the pickup gesture and face modalities. We then combine the random pickup gesture and face samples to generate combined random samples.
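A sketch of the AS2 generator follows. The text does not state whether the minimum and maximum are taken per feature or over the whole matrix, nor which distribution is sampled; this sketch assumes per-feature bounds and a uniform distribution, and the function names are hypothetical.

```python
import numpy as np


def random_samples(U, N, rng=None):
    """Draw N uniform samples within the per-feature [min, max] of U (n x m).

    Per-feature bounds and the uniform distribution are assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    U = np.asarray(U, dtype=float)
    low, high = U.min(axis=0), U.max(axis=0)
    return rng.uniform(low, high, size=(N, U.shape[1]))


def combined_random_samples(U_pickup, U_face, N, rng=None):
    # Concatenate random pickup and face samples into combined (fused) samples.
    rng = np.random.default_rng() if rng is None else rng
    return np.hstack([random_samples(U_pickup, N, rng), random_samples(U_face, N, rng)])
```

Using global bounds instead would only change the `min`/`max` calls to operate over the whole matrix.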


5.3 Attack Scenario 3 (AS3)

A masterprint attack is a deceptive technique, similar to a wolf attack (Nguyen et al., 2022), in which an adversary employs a synthetic biometric sample to mimic a genuine trait. We obtain a master biometric sample for each modality, i.e., pickup gesture and face, by statistically averaging the training datasets ($U_{n \times m}$) across all 86 users, ensuring a representative sample for each modality. The individual master samples of the pickup gesture and face modalities are then combined to generate a combined master biometric sample.
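The AS3 master sample can be sketched as a pooled mean, assuming (the text does not say) that all users’ training samples are pooled before averaging; with an equal number of training samples per user, this equals the mean of the per-user means. The function names are hypothetical.

```python
import numpy as np


def master_sample(training_sets):
    """Average all users' training matrices (each n x m) into one 1 x m sample.

    Pooling all samples before averaging is an assumption.
    """
    pooled = np.vstack([np.asarray(U, dtype=float) for U in training_sets])
    return pooled.mean(axis=0, keepdims=True)


def combined_master_sample(pickup_sets, face_sets):
    # Concatenate the per-modality master samples into one fused master sample.
    return np.hstack([master_sample(pickup_sets), master_sample(face_sets)])
```

The resulting single vector is then submitted to every user’s model, which is what makes it a "master" sample.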

5.4 Attack Scenario 4 (AS4)

We apply generative adversarial networks (GANs) to

generate fully synthetic data. Typically, GANs consist

of a generator and a discriminator that creates syn-

thetic samples using an iterative process until samples

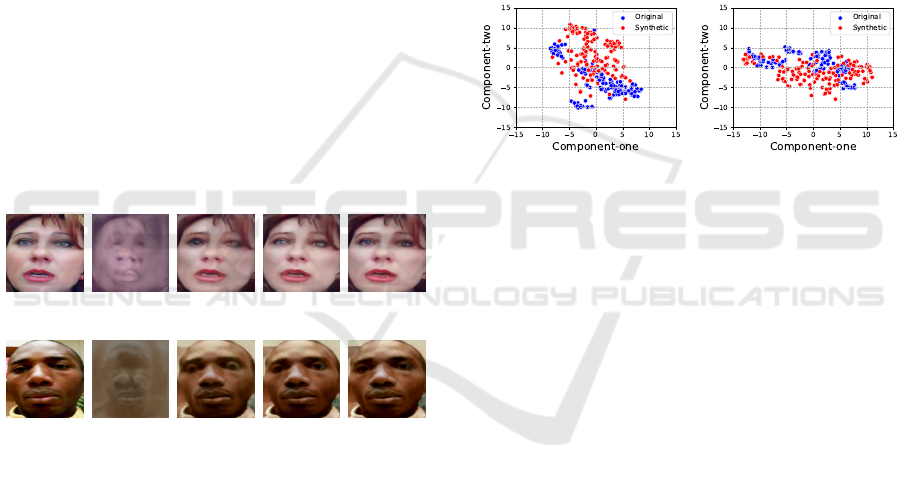

are indistinguishable from real data. Figure 4 displays

arbitrarily selected users’ synthetic images generated

using StyleGAN2 (Karras et al., 2020) after 100, 500,

1000 and 1500 iterations. It can be observed that a

user’s synthetic image after 1500 iterations is highly

comparable to the original image of the user.

User25:Original I-100 I-500 I-1000 I-1500

User62:Original I-100 I-500 I-1000 I-1500

Figure 4: Comparison of the original image quality with

the synthetic images generated at different epoch counts

(100, 500, 1000, and 1500) by the NVIDIA StyleGAN2

generator (Karras et al., 2020). The higher the number of

iterations, the closer the generated faces are to the reference

face.

We utilize the Conditional Tabular GAN (CTGAN) (Xu et al., 2019) to generate synthetic data representing the pickup gesture. CTGAN extends the traditional GAN architecture, using fully connected networks in both the generator and discriminator to capture possible correlations between the features, represented as columns in the table. This allows the model to learn the distribution of the given tabular data and to generate synthetic samples from it. CTGAN overcomes limitations of traditional GANs by effectively handling non-Gaussian and multi-modal distributions through mode-specific normalization (Mo and Kumar, 2022).

Figure 5 compares the genuine and synthetic pickup data of arbitrarily selected users. To visualize a user’s genuine and synthetic pickup data, we use t-SNE (t-Distributed Stochastic Neighbor Embedding), with the perplexity set to 40 and the number of optimization iterations set to 300 (LJPvd and Hinton, 2008). t-SNE converts similarities between data points into joint probabilities and aims to minimize the Kullback-Leibler (KL) divergence between the joint probabilities of the low-dimensional embedding and those of the high-dimensional data. The KL divergence after 300 iterations for User25 and User62 is 0.47 and 0.55, respectively.

[Figure 5 panels: User25 (KL-0.47); User62 (KL-0.56).]

Figure 5: Illustration of original vs. synthetic (generated using CTGAN) pickup gesture data of users 25 and 62. $D_{KL}$ (KL divergence, also known as relative entropy or I-divergence) after 300 iterations determines the difference between the probability distributions of original and synthetic data points in the t-SNE transformed dimensions. A $D_{KL}$ tending to zero means the two probability distributions are closer to identical.
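For reference, the standard t-SNE objective (LJPvd and Hinton, 2008) behind the KL values quoted above is written out below, assuming a standard t-SNE implementation (the paper does not name one; scikit-learn’s `TSNE`, for instance, exposes the final value as its `kl_divergence_` attribute). Here $x_i$ are the original feature vectors, $y_i$ their low-dimensional embeddings, $n$ the number of points, and $\sigma_i$ a per-point bandwidth chosen so that the perplexity $2^{H(P_i)}$ equals the configured value (40 here).

$$
p_{j \mid i} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}{\sum_{k \neq i} \exp\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)}, \qquad
p_{ij} = \frac{p_{j \mid i} + p_{i \mid j}}{2n}, \qquad
H(P_i) = -\sum_{j} p_{j \mid i} \log_2 p_{j \mid i}
$$

$$
q_{ij} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}{\sum_{k \neq l} \left(1 + \lVert y_k - y_l \rVert^2\right)^{-1}}, \qquad
D_{KL}(P \,\Vert\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}
$$

Because $D_{KL} \geq 0$ with equality only when $Q = P$, lower final values indicate an embedding that better preserves the neighbourhood structure of the input points.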

6 EVALUATION

A user verification process can be described as a one-to-one comparison during inference: the legitimacy of a user is verified by comparing the user's biometric probe(s) with their corresponding biometric reference(s). We report the results in terms of the True Acceptance Rate (TAR), the False Acceptance Rate (FAR), and Detection Error Trade-off (DET) curves. The Equal Error Rate (EER) used in Section 6.2 is the operating point at which the FAR equals the False Rejection Rate (FRR = 100% − TAR). Readers interested in these performance metrics can refer to Gupta et al. (2023) for more information.
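Both rates can be computed from match scores, as in the following sketch. It assumes that higher scores mean "more genuine" and that a probe is accepted when its score reaches a fixed threshold. The function name and the toy scores are illustrative only and do not come from the paper.

```python
def verification_rates(genuine_scores, impostor_scores, threshold):
    """Return (TAR, FAR, FRR) in percent for a fixed acceptance threshold.

    A probe is accepted when its score is >= threshold (higher = more genuine).
    """
    accepted_genuine = sum(score >= threshold for score in genuine_scores)
    accepted_impostor = sum(score >= threshold for score in impostor_scores)
    tar = 100.0 * accepted_genuine / len(genuine_scores)    # genuine probes accepted
    far = 100.0 * accepted_impostor / len(impostor_scores)  # impostor/attack probes accepted
    return tar, far, 100.0 - tar                            # FRR = 100% - TAR


# Toy example: three of four genuine probes and one of five attack probes pass.
tar, far, frr = verification_rates([0.9, 0.8, 0.4, 0.95], [0.1, 0.3, 0.85, 0.2, 0.05], 0.5)
print(tar, far, frr)  # 75.0 20.0 25.0
```

In a robustness evaluation, the "impostor" scores would be those of the attack samples presented to a genuine user's model.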

6.1 Results

6.1.1 Performance Evaluation

We train the models using a holdout testing method, segregating each user's samples into separate, non-overlapping training and testing sets. The user's training set is used to generate the one-class verification models. Figure 6 compares, in terms of TAR, the performance of the individual models designed using the pickup gesture and the face, and of their combination (pickup gesture + face) using feature- and score-level fusion.
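The per-user holdout and one-class training can be sketched as follows. The paper states that a LOF classifier is used (Section 8). The choice of scikit-learn's `LocalOutlierFactor` with `novelty=True`, the 70/30 split, `n_neighbors=20`, the synthetic feature vectors, and the helper names are all assumptions made for illustration.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor


def holdout_split(samples, train_fraction=0.7, seed=0):
    """Split one user's samples into non-overlapping training and testing sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(samples))
    cut = int(round(train_fraction * len(samples)))
    return samples[order[:cut]], samples[order[cut:]]


def train_one_class_model(train_samples, n_neighbors=20):
    """Fit a LOF model on the genuine user's training samples only."""
    # novelty=True enables predict() on unseen probes after fitting.
    return LocalOutlierFactor(n_neighbors=n_neighbors, novelty=True).fit(train_samples)


def acceptance_rate(model, probes):
    """Percentage of probes accepted; predict() returns +1 (inlier) or -1 (outlier)."""
    return 100.0 * np.mean(model.predict(probes) == 1)


rng = np.random.default_rng(42)
user_samples = rng.normal(0.0, 1.0, size=(100, 8))  # hypothetical feature vectors of one user
train, test = holdout_split(user_samples)
model = train_one_class_model(train)
tar = acceptance_rate(model, test)                                  # genuine held-out probes
far = acceptance_rate(model, rng.normal(10.0, 1.0, size=(50, 8)))  # far-away impostor probes
```

Because only the genuine user's samples are used for fitting, no impostor data from other users is needed at training time.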

[Figure 6: bar chart of true acceptance rate (%) by model type: Pickup 89.10, Face 98.23, Feature-level fusion 97.73, Score-level fusion 98.09]

Figure 6: Performance (%) of the verification models designed with the pickup gesture, the face, and their combination (pickup gesture + face) using feature- and score-level fusion.

In the unimodal setting, the model achieves average TARs of 89.10% and 98.23% for the pickup gesture and the face, respectively. In the bimodal setting, it achieves average TARs of 97.73% and 98.09% for the combined modality using feature-level and score-level fusion, respectively, when verifying a genuine user.
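The two fusion strategies compared above can be illustrated with the following sketch. The paper does not specify its fusion rules. Feature concatenation and a weighted sum of min-max normalized scores are common choices assumed here, and the equal weights, the normalization bounds, and the function names are hypothetical.

```python
import numpy as np


def feature_level_fusion(pickup_features, face_features):
    """Concatenate each sample's pickup and face feature vectors into one vector."""
    return np.hstack([pickup_features, face_features])


def min_max_normalize(scores, lo, hi):
    """Map raw scores to [0, 1] using bounds estimated beforehand (e.g. on training data)."""
    scores = np.asarray(scores, dtype=float)
    return np.clip((scores - lo) / (hi - lo), 0.0, 1.0)


def score_level_fusion(pickup_scores, face_scores, pickup_bounds, face_bounds, w_pickup=0.5):
    """Weighted-sum rule over min-max normalized per-modality scores."""
    p = min_max_normalize(pickup_scores, *pickup_bounds)
    f = min_max_normalize(face_scores, *face_bounds)
    return w_pickup * p + (1.0 - w_pickup) * f


fused_vectors = feature_level_fusion(np.ones((3, 4)), np.zeros((3, 5)))
fused_scores = score_level_fusion([0.0, 5.0, 10.0], [2.0, 4.0, 6.0], (0.0, 10.0), (2.0, 6.0))
```

With feature-level fusion, a single one-class model is trained on the concatenated vectors. With score-level fusion, one model is trained per modality and only their scores are combined. With a LOF model, for instance, `score_samples` (higher means more inlier-like) could serve as the per-modality score.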

6.1.2 Robustness Evaluation

The robustness of the unimodal and bimodal baseline models is evaluated using the corresponding attack datasets generated as described in Section 5. Table 2 presents the robustness results in terms of FAR. Under AS1, the average FAR is 4.73% for the pickup gesture and 0.44% for the face modality. The average FARs for the pickup gesture and the face are 0% and 20.93% against AS2, 0% each against AS3, and 2.44% and 75.34% against AS4. We note that the pickup gesture is more robust than the face modality against attack scenarios 2, 3, and 4.

Subsequently, we apply feature-level and score-level fusion, which yield average FARs of 0.33% and 1.89%, respectively, against AS1; both are lower than the 4.73% of the pickup gesture alone. With feature-level fusion, average FARs of 15.58%, 0.34%, and 5.46% are observed against AS2 with the combined modality, only the pickup gesture, and only the face compromised, respectively. The FAR remains 0% against AS3 whether the combined or an individual modality is compromised. Against the synthetic data attack (AS4), the feature-level fusion model does not perform well, exhibiting average FARs of 78.72%, 0.81%, and 27.09% with the combined modality, only the pickup gesture, and only the face compromised, respectively. We note that the FAR with the face or the combined modalities compromised is much higher than with only the pickup gesture compromised.

With score-level fusion, average FARs of 4.07%, 1.2%, and 53.49% are observed against AS2 with the combined modality, only the pickup gesture, and only the face compromised, respectively. The FAR remains 0% against AS3 with the combined or the face modality compromised, but increases slightly, to 0.47%, with the pickup gesture compromised. Against the synthetic data attack (AS4), the score-level fusion model also performs poorly, exhibiting average FARs of 56.63%, 14.07%, and 89.53%, respectively. These FARs are higher than those of feature-level fusion when only one modality is compromised (14.07% vs. 0.81% and 89.53% vs. 27.09%), although lower when both are compromised (56.63% vs. 78.72%). Here, too, the FAR with the face or the combined modalities compromised is much higher than with only the pickup gesture compromised.

6.2 Verification Model Analysis

Figure 7 compares the DET curves of the pickup, face, combined feature-level fusion, and combined score-level fusion models for all 86 users. A DET curve closer to the origin (i.e., towards the coordinates [0,0]) indicates better performance. Integrating pickup and facial data at the score level notably enhances the performance of the verification model.

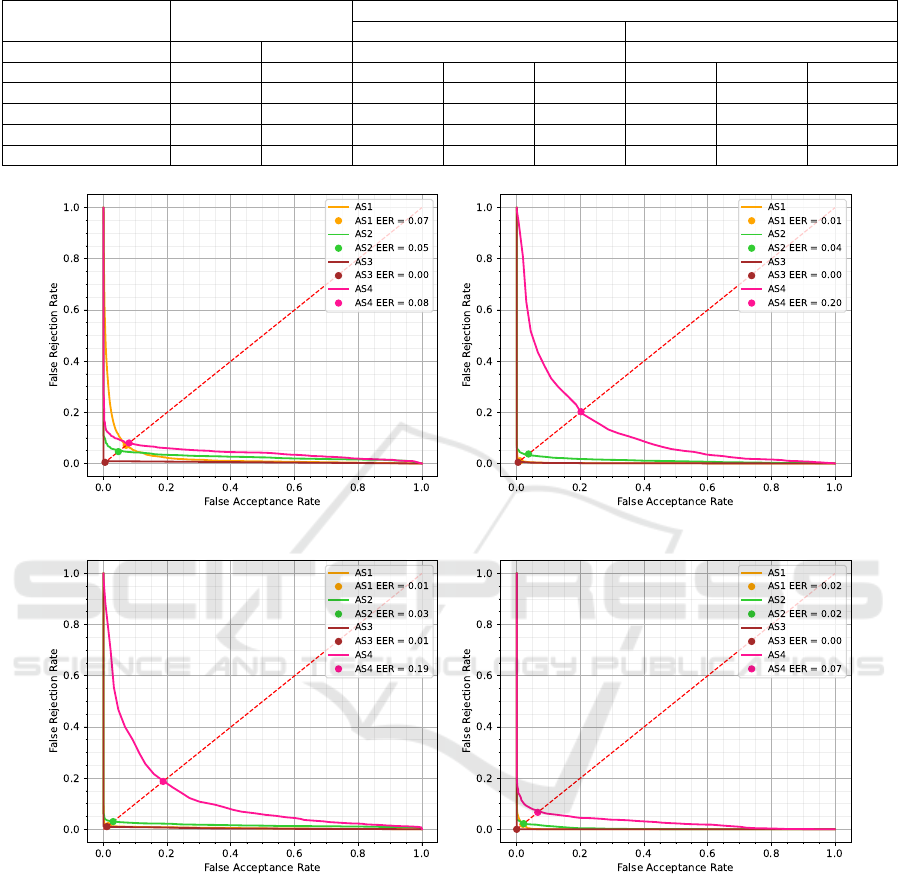

Figures 7a, 7b, 7c, and 7d compare the DET curves for the individual and combined modalities under the four attack scenarios. The pickup modality is relatively more robust to AS4 than the face modality: its EER increases only minimally, by 0.01, under AS4 relative to AS1, and its EER under the AS2 and AS3 attacks only decreases. The face modality is highly susceptible to AS4, with a sharp 20% increase in EER relative to AS1 (baseline). Fusing the modalities tends to improve the robustness of the model, as can be seen in Figures 7c and 7d. In particular, score-level fusion exhibits higher reliability than feature-level fusion against each attack scenario. This suggests that fusing modalities can improve system robustness by mitigating the limitations or uncertainties present in individual modalities.
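The DET curves and EERs discussed above can be derived from genuine and attack scores by sweeping the decision threshold, as in this sketch. It assumes that higher scores mean "more genuine" and returns rates as fractions. It approximates the EER at the threshold where FAR and FRR are closest rather than interpolating between thresholds. The function names are hypothetical.

```python
import numpy as np


def det_points(genuine_scores, impostor_scores):
    """FAR and FRR (fractions) at every candidate threshold; accept if score >= threshold."""
    genuine = np.asarray(genuine_scores, dtype=float)
    impostor = np.asarray(impostor_scores, dtype=float)
    thresholds = np.unique(np.concatenate([genuine, impostor]))  # sorted candidate thresholds
    far = np.array([np.mean(impostor >= t) for t in thresholds])
    frr = np.array([np.mean(genuine < t) for t in thresholds])
    return thresholds, far, frr


def equal_error_rate(genuine_scores, impostor_scores):
    """Approximate EER as the mean of FAR and FRR where |FAR - FRR| is smallest."""
    _, far, frr = det_points(genuine_scores, impostor_scores)
    i = np.argmin(np.abs(far - frr))
    return (far[i] + frr[i]) / 2.0


eer = equal_error_rate([0.4, 0.6, 0.8, 0.9], [0.1, 0.3, 0.5, 0.7])
print(eer)  # 0.25
```

A DET curve is the plot of the `(far, frr)` pairs, usually drawn on normal-deviate axes. Running the sweep once per attack scenario, with that scenario's attack samples as the impostor scores, gives one curve per scenario.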

7 DISCUSSIONS, LIMITATIONS & FUTURE DIRECTIONS

In the context of smartphones, knowledge-based verification mechanisms are easily susceptible to smudge attacks, shoulder-surfing attacks, and password inference attacks. Digital face manipulation algorithms, e.g., swapping, morphing, retouching, or synthesizing, can in turn be detrimental to the underlying facial verification techniques. Further, the Android specifications describe biometrics as a secondary tier of verification, relative to knowledge-based mechanisms, for verifying smartphone users. However, an attacker can deliberately disrupt the secondary verification tier, causing the smartphone to revert to the first verification tier and thus exposing the aforementioned vulnerabilities. Owing to the disparities in leveraged resources, vulnerabilities, and threats, biometrics can provide higher security and convenience than the prevalent knowledge-based authentication for user verification on smartphones (Gupta et al., 2023).

SECRYPT 2025 - 22nd International Conference on Security and Cryptography

Table 2: The robustness evaluation results of each verification model against the four sets of attacks in terms of FAR (%).

| Attack | Pickup | Face | FL: Combine | FL: Pickup | FL: Face | SL: Combine | SL: Pickup | SL: Face |
|---|---|---|---|---|---|---|---|---|
| AS1 | 4.73 | 0.44 | 0.33 | - | - | 1.89 | - | - |
| AS2 | 0.0 | 20.93 | 15.58 | 0.34 | 5.46 | 4.07 | 1.4 | 53.49 |
| AS3 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.47 | 0.0 |
| AS4 | 2.44 | 75.34 | 78.72 | 0.81 | 27.09 | 56.63 | 14.07 | 89.53 |

Pickup and Face: individual-modality baseline models, each evaluated with its own modality compromised. FL and SL: combined (pickup + face) baseline models using feature-level and score-level fusion, respectively, evaluated with the combined modality ("Combine"), only the pickup gesture, or only the face compromised.

Figure 7: Detection Error Trade-off curves for individual and combined modalities under four attack scenarios: (a) Pickup, (b) Face, (c) Combined feature-level fusion, (d) Combined score-level fusion. The pickup modality is relatively more robust to AS4 than the face modality, as its EER increases by only 0.01 under AS4 relative to AS1, whereas the face modality's EER increases by 20% under AS4 relative to AS1 (baseline). Overall, score-level fusion exhibits higher reliability than feature-level fusion.

The advancement of System-on-chip (SoC) technology enables the fabrication of smarter, more compact, highly precise, and efficient sensors. Moreover, these sensors can be easily managed and controlled through simple software APIs, which facilitates the implementation of robust biometric-based user verification systems. Newer smartphones supporting biometric authentication provide built-in hardware security features in which the user's biometric data can be stored securely. Samsung (Samsung, 2025), Xiaomi (Samsung, 2025), Oppo (Zhang et al., 2020), and Huawei (Busch et al., 2020) provide trusted execution environments (TEEs) that can be used to implement the verification module and store sensitive data, thus fulfilling the basic requirements for designing a biometric system. Additionally, leading smartphone manufacturers such as Samsung, Xiaomi, Oppo, and Huawei are continually improving sensors, e.g., cameras and motion sensors, that can be leveraged to acquire both modalities. This opens avenues for replacing conventional PIN- or password-based verification mechanisms with more robust and usable biometric-based user verification mechanisms.

Figure 8 compares the EERs of the four baseline user verification mechanisms against the four attacks. Our evaluation highlights the vulnerability of the facial recognition mechanism to the GAN-generated synthetic data attack. Studies have also demonstrated that DNN-based facial verification models lack robustness against adversarial attacks, including imperceptible perturbations (Pérez et al., 2023; Xu et al., 2022). In contrast, consistent with the results reported in Section 6.1.2, the pickup modality is more robust than the face modality against attack scenarios 2, 3, and 4, as can be observed in Figures 7a and 7b. Both feature-level and score-level fusion improve performance against AS2 compared with the face modality alone (see Figures 7c and 7d). AI-synthesized data can adversely impact the trustworthiness of biometric mechanisms, and future work in this direction can investigate solutions against synthetic data attacks.

8 CONCLUSIONS

We investigated the robustness of behavioral and biological biometric traits against four different types of attacks using a bimodal user verification system. The baseline bimodal mechanism is designed using a one-class verification approach employing a LOF classifier and is evaluated on a dataset of 86 users. It does not require impostor samples from other users for training, which is critical for users' data privacy. With only the pickup modality, the mechanism obtained a TAR of 89.10% with FARs of 4.73%, 0%, 0%, and 2.44% against the four attacks, respectively. With only the face modality, it obtained a TAR of 98.23% with FARs of 0.44%, 20.93%, 0%, and 75.34%, respectively. With modality fusion, the mechanism obtained a TAR of 97.73% with FARs of 0.33%, 15.58%, 0%, and 78.72% against the four attacks after feature-level fusion. After score-level fusion, it obtained a TAR of 98.09% with FARs of 1.89%, 4.07%, 0%, and 56.63%, respectively. As the primary goal is to enhance robustness, the slight reduction in TAR after fusion, relative to the face TAR, can be considered an acceptable trade-off. The fusion of the two modalities enhanced the model's performance and overall robustness. However, improving the model's robustness against synthetic face data attacks remains future work.

[Figure 8: bar chart of EER (%) by attack type (AS1 to AS4) for Pickup, Face, Feature-level fusion, and Score-level fusion]

Figure 8: Comparison of the EER of the user verification mechanism against four attack scenarios (AS1 to AS4) before and after fusion.

ACKNOWLEDGMENT

With the support of MUR PNRR Project PE SERICS - EMDAS (PE00000014) - CUP B53C22003950001 funded by the European Union under NextGenerationEU.

REFERENCES

Agrawal, M., Mehrotra, P., Kumar, R., and Shah, R. R.

(2022). Gantouch: An attack-resilient framework for

touch-based continuous authentication system. IEEE

Transactions on Biometrics, Behavior, and Identity

Science, 4(4):533–543.

Amini, S., Noroozi, V., Pande, A., Gupte, S., Yu, P. S., and Kanich, C. (2018). Deepauth: A framework for continuous user re-authentication in mobile apps. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, pages 2027–2035.

Amos, B., Ludwiczuk, B., Satyanarayanan, M., et al.

(2016). Openface: A general-purpose face recognition

library with mobile applications. CMU School of

Computer Science, 6(2):20.

Android (Accessed on 10-01-2025a). Biometrics. https://source.android.com/docs/security/features/biometric. online web resource.

Android (Accessed on 10-01-2025b). Developers guide: Sensorevent. https://developer.android.com/reference/android/hardware/SensorEvent.html. online web resource.

Boshoff, D., Scriba, A., Nkrow, R., and Hancke, G. P.

(2023). Phone pick-up authentication: A gesture-

based smartphone authentication mechanism. In Pro-

ceedings of the IEEE International Conference on In-

dustrial Technology (ICIT), pages 1–6. IEEE.

Boutros, F., Damer, N., Kirchbuchner, F., and Kuijper, A.

(2022). Elasticface: Elastic margin loss for deep face

recognition. In Proceedings of the IEEE/CVF Con-

ference on Computer Vision and Pattern Recognition,

pages 1578–1587.

Busch, M., Westphal, J., and Müller, T. (2020). Unearthing the TrustedCore: A critical review on Huawei's trusted execution environment. In Proceedings of the 14th USENIX Workshop on Offensive Technologies (WOOT 20).

Centeno, M. P., van Moorsel, A., and Castruccio, S. (2017). Smartphone continuous authentication using deep learning autoencoders. In Proceedings of the 15th Annual Conference on Privacy, Security and Trust (PST), pages 147–1478. IEEE.

Chen, S., Liu, Y., Gao, X., and Han, Z. (2018). Mobilefacenets: Efficient cnns for accurate real-time face verification on mobile devices. In Proceedings of the 13th Chinese Conference on Biometric Recognition, pages 428–438. Springer.

Choi, K., Ryu, H., and Kim, J. (2021). Deep residual

networks for user authentication via hand-object ma-

nipulations. Sensors, 21(9):2981.

Christ, M., Braun, N., Neuffer, J., and Kempa-Liehr, A. W.

(2018). Time series feature extraction on basis of

scalable hypothesis tests (tsfresh–a python package).

Neurocomputing, 307:72–77.

Duong, C. N., Quach, K. G., Jalata, I., Le, N., and Luu, K. (2019). Mobiface: A lightweight deep learning face recognition on mobile devices. In Proceedings of the 10th international conference on biometrics theory, applications and systems (BTAS), pages 1–6. IEEE.

Fathy, M. E., Patel, V. M., and Chellappa, R. (2015).

Face-based active authentication on mobile devices.

In Proceedings of the IEEE International Conference

on acoustics, speech and signal processing (ICASSP),

pages 1687–1691. IEEE.

Gupta, S. (2020). Next-generation user authentication schemes for IoT applications. PhD thesis, University of Trento, Italy.

Gupta, S., Buriro, A., and Crispo, B. (2019). A risk-driven

model to minimize the effects of human factors on

smart devices. In International Workshop on Emerg-

ing Technologies for Authorization and Authentica-

tion, pages 156–170. Springer.

Gupta, S., Buriro, A., and Crispo, B. (2020). A chimerical

dataset combining physiological and behavioral bio-

metric traits for reliable user authentication on smart

devices and ecosystems. Data in brief, 28:104924.

Gupta, S. and Crispo, B. (2023). Usable identity and access

management schemes for smart cities. In Collabora-

tive Approaches for Cyber Security in Cyber-Physical

Systems, pages 47–61. Springer.

Gupta, S., Kacimi, M., and Crispo, B. (2022a). Step

& turn—a novel bimodal behavioral biometric-based

user verification scheme for physical access control.

Computers & Security, 118:102722.

Gupta, S., Kumar, R., Kacimi, M., and Crispo, B. (2022b).

Ideauth: A novel behavioral biometric-based implicit

deauthentication scheme for smartphones. Pattern

Recognition Letters, 157:8–15.

Gupta, S., Maple, C., Crispo, B., Raja, K., Yautsiukhin,

A., and Martinelli, F. (2023). A survey of human-

computer interaction (hci) & natural habits-based be-

havioural biometric modalities for user recognition

schemes. Pattern Recognition, page 109453.

Ibsen, M., Rathgeb, C., Fischer, D., Drozdowski, P.,

and Busch, C. (2022). Digital face manipulation

in biometric systems. In Proceedings of the Hand-

book of Digital Face Manipulation and Detection:

From DeepFakes to Morphing Attacks, pages 27–43.

Springer International Publishing Cham.

Joshi, I., Grimmer, M., Rathgeb, C., Busch, C., Bremond,

F., and Dantcheva, A. (2024). Synthetic data in human

analysis: A survey. IEEE Transactions on Pattern

Analysis and Machine Intelligence.

Karras, T., Aittala, M., Hellsten, J., Laine, S., Lehtinen, J.,

and Aila, T. (2020). Training generative adversarial

networks with limited data. In Proceedings of the

NeurIPS, pages 1–12.

Kumar, R., Kundu, P. P., and Phoha, V. V. (2018). Continuous authentication using one-class classifiers and their fusion. In Proceedings of the 4th International Conference on Identity, Security, and Behavior Analysis (ISBA), pages 1–8. IEEE.

Li, Y., Tao, P., Deng, S., and Zhou, G. (2021). Deffusion:

Cnn-based continuous authentication using deep fea-

ture fusion. ACM Transactions on Sensor Networks

(TOSN).

Liang, Y., Samtani, S., Guo, B., and Yu, Z. (2020). Behav-

ioral biometrics for continuous authentication in the

internet-of-things era: An artificial intelligence per-

spective. IEEE Internet of Things Journal, 7(9):9128–

9143.

LJPvd, M. and Hinton, G. (2008). Visualizing high-dimensional data using t-sne. Journal of Machine Learning Research, 9:2579–2605.

Markert, P., Bailey, D. V., Golla, M., Dürmuth, M., and Aviv, A. J. (2021). On the security of smartphone unlock pins. ACM Transactions on Privacy and Security (TOPS), 24(4):1–36.

McCool, C., Marcel, S., Hadid, A., Pietikäinen, M., Matejka, P., Černocký, J., Poh, N., Kittler, J., Larcher, A., Levy, C., et al. (2012). Bi-modal person recognition on a mobile phone: using mobile phone data. In Proceedings of the International Conference on Multimedia and Expo Workshops (ICMEW), pages 635–640. IEEE.

Mo, J. H. and Kumar, R. (2022). ictgan–an attack mitigation

technique for random-vector attack on accelerometer-

based gait authentication systems. In Proceedings of

the IEEE International Joint Conference on Biomet-

rics (IJCB), pages 1–9. IEEE.

Nguyen, H. H., Marcel, S., Yamagishi, J., and Echizen,

I. (2022). Master face attacks on face recognition

systems. IEEE Transactions on Biometrics, Behavior,

and Identity Science, 4(3):398–411.

Perera, P. and Patel, V. M. (2021). A joint representation

learning and feature modeling approach for one-class

recognition. In 2020 25th International Conference on

Pattern Recognition (ICPR), pages 6600–6607. IEEE.

Pérez, J. C., Alfarra, M., Thabet, A., Arbeláez, P., and Ghanem, B. (2023). Towards characterizing the semantic robustness of face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 315–325.

Ramachandran, S., Nadimpalli, A. V., and Rattani, A.

(2021). An experimental evaluation on deepfake de-

tection using deep face recognition. In Proceedings

of the International Carnahan Conference on Security

Technology (ICCST), pages 1–6. IEEE.

Samsung (Accessed on 10-01-2025). 3 embedded hardware security features your smartphone needs. https://insights.samsung.com/2018/06/22/3-embedded-hardware-security-features-your-smartphone-needs/. online web resource.

Smith-Creasey, M. (2024). Continuous biometric authenti-

cation systems: An overview.

Sun, Z., Wang, Y., Qu, G., and Zhou, Z. (2016). A 3-d

hand gesture signature based biometric authentication

system for smartphones. Security and Communication

Networks, 9(11):1359–1373.

Tolosana, R., Vera-Rodriguez, R., Fierrez, J., Morales, A.,

and Ortega-Garcia, J. (2022). An introduction to dig-

ital face manipulation. In Proceedings of the Hand-

book of Digital Face Manipulation and Detection:

From DeepFakes to Morphing Attacks, pages 3–26.

Springer International Publishing Cham.

Tsfresh (Accessed on 10-01-2025). Overview on extracted features. https://tsfresh.readthedocs.io/en/latest/text/list_of_features.html. online web resource.

Xu, L., Skoularidou, M., Cuesta-Infante, A., and Veera-

machaneni, K. (2019). Modeling tabular data using

conditional gan. In Proceedings of the advances in

neural information processing systems, volume 32.

Xu, Y., Raja, K., Ramachandra, R., and Busch, C. (2022).

Adversarial attacks on face recognition systems. In

Proceedings of the Handbook of Digital Face Manip-

ulation and Detection: From DeepFakes to Morphing

Attacks, pages 139–161. Springer International Pub-

lishing Cham.

Yang, G.-C., Hu, Q., and Asghar, M. R. (2022). Tim:

Secure and usable authentication for smartphones.

Journal of Information Security and Applications,

71:103374.

Zhang, Q., Wang, L., Zhou, H., Jiang, K., and He, W.

(2020). Method and apparatus for securely calling fin-

gerprint information, and mobile terminal. US Patent

10,713,381.

Zhao, C., Gao, F., and Shen, Z. (2023). Attauth: An implicit

authentication framework for smartphone users using

multi-modality data. IEEE Internet of Things Journal.
