Misbehavior Detection in Connected Vehicle: Pre-Bayesian Majority Game Framework

Adil Attiaoui (1, 3, a), Mouna Elmachkour (2), Abdellatif Kobbane (1, b) and Marwane Ayaida (3, c)

1 ENSIAS, Mohammed V University in Rabat, Morocco

2 FSJES-SALE, Mohammed V University in Rabat, Morocco

3 Polytechnique Hauts-de-France, CNRS, Univ. Lille, UMR 8520 - IEMN, F-59313 Valenciennes, France

Keywords:

Misbehavior Detection, Connected Vehicles, Bayesian Games, Majority Game, Vehicular Ad Hoc Networks, Trust Mechanisms, Collaborative Decision-Making, Ephemeral Networks.

Abstract:

Ephemeral networks, such as vehicular ad hoc networks, face significant security challenges due to their transient nature and susceptibility to malicious nodes. Traditional trust mechanisms often struggle with dynamic topologies and short-lived interactions, particularly when adversarial nodes spread misinformation. This paper proposes a dual-game theoretical framework combining pre-Bayesian belief updates with majority voting to enhance collaborative misbehavior detection in decentralized vehicular networks. The approach models node interactions through two sequential games: a pre-Bayesian game where nodes assess information credibility based on individual beliefs, followed by a majority game that aggregates collective decisions to refine trust evaluations. Simulations across scenarios with varying malicious node proportions demonstrate the framework’s adaptability, showing consistent belief convergence toward accurate classifications despite increased adversarial influence. Results indicate robust performance even when 40% of nodes exhibit malicious behavior, though convergence delays highlight challenges in highly adversarial environments. The study underscores the importance of maintaining benign node majorities for system stability and suggests future integrations with machine learning for scalability. This work provides a foundation for secure, real-time decision-making in applications requiring reliable ephemeral networks, such as connected vehicle systems.

1 INTRODUCTION

The rapid expansion of peer-to-peer communication between wireless devices has led to the emergence of ephemeral networks, characterized by their transient nature due to the unpredictable presence of mobile nodes. These networks, which are prevalent in applications such as vehicular ad hoc networks (VANETs), mobile social networks, and wireless sensor networks, offer substantial utility but are also highly vulnerable to malicious activities. Malicious nodes can manipulate data, disseminate false information, or disrupt communication, thereby compromising network performance. For example, in VANETs, a malicious vehicle might inject false traffic information, causing significant disruptions or even accidents, while others may refuse to forward packets, undermining routing efficiency.

a https://orcid.org/0009-0007-9549-6692

b https://orcid.org/0000-0003-3593-4084

c https://orcid.org/0000-0003-2319-3493

While connected vehicles promise enhanced road safety and improved driver experiences by reducing accidents, they also require significant investments in infrastructure and equipment to ensure reliable, real-time road perception. The accuracy and reliability of these systems depend on robust validation mechanisms capable of detecting and mitigating malicious or erroneous data. Hardware or software failures, incorrect data processing, and data reliability issues can degrade data quality, further complicating the system’s ability to deliver accurate road perception.

In the absence of centrally managed oversight in these transitory networks, ensuring trust and cooperation among neighboring nodes becomes critical. However, individual nodes often exhibit selfish behavior, driven by resource constraints and uncertainties about the intentions and reliability of other participants. These uncertainties, ranging from the accuracy of detection mechanisms to the potential value of collaboration, can deter cooperation, hampering efforts to enhance security and performance (Ben Elallid et al., 2023). Various strategies have been proposed to detect and mitigate misbehavior in such networks. Comprehensive reviews, such as (van der Heijden et al., 2019) and (Loukas et al., 2019), highlight diverse solutions for cyber-physical systems (CPSs) and intelligent transportation systems, particularly for transient networks with unique node constraints. For example, (Liu et al., 2006) examined attacker-defender interactions in ad hoc networks, modeling scenarios where malicious nodes could choose to attack or not while defenders alternated between monitoring and non-monitoring states. Their game-theoretic analysis provided valuable insights into optimizing independent decision-making strategies (Manshaei et al., 2013). However, this approach focused on individual agent interactions and did not address the broader challenge of identifying misbehaving nodes across an entire network.

Attiaoui, A., Elmachkour, M., Kobbane, A. and Ayaida, M.

Misbehavior Detection in Connected Vehicle: Pre-Bayesian Majority Game Framework.

DOI: 10.5220/0013359100003941

In Proceedings of the 11th International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS 2025), pages 529-536

ISBN: 978-989-758-745-0; ISSN: 2184-495X

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Reputation-based systems rely on building a credit history for nodes based on their past behavior (Hendrikx et al., 2015). While effective in some contexts, they require continuous monitoring and long-term data storage, making them less suitable for ephemeral networks where connections are brief (Hendrikx et al., 2015). To address trust and reliability in vehicular networks, (Attiaoui et al., 2024) propose a reputation-based game-theoretic trust mechanism that dynamically adjusts trust matrices based on past interactions. Unlike traditional approaches, their framework penalizes malicious behavior while rewarding truthful contributions without requiring persistent data storage.

In other research, a local revocation process has been proposed to account for the dynamic nature of ephemeral networks (Raya et al., 2008; Arshad et al., 2018). In this process, a benign node, acting as an initiator, is assumed to detect or suspect a malicious node. It then broadcasts the identification (ID) of the target node, marking it as an accused node. Subsequently, neighboring benign nodes participate in a local voting-based mechanism to determine whether the target node should be discredited. The authors of (Raya et al., 2008) and (Liu et al., 2010) analyzed this local revocation process as a sequential voting game, wherein a benign node can adopt one of three strategies regarding the target node: voting, abstaining, or self-sacrificing. A benign node’s decision to vote or abstain is guided by economic considerations within the game. Alternatively, it may employ a self-sacrificing strategy, invalidating both its own identity and that of the target node.

The authors of (Liu et al., 2010) identified two major limitations of revocation processes in VANETs: first, the assumption of complete information among nodes, and second, the false-positive and false-negative rates of misbehavior detection. To address these issues, (Alabdel Abass et al., 2017) introduced an evolutionary game model in which benign nodes cooperate in a voting game to refine revocation decisions and reduce unnecessary or overly aggressive actions. Various other studies proposed weighted voting schemes in clustering architectures (Raja et al., 2015; Kim, 2016) and collaborative false accusation prevention (Masdari, 2016). (Naja et al., 2020) tackled decision-making problems in VANETs by proposing a graph-based Markov decision process (GMDP) model to find the optimal dissemination of alert messages. Their approach minimizes redundancy and delay while maximizing message reachability, leveraging Mean Field Approximation (MFA) to capture inter-vehicle dependencies in decision-making.

In (Diakonikolas and Pavlou, 2019), the authors investigated the inverse power index problem in designing weighted voting games, concluding that the problem is computationally complex for a wide range of semivalue families. In another study, (Subba et al., 2016) proposed an intrusion detection system (IDS) utilizing leader election concepts and a hybrid IDS model to reduce continuous node monitoring in mobile ad hoc networks (MANETs). They later expanded on this work in (Subba et al., 2018), incorporating a multi-layer game-theoretic approach to address challenges related to dynamic network topologies in VANETs. While these methodologies effectively reduce IDS traffic, they do not consider the uncertainties of node behavior or the role of incentives in local voting games. Other researchers (Kerrache et al., 2018) explored the impact of incentives on misbehavior detection within UAV-assisted VANETs. The authors of (Silva et al., 2019) introduced a voting mechanism designed to generate new strategies from existing expert-derived ones, selecting the most effective strategies while accounting for opponent models. However, this framework does not specifically address the challenge of identifying malicious nodes in ephemeral networks.

In the absence of centralized oversight, ensuring trust and cooperation among neighboring nodes in ephemeral networks becomes critical. However, individual nodes often act selfishly due to resource constraints and uncertainties about the reliability and intentions of others. Addressing these challenges requires incentive mechanisms that encourage collaboration and adapt to varying behaviors under uncertain conditions. Such mechanisms are essential to detect malicious nodes, enhance security, and ensure the reliability and performance of ephemeral networks, particularly in critical applications like connected vehicles.

2 SYSTEM MODEL

2.1 Assumptions and Problem Description

2.1.1 Network Model

We investigate the problem of detecting misbehavior in networks where connections between nodes are short-lived and centralized management is not available. To illustrate our approach, we use vehicular ad hoc networks (VANETs) as a typical example of ephemeral networks. In this setting, nodes, such as vehicles, are assumed to be equipped with the necessary capabilities for wireless communication. The network operates over a contention-based medium, such as IEEE 802.11p, which reflects the characteristics of wireless channel access in VANETs (Raya et al., 2008). It is also assumed that a certificate authority or similar entity has already authenticated the nodes, ensuring that each node has a unique identifier.

Within this network, we classify the nodes into two categories: malicious and benign. Malicious nodes aim to disrupt operations by spreading false information. For example, a malicious vehicle might transmit incorrect data to manipulate the behavior of a following vehicle, potentially altering the optimal distance between them (Ferdowsi et al., 2018). Benign nodes, on the other hand, are equipped with monitoring capabilities designed to detect irregularities or fraudulent signals. For example, an autonomous vehicle might use anti-spoofing techniques to identify and counteract fake GPS signals (Behfarnia and Eslami, 2018).

2.1.2 Problem Definition

The widespread adoption of connected vehicles offers

the potential to significantly enhance road safety and

driving experiences by reducing accidents and im-

proving real-time road perception. However, achiev-

ing these benefits presents several challenges, partic-

ularly in ensuring accurate and reliable communica-

tion, which requires substantial investments in infras-

tructure and equipment. The reliability of such sys-

tems hinges on robust validation mechanisms to de-

tect and mitigate erroneous or malicious data. Fail-

ures in hardware or software, incorrect data process-

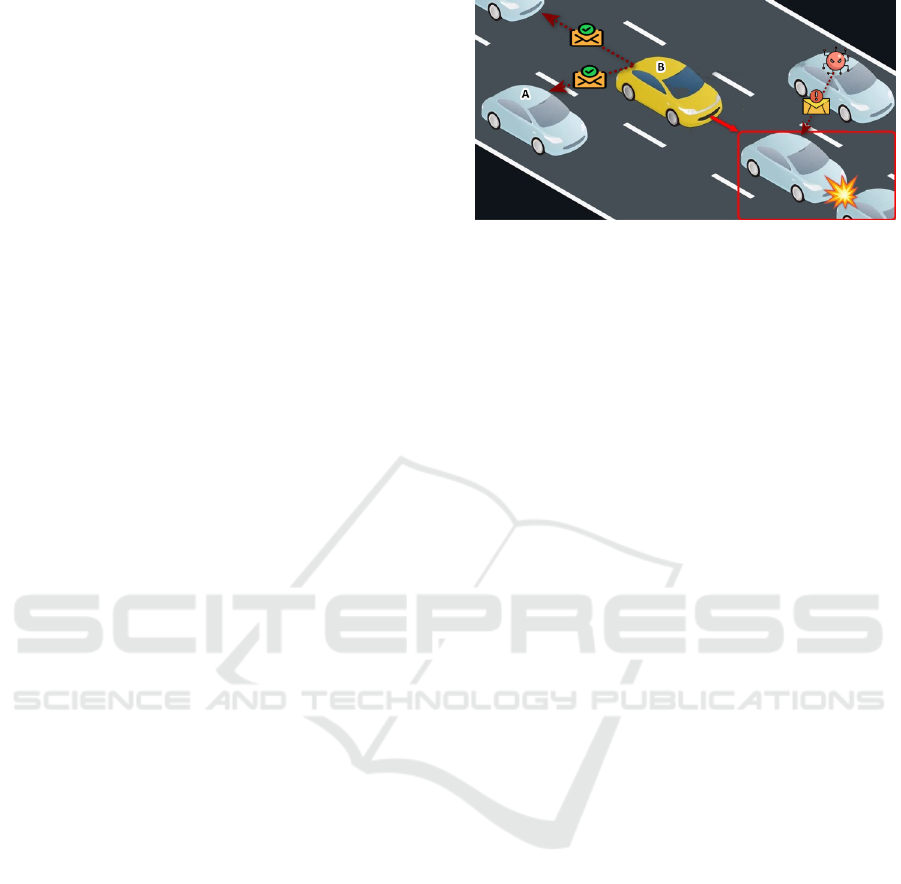

Figure 1: Security Risks in Autonomous Vehicle Networks.

ing, and unreliable information can degrade data qual-

ity and compromise the system’s overall performance.

In decentralized vehicular networks, where no central authority exists, vehicles (or nodes) must navigate the dual challenges of maintaining trust and collaborating effectively under uncertain conditions. For example, malicious nodes may deliberately disseminate false information to disrupt traffic or compromise safety, while benign nodes strive to preserve an accurate and truthful flow of information. This dynamic highlights the pressing need for mechanisms that enable vehicles to autonomously validate and respond to shared data in real time, even in environments characterized by fleeting connections and incomplete information.

Consider a scenario involving vehicles A and B, as illustrated in Figure 1. Suppose vehicle B detects an object on the road and aims to communicate this information to vehicle A. To do so, vehicle B sends a message to vehicle A. Upon receiving the message, vehicle A processes the data without employing any verification or trust mechanism. This approach exposes the system to significant risks, especially in the presence of malicious actors within the network. Without robust mechanisms in place, the network becomes vulnerable to misinformation and malicious manipulation of data; verification and trust mechanisms are therefore needed to address these challenges. Such mechanisms are essential to mitigate the impact of malicious behavior and ensure the reliability of collective perception in connected vehicular networks.

2.2 Problem Formulation

2.2.1 Parameters

To model the collaborative misbehavior detection mechanism, we define the following parameters:

• Players (Vehicles): Represented as nodes in the network, each vehicle is classified as either benign (aiming to maintain safety and truthful communication) or malicious (seeking to spread false information or disrupt the system).

• Actions: Each vehicle can choose to:

– Accept (A): Trust the received information and act upon it.

– Reject (R): Disregard the information as false.

• Types:

– Benign nodes (t = 1): Prioritize safety and correctness, aiming to propagate truthful information.

– Malicious nodes (t = 2): Seek to spread false information or obstruct correct data dissemination.

• Belief Distribution ($p_i^{t}$): Represents the prior belief of a node i about the veracity of the received information. For example, $p_i^{t} = 0.75$ means the node believes the information is true with 75% confidence.

• Information Truth (Truth): Indicates whether the information is true (Truth = 1) or false (Truth = 0).

• Threshold (λ): A consensus parameter for the majority game, typically set as λ = ⌈N/2⌉, where N is the total number of vehicles.
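As a minimal sketch of how these parameters might be encoded in a simulation, the Python snippet below represents the actions and types as enumerations, checks that a belief is a valid probability, and computes the threshold λ = ⌈N/2⌉. The names `Action`, `NodeType`, `majority_threshold` and `validate_belief` are hypothetical illustrations, not taken from the paper.

```python
# Hypothetical encoding of the Section 2.2.1 parameters (all names are illustrative).
import math
from enum import Enum


class Action(Enum):
    ACCEPT = "A"  # trust the received information and act upon it
    REJECT = "R"  # disregard the information as false


class NodeType(Enum):
    BENIGN = 1     # t = 1
    MALICIOUS = 2  # t = 2


def majority_threshold(n_vehicles: int) -> int:
    """Consensus threshold lambda = ceil(N / 2)."""
    if n_vehicles <= 0:
        raise ValueError("N must be positive")
    return math.ceil(n_vehicles / 2)


def validate_belief(p: float) -> float:
    """A belief p_i^t is the probability that the information is true."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("belief must lie in [0, 1]")
    return p


# Truth is binary: 1 if the information is true, 0 if it is false.
TRUTH_VALUES = (0, 1)

print(majority_threshold(23))  # 12
```

With N = 23, the network size used in the simulations, this gives λ = 12.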

2.2.2 Game Definition and Notations

The misbehavior detection mechanism is modeled using a dual-game framework:

• First Game: Pre-Bayesian Game

– Players: N vehicles, i ∈ {1, 2, ..., N}.

– Actions: Each vehicle i decides to either:

* Accept (A): Trust and act on the received information.

* Reject (R): Disregard the information.

– Utility Function: Each vehicle’s utility is defined by its type ($t_i$) and the veracity of the information (Truth):

$$U_i = p_i^{t} \cdot g(A, t_i, \mathrm{Truth}) + \left(1 - p_i^{t}\right) \cdot g(R, t_i, \mathrm{Truth}), \tag{1}$$

where:

* $p_i^{t}$: Belief about the information’s truthfulness.

* $g(A, t_i, \mathrm{Truth})$: Payoff for accepting the information.

* $g(R, t_i, \mathrm{Truth})$: Payoff for rejecting the information.

• Second Game: Majority Game

– Set of Players: The same set of players N is used in this game, representing the connected vehicles in the network. Each player i ∈ N has previously made a decision in the first game (the pre-Bayesian game) and must now make a collective decision based on the choices of the other players.

– Set of Actions: In this game, the actions of the players are defined as follows:

* Make the Majority Decision: Each vehicle must decide to accept or reject the received information based on the majority decision of the other players in the network. The majority decision is defined by a threshold $\lambda = \lceil |N| / 2 \rceil$, where |N| is the total number of players (vehicles). If the majority of players accept the information, it is considered valid and accepted. If the majority rejects the information, it is considered false and rejected.

* Maximizing Road Safety: Each vehicle strives to ensure that its individual decision aligns with the majority’s choice to maximize road safety and improve the driving experience. The goal is to reach a consensus without requiring direct communication or information exchange between the vehicles.

– Interaction Between the Games: The link between the first game (pre-Bayesian) and the second game (majority) is established by the variable Truth, which serves as a pipe between the two games. The voting process determines the value of this variable, that is, whether the information is treated as true or false. This value influences the beliefs of the players in the pre-Bayesian game, which in turn affect their future decisions in the majority game. In other words, learning from the first game (via the belief adjustment $p_i^{t}$) influences the actions in the second game, thereby enhancing the collective decision-making dynamics.

– Utility Function of the Second Game: The utility in this second game depends on the outcome of the majority vote:

* If the majority accepts the information, this can be interpreted as an indication that the information is likely true (Truth = 1).

* If the majority rejects the information, it may indicate that the information is likely false (Truth = 0).

The utility of player i in this game can be modeled by a Bernoulli function, which adjusts the utility based on the majority vote result:

$$U_i' = U_i + \Delta U_i, \tag{2}$$

where $\Delta U_i$ represents the adjustment in utility based on the alignment of player i’s decision with the majority’s choice.

Thus, each vehicle adjusts its beliefs about the truthfulness of the received information based on the collective decisions of the other vehicles. This process helps to reinforce trust in the information and improves the safety and effectiveness of the decisions made in a connected driving environment.
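The majority game can be sketched as follows, assuming each player’s first-game action is reported as "A" or "R". The paper does not specify the form of $\Delta U_i$ in Eq. (2), so the constant bonus or penalty `delta` below is a hypothetical choice, as is the tie-breaking rule for an even number of players; `majority_outcome` and `adjusted_utility` are illustrative names.

```python
# Hypothetical sketch of the majority game; Delta U_i and tie-breaking are assumptions.
import math
from typing import Sequence


def majority_outcome(votes: Sequence[str]) -> int:
    """Infer Truth from the first-game actions ("A" or "R") of all players.

    Returns 1 (accepted, treated as true) when at least
    lambda = ceil(|N| / 2) players accept, otherwise 0 (rejected).
    For an even |N| a tie therefore counts as acceptance; this
    tie-breaking is an assumption, not specified in the paper.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    lam = math.ceil(len(votes) / 2)
    n_accept = sum(1 for v in votes if v == "A")
    return 1 if n_accept >= lam else 0


def adjusted_utility(u_i: float, own_vote: str, truth: int,
                     delta: float = 5.0) -> float:
    """Eq. (2): U'_i = U_i + Delta U_i.

    Hypothetical Delta U_i: +delta if player i's vote agrees with the
    majority outcome, -delta otherwise (delta = 5.0 is illustrative).
    """
    aligned = (own_vote == "A") == (truth == 1)
    return u_i + (delta if aligned else -delta)


# 23 players: 14 accept and 9 reject, so lambda = 12 is reached.
votes = ["A"] * 14 + ["R"] * 9
truth = majority_outcome(votes)
print(truth, adjusted_utility(13.75, "A", truth))  # 1 18.75
```

The inferred Truth returned by `majority_outcome` is the value that, per the description above, feeds back into the players’ beliefs in the next round.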

2.2.3 Payoff Design

The payoff for each player is determined based on its action, type, and the truthfulness of the information:

• For a Benign Node (t = 1):

– g(A, 1, Truth = 1): High payoff if the node accepts true information, as it promotes safety (e.g., g(A, 1, 1) = 20).

– g(R, 1, Truth = 1): Negative payoff if the node rejects true information, potentially causing accidents (e.g., g(R, 1, 1) = −5).

– g(A, 1, Truth = 0): Negative payoff for accepting false information, leading to false alarms (e.g., g(A, 1, 0) = −10).

– g(R, 1, Truth = 0): High payoff for rejecting false information, maintaining safety (e.g., g(R, 1, 0) = 25).

• For a Malicious Node (t = 2):

– g(A, 2, Truth = 1): Negative payoff if the node accepts true information, as it fails to disrupt the system (e.g., g(A, 2, 1) = −10).

– g(R, 2, Truth = 1): Positive payoff for rejecting true information, partially achieving its goal (e.g., g(R, 2, 1) = 5).

– g(A, 2, Truth = 0): Positive payoff for accepting false information, spreading confusion (e.g., g(A, 2, 0) = 15).

– g(R, 2, Truth = 0): Neutral or negative payoff for rejecting false information, as it fails to disrupt (e.g., g(R, 2, 0) = −5).

The payoff structure ensures that benign nodes prioritize accuracy and safety, while malicious nodes aim to disrupt the system.
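To make Eq. (1) concrete, the sketch below stores the example payoffs g(action, type, Truth) listed above and evaluates $U_i$ for a given belief. It is a minimal illustration that assumes exactly these example values; `PAYOFF` and `utility` are hypothetical names.

```python
# Hypothetical sketch: Eq. (1) evaluated with the example payoffs of Section 2.2.3.

# g(action, type, Truth); type 1 = benign, type 2 = malicious.
PAYOFF = {
    ("A", 1, 1): 20, ("R", 1, 1): -5,
    ("A", 1, 0): -10, ("R", 1, 0): 25,
    ("A", 2, 1): -10, ("R", 2, 1): 5,
    ("A", 2, 0): 15, ("R", 2, 0): -5,
}


def utility(p: float, node_type: int, truth: int) -> float:
    """Eq. (1): U_i = p * g(A, t_i, Truth) + (1 - p) * g(R, t_i, Truth)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("belief p must lie in [0, 1]")
    return (p * PAYOFF[("A", node_type, truth)]
            + (1 - p) * PAYOFF[("R", node_type, truth)])


# Benign node with belief p = 0.75, as in the example of Section 2.2.1.
print(utility(0.75, 1, 1))  # 0.75*20 + 0.25*(-5) = 13.75
print(utility(0.75, 1, 0))  # 0.75*(-10) + 0.25*25 = -1.25
```

With $p_i^{t} = 0.75$, a benign node obtains $U_i = 13.75$ when the information is true but $U_i = -1.25$ when it is false, so under these example payoffs a confident but wrong belief is penalized.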

3 SIMULATION RESULTS

To thoroughly evaluate the proposed Cooperative Bayesian Q-Learning model with Ex-Post Validation, we conducted extensive simulations under various scenarios. Each simulation was designed to test the model’s performance, resilience, and adaptability under different levels of adversarial influence.

3.1 Simulation Setup

The simulation environment consists of 23 nodes interacting over 300 iterations. Among these nodes, Player 23 is designated as an honest (benign) node. This player serves as a benchmark for observing how its beliefs about the honesty or maliciousness of the other players evolve.

• Belief Tracking:

– The red line in our results illustrates Player 23’s belief about malicious nodes.

– The green line shows its belief about honest nodes.

3.2 Malicious Node Scenarios

To simulate varying levels of adversarial behavior, we explored four distinct scenarios, each with a different percentage of malicious nodes:

• Scenario 1: 10% malicious nodes

• Scenario 2: 25% malicious nodes

• Scenario 3: 35% malicious nodes

• Scenario 4: 40% malicious nodes

Each scenario progressively increases the proportion of malicious nodes, enabling us to analyze how the model responds to greater levels of adversarial influence.
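The scenario percentages do not translate into whole numbers of nodes for the 23-node setup, and the paper does not state how they were rounded. Assuming rounding to the nearest integer, the sketch below compares each malicious count with the majority threshold λ = ⌈23/2⌉ = 12; `malicious_count` and the other names are hypothetical.

```python
# Hypothetical sketch: malicious node counts per scenario (rounding is an assumption).
import math

N_NODES = 23                      # simulation size from Section 3.1
LAMBDA = math.ceil(N_NODES / 2)   # majority threshold, 12 for 23 nodes
SCENARIOS = {1: 0.10, 2: 0.25, 3: 0.35, 4: 0.40}


def malicious_count(share: float, n: int = N_NODES) -> int:
    """Number of malicious nodes; rounding to the nearest integer is assumed."""
    return round(share * n)


for scenario, share in SCENARIOS.items():
    k = malicious_count(share)
    print(f"Scenario {scenario}: {k} malicious, {N_NODES - k} benign, "
          f"malicious majority possible: {k >= LAMBDA}")
```

Under this assumption, the malicious counts are 2, 6, 8 and 9, so even Scenario 4 stays three nodes below λ, which is consistent with describing 40% as close to, but not beyond, the point where malicious nodes could control the majority vote.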

3.3 Tools and Framework

The simulations were implemented in MATLAB, leveraging its robust computational and visualization capabilities to perform Q-Learning, belief updates, and coalition management. The experiments were repeated to ensure consistency in results, and each setup adhered to the following conditions:

• Belief Initialization: Beliefs are initialized randomly while ensuring each node has accurate self-knowledge.

• Action Space: Nodes choose between two actions, accepting or rejecting messages, based on updated probabilities derived from Q-values.

• Coalition Dynamics: Nodes with beliefs falling below a threshold of 30% are excluded from the coalition, and their interactions are halted.
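The paper does not give the exact Q-learning or belief update rules, so the following Python sketch only illustrates one plausible reading of the three conditions above. It assumes a stateless Q-learning update, a softmax (logistic) mapping from the two Q-values to an acceptance probability, and an exponential-smoothing belief update driven by agreement with the majority outcome. These rules and all function names (`init_beliefs`, `q_update`, `accept_probability`, `update_belief`, `coalition`) are hypothetical; only the random initialization, the self-knowledge condition and the 30% exclusion threshold come from the text.

```python
# Hypothetical sketch of the simulation conditions; update rules are assumptions.
import math
import random

EXCLUSION_THRESHOLD = 0.30  # coalition rule stated above


def init_beliefs(is_honest: list[bool], rng: random.Random) -> list[list[float]]:
    """beliefs[i][j] is node i's belief that node j is honest.

    Off-diagonal entries are drawn uniformly at random; the diagonal encodes
    accurate self-knowledge (1.0 for an honest node, 0.0 for a malicious one).
    """
    n = len(is_honest)
    return [[(1.0 if is_honest[i] else 0.0) if i == j else rng.random()
             for j in range(n)] for i in range(n)]


def q_update(q: float, reward: float, alpha: float = 0.1) -> float:
    """Stateless Q-learning step: Q <- Q + alpha * (reward - Q)."""
    return q + alpha * (reward - q)


def accept_probability(q_accept: float, q_reject: float,
                       temperature: float = 1.0) -> float:
    """Softmax over the two Q-values, written as a logistic function.

    Uses sigmoid(z) = (1 + tanh(z / 2)) / 2, which cannot overflow.
    """
    z = (q_accept - q_reject) / temperature
    return 0.5 * (1.0 + math.tanh(z / 2.0))


def update_belief(belief: float, agreed_with_majority: bool,
                  alpha: float = 0.1) -> float:
    """Move the belief in node j toward 1 if j's vote matched the majority
    outcome (Truth), and toward 0 otherwise."""
    target = 1.0 if agreed_with_majority else 0.0
    return (1.0 - alpha) * belief + alpha * target


def coalition(beliefs_of_i: list[float]) -> list[int]:
    """Indices of nodes that node i keeps interacting with (belief >= 30%)."""
    return [j for j, b in enumerate(beliefs_of_i) if b >= EXCLUSION_THRESHOLD]


rng = random.Random(0)
beliefs = init_beliefs([True, True, False], rng)
p_accept = accept_probability(q_accept=2.0, q_reject=1.0)  # about 0.73
print(coalition([1.0, 0.25, 0.3, 0.8]))  # [0, 2, 3]
```

Writing the two-action softmax as a logistic function of the Q-value difference keeps the sketch short; a temperature close to zero makes the choice nearly greedy, while a large temperature makes it close to random.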

This systematic approach ensures a comprehensive evaluation of the proposed model across diverse settings, providing insights into its behavior under increasing levels of malicious activity.


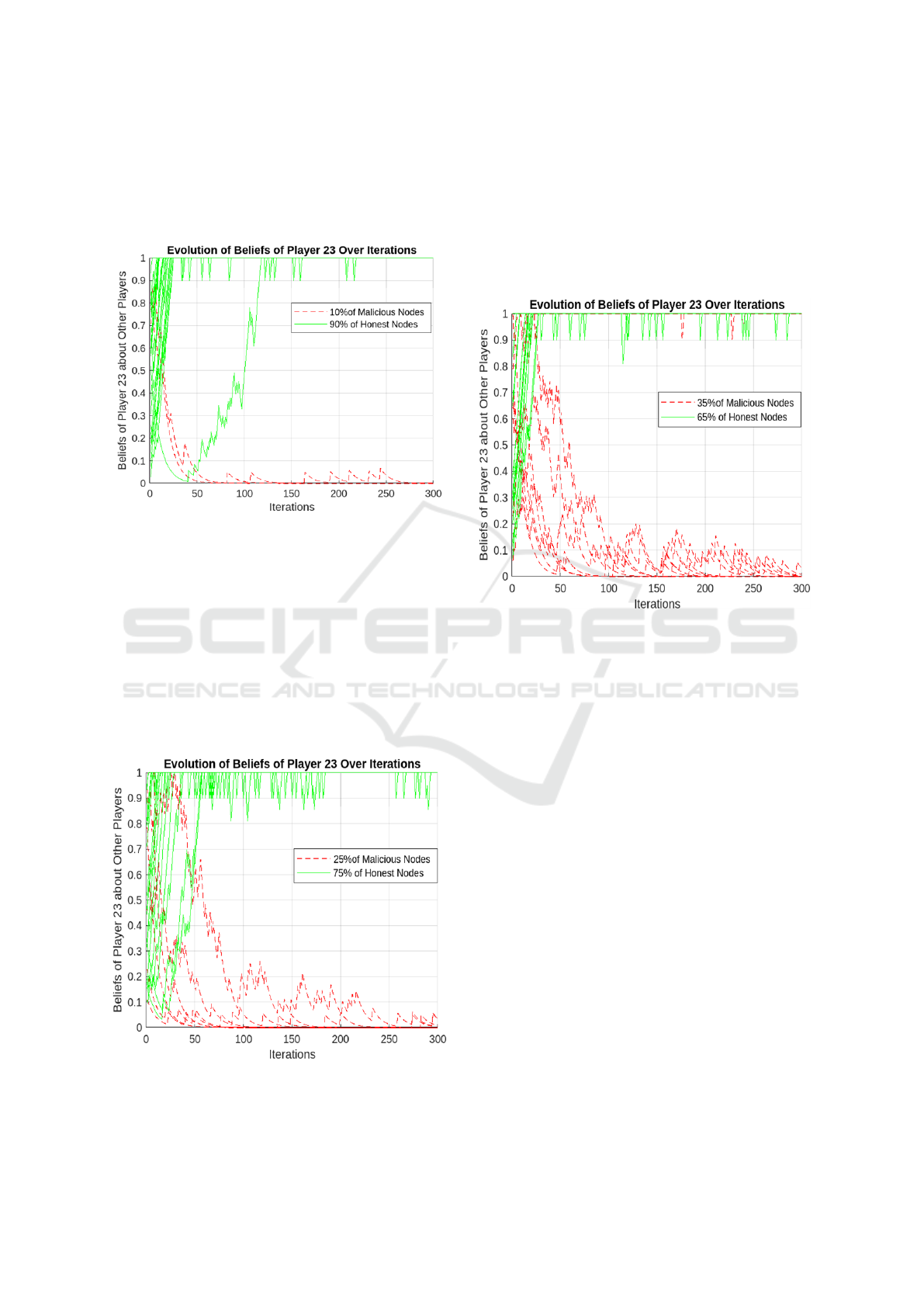

The numerical results depicted in Figures 2, 3, 4, and 5 provide a detailed understanding of how Player 23’s beliefs evolve across iterations under varying levels of malicious node presence (10%, 25%, 35%, and 40%).

Figure 2: Case 1: Evolution of Player 23’s Beliefs Over Iterations with 10% Malicious Nodes.

In Figure 2, where the network contains 10% malicious nodes, Player 23’s beliefs converge quickly to stable and accurate values. The small proportion of malicious nodes ensures that benign nodes dominate the network, allowing the collaborative detection mechanism to efficiently filter out false information. This scenario demonstrates the system’s robustness in environments with low adversarial influence, where trust and cooperation among nodes remain relatively unchallenged.

Figure 3: Case 2: Evolution of Player 23’s Beliefs Over Iterations with 25% Malicious Nodes.

In Figure 3, with 25% malicious nodes, the convergence process is slightly slower compared to the 10% case. The increased presence of malicious nodes introduces more conflicting information, which delays the belief adjustment process. However, the proposed mechanism successfully enables Player 23 to identify malicious behavior and align its beliefs with the truth over time.

Figure 4: Case 3: Evolution of Player 23’s Beliefs Over Iterations with 35% Malicious Nodes.

In Figure 4, where 35% of the nodes are malicious, the challenges posed by a higher proportion of adversaries are evident. The belief evolution displays noticeable fluctuations in the initial iterations, reflecting the increased noise and uncertainty introduced by malicious nodes. Although Player 23’s beliefs still move toward the correct classification, the slower convergence indicates the growing difficulty of maintaining trust as adversarial influence increases.

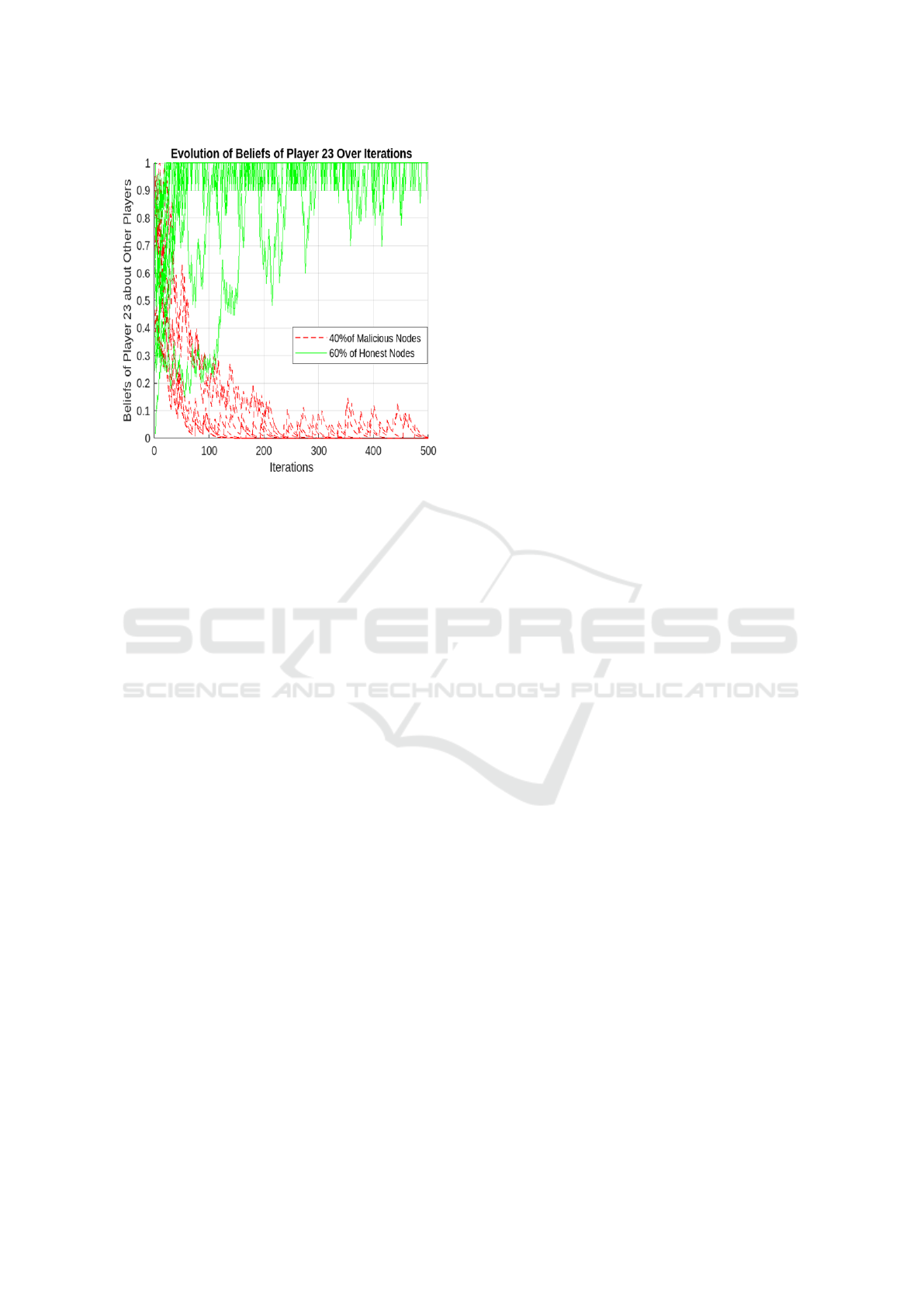

Finally, Figure 5 illustrates the most adversarial scenario, with 40% malicious nodes. Here, Player 23 experiences significant belief fluctuations in the early stages due to the near-critical level of malicious node influence. The high proportion of malicious nodes undermines the network’s ability to achieve consensus, making belief updates more challenging. Nevertheless, the framework continues to enable gradual convergence toward accurate beliefs, albeit with reduced efficiency.

Figure 5: Case 4: Evolution of Player 23’s Beliefs Over Iterations with 40% Malicious Nodes.

In summary, the results demonstrate that as the percentage of malicious nodes increases, the belief convergence process becomes slower and more susceptible to fluctuations. However, the framework consistently enables Player 23 to adapt and refine its beliefs, even in highly adversarial scenarios. The critical threshold around 40% malicious nodes emphasizes the importance of preserving a majority of benign nodes to ensure the system’s effectiveness and stability. These findings validate the robustness of the proposed framework while identifying opportunities for further optimization in highly adversarial environments.

4 CONCLUSIONS

The paper presents a misbehavior detection framework for connected vehicular networks, leveraging a dual-game approach that combines a pre-Bayesian game and a majority decision-making game. The proposed method addresses critical challenges in decentralized, ephemeral networks by enabling nodes to collaboratively and autonomously validate information in the presence of adversarial activities. Through a carefully designed belief update mechanism and reputation-based trust models, the framework ensures the reliability of shared data while mitigating the impact of malicious nodes.

Numerical simulations under varying proportions of malicious nodes (10%, 25%, 35%, and 40%) highlight the framework’s robustness and adaptability. Results demonstrate that the belief evolution process is influenced by the percentage of adversarial nodes, with slower convergence and more pronounced fluctuations observed in more adversarial scenarios. Despite these challenges, the proposed system maintains its effectiveness, achieving accurate and reliable belief stabilization even when 40% of nodes exhibit malicious behavior.

These findings validate the potential of the proposed framework to enhance security and decision-making in connected vehicular networks. However, future work is needed to optimize the framework for scenarios with extremely high adversarial influence and to evaluate its scalability in larger, more complex networks. Additionally, integrating advanced machine learning techniques and real-world vehicular datasets could further improve the system’s performance and adaptability in diverse operational environments.

ACKNOWLEDGEMENTS

This research was supported by the CNRST as part of the PhD-Associate Scholarship (PASS) program.

REFERENCES

Alabdel Abass, A. A., Mandayam, N. B., and Gajic, Z. (2017). An evolutionary game model for threat revocation in ephemeral networks. In 2017 51st Annual Conference on Information Sciences and Systems (CISS), pages 1–5.

Arshad, M., Ullah, Z., Ahmad, N., Khalid, M., Cruickshank, H., and Cao, Y. (2018). A survey of local/cooperative-based malicious information detection techniques in VANETs. EURASIP Journal on Wireless Communications and Networking, 2018.

Attiaoui, A., Elmachkour, M., Ayaida, M., and Kobbane, A. (2024). Strategic trust: Reputation-based mechanisms for mitigating malicious behavior in vehicular collective perception. In 2024 16th International Conference on Communication Software and Networks (ICCSN), pages 137–141.

Behfarnia, A. and Eslami, A. (2018). Risk assessment of autonomous vehicles using Bayesian defense graphs. In 2018 IEEE 88th Vehicular Technology Conference (VTC-Fall), pages 1–5.

Ben Elallid, B., Abouaomar, A., Benamar, N., and Kobbane, A. (2023). Vehicles control: Collision avoidance using federated deep reinforcement learning. In GLOBECOM 2023 - 2023 IEEE Global Communications Conference, pages 4369–4374.

Diakonikolas, I. and Pavlou, C. (2019). On the complexity of the inverse semivalue problem for weighted voting games. Proceedings of the AAAI Conference on Artificial Intelligence, 33(01):1869–1876.

Ferdowsi, A., Challita, U., Saad, W., and Mandayam, N. B. (2018). Robust deep reinforcement learning for security and safety in autonomous vehicle systems. In 2018 21st International Conference on Intelligent Transportation Systems (ITSC), pages 307–312.

Hendrikx, F., Bubendorfer, K., and Chard, R. (2015). Reputation systems: A survey and taxonomy. Journal of Parallel and Distributed Computing, 75:184–197.

Kerrache, C. A., Lakas, A., Lagraa, N., and Barka, E. (2018). UAV-assisted technique for the detection of malicious and selfish nodes in VANETs. Vehicular Communications, 11:1–11.

Kim, S. (2016). Effective certificate revocation scheme based on weighted voting game approach. IET Information Security, 10(4):180–187.

Liu, B., Chiang, J., and Hu, Y.-C. (2010). Limits on revocation in VANETs.

Liu, Y., Comaniciu, C., and Man, H. (2006). Modelling misbehaviour in ad hoc networks: a game theoretic approach for intrusion detection. IJSN, 1:243–254.

Loukas, G., Karapistoli, E., Panaousis, E., Sarigiannidis, P., Bezemskij, A., and Vuong, T. (2019). A taxonomy and survey of cyber-physical intrusion detection approaches for vehicles. Ad Hoc Networks, 84:124–147.

Manshaei, M. H., Zhu, Q., Alpcan, T., Başar, T., and Hubaux, J.-P. (2013). Game theory meets network security and privacy. ACM Computing Surveys, 45(3).

Masdari, M. (2016). Towards secure localized certificate revocation in mobile ad-hoc networks. IETE Technical Review, 34:1–11.

Naja, A., Oualhaj, O. A., Boulmalf, M., Essaaidi, M., and Kobbane, A. (2020). Alert message dissemination using graph-based Markov decision process model in VANETs. In ICC 2020 - 2020 IEEE International Conference on Communications (ICC), pages 1–6.

Raja, K., D, A., and Ravi, V. (2015). A reliant certificate revocation of malicious nodes in MANETs. Wireless Personal Communications, 90.

Raya, M., Manshaei, M. H., Félegyházi, M., and Hubaux, J.-P. (2008). Revocation games in ephemeral networks. In Proceedings of the 15th ACM Conference on Computer and Communications Security, CCS ’08, pages 199–210, New York, NY, USA. Association for Computing Machinery.

Silva, C., Moraes, R. O., Lelis, L. H. S., and Gal, K. (2019). Strategy generation for multiunit real-time games via voting. IEEE Transactions on Games, 11(4):426–435.

Subba, B., Biswas, S., and Karmakar, S. (2016). Intrusion detection in mobile ad-hoc networks: Bayesian game formulation. Engineering Science and Technology, an International Journal, 19(2):782–799.

Subba, B., Biswas, S., and Karmakar, S. (2018). A game theory based multi layered intrusion detection framework for VANET. Future Generation Computer Systems, 82:12–28.

van der Heijden, R. W., Dietzel, S., Leinmüller, T., and Kargl, F. (2019). Survey on misbehavior detection in cooperative intelligent transportation systems. IEEE Communications Surveys & Tutorials, 21(1):779–811.